MPI Study Notes

1. What is MPI (Message Passing Interface)

MPI is an interface specification

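- MPI is a specification for the design of message-passing libraries; it is not itself a library.

- MPI mainly addresses the message-passing model of parallel programming: data is moved from the address space of one process to the address space of another through cooperative operations on both sides.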

- Simply stated, the goal of the Message Passing Interface is to provide a widely used standard for writing message-passing programs. The interface attempts to be:

  - practical

  - portable

  - efficient

  - flexible

- The MPI standard has gone through a number of revisions. MPI-3 was the most recent version when these notes were written; MPI-4.0 has since been published (2021).

- Interface specifications have been defined for C and Fortran 90 language bindings:

  - The C++ bindings (added in MPI-2) were deprecated in MPI-2.2 and removed in MPI-3.

  - MPI-3 also provides support for Fortran 2003 and 2008 features.

- Actual MPI library implementations differ in which version and features of the MPI standard they support. Developers/users will need to be aware of this.

2. Level of Thread Support

- MPI libraries vary in their level of thread support:

- MPI_THREAD_SINGLE - Level 0: Only one thread will execute.

- MPI_THREAD_FUNNELED - Level 1: The process may be multi-threaded, but only the main thread will make MPI calls - all MPI calls are funneled to the main thread.

- MPI_THREAD_SERIALIZED - Level 2: The process may be multi-threaded, and multiple threads may make MPI calls, but only one at a time: the application must ensure that MPI calls are never made concurrently from two distinct threads.

- MPI_THREAD_MULTIPLE - Level 3: Multiple threads may call MPI with no restrictions.

A simple C language example for determining thread level support is shown below.

    #include "mpi.h"
    #include <stdio.h>

    int main( int argc, char *argv[] )
    {
        int provided, claimed;

        /*** Select one of the following
        MPI_Init_thread( 0, 0, MPI_THREAD_SINGLE, &provided );
        MPI_Init_thread( 0, 0, MPI_THREAD_FUNNELED, &provided );
        MPI_Init_thread( 0, 0, MPI_THREAD_SERIALIZED, &provided );
        MPI_Init_thread( 0, 0, MPI_THREAD_MULTIPLE, &provided );
        ***/

        /* Request full multi-threading; "provided" receives the level the
           library actually grants, which may be lower than requested. */
        MPI_Init_thread( 0, 0, MPI_THREAD_MULTIPLE, &provided );

        /* Ask the library for the current thread level. */
        MPI_Query_thread( &claimed );

        printf( "Query thread level= %d Init_thread level= %d\n", claimed, provided );

        MPI_Finalize();
        return 0;
    }

Sample output:

Query thread level= 3 Init_thread level= 3

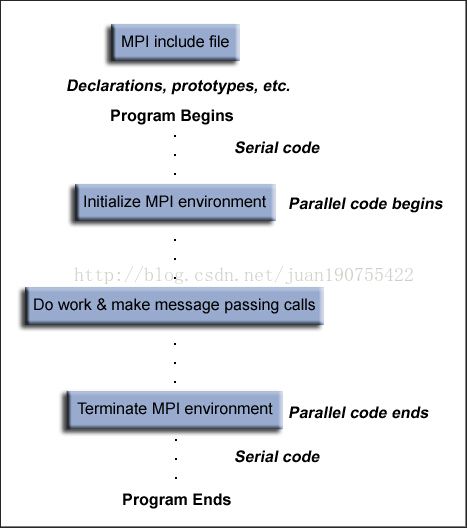

3. Getting Started

General MPI Program Structure:

Header File:

Required for all programs that make MPI library calls.

| C include file | Fortran include file |
|---|---|
| #include "mpi.h" | include "mpif.h" |

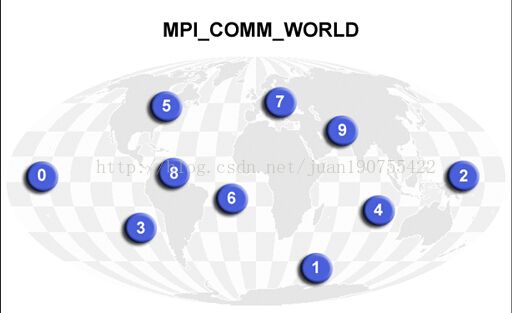

Communicators and Groups:

- MPI uses objects called communicators and groups to define which collection of processes may communicate with each other.

- Most MPI routines require you to specify a communicator as an argument.

- Communicators and groups will be covered in more detail later. For now, simply use MPI_COMM_WORLD whenever a communicator is required - it is the predefined communicator that includes all of your MPI processes.

Environment Management Routines:

This group of routines is used for interrogating and setting the MPI execution environment, and covers an assortment of purposes, such as initializing and terminating the MPI environment, querying a rank's identity, querying the MPI library's version, etc. Most of the commonly used ones are described below.

MPI_Init

Initializes the MPI execution environment. This function (or MPI_Init_thread) must be called in every MPI program, must be called before any other MPI function (a few query routines such as MPI_Initialized and MPI_Get_version are exceptions), and must be called only once in an MPI program. For C programs, MPI_Init may be used to pass the command line arguments to all processes, although this is not required by the standard and is implementation dependent.

MPI_Init (&argc,&argv)

MPI_INIT (ierr)

MPI_Comm_size

Returns the total number of MPI processes in the specified communicator, such as MPI_COMM_WORLD. If the communicator is MPI_COMM_WORLD, then it represents the number of MPI tasks available to your application.

MPI_Comm_size (comm,&size)

MPI_COMM_SIZE (comm,size,ierr)

MPI_Comm_rank

Returns the rank of the calling MPI process within the specified communicator. Initially, each process will be assigned a unique integer rank between 0 and number of tasks - 1 within the communicator MPI_COMM_WORLD. This rank is often referred to as a task ID. If a process becomes associated with other communicators, it will have a unique rank within each of these as well.

MPI_Comm_rank (comm,&rank)

MPI_COMM_RANK (comm,rank,ierr)

MPI_Abort

Terminates all MPI processes associated with the communicator. In most MPI implementations it terminates ALL processes regardless of the communicator specified.

MPI_Abort (comm,errorcode)

MPI_ABORT (comm,errorcode,ierr)

MPI_Get_processor_name

Returns the processor name. Also returns the length of the name. The buffer for "name" must be at least MPI_MAX_PROCESSOR_NAME characters in size. What is returned into "name" is implementation dependent - may not be the same as the output of the "hostname" or "host" shell commands.

MPI_Get_processor_name (name,&resultlength)

MPI_GET_PROCESSOR_NAME (name,resultlength,ierr)

MPI_Get_version

Returns the version and subversion of the MPI standard that's implemented by the library.

MPI_Get_version (&version,&subversion)

MPI_GET_VERSION (version,subversion,ierr)

MPI_Initialized

Indicates whether MPI_Init has been called: sets flag to logical true (1) or false (0). MPI requires that MPI_Init be called once and only once by each process. This may pose a problem for modules that want to use MPI and are prepared to call MPI_Init if necessary. MPI_Initialized solves this problem.

MPI_Initialized (&flag)

MPI_INITIALIZED (flag,ierr)

MPI_Wtime

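Returns an elapsed wall-clock time in seconds (double precision) on the calling processor, measured from some arbitrary point in the past; only the difference between two calls is meaningful.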

MPI_Wtime ()

MPI_WTIME ()

MPI_Wtick

Returns the resolution in seconds (double precision) of MPI_Wtime.

MPI_Wtick ()

MPI_WTICK ()

MPI_Finalize

Terminates the MPI execution environment. This function should be the last MPI routine called in every MPI program; no other MPI routines may be called after it, apart from a few query routines such as MPI_Initialized, MPI_Finalized and MPI_Get_version.

MPI_Finalize ()

MPI_FINALIZE (ierr)