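Installing a k8s cluster from binaries with Ansible

kubeasz: installs a K8S cluster with Ansible scripts and explains how the components interact. It is simple and direct, and is not affected by network restrictions in mainland China. Mirror of https://github.com/easzlab/kubeasz

For the theory, see the original documentation; here I only describe the simplest set of steps.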

| Role/hostname | Server IP | VIP | OS |
| --- | --- | --- | --- |
| master1 | 172.31.7.101 | 172.31.7.188 | centos7.9 |
| master2 | 172.31.7.102 | 172.31.7.188 | centos7.9 |
| master3 | 172.31.7.103 | 172.31.7.188 | centos7.9 |
| harbor1 | 172.31.7.104 | | centos7.9 |
| harbor2 | 172.31.7.105 | | centos7.9 |
| etcd1 | 172.31.7.106 | | centos7.9 |
| etcd2 | 172.31.7.107 | | centos7.9 |
| etcd3 | 172.31.7.108 | | centos7.9 |
| haproxy1 | 172.31.7.109 | | centos7.9 |
| haproxy2 | 172.31.7.110 | | centos7.9 |
| node1 | 172.31.7.111 | | centos7.9 |
| node2 | 172.31.7.112 | | centos7.9 |
| node3 | 172.31.7.113 | | centos7.9 |

Operating system: tested successfully on both centos7 and rocky8.7.

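1. Install ansible

yum -y install ansible

2. Configure passwordless (key-based) SSH login to every node except the harbor and haproxy nodes

For the procedure, see the CSDN post "The simplest one-click passwordless SSH login" on zz-zjx's blog.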
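A minimal sketch of the key distribution step, assuming root SSH access and the node IPs from the planning table (masters, etcd members and workers; harbor and haproxy are left out, as stated above). The helper name is hypothetical, and it only prints the commands so they can be reviewed before anything runs:

```shell
#!/usr/bin/env bash
# Node IPs from the planning table: masters, etcd members, workers.
# The harbor and haproxy hosts are deliberately left out.
NODES="172.31.7.101 172.31.7.102 172.31.7.103
172.31.7.106 172.31.7.107 172.31.7.108
172.31.7.111 172.31.7.112 172.31.7.113"

# print_copy_id_cmds (hypothetical helper): emit one ssh-copy-id
# command per node without executing anything.
print_copy_id_cmds() {
  for ip in $NODES; do
    echo "ssh-copy-id root@${ip}"
  done
}

print_copy_id_cmds
```

Once a key pair exists (for example from ssh-keygen), piping the printed commands to sh would run them; each one asks for that node's root password once.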

3. Install python3 on every node except the harbor and haproxy nodes, then create a symlink so that python points to it

yum -y install python36

mv /usr/bin/python /usr/bin/python.bak.$(date +%F).$(date +%R)

ln -s /usr/bin/python3 /usr/bin/python

sed -i "s@/usr/bin/python@/usr/bin/python2@g" /usr/bin/yum

sed -i "s@/usr/bin/python@/usr/bin/python2@g" /usr/libexec/urlgrabber-ext-down

yum --version

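python --version

Note: yum only runs under python2. After the symlink is created, python resolves to python3 by default, so the yum executables must be edited to call python2 explicitly.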
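The two sed commands above rewrite every occurrence of the path, so running them a second time would turn python2 into python22. A more defensive sketch, assuming GNU sed (as shipped with CentOS) and a hypothetical helper name, touches only the shebang line and is safe to repeat:

```shell
#!/usr/bin/env bash
# pin_python2_shebang (hypothetical helper): rewrite only the first
# line of a script, and only when it is exactly the bare python
# shebang (with or without a space after "#!"), so a second run
# leaves the file unchanged.
pin_python2_shebang() {
  sed -E -i '1s@^#! ?/usr/bin/python$@#!/usr/bin/python2@' "$1"
}

# Demo on a scratch file instead of the real /usr/bin/yum.
demo=$(mktemp)
printf '#!/usr/bin/python\nimport sys\n' > "$demo"
pin_python2_shebang "$demo"
pin_python2_shebang "$demo"   # second run is a no-op
head -n 1 "$demo"
rm -f "$demo"
```

On a real node the helper would be pointed at /usr/bin/yum and /usr/libexec/urlgrabber-ext-down, the same two files edited above.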

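4. Orchestrate the k8s installation on the deploy node

- 4.1 Download the project source, binaries, and offline images

Recommended version mapping

| Kubernetes version | 1.22 | 1.23 | 1.24 | 1.25 | 1.26 |
| --- | --- | --- | --- | --- | --- |
| kubeasz version | 3.1.1 | 3.2.0 | 3.3.1 | 3.4.2 | 3.5.0 |

Download the ezdown helper script. This example uses kubeasz 3.2.0, i.e. k8s 1.23; from 1.24 onward docker is no longer supported.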

export release=3.2.0

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

./ezdown -D

[Optional] Download extra container images (cilium, flannel, prometheus, etc.)

./ezdown -X
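The version table above can be encoded as a small lookup so the release variable always matches the intended Kubernetes version. This is a sketch only; the function name is hypothetical and the mapping is copied from the table, not queried from the project:

```shell
#!/usr/bin/env bash
# kubeasz_release_for (hypothetical helper): map a Kubernetes minor
# version to the kubeasz release listed in the table above.
kubeasz_release_for() {
  case "$1" in
    1.22) echo "3.1.1" ;;
    1.23) echo "3.2.0" ;;
    1.24) echo "3.3.1" ;;
    1.25) echo "3.4.2" ;;
    1.26) echo "3.5.0" ;;
    *)    echo "no mapping for Kubernetes $1" >&2; return 1 ;;
  esac
}

# Print the ezdown URL for the chosen version (nothing is downloaded).
release=$(kubeasz_release_for 1.23)
echo "https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown"
```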

- 4.2 Create a cluster configuration instance

# Run kubeasz in a container

./ezdown -S

# Create a new cluster named k8s-01

docker exec -it kubeasz ezctl new k8s-01

2021-01-19 10:48:23 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2021-01-19 10:48:23 DEBUG set version of common plugins

2021-01-19 10:48:23 DEBUG cluster k8s-01: files successfully created.

2021-01-19 10:48:23 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

2021-01-19 10:48:23 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

Note: in docker exec -it kubeasz ezctl new k8s-01, the name after new is your own choice.

Then, following the prompts, configure '/etc/kubeasz/clusters/k8s-01/hosts' and '/etc/kubeasz/clusters/k8s-01/config.yml': edit the hosts file and the other main cluster-level options according to the node plan above; settings for the other cluster components can be changed in config.yml.

For example, in this walkthrough:

docker exec -it kubeasz ezctl new k8s-cluster1

2023-01-08 14:09:50 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-cluster1

2023-01-08 14:09:50 DEBUG set versions

2023-01-08 14:09:50 DEBUG cluster k8s-cluster1: files successfully created.

2023-01-08 14:09:50 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-cluster1/hosts'

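2023-01-08 14:09:50 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-cluster1/config.yml'

vim /etc/kubeasz/clusters/k8s-cluster1/hosts

Note:

:%s/192.168.1/172.31.7/g # bulk-replace the template IP prefix with the one you planned

For CONTAINER_RUNTIME choose docker or containerd. This walkthrough uses docker; change it as you see fit.

For this setup docker and containerd are used in almost the same way; containerd is simply the more lightweight of the two (docker itself runs containers through containerd).

For details, see the CSDN post "The difference between containerd and docker" on 钟Li枫's blog.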
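The interactive vim substitution can also be scripted. The sketch below assumes GNU sed and a hosts file in the format generated by ezctl; the helper name and its argument order are hypothetical:

```shell
#!/usr/bin/env bash
# retarget_hosts (hypothetical helper): swap the template's IP prefix
# for the planned one and set the container runtime, in place.
# Arguments: <hosts file> <old prefix> <new prefix> <runtime>
retarget_hosts() {
  # Escape the dots so "192.168.1" is matched literally.
  rh_old=$(printf '%s' "$2" | sed 's/\./\\./g')
  sed -i \
    -e "s/${rh_old}\./$3./g" \
    -e "s/^CONTAINER_RUNTIME=.*/CONTAINER_RUNTIME=\"$4\"/" \
    "$1"
}

# Demo on a scratch file rather than the real inventory.
demo=$(mktemp)
printf '192.168.1.1\nCONTAINER_RUNTIME="containerd"\n' > "$demo"
retarget_hosts "$demo" 192.168.1 172.31.7 docker
cat "$demo"
rm -f "$demo"
```

Matching the prefix together with its trailing dot keeps an address such as 192.168.10.5 from being rewritten by accident.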

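Time zone and time synchronization settings (the locale and crontab paths below are the Debian/Ubuntu locations; on CentOS the root crontab is /var/spool/cron/root)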

echo "LC_TIME=en_DK.UTF-8" >> /etc/default/locale

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo "*/5 * * * * ntpdate time1.aliyun.com &> /dev/null && hwclock -w" >> /var/spool/cron/crontabs/root

reboot

Modify the registry mirrors and the docker configuration template under this path:

vim ../../roles/docker/templates/

Configuration file changes (for reference only):

cat > /etc/kubeasz/roles/docker/templates/daemon.json.j2 << EOF

{

"data-root": "{{ DOCKER_STORAGE_DIR }}",

"exec-opts": ["native.cgroupdriver={{ CGROUP_DRIVER }}"],

{% if ENABLE_MIRROR_REGISTRY %}

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"http://hub-mirror.c.163.com"

],

{% endif %}

{% if ENABLE_REMOTE_API %}

"hosts": ["tcp://0.0.0.0:2376", "unix:///var/run/docker.sock"],

{% endif %}

"insecure-registries": ["harbor.zjx.local"],

"max-concurrent-downloads": 10,

"live-restore": true,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "50m",

"max-file": "1"

},

"storage-driver": "overlay2"

}

EOF

The "insecure-registries": ["harbor.zjx.local"] entry is the address of the private harbor registry I set up myself.
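A syntax slip in this template (an unbalanced brace, a stray comma) only surfaces when docker fails to start on a node. A minimal sketch of a check on the rendered file, assuming python3 is available on the node (it was installed in step 3) and using a hypothetical helper name:

```shell
#!/usr/bin/env bash
# check_json (hypothetical helper): succeed only if the file parses
# as JSON. Uses the json.tool module from python3's standard library.
check_json() {
  python3 -m json.tool "$1" > /dev/null 2>&1
}

# Check the rendered daemon.json on the current node.
if check_json /etc/docker/daemon.json; then
  echo "daemon.json: valid JSON"
else
  echo "daemon.json: missing or invalid JSON" >&2
fi
```

The check applies to the rendered /etc/docker/daemon.json, not to the .j2 template itself, which still contains Jinja2 directives.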

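Copy the harbor HTTPS self-signed certificate to the following path (create the directory first):

mkdir -p /etc/docker/certs.d/harbor.zjx.local # run on this host

echo "172.31.7.104 harbor.zjx.local" >> /etc/hosts # run on this host; 172.31.7.104 is the harbor server address

scp harbor-ca.crt 172.31.7.101:/etc/docker/certs.d/harbor.zjx.local # run on the harbor host to copy the certificate to the target host

systemctl restart docker # restart docker; run on this host

docker login harbor.zjx.local # run on this host; enter the account and password; "Login Succeeded" means it worked

docker images # list the local images

docker tag calico/node:v3.19.3 harbor.zjx.local/baseimages/calico/node:v3.19.3 # tag the image so it can be pushed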

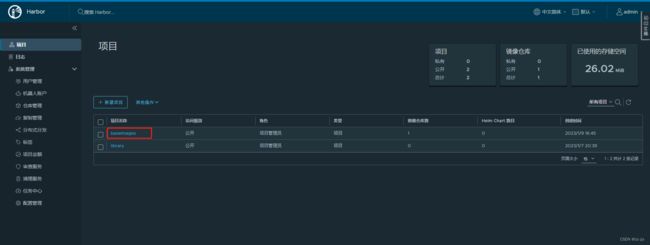

# Open the harbor web UI and create a project named baseimages

docker push harbor.zjx.local/baseimages/calico/node:v3.19.3 # afterwards, log in to the harbor web UI; if the image is listed, the push succeeded
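When several images need to be mirrored into harbor, the tag and push pair can be generated rather than typed. A sketch with hypothetical helper names, using the registry and project from this walkthrough; it prints the docker commands instead of running them:

```shell
#!/usr/bin/env bash
# harbor_ref (hypothetical helper): build the target reference
# <registry>/<project>/<image> for an image to be mirrored.
harbor_ref() {
  echo "$1/$2/$3"
}

# print_mirror_cmds (hypothetical helper): emit the tag and push
# commands for each image given after the registry and project.
print_mirror_cmds() {
  registry=$1; project=$2; shift 2
  for image in "$@"; do
    target=$(harbor_ref "$registry" "$project" "$image")
    echo "docker tag ${image} ${target}"
    echo "docker push ${target}"
  done
}

print_mirror_cmds harbor.zjx.local baseimages calico/node:v3.19.3
```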

/etc/kubeasz/clusters/k8s-cluster1/config.yml

cat >/etc/kubeasz/clusters/k8s-cluster1/config.yml << EOF

############################

# prepare

############################

# Optional: install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optional: apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "876000h"

# force to recreate CA and other certs, not suggested to set 'true'

CHANGE_CA: false

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.26.0"

############################

# role:etcd

############################

# A separate wal directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] enable container registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] base (sandbox) container image

SANDBOX_IMAGE: "easzlab.io.local:5000/easzlab/pause:3.9"

# [containerd] persistent container storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] trusted HTTP registries

INSECURE_REG: '["http://easzlab.io.local:5000","172.31.7.119"]'

############################

# role:kube-master

############################

# Certificate hosts for the k8s master nodes; multiple IPs and domain names can be added (e.g. a public IP and domain)

MASTER_CERT_HOSTS:

- "172.31.7.188"

- "k8s.easzlab.io"

#- "www.test.com"

# Pod subnet mask length on each node (determines the maximum number of pod IPs a node can allocate)

# If flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

# kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node

MAX_PODS: 500

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# For the values, see templates/kubelet-config.yaml.j2

KUBE_RESERVED_ENABLED: "no"

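# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown you the system's resource usage;

# the reservation should also be increased as the system keeps running; for the values, see templates/kubelet-config.yaml.j2

# The system reservation assumes a 4c/8g VM with a minimal set of system services; increase it on high-performance physical machines

# Also, apiserver and other components briefly use more resources during cluster installation; reserving at least 1g of memory is recommended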

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel]

flannel_ver: "v0.19.2"

# ------------------------------------------- calico

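# [calico] IPIP tunnel mode options: [Always, CrossSubnet, Never]. Across subnets use Always or CrossSubnet (on public clouds Always is the least effort; otherwise the cloud's network configuration must be changed, see each cloud provider's documentation)

# CrossSubnet is a hybrid of tunneling and BGP routing that can improve network performance; within a single subnet, Never is sufficient.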

CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP peers are established through it. It can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico networking backend: brid, vxlan, none

CALICO_NETWORKING_BACKEND: "brid"

# [calico] whether calico uses route reflectors

# Recommended when the cluster has more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES: nodes acting as route reflectors; defaults to the cluster master nodes if unset

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico] supported calico versions: ["3.19", "3.23"]

calico_ver: "v3.23.5"

# [calico] calico major version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium] image version

cilium_ver: "1.12.4"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

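# [kube-router] public clouds impose restrictions, so ipinip usually has to stay enabled; in your own environment this can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch

FIREWALL_ENABLE: true

# [kube-router] kube-router image version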

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# coredns automatic installation

dns_install: "no"

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: true

dnsNodeCacheVer: "1.22.13"

# local dns cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# metric server automatic installation

metricsserver_install: "no"

metricsVer: "v0.5.2"

# dashboard automatic installation

dashboard_install: "no"

dashboardVer: "v2.7.0"

dashboardMetricsScraperVer: "v1.0.8"

# prometheus automatic installation

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "39.11.0"

# nfs-provisioner automatic installation

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# network-check automatic installation

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.1.5"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_PATH: /var/data

HARBOR_TLS_PORT: 8443

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true

EOF

/etc/kubeasz/clusters/k8s-cluster1/hosts

cat > /etc/kubeasz/clusters/k8s-cluster1/hosts << EOF

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

172.31.7.106

172.31.7.107

172.31.7.108

# master node(s)

[kube_master]

172.31.7.101

172.31.7.102

# work node(s)

[kube_node]

172.31.7.111

172.31.7.112

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#172.31.7.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

172.31.7.6 LB_ROLE=backup EX_APISERVER_VIP=172.31.7.188 EX_APISERVER_PORT=6443

172.31.7.7 LB_ROLE=master EX_APISERVER_VIP=172.31.7.188 EX_APISERVER_PORT=6443

# [optional] ntp server for the cluster

[chrony]

#172.31.7.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-65000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="zz-zjx.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

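EOF

- 4.3 Start the installation

If you are not familiar with the cluster installation flow, read the installation steps explained on the project home page, then install step by step and verify each step.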
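One check that is cheap to make before the first setup step comes from the comment in the hosts file itself: the etcd group should have an odd number of members. A sketch, assuming the inventory lists hosts by IP address as in this walkthrough; both helper names are hypothetical:

```shell
#!/usr/bin/env bash
# count_group (hypothetical helper): count the host lines under one
# [group] header of the inventory. Only lines starting with a digit
# are counted, so commented-out hosts are skipped.
count_group() {
  awk -v g="[$2]" '
    /^\[/ { in_group = ($1 == g); next }
    in_group && /^[0-9]/ { n++ }
    END { print n + 0 }
  ' "$1"
}

# etcd_count_is_odd (hypothetical helper): true when the [etcd]
# group has an odd number of members.
etcd_count_is_odd() {
  n=$(count_group "$1" etcd)
  [ $((n % 2)) -eq 1 ]
}

hosts=/etc/kubeasz/clusters/k8s-cluster1/hosts
if [ -f "$hosts" ]; then
  if etcd_count_is_odd "$hosts"; then
    echo "etcd member count is odd"
  else
    echo "etcd member count is even or zero" >&2
  fi
fi
```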

# It is handy to define a command alias

echo "alias dk='docker exec -it kubeasz'" >> /root/.bashrc

source /root/.bashrc

# One-shot install, equivalent to running docker exec -it kubeasz ezctl setup k8s-01 all

dk ezctl setup k8s-01 all

# Or install step by step; run dk ezctl help setup for the step-by-step help

# dk ezctl setup k8s-01 01

# dk ezctl setup k8s-01 02

# dk ezctl setup k8s-01 03

# dk ezctl setup k8s-01 04

...

Because harbor and lvs were already set up by hand, remove the playbook lines that would deploy those services for us:

sed -i "6,7d" /etc/kubeasz/playbooks/01.prepare.yml

If ansible step 01 fails, create a python2 symlink on the nodes.

If ansible step 02 fails with the error below, modify the etcd role's task file:

failed: [172.31.7.108] (item=ca.pem) => {"ansible_loop_var": "item", "changed": false, "checksum": "35104974c9704ec5efa4b46a5d51997fc370f582", "item": "ca.pem", "msg": "Destination directory /etc/kubernetes/ssl does not exist"}

cat > /etc/kubeasz/roles/etcd/tasks/main.yml << EOF

- name: prepare some dirs

  file: name={{ ETCD_DATA_DIR }} state=directory mode=0700

- name: download the etcd binaries

  copy: src={{ base_dir }}/bin/{{ item }} dest={{ bin_dir }}/{{ item }} mode=0755

  with_items:

  - etcd

  - etcdctl

  tags: upgrade_etcd

- name: create the etcd certificate signing request

  template: src=etcd-csr.json.j2 dest={{ cluster_dir }}/ssl/etcd-csr.json

  connection: local

  run_once: true

  tags: force_change_certs

- name: create the etcd certificate and private key

  shell: "cd {{ cluster_dir }}/ssl && {{ base_dir }}/bin/cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | {{ base_dir }}/bin/cfssljson -bare etcd"

  connection: local

  run_once: true

  tags: force_change_certs

- name: Creates directory

  file:

    path: /etc/kubernetes/ssl

    state: directory

- name: distribute the etcd certificates

  copy: src={{ cluster_dir }}/ssl/{{ item }} dest={{ ca_dir }}/{{ item }}

  with_items:

  - ca.pem

  - etcd.pem

  - etcd-key.pem

  tags: force_change_certs

- name: create the etcd systemd unit file

  template: src=etcd.service.j2 dest=/etc/systemd/system/etcd.service

  tags: upgrade_etcd, restart_etcd

- name: enable the etcd service at boot

  shell: systemctl enable etcd

  ignore_errors: true

- name: start the etcd service

  shell: systemctl daemon-reload && systemctl restart etcd

  ignore_errors: true

  tags: upgrade_etcd, restart_etcd, force_change_certs

- name: poll until the service has finished syncing

  shell: "systemctl is-active etcd.service"

  register: etcd_status

  until: '"active" in etcd_status.stdout'

  retries: 8

  delay: 8

  tags: upgrade_etcd, restart_etcd, force_change_certs

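EOF

Note: the task added to fix the error is the one that creates /etc/kubernetes/ssl before the certificates are distributed:

- name: Creates directory

  file:

    path: /etc/kubernetes/ssl

    state: directory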

echo "alias dk='docker exec -it kubeasz'" >> /root/.bashrc

source /root/.bashrc

dk ezctl setup k8s-cluster1 01

dk ezctl setup k8s-cluster1 02

dk ezctl setup k8s-cluster1 03

dk ezctl setup k8s-cluster1 04

...

# or run all steps at once

dk ezctl setup k8s-cluster1 all
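For the step-by-step route, a small loop that stops at the first failing step makes it easier to verify each stage before moving on. This is a sketch: the function name and the EZCTL variable are hypothetical, and by default it only prints the commands. It uses docker exec -i rather than -it, on the assumption that it is run from a script without a terminal:

```shell
#!/usr/bin/env bash
# EZCTL holds the command prefix. It defaults to "echo ..." so the
# sketch only prints what it would run; set
# EZCTL="docker exec -i kubeasz ezctl" to execute for real.
EZCTL=${EZCTL:-echo docker exec -i kubeasz ezctl}

# run_steps (hypothetical helper): run the given setup steps in order
# and stop at the first one that fails.
run_steps() {
  cluster=$1; shift
  for step in "$@"; do
    $EZCTL setup "$cluster" "$step" || {
      echo "step ${step} failed, stopping" >&2
      return 1
    }
  done
}

run_steps k8s-cluster1 01 02 03 04
```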

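On master1, create the directory so that the node can use the self-hosted harbor registry:

mkdir -p /etc/docker/certs.d/harbor.zjx.local

On harbor1, upload the certificate to master1:

scp harbor-ca.crt 172.31.7.101:/etc/docker/certs.d/harbor.zjx.local

Edit the registry addresses: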

cat > /etc/docker/daemon.json << EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://docker.nju.edu.cn/",

"https://kuamavit.mirror.aliyuncs.com"

],

"insecure-registries": ["http://easzlab.io.local:5000","172.31.7.104","harbor.zjx.local"],

"max-concurrent-downloads": 10,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "10m",

"max-file": "3"

},

"data-root": "/var/lib/docker"

}

EOF

Add the hosts entry:

echo "172.31.7.104 harbor.zjx.local" >> /etc/hosts

Restart docker:

systemctl restart docker

Create the project in harbor.

Test by pushing an image:

docker login harbor.zjx.local

docker images

docker tag quay.io/prometheus/alertmanager:v0.24.0 harbor.zjx.local/baseimages/quay.io/prometheus/alertmanager

docker push harbor.zjx.local/baseimages/quay.io/prometheus/alertmanager

If no error is reported, the push succeeded.
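The /etc/hosts entries in this walkthrough are added with echo >>, which appends a duplicate line every time the step is repeated on a node. A minimal idempotent variant, with a hypothetical helper name, demonstrated on a scratch file:

```shell
#!/usr/bin/env bash
# ensure_line (hypothetical helper): append a line to a file only if
# that exact line is not already present.
ensure_line() {
  grep -qxF -- "$2" "$1" 2>/dev/null || echo "$2" >> "$1"
}

# Demo on a scratch file rather than the real /etc/hosts.
demo=$(mktemp)
ensure_line "$demo" "172.31.7.104 harbor.zjx.local"
ensure_line "$demo" "172.31.7.104 harbor.zjx.local"   # no duplicate added
cat "$demo"
rm -f "$demo"
```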