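NVIDIA GPU Open-Source Driver: Build Notes & Architecture Analysis

In May 2022 the community finally saw the day it had been waiting for: NVIDIA open-sourced its Linux GPU kernel driver. About ten years earlier, Linus Torvalds, the chief architect of the Linux kernel, said that NVIDIA was the single most troublesome hardware vendor Linux developers had ever dealt with. Having said that, he turned to the camera, raised his middle finger without ceremony, and extended his warm regards to a certain part of NVIDIA's anatomy. I neither know nor care much about the history between Linus and NVIDIA, but the act of open-sourcing is welcome news in itself. Below, I set up a build and development environment for the open-source NVIDIA GPU driver on Ubuntu.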

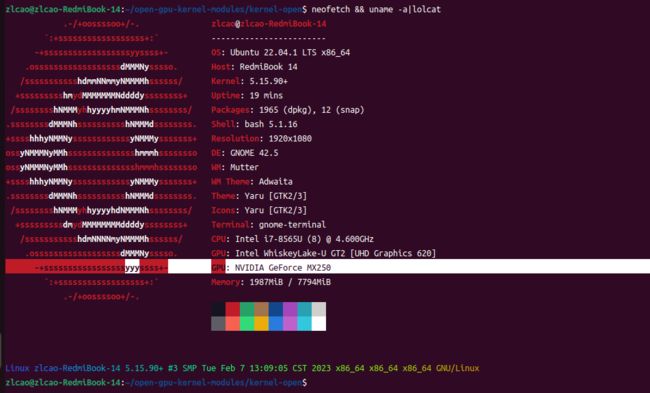

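Platform environment

My PC has an NVIDIA GeForce MX250 graphics card. It is a low-end, entry-level part, but good enough for running some CUDA code and for kernel-driver experiments.

Downloading the open-source driver

The code is hosted on GitHub:

https://github.com/NVIDIA/open-gpu-kernel-modules.git

The commit history shows that the driver has been open source since version 515.43.04; the latest version at the time of writing is 525.85.12.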

Building

Run the following command to build:

make -j8

The build produces:

./kernel-open/nvidia-uvm.ko

./kernel-open/nvidia-drm.ko

./kernel-open/nvidia.ko

./kernel-open/nvidia-peermem.ko

./kernel-open/nvidia-modeset.ko

Installation

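Install with the following command:

sudo insmod nvidia.ko

This fails with a message that no device was detected. At first I could not understand why; only after reading the README of the open-source repository did I find that the MX250 is not on the list of supported GPUs (the open kernel modules support Turing and later architectures, while the MX250 is Pascal-based). So the self-built NVIDIA kernel driver cannot drive this GPU.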

LICENSE

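The LICENSE statement in the code shows that the open-source code is dual-licensed under GPL/MIT.

This license passes the kernel's GPL-compatibility check, so the modules do not taint the kernel as proprietary (the P flag). An out-of-tree build can still set the O flag, and the E flag if the module is unsigned.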

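The Ubuntu-packaged NVIDIA kernel driver

Although the open repository does not support the MX250, installing the NVIDIA driver on Ubuntu also puts another GPU driver source tree under /usr/src. In my environment it is /usr/src/nvidia-525.78.01/.

There are two ways to build it:

Run make directly in that directory; this produces the same set of .ko files as above.

Build it with DKMS: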

$ sudo dkms build -m nvidia -v 525.78.01

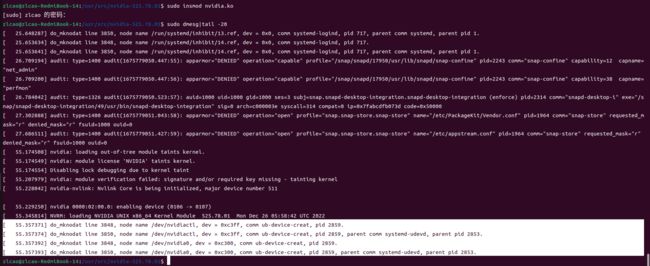

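$ sudo dkms install -m nvidia -v 525.78.01

This installs the driver. The .ko files built from the NVIDIA driver source shipped with Ubuntu load normally: after nvidia.ko is loaded, two device nodes appear, /dev/nvidia0 and /dev/nvidiactl.

After nvidia-uvm.ko is loaded, two more nodes appear: /dev/nvidia-uvm-tools and /dev/nvidia-uvm.

Because of module dependencies, nvidia-modeset.ko has to be loaded next; loading the other two modules before it fails. Loading the KMS module (nvidia-modeset.ko) creates no new device node.

nvidia-drm.ko does not seem to create a new device node either.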

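Open-source strategy

Why there are two release channels is still unclear. Analysis shows, however, that the code shipped with Ubuntu is not fully open source, and its license is not the dual GPL/MIT license used above.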

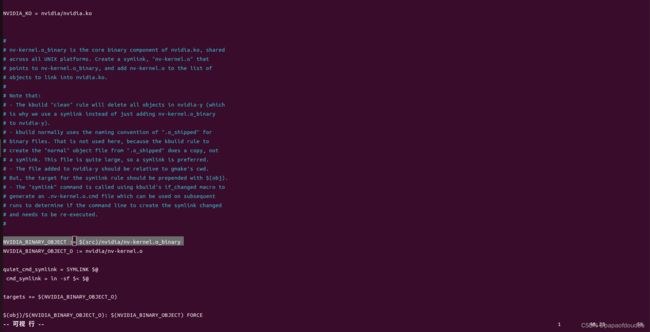

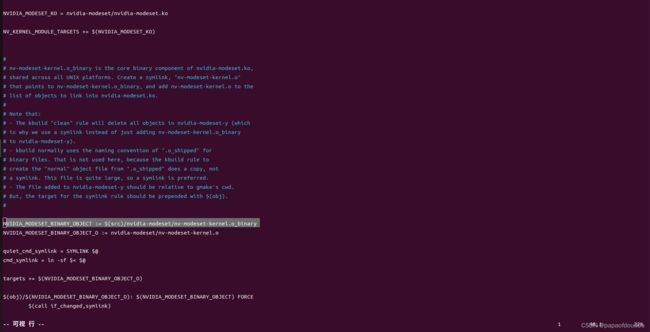

It also contains two closed-source binary objects with the .o_binary suffix: nv-kernel.o_binary and nv-modeset-kernel.o_binary.

KMS:

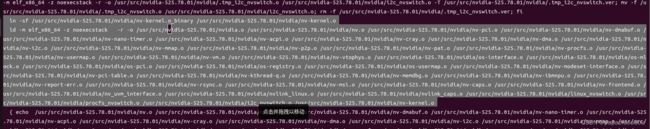

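The link step during the build:

The symbols in the .o_binary files are even obfuscated.

Moreover, after the modules are loaded, /sys/module/nvidia/taint reads POE, which means the kernel has been tainted by code that is not under a GPL-compatible license: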

A kernel problem occurred, but your kernel has been tainted (flags:POE). Explanation:

P - Proprietary module has been loaded.

O - Out-of-tree module has been loaded.

E - Unsigned module has been loaded.

Kernel maintainers are unable to diagnose tainted reports. Tainted modules: nvidia_drm,nvidia_modeset,nvidia_uvm,nvidia,vboxnetadp,vboxnetflt,vboxdrv.

So the driver shipped with Ubuntu is clearly closed source and is not released under an open-source license.

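Further findings

Looking at the open-source Makefile later, I found that the rules that produce the .o_binary files are also part of the open-source code, and the open-source tree can build .o_binary files as well:

make kernel-open/nvidia/nv-kernel.o_binary

Seen this way, the parts that are not open in the Ubuntu package have corresponding code in the open-source repository, and the Ubuntu driver version (525.78.01) is very close to the latest open-source release. Harping on the closed-source parts may therefore be nitpicking, since NVIDIA has published that code in the open repository. Apart from the unsupported GPUs, there is nothing wrong with the open repository's code.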

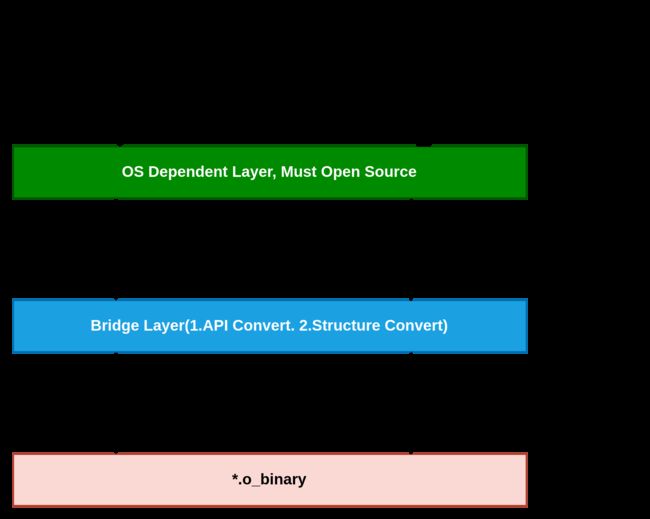

The architecture of NVIDIA's closed-source driver is shown below:

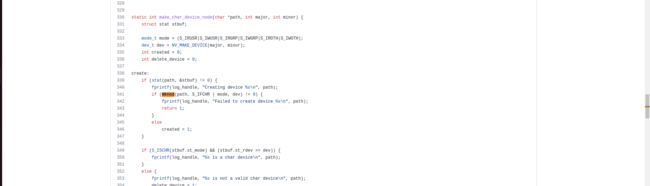

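How are the device nodes created?

The experiments above show that the NVIDIA driver is nothing mysterious: it is an ordinary character device or DRM device, and a DRM device is essentially a character device too.

Character device nodes are usually created through the uevent mechanism. The kernel driver calls class_create and device_create: class_create creates the /sys/class/<class> directory, and device_create creates the kernel's struct device, gives it a node name and populates the attribute files under /sys/class/<class>/<device>/. User-space mdev or udev then reads these files and creates the device node.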

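However, grepping the code shows that the driver calls neither of these interfaces.

Yet, as seen above, /dev/nvidia0 appears immediately after nvidia.ko is loaded. That cannot be a coincidence. Without class_create and device_create as the link, how is the device node created?

To analyze this, I rebuilt the kernel with extra logging. We are fairly sure that a user-space process creates the node by calling mknod, and mknod is implemented as a system call, so I added a log on the syscall path that prints the name and PID of the process calling mknod, together with its parent's name and PID.

After loading the module, dmesg shows that the node creation was indeed intercepted.

It turns out the nodes were created by a process named ub-device-creat (PID 2859), whose parent is systemd-udevd (PID 2853). (The kernel keeps at most 15 characters of a process name, so the full name is ub-device-create.) Leave the former aside for now. The latter should be familiar to anyone who knows the Linux boot process: it is an important service started by systemd that listens for uevents and creates device nodes when it catches the corresponding events. So the creation of the NVIDIA GPU device nodes now makes sense: systemd-udevd receives the uevent the kernel emits when the driver loads and spawns ub-device-creat, which calls mknod to create the device nodes.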

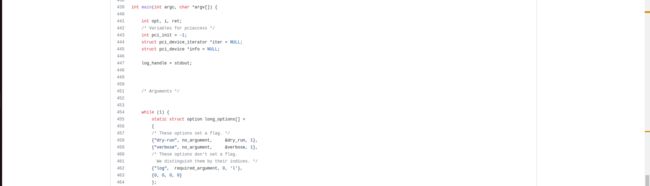

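Claims need evidence: what exactly is ub-device-creat? Running locate shows that it is a command-line program shipped with the system.

It is not a script but an ELF binary, and an ELF binary must have C source somewhere. I eventually found it on GitHub, in NVIDIA's Ubuntu driver packaging: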

https://github.com/NVIDIA/ubuntu-packaging-nvidia-driver/blob/main/debian/device-create/ub-device-create.c

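It lives in the debian/device-create directory of NVIDIA's Ubuntu driver packaging.

As the source shows, its logic is simply to create the device nodes. The device numbers are hard-coded rather than passed in the event, so the uevent only serves as a notification.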

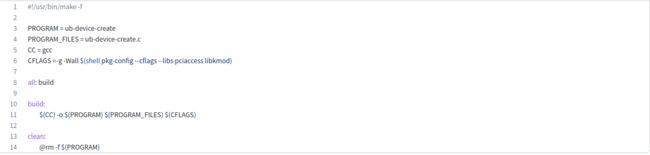

The project's makefile compiles ub-device-create.c into the ELF binary ub-device-create.

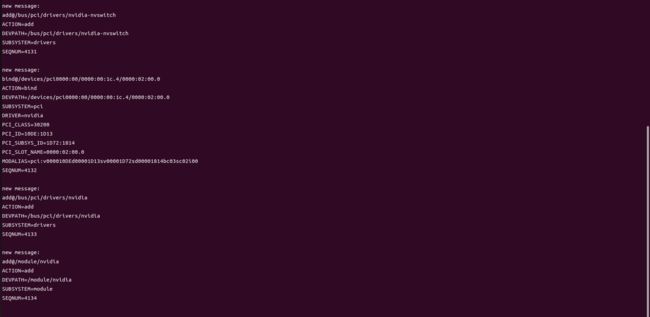

We can write an application that receives uevents to see what the kernel emits at the moment the module is inserted.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <stdint.h>
#include <unistd.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <linux/netlink.h>

#ifndef NEWLINE
#define NEWLINE "\r\n"
#endif

#define UEVENT_BUFFER_SIZE 2048

/* Open a netlink socket subscribed to kernel uevents (multicast group 1). */
static int init_hotplug_sock(void)
{
    const int buffersize = 1024 * 1024; /* large enough not to drop bursts */
    struct sockaddr_nl snl;
    int s;

    memset(&snl, 0, sizeof(snl));
    snl.nl_family = AF_NETLINK;
    snl.nl_pid = 0;    /* let the kernel assign a unique port id */
    snl.nl_groups = 1; /* kernel uevent multicast group */

    s = socket(PF_NETLINK, SOCK_DGRAM, NETLINK_KOBJECT_UEVENT);
    if (s == -1) {
        perror("socket");
        return -1;
    }
    setsockopt(s, SOL_SOCKET, SO_RCVBUF, &buffersize, sizeof(buffersize));
    if (bind(s, (struct sockaddr *)&snl, sizeof(snl)) < 0) {
        perror("bind");
        close(s);
        return -1;
    }
    return s;
}

/* Cut the string at the first CR or LF. */
static char *truncate_nl(char *s)
{
    s[strcspn(s, NEWLINE)] = 0;
    return s;
}

/* The uevent environment is a sequence of NUL-terminated "KEY=VALUE" strings. */
static void device_new_from_nulstr(char *nulstr, size_t len)
{
    size_t i = 0;

    while (i < len) {
        char *key = &nulstr[i];
        const char *end = memchr(key, '\0', len - i);

        if (!end)
            return;
        i += (size_t)(end - key) + 1;
        printf("%s\n", truncate_nl(key));
    }
}

int main(void)
{
    int hotplug_sock = init_hotplug_sock();

    if (hotplug_sock < 0)
        return EXIT_FAILURE;

    while (1) {
        /* Netlink message buffer; the last byte always stays 0. */
        char buf[UEVENT_BUFFER_SIZE * 2];
        ssize_t len;
        size_t bufpos;

        memset(buf, 0, sizeof(buf));
        len = recv(hotplug_sock, buf, sizeof(buf) - 1, 0);
        if (len <= 0)
            continue;

        /* The first string is the "ACTION@DEVPATH" header. */
        printf("\nnew message:\n%s\n", buf);
        bufpos = strlen(buf) + 1;
        if (bufpos < (size_t)len)
            device_new_from_nulstr(&buf[bufpos], (size_t)len - bufpos);
    }
    return 0;
}
```

The EVENT messages the program above captured during insmod are as follows:

Kernel call stacks of the uevent sends

[ 73.389413] kobject_uevent_net_broadcast line 414, action_String = add.

[ 73.389419] CPU: 6 PID: 2906 Comm: insmod Tainted: P OE 5.15.90+ #5

[ 73.389422] Hardware name: TIMI RedmiBook 14/TM1814, BIOS RMRWL400P0503 11/13/2019

[ 73.389423] Call Trace:

[ 73.389426]

[ 73.389429] dump_stack_lvl+0x4a/0x6b

[ 73.389436] dump_stack+0x10/0x18

[ 73.389440] kobject_uevent_env+0x5c1/0x820

[ 73.389446] kobject_uevent+0xb/0x20

[ 73.389449] driver_register+0xd7/0x110

[ 73.389453] __pci_register_driver+0x68/0x80

[ 73.389458] nvswitch_init.cold+0xef/0x17d [nvidia]

[ 73.389822] nvidia_init_module+0x33b/0x63f [nvidia]

[ 73.390055] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.390292] nvidia_frontend_init_module+0x53/0xa7 [nvidia]

[ 73.390523] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.390753] do_one_initcall+0x46/0x1f0

[ 73.390757] ? kmem_cache_alloc_trace+0x19e/0x2f0

[ 73.390762] do_init_module+0x52/0x260

[ 73.390765] load_module+0x2b87/0x2c40

[ 73.390767] ? ima_post_read_file+0xea/0x110

[ 73.390773] __do_sys_finit_module+0xc2/0x130

[ 73.390775] ? __do_sys_finit_module+0xc2/0x130

[ 73.390778] __x64_sys_finit_module+0x18/0x30

[ 73.390780] do_syscall_64+0x59/0x90

[ 73.390784] ? do_syscall_64+0x69/0x90

[ 73.390786] ? do_syscall_64+0x69/0x90

[ 73.390789] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ 73.390792] RIP: 0033:0x7fb1e23bba3d

[ 73.390795] Code: 5b 41 5c c3 66 0f 1f 84 00 00 00 00 00 f3 0f 1e fa 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d c3 a3 0f 00 f7 d8 64 89 01 48

[ 73.390797] RSP: 002b:00007ffde1c29128 EFLAGS: 00000246 ORIG_RAX: 0000000000000139

[ 73.390800] RAX: ffffffffffffffda RBX: 0000563d89599770 RCX: 00007fb1e23bba3d

[ 73.390801] RDX: 0000000000000000 RSI: 0000563d88b7bcd2 RDI: 0000000000000003

[ 73.390802] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000000

[ 73.390804] R10: 0000000000000003 R11: 0000000000000246 R12: 0000563d88b7bcd2

[ 73.390805] R13: 0000563d8959cab0 R14: 0000563d88b7a888 R15: 0000563d89599880

[ 73.390808]

[ 73.391955] nvidia 0000:02:00.0: enabling device (0106 -> 0107)

[ 73.508328] kobject_uevent_net_broadcast line 414, action_String = bind.

[ 73.508331] CPU: 6 PID: 2906 Comm: insmod Tainted: P OE 5.15.90+ #5

[ 73.508333] Hardware name: TIMI RedmiBook 14/TM1814, BIOS RMRWL400P0503 11/13/2019

[ 73.508334] Call Trace:

[ 73.508336]

[ 73.508338] dump_stack_lvl+0x4a/0x6b

[ 73.508345] dump_stack+0x10/0x18

[ 73.508348] kobject_uevent_env+0x5c1/0x820

[ 73.508352] ? insert_work+0x8a/0xb0

[ 73.508356] ? __queue_work+0x211/0x4a0

[ 73.508359] ? __cond_resched+0x1a/0x60

[ 73.508362] kobject_uevent+0xb/0x20

[ 73.508364] driver_bound+0xb0/0x100

[ 73.508393] really_probe+0x2d3/0x430

[ 73.508394] __driver_probe_device+0x12a/0x1b0

[ 73.508396] driver_probe_device+0x23/0xd0

[ 73.508398] __driver_attach+0x10f/0x210

[ 73.508399] ? __device_attach_driver+0x160/0x160

[ 73.508401] bus_for_each_dev+0x80/0xe0

[ 73.508404] driver_attach+0x1e/0x30

[ 73.508405] bus_add_driver+0x153/0x220

[ 73.508406] driver_register+0x95/0x110

[ 73.508408] __pci_register_driver+0x68/0x80

[ 73.508411] nv_pci_register_driver+0x3a/0x50 [nvidia]

[ 73.508842] nvidia_init_module+0x4bf/0x63f [nvidia]

[ 73.509020] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.509196] nvidia_frontend_init_module+0x53/0xa7 [nvidia]

[ 73.509372] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.509547] do_one_initcall+0x46/0x1f0

[ 73.509550] ? kmem_cache_alloc_trace+0x19e/0x2f0

[ 73.509554] do_init_module+0x52/0x260

[ 73.509556] load_module+0x2b87/0x2c40

[ 73.509558] ? ima_post_read_file+0xea/0x110

[ 73.509562] __do_sys_finit_module+0xc2/0x130

[ 73.509564] ? __do_sys_finit_module+0xc2/0x130

[ 73.509566] __x64_sys_finit_module+0x18/0x30

[ 73.509568] do_syscall_64+0x59/0x90

[ 73.509571] ? do_syscall_64+0x69/0x90

[ 73.509572] ? do_syscall_64+0x69/0x90

[ 73.509574] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ 73.509577] RIP: 0033:0x7fb1e23bba3d

[ 73.509579] Code: 5b 41 5c c3 66 0f 1f 84 00 00 00 00 00 f3 0f 1e fa 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d c3 a3 0f 00 f7 d8 64 89 01 48

[ 73.509581] RSP: 002b:00007ffde1c29128 EFLAGS: 00000246 ORIG_RAX: 0000000000000139

[ 73.509583] RAX: ffffffffffffffda RBX: 0000563d89599770 RCX: 00007fb1e23bba3d

[ 73.509584] RDX: 0000000000000000 RSI: 0000563d88b7bcd2 RDI: 0000000000000003

[ 73.509585] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000000

[ 73.509586] R10: 0000000000000003 R11: 0000000000000246 R12: 0000563d88b7bcd2

[ 73.509587] R13: 0000563d8959cab0 R14: 0000563d88b7a888 R15: 0000563d89599880

[ 73.509589]

[ 73.509602] kobject_uevent_net_broadcast line 414, action_String = add.

[ 73.509603] CPU: 6 PID: 2906 Comm: insmod Tainted: P OE 5.15.90+ #5

[ 73.509606] Hardware name: TIMI RedmiBook 14/TM1814, BIOS RMRWL400P0503 11/13/2019

[ 73.509607] Call Trace:

[ 73.509608]

[ 73.509609] dump_stack_lvl+0x4a/0x6b

[ 73.509632] dump_stack+0x10/0x18

[ 73.509635] kobject_uevent_env+0x5c1/0x820

[ 73.509638] kobject_uevent+0xb/0x20

[ 73.509640] driver_register+0xd7/0x110

[ 73.509643] __pci_register_driver+0x68/0x80

[ 73.509646] nv_pci_register_driver+0x3a/0x50 [nvidia]

[ 73.509794] nvidia_init_module+0x4bf/0x63f [nvidia]

[ 73.510072] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.510295] nvidia_frontend_init_module+0x53/0xa7 [nvidia]

[ 73.510484] ? nvidia_init_module+0x63f/0x63f [nvidia]

[ 73.510671] do_one_initcall+0x46/0x1f0

[ 73.510675] ? kmem_cache_alloc_trace+0x19e/0x2f0

[ 73.510677] do_init_module+0x52/0x260

[ 73.510680] load_module+0x2b87/0x2c40

[ 73.510681] ? ima_post_read_file+0xea/0x110

[ 73.510685] __do_sys_finit_module+0xc2/0x130

[ 73.510687] ? __do_sys_finit_module+0xc2/0x130

[ 73.510690] __x64_sys_finit_module+0x18/0x30

[ 73.510691] do_syscall_64+0x59/0x90

[ 73.510693] ? do_syscall_64+0x69/0x90

[ 73.510695] ? do_syscall_64+0x69/0x90

[ 73.510697] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ 73.510699] RIP: 0033:0x7fb1e23bba3d

[ 73.510701] Code: 5b 41 5c c3 66 0f 1f 84 00 00 00 00 00 f3 0f 1e fa 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d c3 a3 0f 00 f7 d8 64 89 01 48

[ 73.510702] RSP: 002b:00007ffde1c29128 EFLAGS: 00000246 ORIG_RAX: 0000000000000139

[ 73.510705] RAX: ffffffffffffffda RBX: 0000563d89599770 RCX: 00007fb1e23bba3d

[ 73.510707] RDX: 0000000000000000 RSI: 0000563d88b7bcd2 RDI: 0000000000000003

[ 73.510708] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000000

[ 73.510709] R10: 0000000000000003 R11: 0000000000000246 R12: 0000563d88b7bcd2

[ 73.510710] R13: 0000563d8959cab0 R14: 0000563d88b7a888 R15: 0000563d89599880

[ 73.510712]

[ 73.510724] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 525.78.01 Mon Dec 26 05:58:42 UTC 2022

[ 73.510737] kobject_uevent_net_broadcast line 414, action_String = add.

[ 73.510739] CPU: 6 PID: 2906 Comm: insmod Tainted: P OE 5.15.90+ #5

[ 73.510742] Hardware name: TIMI RedmiBook 14/TM1814, BIOS RMRWL400P0503 11/13/2019

[ 73.510743] Call Trace:

[ 73.510744]

[ 73.510745] dump_stack_lvl+0x4a/0x6b

[ 73.510750] dump_stack+0x10/0x18

[ 73.510753] kobject_uevent_env+0x5c1/0x820

[ 73.510755] ? mutex_lock+0x13/0x50

[ 73.510758] kobject_uevent+0xb/0x20

[ 73.510759] do_init_module+0x88/0x260

[ 73.510761] load_module+0x2b87/0x2c40

[ 73.510762] ? ima_post_read_file+0xea/0x110

[ 73.510766] __do_sys_finit_module+0xc2/0x130

[ 73.510768] ? __do_sys_finit_module+0xc2/0x130

[ 73.510771] __x64_sys_finit_module+0x18/0x30

[ 73.510772] do_syscall_64+0x59/0x90

[ 73.510774] ? do_syscall_64+0x69/0x90

[ 73.510776] ? do_syscall_64+0x69/0x90

[ 73.510779] entry_SYSCALL_64_after_hwframe+0x61/0xcb

[ 73.510782] RIP: 0033:0x7fb1e23bba3d

[ 73.510783] Code: 5b 41 5c c3 66 0f 1f 84 00 00 00 00 00 f3 0f 1e fa 48 89 f8 48 89 f7 48 89 d6 48 89 ca 4d 89 c2 4d 89 c8 4c 8b 4c 24 08 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d c3 a3 0f 00 f7 d8 64 89 01 48

[ 73.510785] RSP: 002b:00007ffde1c29128 EFLAGS: 00000246 ORIG_RAX: 0000000000000139

[ 73.510786] RAX: ffffffffffffffda RBX: 0000563d89599770 RCX: 00007fb1e23bba3d

[ 73.510787] RDX: 0000000000000000 RSI: 0000563d88b7bcd2 RDI: 0000000000000003

[ 73.510788] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000000

[ 73.510789] R10: 0000000000000003 R11: 0000000000000246 R12: 0000563d88b7bcd2

[ 73.510790] R13: 0000563d8959cab0 R14: 0000563d88b7a888 R15: 0000563d89599880

[ 73.510792]

[ 73.521876] do_mknodat line 3848, node name /dev/nvidiactl, dev = 0xc3ff, comm ub-device-creat, pid 2915.

[ 73.521883] do_mknodat line 3850, node name /dev/nvidiactl, dev = 0xc3ff, comm ub-device-creat, pid 2915, parent comm systemd-udevd, parent pid 2910.

[ 73.521937] do_mknodat line 3848, node name /dev/nvidia0, dev = 0xc300, comm ub-device-creat, pid 2915.

[ 73.521940] do_mknodat line 3850, node name /dev/nvidia0, dev = 0xc300, comm ub-device-creat, pid 2915, parent comm systemd-udevd, parent pid 2910.

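Triggering device-node creation from user space

Besides hot-plugged devices there are also cold-plugged ones. Hot plugging makes a newly attached device usable without powering off or rebooting, whereas a cold-plugged device is only recognized after a power cycle or reboot; a built-in disk, for example, is typically cold-plugged. The driver of a cold-plugged device can still be built as a module, and in that case the kernel cannot initialize the driver during boot. While the kernel enumerates devices at boot, the system is still running in kernel space and has not entered user space, let alone started the user-space systemd-udevd service. The uevents the kernel sends to user space are therefore simply lost, so nothing loads the disk driver module or creates the device nodes.

To solve this problem, the community designed a neat mechanism based on sysfs: after the system enters user space and systemd-udevd has started, user space asks the kernel through sysfs to re-emit the uevents (this is what `udevadm trigger` does at boot). Because systemd-udevd is already running, it receives these uevents and, by matching them against its rules, loads drivers and creates device nodes. In effect, the hot-plug process is replayed for cold-plugged devices.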

The mechanism works as follows. When a new device is registered, the kernel calls device_create_file to create a file named uevent in the device's sysfs directory.

device_add:

dev_attr_uevent is an attribute file that belongs to the device's directory and is defined in the same source file (drivers/base/core.c):

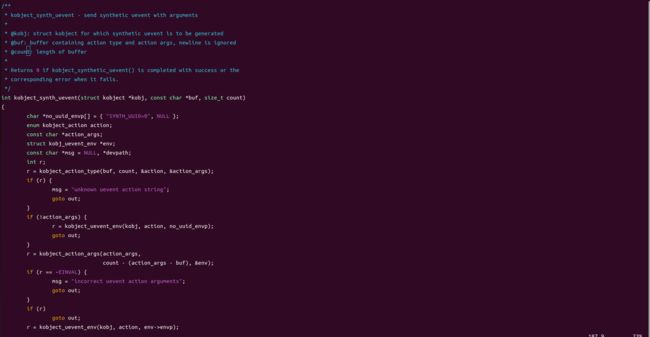

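When user space reads or writes this file, uevent_show or uevent_store is called, and the latter calls kobject_synth_uevent to send one uevent. "synth" is short for synthetic, meaning artificially generated.

Ultimately, kobject_uevent_env is called.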

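Disabling the discrete NVIDIA driver on Ubuntu

On a machine with a discrete NVIDIA GPU, the NVIDIA driver modules are loaded by default as the DRM implementation. What if we want to use the integrated GPU instead?

In my tests, blacklisting the nvidia driver modules had no effect; the NVIDIA driver still loaded normally, as shown below:

I did not find a proper solution, but there is an unorthodox workaround worth trying: upgrade to a newer kernel. The existing NVIDIA driver is not built for the new kernel and therefore fails to load, so the Intel integrated GPU is used instead. The driver status in integrated-graphics mode is: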

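Summary

Before NVIDIA released its source code, the community relied on nouveau.ko, an open-source driver developed by reverse-engineering NVIDIA GPU hardware. After a kernel build it lives at ./drivers/gpu/drm/nouveau/nouveau.ko.

Because it is reverse-engineered, it has no endorsement or support from NVIDIA and lacks the vendor's know-how, so its performance cannot match NVIDIA's own driver: for example, on many GPUs it cannot reclock, nor can it communicate effectively with the firmware running on the GPU. Despite the many hassles and limitations of nouveau, and faced with NVIDIA's excellent hardware, the community had no choice but to keep developing it. Now that NVIDIA has finally open-sourced its driver on its own initiative, developers have one more option.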
