PyTorch InstanceNorm2d: num_features does not match the previous layer's output channels, yet no error is raised

Problem description

I have a network that runs without errors, and a few of its layers look like this:

```
XXXNet(
  (Encoder): Sequential(
    ... ...
    (9): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (10): InstanceNorm2d(1024, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)
    (11): LeakyReLU(negative_slope=0.2, inplace=True)
    (12): Conv2d(512, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (13): InstanceNorm2d(1024, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False)
    ... ...
  )
)
```

Clearly, layer 9 outputs 512 channels, while the InstanceNorm2d in layer 10 is configured for 1024 features (the same mismatch exists between layers 12 and 13). Shouldn't this raise an error? Surprisingly, it does not.

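So the question is: is this expected behavior? Or is the data not actually going through this network at all? Or are layers 10 and 13 somehow being skipped?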
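One way to check whether layers 10 and 13 really run is to rebuild layers 9 to 13 in isolation. This is a minimal sketch: the layer arguments are copied from the printout above, but the input shape (1, 256, 32, 32) is an assumption, since the real input size of the network is not shown. It pushes a tensor through the stack and then checks that the output of the first InstanceNorm2d is normalized per channel (mean close to 0, variance close to 1), which would not be the case if the layer were skipped.

```python
import torch
import torch.nn as nn

# Layers 9-13 of the encoder, copied from the printout (num_features=1024 is deliberately wrong).
encoder = nn.Sequential(
    nn.Conv2d(256, 512, kernel_size=4, stride=2, padding=1, bias=False),
    nn.InstanceNorm2d(1024, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False),
    nn.LeakyReLU(negative_slope=0.2, inplace=True),
    nn.Conv2d(512, 512, kernel_size=4, stride=2, padding=1, bias=False),
    nn.InstanceNorm2d(1024, eps=1e-05, momentum=0.1, affine=False, track_running_stats=False),
)

torch.manual_seed(0)
x = torch.randn(1, 256, 32, 32)  # assumed input shape, not taken from the original network

with torch.no_grad():
    out = encoder(x)        # full stack: no exception is raised
    normed = encoder[:2](x)  # only Conv2d + InstanceNorm2d (slicing a Sequential gives a Sequential)

print(out.shape)  # torch.Size([1, 512, 8, 8])

# Per-(sample, channel) statistics over the spatial dimensions after layer 10.
flat = normed.flatten(2)
mean = flat.mean(dim=2)
var = ((flat - mean.unsqueeze(2)) ** 2).mean(dim=2)  # biased variance, as InstanceNorm uses
print(mean.abs().max().item(), var.min().item(), var.max().item())
```

The per-channel means come out near zero and the variances near one, so the normalization is applied to all 512 channels. Depending on the PyTorch version, a UserWarning about the num_features mismatch may also be printed, but no error is raised.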

Solution

The official documentation does not seem to explain this behavior: https://pytorch.org/docs/master/generated/torch.nn.InstanceNorm2d.html

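Running the example at the bottom of that page, modified so that num_features (200) differs from the number of input channels (100), gives the following: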

```python
>>> input = torch.randn(20, 100, 35, 45)
>>> m = nn.InstanceNorm2d(200, affine=True)
>>> output = m(input)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/share2/home//anaconda3/envs/python36/lib/python3.6/site-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/share2/home//anaconda3/envs/python36/lib/python3.6/site-packages/torch/nn/modules/instancenorm.py", line 49, in forward
    self.training or not self.track_running_stats, self.momentum, self.eps)
  File "/share2/home//anaconda3/envs/python36/lib/python3.6/site-packages/torch/nn/functional.py", line 1685, in instance_norm
    use_input_stats, momentum, eps, torch.backends.cudnn.enabled
RuntimeError: weight should contain 2000 elements not 4000
>>> m = nn.InstanceNorm2d(200, affine=False)
>>> output = m(input)
>>>
```

So it seems that as long as affine=False, InstanceNorm2d does not raise an error even when the number of channels does not match!
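The numbers in the error message can be explained by how instance_norm is implemented. As far as I can tell from the PyTorch source (the ATen instance_norm function), an input of shape (N, C, H, W) is viewed as (1, N*C, H, W), γ and β (and the running statistics, if any) are repeated N times, and the result is passed to batch norm. With N = 20, the input therefore has 20 × 100 = 2000 "channels", while the repeated weight has 20 × 200 = 4000 elements, which is exactly the reported mismatch. The sketch below re-implements this idea on top of F.batch_norm; the helper name instance_norm_via_batch_norm is hypothetical, not a PyTorch API.

```python
import torch
import torch.nn.functional as F


def instance_norm_via_batch_norm(x, weight=None, bias=None, eps=1e-5):
    """Hypothetical helper: instance norm expressed as batch norm over N*C channels."""
    n, c = x.shape[:2]
    # Fold the batch into the channel dimension: (N, C, H, W) -> (1, N*C, H, W).
    folded = x.reshape(1, n * c, *x.shape[2:])
    # Every sample needs its own copy of gamma/beta, so they are tiled N times.
    w = weight.repeat(n) if weight is not None else None
    b = bias.repeat(n) if bias is not None else None
    # training=True: normalize with the statistics of the current input, no running stats.
    out = F.batch_norm(folded, None, None, w, b, training=True, eps=eps)
    return out.reshape(x.shape)


torch.manual_seed(0)
x = torch.randn(20, 100, 35, 45)
gamma, beta = torch.randn(100), torch.randn(100)

reference = F.instance_norm(x, weight=gamma, bias=beta, eps=1e-5)
sketch = instance_norm_via_batch_norm(x, gamma, beta)
print(torch.allclose(reference, sketch, atol=1e-4))  # True

# affine=False: weight and bias are None, so nothing depends on num_features.
print(torch.allclose(F.instance_norm(x), instance_norm_via_batch_norm(x), atol=1e-4))  # True

# A 200-element gamma (num_features=200) is tiled to 20 * 200 = 4000 entries,
# but the folded input only has 20 * 100 = 2000 channels, so batch norm rejects it.
mismatch_error = None
try:
    instance_norm_via_batch_norm(x, torch.ones(200), torch.zeros(200))
except RuntimeError as err:
    mismatch_error = err
    print(err)
```

Note that the only per-channel tensors in this computation are the optional weight, bias and running statistics; when all of them are None, the configured number of features never enters the calculation.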

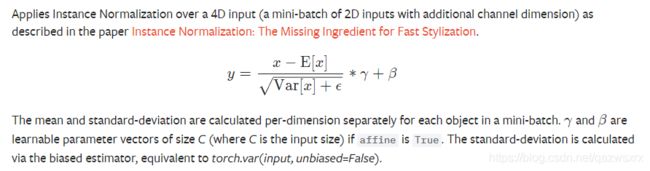

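The meaning of affine becomes clear from the normalization formula given in the documentation:

$$y = \frac{x - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x] + \epsilon}} \cdot \gamma + \beta$$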

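In other words, when affine=True, γ and β become learnable parameters; they are called the affine transformation parameters. The default is affine=False, and the documentation comments on this as follows: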

InstanceNorm2d and LayerNorm are very similar, but have some subtle differences. InstanceNorm2d is applied on each channel of channeled data like RGB images, but LayerNorm is usually applied on entire sample and often in NLP tasks. Additionally, LayerNorm applies elementwise affine transform, while InstanceNorm2d usually don't apply affine transform.
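To see which parts of the module actually depend on num_features, one can inspect its parameters and buffers. This sketch only reads attributes of nn.InstanceNorm2d: with affine=True, weight (γ) and bias (β) are tensors of shape (num_features,), initialized to 1 and 0; with affine=False they are None. Similarly, running_mean and running_var only exist when track_running_stats=True.

```python
import torch
import torch.nn as nn

with_affine = nn.InstanceNorm2d(200, affine=True)
without_affine = nn.InstanceNorm2d(200, affine=False)
with_stats = nn.InstanceNorm2d(200, affine=False, track_running_stats=True)

# affine=True: gamma and beta are learnable vectors of size num_features.
print(with_affine.weight.shape, with_affine.bias.shape)  # torch.Size([200]) torch.Size([200])
print(torch.all(with_affine.weight == 1).item(), torch.all(with_affine.bias == 0).item())  # True True

# affine=False: no parameters at all, so there is nothing to compare num_features against.
print(without_affine.weight, without_affine.bias)  # None None
print(len(list(without_affine.parameters())))  # 0

# track_running_stats=True adds per-channel buffers of size num_features.
print(with_stats.running_mean.shape)  # torch.Size([200])
```

Because γ starts at 1 and β at 0, a freshly created affine=True layer produces the same output as an affine=False one; the difference only appears once γ and β are trained.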

Conclusion

Based on the observations above, I would guess the following:

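The mean and standard deviation are calculated per dimension (channel), separately for each object in the mini-batch. If affine=True, γ and β are learnable parameter vectors of size C, where C is the input size (the number of channels, i.e. num_features). If the C of the actual input differs from the configured value, an error is raised;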

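conversely, if affine=False (and, as in the network above, track_running_stats=False), the configured C is not used anywhere in the computation, so the statistics can be computed whether it is correct or not, and no error is raised.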
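The conclusion can be checked directly: with affine=False and track_running_stats=False (the settings used in the network above), InstanceNorm2d(1024) should give exactly the same output on a 512-channel input as a correctly configured InstanceNorm2d(512). The sketch also tries track_running_stats=True, where running_mean and running_var of size num_features do take part in the computation; in the PyTorch versions I am aware of, the mismatch then raises an error. The input shape is an arbitrary assumption.

```python
import warnings

import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 512, 16, 16)  # arbitrary 512-channel input

wrong = nn.InstanceNorm2d(1024, affine=False, track_running_stats=False)
right = nn.InstanceNorm2d(512, affine=False, track_running_stats=False)

with warnings.catch_warnings():
    # Recent PyTorch versions may warn that num_features is unused when affine=False.
    warnings.simplefilter("ignore")
    same = torch.allclose(wrong(x), right(x))
print(same)  # True: num_features has no effect here

# With running statistics, per-channel buffers of size num_features are used,
# so the mismatch surfaces as an exception.
tracked = nn.InstanceNorm2d(1024, affine=False, track_running_stats=True)
stats_error = None
try:
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")
        tracked(x)
except (RuntimeError, ValueError) as err:
    stats_error = err
print(type(stats_error).__name__)
```

So a wrong num_features goes unnoticed only when the layer has neither affine parameters nor running statistics; setting it to the real channel count is still advisable in case either option is turned on later.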