马哥SRE第八周课程作业

redis,lvs,nginx

- 一. redis搭建哨兵原理和集群实现。

-

- 1.1 哨兵

-

- 1.1.1 redis Sentinel介绍

- 1.1.2 实现哨兵架构

- 1.1.3 哨兵需要先实现主从复制

- 1.1.4 编辑哨兵配置

- 1.1.5启动哨兵服务

- 1.1.6 验证哨兵服务

-

- 1.1.6.1 查看哨兵服务端口状态

- 1.1.6.2 查看哨兵日志

- 1.1.6.3 当前sentinel状态

- 1.1.7 停止Master 实现故障转移

-

- 1.1.7.1 停止 Master 节点

- 1.1.7.2 验证故障转移

- 1.1.7.3 验证 Redis 各节点状态

- 1.1.7.4 原master重新加入Redis集群

- 1.1.8 Sentinel 运维

- 1.2 集群

-

- 1.2.1 Redis Cluster 介绍

- 1.2.2 Redis cluster 架构

- 1.2.3 Redis cluster的工作原理

-

- 1.2.3.1 数据分区

- 1.2.3.2 集群通信

- 1.2.3.3 集群伸缩

- 1.2.3.4 故障转移

- 1.2.3.4 Redis Cluster 部署架构说明

- 1.2.3.5 部署方式介绍

- 1.2.4 实战案例:基于Redis 5 以上版本的 redis cluster 部署

-

- 1.2.4.1 创建 redis cluster集群的环境准备

- 1.2.4.2 启用 redis cluster 配置

- 1.2.4.3 创建集群

- 1.2.4.4 验证集群

- 1.2.4.5 测试集群写入数据

- 1.2.4.6 模拟故障实现故障转移

- 1.2.5 Redis cluster 管理

-

- 1.2.5.1 集群扩容(不支持多数据库了)

- 1.2.5.2 集群缩容

- 1.2.5.3 集群偏斜

- 1.2.5.4 Redis cluster 的局限性

- 1.2.5.5 单机,哨兵和集群的选择

- 二. LVS常用模型工作原理,及实现。

-

- 2.1 LVS工作原理

- 2.2 LVS集群类型中的术语

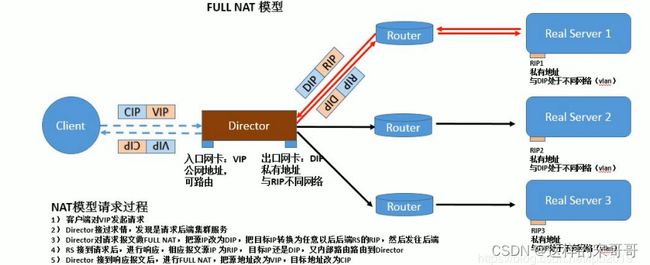

- 2.3 LVS集群的工作模式

-

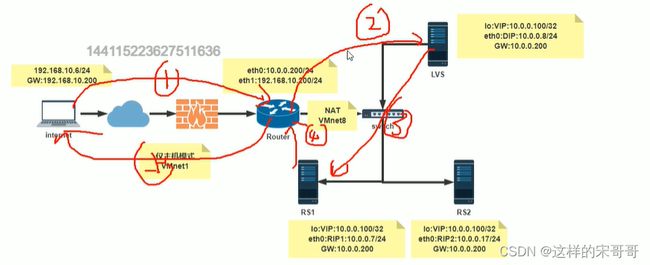

- 2.3.1 LVS的NAT模式

- 2.3.2 LVS的DR模式

- 2.3.3 LVS的TUN模式

- 2.3.4 LVS的FULLNAT模式

-

- 2.3.6 LVS工作模式总结和比较

- 2.3.5 LVS-DR模式单网段案例

-

- 2.3.5.1 LVS的网络配置

- 2.3.5.2 后端RS的IPVS配置

- 2.3.5.3 LVS主机的配置

- 2.3.5.4 测试访问

- 2.3.6 LVS-DR模式多网段案例

- 三. LVS的负载策略有哪些,各应用在什么场景,通过LVS DR任意实现1-2种场景。

-

- 3.1 LVS 调度算法

-

- 3.1.1 静态方法

- 3.1.2 动态方法

- 3.1.3 内核版本 4.15 版本后新增调度算法:FO和OVF

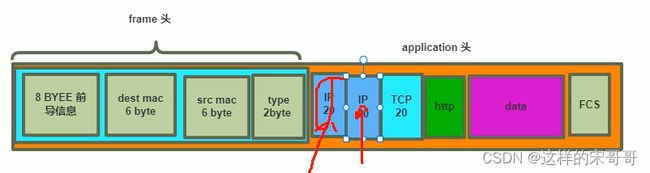

- 四. web http协议通信过程,相关技术术语总结。

-

- 4.1 web http协议通信过程

- 4.2 cookie和session比较

- 4.3 HTTP1.0和HTTP1.1的区别

- 五. 总结网络IO模型和nginx架构。

-

- 5.1 网络IO模型

-

- 5.1.1 模型相关概念

- 5.1.2 网络 I/O 模型

-

- 5.1.2.1 阻塞型 I/O 模型(blocking IO)

- 5.1.2.2 非阻塞型 I/O 模型 (nonblocking IO)

- 5.1.2.3 多路复用型 (I/O multiplexing)

- 5.1.2.4 信号驱动式 I/O 模型 (signal-driven IO)

- 5.1.2.5 异步 I/O 模型 (asynchronous IO)

- 5.1.3 五种 IO 对比

- 5.1.4 I/O常见实现

- 5.1.5 开发的选择

- 5.2 nginx架构

-

- 5.2.1 Nginx 进程结构

- 六. nginx总结核心配置和优化。

-

- 6.1 配置文件说明

- 6.2 优化

-

- 6.2.1 优化内核参数

- 6.2.2 PAM 资源限制优化

- 七. 使用脚本完成一键编译安装nginx任意版本。

- 八. 任意编译一个第3方nginx模块,并使用。

-

- 8.1 nginx-module-vts 模块实现流量监控

- 8.2 echo 模块实现信息显示

一. redis搭建哨兵原理和集群实现。

1.1 哨兵

1.1.1 redis Sentinel介绍

主从架构和MySQL的主从复制一样,无法实现master和slave角色的自动切换,即当master出现故障时,

不能实现自动的将一个slave 节点提升为新的master节点,即主从复制无法实现自动的故障转移功能,如果

想实现转移,则需要手动修改配置,才能将 slave 服务器提升新的master节点.此外只有一个主节点支持写

操作,所以业务量很大时会导致Redis服务性能达到瓶颈

需要解决的主从复制的存在以下弊端:

-

master和slave角色的自动切换,且不能影响业务

-

提升Redis服务整体性能,支持更高并发访问

1.1.2 实现哨兵架构

1.1.3 哨兵需要先实现主从复制

哨兵的前提是已经实现了Redis的主从复制

注意: master 的配置文件中masterauth 和slave 都必须相同

所有主从节点的 redis.conf 中关健配置

范例: 准备主从环境配置

#在所有主从节点执行

[root@centos8 ~]#dnf -y install redis

[root@centos8 ~]#vim /etc/redis.conf

bind 0.0.0.0

masterauth "123456"

requirepass "123456"

#或者非交互执行

[root@centos8 ~]#sed -i -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e 's/^# masterauth

.*/masterauth 123456/' -e 's/^# requirepass .*/requirepass 123456/'

/etc/redis.conf

#在所有从节点执行

[root@centos8 ~]#echo "replicaof 10.0.0.8 6379" >> /etc/redis.conf

#在所有主从节点执行

[root@centos8 ~]#systemctl enable --now redis

master 服务器状态

[root@redis-master ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not

127.0.0.1:6379> INFO replication

# Replication

role:master

connected_slaves:2

slave0:ip=10.0.0.28,port=6379,state=online,offset=112,lag=1

slave1:ip=10.0.0.18,port=6379,state=online,offset=112,lag=0

master_replid:8fdca730a2ae48fb9c8b7e739dcd2efcc76794f3

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:112

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:112

127.0.0.1:6379>

配置 slave1

[root@redis-slave1 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> REPLICAOF 10.0.0.8 6379

OK

127.0.0.1:6379> CONFIG SET masterauth "123456"

OK

127.0.0.1:6379> INFO replication

# Replication

role:slave

master_host:10.0.0.8

master_port:6379

master_link_status:up

master_last_io_seconds_ago:4

master_sync_in_progress:0

slave_repl_offset:140

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:8fdca730a2ae48fb9c8b7e739dcd2efcc76794f3

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:140

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:99

repl_backlog_histlen:42

配置 slave2

[root@redis-slave2 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> REPLICAOF 10.0.0.8 6379

OK

127.0.0.1:6379> CONFIG SET masterauth "123456"

OK

127.0.0.1:6379> INFO replication

# Replication

role:slave

master_host:10.0.0.8

master_port:6379

master_link_status:up

master_last_io_seconds_ago:3

master_sync_in_progress:0

slave_repl_offset:182

slave_priority:100

1.1.4 编辑哨兵配置

sentinel配置

Sentinel实际上是一个特殊的redis服务器,有些redis指令支持,但很多指令并不支持.默认监听在

26379/tcp端口.

哨兵服务可以和Redis服务器分开部署在不同主机,但为了节约成本一般会部署在一起

所有redis节点使用相同的以下示例的配置文件

#如果是编译安装,在源码目录有sentinel.conf,复制到安装目录即可,

如:/apps/redis/etc/sentinel.conf

[root@centos8 ~]#cp redis-6.2.5/sentinel.conf /apps/redis/etc/sentinel.conf

[root@centos8 ~]#chown redis.redis /apps/redis/etc/sentinel.conf

[root@centos8 ~]#vim /etc/redis-sentinel.conf

bind 0.0.0.0

port 26379

daemonize yes

pidfile "redis-sentinel.pid"

logfile "sentinel_26379.log"

dir "/tmp" #工作目录

sentinel monitor mymaster 10.0.0.8 6379 2

#mymaster是集群的名称,此行指定当前mymaster集群中master服务器的地址和端口

#2为法定人数限制(quorum),即有几个sentinel认为master down了就进行故障转移,一般此值是所有

sentinel节点(一般总数是>=3的 奇数,如:3,5,7等)的一半以上的整数值,比如,总数是3,即3/2=1.5,

取整为2,是master的ODOWN客观下线的依据

sentinel auth-pass mymaster 123456

#mymaster集群中master的密码,注意此行要在上面行的下面

sentinel down-after-milliseconds mymaster 30000

#判断mymaster集群中所有节点的主观下线(SDOWN)的时间,单位:毫秒,建议3000

sentinel parallel-syncs mymaster 1

#发生故障转移后,可以同时向新master同步数据的slave的数量,数字越小总同步时间越长,但可以减轻新

master的负载压力

sentinel failover-timeout mymaster 180000

#所有slaves指向新的master所需的超时时间,单位:毫秒

sentinel deny-scripts-reconfig yes #禁止修改脚本

logfile /var/log/redis/sentinel.log

三个哨兵服务器的配置都如下

[root@redis-master ~]#grep -vE "^#|^$" /etc/redis-sentinel.conf

port 26379

daemonize no

pidfile "/var/run/redis-sentinel.pid"

logfile "/var/log/redis/sentinel.log"

dir "/tmp"

sentinel monitor mymaster 10.0.0.8 6379 2 #修改此行

sentinel auth-pass mymaster 123456 #增加此行

sentinel down-after-milliseconds mymaster 3000 #修改此行

sentinel parallel-syncs mymaster 1

sentinel failover-timeout mymaster 180000

sentinel deny-scripts-reconfig yes

#注意此行自动生成必须唯一,一般不需要修改,如果相同则修改此值需重启redis和sentinel服务

sentinel myid 50547f34ed71fd48c197924969937e738a39975b

.....

# Generated by CONFIG REWRITE

protected-mode no

supervised systemd

sentinel leader-epoch mymaster 0

sentinel known-replica mymaster 10.0.0.28 6379

sentinel known-replica mymaster 10.0.0.18 6379

sentinel current-epoch 0

[root@redis-master ~]#scp /etc/redis-sentinel.conf redis-slave1:/etc/

[root@redis-master ~]#scp /etc/redis-sentinel.conf redis-slave2:/etc/

1.1.5启动哨兵服务

将所有哨兵服务器都启动起来

#确保每个哨兵主机myid不同,如果相同,必须手动修改为不同的值

[root@redis-slave1 ~]#vim /etc/redis-sentinel.conf

sentinel myid 50547f34ed71fd48c197924969937e738a39975c

[root@redis-slave2 ~]#vim /etc/redis-sentinel.conf

sentinel myid 50547f34ed71fd48c197924969937e738a39975d

[root@redis-master ~]#systemctl enable --now redis-sentinel.service

[root@redis-slave1 ~]#systemctl enable --now redis-sentinel.service

[root@redis-slave2 ~]#systemctl enable --now redis-sentinel.service

如果是编译安装,在所有哨兵服务器执行下面操作启动哨兵

[root@redis-master ~]##vim /apps/redis/etc/sentinel.conf

bind 0.0.0.0

port 26379

daemonize yes

pidfile "redis-sentinel.pid"

Logfile "sentinel_26379.log"

dir "/apps/redis/data"

sentinel monitor mymaster 10.0.0.8 6379 2

sentinel auth-pass mymaster 123456

sentinel down-after-milliseconds mymaster 15000

sentinel parallel-syncs mymaster 1

sentinel failover-timeout mymaster 180000

sentinel deny-scripts-reconfig yes

[root@redis-master ~]#/apps/redis/bin/redis-sentinel

/apps/redis/etc/sentinel.conf

#如果是编译安装,可以在所有节点生成新的service文件

[root@redis-master ~]#cat /lib/systemd/system/redis-sentinel.service

[Unit]

Description=Redis Sentinel

After=network.target

[Service]

ExecStart=/apps/redis/bin/redis-sentinel /apps/redis/etc/sentinel.conf --

supervised systemd

ExecStop=/bin/kill -s QUIT $MAINPID

User=redis

Group=redis

RuntimeDirectory=redis

RuntimeDirectoryMode=0755

[Install]

WantedBy=multi-user.target

#注意所有节点的目录权限,否则无法启动服务

[root@redis-master ~]#chown -R redis.redis /apps/redis/

1.1.6 验证哨兵服务

1.1.6.1 查看哨兵服务端口状态

[root@redis-master ~]#ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:26379 0.0.0.0:*

LISTEN 0 128 0.0.0.0:6379 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::]:26379 [::]:*

LISTEN 0 128 [::]:6379 [::]:*

1.1.6.2 查看哨兵日志

master的哨兵日志

[root@redis-master ~]#tail -f /var/log/redis/sentinel.log

38028:X 20 Feb 2020 17:13:08.702 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

38028:X 20 Feb 2020 17:13:08.702 # Redis version=5.0.3, bits=64,

commit=00000000, modified=0, pid=38028, just started

38028:X 20 Feb 2020 17:13:08.702 # Configuration loaded

38028:X 20 Feb 2020 17:13:08.702 * supervised by systemd, will signal readiness

38028:X 20 Feb 2020 17:13:08.703 * Running mode=sentinel, port=26379.

38028:X 20 Feb 2020 17:13:08.703 # WARNING: The TCP backlog setting of 511

cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value

of 128.

38028:X 20 Feb 2020 17:13:08.704 # Sentinel ID is

50547f34ed71fd48c197924969937e738a39975b

38028:X 20 Feb 2020 17:13:08.704 # +monitor master mymaster 10.0.0.8 6379 quorum

2

38028:X 20 Feb 2020 17:13:08.709 * +slave slave 10.0.0.28:6379 10.0.0.28 6379 @

mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:13:08.709 * +slave slave 10.0.0.18:6379 10.0.0.18 6379 @

mymaster 10.0.0.8 6379

slave的哨兵日志

[root@redis-slave1 ~]#tail -f /var/log/redis/sentinel.log

25509:X 20 Feb 2020 17:13:27.435 * Removing the pid file.

25509:X 20 Feb 2020 17:13:27.435 # Sentinel is now ready to exit, bye bye...

25572:X 20 Feb 2020 17:13:27.448 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

25572:X 20 Feb 2020 17:13:27.448 # Redis version=5.0.3, bits=64,

commit=00000000, modified=0, pid=25572, just started

25572:X 20 Feb 2020 17:13:27.448 # Configuration loaded

25572:X 20 Feb 2020 17:13:27.448 * supervised by systemd, will signal readiness

25572:X 20 Feb 2020 17:13:27.449 * Running mode=sentinel, port=26379.

25572:X 20 Feb 2020 17:13:27.449 # WARNING: The TCP backlog setting of 511

cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value

of 128.

25572:X 20 Feb 2020 17:13:27.449 # Sentinel ID is

50547f34ed71fd48c197924969937e738a39975b

25572:X 20 Feb 2020 17:13:27.449 # +monitor master mymaster 10.0.0.8 6379 quorum

2

1.1.6.3 当前sentinel状态

在sentinel状态中尤其是最后一行,涉及到masterIP是多少,有几个slave,有几个sentinels,必须是符合全部服务器数量

[root@redis-master ~]#redis-cli -p 26379

127.0.0.1:26379> INFO sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=10.0.0.8:6379,slaves=2,sentinels=3 #两个

slave,三个sentinel服务器,如果sentinels值不符合,检查myid可能冲突

1.1.7 停止Master 实现故障转移

1.1.7.1 停止 Master 节点

[root@redis-master ~]#killall redis-server

# 查看各节点上哨兵信息:

[root@redis-master ~]#redis-cli -a 123456 -p 26379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:26379> INFO sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=10.0.0.18:6379,slaves=2,sentinels=2

故障转移时sentinel的信息:

[root@redis-master ~]#tail -f /var/log/redis/sentinel.log

38028:X 20 Feb 2020 17:42:27.362 # +sdown master mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.418 # +odown master mymaster 10.0.0.8 6379 #quorum

2/2

38028:X 20 Feb 2020 17:42:27.418 # +new-epoch 1

38028:X 20 Feb 2020 17:42:27.418 # +try-failover master mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.419 # +vote-for-leader

50547f34ed71fd48c197924969937e738a39975b 1

38028:X 20 Feb 2020 17:42:27.422 # 50547f34ed71fd48c197924969937e738a39975d

voted for 50547f34ed71fd48c197924969937e738a39975b 1

38028:X 20 Feb 2020 17:42:27.475 # +elected-leader master mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.475 # +failover-state-select-slave master mymaster

10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.529 # +selected-slave slave 10.0.0.18:6379

10.0.0.18 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.529 * +failover-state-send-slaveof-noone slave

10.0.0.18:6379 10.0.0.18 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:27.613 * +failover-state-wait-promotion slave

10.0.0.18:6379 10.0.0.18 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.506 # +promoted-slave slave 10.0.0.18:6379

10.0.0.18 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.506 # +failover-state-reconf-slaves master mymaster

10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.582 * +slave-reconf-sent slave 10.0.0.28:6379

10.0.0.28 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.736 * +slave-reconf-inprog slave 10.0.0.28:6379

10.0.0.28 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.736 * +slave-reconf-done slave 10.0.0.28:6379

10.0.0.28 6379 @ mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.799 # +failover-end master mymaster 10.0.0.8 6379

38028:X 20 Feb 2020 17:42:28.799 # +switch-master mymaster 10.0.0.8 6379

10.0.0.18 6379

38028:X 20 Feb 2020 17:42:28.799 * +slave slave 10.0.0.28:6379 10.0.0.28 6379 @

mymaster 10.0.0.18 6379

38028:X 20 Feb 2020 17:42:28.799 * +slave slave 10.0.0.8:6379 10.0.0.8 6379 @

mymaster 10.0.0.18 6379

38028:X 20 Feb 2020 17:42:31.809 # +sdown slave 10.0.0.8:6379 10.0.0.8 6379 @

mymaster 10.0.0.18 6379

1.1.7.2 验证故障转移

故障转移后redis.conf中的replicaof行的master IP会被修改

[root@redis-slave2 ~]#grep ^replicaof /etc/redis.conf

replicaof 10.0.0.18 6379

哨兵配置文件的sentinel monitor IP 同样也会被修改

[root@redis-slave1 ~]#grep "^[a-Z]" /etc/redis-sentinel.conf

port 26379

daemonize no

pidfile "/var/run/redis-sentinel.pid"

logfile "/var/log/redis/sentinel.log"

dir "/tmp"

sentinel myid 50547f34ed71fd48c197924969937e738a39975b

sentinel deny-scripts-reconfig yes

sentinel monitor mymaster 10.0.0.18 6379 2 #自动修改此行

sentinel down-after-milliseconds mymaster 3000

sentinel auth-pass mymaster 123456

sentinel config-epoch mymaster 1

protected-mode no

supervised systemd

sentinel leader-epoch mymaster 1

sentinel known-replica mymaster 10.0.0.8 6379

sentinel known-replica mymaster 10.0.0.28 6379

sentinel known-sentinel mymaster 10.0.0.28 26379

50547f34ed71fd48c197924969937e738a39975d

sentinel current-epoch 1

[root@redis-slave2 ~]#grep "^[a-Z]" /etc/redis-sentinel.conf

port 26379

daemonize no

pidfile "/var/run/redis-sentinel.pid"

logfile "/var/log/redis/sentinel.log"

dir "/tmp"

sentinel myid 50547f34ed71fd48c197924969937e738a39975d

sentinel deny-scripts-reconfig yes

sentinel monitor mymaster 10.0.0.18 6379 2 #自动修改此行

sentinel down-after-milliseconds mymaster 3000

sentinel auth-pass mymaster 123456

sentinel config-epoch mymaster 1

protected-mode no

supervised systemd

sentinel leader-epoch mymaster 1

sentinel known-replica mymaster 10.0.0.28 6379

sentinel known-replica mymaster 10.0.0.8 6379

sentinel known-sentinel mymaster 10.0.0.8 26379

50547f34ed71fd48c197924969937e738a39975b

sentinel current-epoch 1

1.1.7.3 验证 Redis 各节点状态

新的master 状态

[root@redis-slave1 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> INFO replication

# Replication

role:master #提升为master

connected_slaves:1

slave0:ip=10.0.0.28,port=6379,state=online,offset=56225,lag=1

master_replid:75e3f205082c5a10824fbe6580b6ad4437140b94

master_replid2:b2fb4653bdf498691e5f88519ded65b6c000e25c

master_repl_offset:56490

second_repl_offset:46451

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:287

repl_backlog_histlen:56204

另一个slave指向新的master

[root@redis-slave2 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> INFO replication

# Replication

role:slave

master_host:10.0.0.18 #指向新的master

master_port:6379

master_link_status:up

master_last_io_seconds_ago:0

master_sync_in_progress:0

slave_repl_offset:61029

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:75e3f205082c5a10824fbe6580b6ad4437140b94

master_replid2:b2fb4653bdf498691e5f88519ded65b6c000e25c

master_repl_offset:61029

second_repl_offset:46451

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:61029

在原 master上观察状态

[root@redis-master ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> INFO replication

# Replication

role:slave

master_host:10.0.0.18

master_port:6379

master_link_status:up

master_last_io_seconds_ago:0

master_sync_in_progress:0

slave_repl_offset:764754

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:75e3f205082c5a10824fbe6580b6ad4437140b94

master_replid2:b2fb4653bdf498691e5f88519ded65b6c000e25c

master_repl_offset:764754

second_repl_offset:46451

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:46451

repl_backlog_histlen:718304

[root@redis-master ~]#redis-cli -p 26379

127.0.0.1:26379> INFO sentinel

# Sentinel

sentinel_masters:1

sentinel_tilt:0

sentinel_running_scripts:0

sentinel_scripts_queue_length:0

sentinel_simulate_failure_flags:0

master0:name=mymaster,status=ok,address=10.0.0.18:6379,slaves=2,sentinels=3

127.0.0.1:26379>

观察新master上状态和日志

[root@redis-slave1 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> INFO replication

# Replication

role:master

connected_slaves:2

slave0:ip=10.0.0.28,port=6379,state=online,offset=769027,lag=0

slave1:ip=10.0.0.8,port=6379,state=online,offset=769027,lag=0

master_replid:75e3f205082c5a10824fbe6580b6ad4437140b94

master_replid2:b2fb4653bdf498691e5f88519ded65b6c000e25c

master_repl_offset:769160

second_repl_offset:46451

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:287

repl_backlog_histlen:768874

127.0.0.1:6379>

[root@redis-slave1 ~]#tail -f /var/log/redis/sentinel.log

25717:X 20 Feb 2020 17:42:33.757 # +sdown slave 10.0.0.8:6379 10.0.0.8 6379 @

mymaster 10.0.0.18 6379

25717:X 20 Feb 2020 18:41:29.566 # -sdown slave 10.0.0.8:6379 10.0.0.8 6379 @

mymaster 10.0.0.18 6379

1.1.7.4 原master重新加入Redis集群

1.1.8 Sentinel 运维

[root@redis-master ~]#cat /etc/redis.conf

#sentinel会自动修改下面行指向新的master

replicaof 10.0.0.18 6379

手动让主节点下线(磁盘闪黄灯报警)

127.0.0.1:26379> sentinel failover

范例: 手动故障转移

[root@centos8 ~]#vim /etc/redis.conf

replica-priority 10 #指定优先级,值越小sentinel会优先将之选为新的master,默为值为100

[root@centos8 ~]#systemctl restart redis

#或者动态修改

[root@centos8 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> CONFIG GET replica-priority

1) "replica-priority"

2) "100"

127.0.0.1:6379> CONFIG SET replica-priority 99

OK

127.0.0.1:6379> CONFIG GET replica-priority

1) "replica-priority"

2) "99"

[root@centos8 ~]#redis-cli -p 26379

127.0.0.1:26379> sentinel failover mymaster

OK

1.2 集群

1.2.1 Redis Cluster 介绍

使用哨兵sentinel 只能解决Redis高可用问题,实现Redis的自动故障转移,但仍然无法解决Redis Master

单节点的性能瓶颈问题

为了解决单机性能的瓶颈,提高Redis 服务整体性能,可以使用分布式集群的解决方案

早期 Redis 分布式集群部署方案:

客户端分区:由客户端程序自己实现写入分配、高可用管理和故障转移等,对客户端的开发实现较为

复杂

代理服务:客户端不直接连接Redis,而先连接到代理服务,由代理服务实现相应读写分配,当前代

理服务都是第三方实现.此方案中客户端实现无需特殊开发,实现容易,但是代理服务节点仍存有单点

故障和性能瓶颈问题。比如:豌豆荚开发的 codis

Redis 3.0 版本之后推出无中心架构的 Redis Cluster ,支持多个master节点并行写入和故障的自动转移

动能.

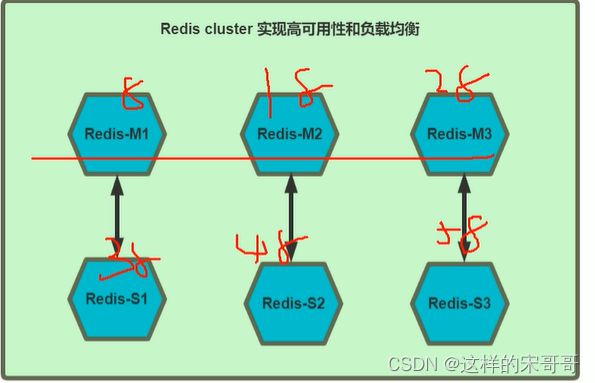

1.2.2 Redis cluster 架构

Redis cluster 需要至少 3个master节点才能实现,slave节点数量不限,当然一般每个master都至少对应的

有一个slave节点

如果有三个主节点采用哈希槽 hash slot 的方式来分配16384个槽位 slot

此三个节点分别承担的slot 区间可以是如以下方式分配

节点M1 0-5460

节点M2 5461-10922

节点M3 10923-16383

1.2.3 Redis cluster的工作原理

1.2.3.1 数据分区

如果是单机存储的话,直接将数据存放在单机redis就行了。但是如果是集群存储,就需要考虑到数据分

区了。

顺序分布保障了数据的有序性,但是离散性低,可能导致某个分区的数据热度高,其他分区数据的热度

低,分区访问不均衡。(偏斜)

哈希分布也分为多种分布方式,比如区域哈希分区,一致性哈希分区等。而redis cluster采用的是虚拟

槽分区的方式。

虚拟槽分区

redis cluster设置有0~16383的槽,每个槽映射一个数据子集,通过hash函数,将数据存放在不同的槽

位中,每个集群的节点保存一部分的槽。

每个key存储时,先经过哈希函数CRC16(key)得到一个整数,然后整数与16384取余,得到槽的数值,

然后找到对应的节点,将数据存放入对应的槽中。

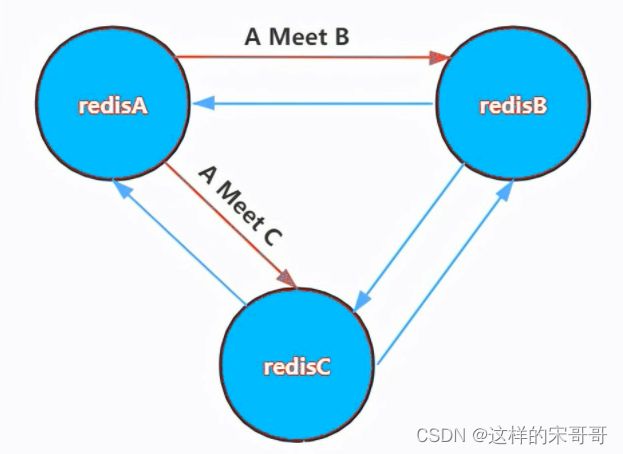

1.2.3.2 集群通信

但是寻找槽的过程并不是一次就命中的,比如上图key将要存放在14396槽中,但是并不是一下就锁定了

node3节点,可能先去询问node1,然后才访问node3。

而集群中节点之间的通信,保证了最多两次就能命中对应槽所在的节点。因为在每个节点中,都保存了

其他节点的信息,知道哪个槽由哪个节点负责。这样即使第一次访问没有命中槽,但是会通知客户端,

该槽在哪个节点,这样访问对应节点就能精准命中。

- 节点A对节点B发送一个meet操作,B返回后表示A和B之间能够进行沟通。

- 节点A对节点C发送meet操作,C返回后,A和C之间也能进行沟通。

- 然后B根据对A的了解,就能找到C,B和C之间也建立了联系。

- 直到所有节点都能建立联系。

这样每个节点都能互相知道对方负责哪些槽。

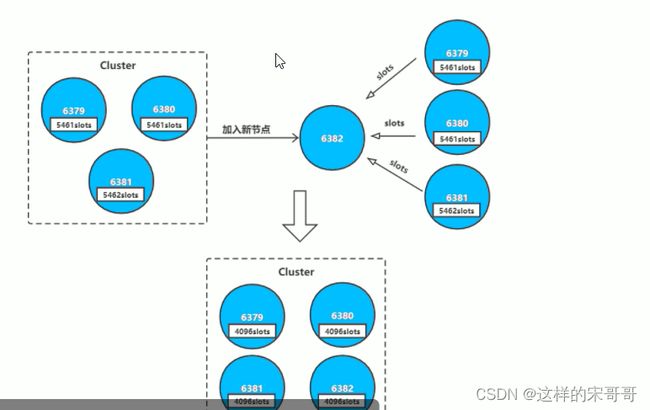

1.2.3.3 集群伸缩

集群并不是建立之后,节点数就固定不变的,也会有新的节点加入集群或者集群中的节点下线,这就是

集群的扩容和缩容。但是由于集群节点和槽息息相关,所以集群的伸缩也对应了槽和数据的迁移

集群扩容

当有新的节点准备好加入集群时,这个新的节点还是孤立节点,加入有两种方式。一个是通过集群节点

执行命令来和孤立节点握手,另一个则是使用脚本来添加节点。

- cluster_node_ip:port: cluster meet ip port new_node_ip:port

- redis-trib.rb add-node new_node_ip:port cluster_node_ip:port

通常这个新的节点有两种身份,要么作为主节点,要么作为从节点:

- 主节点:分摊槽和数据

- 从节点:作故障转移备份

- 判断该节点是否持有槽,如果未持有槽就跳转到下一步,持有槽则先迁移槽到其他节点

- 通知其他节点(cluster forget)忘记该下线节点

- 关闭下线节点的服务

需要注意的是如果先下线主节点,再下线从节点,会进行故障转移,所以要先下线从节点。

1.2.3.4 故障转移

除了手动下线节点外,也会面对突发故障。下面提到的主要是主节点的故障,因为从节点的故障并不影

响主节点工作,对应的主节点只会记住自己哪个从节点下线了,并将信息发送给其他节点。故障的从节

点重连后,继续官复原职,复制主节点的数据。

只有主节点才需要进行故障转移。在之前学习主从复制时,我们需要使用redis sentinel来实现故障转

移。而redis cluster则不需要redis sentinel,其自身就具备了故障转移功能。

根据前面我们了解到,节点之间是会进行通信的,节点之间通过ping/pong交互消息,所以借此就能发

现故障。集群节点发现故障同样是有主观下线和客观下线的

主观下线

对于每个节点有一个故障列表,故障列表维护了当前节点接收到的其他所有节点的信息。当半数以上的

持有槽的主节点都标记某个节点主观下线,就会尝试客观下线。

客观下线

故障转移

集群同样具备了自动转移故障的功能,和哨兵有些类似,在进行客观下线之后,就开始准备让故障节点

的从节点“上任”了。

首先是进行资格检查,只有具备资格的从节点才能参加选举:

- 故障节点的所有从节点检查和故障主节点之间的断线时间

- 超过cluster-node-timeout * cluster-slave-validati-factor(默认10)则取消选举资格

然后是准备选举顺序,不同偏移量的节点,参与选举的顺位不同。offset最大的slave节点,选举顺位最

高,最优先选举。而offset较低的slave节点,要延迟选举。

当有从节点参加选举后,主节点收到信息就开始投票。偏移量最大的节点,优先参与选举就更大可能获

得最多的票数,称为主节点。

当从节点走马上任变成主节点之后,就要开始进行替换主节点:

- 让该slave节点执行slaveof no one变为master节点

- 将故障节点负责的槽分配给该节点

- 向集群中其他节点广播Pong消息,表明已完成故障转移

- 故障节点重启后,会成为new_master的slave节点

1.2.3.4 Redis Cluster 部署架构说明

测试环境:3台服务器,每台服务器启动6379和6380两个redis 服务实例,适用于测试环境

生产环境:6台服务器,分别是三组master/slave,适用于生产环境

#集群节点

10.0.0.8

10.0.0.18

10.0.0.28

10.0.0.38

10.0.0.48

10.0.0.58

#预留服务器扩展使用

10.0.0.68

10.0.0.78

说明:Redis 5.X 和之前版本相比有较大变化,以下分别介绍两个版本5.X和4.X的配置

1.2.3.5 部署方式介绍

redis cluster 有多种部署方法

原生命令安装

理解Redis Cluster架构

生产环境不使用

官方工具安装

高效、准确

生产环境可以使用

自主研发

可以实现可视化的自动化部署

1.2.4 实战案例:基于Redis 5 以上版本的 redis cluster 部署

官方文档:https://redis.io/topics/cluster-tutorial

redis cluster 相关命令

范例: 查看 --cluster 选项帮助

[root@centos8 ~]#redis-cli --cluster help

范例: 查看CLUSTER 指令的帮助

[root@centos8 ~]#redis-cli CLUSTER HELP

1.2.4.1 创建 redis cluster集群的环境准备

每个Redis 节点采用相同的相同的Redis版本、相同的密码、硬件配置

所有Redis服务器必须没有任何数据

准备六台主机,地址如下:

10.0.0.8

10.0.0.18

10.0.0.28

10.0.0.38

10.0.0.48

10.0.0.58

1.2.4.2 启用 redis cluster 配置

所有6台主机都执行以下配置

[root@centos8 ~]#dnf -y install redis

每个节点修改redis配置,必须开启cluster功能的参数

#手动修改配置文件

[root@redis-node1 ~]vim /etc/redis.conf

bind 0.0.0.0

masterauth 123456 #建议配置,否则后期的master和slave主从复制无法成功,还需再配置

requirepass 123456

cluster-enabled yes #取消此行注释,必须开启集群,开启后 redis 进程会有cluster标识

cluster-config-file nodes-6379.conf #取消此行注释,此为集群状态数据文件,记录主从关系

及slot范围信息,由redis cluster 集群自动创建和维护

cluster-require-full-coverage no #默认值为yes,设为no可以防止一个节点不可用导致整

个cluster不可用

#或者执行下面命令,批量修改

[root@redis-node1 ~]#sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e

'/masterauth/a masterauth 123456' -e '/# requirepass/a requirepass 123456' -

e '/# cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file

nodes-6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require

full-coverage yes/c cluster-require-full-coverage no' /etc/redis.conf

#如果是编译安装可以执行下面操作

[root@redis-node1 ~]#sed -i.bak -e '/masterauth/a masterauth 123456' -e

'/# cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file

nodes-6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require

full-coverage yes/c cluster-require-full-coverage no'

/apps/redis/etc/redis.conf

[root@redis-node1 ~]#systemctl enable --now redis

验证当前Redis服务状态:

#开启了16379的cluster的端口,实际的端口=redis port + 10000

[root@centos8 ~]#ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 100 127.0.0.1:25 0.0.0.0:*

LISTEN 0 128 0.0.0.0:16379 0.0.0.0:*

LISTEN 0 128 0.0.0.0:6379 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

#注意进程有[cluster]状态

[root@centos8 ~]#ps -ef|grep redis

redis 1939 1 0 10:54 ? 00:00:00 /usr/bin/redis-server 0.0.0.0:6379

[cluster]

root 1955 1335 0 10:57 pts/0 00:00:00 grep --color=auto redis

1.2.4.3 创建集群

#命令redis-cli的选项 --cluster-replicas 1 表示每个master对应一个slave节点

[root@redis-node1 ~]#redis-cli -a 123456 --cluster create 10.0.0.8:6379

10.0.0.18:6379 10.0.0.28:6379 10.0.0.38:6379 10.0.0.48:6379

10.0.0.58:6379 --cluster-replicas 1

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.0.0.38:6379 to 10.0.0.8:6379

Adding replica 10.0.0.48:6379 to 10.0.0.18:6379

Adding replica 10.0.0.58:6379 to 10.0.0.28:6379

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379 #带M的为master

slots:[0-5460] (5461 slots) master #当前master的槽位起始

和结束位

M: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots:[5461-10922] (5462 slots) master

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[10923-16383] (5461 slots) master

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379 #带S的slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

S: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

replicates 99720241248ff0e4c6fa65c2385e92468b3b5993

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

replicates d34da8666a6f587283a1c2fca5d13691407f9462

Can I set the above configuration? (type 'yes' to accept): yes #输入yes自动创建集群

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

....

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[0-5460] (5461 slots) master #已经分配的槽位

1 additional replica(s) #分配了一个slave

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave #slave没有分配槽位

replicates d34da8666a6f587283a1c2fca5d13691407f9462 #对应的master的10.0.0.28的

ID

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7 #对应的master的10.0.0.8的ID

S: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots: (0 slots) slave

replicates 99720241248ff0e4c6fa65c2385e92468b3b5993 #对应的master的10.0.0.18的

ID

M: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration. #所有节点槽位分配完成

>>> Check for open slots... #检查打开的槽位

>>> Check slots coverage... #检查插槽覆盖范围

[OK] All 16384 slots covered. #所有槽位(16384个)分配完成

#观察以上结果,可以看到3组master/slave

master:10.0.0.8---slave:10.0.0.38

master:10.0.0.18---slave:10.0.0.48

master:10.0.0.28---slave:10.0.0.58

#如果节点少于3个会出下面提示错误

[root@node1 ~]#redis-cli -a 123456 --cluster create 10.0.0.8:6379

10.0.0.18:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

*** ERROR: Invalid configuration for cluster creation.

*** Redis Cluster requires at least 3 master nodes.

*** This is not possible with 2 nodes and 0 replicas per node.

*** At least 3 nodes are required.

1.2.4.4 验证集群

查看主从状态

[root@redis-node1 ~]#redis-cli -a 123456 -c INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:master

connected_slaves:1

slave0:ip=10.0.0.38,port=6379,state=online,offset=896,lag=1

master_replid:3a388865080d779180ff240cb75766e7e57877da

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:896

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:896

[root@redis-node2 ~]#redis-cli -a 123456 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:master

connected_slaves:1

slave0:ip=10.0.0.48,port=6379,state=online,offset=980,lag=1

master_replid:b9066d3cbf0c5fecc7f4d1d5cb2433999783fa3f

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:980

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:980

[root@redis-node3 ~]#redis-cli -a 123456 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:master

connected_slaves:1

slave0:ip=10.0.0.58,port=6379,state=online,offset=980,lag=0

master_replid:53208e0ed9305d721e2fb4b3180f75c689217902

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:980

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:980

[root@redis-node4 ~]#redis-cli -a 123456 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:slave

master_host:10.0.0.8

master_port:6379

master_link_status:up

master_last_io_seconds_ago:1

master_sync_in_progress:0

slave_repl_offset:1036

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:3a388865080d779180ff240cb75766e7e57877da

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1036

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1036

[root@redis-node5 ~]#redis-cli -a 123456 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:slave

master_host:10.0.0.18

master_port:6379

master_link_status:up

master_last_io_seconds_ago:2

master_sync_in_progress:0

slave_repl_offset:1064

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:b9066d3cbf0c5fecc7f4d1d5cb2433999783fa3f

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1064

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1064

[root@redis-node6 ~]#redis-cli -a 123456 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:slave

master_host:10.0.0.28

master_port:6379

master_link_status:up

master_last_io_seconds_ago:7

master_sync_in_progress:0

slave_repl_offset:1078

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:53208e0ed9305d721e2fb4b3180f75c689217902

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1078

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1078

范例: 查看指定master节点的slave节点信息

[root@centos8 ~]#redis-cli -a 123456 cluster nodes

4f146b1ac51549469036a272c60ea97f065ef832 10.0.0.28:6379@16379 master - 0

1602571565772 12 connected 10923-16383

779a24884dbe1ceb848a685c669ec5326e6c8944 10.0.0.48:6379@16379 slave

97c5dcc3f33c2fc75c7fdded25d05d2930a312c0 0 1602571565000 11 connected

97c5dcc3f33c2fc75c7fdded25d05d2930a312c0 10.0.0.18:6379@16379 master - 0

1602571564000 11 connected 5462-10922

07231a50043d010426c83f3b0788e6b92e62050f 10.0.0.58:6379@16379 slave

4f146b1ac51549469036a272c60ea97f065ef832 0 1602571565000 12 connected

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 10.0.0.8:6379@16379 myself,master - 0

1602571566000 10 connected 0-5461

cb20d58870fe05de8462787cf9947239f4bc5629 10.0.0.38:6379@16379 slave

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 0 1602571566780 10 connected

#以下命令查看指定master节点的slave节点信息,其中

#a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 为master节点的ID

[root@centos8 ~]#redis-cli -a 123456 cluster slaves

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab

1) "cb20d58870fe05de8462787cf9947239f4bc5629 10.0.0.38:6379@16379 slave

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 0 1602571574844 10 connected"

验证集群状态

[root@redis-node1 ~]#redis-cli -a 123456 CLUSTER INFO

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6 #节点数

cluster_size:3 #三个集群

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:837

cluster_stats_messages_pong_sent:811

cluster_stats_messages_sent:1648

cluster_stats_messages_ping_received:806

cluster_stats_messages_pong_received:837

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:1648

#查看任意节点的集群状态

[root@redis-node1 ~]#redis-cli -a 123456 --cluster info 10.0.0.38:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.18:6379 (99720241...) -> 0 keys | 5462 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 0 keys | 5461 slots | 1 slaves.

10.0.0.8:6379 (cb028b83...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

查看对应关系

[root@redis-node1 ~]#redis-cli -a 123456 CLUSTER NODES

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379@16379 slave

d34da8666a6f587283a1c2fca5d13691407f9462 0 1582344815790 6 connected

f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379@16379 slave

cb028b83f9dc463d732f6e76ca6bbcd469d948a7 0 1582344811000 4 connected

d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379@16379 slave

99720241248ff0e4c6fa65c2385e92468b3b5993 0 1582344815000 5 connected

99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379@16379 master - 0

1582344813000 2 connected 5461-10922

d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379@16379 master - 0

1582344814780 3 connected 10923-16383

cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379@16379 myself,master - 0

1582344813000 1 connected 0-5460

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.38:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.18:6379 (99720241...) -> 0 keys | 5462 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 0 keys | 5461 slots | 1 slaves.

10.0.0.8:6379 (cb028b83...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.38:6379)

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

S: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots: (0 slots) slave

replicates 99720241248ff0e4c6fa65c2385e92468b3b5993

M: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

1.2.4.5 测试集群写入数据

#经过算法计算,当前key的槽位需要写入指定的node

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.8 SET key1 values1

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

(error) MOVED 9189 10.0.0.18:6379 #槽位不在当前node所以无法写入

#指定槽位对应node可写入

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.18 SET key1 values1

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

OK

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.18 GET key1

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

"values1"

#对应的slave节点可以KEYS *,但GET key1失败,可以到master上执行GET key1

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.48 KEYS "*"

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

1) "key1"

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.48 GET key1

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

(error) MOVED 9189 10.0.0.18:6379

redis cluster 计算key所属的slot

[root@centos8 ~]#redis-cli -h 10.0.0.8 -a 123456 --no-auth-warning cluster

nodes

4f146b1ac51549469036a272c60ea97f065ef832 10.0.0.28:6379@16379 master - 0

1602561649000 12 connected 10923-16383

779a24884dbe1ceb848a685c669ec5326e6c8944 10.0.0.48:6379@16379 slave

97c5dcc3f33c2fc75c7fdded25d05d2930a312c0 0 1602561648000 11 connected

97c5dcc3f33c2fc75c7fdded25d05d2930a312c0 10.0.0.18:6379@16379 master - 0

1602561650000 11 connected 5462-10922

07231a50043d010426c83f3b0788e6b92e62050f 10.0.0.58:6379@16379 slave

4f146b1ac51549469036a272c60ea97f065ef832 0 1602561650229 12 connected

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 10.0.0.8:6379@16379 myself,master - 0

1602561650000 10 connected 0-5461

cb20d58870fe05de8462787cf9947239f4bc5629 10.0.0.38:6379@16379 slave

a177c5cbc2407ebb6230ea7e2a7de914bf8c2dab 0 1602561651238 10 connected

#计算得到hello对应的slot

[root@centos8 ~]#redis-cli -h 10.0.0.8 -a 123456 --no-auth-warning cluster

keyslot hello

(integer) 866

[root@centos8 ~]#redis-cli -h 10.0.0.8 -a 123456 --no-auth-warning set hello

magedu

OK

[root@centos8 ~]#redis-cli -h 10.0.0.8 -a 123456 --no-auth-warning cluster

keyslot name

(integer) 5798

[root@centos8 ~]#redis-cli -h 10.0.0.8 -a 123456 --no-auth-warning set name

wang

(error) MOVED 5798 10.0.0.18:6379

[root@centos8 ~]#redis-cli -h 10.0.0.18 -a 123456 --no-auth-warning set name

wang

OK

[root@centos8 ~]#redis-cli -h 10.0.0.18 -a 123456 --no-auth-warning get name

"wang"

#使用选项-c 以集群模式连接

[root@centos8 ~]#redis-cli -c -h 10.0.0.8 -a 123456 --no-auth-warning

10.0.0.8:6379> cluster keyslot linux

(integer) 12299

10.0.0.8:6379> set linux love

-> Redirected to slot [12299] located at 10.0.0.28:6379

OK

10.0.0.28:6379> get linux

"love"

10.0.0.28:6379> exit

[root@centos8 ~]#redis-cli -h 10.0.0.28 -a 123456 --no-auth-warning get linux

"love"

1.2.4.6 模拟故障实现故障转移

#模拟node2节点出故障,需要相应的数秒故障转移时间

[root@redis-node2 ~]#tail -f /var/log/redis/redis.log

[root@redis-node2 ~]#redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

127.0.0.1:6379> shutdown

not connected> exit

[root@redis-node2 ~]#ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 100 127.0.0.1:25 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@redis-node2 ~]# redis-cli -a 123456 --cluster info 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

Could not connect to Redis at 10.0.0.18:6379: Connection refused

10.0.0.8:6379 (cb028b83...) -> 3331 keys | 5461 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 3340 keys | 5462 slots | 0 slaves. #10.0.0.48为新

的master

10.0.0.28:6379 (d34da866...) -> 3329 keys | 5461 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

[root@redis-node2 ~]# redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

Could not connect to Redis at 10.0.0.18:6379: Connection refused

10.0.0.8:6379 (cb028b83...) -> 3331 keys | 5461 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 3340 keys | 5462 slots | 0 slaves.

10.0.0.28:6379 (d34da866...) -> 3329 keys | 5461 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[5461-10922] (5462 slots) master

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@redis-node2 ~]#redis-cli -a 123456 -h 10.0.0.48

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.48:6379> INFO replication

# Replication

role:master

connected_slaves:0

master_replid:0000698bc2c6452d8bfba68246350662ae41d8fd

master_replid2:b9066d3cbf0c5fecc7f4d1d5cb2433999783fa3f

master_repl_offset:2912424

second_repl_offset:2912425

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1863849

repl_backlog_histlen:1048576

10.0.0.48:6379>

#恢复故障节点node2自动成为slave节点

[root@redis-node2 ~]#systemctl start redis

#查看自动生成的配置文件,可以查看node2自动成为slave节点

[root@redis-node2 ~]#cat /var/lib/redis/nodes-6379.conf

99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379@16379 myself,slave

d04e524daec4d8e22bdada7f21a9487c2d3e1057 0 1582352081847 2 connected

f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379@16379 slave

cb028b83f9dc463d732f6e76ca6bbcd469d948a7 1582352081868 1582352081847 4 connected

cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379@16379 master -

1582352081868 1582352081847 1 connected 0-5460

9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379@16379 slave

d34da8666a6f587283a1c2fca5d13691407f9462 1582352081869 1582352081847 3 connected

d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379@16379 master -

1582352081869 1582352081847 7 connected 5461-10922

d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379@16379 master -

1582352081869 1582352081847 3 connected 10923-16383

vars currentEpoch 7 lastVoteEpoch 0

[root@redis-node2 ~]#redis-cli -a 123456 -h 10.0.0.48

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.48:6379> INFO replication

# Replication

role:master

connected_slaves:1

slave0:ip=10.0.0.18,port=6379,state=online,offset=2912564,lag=1

master_replid:0000698bc2c6452d8bfba68246350662ae41d8fd

master_replid2:b9066d3cbf0c5fecc7f4d1d5cb2433999783fa3f

master_repl_offset:2912564

second_repl_offset:2912425

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1863989

repl_backlog_histlen:1048576

10.0.0.48:6379>

1.2.5 Redis cluster 管理

1.2.5.1 集群扩容(不支持多数据库了)

扩容适用场景:

当前客户量激增,现有的Redis cluster架构已经无法满足越来越高的并发访问请求,为解决此问题,新购

置两台服务器,要求将其动态添加到现有集群,但不能影响业务的正常访问。

注意: 生产环境一般建议master节点为奇数个,比如:3,5,7,以防止脑裂现象

添加节点准备

增加Redis 新节点,需要与之前的Redis node版本和配置一致,然后分别再启动两台Redis node,应为

一主一从。

#配置node7节点

[root@redis-node7 ~]#dnf -y install redis

[root@redis-node7 ~]#sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e

'/masterauth/a masterauth 123456' -e '/# requirepass/a requirepass 123456' -e '/#

cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-

6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full

coverage yes/c cluster-require-full-coverage no' /etc/redis.conf

#编译安装执行下面操作

[root@redis-node7 ~]#sed -i.bak -e '/masterauth/a masterauth 123456' -e '/#

cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-

6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full

coverage yes/c cluster-require-full-coverage no'

/apps/redis/etc/redis.conf;systemctl restart redis

[root@redis-node7 ~]#systemctl enable --now redis

#配置node8节点

[root@redis-node8 ~]#dnf -y install redis

[root@redis-node8 ~]#sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e

'/masterauth/a masterauth 123456' -e '/# requirepass/a requirepass 123456' -e '/#

cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-

6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full

coverage yes/c cluster-require-full-coverage no' /etc/redis.conf

#编译安装执行下面操作

[root@redis-node8 ~]#sed -i.bak -e '/masterauth/a masterauth 123456' -e '/#

cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-

6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full

coverage yes/c cluster-require-full-coverage no'

/apps/redis/etc/redis.conf;systemctl restart redis

[root@redis-node8 ~]#systemctl enable --now redis

添加新的master节点到集群

使用以下命令添加新节点,要添加的新redis节点IP和端口添加到的已有的集群中任意节点的IP:端口

add-node new_host:new_port existing_host:existing_port [--slave --master-id

<arg>]

#说明:

new_host:new_port #指定新添加的主机的IP和端口

existing_host:existing_port #指定已有的集群中任意节点的IP和端口

Redis 5 以上版本的添加命令:

#将一台新的主机10.0.0.68加入集群,以下示例中10.0.0.58可以是任意存在的集群节点

[root@redis-node1 ~]#redis-cli -a 123456 --cluster add-node 10.0.0.68:6379 <当前

任意集群节点>:6379

#观察到该节点已经加入成功,但此节点上没有slot位,也无从节点,而且新的节点是master

[root@redis-node1 ~]#redis-cli -a 123456 --cluster info 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.8:6379 (cb028b83...) -> 6672 keys | 5461 slots | 1 slaves.

10.0.0.68:6379 (d6e2eca6...) -> 0 keys | 0 slots | 0 slaves.

10.0.0.48:6379 (d04e524d...) -> 6679 keys | 5462 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 6649 keys | 5461 slots | 1 slaves.

[OK] 20000 keys in 5 masters.

1.22 keys per slot on average.

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

[root@redis-node1 ~]#cat /var/lib/redis/nodes-6379.conf

#和上面显示结果一样

[root@redis-node1 ~]#redis-cli -a 123456 CLUSTER NODES

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

#查看集群状态

[root@redis-node1 ~]#redis-cli -a 123456 CLUSTER INFO

Warning: Using a password with '-a' or '-u' option on the command line interface

在新的master上重新分配槽位

新的node节点加到集群之后,默认是master节点,但是没有slots,需要重新分配,否则没有槽位将无法访

问

注意: 重新分配槽位需要清空数据,所以需要先备份数据,扩展后再恢复数据

Redis 5以上版本命令:(计算16384/总节点数槽位、新的masterID、从原有哪几个节点分担槽点)

[root@redis-node1 ~]#redis-cli -a 123456 --cluster reshard <当前任意集群节点>:6379

Warning: Using a password with ‘-a’ or ‘-u’ option on the command line interface

may not be safe.Performing Cluster Check (using node 10.0.0.68:6379)

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots: (0 slots) master

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

M: f67f1c02c742cd48d3f48d8c362f9f1b9aa31549 10.0.0.78:6379

slots: (0 slots) master

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

[OK] All nodes agree about slots configuration.

Check for open slots…

Check slots coverage…

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096 #新分配多少个槽位=16384/master个数

What is the receiving nodeIDd6e2eca6b338b717923f64866bd31d42e52edc98 #新的

master的ID

Please enter all the source node IDs.

Type ‘all’ to use all the nodes as source nodes for the hash slots.

Type ‘done’ once you entered all the source nodes IDs.

Source node #1: all #输入all,将哪些源主机的槽位分配给新的节点,all是自动在所有的redis node选择划分,如果是从redis cluster删除某个主机可以使用此方式将指定主机上的槽位全部移动到别的redis主机

…

Do you want to proceed with the proposed reshard plan (yes/no)? yes #确认分配

…

Moving slot 12280 from 10.0.0.28:6379 to 10.0.0.68:6379: .

Moving slot 12281 from 10.0.0.28:6379 to 10.0.0.68:6379: .

Moving slot 12282 from 10.0.0.28:6379 to 10.0.0.68:6379:

Moving slot 12283 from 10.0.0.28:6379 to 10.0.0.68:6379: …

Moving slot 12284 from 10.0.0.28:6379 to 10.0.0.68:6379:

Moving slot 12285 from 10.0.0.28:6379 to 10.0.0.68:6379: .

Moving slot 12286 from 10.0.0.28:6379 to 10.0.0.68:6379:

Moving slot 12287 from 10.0.0.28:6379 to 10.0.0.68:6379: …

[root@redis-node1 ~]#

#确定slot分配成功

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with ‘-a’ or ‘-u’ option on the command line interface

may not be safe.

为新的master指定新的slave节点

当前Redis集群中新的master节点存单点问题,还需要给其添加一个对应slave节点,实现高可用功能

有两种方式:

方法1:在新加节点到集群时,直接将之设置为slave

redis-cli -a 123456 --cluster add-node 10.0.0.78:6379 <任意集群节点>:6379 --

cluster-slave --cluster-master-id d6e2eca6b338b717923f64866bd31d42e52edc98

#范例:

#查看当前状态

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.8:6379 (cb028b83...) -> 5019 keys | 4096 slots | 1 slaves.

10.0.0.68:6379 (d6e2eca6...) -> 4948 keys | 4096 slots | 0 slaves.

10.0.0.48:6379 (d04e524d...) -> 5033 keys | 4096 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 5000 keys | 4096 slots | 1 slaves.

[OK] 20000 keys in 4 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

#直接加为slave节点

[root@redis-node1 ~]#redis-cli -a 123456 --cluster add-node 10.0.0.78:6379

10.0.0.8:6379 --cluster-slave --cluster-master-id

d6e2eca6b338b717923f64866bd31d42e52edc98

#验证是否成功

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.8:6379 (cb028b83...) -> 5019 keys | 4096 slots | 1 slaves.

10.0.0.68:6379 (d6e2eca6...) -> 4948 keys | 4096 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 5033 keys | 4096 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 5000 keys | 4096 slots | 1 slaves.

[OK] 20000 keys in 4 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@centos8 ~]#redis-cli -a 123456 -h 10.0.0.8 --no-auth-warning cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:8 #8个节点

cluster_size:4 #4组主从

cluster_current_epoch:11

cluster_my_epoch:10

cluster_stats_messages_ping_sent:1810

cluster_stats_messages_pong_sent:1423

cluster_stats_messages_auth-req_sent:5

cluster_stats_messages_update_sent:14

cluster_stats_messages_sent:3252

cluster_stats_messages_ping_received:1417

cluster_stats_messages_pong_received:1368

cluster_stats_messages_meet_received:2

cluster_stats_messages_fail_received:2

cluster_stats_messages_auth-ack_received:2

cluster_stats_messages_update_received:4

cluster_stats_messages_received:2795

方法2:先将新节点加入集群,再修改为slave

为新的master添加slave节点

Redis 5 以上版本命令:

#把10.0.0.78:6379添加到集群中:

[root@redis-node1 ~]#redis-cli -a 123456 --cluster add-node 10.0.0.78:6379

10.0.0.8:6379

更改新节点更改状态为slave:

需要手动将其指定为某个master的slave,否则其默认角色为master。

[root@redis-node1 ~]#redis-cli -h 10.0.0.78 -p 6379 -a 123456 #登录到新添加节点

10.0.0.78:6380> CLUSTER NODES #查看当前集群节点,找到目标master 的ID

10.0.0.78:6380> CLUSTER REPLICATE 886338acd50c3015be68a760502b239f4509881c #将其设

置slave,命令格式为cluster replicate MASTERID

10.0.0.78:6380> CLUSTER NODES #再次查看集群节点状态,验证节点是否已经更改为指定master 的

slave

1.2.5.2 集群缩容

缩容适用场景:

随着业务萎缩用户量下降明显,和领导商量决定将现有Redis集群的8台主机中下线两台主机挪做它用,缩容

后性能仍能满足当前业务需求

删除节点过程:

扩容时是先添加node到集群,然后再分配槽位,而缩容时的操作相反,是先将被要删除的node上的槽位迁移到集群中的其他node上,然后 才能再将其从集群中删除,如果一个node上的槽位没有被完全迁移空,删除该node时也会提示有数据出错导致无法删除。

迁移要删除的master节点上面的槽位到其它master

注意: 被迁移Redis master源服务器必须保证没有数据,否则迁移报错并会被强制中断。

Redis 5版本以上命令–取之于民,还之于民(多出来的4096不能直接写,因为移动目标只能操作对象只能是一个,所以移3次每次移到不同对象,槽位为多出来4096\原主节点)

#查看当前状态

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.8:6379 (cb028b83...) -> 5019 keys | 4096 slots | 1 slaves.

10.0.0.68:6379 (d6e2eca6...) -> 4948 keys | 4096 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 5033 keys | 4096 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 5000 keys | 4096 slots | 1 slaves.

[OK] 20000 keys in 4 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

#连接到任意集群节点,#最后1365个slot从10.0.0.8移动到第一个master节点10.0.0.28上

[root@redis-node1 ~]#redis-cli -a 123456 --cluster reshard 10.0.0.18:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

>>> Performing Cluster Check (using node 10.0.0.18:6379)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates cb028b83f9dc463d732f6e76ca6bbcd469d948a7

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1356 #共4096/3分别给其它三个

master节点

What is the receiving node ID? d34da8666a6f587283a1c2fca5d13691407f9462 #master

10.0.0.28

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 #输入要删除节点10.0.0.8的

ID

Source node #2: done

Ready to move 1356 slots.

Source nodes:

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

Destination node:

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 1365 from cb028b83f9dc463d732f6e76ca6bbcd469d948a7

......

Moving slot 2719 from cb028b83f9dc463d732f6e76ca6bbcd469d948a7

Moving slot 2720 from cb028b83f9dc463d732f6e76ca6bbcd469d948a7

Do you want to proceed with the proposed reshard plan (yes/no)? yes #确定

......

Moving slot 2718 from 10.0.0.8:6379 to 10.0.0.28:6379: ..

Moving slot 2719 from 10.0.0.8:6379 to 10.0.0.28:6379: .

Moving slot 2720 from 10.0.0.8:6379 to 10.0.0.28:6379: ..

#非交互式方式

#再将1365个slot从10.0.0.8移动到第二个master节点10.0.0.48上

[root@redis-node1 ~]#redis-cli -a 123456 --cluster reshard 10.0.0.18:6379 --

cluster-slots 1365 --cluster-from cb028b83f9dc463d732f6e76ca6bbcd469d948a7 --

cluster-to d04e524daec4d8e22bdada7f21a9487c2d3e1057 --cluster-yes

#最后的slot从10.0.0.8移动到第三个master节点10.0.0.68上

[root@redis-node1 ~]#redis-cli -a 123456 --cluster reshard 10.0.0.18:6379 --

cluster-slots 1375 --cluster-from cb028b83f9dc463d732f6e76ca6bbcd469d948a7 --

cluster-to d6e2eca6b338b717923f64866bd31d42e52edc98 --cluster-yes

#确认10.0.0.8的所有slot都移走了,上面的slave也自动删除,成为其它master的slave

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.8:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.8:6379 (cb028b83...) -> 0 keys | 0 slots | 0 slaves.

10.0.0.68:6379 (d6e2eca6...) -> 6631 keys | 5471 slots | 2 slaves.

10.0.0.48:6379 (d04e524d...) -> 6694 keys | 5461 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 6675 keys | 5452 slots | 1 slaves.

[OK] 20000 keys in 4 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.8:6379)

M: cb028b83f9dc463d732f6e76ca6bbcd469d948a7 10.0.0.8:6379

slots: (0 slots) master

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[4086-6826],[10923-12287] (5471 slots) master

2 additional replica(s)

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[2721-4085],[6827-10922] (5461 slots) master

1 additional replica(s)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[1365-2720],[12288-16383] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

#原有的10.0.0.38自动成为10.0.0.68的slave

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.68 INFO replication

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

# Replication

role:master

connected_slaves:2

slave0:ip=10.0.0.78,port=6379,state=online,offset=129390,lag=0

slave1:ip=10.0.0.38,port=6379,state=online,offset=129390,lag=0

master_replid:43e3e107a0acb1fd5a97240fc4b2bd8fc85b113f

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:129404

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:129404

[root@centos8 ~]#redis-cli -a 123456 -h 10.0.0.8 --no-auth-warning cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:8 #集群中8个节点

cluster_size:3 #少了一个主从的slot

cluster_current_epoch:16

cluster_my_epoch:13

cluster_stats_messages_ping_sent:3165

cluster_stats_messages_pong_sent:2489

cluster_stats_messages_fail_sent:6

cluster_stats_messages_auth-req_sent:5

cluster_stats_messages_auth-ack_sent:1

cluster_stats_messages_update_sent:27

cluster_stats_messages_sent:5693

cluster_stats_messages_ping_received:2483

cluster_stats_messages_pong_received:2400

cluster_stats_messages_meet_received:2

cluster_stats_messages_fail_received:2

cluster_stats_messages_auth-req_received:1

cluster_stats_messages_auth-ack_received:2

cluster_stats_messages_update_received:4

cluster_stats_messages_received:4894

从集群中删除服务器

上面步骤完成后,槽位已经迁移走,但是节点仍然还属于集群成员,因此还需从集群删除该节点

注意: 删除服务器前,必须清除主机上面的槽位,否则会删除主机失败

Redis 5以上版本命令:

[root@redis-node1 ~]#redis-cli -a 123456 --cluster del-node <任意集群节点的IP>:6379

cb028b83f9dc463d732f6e76ca6bbcd469d948a7

#cb028b83f9dc463d732f6e76ca6bbcd469d948a7是删除节点的ID

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

>>> Removing node cb028b83f9dc463d732f6e76ca6bbcd469d948a7 from cluster

10.0.0.8:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

#删除节点后,redis进程自动关闭

#删除节点信息

[root@redis-node1 ~]#rm -f /var/lib/redis/nodes-6379.conf

删除多余的slave节点验证结果

#验证删除成功

[root@redis-node1 ~]#ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.18:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.68:6379 (d6e2eca6...) -> 6631 keys | 5471 slots | 2 slaves.

10.0.0.48:6379 (d04e524d...) -> 6694 keys | 5461 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 6675 keys | 5452 slots | 1 slaves.

[OK] 20000 keys in 3 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.18:6379)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

S: f9adcfb8f5a037b257af35fa548a26ffbadc852d 10.0.0.38:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[4086-6826],[10923-12287] (5471 slots) master

2 additional replica(s)

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[2721-4085],[6827-10922] (5461 slots) master

1 additional replica(s)

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[1365-2720],[12288-16383] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

#删除多余的slave从节点

[root@redis-node1 ~]#redis-cli -a 123456 --cluster del-node 10.0.0.18:6379

f9adcfb8f5a037b257af35fa548a26ffbadc852d

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

>>> Removing node f9adcfb8f5a037b257af35fa548a26ffbadc852d from cluster

10.0.0.18:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

#删除集群文件

[root@redis-node4 ~]#rm -f /var/lib/redis/nodes-6379.conf

[root@redis-node1 ~]#redis-cli -a 123456 --cluster check 10.0.0.18:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.68:6379 (d6e2eca6...) -> 6631 keys | 5471 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 6694 keys | 5461 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 6675 keys | 5452 slots | 1 slaves.

[OK] 20000 keys in 3 masters.

1.22 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.18:6379)

S: 99720241248ff0e4c6fa65c2385e92468b3b5993 10.0.0.18:6379

slots: (0 slots) slave

replicates d04e524daec4d8e22bdada7f21a9487c2d3e1057

S: 36840d7eea5835ba540d9b64ec018aa3f8de6747 10.0.0.78:6379

slots: (0 slots) slave

replicates d6e2eca6b338b717923f64866bd31d42e52edc98

M: d6e2eca6b338b717923f64866bd31d42e52edc98 10.0.0.68:6379

slots:[0-1364],[4086-6826],[10923-12287] (5471 slots) master

1 additional replica(s)

S: 9875b50925b4e4f29598e6072e5937f90df9fc71 10.0.0.58:6379

slots: (0 slots) slave

replicates d34da8666a6f587283a1c2fca5d13691407f9462

M: d04e524daec4d8e22bdada7f21a9487c2d3e1057 10.0.0.48:6379

slots:[2721-4085],[6827-10922] (5461 slots) master

1 additional replica(s)

M: d34da8666a6f587283a1c2fca5d13691407f9462 10.0.0.28:6379

slots:[1365-2720],[12288-16383] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@redis-node1 ~]#redis-cli -a 123456 --cluster info 10.0.0.18:6379

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

10.0.0.68:6379 (d6e2eca6...) -> 6631 keys | 5471 slots | 1 slaves.

10.0.0.48:6379 (d04e524d...) -> 6694 keys | 5461 slots | 1 slaves.

10.0.0.28:6379 (d34da866...) -> 6675 keys | 5452 slots | 1 slaves.

[OK] 20000 keys in 3 masters.

1.22 keys per slot on average.

#查看集群信息

[root@redis-node1 ~]#redis-cli -a 123456 -h 10.0.0.18 CLUSTER INFO

Warning: Using a password with '-a' or '-u' option on the command line interface

may not be safe.

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6 #只有6个节点

cluster_size:3

cluster_current_epoch:11

cluster_my_epoch:10

cluster_stats_messages_ping_sent:12147

cluster_stats_messages_pong_sent:12274

cluster_stats_messages_update_sent:14

cluster_stats_messages_sent:24435

cluster_stats_messages_ping_received:12271

cluster_stats_messages_pong_received:12147

cluster_stats_messages_meet_received:3

cluster_stats_messages_update_received:28

cluster_stats_messages_received:24449

1.2.5.3 集群偏斜

redis cluster 多个节点运行一段时间后,可能会出现倾斜现象,某个节点数据偏多,内存消耗更大,或者接受

用户请求访问更多

发生倾斜的原因可能如下:

- 节点和槽分配不均

- 不同槽对应键值数量差异较大

- 包含bigkey,建议少用

- 内存相关配置不一致

- 热点数据不均衡 : 一致性不高时,可以使用本缓存和MQ

获取指定槽位中对应键key值的个数

#redis-cli cluster countkeysinslot {slot的值}

范例: 获取指定slot对应的key个数

[root@centos8 ~]#redis-cli -a 123456 cluster countkeysinslot 1

(integer) 0

[root@centos8 ~]#redis-cli -a 123456 cluster countkeysinslot 2

(integer) 0

[root@centos8 ~]#redis-cli -a 123456 cluster countkeysinslot 3

(integer) 1

执行自动的槽位重新平衡分布,但会影响客户端的访问,此方法慎用