6. Upgrading, Backing Up and Maintaining a K8S Cluster, and Its Resource Objects

1. Upgrading the Kubernetes Cluster

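Notes:

- Patch-level upgrades (such as 1.21.0 to 1.21.5 in this article) usually cause few problems.

- Larger version upgrades must be preceded by thorough compatibility work and testing.

A rolling upgrade is recommended:

- Master nodes:

  - First remove one master from the kube-lb on every node, upgrade it, then add it back.

  - Then remove the remaining two masters from the kube-lb on every node, upgrade them, and add them back.

- Worker nodes:

  - The upgrade requires stopping services, so upgrade the nodes one at a time.

  - Replace the kubelet and kube-proxy binaries, then restart the services promptly.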

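1.1 Upgrading the Master Nodes

1.1.1 Remove the master to be upgraded from kube-lb on the nodes (take the master offline)

On every node, remove the master being upgraded from the kube-lb upstream list: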

root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;

events {
    worker_connections 3000;
}

stream {
    upstream backend {
        server 192.168.6.81:6443 max_fails=2 fail_timeout=3s;
        #server 192.168.6.79:6443 max_fails=2 fail_timeout=3s;   # comment out the master being upgraded
        server 192.168.6.80:6443 max_fails=2 fail_timeout=3s;
    }

    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

root@node1:~# systemctl restart kube-lb.service   # restart the service so the change takes effect
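Editing the upstream block by hand on every node is easy to get wrong. Below is a minimal sketch that comments out one master's line and later restores it. It assumes each backend sits on its own `server <ip>:6443 ...;` line, as in the file above, and that GNU sed is available. `lb_master_offline` and `lb_master_online` are hypothetical helpers, not part of kubeasz.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for toggling one master in the kube-lb upstream list.
# Assumes one "server <ip>:6443 ...;" line per backend and GNU sed (-i, -E).

# Escape the dots of an IP so sed matches it literally.
_lb_ip_regex() {
  printf '%s' "$1" | sed 's/\./\\./g'
}

# lb_master_offline <ip> [conf]: comment out the server line for <ip>.
lb_master_offline() {
  local ip="$1" conf="${2:-/etc/kube-lb/conf/kube-lb.conf}" re
  re=$(_lb_ip_regex "$ip")
  sed -i -E "s|^([[:space:]]*)server[[:space:]]+${re}:6443|\1#server ${ip}:6443|" "$conf"
}

# lb_master_online <ip> [conf]: remove the leading '#' from the server line for <ip>.
lb_master_online() {
  local ip="$1" conf="${2:-/etc/kube-lb/conf/kube-lb.conf}" re
  re=$(_lb_ip_regex "$ip")
  sed -i -E "s|^([[:space:]]*)#[[:space:]]*server[[:space:]]+${re}:6443|\1server ${ip}:6443|" "$conf"
}
```

On each node you would run `lb_master_offline 192.168.6.79 && systemctl restart kube-lb` before the upgrade. After it, run `lb_master_online 192.168.6.79 && systemctl restart kube-lb`. Running the offline step twice is harmless, because an already commented line no longer matches.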

1.1.2 Replace the binaries on the offline master

Upgrading a master involves the following components:

- kube-apiserver

- kube-controller-manager

- kube-proxy

- kube-scheduler

- kubectl

- kubelet

1.1.2.1 Download the release packages for the target version, upload them to the server and extract them

root@master1:~/1.21.5# ls

kubernetes-client-linux-amd64.tar.gz kubernetes-node-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz kubernetes.tar.gz

root@master1:~/1.21.5# tar xf kubernetes-client-linux-amd64.tar.gz

root@master1:~/1.21.5# tar xf kubernetes-node-linux-amd64.tar.gz

root@master1:~/1.21.5# tar xf kubernetes-server-linux-amd64.tar.gz

root@master1:~/1.21.5# tar xf kubernetes.tar.gz

Location of the server-side binaries:

root@master1:~/1.21.5/kubernetes/server/bin# pwd

/root/1.21.5/kubernetes/server/bin

root@master1:~/1.21.5/kubernetes/server/bin# ./kube-apiserver --version

Kubernetes v1.21.5

root@master1:~/1.21.5/kubernetes/server/bin# ll

total 1075596

drwxr-xr-x 2 root root 4096 Sep 16 05:22 ./

drwxr-xr-x 3 root root 66 Sep 16 05:27 ../

-rwxr-xr-x 1 root root 50790400 Sep 16 05:22 apiextensions-apiserver*

-rwxr-xr-x 1 root root 48738304 Sep 16 05:22 kube-aggregator*

-rwxr-xr-x 1 root root 122322944 Sep 16 05:22 kube-apiserver*

-rw-r--r-- 1 root root 8 Sep 16 05:21 kube-apiserver.docker_tag

-rw------- 1 root root 127114240 Sep 16 05:21 kube-apiserver.tar

-rwxr-xr-x 1 root root 116359168 Sep 16 05:22 kube-controller-manager*

-rw-r--r-- 1 root root 8 Sep 16 05:21 kube-controller-manager.docker_tag

-rw------- 1 root root 121150976 Sep 16 05:21 kube-controller-manager.tar

-rwxr-xr-x 1 root root 43360256 Sep 16 05:22 kube-proxy*

-rw-r--r-- 1 root root 8 Sep 16 05:21 kube-proxy.docker_tag

-rw------- 1 root root 105362432 Sep 16 05:21 kube-proxy.tar

-rwxr-xr-x 1 root root 47321088 Sep 16 05:22 kube-scheduler*

-rw-r--r-- 1 root root 8 Sep 16 05:21 kube-scheduler.docker_tag

-rw------- 1 root root 52112384 Sep 16 05:21 kube-scheduler.tar

-rwxr-xr-x 1 root root 44851200 Sep 16 05:22 kubeadm*

-rwxr-xr-x 1 root root 46645248 Sep 16 05:22 kubectl*

-rwxr-xr-x 1 root root 55305384 Sep 16 05:22 kubectl-convert*

-rwxr-xr-x 1 root root 118353264 Sep 16 05:22 kubelet*

-rwxr-xr-x 1 root root 1593344 Sep 16 05:22 mounter*

1.1.2.2 Stop the related services

root@master1:~/1.21.5/kubernetes/server/bin# systemctl stop kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service

1.1.2.3 Force-overwrite the binaries (the leading backslash in \cp bypasses any cp -i alias)

root@master1:~/1.21.5/kubernetes/server/bin# \cp kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet kubectl /usr/local/bin/

1.1.3 Start the services again

root@master1:~/1.21.5/kubernetes/server/bin# systemctl start kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service
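The `\cp` step overwrites the old binaries, so nothing is left to roll back to if the new version misbehaves. Below is a minimal sketch of a safer variant. It assumes the binaries are copied from the extracted `kubernetes/server/bin` directory to `/usr/local/bin`, as above. `replace_binaries` is a hypothetical helper that keeps a timestamped copy of each old binary before overwriting it.

```shell
#!/usr/bin/env bash
# replace_binaries <src_dir> <dest_dir> <binary>...
# Hypothetical helper: back up each existing binary in <dest_dir> as
# <name>.bak-<timestamp>, then copy the new one from <src_dir>.
replace_binaries() {
  local src="$1" dest="$2"; shift 2
  local ts bin
  ts=$(date +%Y%m%d%H%M%S)

  # Check every source binary first so we never stop halfway through.
  for bin in "$@"; do
    if [ ! -f "$src/$bin" ]; then
      echo "missing $src/$bin" >&2
      return 1
    fi
  done

  for bin in "$@"; do
    if [ -f "$dest/$bin" ]; then
      cp -p "$dest/$bin" "$dest/$bin.bak-$ts" || return 1
    fi
    cp -f "$src/$bin" "$dest/$bin" || return 1
  done
}
```

Run it between the `systemctl stop` and `systemctl start` steps, for example `replace_binaries . /usr/local/bin kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl`. The services must be stopped first; otherwise overwriting a running binary can fail with "Text file busy". To roll back, stop the services again and copy the `.bak-*` files back.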

1.1.4 Verify

root@master1:~/1.21.5/kubernetes/server/bin# kubectl get node -A

NAME STATUS ROLES AGE VERSION

192.168.6.79 Ready,SchedulingDisabled master 6d16h v1.21.5   # now running the new version

192.168.6.80 Ready,SchedulingDisabled master 6d16h v1.21.0

192.168.6.81 Ready,SchedulingDisabled master 53m v1.21.0

192.168.6.89 Ready node 6d15h v1.21.0

192.168.6.90 Ready node 6d15h v1.21.0

192.168.6.91 Ready node 45m v1.21.0
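With more nodes it is easy to miss one. The small sketch below reads `kubectl get node` output on stdin and prints every node whose VERSION column differs from the target. It assumes the default column layout shown above, where VERSION is the fifth column. `nodes_not_at_version` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# nodes_not_at_version <version>
# Hypothetical helper: read `kubectl get node` output on stdin (default columns
# NAME STATUS ROLES AGE VERSION) and print the names of nodes whose VERSION
# differs from <version>. Empty output means every node matches.
nodes_not_at_version() {
  awk -v want="$1" 'NR > 1 && $5 != want { print $1 }'
}
```

`kubectl get node | nodes_not_at_version v1.21.5` lists the nodes still to be upgraded. At this point in the upgrade it would print 192.168.6.80, .81, .89, .90 and .91.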

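1.1.5 Upgrade the other masters the same way

1.1.5.1 Take the other two masters offline

- Run on every node, leaving only the upgraded master (192.168.6.79) in the upstream list: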

root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;

events {
    worker_connections 3000;
}

stream {
    upstream backend {
        #server 192.168.6.81:6443 max_fails=2 fail_timeout=3s;
        server 192.168.6.79:6443 max_fails=2 fail_timeout=3s;
        #server 192.168.6.80:6443 max_fails=2 fail_timeout=3s;
    }

    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

root@node1:~# systemctl restart kube-lb

1.1.5.2 Replace the binaries and restart the services

root@master2:~/1.21.5# tar xf kubernetes-client-linux-amd64.tar.gz

root@master2:~/1.21.5# tar xf kubernetes-node-linux-amd64.tar.gz

root@master2:~/1.21.5# tar xf kubernetes-server-linux-amd64.tar.gz

root@master2:~/1.21.5# tar xf kubernetes.tar.gz

root@master2:~/1.21.5# cd kubernetes/server/bin/

root@master2:~/1.21.5/kubernetes/server/bin# systemctl stop kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service

root@master2:~/1.21.5/kubernetes/server/bin# \cp kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet kubectl /usr/local/bin/

root@master2:~/1.21.5/kubernetes/server/bin# systemctl start kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service

root@master3:~/1.21.5# tar xf kubernetes-client-linux-amd64.tar.gz

root@master3:~/1.21.5# tar xf kubernetes-node-linux-amd64.tar.gz

root@master3:~/1.21.5# tar xf kubernetes-server-linux-amd64.tar.gz

root@master3:~/1.21.5# tar xf kubernetes.tar.gz

root@master3:~/1.21.5# cd kubernetes/server/bin/

root@master3:~/1.21.5/kubernetes/server/bin# systemctl stop kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service

root@master3:~/1.21.5/kubernetes/server/bin# \cp kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet kubectl /usr/local/bin/

root@master3:~/1.21.5/kubernetes/server/bin# systemctl start kube-apiserver kube-proxy.service kube-controller-manager.service kube-scheduler.service kubelet.service

1.1.5.3 Verify

root@master1:~/1.21.5/kubernetes/server/bin# kubectl get node -A

NAME STATUS ROLES AGE VERSION

192.168.6.79 Ready,SchedulingDisabled master 6d16h v1.21.5

192.168.6.80 Ready,SchedulingDisabled master 6d16h v1.21.5

192.168.6.81 NotReady,SchedulingDisabled master 67m v1.21.5

192.168.6.89 Ready node 6d16h v1.21.0

192.168.6.90 Ready node 6d16h v1.21.0

192.168.6.91 Ready node 60m v1.21.0

1.1.5.4 Bring the upgraded masters back online

- Run on every node, re-enabling all three masters in the upstream list:

root@node1:~# vim /etc/kube-lb/conf/kube-lb.conf
user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;

events {
    worker_connections 3000;
}

stream {
    upstream backend {
        server 192.168.6.81:6443 max_fails=2 fail_timeout=3s;
        server 192.168.6.79:6443 max_fails=2 fail_timeout=3s;
        server 192.168.6.80:6443 max_fails=2 fail_timeout=3s;
    }

    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}

root@node1:~# systemctl restart kube-lb

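1.2 Upgrading the Worker Nodes

- A node upgrade involves two services (upgrading kubectl is optional):

  - kube-proxy

  - kubelet

- Upgrade the nodes one at a time.

1.2.1 Stop the services on the node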

root@node1:~# systemctl stop kubelet kube-proxy

1.2.2 Replace the binaries and restart the services

root@master1:~/1.21.5/kubernetes/server/bin# scp kubelet kube-proxy kubectl root@192.168.6.89:/usr/local/bin/

root@node1:~# systemctl start kubelet kube-proxy
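The steps above stop kubelet while pods are still running on the node. A common refinement is to drain the node first and uncordon it afterwards; both commands appear in section 4. Below is a sketch of that per-node sequence. `upgrade_node` is hypothetical and makes three assumptions:

- passwordless SSH as root works from master1;
- node names equal their IPs, as in this cluster;
- with the default `DRY_RUN=1`, it only prints the commands.

```shell
#!/usr/bin/env bash
# upgrade_node <node_name> <node_ip> [bin_dir]
# Hypothetical sketch of a one-node upgrade: drain, stop, copy, start, uncordon.
# With DRY_RUN=1 (the default) the commands are printed instead of executed;
# with DRY_RUN=0 they run in order and stop at the first failure.
upgrade_node() {
  local name="$1" ip="$2" bin="${3:-.}" run=""
  [ "${DRY_RUN:-1}" = "1" ] && run="echo"

  $run kubectl drain "$name" --ignore-daemonsets --delete-emptydir-data &&
  $run ssh "root@$ip" systemctl stop kubelet kube-proxy &&
  $run scp "$bin/kubelet" "$bin/kube-proxy" "$bin/kubectl" "root@$ip:/usr/local/bin/" &&
  $run ssh "root@$ip" systemctl start kubelet kube-proxy &&
  $run kubectl uncordon "$name"
}
```

`DRY_RUN=1 upgrade_node 192.168.6.89 192.168.6.89 ~/1.21.5/kubernetes/server/bin` prints the five commands for review. Set `DRY_RUN=0` to run them, still one node at a time.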

1.2.3 Verify

root@master1:~/1.21.5/kubernetes/server/bin# kubectl get node -A

NAME STATUS ROLES AGE VERSION

192.168.6.79 Ready,SchedulingDisabled master 6d16h v1.21.5

192.168.6.80 Ready,SchedulingDisabled master 6d16h v1.21.5

192.168.6.81 Ready,SchedulingDisabled master 83m v1.21.5

192.168.6.89 Ready node 6d16h v1.21.5

192.168.6.90 Ready node 6d16h v1.21.0

192.168.6.91 Ready node 75m v1.21.0

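2. YAML Files

Prepare the YAML files in advance, and create the resources the pods depend on (such as the namespace) beforehand.

2.1 Create the YAML file for the application namespace (n56-namespace.yaml)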

apiVersion: v1      # API version
kind: Namespace     # object type: Namespace
metadata:           # metadata
  name: n56         # namespace name

2.2 Create and verify the namespace

root@master1:~# kubectl apply -f n56-namespace.yaml

namespace/n56 created

root@master1:~# kubectl get ns

NAME STATUS AGE

default Active 6d19h

kube-node-lease Active 6d19h

kube-public Active 6d19h

kube-system Active 6d19h

kubernetes-dashboard Active 6d17h

n56 Active 4s

2.3 YAML vs. JSON

Online YAML/JSON editor: https://www.bejson.com/validators/yaml_editor/

2.3.1 JSON format

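{
  "staff": {
    "Zhang San": {
      "age": 18,
      "job": "Linux operations engineer",
      "hobbies": ["reading", "studying", "working overtime"]
    },
    "Li Si": {
      "age": 20,
      "job": "Java developer",
      "hobbies": ["open-source technology", "microservices", "distributed storage"]
    }
  }
}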

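- Characteristics of JSON:

  - JSON does not allow comments.

  - JSON is harder for humans to read.

  - JSON syntax is very strict.

  - It is well suited to API responses, and can also be used for configuration files.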

2.3.2 YAML format

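staff:
  Zhang San:
    age: 18
    job: Linux operations engineer
    hobbies:
      - reading
      - studying
      - working overtime
  Li Si:
    age: 20
    job: Java developer
    hobbies:
      - open-source technology
      - microservices
      - distributed storage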

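- Characteristics of YAML:

  - Case-sensitive.

  - Indentation expresses hierarchy.

  - "-" marks an element of a list.

  - Tabs are not allowed for indentation; only spaces may be used.

  - The number of spaces does not matter, as long as elements at the same level are left-aligned.

  - "#" starts a comment; everything from it to the end of the line is ignored by the parser.

  - Better suited than JSON for configuration files.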
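Because tabs are not allowed for indentation, a quick check before `kubectl apply` can save a confusing parse error. Below is a minimal grep-based sketch. `yaml_tab_lines` is a hypothetical helper: it prints offending lines with their line numbers and returns non-zero when any are found.

```shell
#!/usr/bin/env bash
# yaml_tab_lines <file>
# Hypothetical check: print "lineno:content" for every line whose leading
# whitespace contains a TAB. Returns 1 if such lines exist, 2 if the file
# cannot be read, 0 otherwise.
yaml_tab_lines() {
  local tab
  [ -r "$1" ] || return 2
  tab=$(printf '\t')
  if grep -n "^[[:space:]]*${tab}" "$1"; then
    return 1
  fi
  return 0
}
```

For example: `yaml_tab_lines nginx.yaml && kubectl apply -f nginx.yaml`.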

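2.3.3 Main building blocks of a YAML file

YAML files in k8s, as in most other scenarios, are mostly made up of the following:

- Parent/child (nesting) relationships

- Lists

- Key-value pairs (also called maps, i.e. data in k/v form)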

2.3.4 Writing a YAML file, using nginx.yaml as an example

If you have no template to start from, `kubectl explain` shows the available fields:

kubectl explain namespace

kubectl explain namespace.metadata

#nginx.yaml
kind: Deployment                  # object type: a Deployment controller; see kubectl explain Deployment
apiVersion: apps/v1               # API version; see kubectl explain Deployment.apiVersion
metadata:                         # metadata of the Deployment; see kubectl explain Deployment.metadata
  labels:                         # custom labels; see kubectl explain Deployment.metadata.labels
    app: n56-nginx-deployment-label   # label key "app" with value n56-nginx-deployment-label
  name: n56-nginx-deployment      # name of the Deployment
  namespace: n56                  # namespace of the Deployment; defaults to "default"
spec:                             # Deployment spec; see kubectl explain Deployment.spec
  replicas: 1                     # number of pod replicas to create; defaults to 1
  selector:                       # label selector
    matchLabels:                  # labels to match (required)
      app: n56-nginx-selector     # target label to match
  template:                       # pod template (required); describes the pods to create
    metadata:                     # template metadata
      labels:                     # template labels, Deployment.spec.template.metadata.labels
        app: n56-nginx-selector   # must equal Deployment.spec.selector.matchLabels
    spec:
      containers:
      - name: n56-nginx-container # container name
        image: nginx:1.16.1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]   # command or script to run when the container starts
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always   # image pull policy
        ports:                    # list of container ports
        - containerPort: 80       # a port
          protocol: TCP           # port protocol
          name: http              # port name
        - containerPort: 443      # another port
          protocol: TCP           # port protocol
          name: https             # port name
        env:                      # environment variables
        - name: "password"        # variable name
          value: "123456"         # variable value; env values must be strings
        - name: "age"             # another variable name
          value: "18"             # quoted, otherwise YAML would parse 18 as a number
        resources:                # resource requests and limits
          limits:                 # upper limits
            cpu: 500m             # CPU limit in cores; 0.5 and 500m are equivalent
            memory: 512Mi         # memory limit, in units such as Mi/Gi; maps to docker run --memory
          requests:               # resource requests
            cpu: 200m             # CPU requested; 0.5 and 500m are equivalent notations
            memory: 256Mi         # memory requested; used by the scheduler when placing the pod
      nodeSelector:
        #group: python57
        project: linux56          # only schedule onto nodes labelled project=linux56

#nginx-svc.yaml
kind: Service                     # object type: Service
apiVersion: v1                    # Service API version, service.apiVersion
metadata:                         # Service metadata, service.metadata
  labels:                         # custom labels, service.metadata.labels
    app: n56-nginx                # label of the Service
  name: n56-nginx-service         # Service name; resolvable through the cluster DNS
  namespace: n56                  # namespace the Service is created in
spec:                             # Service spec, service.spec
  type: NodePort                  # Service type (how it is accessed); defaults to ClusterIP, service.spec.type
  ports:                          # ports, service.spec.ports
  - name: http                    # port name
    port: 81                      # Service port 81; client -> firewall -> load balancer -> nodePort 30001 -> Service port 81 -> target pod port 80
    protocol: TCP                 # protocol
    targetPort: 80                # port on the target pod
    nodePort: 30001               # port exposed on every node
  - name: https                   # SSL port
    port: 1443                    # Service port 1443
    protocol: TCP                 # protocol
    targetPort: 443               # port on the target pod
    nodePort: 30043               # SSL port exposed on every node
  selector:                       # Service label selector; selects the target pods
    app: n56-nginx-selector       # traffic goes to pods with this label; must match the pod template labels of the Deployment

3. Using the etcd Client, and Backing Up and Restoring Data

3.1 Health check (heartbeat)

root@etcd1:~# export node_ip='192.168.6.84 192.168.6.85 192.168.6.86'

root@etcd1:~# for i in ${node_ip}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${i}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://192.168.6.84:2379 is healthy: successfully committed proposal: took = 14.162089ms

https://192.168.6.85:2379 is healthy: successfully committed proposal: took = 16.070919ms

https://192.168.6.86:2379 is healthy: successfully committed proposal: took = 12.748962ms
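With three members, etcd stays available as long as a majority (2 of 3) is healthy. The sketch below turns the loop output into a quorum verdict. `etcd_quorum_ok` is hypothetical and assumes the `... is healthy ...` line format shown above. Unhealthy endpoints may be reported on stderr, so redirect stderr when piping.

```shell
#!/usr/bin/env bash
# etcd_quorum_ok <member_count>
# Hypothetical helper: count "is healthy" lines from `etcdctl endpoint health`
# on stdin and succeed only if they form a majority, floor(n/2) + 1.
etcd_quorum_ok() {
  local total="$1" healthy need
  healthy=$(grep -c ' is healthy' || true)   # grep -c prints 0 on no match
  need=$(( total / 2 + 1 ))
  echo "healthy=${healthy}/${total}, quorum needs ${need}"
  [ "$healthy" -ge "$need" ]
}
```

To use it, append `2>&1 | etcd_quorum_ok 3` after `done` in the loop above. The exit status can then drive monitoring or a script.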

3.2 List the members

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table member list --endpoints=https://192.168.6.84:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem

+------------------+---------+-------------------+---------------------------+---------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+-------------------+---------------------------+---------------------------+------------+

| 308a1368f27ba48a | started | etcd-192.168.6.85 | https://192.168.6.85:2380 | https://192.168.6.85:2379 | false |

| c16e08c8cace2cd3 | started | etcd-192.168.6.86 | https://192.168.6.86:2380 | https://192.168.6.86:2379 | false |

| ffe13c54256e7ab9 | started | etcd-192.168.6.84 | https://192.168.6.84:2380 | https://192.168.6.84:2379 | false |

+------------------+---------+-------------------+---------------------------+---------------------------+------------+

3.3 Show detailed endpoint status as a table

root@etcd1:~# export node_ip='192.168.6.84 192.168.6.85 192.168.6.86'

root@etcd1:~# for i in ${node_ip}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table endpoint status --endpoints=https://${i}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem; done

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.6.84:2379 | ffe13c54256e7ab9 | 3.4.13 | 3.9 MB | true | false | 16 | 102137 | 102137 | |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.6.85:2379 | 308a1368f27ba48a | 3.4.13 | 3.9 MB | false | false | 16 | 102137 | 102137 | |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.6.86:2379 | c16e08c8cace2cd3 | 3.4.13 | 3.9 MB | false | false | 16 | 102137 | 102137 | |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
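After a restore (see 3.8), the cluster should have exactly one leader. The sketch below counts leaders in the table output. `etcd_leader_count` is hypothetical and relies on IS LEADER being the fifth column of the `--write-out=table` format shown above.

```shell
#!/usr/bin/env bash
# etcd_leader_count
# Hypothetical helper: read `etcdctl --write-out=table endpoint status` output
# on stdin and print how many data rows have IS LEADER = true.
# Splitting on "|" makes field 2 ENDPOINT and field 6 IS LEADER;
# border lines (+---+) have no "|" and are skipped, as is the header row.
etcd_leader_count() {
  awk -F'|' '
    NF > 6 && $2 !~ /ENDPOINT/ {
      leader = $6
      gsub(/[ \t]/, "", leader)
      if (leader == "true") n++
    }
    END { print n + 0 }'
}
```

Append `| etcd_leader_count` after `done` in the status loop above. A healthy cluster prints 1.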

3.4 Viewing etcd data

3.4.1 List all keys

root@etcd1:~# ETCDCTL_API=3 etcdctl get / --prefix --keys-only   # list every key (keys only), shown as paths

Pod keys:

root@etcd1:~# ETCDCTL_API=3 etcdctl get / --prefix --keys-only| grep pods

/registry/pods/kube-system/calico-kube-controllers-759545cb9c-jw8c2

/registry/pods/kube-system/calico-node-67bv2

/registry/pods/kube-system/calico-node-hjm5j

/registry/pods/kube-system/calico-node-lkhdp

/registry/pods/kube-system/calico-node-m5nbf

/registry/pods/kube-system/calico-node-n2vxw

/registry/pods/kube-system/calico-node-wpxj4

/registry/pods/kube-system/coredns-69d445fc94-wsp2w

/registry/pods/kubernetes-dashboard/dashboard-metrics-scraper-67c9c47fc7-fcqzq

/registry/pods/kubernetes-dashboard/kubernetes-dashboard-86d88bf65-l2qh5

Namespace keys:

root@etcd1:~# ETCDCTL_API=3 etcdctl get / --prefix --keys-only| grep namespaces

/registry/namespaces/default

/registry/namespaces/kube-node-lease

/registry/namespaces/kube-public

/registry/namespaces/kube-system

/registry/namespaces/kubernetes-dashboard

Controller (Deployment) keys:

root@etcd1:~# ETCDCTL_API=3 etcdctl get / --prefix --keys-only| grep deployments

/registry/deployments/kube-system/calico-kube-controllers

/registry/deployments/kube-system/coredns

/registry/deployments/kubernetes-dashboard/dashboard-metrics-scraper

/registry/deployments/kubernetes-dashboard/kubernetes-dashboard

Calico keys:

root@etcd1:~# ETCDCTL_API=3 etcdctl get / --prefix --keys-only| grep calico

/calico/ipam/v2/assignment/ipv4/block/10.200.147.192-26

/calico/ipam/v2/assignment/ipv4/block/10.200.187.192-26

/calico/ipam/v2/assignment/ipv4/block/10.200.213.128-26

/calico/ipam/v2/assignment/ipv4/block/10.200.255.128-26

/calico/ipam/v2/assignment/ipv4/block/10.200.67.0-26

/calico/ipam/v2/assignment/ipv4/block/10.200.99.64-26

3.4.2 Get a specific key

root@etcd1:~# ETCDCTL_API=3 etcdctl get /calico/ipam/v2/assignment/ipv4/block/10.200.147.192-26

/calico/ipam/v2/assignment/ipv4/block/10.200.147.192-26

{"cidr":"10.200.147.192/26","affinity":"host:node3.k8s.local","allocations":[0,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null],"unallocated":[1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63],"attributes":[{"handle_id":"ipip-tunnel-addr-node3.k8s.local","secondary":{"node":"node3.k8s.local","type":"ipipTunnelAddress"}}],"deleted":false}

3.4.3 List Calico data (filtered here to keys containing the host names, which end in .k8s.local)

root@etcd1:~# ETCDCTL_API=3 etcdctl get --keys-only --prefix /calico | grep local

/calico/ipam/v2/handle/ipip-tunnel-addr-master1.k8s.local

/calico/ipam/v2/handle/ipip-tunnel-addr-master2.k8s.local

/calico/ipam/v2/handle/ipip-tunnel-addr-master3.k8s.local

/calico/ipam/v2/handle/ipip-tunnel-addr-node1.k8s.local

/calico/ipam/v2/handle/ipip-tunnel-addr-node2.k8s.local

/calico/ipam/v2/handle/ipip-tunnel-addr-node3.k8s.local

/calico/ipam/v2/host/master1.k8s.local/ipv4/block/10.200.213.128-26

/calico/ipam/v2/host/master2.k8s.local/ipv4/block/10.200.67.0-26

/calico/ipam/v2/host/master3.k8s.local/ipv4/block/10.200.187.192-26

/calico/ipam/v2/host/node1.k8s.local/ipv4/block/10.200.255.128-26

/calico/ipam/v2/host/node2.k8s.local/ipv4/block/10.200.99.64-26

/calico/ipam/v2/host/node3.k8s.local/ipv4/block/10.200.147.192-26

/calico/resources/v3/projectcalico.org/felixconfigurations/node.master1.k8s.local

/calico/resources/v3/projectcalico.org/felixconfigurations/node.master2.k8s.local

/calico/resources/v3/projectcalico.org/felixconfigurations/node.master3.k8s.local

/calico/resources/v3/projectcalico.org/felixconfigurations/node.node1.k8s.local

/calico/resources/v3/projectcalico.org/felixconfigurations/node.node2.k8s.local

/calico/resources/v3/projectcalico.org/felixconfigurations/node.node3.k8s.local

/calico/resources/v3/projectcalico.org/nodes/master1.k8s.local

/calico/resources/v3/projectcalico.org/nodes/master2.k8s.local

/calico/resources/v3/projectcalico.org/nodes/master3.k8s.local

/calico/resources/v3/projectcalico.org/nodes/node1.k8s.local

/calico/resources/v3/projectcalico.org/nodes/node2.k8s.local

/calico/resources/v3/projectcalico.org/nodes/node3.k8s.local

/calico/resources/v3/projectcalico.org/workloadendpoints/kube-system/node2.k8s.local-k8s-coredns--69d445fc94--wsp2w-eth0

/calico/resources/v3/projectcalico.org/workloadendpoints/kubernetes-dashboard/node1.k8s.local-k8s-dashboard--metrics--scraper--67c9c47fc7--fcqzq-eth0

/calico/resources/v3/projectcalico.org/workloadendpoints/kubernetes-dashboard/node2.k8s.local-k8s-kubernetes--dashboard--86d88bf65--l2qh5-eth0

3.5 Creating, reading, updating and deleting data in etcd

# Create a key

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl put /name "tom"

OK

# Read it

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl get /name

/name

tom

# Update: put on an existing key simply overwrites its value

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl put /name "jack"

OK

# Verify the update

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl get /name

/name

jack

# Delete the key (etcdctl prints the number of keys deleted)

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl del /name

1

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl get /name

root@etcd1:~#

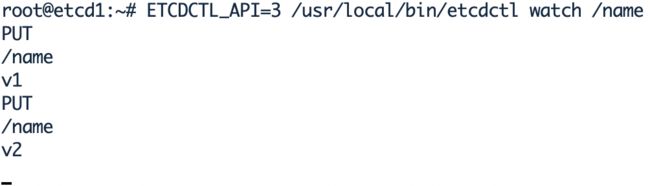

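3.6 etcd's watch mechanism

Watch monitors data and proactively notifies the client when it changes. etcd v3 can watch a single key or a range of keys. Compared with v2, the main changes in v3 are:

- The API is exposed over gRPC instead of v2's HTTP interface. Long-lived connections make it much more efficient; the downside is that it is less convenient to use, especially where maintaining a long-lived connection is awkward.

- The directory structure was dropped in favour of a flat key-value space; users can emulate directories with prefix matching.

- Values are no longer kept in memory, so the same amount of memory can hold more keys.

- Watch is more reliable; it can essentially be used to keep data fully synchronized.

- Batch operations and transactions were added; a transaction (which supports if conditions) can implement v2's CAS (compare-and-swap) semantics.

# On etcd node1, watch a key. The key does not have to exist yet; it can be created later.

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl watch /name   # the watch keeps the terminal busy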

root@etcd2:~# ETCDCTL_API=3 /usr/local/bin/etcdctl put /name "v1"

OK

root@etcd2:~# ETCDCTL_API=3 /usr/local/bin/etcdctl put /name "v2"

OK

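3.7 Backing up and restoring etcd data

WAL stands for write-ahead log: a log entry is written before the actual write operation is performed.

wal: the directory holding the write-ahead log. Its main purpose is to record the complete history of data changes; in etcd every data modification must be written to the WAL before it is committed.

3.7.1 Automated backup and restore with kubeasz's ezctl (v3)

# Back up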

root@master1:/etc/kubeasz# ./ezctl backup k8s-01

root@master1:/etc/kubeasz/clusters/k8s-01/backup# ls

snapshot.db snapshot_202110061653.db

# Restore

root@master1:/etc/kubeasz# ./ezctl restore k8s-01   # services are stopped during the restore

3.7.2 Manual backup and restore (v3)

# Back up the data

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save snapshot.db

{"level":"info","ts":1633509687.2031326,"caller":"snapshot/v3_snapshot.go:119","msg":"created temporary db file","path":"snapshot.db.part"}

{"level":"info","ts":"2021-10-06T16:41:27.203+0800","caller":"clientv3/maintenance.go:200","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":1633509687.2040484,"caller":"snapshot/v3_snapshot.go:127","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":"2021-10-06T16:41:27.233+0800","caller":"clientv3/maintenance.go:208","msg":"completed snapshot read; closing"}

{"level":"info","ts":1633509687.2372715,"caller":"snapshot/v3_snapshot.go:142","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"3.9 MB","took":0.034088666}

{"level":"info","ts":1633509687.2373474,"caller":"snapshot/v3_snapshot.go:152","msg":"saved","path":"snapshot.db"}

Snapshot saved at snapshot.db

root@etcd1:~# ls

Scripts snap snapshot.db

# Restore the data

root@etcd1:~# ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot restore snapshot.db --data-dir=/opt/etcd-testdir

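# The directory given by --data-dir must not exist or must be empty, otherwise the restore fails. To restore into /var/lib/etcd/ (the data directory set in the etcd configuration), stop etcd, run rm -rf /var/lib/etcd/, and then restore.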

{"level":"info","ts":1633510235.7426238,"caller":"snapshot/v3_snapshot.go:296","msg":"restoring snapshot","path":"snapshot.db","wal-dir":"/opt/etcd-testdir/member/wal","data-dir":"/opt/etcd-testdir","snap-dir":"/opt/etcd-testdir/member/snap"}

{"level":"info","ts":1633510235.7613802,"caller":"mvcc/kvstore.go:380","msg":"restored last compact revision","meta-bucket-name":"meta","meta-bucket-name-key":"finishedCompactRev","restored-compact-revision":85382}

{"level":"info","ts":1633510235.767152,"caller":"membership/cluster.go:392","msg":"added member","cluster-id":"cdf818194e3a8c32","local-member-id":"0","added-peer-id":"8e9e05c52164694d","added-peer-peer-urls":["http://localhost:2380"]}

{"level":"info","ts":1633510235.7712433,"caller":"snapshot/v3_snapshot.go:309","msg":"restored snapshot","path":"snapshot.db","wal-dir":"/opt/etcd-testdir/member/wal","data-dir":"/opt/etcd-testdir","snap-dir":"/opt/etcd-testdir/member/snap"}

# Script for scheduled backups

root@etcd1:~# mkdir /data/etcd-backup-dir/ -p

root@etcd1:~# vim bp-script.sh

#!/bin/bash

source /etc/profile

DATE=$(date +%Y-%m-%d_%H-%M-%S)

ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save /data/etcd-backup-dir/etcd-snapshot-${DATE}.db
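As written, the backup script keeps every snapshot forever. Below is a sketch of a retention step that could be appended to it. It assumes the `etcd-snapshot-*.db` naming used above. `prune_snapshots` and the count of 10 in the example are hypothetical choices.

```shell
#!/usr/bin/env bash
# prune_snapshots <dir> <keep>
# Hypothetical retention helper: keep the <keep> newest etcd-snapshot-*.db
# files in <dir> (by modification time) and delete the older ones.
prune_snapshots() {
  local dir="$1" keep="$2" f
  # ls -1t lists newest first; tail -n +N starts at the (keep+1)-th entry
  ls -1t "$dir"/etcd-snapshot-*.db 2>/dev/null |
    tail -n +"$((keep + 1))" |
    while IFS= read -r f; do
      rm -f -- "$f"
    done
}
```

For example, end the backup script with `prune_snapshots /data/etcd-backup-dir 10`. Then schedule it with cron, e.g. `0 */6 * * * /bin/bash /root/bp-script.sh` for a backup every six hours (the script path is an assumption).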

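3.8 etcd data recovery procedure

When enough etcd members fail that a majority (quorum) can no longer be formed, for example 2 of the 3 members in this cluster, the whole cluster becomes unavailable and the data must be restored. The procedure is:

- Restore the servers' operating systems

- Redeploy the etcd cluster

- Stop kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- Stop the etcd cluster

- Restore the same snapshot on every etcd member

- Start all members and verify the etcd cluster, making sure there is exactly one leader

- Start kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- Verify the k8s master status and the pod status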
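When every member restores the same data, each member still needs its own `--name` and peer URL, while all members share the same `--initial-cluster`. The sketch below prints the per-member `etcdctl snapshot restore` command. It assumes member names of the form `etcd-<ip>`, as in the `member list` output in 3.2, and peer port 2380. The `--initial-cluster-token` value `etcd-cluster-0` is an assumption and must match the value the cluster was originally deployed with. `initial_cluster` and `restore_cmd` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# initial_cluster <ip>...
# Build "etcd-<ip>=https://<ip>:2380,..." for --initial-cluster.
initial_cluster() {
  local out="" ip
  for ip in "$@"; do
    out="${out}${out:+,}etcd-${ip}=https://${ip}:2380"
  done
  echo "$out"
}

# restore_cmd <ip> <initial_cluster> [snapshot] [data_dir]
# Print the restore command for the member at <ip>. The data dir must be
# empty or absent (see 3.7.2). The token below is an assumed value.
restore_cmd() {
  local ip="$1" cluster="$2"
  local snap="${3:-snapshot.db}" dir="${4:-/var/lib/etcd}"
  echo "ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot restore ${snap}" \
    "--name=etcd-${ip}" \
    "--initial-cluster=${cluster}" \
    "--initial-cluster-token=etcd-cluster-0" \
    "--initial-advertise-peer-urls=https://${ip}:2380" \
    "--data-dir=${dir}"
}
```

Run the printed command on each member after stopping etcd and emptying its data directory. Then start etcd on all members and check that there is a single leader.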

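3.9 Removing and adding etcd members

root@master1:/etc/kubeasz# ./ezctl del-etcd k8s-01 <etcd-ip>   # ezctl takes the cluster name and the member's IP

root@master1:/etc/kubeasz# ./ezctl add-etcd k8s-01 <etcd-ip>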

4. Maintaining the Kubernetes Cluster: Common Commands

kubectl get service -A -o wide

kubectl get pod -A -o wide

kubectl get nodes -A -o wide

kubectl get deployment -A

kubectl get deployment -n n56 -o wide

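kubectl describe pod n56-nginx-deployment-857fc5cb7f-llxmm -n n56   # if the container does not start, check describe first, then the logs, then the node's system log (Ubuntu: /var/log/syslog, CentOS: /var/log/messages)

kubectl create -f nginx.yaml   # create fails if the objects already exist, so after changing the YAML you would have to delete them and create again. Objects made with a plain create also lack the last-applied-configuration annotation, so a later apply first warns and patches it in. Use apply instead, or add --save-config the first time you run create.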

kubectl apply -f nginx.yaml

kubectl delete -f nginx.yaml

kubectl create -f nginx.yaml --save-config --record

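kubectl apply -f nginx.yaml --record   # records the command in the revision history; newer versions work without it (the flag is deprecated)

kubectl exec -it n56-nginx-deployment-857fc5cb7f-llxmm -n n56 -- bash   # a command after -- is required; use sh if the image has no bash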

kubectl logs n56-nginx-deployment-857fc5cb7f-llxmm -n n56

kubectl delete pod n56-nginx-deployment-857fc5cb7f-llxmm -n n56

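kubectl edit svc n56-nginx-service -n n56   # edit the live API object; the change takes effect immediately but is not saved back to your YAML file

kubectl scale -n n56 deployment n56-nginx-deployment --replicas=2   # set the number of pod replicas to 2

kubectl label node 192.168.6.91 project=linux56   # label a node; combined with deployment.spec.template.spec.nodeSelector in the YAML, this runs specific containers only on nodes carrying that label

kubectl label node 192.168.6.91 project-   # remove the label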

kubectl cluster-info

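kubectl top node   # resource usage of the nodes; requires the Metrics API (e.g. metrics-server) to be installed

kubectl top pod   # resource usage of the pods; same requirement

kubectl cordon 192.168.6.89   # mark the node unschedulable

kubectl uncordon 192.168.6.89   # make the node schedulable again

kubectl drain 192.168.6.89 --force --ignore-daemonsets --delete-emptydir-data   # evict the pods; the node is also marked unschedulable

kubectl api-resources   # list API resources with their short names and API versions

kubectl config view   # show the current kubeconfig; useful as a reference when writing one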

http://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

5. Kubernetes Resource Objects

5.1 Core concepts of k8s

5.1.1 Design philosophy

5.1.1.1 Layered architecture

http://docs.kubernetes.org.cn/251.html

5.1.1.2 API design principles

https://www.kubernetes.org.cn/kubernetes%e8%ae%be%e8%ae%a1%e7%90%86%e5%bf%b5

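- All APIs should be declarative. As mentioned earlier, declarative operations, unlike imperative ones, give stable results when repeated, which matters in distributed environments where data is easily lost or duplicated. Declarative operations are also easier to use: they hide implementation details from users, which in turn leaves the system room for continued optimization. In addition, declarative APIs imply that all API objects are nouns, such as Service and Volume; these nouns describe the target distributed object the user wants.

- API objects are complementary and composable. This encourages API objects to meet the goal of object-oriented design, "high cohesion, loose coupling": business concepts are decomposed appropriately so that the resulting objects are more reusable. In fact, a distributed system management platform such as K8s is itself a business system; its business just happens to be scheduling and managing container services.

- High-level APIs are designed around operational intent. Designing good APIs has much in common with designing good applications with object-oriented methods: high-level design must start from the business, not prematurely from the technical implementation. K8s's high-level APIs must therefore be based on K8s's business, that is, on the intent of scheduling and managing containers.

- Low-level APIs are designed according to the control needs of the high-level APIs. Low-level APIs exist to be used by high-level APIs; to reduce redundancy and improve reuse, their design should also be driven by requirements and should resist the temptation to be shaped by the technical implementation.

- Avoid simple wrappers, and avoid internal hidden mechanisms that the external API does not make explicit. A simple wrapper adds no new functionality but increases dependence on the wrapped API. Hidden internal mechanisms are also bad for maintainability. For example, PetSet (later renamed StatefulSet) and ReplicaSet are two different kinds of pod sets, so K8s defines them with different API objects instead of using a single ReplicaSet and telling stateful from stateless internally with a special algorithm.

- The complexity of API operations is proportional to the number of objects. This is about performance: to keep the system usable as it grows, API operations must be at most O(N), where N is the number of objects; otherwise the system cannot scale horizontally.

- API object state must not depend on network connection state. Network disconnections are common in distributed environments, so to cope with an unstable network, the state of API objects must not depend on whether connections are up.

- Avoid making operational mechanisms depend on global state, because keeping global state synchronized in a distributed system is very hard.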

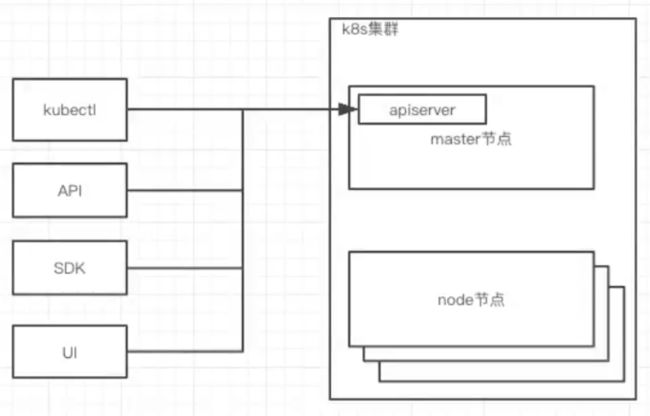

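5.2 The core of k8s resource management: API objects

5.2.1 How to operate on them

5.2.2 What to operate on

5.3 Important API objects

5.3.1 Required fields of an API object (fields a YAML file must contain)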

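| Field | Description |
|---|---|
| apiVersion | The k8s API version used to create the object. |
| kind | The type of object to create. |
| metadata | Data that uniquely identifies the object, including name and an optional namespace. |
| spec | The desired state of the object. |
| status | Generated automatically by k8s after the object (e.g. a pod) is created. |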

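- Every API object has three categories of attributes: metadata, spec, and status.

- The difference between spec and status:

  - spec is the desired state.

  - status is the actual state.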

5.3.2 Pod

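- A pod is the smallest unit in k8s.

- A pod can run a single container or several containers.

- Multiple containers in the same pod are scheduled together.

- A pod is short-lived and does not heal itself; it is a disposable entity that is discarded after use.

- Pods are usually created and managed through a controller.

5.3.2.1 Pod lifecycle

- Init containers

- Actions at container start (postStart hook)

- Readiness probe

- Liveness probe

- Actions when the pod is deleted (preStop hook)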

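5.3.2.2 livenessProbe and readinessProbe

- livenessProbe (liveness probe)

  - Detects when the application has failed (stopped serving, timing out, etc.).

  - If the check fails, the kubelet restarts the container.

- readinessProbe (readiness probe)

  - Checks whether the application is ready to serve after the pod starts.

  - Only after the check succeeds does the pod start receiving traffic.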

5.3.3 Controller

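- Replication Controller   # first-generation pod replica controller

- ReplicaSet   # second-generation pod replica controller

- Deployment   # third-generation pod replica controller

5.3.3.1 RC, RS and Deployment

- ReplicationController: replica controller; its selector supports only equality operators (= and !=)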

- https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicationcontroller/

- https://kubernetes.io/zh/docs/concepts/overview/working-with-objects/labels/

apiVersion: v1
kind: ReplicationController
metadata:
  name: ng-rc
spec:
  replicas: 2
  selector:
    app: ng-rc-80
    #app1: ng-rc-81
  template:
    metadata:
      labels:
        app: ng-rc-80
        #app1: ng-rc-81
    spec:
      containers:
      - name: ng-rc-80
        image: nginx
        ports:
        - containerPort: 80

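- ReplicaSet: replica set. It differs from the ReplicationController in its selector support: the selector also supports set-based operators such as In and NotIn.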

- https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicaset/

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 2
  selector:
    # matchLabels:
    #   app: ng-rs-80
    # Pods labelled ng-rs-80 or ng-rs-81 all count toward the total of 2 (e.g. one of each),
    # while people usually expect 2 of each, so such broad matching is rarely used.
    matchExpressions:
    - {key: app, operator: In, values: [ng-rs-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-rs-80
    spec:
      containers:
      - name: ng-rs-80
        image: nginx
        ports:
        - containerPort: 80

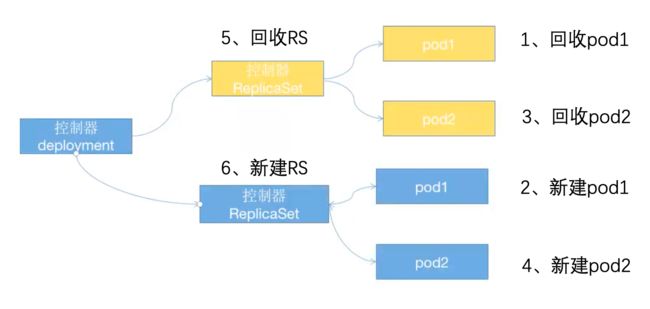

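- Deployment: a controller one level above RS. Besides everything RS does, it adds higher-level features, most importantly rolling updates and rollbacks. Under the hood it works by managing ReplicaSets.

- https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

- Pod naming: <Deployment name>-<ReplicaSet hash generated by k8s>-<random pod suffix>, e.g. n56-nginx-deployment-857fc5cb7f-llxmm.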

#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    #app: ng-deploy-80   # rc
    matchLabels:         # rs or deployment
      app: ng-deploy-80
    # matchExpressions:
    #   - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.1
        ports:
        - containerPort: 80

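- How the Deployment controller works

5.3.4 Service

When a pod is re-created, its IP address may change, which breaks access between pods. The service therefore has to be decoupled from the pods that implement it, which is done by declaring a Service object.

Two kinds of Service are commonly used:

- Services for workloads inside the k8s cluster: a selector picks the pods, and the Endpoints object is created automatically.

- Services for targets outside the k8s cluster: the Endpoints object is created manually with the external service's IP, port and protocol.

5.3.4.1 How kube-proxy relates to Services

kube-proxy --watch--> kube-apiserver

kube-proxy watches kube-apiserver. Whenever a Service changes (i.e. the Service is modified through the k8s API), kube-proxy regenerates the corresponding load-balancing rules so that they reflect the latest state of the Service.

5.3.4.2 kube-proxy's three proxy modes

- userspace: used before k8s v1.1

- iptables: the main mode up to k8s v1.10

- ipvs: generally available since k8s v1.11; if IPVS is not enabled, kube-proxy automatically falls back to iptables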

#deploy_pod.yaml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    #matchLabels:   # rs or deployment
    #  app: ng-deploy3-80
    matchExpressions:
    - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.1
        ports:
        - containerPort: 80
      #nodeSelector:
      #  env: group1

#svc-service.yaml   # access from inside the cluster
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 88
    targetPort: 80
    protocol: TCP
  type: ClusterIP
  selector:
    app: ng-deploy-80

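svc_NodePort.yaml: for access from outside the cluster. The NodePort rule takes effect on every node, so a load balancer on an HA setup outside the Kubernetes cluster can distribute traffic across the nodes to provide external access; this approach is relatively efficient. If an Ingress (layer-7 load balancing) is added to route to multiple Services by domain name and similar rules, the Ingress sits after the NodePort and before the Service, so external traffic passes through two load-balancing stages (layer 4 and layer 7), which may become a bottleneck under very heavy traffic. Ingress also supports relatively few forwarding rules and is harder to configure.

```yaml
#svc_NodePort.yaml
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 90
    targetPort: 80
    nodePort: 30012   # opened on every node (default range 30000-32767)
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```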
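For comparison, a sketch of the Ingress arrangement described above. It assumes an ingress controller (for example ingress-nginx) is already installed and exposes an IngressClass named `nginx`; the Ingress name and the domain are hypothetical.

```yaml
# Sketch: route requests for a hypothetical domain to the ng-deploy-80 Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ng-deploy-80-ingress       # hypothetical name
spec:
  ingressClassName: nginx          # assumption: depends on the installed controller
  rules:
  - host: www.mysite.com           # hypothetical domain
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ng-deploy-80     # the Service defined above
            port:
              number: 90           # the Service port, not the nodePort
```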

5.3.5 Volume

To decouple data from images and let containers share data, Kubernetes abstracts storage into an object that holds the data.

5.3.5.1 Common volume types

- emptyDir: local ephemeral volume

- hostPath: local volume on the host

- nfs, etc.: shared volumes

- configmap: configuration files

https://kubernetes.io/zh/docs/concepts/storage/volumes/

5.3.5.2 emptyDir

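When a Pod is assigned to a node, an emptyDir volume is created first, and it exists as long as the Pod keeps running on that node. As the name says, it is initially empty. All containers in the Pod can read and write the same files in the emptyDir volume, even if it is mounted at the same or different paths in each container. When the Pod is removed from the node for any reason, the data in the emptyDir volume is deleted permanently.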

Volume path on the host: /var/lib/kubelet/pods/ID/volumes/kubernetes.io~empty-dir/cache-volume/FILE

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache
          name: cache-volume-n56
      volumes:
      - name: cache-volume-n56
        emptyDir: {}
```
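A sketch of the sharing behaviour described above: two containers mount one emptyDir at different paths. The Pod name, container names and the busybox writer loop are hypothetical; nginx serves whatever the writer appends to the shared file.

```yaml
# Hypothetical Pod: a writer container and nginx share one emptyDir volume.
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-share-demo            # hypothetical name
spec:
  containers:
  - name: writer
    image: busybox
    # Append the current date to the shared file every 5 seconds.
    command: ["sh", "-c", "while true; do date >> /out/index.html; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /out
  - name: web
    image: nginx
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html # same volume, different path
  volumes:
  - name: shared-data
    emptyDir: {}                       # removed together with the Pod
```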

5.3.5.3 hostPath

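Mounts a file or directory from the host node's filesystem into the Pod. When the Pod is deleted, the volume's data on the host is not deleted.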

```yaml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/n56
          name: cache-n56-volume
      volumes:
      - name: cache-n56-volume
        hostPath:
          path: /opt/n56
```
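hostPath also accepts an optional `type` that the kubelet checks before mounting. A sketch of the same volume with `DirectoryOrCreate`, which creates the directory (mode 0755) if it does not exist. Keep in mind that the data lives on one node only: if the Pod is rescheduled to another node, it sees that node's /opt/n56 instead.

```yaml
# Fragment of a Pod spec: the volume above with an explicit hostPath type.
volumes:
- name: cache-n56-volume
  hostPath:
    path: /opt/n56
    type: DirectoryOrCreate   # create the directory on the node if missing
```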

5.3.5.4 NFS and other shared storage

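An nfs volume mounts an existing NFS share into a container. Unlike emptyDir, the contents of an NFS volume are preserved when the Pod is deleted; the volume is merely unmounted. This means an NFS volume can be pre-populated with data, data can be handed off between Pods, and the share can be mounted by multiple writers at the same time. In practice the NFS share is mounted on the node and then mapped into the container.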

- Create multiple Pods and test mounting the same NFS share

```yaml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-site2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-81
  template:
    metadata:
      labels:
        app: ng-deploy-81
    spec:
      containers:
      - name: ng-deploy-81
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 172.31.1.103
          path: /data/k8sdata
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-81
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30017
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-81
```
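If a Pod only needs to read the shared content, the nfs volume source also accepts `readOnly`. A sketch reusing the server and export from the example above. Because the share is mounted by the node, every node that may run the Pod needs the NFS client utilities installed (for example nfs-common on Ubuntu).

```yaml
# Fragment of a Pod spec: mount the same NFS export read-only.
volumes:
- name: my-nfs-volume
  nfs:
    server: 172.31.1.103
    path: /data/k8sdata
    readOnly: true        # containers cannot write to the share
```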

- Create multiple Pods and test each Pod mounting multiple NFS shares

```yaml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
        - mountPath: /usr/share/nginx/html/js
          name: my-nfs-js
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 172.31.7.109
          path: /data/magedu/n56
      - name: my-nfs-js
        nfs:
          server: 172.31.7.109
          path: /data/magedu/js
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

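5.3.5.5 ConfigMap

A ConfigMap decouples configuration from the image: the configuration is stored in a ConfigMap object, and the Pod imports that object to load its configuration. Declare a ConfigMap object and mount it into the Pod as a volume.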

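- Ways to handle configuration changes:

  - Put the service's configuration file directly into the image

  - ConfigMap: decouple the configuration from the image

  - A configuration center, e.g. Apollo

- Ways to pass environment variables:

  - Define them with ENV in the Dockerfile

  - Provide them from a ConfigMap

  - Write them directly in the YAML file

Example 1: ConfigMap mounted as a configuration file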

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
        listen 80;
        server_name www.mysite.com;
        index index.html;
        location / {
            root /data/nginx/html;
            if (!-e $request_filename) {
                rewrite ^/(.*) /index.html last;
            }
        }
    }
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-8080
        image: tomcat
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: nginx-config
          mountPath: /data
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/nginx/html
          name: nginx-static-dir
        - name: nginx-config
          mountPath: /etc/nginx/conf.d   # key "default" appears here as mysite.conf
      volumes:
      - name: nginx-static-dir
        hostPath:
          path: /data/nginx/linux39
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

Example 2: ConfigMap values passed as environment variables

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  username: user1
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        env:
        - name: "test"            # literal value written directly in the YAML
          value: "value"
        - name: MY_USERNAME       # value read from the ConfigMap key "username"
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: username
        ports:
        - containerPort: 80
```
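Instead of mapping keys one by one with configMapKeyRef, `envFrom` imports every key of a ConfigMap as an environment variable. A sketch against the nginx-config ConfigMap above, whose only key would yield the variable `username=user1`.

```yaml
# Fragment of a Pod template spec: import all ConfigMap keys as environment variables.
containers:
- name: ng-deploy-80
  image: nginx
  envFrom:
  - configMapRef:
      name: nginx-config    # each data key becomes a variable name
```

One design difference worth noting: environment variables are read when the container starts and are not refreshed when the ConfigMap changes, whereas files from a ConfigMap volume (not mounted with subPath) are eventually updated in place.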

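5.3.6 StatefulSet

- Solves the problems of running stateful services

- The Pods it manages have stable Pod names and hostnames, and are started and stopped in a fixed order

- Create a StatefulSet, set its serviceName to a headless Service, and give each Pod its own PVC (usually through volumeClaimTemplates)

- Official example: https://kubernetes.io/zh/docs/concepts/workloads/controllers/statefulset/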
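A sketch following the pattern of the official example, with hypothetical names. It assumes the cluster has a default StorageClass (or suitable pre-created PVs) so that the PVCs generated from volumeClaimTemplates can bind. The Pods are named web-0 and web-1, get the PVCs www-web-0 and www-web-1, and resolve as web-0.nginx-sts.<namespace>.svc.cluster.local when the cluster domain is cluster.local.

```yaml
# Headless Service: clusterIP None, so DNS returns the individual Pod IPs.
apiVersion: v1
kind: Service
metadata:
  name: nginx-sts            # hypothetical name
  labels:
    app: nginx-sts
spec:
  clusterIP: None
  selector:
    app: nginx-sts
  ports:
  - name: web
    port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                  # Pods become web-0, web-1
spec:
  serviceName: nginx-sts     # must reference the headless Service
  replicas: 2
  selector:
    matchLabels:
      app: nginx-sts
  template:
    metadata:
      labels:
        app: nginx-sts
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:      # one PVC per Pod: www-web-0, www-web-1
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```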

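5.3.7 DaemonSet

A DaemonSet runs a copy of the same Pod on every node in the cluster. When a new node joins the cluster, the same Pod is also started on it; when a node is removed from the cluster, its Pod is garbage-collected by Kubernetes. Deleting the DaemonSet deletes all the Pods it created.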

https://kubernetes.io/zh/docs/concepts/workloads/controllers/daemonset/

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

Log collection path: with a simple wildcard pattern, the log file path can be configured as /var/lib/docker/overlay2/*/diff/data/*.log

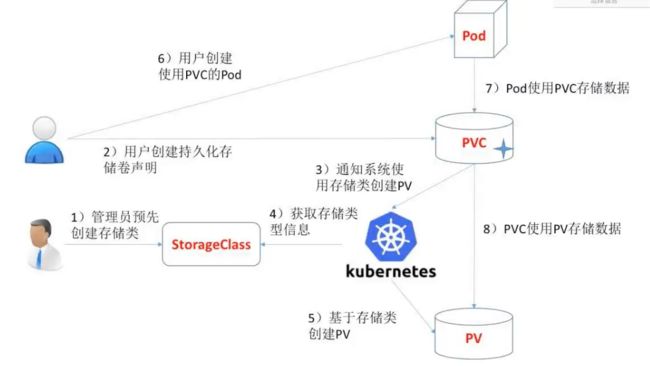
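Depending on the Kubernetes version and the installer, control-plane nodes may carry the taint `node-role.kubernetes.io/control-plane` instead of, or in addition to, `node-role.kubernetes.io/master`. A sketch of tolerations that cover both keys, so the DaemonSet above would still reach those nodes; check the actual taints with `kubectl describe node` before relying on it.

```yaml
# Fragment of the DaemonSet Pod template spec: tolerate either control-plane taint key.
tolerations:
- key: node-role.kubernetes.io/master
  operator: Exists
  effect: NoSchedule
- key: node-role.kubernetes.io/control-plane
  operator: Exists
  effect: NoSchedule
```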

5.3.8 PV/PVC

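- Decouples Pods from storage, so the storage can be changed without modifying the Pod; it also isolates applications' storage access permissions from each other.

- PersistentVolume and PersistentVolumeClaim

- A PersistentVolume (PV) is a piece of storage provisioned by an administrator. It is a resource in the cluster, just as a node is a cluster resource. PVs are volume plugins like Volumes, but have a lifecycle independent of any Pod that uses the PV. This API object captures the details of the storage implementation, be it NFS, iSCSI, or a cloud-provider-specific storage system.

- A PersistentVolumeClaim (PVC) is a user's request for storage. It is similar to a Pod: Pods consume node resources and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and access mode (for example, they can be mounted ReadWriteOnce or ReadOnlyMany).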

Kubernetes official documentation:

https://kubernetes.io/docs/concepts/storage/persistent-volumes/#mount-options

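PersistentVolume parameters:

~# kubectl explain persistentvolume.spec

capacity: size of the PV, see kubectl explain persistentvolume.spec.capacity

accessModes: access modes, see kubectl explain persistentvolume.spec.accessModes

ReadWriteOnce: the PV can be mounted read-write by a single node (RWO)

ReadOnlyMany: the PV can be mounted by many nodes, but only read-only (ROX)

ReadWriteMany: the PV can be mounted read-write by many nodes (RWX)

ReadWriteOncePod: the PV can be mounted read-write by a single Pod only (RWOP); supported only for CSI volumes on Kubernetes v1.22+

persistentVolumeReclaimPolicy: reclaim policy, see kubectl explain persistentvolumes.spec.persistentVolumeReclaimPolicy

- Retain: when the PVC is deleted, the PV and its data are kept as-is; an administrator must clean up and delete it manually

- Delete: the PV and the associated storage asset in the backing storage are deleted automatically

- Recycle: performs a basic scrub that deletes all data on the volume (including directories and hidden files) and makes the PV available again; only NFS and hostPath support it, and it is deprecated

volumeMode: volume mode, see kubectl explain persistentvolumes.spec.volumeMode

Defines whether the volume is consumed as a raw block device (Block) or as a filesystem (Filesystem); the default is Filesystem.

mountOptions: a list of additional mount options for finer-grained control, e.g. ro.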
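A sketch of a statically provisioned NFS PV that uses the parameters above. It reuses the NFS server and export from section 5.3.5.4; the PV name, the label and the NFS version option are assumptions (nfsvers=4.1 only works if the server supports NFSv4.1).

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-n56                     # hypothetical name
  labels:
    storage: nfs-n56                   # hypothetical label for PVC selectors
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany                      # NFS can be mounted read-write by many nodes
  persistentVolumeReclaimPolicy: Retain
  volumeMode: Filesystem
  mountOptions:
  - nfsvers=4.1                        # assumption: server supports NFSv4.1
  nfs:
    server: 172.31.7.109
    path: /data/magedu/n56
```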

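PersistentVolumeClaim parameters:

~# kubectl explain PersistentVolumeClaim

accessModes: access modes, same as for PVs, see kubectl explain PersistentVolumeClaim.spec.accessModes

resources: the amount of storage the PVC requests

https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

selector: label selector that chooses the PV to bind

matchLabels: match by label key and value

matchExpressions: match by set-based expressions (In, NotIn, Exists, DoesNotExist); make sure the match is precise so the PVC is not bound to the wrong PV

volumeName: name of the PV to bind

volumeMode: volume mode; defines whether the PVC uses a raw block device or a filesystem, the default is Filesystem.

- A PVC must be in the same namespace as the Pod that uses it.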
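A sketch of a PVC and a Deployment that consumes it. It assumes a statically provisioned PV labeled `storage: nfs-n56` with ReadWriteMany and at least 10Gi exists; all names are hypothetical. `storageClassName: ""` keeps a default StorageClass from dynamically provisioning a new volume, and the claim and the Deployment sit in the same namespace.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-n56            # hypothetical name
  namespace: default           # same namespace as the Pods that use it
spec:
  accessModes:
  - ReadWriteMany
  volumeMode: Filesystem
  storageClassName: ""         # bind only to PVs without a StorageClass
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      storage: nfs-n56         # assumption: label carried by the target PV
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-pvc-demo         # hypothetical name
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pvc-demo
  template:
    metadata:
      labels:
        app: nginx-pvc-demo
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: nfs-pvc-n56   # the Pod refers to the claim, not to the PV
```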