Big Data Smart Travel Development, Week 1: An Overview of the Smart Travel Underlying Data Architecture


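0. Big Data Environment Prerequisites

I. About This Document

To keep everyone's operating system and software environment consistent, we standardize the basic software environment before class, so that the same software versions are used throughout the course.

II. VMware and Linux Versions

VMware version:

Any VMware version 10 or later will do. To install VMware, run the installer and click Next through every step. The installation package includes a license key for activating the software.

Linux version:

Use CentOS on every machine, specifically CentOS 7.6 (64-bit).

Torrent download URL: http://mirrors.aliyun.com/centos/7.6.1810/isos/x86_64/CentOS-7-x86_64-DVD-1810.torrent

Alternatively, download it from the official website:

1. Search for CentOS → 2. open the official CentOS site → 3. click DVD ISO → 4. download from the mirror link http://ap.stykers.moe/centos/7.6.1810/isos/x86_64/CentOS-7-x86_64-DVD-1810.iso.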

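III. Installing Linux in VMware

IV. Preparing the Three Linux Servers

IP configuration on the three machines

On each of the three machines, edit the network interface configuration: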

vi /etc/sysconfig/network-scripts/ifcfg-ens33

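BOOTPROTO="static"

ONBOOT="yes"

IPADDR=192.168.52.100

NETMASK=255.255.255.0

GATEWAY=192.168.52.1

DNS1=8.8.8.8

Set IPADDR to each machine's own address, as listed below. ONBOOT="yes" brings the interface up at boot. After saving the file, apply the change with:

systemctl restart network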

Prepare three Linux machines with the following IP addresses:

Machine 1: 192.168.52.100

Machine 2: 192.168.52.110

Machine 3: 192.168.52.120
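Typos in these files are the first cause listed in the ping troubleshooting section later in this document. The following is a minimal bash sketch that prints the settings for a given address, so you do not have to type them by hand. render_ifcfg is a hypothetical helper name. The netmask, gateway and DNS values are the ones used above. The sketch only writes to standard output and never touches /etc:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print static-IP settings for ifcfg-ens33.
# Output goes to stdout only; review it before copying it into
# /etc/sysconfig/network-scripts/ifcfg-ens33 on the target machine.
render_ifcfg() {
  local ip="$1"
  # Four dot-separated groups of 1-3 digits (does not check the 0-255 range).
  local re='^([0-9]{1,3}\.){3}[0-9]{1,3}$'
  if [[ ! "$ip" =~ $re ]]; then
    echo "invalid IP: $ip" >&2
    return 1
  fi
  # ONBOOT="yes" brings the interface up at boot.
  cat <<EOF
BOOTPROTO="static"
ONBOOT="yes"
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=192.168.52.1
DNS1=8.8.8.8
EOF
}
```

For example, render_ifcfg 192.168.52.110 prints the block for machine 2. A malformed address such as 192.168.52 is rejected before it ever reaches the config file.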

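Disable the firewall on the three machines

On all three machines, run the following commands as root to stop the firewall and keep it from starting at boot: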

systemctl stop firewalld

systemctl disable firewalld

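Disable SELinux on the three machines

On all three machines, as root, edit the SELinux configuration file and set SELINUX to disabled. The change takes effect after a reboot. To switch SELinux to permissive mode immediately for the running system, run setenforce 0.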

vi /etc/selinux/config

SELINUX=disabled
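Editing the file by hand works, but the same change can be scripted with sed. The following is a minimal bash sketch with the hypothetical name disable_selinux_in_config. The path is a parameter, so you can try the function on a copy first. As with the manual edit, the new value only applies after a reboot:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file
# (default /etc/selinux/config). The SELINUXTYPE= line is left untouched
# because the pattern requires "=" right after "SELINUX".
disable_selinux_in_config() {
  local conf="${1:-/etc/selinux/config}"
  [[ -f "$conf" ]] || { echo "no such file: $conf" >&2; return 1; }
  if grep -q '^SELINUX=' "$conf"; then
    # Replace the current value (enforcing or permissive) in place.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
  else
    echo 'SELINUX=disabled' >> "$conf"
  fi
}
```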

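Change the hostname on the three machines

Set the hostnames as follows:

Machine 1: node01.kaikeba.com

Machine 2: node02.kaikeba.com

Machine 3: node03.kaikeba.com

On CentOS 7 you can set the hostname in one step with hostnamectl. The command below is for machine 1; use node02.kaikeba.com and node03.kaikeba.com on the other two:

hostnamectl set-hostname node01.kaikeba.com

Alternatively, edit /etc/hostname directly. On machine 1, run: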

vi /etc/hostname

node01.kaikeba.com

On machine 2, run:

vi /etc/hostname

node02.kaikeba.com

On machine 3, run:

vi /etc/hostname

node03.kaikeba.com

Map hostnames to IP addresses on the three machines

On all three machines, edit /etc/hosts and add the following hostname-to-IP mappings:

vi /etc/hosts

192.168.52.100 node01.kaikeba.com node01

192.168.52.110 node02.kaikeba.com node02

192.168.52.120 node03.kaikeba.com node03
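Adding these lines by hand on three machines is easy to get wrong, and running an append twice duplicates the entries. The following is a minimal bash sketch using the hypothetical helper add_cluster_hosts. It adds each mapping only if its IP is not already present. The hosts-file path is a parameter, so you can test it on a copy:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the cluster's hostname mappings to a hosts
# file (default /etc/hosts), skipping any IP that already has an entry,
# so running it twice does not create duplicates.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip
  for entry in \
      "192.168.52.100 node01.kaikeba.com node01" \
      "192.168.52.110 node02.kaikeba.com node02" \
      "192.168.52.120 node03.kaikeba.com node03"; do
    ip="${entry%% *}"   # the first field is the IP address
    # awk exits 0 only if some line already starts with this IP.
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```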

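Set the time zone on the three machines

Select Asia > China > Beijing time, that is, copy the Asia/Shanghai zone file over /etc/localtime: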

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

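Synchronize time on the three machines

On all three machines, run the following commands to sync the clock with the Alibaba Cloud time server on a schedule. You can first set the clock by hand with date -s (the timestamp below is only an example):

date -s '2018-10-11 20:55:55'

yum -y install ntpdate

crontab -e

Add the following entry, which runs ntpdate once a minute:

*/1 * * * * /usr/sbin/ntpdate time1.aliyun.com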

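Create a common directory for extracted installation packages on the three machines

mkdir -p /opt/cdh  # directory for extracted installation packages

Install the JDK on the three machines

Configure the environment variables to match the JDK you install.

Reconnect to the three machines as root and install the JDK as root.

Upload the archive to root's home directory on the first server (/root, the ~ shown in the [root@localhost ~] prompt; any location will do). Extract it and configure the environment variables. Repeat on all three machines.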

[root@localhost ~]# vi .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

JAVA_HOME=/usr/java/jdk1.8.0_211-amd64

PATH=$PATH:$HOME/bin:$JAVA_HOME/bin

export JAVA_HOME

export PATH

Apply the changes with source ~/.bash_profile, then check the installation with java -version.

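Passwordless SSH login for root

On all three machines, run the following command as root to generate a public/private key pair (press Enter to accept the defaults):

ssh-keygen -t rsa

Then, on all three machines as root, copy the public key to every server: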

ssh-copy-id node01

ssh-copy-id node02

ssh-copy-id node03
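You can run the three ssh-copy-id commands as a single loop. The following is a minimal bash sketch. copy_key_to_nodes and the DRY_RUN switch are hypothetical conveniences. The key path assumes the default file written by ssh-keygen -t rsa (~/.ssh/id_rsa.pub):

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy root's public key to every node given as an argument.
# With DRY_RUN=1 the commands are only printed, which lets you check the
# host list before running it for real.
copy_key_to_nodes() {
  local node
  for node in "$@"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub $node"
    else
      # Stop at the first node that fails so the problem is visible.
      ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$node" || return 1
    fi
  done
}
```

Usage: copy_key_to_nodes node01 node02 node03, run on each of the three machines.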

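V. Troubleshooting: The Network Cannot Be Pinged

1. The IP is configured incorrectly, or a configuration key is misspelled.

2. The VMnet8 adapter's IP should preferably be on a different subnet from the host's local network. If it is on the same subnet, make sure the VMnet8 IP is not the same as the local gateway. Otherwise the VM may be unable to reach the Internet.

3. Check whether the virtual network's subnet is on the same subnet as the local network.

4. If the virtual network settings look correct but the VM still cannot ping the network, configure the virtual network again.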

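VI. Troubleshooting: .ssh Is Created as a File Instead of a Directory During Passwordless Login Setup

1. Close any other open root sessions. Then delete the ~/.ssh file and recreate it as a directory with mode 700 (rm -f ~/.ssh && mkdir -m 700 ~/.ssh), and redo the passwordless-login steps. You do not need to delete the root account itself with userdel -r root; doing so would also remove root's home directory.

2. Then copy the key again, for example: ssh-copy-id -i ~/.ssh/id_rsa.pub node01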

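I. Pre-class Preparation

1. A personal computer (1 TB disk, 16 GB RAM or more, 4-core 8th-generation CPU) or a cloud environment (three VMs, disk 40 GB or more, RAM 16 GB or more, 4-core CPU).

2. Prepare the project runtime environment: 3 CentOS 7 Linux VMs, each with a 50 GB disk, 4 GB RAM and a 4-core CPU.

3. Download CDH 5.14.

4. Download and install JDK 8.

II. Lesson Topics

1. Course overview

2. Project overview

3. The technologies used and their application scenarios

III. Lesson Objectives

1. Get an overall picture of the technologies used in the project.

2. Understand the overall framework of the project.

3. Understand the overall knowledge points of the course.

IV. Key Points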

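1. Project Architecture and Solutions

1.1 Relieving high-QPS pressure on the business database in real time via the binlog

QPS (Queries Per Second) is the number of queries a server responds to per second. It measures how much traffic a given query server handles within a specified time.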
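As a worked example of the definition, QPS is a count divided by a time window. One common way to obtain the count on MySQL is to read the Questions status counter (SHOW GLOBAL STATUS LIKE 'Questions') twice and take the difference. The following is a minimal bash sketch of the arithmetic. The function name qps is hypothetical, and integer division rounds down:

```bash
#!/usr/bin/env bash
# Hypothetical helper: average QPS = queries handled / window length in seconds.
# Uses integer arithmetic, so the result is rounded down.
qps() {
  local queries="$1" seconds="$2"
  if (( seconds <= 0 )); then
    echo "time window must be positive" >&2
    return 1
  fi
  echo $(( queries / seconds ))
}
```

For example, qps 6000 60 prints 100: 6,000 queries in one minute is an average of 100 queries per second.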

The binary log, as introduced in the official MySQL documentation:

MySQL typically writes the following types of log files:

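| Log type | Information written to the log |
|---|---|
| Error log | Problems encountered starting, running, or stopping mysqld |
| General query log | Established client connections and statements received from clients |
| Binary log | Statements that change data |
| Relay log | Data changes received from a replication source (master) server |
| Slow query log | Queries that took more than long_query_time seconds to execute, or that did not use indexes |
| DDL log (metadata log) | Metadata operations performed by DDL statements |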

Business background:

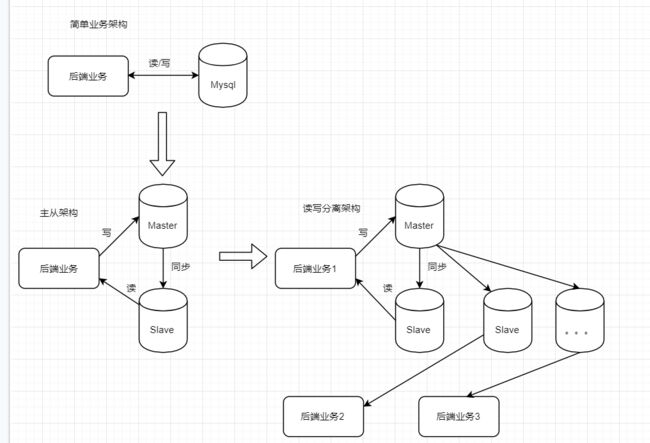

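When developing a business system, we usually start with a relational database (MySQL, Oracle). As business and data volume grow, the architecture evolves into a master-slave setup, where the master handles writes and the slaves handle reads. As volume keeps growing, we shard databases and tables to reduce the amount of data in each table, because a single table of roughly 4 to 5 million rows performs unacceptably poorly. As the business continues to grow, however, sharding makes the database cluster more and more complex to maintain and introduces further problems:

- Secondary indexes are only effective locally, within each shard;

- Because databases are split, joins across them are not possible; because tables are split, aggregations such as count, order by, and group by can no longer be done directly;

- Scaling out: another horizontal split requires migrating data, and so on.

Consider the following business scenario:

1. Business updates are written to the database.

2. The updated data must be passed in real time to downstream business processes that depend on it.

A typical architecture for this is: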

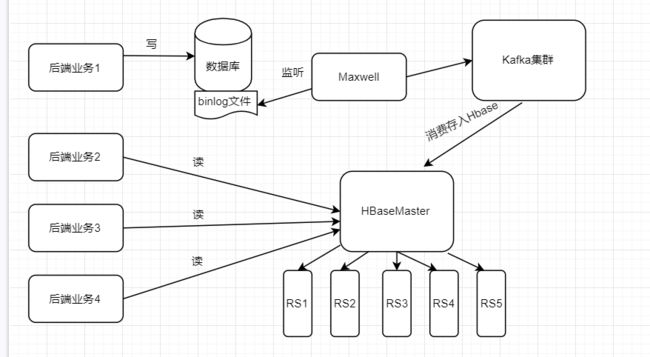

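However, this architecture has notable drawbacks. The project has to maintain a lot of message-sending code, and every new or changed message adds maintenance cost. A better approach is to feed the database's data directly into a streaming system. Big data ecosystem technologies then decouple downstream processing of business data from the business database and maximize read capacity. This reduces the load on the business database, which only has to handle writes. The new architecture is shown below:

Maxwell pulls the MySQL binlog and monitors it in real time.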

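1.2 A built-in source-code module for fine-grained monitoring of Spark jobs, with prompt email alerts on failure

Business background:

When analyzing data with Spark, we often need to tune Spark applications. This requires knowing how the jobs, stages, and tasks inside an application are running. The Spark UI already shows some runtime information about jobs, stages, and tasks, but only at a coarse granularity. If the business needs finer-grained monitoring, we have to write code for it. We can also add custom behavior, such as emailing operations staff when a task fails, so they can promptly keep track of how the business is running and how cluster resources are being used.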

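- Monitor the start of the whole application

- Monitor the end of the whole application

- Monitor the start of each job

- Monitor the end of each job

- Monitor Spark stage submission

- Monitor Spark stage completion

- Monitor Spark task start

- Monitor Spark task completion

- Monitor memory and disk changes across the whole job

- Monitor the job's context environment

- Monitor changes in RDD cache state

- Monitor executor state

Fine-grained runtime monitoring of multiple Spark applications is achieved by implementing SparkListener (the listener for batch Spark applications) and StreamingListener (the listener for Spark Streaming applications).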

1.2.1 Monitoring Spark batch jobs

com.cartravel.programApp.ReadController // starts the SparkEngine

com.cartravel.spark.SparkEngine // mainly responsible for registering the custom SparkAppListener

com.cartravel.spark.SparkAppListener // listens to the running state of batch Spark applications

1.2.2 Monitoring Spark Streaming jobs

com.cartravel.programApp.App // creates the KafkaManager instance

com.cartravel.spark.StreamingMonitor // monitors the state of Spark Streaming jobs

com.cartravel.kafka.KafkaManager // starts StreamingMonitor

StreamingMonitor is registered in the com.cartravel.kafka.KafkaManager class:

val endTime = System.currentTimeMillis()

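// startTime is recorded before the insert (not shown in this excerpt)
Logger.getLogger("TransactionProcessing").info(s"Time taken to insert data (ms): ${endTime - startTime}")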

ssc.addStreamingListener(new StreamingMonitor(ssc , sparkConf , batchDuration.toInt , "query" , rdd , kafkaManager))

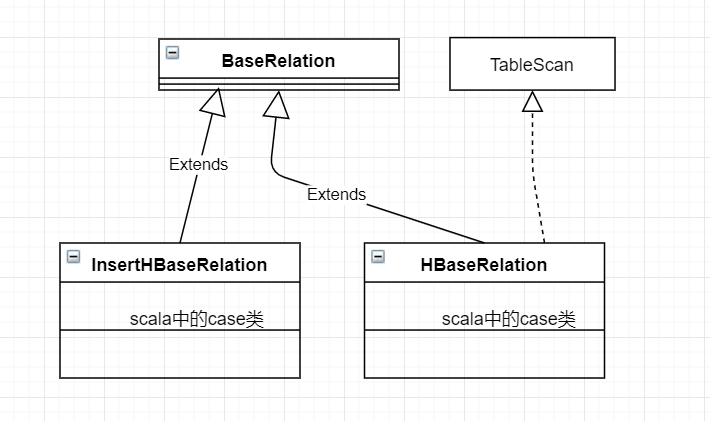

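1.3 Overriding the source code with a custom data source for data loading, pruning columns at the source

Business background:

Spark is currently one of the most popular distributed computing frameworks among open-source big data technologies, and HBase is a distributed column-oriented database built on HDFS. More and more teams use Spark for real-time and offline data analysis and save the results to HBase for real-time queries. Likewise, more and more companies that build user profiles and recommendation systems from user behavior data use HBase as the storage medium for clients. However, the user and item data stored in HBase often has many attributes, while we use only a few of those columns, so we need to query HBase on demand, that is, by column. When Spark is integrated with HBase, it performs a full table scan by default. If the table holds tens of gigabytes of data, all of it is scanned into memory at once and then filtered by column in memory, which puts pressure on memory, disk, and network I/O. Scanning only the required columns can reduce this pressure by tens or even hundreds of times.

Implementation: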

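1.4 Custom Kafka offset management for exactly-once processing

Business background:

In typical projects that integrate Spark Streaming with Kafka, Kafka's automatic offset management meets most needs. In some scenarios, however, each message in a topic must be processed only once, with no duplicate consumption. In that case we need to manage offsets manually.

Implementation:

1. In the consumer used for business processing, disable Kafka's automatic offset commit (enable.auto.commit=false).

2. Save the offsets manually to a data node (znode) in ZooKeeper, or to HBase.

3. At the start of processing, first read the offsets from ZooKeeper (or HBase). If offsets are found, consume from them; if not, consume from latest. After processing, save the consumed offsets back to the ZooKeeper data node.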
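The three steps above can be sketched as follows. This minimal bash illustration shows the control flow only. A plain text file stands in for the ZooKeeper znode (or HBase row), and get_start_offset and commit_offset are hypothetical names, not part of any Kafka or Spark API. Following Kafka's convention, the stored value is the offset of the next record to consume. Committing offsets after processing, as in step 3, gives at-least-once delivery on its own. Exactly-once output also requires either writing the results and the offsets atomically, or making the downstream writes idempotent.

```bash
#!/usr/bin/env bash
# Sketch of "read stored offset, else start from latest, commit after processing".
# The store is a text file with one "topic partition offset" entry per line,
# standing in for the ZooKeeper znode or HBase row used in the real project.

# Print the stored offset for a topic/partition, or "latest" if none is stored.
get_start_offset() {
  local store="$1" topic="$2" partition="$3" found
  found="$(awk -v t="$topic" -v p="$partition" '$1 == t && $2 == p { print $3 }' \
             "$store" 2>/dev/null | tail -n 1)"
  echo "${found:-latest}"
}

# After a batch is processed, record the next offset to consume.
commit_offset() {
  local store="$1" topic="$2" partition="$3" offset="$4" tmp
  tmp="$(mktemp)"
  # Keep every other partition's entry, then append the new one.
  awk -v t="$topic" -v p="$partition" '!($1 == t && $2 == p)' "$store" 2>/dev/null > "$tmp"
  echo "$topic $partition $offset" >> "$tmp"
  # Replace the store in one rename so readers never see a half-written file.
  mv "$tmp" "$store"
}
```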

1.5 Development conventions for front-end/back-end REST interfaces

[See the REST design document]

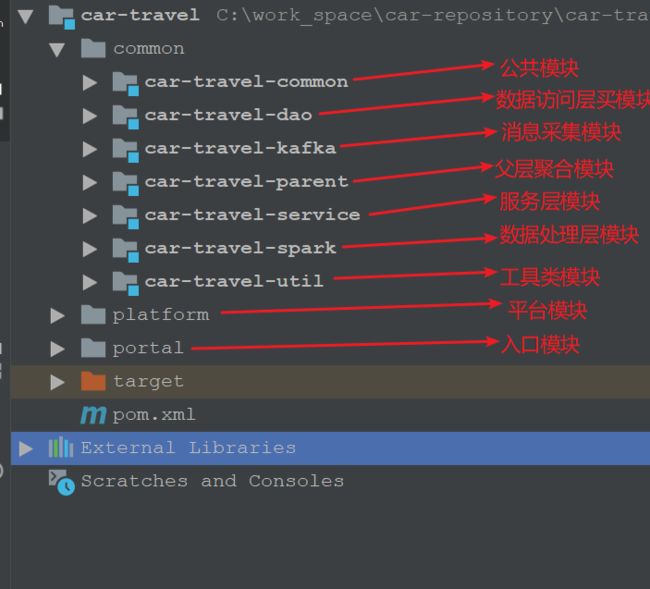

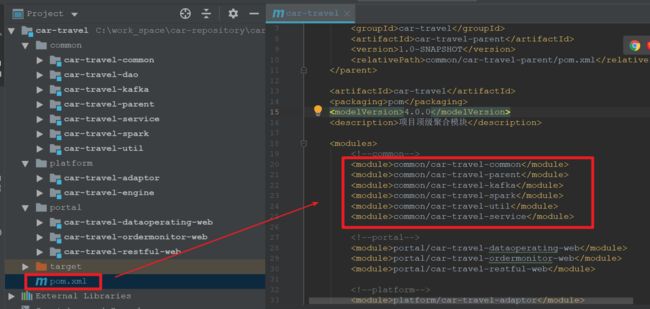

2. Setting Up the Project Modules

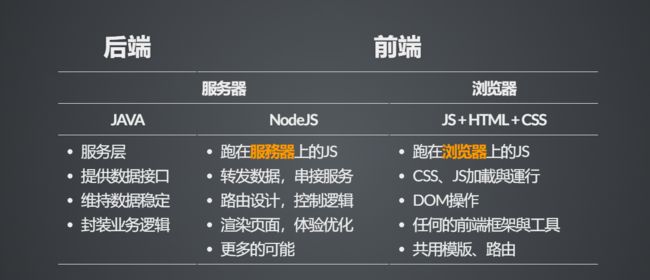

2.1 Separating the front-end and back-end modules

In short: a front-end project plus back-end interfaces.

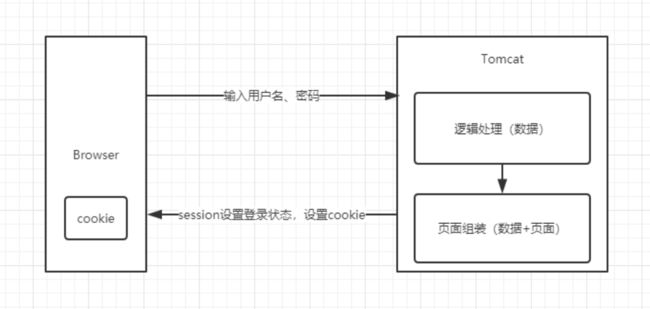

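In traditional projects, a single service serves both the front end and the back end, and the back-end code is embedded in the front-end pages.

Page code written in Java: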

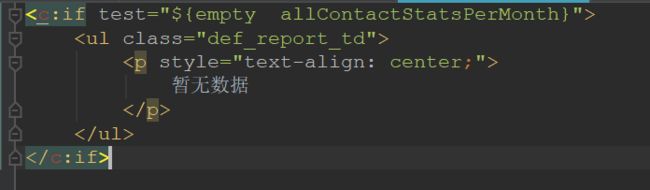

JSTL tags in JSP:

Page code written in PHP:

How requests are handled:

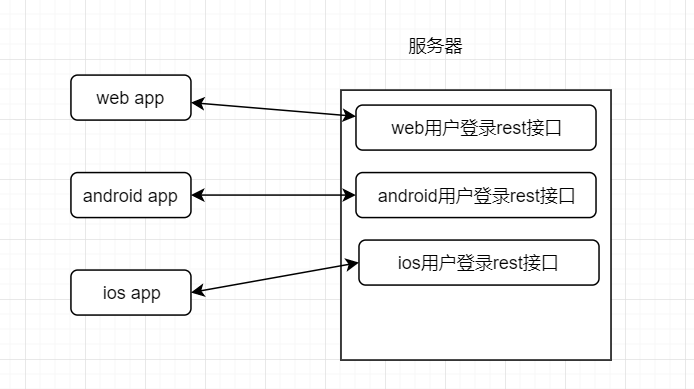

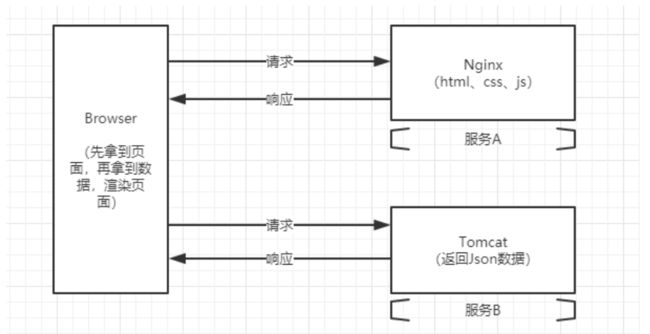

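With the rapid development of the Internet in recent years, the traditional approach can no longer meet business needs. As various front-end frameworks emerged, architectures that separate the front end from the back end gradually appeared, and the project structure changed as shown below:

How requests are handled:

Implementation:

1. The back end provides RESTful interfaces that return JSON data to the front end.

2. Deployment: after the front-end project is developed, its compiled static output is placed into the back-end project and released and deployed together with it.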

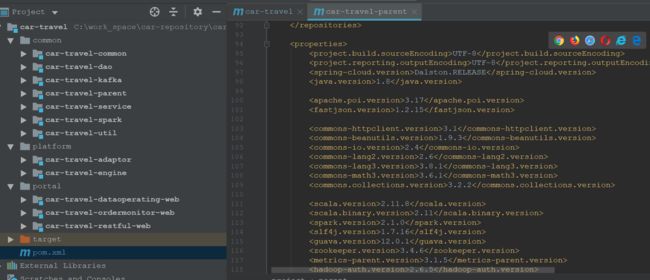

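2.2 Version conventions for project dependencies

1. The whole project uses Maven for module management.

2. Dependency version management

3. Setting Up the Project Platform (Cloudera)

See the [Cloudera Platform Setup] tutorial.

3.1 Setting up Cloudera services

3.2 Setting up the Cloudera Hadoop ecosystem

3.3 Setting up the Cloudera distributed messaging system

4. High-Concurrency Solutions for the Business Database and Their Architecture

The load on a business database comes mainly from two kinds of operations: writes (inserts, updates, deletes) and reads. Sharding databases and tables can handle writes. Reads are harder, especially complex reports that need statistics over a whole table's data. With sharding, the code must query several databases and tables and then merge the results, and such frequent operations put heavy pressure on the business database. The usual industry practice here is read/write separation. The write side only deals with producing and modifying data, and the read side only with querying it. Our job is to optimize the read performance of the business data.

4.1 Persisting the business database binlog

1. Enable the MySQL binlog.

4.2 Capturing the binlog in real time and parsing it into a distributed message queue

1. Configure Maxwell, ZooKeeper, and Kafka.

5. Developing the Project's common Module

1. Initial setup of the common module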

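V. Installing MySQL with yum

CentOS 7 installs MariaDB, a fork of MySQL, by default. We still need MySQL itself, and once MySQL is installed it replaces MariaDB.

1. Install wget

wget is used to download the MySQL package and the yum repository file: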

yum install -y wget

[root@node02 ~]# wget -i -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

-bash: wget: command not found

You have new mail in /var/spool/mail/root

[root@node02 ~]# yum install -y wget

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.jdcloud.com

* extras: mirror.jdcloud.com

* updates: mirrors.tuna.tsinghua.edu.cn

Resolving Dependencies

--> Running transaction check

---> Package wget.x86_64 0:1.14-18.el7_6.1 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================

Package Arch Version Repository Size

===================================================================================================

Installing:

wget x86_64 1.14-18.el7_6.1 base 547 k

Transaction Summary

===================================================================================================

Install 1 Package

Total download size: 547 k

Installed size: 2.0 M

Downloading packages:

wget-1.14-18.el7_6.1.x86_64.rpm | 547 kB 00:00:08

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : wget-1.14-18.el7_6.1.x86_64 1/1

Verifying : wget-1.14-18.el7_6.1.x86_64 1/1

Installed:

wget.x86_64 0:1.14-18.el7_6.1

Complete!

[root@node02 ~]#

2. 下载并安装MySQL官方的 Yum Repository

[root@node02 ~]# wget -i -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

--2019-10-11 14:31:09-- http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

Resolving dev.mysql.com (dev.mysql.com)... 137.254.60.11

Connecting to dev.mysql.com (dev.mysql.com)|137.254.60.11|:80... connected.

HTTP request sent, awaiting response... 301 Moved Permanently

Location: https://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm [following]

--2019-10-11 14:31:11-- https://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

Connecting to dev.mysql.com (dev.mysql.com)|137.254.60.11|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://repo.mysql.com//mysql57-community-release-el7-10.noarch.rpm [following]

--2019-10-11 14:31:14-- https://repo.mysql.com//mysql57-community-release-el7-10.noarch.rpm

Resolving repo.mysql.com (repo.mysql.com)... 104.93.1.42

Connecting to repo.mysql.com (repo.mysql.com)|104.93.1.42|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 25548 (25K) [application/x-redhat-package-manager]

Saving to: ‘mysql57-community-release-el7-10.noarch.rpm’

100%[=========================================================>] 25,548 --.-K/s in 0.001s

2019-10-11 14:31:15 (31.3 MB/s) - ‘mysql57-community-release-el7-10.noarch.rpm’ saved [25548/25548]

-c: No such file or directory

No URLs found in -c.

FINISHED --2019-10-11 14:31:15--

Total wall clock time: 5.9s

Downloaded: 1 files, 25K in 0.001s (31.3 MB/s)

You have new mail in /var/spool/mail/root

[root@node02 ~]#

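The command above downloads the MySQL Yum Repository package (about 25 KB), which can then be installed directly with yum. The messages "-c: No such file or directory" and "No URLs found in -c." appear because -i tells wget to read URLs from a file, so wget treated -c as a file name. The correct form is wget -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm.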

[root@node02 ~]# yum -y install mysql57-community-release-el7-10.noarch.rpm

Loaded plugins: fastestmirror

Examining mysql57-community-release-el7-10.noarch.rpm: mysql57-community-release-el7-10.noarch

Marking mysql57-community-release-el7-10.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package mysql57-community-release.noarch 0:el7-10 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================

Package Arch Version Repository Size

===================================================================================================

Installing:

mysql57-community-release noarch el7-10 /mysql57-community-release-el7-10.noarch 30 k

Transaction Summary

===================================================================================================

Install 1 Package

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : mysql57-community-release-el7-10.noarch 1/1

Verifying : mysql57-community-release-el7-10.noarch 1/1

Installed:

mysql57-community-release.noarch 0:el7-10

Complete!

You have new mail in /var/spool/mail/root

[root@node02 ~]#

Next, install MySQL itself with yum. This step may take a while; once it completes, MariaDB is replaced.

[root@node02 ~]# yum -y install mysql-community-server

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.jdcloud.com

* extras: mirror.jdcloud.com

* updates: mirrors.tuna.tsinghua.edu.cn

mysql-connectors-community | 2.5 kB 00:00:00

mysql-tools-community | 2.5 kB 00:00:00

mysql57-community | 2.5 kB 00:00:00

(1/3): mysql57-community/x86_64/primary_db | 184 kB 00:00:01

(2/3): mysql-tools-community/x86_64/primary_db | 61 kB 00:00:02

(3/3): mysql-connectors-community/x86_64/primary_db | 44 kB 00:00:03

Resolving Dependencies

--> Running transaction check

---> Package mysql-community-server.x86_64 0:5.7.27-1.el7 will be installed

--> Processing Dependency: mysql-community-common(x86-64) = 5.7.27-1.el7 for package: mysql-community-server-5.7.27-1.el7.x86_64

--> Processing Dependency: mysql-community-client(x86-64) >= 5.7.9 for package: mysql-community-server-5.7.27-1.el7.x86_64

--> Running transaction check

---> Package mysql-community-client.x86_64 0:5.7.27-1.el7 will be installed

--> Processing Dependency: mysql-community-libs(x86-64) >= 5.7.9 for package: mysql-community-client-5.7.27-1.el7.x86_64

---> Package mysql-community-common.x86_64 0:5.7.27-1.el7 will be installed

--> Running transaction check

---> Package mariadb-libs.x86_64 1:5.5.60-1.el7_5 will be obsoleted

--> Processing Dependency: libmysqlclient.so.18()(64bit) for package: 2:postfix-2.10.1-7.el7.x86_64

--> Processing Dependency: libmysqlclient.so.18(libmysqlclient_18)(64bit) for package: 2:postfix-2.10.1-7.el7.x86_64

---> Package mysql-community-libs.x86_64 0:5.7.27-1.el7 will be obsoleting

--> Running transaction check

---> Package mysql-community-libs-compat.x86_64 0:5.7.27-1.el7 will be obsoleting

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================

Package Arch Version Repository Size

===================================================================================================

Installing:

mysql-community-libs x86_64 5.7.27-1.el7 mysql57-community 2.2 M

replacing mariadb-libs.x86_64 1:5.5.60-1.el7_5

mysql-community-libs-compat x86_64 5.7.27-1.el7 mysql57-community 2.0 M

replacing mariadb-libs.x86_64 1:5.5.60-1.el7_5

mysql-community-server x86_64 5.7.27-1.el7 mysql57-community 165 M

Installing for dependencies:

mysql-community-client x86_64 5.7.27-1.el7 mysql57-community 24 M

mysql-community-common x86_64 5.7.27-1.el7 mysql57-community 275 k

Transaction Summary

===================================================================================================

Install 3 Packages (+2 Dependent packages)

Total download size: 194 M

Downloading packages:

warning: /var/cache/yum/x86_64/7/mysql57-community/packages/mysql-community-common-5.7.27-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

Public key for mysql-community-common-5.7.27-1.el7.x86_64.rpm is not installed

(1/5): mysql-community-common-5.7.27-1.el7.x86_64.rpm | 275 kB 00:00:01

(2/5): mysql-community-libs-5.7.27-1.el7.x86_64.rpm | 2.2 MB 00:00:04

(3/5): mysql-community-libs-compat-5.7.27-1.el7.x86_64.rpm | 2.0 MB 00:00:06

mysql-community-client-5.7.27- FAILED 18 MB 170:42:08 ETA

http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-client-5.7.27-1.el7.x86_64.rpm: [Errno 12] Timeout on http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-client-5.7.27-1.el7.x86_64.rpm: (28, 'Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds')

Trying other mirror.

mysql-community-server-5.7.27- FAILED 16 MB --:--:-- ETA

http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-server-5.7.27-1.el7.x86_64.rpm: [Errno 12] Timeout on http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-server-5.7.27-1.el7.x86_64.rpm: (28, 'Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds')

Trying other mirror.

(4/5): mysql-community-client-5.7.27-1.el7.x86_64.rpm | 24 MB 00:11:58

mysql-community-server-5.7.27- FAILED 4 MB 2600:47:50 ETA

http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-server-5.7.27-1.el7.x86_64.rpm: [Errno 12] Timeout on http://repo.mysql.com/yum/mysql-5.7-community/el/7/x86_64/mysql-community-server-5.7.27-1.el7.x86_64.rpm: (28, 'Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds')

Trying other mirror.

(5/5): mysql-community-server-5.7.27-1.el7.x86_64.rpm | 165 MB 00:07:44

---------------------------------------------------------------------------------------------------

Total 148 kB/s | 194 MB 00:22:20

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

Importing GPG key 0x5072E1F5:

Userid : "MySQL Release Engineering "

Fingerprint: a4a9 4068 76fc bd3c 4567 70c8 8c71 8d3b 5072 e1f5

Package : mysql57-community-release-el7-10.noarch (installed)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : mysql-community-common-5.7.27-1.el7.x86_64 1/6

Installing : mysql-community-libs-5.7.27-1.el7.x86_64 2/6

Installing : mysql-community-client-5.7.27-1.el7.x86_64 3/6

Installing : mysql-community-server-5.7.27-1.el7.x86_64 4/6

Installing : mysql-community-libs-compat-5.7.27-1.el7.x86_64 5/6

Erasing : 1:mariadb-libs-5.5.60-1.el7_5.x86_64 6/6

Verifying : mysql-community-libs-compat-5.7.27-1.el7.x86_64 1/6

Verifying : mysql-community-common-5.7.27-1.el7.x86_64 2/6

Verifying : mysql-community-server-5.7.27-1.el7.x86_64 3/6

Verifying : mysql-community-client-5.7.27-1.el7.x86_64 4/6

Verifying : mysql-community-libs-5.7.27-1.el7.x86_64 5/6

Verifying : 1:mariadb-libs-5.5.60-1.el7_5.x86_64 6/6

Installed:

mysql-community-libs.x86_64 0:5.7.27-1.el7 mysql-community-libs-compat.x86_64 0:5.7.27-1.el7

mysql-community-server.x86_64 0:5.7.27-1.el7

Dependency Installed:

mysql-community-client.x86_64 0:5.7.27-1.el7 mysql-community-common.x86_64 0:5.7.27-1.el7

Replaced:

mariadb-libs.x86_64 1:5.5.60-1.el7_5

# "Complete!" means the installation succeeded

Complete!

You have new mail in /var/spool/mail/root

# MariaDB packages are no longer listed

[root@node02 ~]# rpm -qa|grep mariadb

You have new mail in /var/spool/mail/root

[root@node02 ~]#

3. MySQL database setup

First, start MySQL:

# start the MySQL service

[root@node02 ~]# systemctl start mysqld.service

# check the MySQL service status

[root@node02 ~]# systemctl status mysqld.service

● mysqld.service - MySQL Server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; enabled; vendor preset: disabled)

# "active (running)" means MySQL has started

Active: active (running) since Fri 2019-10-11 15:14:57 CST; 6s ago

Docs: man:mysqld(8)

http://dev.mysql.com/doc/refman/en/using-systemd.html

Process: 22525 ExecStart=/usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid $MYSQLD_OPTS (code=exited, status=0/SUCCESS)

Process: 22449 ExecStartPre=/usr/bin/mysqld_pre_systemd (code=exited, status=0/SUCCESS)

Main PID: 22528 (mysqld)

CGroup: /system.slice/mysqld.service

└─22528 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid

Oct 11 15:14:54 node02.kaikeba.com systemd[1]: Starting MySQL Server...

Oct 11 15:14:57 node02.kaikeba.com systemd[1]: Started MySQL Server.

[root@node02 ~]#

MySQL is now running. To log in, we first need the temporary password generated for root, which can be found in the log file:

# find the temporary root password (here: 7UOv>SVzygyB)

[root@node02 ~]# grep "password" /var/log/mysqld.log

2019-10-11T07:14:54.482816Z 1 [Note] A temporary password is generated for root@localhost: 7UOv>SVzygyB

You have new mail in /var/spool/mail/root

[root@node02 ~]#
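Setup scripts sometimes need to extract the temporary password by itself. The following is a minimal bash sketch. mysql_temp_password is a hypothetical helper, and it assumes the log line format shown above, with the password as the last field:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the most recent temporary root password from a
# mysqld log (default /var/log/mysqld.log); fails if no such line exists.
mysql_temp_password() {
  local log="${1:-/var/log/mysqld.log}"
  awk '/temporary password is generated/ { pw = $NF }
       END { if (pw != "") print pw; else exit 1 }' "$log"
}
```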

Log in to MySQL:

[root@node02 ~]# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.27

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

# MySQL requires the initial password to be reset first

ERROR 1820 (HY000): You must reset your password using ALTER USER statement before executing this statement.

mysql> show databases;

ERROR 1820 (HY000): You must reset your password using ALTER USER statement before executing this statement.

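# The new password must not be too simple: MySQL's password policy requires a combination of special characters, upper- and lowercase letters, and digits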

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY '!Qaz123456';

# grant the scm user access to the scm database

mysql> grant all on scm.* to scm@'%' identified by '!Qaz123456';

Query OK, 0 rows affected, 1 warning (0.01 sec)

mysql> select user,host from user;

+---------------+-----------+

| user | host |

+---------------+-----------+

| scm | % |

| mysql.session | localhost |

| mysql.sys | localhost |

| root | localhost |

+---------------+-----------+

4 rows in set (0.01 sec)

# reload the grant tables; this is required after editing mysql.user directly (as in the UPDATE below) and is harmless after GRANT

mysql> flush privileges;

Query OK, 0 rows affected (0.01 sec)

# allow root to access the database remotely

mysql> grant all on *.* to root@'%' identified by '!Qaz123456';

mysql> flush privileges;

mysql> select user,host from user;

+---------------+-----------+

| user | host |

+---------------+-----------+

| root | % |

| scm | % |

| mysql.session | localhost |

| mysql.sys | localhost |

| root | localhost |

+---------------+-----------+

5 rows in set (0.00 sec)

mysql> delete from user where user='root' and host='localhost';

Query OK, 1 row affected (0.02 sec)

mysql> select user,host from user;

+---------------+-----------+

| user | host |

+---------------+-----------+

| root | % |

| scm | % |

| mysql.session | localhost |

| mysql.sys | localhost |

+---------------+-----------+

4 rows in set (0.00 sec)

mysql> flush privileges;

mysql> update mysql.user set Grant_priv='Y',Super_priv='Y' where user = 'root' and host = '%';

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

mysql> quit

Bye

You have new mail in /var/spool/mail/root

# restart the MySQL service

[root@node02 ~]# systemctl restart mysqld.service

[root@node02 ~]#
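If the new password is too weak, the ALTER USER step fails with ERROR 1819 (Your password does not satisfy the current policy requirements). The following is a minimal bash pre-check. It assumes MySQL 5.7's default validate_password MEDIUM policy: at least 8 characters, with at least one lowercase letter, one uppercase letter, one digit, and one special character. meets_medium_policy is a hypothetical helper, and the server's own check remains authoritative:

```bash
#!/usr/bin/env bash
# Hypothetical helper: rough pre-check against MySQL 5.7's default
# validate_password MEDIUM policy. Returns 0 if the password looks acceptable.
meets_medium_policy() {
  local pw="$1"
  local special='[^[:alnum:]]'          # any non-alphanumeric character
  (( ${#pw} >= 8 )) || return 1         # minimum length
  [[ "$pw" =~ [[:lower:]] ]] || return 1
  [[ "$pw" =~ [[:upper:]] ]] || return 1
  [[ "$pw" =~ [[:digit:]] ]] || return 1
  [[ "$pw" =~ $special ]] || return 1
}
```

For example, '!Qaz123456' (the password used in this course) passes, while 'abc12345' fails because it has no uppercase letter and no special character.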

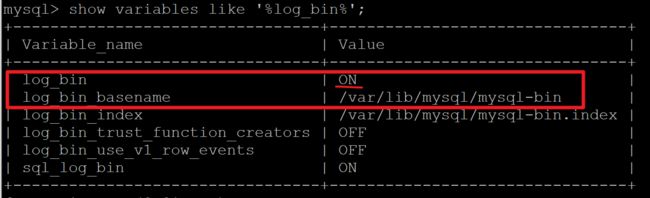

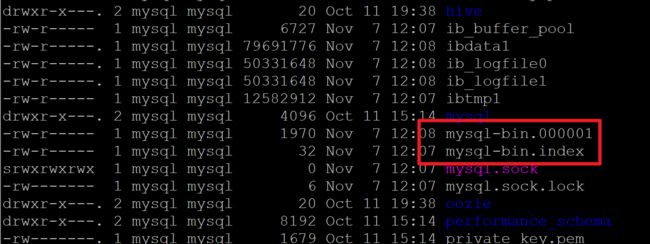

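Enabling the MySQL binlog

The MySQL binlog records changes that update the database, such as inserts, updates, and deletes. Queries such as SELECT and SHOW are not recorded. The binlog is mainly used for master-slave replication and incremental recovery.

Binlog files are only produced when the log-bin option is enabled. For example, a data directory with the binlog enabled contains files like these: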

-rw-rw---- 1 mysql mysql 449229328 Sep 2 19:21 mysql-bin.000001

-rw-rw---- 1 mysql mysql 860032004 Sep 4 15:08 mysql-bin.000002

-rw-rw---- 1 mysql mysql 613773 Sep 4 15:17 mysql-bin.000003

-rw-rw---- 1 mysql mysql 125 Sep 4 15:18 mysql-bin.000004

-rw-rw---- 1 mysql mysql 645768398 Sep 24 00:40 mysql-bin.000005

-rw-rw---- 1 mysql mysql 81087585 Oct 25 14:33 mysql-bin.000006

-rw-rw---- 1 mysql mysql 192 Oct 9 18:25 mysql-bin.index

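3.1: Edit /etc/my.cnf

[mysqld]

# directory and base name of the binlog files

log-bin=/var/lib/mysql/mysql-bin

# log every modified row (row-based logging)

binlog-format=ROW

# ID of this server; must be unique when several servers replicate together

server_id=1

3.2: Restart MySQL (systemctl restart mysqld.service) and verify

Check with the following command; log_bin should show ON:

mysql> show variables like '%log_bin%';

Go to the binlog directory and check whether binlog files are being generated: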

cd /var/lib/mysql/
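Before restarting MySQL, it can help to confirm that the three settings from step 3.1 are actually in the file. The following is a minimal bash sketch. check_binlog_conf is a hypothetical helper that only looks for the exact spellings used above at the start of a line. MySQL also accepts underscores in place of dashes in option names (log_bin, binlog_format, server-id) and spaces around =. This simple check recognizes neither form, and it does not verify that the lines sit under [mysqld]:

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which of the step-3.1 binlog settings are
# missing from a my.cnf file (default /etc/my.cnf). Returns 0 if all present.
check_binlog_conf() {
  local conf="${1:-/etc/my.cnf}" missing=0 key
  for key in 'log-bin=' 'binlog-format=ROW' 'server_id='; do
    if ! grep -q "^${key}" "$conf"; then
      echo "missing: ${key}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```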

4. Installing Maxwell

4.1 Download Maxwell

https://github.com/zendesk/maxwell/releases/download/v1.22.1/maxwell-1.22.1.tar.gz

Maxwell是一个能实时读取MySQL二进制日志binlog,并生成 JSON 格式的消息,作为生产者发送给 Kafka,Kinesis、RabbitMQ、Redis、Google Cloud Pub/Sub、文件或其它平台的应用程序。它的常见应用场景有ETL、维护缓存、收集表级别的dml指标、增量到搜索引擎、数据分区迁移、切库binlog回滚方案等。官网(http://maxwells-daemon.io)、GitHub(https://github.com/zendesk/maxwell)

Maxwell主要提供了下列功能:

支持 SELECT * FROM table 的方式进行全量数据初始化

支持在主库发生failover后,自动恢复binlog位置(GTID)

可以对数据进行分区,解决数据倾斜问题,发送到kafka的数据支持database、table、column等级别的数据分区

工作方式是伪装为Slave,接收binlog events,然后根据schemas信息拼装,可以接受ddl、xid、row等各种event

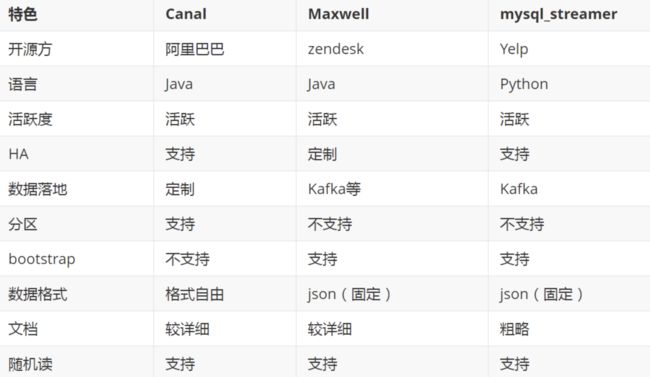

除了Maxwell外,目前常用的MySQL Binlog解析工具主要有阿里的canal、mysql_streamer,三个工具对比如下:

canal 由Java开发,分为服务端和客户端,拥有众多的衍生应用,性能稳定,功能强大;canal 需要自己编写客户端来消费canal解析到的数据。

maxwell相对于canal的优势是使用简单,它直接将数据变更输出为json字符串,不需要再编写客户端

4.2:解压maxwell-1.21.1.tar.gz

tar -zxvf maxwell-1.21.1.tar.gz -C /opt

4.3:Maxwell解析binlog到Kafka

在maxwell的目录下创建driver.properties配置文件并编辑填入如下内容,主要用来指定解析业务库的binlog和发送数据到kafka

[root@node02 maxwell-1.22.1]# touch driver.properties

You have new mail in /var/spool/mail/root

[root@node02 maxwell-1.22.1]# vi driver.properties

######### binlog ###############

log_level=INFO

producer=kafka

host = 10.20.3.155

user = maxwell

password = 123456

producer_ack_timeout = 600000

######### binlog ###############

######### output format stuff ###############

output_binlog_position=true

output_server_id=true

output_thread_id=true

output_nulls=true

output_xoffset=true

output_schema_id=true

######### output format stuff ###############

############ kafka stuff #############

kafka.bootstrap.servers=node01:9092,node02:9092,node03:9092

kafka_topic=veche

kafka_partition_hash=murmur3

kafka_key_format=hash

kafka.compression.type=snappy

kafka.retries=5

kafka.acks=all

producer_partition_by=primary_key

############ kafka stuff #############

############## misc stuff ###########

bootstrapper=async

############## misc stuff ##########

############## filter ###############

filter=exclude:*.*, include: test.order_info_201904,include: test.order_info_201905,include: test.order_info_201906,include: test.order_info_201907,include: test.order_info_201908,include: test.order_info_201909,include: test.order_info_201910,include: test.order_info_201911,include: test.order_info_201912,include: test.renter_info,include: test.driver_info,include: test.opt_alliance_business

############## filter ###############

"driver.properties" 36L, 1343C written

[root@node02 maxwell-1.22.1]#

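Explanation of the parameters above: http://maxwells-daemon.io/config/

######### binlog ###############

Specifies the machine whose binlog is parsed (the host of the business database) and the credentials used to connect to it.

######### output format stuff ###############

Configures which extra fields appear in the output messages.

############ kafka stuff #############

Specifies the Kafka brokers, the topic, and the producer settings.

############## misc stuff ###########

Controls whether bootstrapping blocks normal binlog parsing; async does not block.

############## filter ###############

Specifies which databases and tables to monitor.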
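The filter line above lists many monthly tables, which is where typos creep in. The following is a minimal bash sketch that builds the value from a list of db.table names. build_maxwell_filter is a hypothetical helper. Its output follows the exclude/include syntax used in the configuration above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a Maxwell "filter" line that excludes every
# table and then includes only the db.table names given as arguments.
build_maxwell_filter() {
  local filter='exclude: *.*' table
  for table in "$@"; do
    filter+=", include: ${table}"
  done
  echo "filter=${filter}"
}
```

You can generate the monthly order tables instead of typing them, for example: tables=(); for m in $(seq -w 4 12); do tables+=("test.order_info_2019$m"); done; build_maxwell_filter "${tables[@]}" test.renter_info test.driver_info test.opt_alliance_business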

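4.4: Grant privileges to Maxwell

Create the maxwell user and grant it full privileges on the maxwell database, where Maxwell stores its state. Also grant it the SELECT and replication privileges it needs on all databases: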

CREATE USER 'maxwell'@'%' IDENTIFIED BY '!Qaz123456';

GRANT ALL ON maxwell.* TO 'maxwell'@'%' IDENTIFIED BY '!Qaz123456';

GRANT SELECT, REPLICATION CLIENT, REPLICATION SLAVE on *.* to 'maxwell'@'%';

FLUSH PRIVILEGES;

4.5: Start Maxwell

bin/maxwell --user='maxwell' --password='!Qaz123456' --host='127.0.0.1' --producer=kafka --kafka.bootstrap.servers=node01:9092 --kafka_topic=maxwell

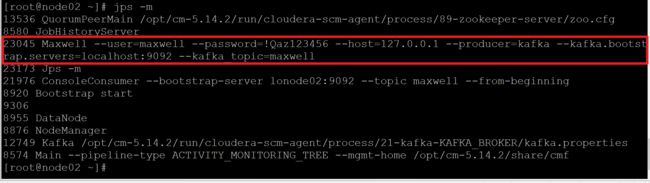

After starting Maxwell, check that its process is running with jps -m.

Testing:

cd /opt/cloudera/parcels/KAFKA-3.1.0-1.3.1.0.p0.35/lib/kafka/bin/

MySQL error: Access denied for user 'root'@'%' to database 'mytest'. See the following article for a fix:

https://blog.csdn.net/Roy_70/article/details/82669138

六、总结(5分钟)

1.前后端分离总结:

2.环境搭建

七、作业

1.按照课程内容搭建项目运行环境CDH大数据管理平台.

2.搭建整个项目架子.

八、Cloudera平台搭建

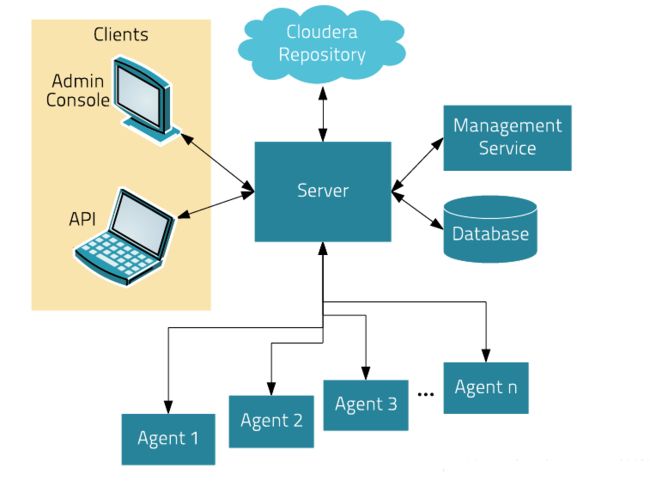

1.cloudera manager简单介绍

Cloudera Manager是一个拥有集群自动化安装、中心化管理、集群监控、报警功能的一个工具(软件),使得安装集群从几天的时间缩短在几个小时内,运维人员从数十人降低到几人以内,极大的提高集群管理的效率。

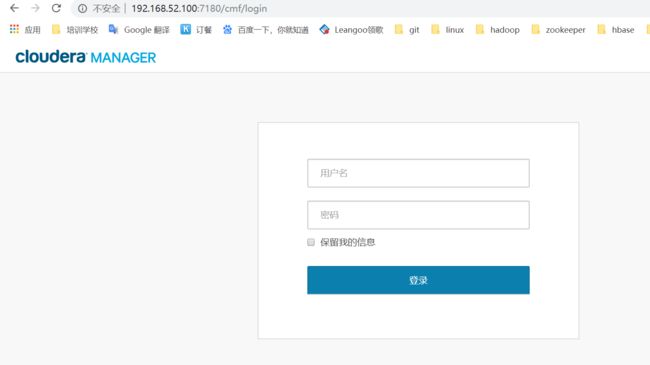

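The interface after installation:

2. Core features of Cloudera Manager

• Management: manage the cluster, for example add and remove nodes.

• Monitoring: monitor cluster health, covering the configured metrics and overall system operation.

• Diagnostics: diagnose cluster problems and suggest solutions.

• Integration: integrate multiple components.

3. Cloudera Manager architecture

4. Prepare three virtual machines

See [0. Big Data Environment Prerequisites].

5. Prepare the Cloudera installation packages

Because this is an offline deployment, download the required files in advance.

The required files are:

Cloudera Manager 5

File name: cloudera-manager-centos7-cm5.14.0_x86_64.tar.gz

Download URL: https://archive.cloudera.com/cm5/cm/5/

CDH installation package (parcels)

The CDH version must match the Cloudera Manager version. The commands in the following steps use version 5.14.2 for both.

Download URL: https://archive.cloudera.com/cdh5/parcels/5.14.0/

Download the following 3 files:

CDH-5.14.0-1.cdh5.14.0.p0.23-el7.parcel

CDH-5.14.0-1.cdh5.14.0.p0.23-el7.parcel.sha1

manifest.json

MySQL JDBC driver

File name: mysql-connector-java-.tar.gz

Download URL: https://dev.mysql.com/downloads/connector/j/

Extract: mysql-connector-java-bin.jar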

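6. Install the JDK on all machines

7. Install dependency packages on all machines

yum -y install chkconfig python bind-utils psmisc libxslt zlib sqlite cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs redhat-lsb

8. Install the MySQL database

Install MySQL on the second machine. The choice is otherwise arbitrary: the first machine will run the resource-intensive Cloudera management services, so MySQL goes on the second one.

See [Installing MySQL with yum].

9. Install the Cloudera server

9.1 Extract the Cloudera Manager package

# On all nodes, upload cloudera-manager-centos7-cm5.14.2_x86_64.tar.gz and extract it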

[root@node01 ~]# tar -zxvf cloudera-manager-centos7-cm5.14.2_x86_64.tar.gz -C /opt

[root@node02 ~]# tar -zxvf cloudera-manager-centos7-cm5.14.2_x86_64.tar.gz -C /opt

[root@node03 ~]# tar -zxvf cloudera-manager-centos7-cm5.14.2_x86_64.tar.gz -C /opt

After extraction, the files appear under /opt:

[root@node01 ~]# cd /opt/

[root@node01 opt]# ll

total 0

drwxr-xr-x. 4 1106 4001 36 Apr 3 2018 cloudera

drwxr-xr-x. 9 1106 4001 88 Apr 3 2018 cm-5.14.2

[root@node01 opt]# cd cloudera/

[root@node01 cloudera]# ll

total 0

drwxr-xr-x. 2 1106 4001 6 Apr 3 2018 csd

drwxr-xr-x. 2 1106 4001 6 Apr 3 2018 parcel-repo

[root@node01 cloudera]#

9.2 Create the agent runtime directory

# Create the directory manually on all nodes

[root@node01 ~]# mkdir /opt/cm-5.14.2/run/cloudera-scm-agent

[root@node02 ~]# mkdir /opt/cm-5.14.2/run/cloudera-scm-agent

[root@node03 ~]# mkdir /opt/cm-5.14.2/run/cloudera-scm-agent

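9.3 Create the cloudera-scm user

# Create the cloudera-scm user on all nodes

useradd --system --home=/opt/cm-5.14.2/run/cloudera-scm-server --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

9.4 Initialize the database

Initialize the database. Run this only on the Cloudera Manager Server node.

Upload the provided MySQL driver to root's home directory on the first machine, then copy the MySQL JDBC driver into place: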

[root@node01 ~]# cp mysql-connector-java.jar /opt/cm-5.14.2/share/cmf/lib/

[root@node01 ~]# /opt/cm-5.14.2/share/cmf/schema/scm_prepare_database.sh mysql -h node02 -uroot -p'!Qaz123456' --scm-host node01 scm scm '!Qaz123456'

JAVA_HOME=/usr/java/jdk1.8.0_211-amd64

Verifying that we can write to /opt/cm-5.14.2/etc/cloudera-scm-server

Creating SCM configuration file in /opt/cm-5.14.2/etc/cloudera-scm-server

Executing: /usr/java/jdk1.8.0_211-amd64/bin/java -cp /usr/share/java/mysql-connector-java.jar:/usr/share/java/oracle-connector-java.jar:/opt/cm-5.14.2/share/cmf/schema/../lib/* com.cloudera.enterprise.dbutil.DbCommandExecutor /opt/cm-5.14.2/etc/cloudera-scm-server/db.properties com.cloudera.cmf.db.

[ main] DbCommandExecutor INFO Successfully connected to database.

# the database was initialized successfully

All done, your SCM database is configured correctly!

[root@node01 ~]#

Script parameters:

scm_prepare_database.sh ${database type} -h ${database host IP/hostname} -u${database user} -p${database password} --scm-host ${Cloudera Manager Server host IP/hostname} ${scm database name} ${scm user name} ${scm user password}

The mysql-connector-java.jar driver also needs to be copied to the same directory on node02.

9.5 Update the agent configuration on all nodes

# Set server_host to the hostname of the Cloudera Manager Server node

[root@node01 ~]# vi /opt/cm-5.14.2/etc/cloudera-scm-agent/config.ini


[General]

# change the default server_host=localhost to node01

server_host=node01

9.6 Upload the CDH parcel

# Put the following files into /opt/cloudera/parcel-repo/ on the Server node:

#CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel

#CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.sha1

#manifest.json

# Rename the .sha1 file to .sha

[root@node01 parcel-repo]# mv CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.sha1 CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.sha
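Before starting Cloudera Manager, confirm that the parcel was not corrupted during upload by comparing its SHA-1 digest with the value in the .sha file. The following is a minimal bash sketch. verify_parcel is a hypothetical helper, and it assumes the .sha file holds the hex digest as its first field:

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a parcel's SHA-1 digest with the hash stored
# in its .sha file. Prints OK and returns 0 on a match; returns 1 otherwise.
verify_parcel() {
  local parcel="$1" sha_file="$2" expected actual
  expected="$(awk '{ print $1; exit }' "$sha_file")"
  actual="$(sha1sum "$parcel" | awk '{ print $1 }')"
  # Compare case-insensitively; sha1sum prints lowercase hex.
  if [[ -n "$expected" && "${expected,,}" == "${actual,,}" ]]; then
    echo "OK: $parcel"
  else
    echo "MISMATCH: $parcel (expected $expected, got $actual)" >&2
    return 1
  fi
}
```

Usage, inside /opt/cloudera/parcel-repo: verify_parcel CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel CDH-5.14.2-1.cdh5.14.2.p0.3-el7.parcel.sha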

9.7 Change ownership of the installation directories

On all nodes, change the owner and group of the CM-related directories:

[root@node01 ~]# chown -R cloudera-scm:cloudera-scm /opt/cloudera

[root@node01 ~]# chown -R cloudera-scm:cloudera-scm /opt/cm-5.14.2

[root@node01 ~]#

9.8 Start Cloudera Manager and the agents

On the server node (node01):

[root@node01 ~]# /opt/cm-5.14.2/etc/init.d/cloudera-scm-server start

Starting cloudera-scm-server: [ OK ]

# the agent must be started on every node

[root@node01 ~]# /opt/cm-5.14.2/etc/init.d/cloudera-scm-agent start

Starting cloudera-scm-agent: [ OK ]

[root@node01 ~]#

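10. Installing services

Open the Cloudera Manager web UI in a browser (port 7180 by default, for example http://node01:7180) and log in. The username and password are both admin.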

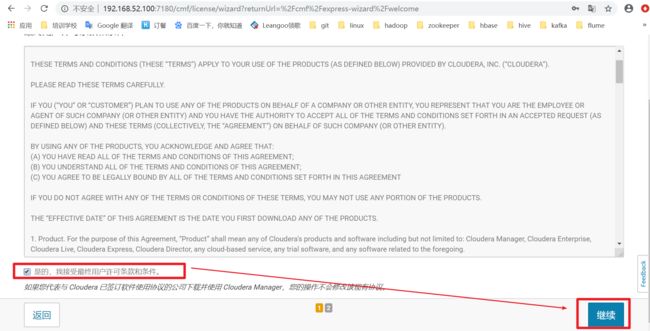

After logging in, accept the license terms on the agreement page and click Continue.

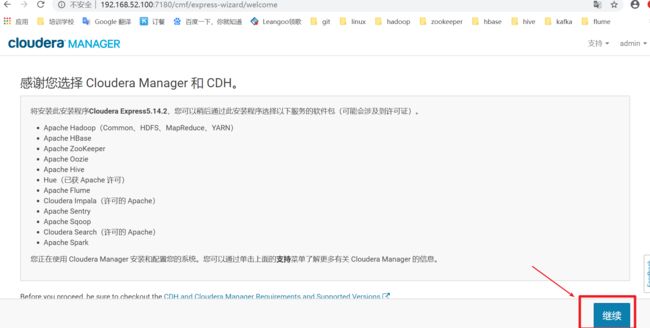

选择免费版本,免费版本已经能够满足我们日常业务需求,选择免费版即可.点击继续

如下图,点击继续

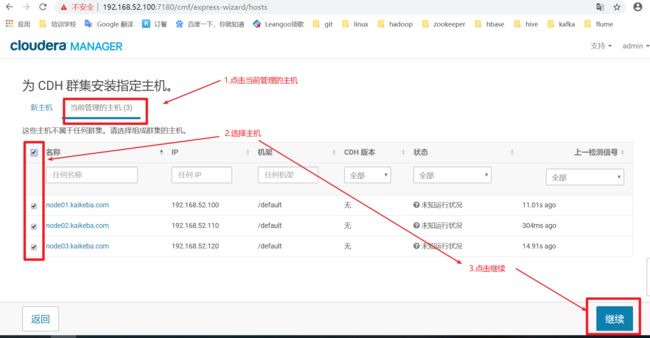

如下图,点击当前管理的机器,然后选择机器,点击继续

如下图,然后选择你的parcel对应版本的包

点击后,进入安装页面,稍等片刻

如下图,集群安装中

如下图,安装包分配成功,点击继续

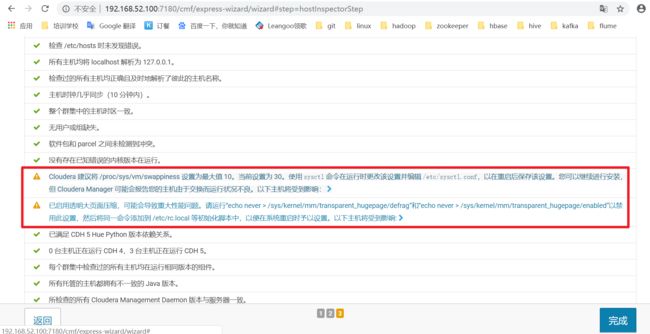

To resolve these warnings, run the following commands on every machine:

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

echo 'vm.swappiness=10'>> /etc/sysctl.conf

sysctl vm.swappiness=10

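The warning text also recommends adding "echo never > /sys/kernel/mm/transparent_hugepage/defrag" and "echo never > /sys/kernel/mm/transparent_hugepage/enabled" to an init script such as /etc/rc.local, so that the setting is reapplied after a reboot.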
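To confirm the Transparent Huge Pages change (for example, after a reboot), check which mode is active. The kernel marks the active mode with brackets, as in always madvise [never]. The following is a minimal bash sketch. thp_mode is a hypothetical helper that prints the bracketed value of a THP setting file:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the active mode of a Transparent Huge Pages
# setting file, i.e. the word inside [brackets].
thp_mode() {
  local file="${1:-/sys/kernel/mm/transparent_hugepage/enabled}"
  sed -n 's/.*\[\([^]]*\)].*/\1/p' "$file"
}
```

After the commands above, both thp_mode /sys/kernel/mm/transparent_hugepage/enabled and thp_mode /sys/kernel/mm/transparent_hugepage/defrag should print never, and sysctl -n vm.swappiness should print 10.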

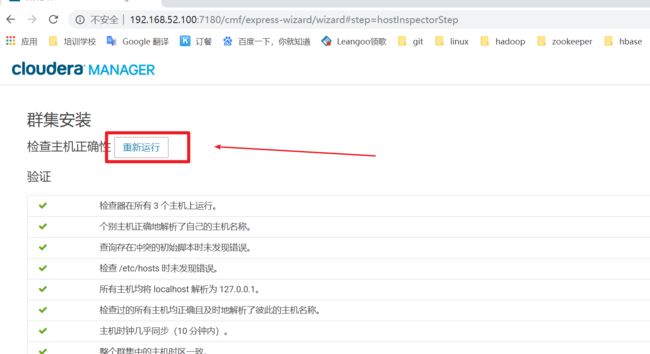

As shown below, click Run Again. The warnings should no longer appear. Click Finish to move on to installing the Hadoop ecosystem services.

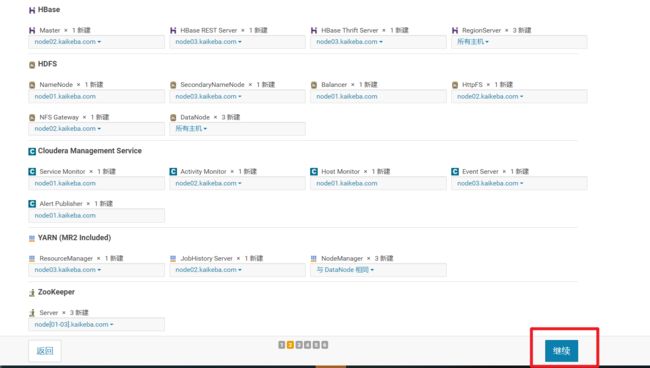

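As shown below, choose Custom Services and install the most basic combination of services first. If you are installing Hive and Oozie, first create their databases in MySQL and grant the necessary privileges.

Run the following in MySQL: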

create database hive;

create database oozie;

grant all on *.* to hive identified by '!Qaz123456';

grant all on *.* to oozie identified by '!Qaz123456';

If the following error occurs:

mysql> grant all on *.* to oozie identified by '!Qaz123456';

ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: YES)

mysql> update mysql.user set Grant_priv='Y',Super_priv='Y' where user = 'root' and host = 'localhost';

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> flush privileges;

mysql> quit

Bye

[root@node02 ~]# systemctl restart mysqld.service

[root@node02 ~]# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.7.27 MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> grant all on *.* to hive identified by '!Qaz123456';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> grant all on *.* to oozie identified by '!Qaz123456';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql>

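Only then install the software.

Choose Custom Services. If we need other services later, we can add them then.

Click Continue to open the role assignment page, and assign the roles to hosts.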

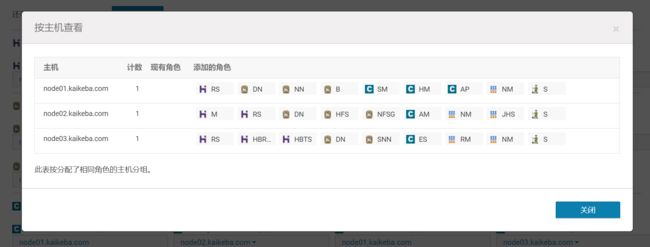

After assigning the services, as shown below, you can click View By Host to see how the roles are distributed.

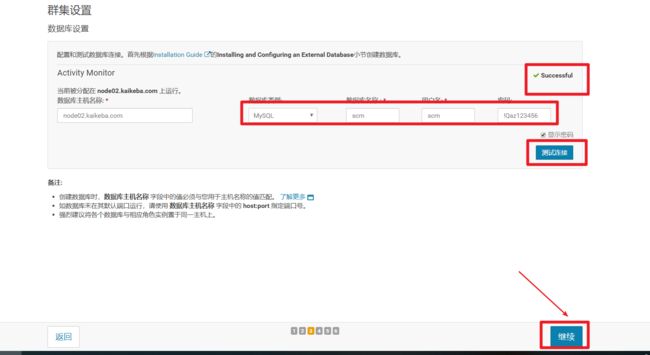

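After clicking Continue, as shown below, enter the MySQL database scm, username scm and password !Qaz123456, then click Test Connection. After about 30 s it should report success. Click Continue.

Keep clicking Continue. What remains is waiting.

As shown above, if the wait drags on, shut down the machine running Cloudera Manager (node01) and give it more memory. We recommend at least 4 GB on a laptop and at least 8 GB in a cloud environment; here we raise it to more than 4 GB. After node01 boots again, restart the Cloudera Manager server and agent: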

[root@node01 ~]# cd /opt/cm-5.14.2/etc/init.d

# Start the server

[root@node01 init.d]# ./cloudera-scm-server start

# Start the agent

[root@node01 init.d]# ./cloudera-scm-agent start
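The Cloudera Manager server can take a few minutes to come back after `start` returns, so the web UI may not answer immediately. This is a minimal polling sketch. `wait_for` is a hypothetical helper. The check command in the usage line (curl against CM's default web port 7180) is an assumption about your setup.

```shell
#!/bin/sh
# Hypothetical helper: retry a command once per second until it succeeds
# or the timeout (in seconds) runs out. Returns 0 on success, 1 on timeout.
wait_for() {
  local timeout="$1" i=0
  shift
  while [ "$i" -lt "$timeout" ]; do
    "$@" >/dev/null 2>&1 && return 0
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage: wait up to 5 minutes for the CM web UI to answer
#   wait_for 300 curl -sf -o /dev/null http://node01:7180 && echo "CM is up"
```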

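11. Log in to Cloudera Manager again

After logging in, restart the cluster as shown below, then wait for it to come up.

12. Cluster testing

12.1 File system test

# Switch to the hdfs user and check that HDFS can be read and written normally

[root@node01 ~]# su hdfs

[hdfs@node01 ~]$ hadoop dfs -ls /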

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 1 items

d-wx------ - hdfs supergroup 0 2019-10-11 08:21 /tmp

[hdfs@node01 ~]$ touch words

[hdfs@node01 ~]$ vi words

hello world

[hdfs@node01 ~]$ hadoop dfs -put words /test

[hdfs@node01 ~]$ hadoop dfs -ls /

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 2 items

drwxr-xr-x - hdfs supergroup 0 2019-10-11 09:09 /test

d-wx------ - hdfs supergroup 0 2019-10-11 08:21 /tmp

[hdfs@node01 ~]$ hadoop dfs -ls /test

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Found 1 items

-rw-r--r-- 3 hdfs supergroup 12 2019-10-11 09:09 /test/words

[hdfs@node01 ~]$ hadoop dfs -text /test/words

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

hello world

12.2 YARN cluster test

[hdfs@node01 ~]$ hadoop jar /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/hadoop-mapreduce-examples-2.6.0-cdh5.14.2.jar wordcount /test/words /test/output

19/10/11 22:47:59 INFO client.RMProxy: Connecting to ResourceManager at node03.kaikeba.com/192.168.52.120:8032

19/10/11 22:47:59 INFO mapreduce.JobSubmissionFiles: Permissions on staging directory /user/hdfs/.staging are incorrect: rwx---rwx. Fixing permissions to correct value rwx------

19/10/11 22:48:00 INFO input.FileInputFormat: Total input paths to process : 1

19/10/11 22:48:00 INFO mapreduce.JobSubmitter: number of splits:1

19/10/11 22:48:00 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1570847238197_0001

19/10/11 22:48:01 INFO impl.YarnClientImpl: Submitted application application_1570847238197_0001

19/10/11 22:48:01 INFO mapreduce.Job: The url to track the job: http://node03.kaikeba.com:8088/proxy/application_1570847238197_0001/

19/10/11 22:48:01 INFO mapreduce.Job: Running job: job_1570847238197_0001

19/10/11 22:48:28 INFO mapreduce.Job: Job job_1570847238197_0001 running in uber mode : false

19/10/11 22:48:28 INFO mapreduce.Job: map 0% reduce 0%

19/10/11 22:50:10 INFO mapreduce.Job: map 100% reduce 0%

19/10/11 22:50:17 INFO mapreduce.Job: map 100% reduce 17%

19/10/11 22:50:19 INFO mapreduce.Job: map 100% reduce 33%

19/10/11 22:50:21 INFO mapreduce.Job: map 100% reduce 50%

19/10/11 22:50:24 INFO mapreduce.Job: map 100% reduce 67%

19/10/11 22:50:25 INFO mapreduce.Job: map 100% reduce 83%

19/10/11 22:50:29 INFO mapreduce.Job: map 100% reduce 100%

19/10/11 22:50:29 INFO mapreduce.Job: Job job_1570847238197_0001 completed successfully

19/10/11 22:50:30 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=144

FILE: Number of bytes written=1044048

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=118

HDFS: Number of bytes written=16

HDFS: Number of read operations=21

HDFS: Number of large read operations=0

HDFS: Number of write operations=12

Job Counters

Launched map tasks=1

Launched reduce tasks=6

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=100007

Total time spent by all reduces in occupied slots (ms)=24269

Total time spent by all map tasks (ms)=100007

Total time spent by all reduce tasks (ms)=24269

Total vcore-milliseconds taken by all map tasks=100007

Total vcore-milliseconds taken by all reduce tasks=24269

Total megabyte-milliseconds taken by all map tasks=102407168

Total megabyte-milliseconds taken by all reduce tasks=24851456

Map-Reduce Framework

Map input records=1

Map output records=2

Map output bytes=20

Map output materialized bytes=120

Input split bytes=106

Combine input records=2

Combine output records=2

Reduce input groups=2

Reduce shuffle bytes=120

Reduce input records=2

Reduce output records=2

Spilled Records=4

Shuffled Maps =6

Failed Shuffles=0

Merged Map outputs=6

GC time elapsed (ms)=581

CPU time spent (ms)=11830

Physical memory (bytes) snapshot=1466945536

Virtual memory (bytes) snapshot=19622957056

Total committed heap usage (bytes)=1150287872

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=12

File Output Format Counters

Bytes Written=16

[hdfs@node01 ~]$ hdfs dfs -ls /test/output

Found 7 items

-rw-r--r-- 3 hdfs supergroup 0 2019-10-11 22:50 /test/output/_SUCCESS

-rw-r--r-- 3 hdfs supergroup 0 2019-10-11 22:50 /test/output/part-r-00000

-rw-r--r-- 3 hdfs supergroup 8 2019-10-11 22:50 /test/output/part-r-00001

-rw-r--r-- 3 hdfs supergroup 0 2019-10-11 22:50 /test/output/part-r-00002

-rw-r--r-- 3 hdfs supergroup 0 2019-10-11 22:50 /test/output/part-r-00003

-rw-r--r-- 3 hdfs supergroup 0 2019-10-11 22:50 /test/output/part-r-00004

-rw-r--r-- 3 hdfs supergroup 8 2019-10-11 22:50 /test/output/part-r-00005

[hdfs@node01 ~]$ hdfs dfs -text /test/output/part-r-00001

world 1

[hdfs@node01 ~]$ hdfs dfs -text /test/output/part-r-00005

hello 1

[hdfs@node01 ~]$
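The job launched 6 reduce tasks (Launched reduce tasks=6). That is why /test/output holds part-r-00000 through part-r-00005 plus _SUCCESS, with only the two partitions that received a key (world, hello) non-empty. To check a result like this independently, you can compute the same count locally and compare it with all part files merged. This is a minimal sketch that assumes words are separated by whitespace. `local_wordcount` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: count words in a local file and print "word<TAB>count"
# lines sorted bytewise, the same line layout as the WordCount part files.
local_wordcount() {
  tr -s '[:space:]' '\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Usage: compare with the merged reducer outputs (empty parts add nothing)
#   local_wordcount words > expected.txt
#   hdfs dfs -cat '/test/output/part-r-*' | LC_ALL=C sort > actual.txt
#   diff expected.txt actual.txt && echo "wordcount output matches"
```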

13.手动添加Kafka服务

我们以安装kafka为例进行演示

13.1 检查kafka安装包

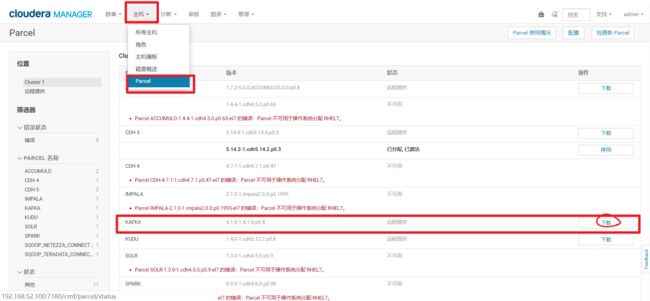

首先检查是否已经存在Kafka的parcel安装包,如下图提示远程提供,说明我们下载的parcel安装包中不包含Kafka的parcel安装包,这时需要我们手动到官网上下载

13.2 检查Kafka安装包版本

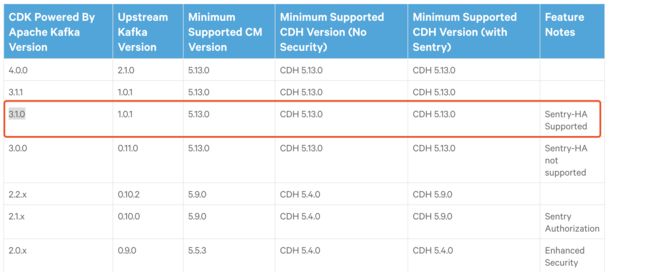

首先查看搭建cdh版本 和kafka版本,是否是支持的:

登录如下网址:

https://www.cloudera.com/documentation/enterprise/release-notes/topics/rn_consolidated_pcm.html#pcm_kafka

我的CDH版本是cdh5.14.0 ,我想要的kafka版本是1.0.1

因此选择:

13.3 下载Kafka parcel安装包

然后下载:http://archive.cloudera.com/kafka/parcels/3.1.0/

需要将下载的KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel.sha1 改成 KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel.sha

[root@node01 ~]# mv KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel.sha1 KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel.sha
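The .sha file holds the parcel's expected SHA-1 digest, so you can check the download yourself before handing it to Cloudera Manager. This is a minimal sketch that assumes the .sha file contains only the hex digest. `verify_parcel` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: check that a parcel matches the SHA-1 digest stored
# in "<parcel>.sha". Prints OK or MISMATCH and returns 0 or 1.
verify_parcel() {
  local parcel="$1" expected actual
  expected=$(tr -d '[:space:]' < "${parcel}.sha")
  actual=$(sha1sum "$parcel" | awk '{ print $1 }')
  if [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "MISMATCH: $parcel (expected $expected, got $actual)" >&2
    return 1
  fi
}

# Usage, after the rename above:
#   verify_parcel KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel
```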

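Then move these three files into the parcel-repo directory. If a file with the same name already exists there (manifest.json), first rename the existing one as a backup.

[root@node01 ~]# cd /opt/cloudera/parcel-repo/

[root@node01 parcel-repo]# mv manifest.json bak_manifest.json

# Go back to the home directory (cd ~), where the Kafka files were downloaded, and move them into parcel-repo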

[root@node01 ~]# mv KAFKA-3.1.0-1.3.1.0.p0.35-el7.parcel* manifest.json /opt/cloudera/parcel-repo/

[root@node01 ~]# ll

total 989036

-rw-------. 1 root root 1260 Apr 16 01:35 anaconda-ks.cfg

-rw-r--r--. 1 root root 832469335 Oct 11 13:23 cloudera-manager-centos7-cm5.14.2_x86_64.tar.gz

-rw-r--r--. 1 root root 179439263 Oct 10 20:14 jdk-8u211-linux-x64.rpm

-rw-r--r--. 1 root root 848399 Oct 11 17:02 mysql-connector-java.jar

-rw-r--r-- 1 root root 12 Oct 11 21:01 words

[root@node01 ~]# ll

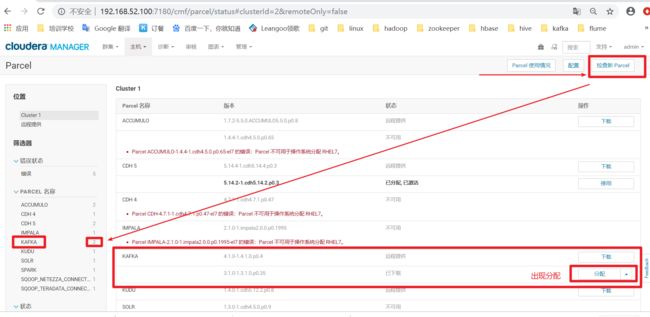

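13.4 Distribute and activate Kafka

As shown below, select Parcels on the management home page.

As shown below, click Check for New Parcels a few times until the Distribute button appears.

Click Distribute and wait for the Activate button to become available.

As shown below, distribution is in progress...

As shown below, the button is now active.

As shown in the two figures above, click Activate and then OK, and wait for activation to finish.

Activating...

As shown below, Kafka has been distributed and activated successfully.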

13.5 添加Kafka服务

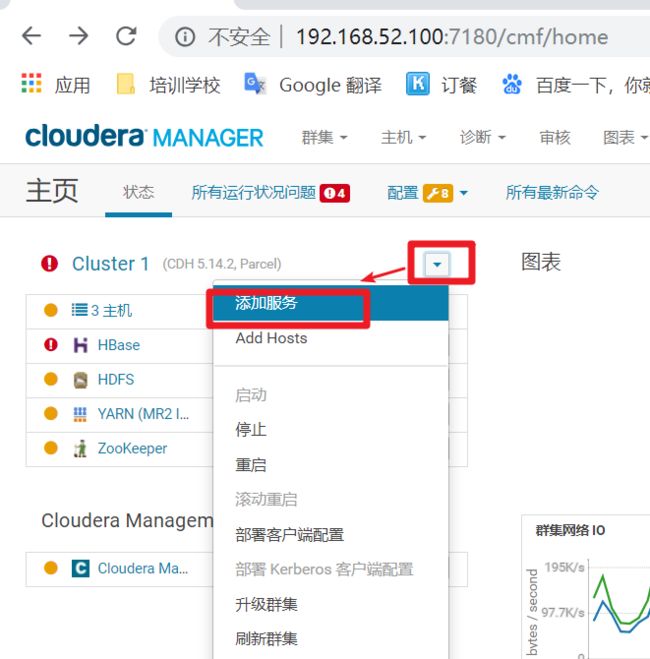

点击cloudera manager回到主页

页面中点击下拉操作按钮,点击添加服务

如下图,点击选择kafka,点击继续

如下图,选择Kakka Broker在三个节点上安装,Kafka MirrorMaker安装在node03上,Gateway安装在node02上(服务选择安装,需要自己根据每台机器上健康状态而定,这里只是作为参考)

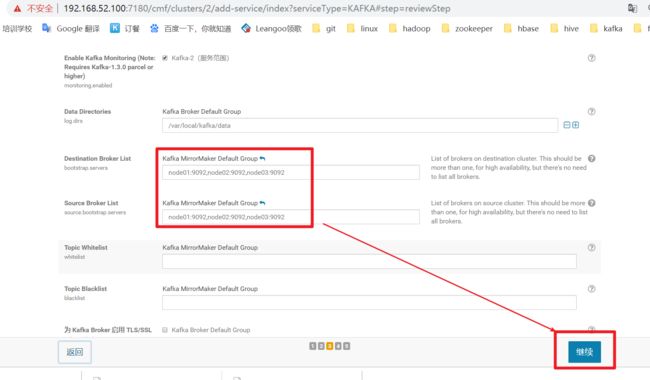

如下图,填写Destination Broker List和Source Broker List后点击继续

注意:这里和上一步中选择的角色分配有关联,Kafka Broker选择的是三台机器Destination Broker List中就填写三台机器的主机名,中间使用逗号分开,如果选择的是一台机器那么久选择一台,一次类推.Source Broker List和Destination Broker List填写一样.

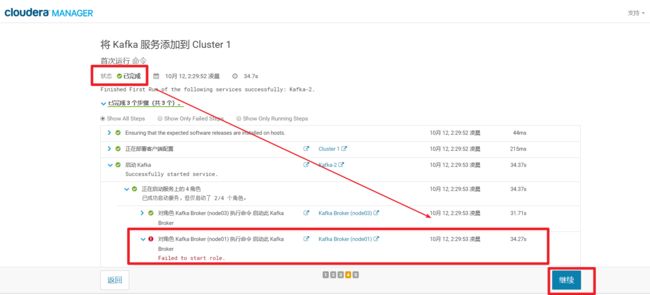
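The step above enters bare hostnames. Kafka clients normally expect each entry of a broker list (bootstrap.servers) as host:port, with 9092 as Kafka's default plaintext port, so if the MirrorMaker role complains about these fields, that form may be worth trying. This is a minimal sketch that builds either form from the broker hosts chosen in the previous step. `broker_list` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: join broker hostnames into a comma-separated list.
# $1 = port to append to each host ('' for bare hostnames), then the hosts.
broker_list() {
  local port="$1" out="" h
  shift
  for h in "$@"; do
    # ${out:+$out,} adds a comma only after the first entry;
    # ${port:+:$port} appends ":port" only when a port was given
    out="${out:+$out,}$h${port:+:$port}"
  done
  printf '%s\n' "$out"
}

# Usage:
#   broker_list 9092 node01 node02 node03   # node01:9092,node02:9092,node03:9092
#   broker_list ''   node01 node02 node03   # node01,node02,node03
```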

如下图,添加服务,最终状态为已完成,启动过程中会出现错误不用管,这时因为CDH给默认将kafka的内存设置为50M,太小了, 后续需要我们手动调整,点击继续

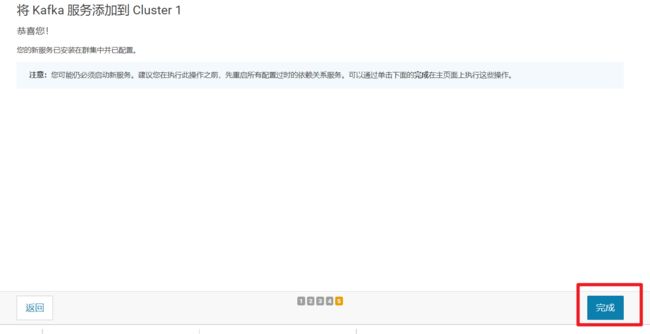

如下图,点击完成.

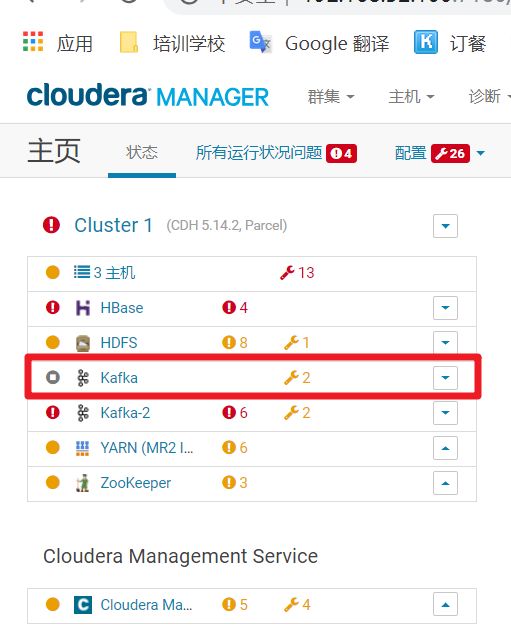

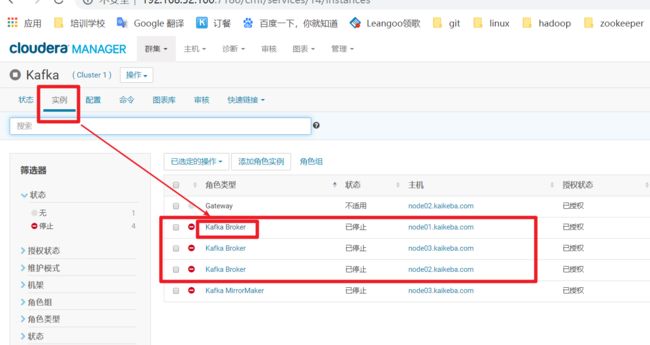

如下图,添加成功的Kafka服务

13.6 配置Kafka的内存

如下图,点击Kafka服务

如下图,点击实例,点击Kafka Broker(我们先配置node01节点的内存大小,node02和node03内存配置方式相同,需要按照此方式进行修改)

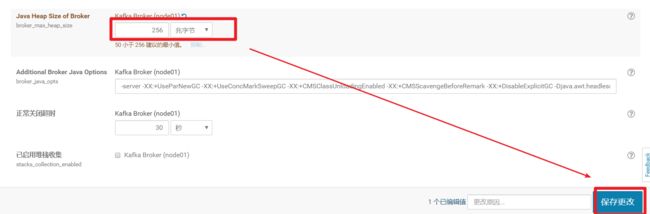

如上图,点击Kafka Broker之后,如下图所示,点击配置

右侧浏览器垂直滚动条往下找到broker_max_heap_size,修改值为256或者更大一些比如1G,点击保存更改

node02和node03按照上述步骤进行同样修改.

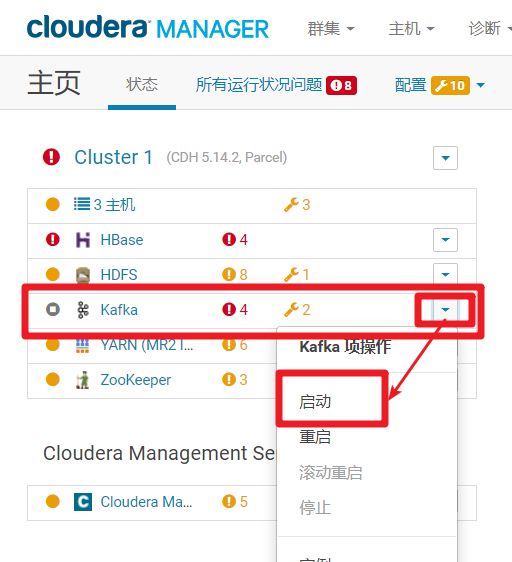
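Once the brokers have been restarted (13.7), you can confirm that the new broker_max_heap_size actually reached the JVM by reading the -Xmx flag from the running broker's command line. This is a minimal sketch that assumes the broker process runs the standard main class kafka.Kafka and receives its heap as -Xmx. `extract_xmx` is a hypothetical helper. It reports the last -Xmx flag, because HotSpot uses the last occurrence when the flag is repeated.

```shell
#!/bin/sh
# Hypothetical helper: read a Java command line on stdin and print the value
# of the last -Xmx flag (e.g. "1g" or "256m"), the one the JVM applies.
extract_xmx() {
  grep -o -e '-Xmx[0-9]*[kKmMgG]' | tail -n 1 | sed 's/^-Xmx//'
}

# Usage on a broker host; the [k] keeps grep from matching its own process:
#   ps -eo args | grep '[k]afka.Kafka' | extract_xmx
```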

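13.7 Restart the Kafka cluster

Click Start.

Click Start again in the confirmation dialog.

If Kafka reports errors during startup, as shown below, the cause is the heap size. A Kafka broker normally runs with a 1G heap, but CDH set it to 50M, so adjust the heap as described in 13.6.

Kafka then starts successfully.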

14. Manually adding other services

Follow the steps in section 13 (Manually adding the Kafka service).