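RocketMQ Source Code Analysis - Consumer

Roles:

Consumer:

Consumer group:

Consumption modes:

Clustering mode: this is the default. In clustering mode, each queue of a topic on a broker is consumed by only one consumer within the same consumer group.

Broadcasting mode: every consumer in the group consumes all messages of the topic.

Message delivery modes:

Push mode: the broker pushes messages to the consumer. (In RocketMQ, push is actually built on long-polling pulls, as the pull code later in this article shows.)

Pull mode: the consumer actively pulls messages from the broker.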
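The "push" consumer works by long polling. The client keeps sending pull requests, and the broker may hold a request open until new messages arrive or a timeout expires. Below is a minimal sketch of that idea. It assumes a single in-memory queue stands in for one broker queue. `LongPollingSketch` and its methods are hypothetical and are not RocketMQ API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch of long polling: the "broker" holds a pull until data arrives or time runs out. */
class LongPollingSketch {
    // Stand-in for one message queue on the broker
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();

    /** Producer side: append a message. */
    void put(String msg) {
        queue.add(msg);
    }

    /**
     * Broker side of one pull: wait up to suspendMillis for the first message
     * instead of answering "no new message" immediately, then take up to maxNums in total.
     */
    List<String> pull(int maxNums, long suspendMillis) {
        List<String> batch = new ArrayList<>();
        try {
            String first = queue.poll(suspendMillis, TimeUnit.MILLISECONDS);
            if (first == null) {
                return batch; // timed out: corresponds to NO_NEW_MSG, the client just pulls again
            }
            batch.add(first);
            queue.drainTo(batch, maxNums - 1); // grab whatever else is already available
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return batch;
    }
}
```

The timeout plays the role of the broker's suspend time. An empty result is not an error, only a signal to issue the next pull.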

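Pulling messages

The commonly used consumer class is DefaultMQPushConsumer in the org.apache.rocketmq.client.consumer package. It extends the configuration class ClientConfig and implements the MQPushConsumer interface. MQPushConsumer extends MQConsumer, and MQConsumer extends MQAdmin, so the chain is DefaultMQPushConsumer --> MQPushConsumer --> MQConsumer --> MQAdmin.

From this hierarchy, both producers and consumers ultimately implement MQAdmin, whose methods are mostly topic- and queue-related administrative operations. MQConsumer declares only three methods. One gets a topic's message queues from the consumer's local cache, and the other two send feedback to the broker when consuming a message fails. As a result, most methods related to starting, shutting down, and consuming are declared in MQPushConsumer.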

1. Starting the consumer

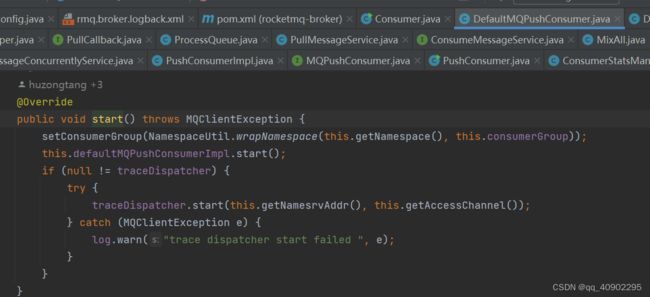

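As with the producer, we start with consumer startup. It begins in DefaultMQPushConsumer's start() method, which delegates to DefaultMQPushConsumerImpl#start().

The start method first validates parameters such as the consumer group name. It then builds the subscription data for each subscribed topic from the topic and its filter expression. In clustering mode it sets the consumer instance name to the current process ID. Finally, it creates an MQClient instance through the MQClient manager to communicate with the broker. This is essentially the producer's startup flow; the only addition is building the subscription data. Below we walk through these steps one by one.

2. Parameter checks

Checks that common parameters, such as the consumer group and message model, are set. We won't go into detail.

3. Building the subscription data

Builds the subscription data for each subscribed topic from its tag filter expression: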

```java
private void copySubscription() throws MQClientException {
    try {
        // Get the subscribed topics: topic -> subscription expression
        Map<String, String> sub = this.defaultMQPushConsumer.getSubscription();
        if (sub != null) {
            for (final Map.Entry<String, String> entry : sub.entrySet()) {
                final String topic = entry.getKey();
                final String subString = entry.getValue();
                SubscriptionData subscriptionData = FilterAPI.buildSubscriptionData(topic, subString);
                this.rebalanceImpl.getSubscriptionInner().put(topic, subscriptionData);
            }
        }

        if (null == this.messageListenerInner) {
            this.messageListenerInner = this.defaultMQPushConsumer.getMessageListener();
        }

        switch (this.defaultMQPushConsumer.getMessageModel()) {
            case BROADCASTING:
                break;
            case CLUSTERING:
                // In clustering mode, also subscribe to the retry topic "%RETRY%" + consumerGroup
                final String retryTopic = MixAll.getRetryTopic(this.defaultMQPushConsumer.getConsumerGroup());
                SubscriptionData subscriptionData = FilterAPI.buildSubscriptionData(retryTopic, SubscriptionData.SUB_ALL);
                this.rebalanceImpl.getSubscriptionInner().put(retryTopic, subscriptionData);
                break;
            default:
                break;
        }
    } catch (Exception e) {
        throw new MQClientException("subscription exception", e);
    }
}
```
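To show what a tag expression turns into, here is a simplified sketch. To my understanding, FilterAPI.buildSubscriptionData splits the expression on `||`, trims each tag, and records both the tag strings and their hash codes, while `*` (or an empty expression) means "all tags". `SubscriptionSketch` is a hypothetical stand-in, not the real SubscriptionData class.

```java
import java.util.LinkedHashSet;
import java.util.Set;

/** Hypothetical, simplified model of building subscription data from a tag expression. */
class SubscriptionSketch {
    static final String SUB_ALL = "*";
    static final String RETRY_PREFIX = "%RETRY%";

    final String topic;
    final String subString;
    final Set<String> tagsSet = new LinkedHashSet<>();
    final Set<Integer> codeSet = new LinkedHashSet<>();

    private SubscriptionSketch(String topic, String subString) {
        this.topic = topic;
        this.subString = subString;
    }

    static SubscriptionSketch build(String topic, String expression) {
        // An empty or "*" expression subscribes to every tag
        if (expression == null || expression.trim().isEmpty() || SUB_ALL.equals(expression.trim())) {
            return new SubscriptionSketch(topic, SUB_ALL);
        }
        SubscriptionSketch data = new SubscriptionSketch(topic, expression);
        for (String tag : expression.split("\\|\\|")) {
            String trimmed = tag.trim();
            if (!trimmed.isEmpty()) {
                data.tagsSet.add(trimmed);
                // Hash codes are what can be compared against the tag hash kept per message in the ConsumeQueue
                data.codeSet.add(trimmed.hashCode());
            }
        }
        return data;
    }

    /** Retry topic name used in clustering mode, as described in the comment above. */
    static String retryTopic(String consumerGroup) {
        return RETRY_PREFIX + consumerGroup;
    }
}
```

For example, `build("TopicA", "TagA || TagB")` yields the tags `TagA` and `TagB` plus their two hash codes.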

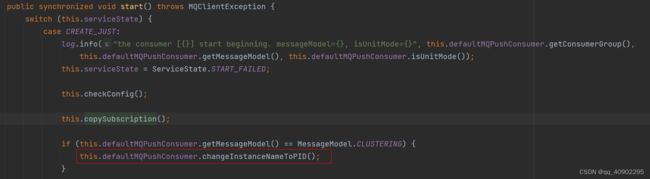

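4. In clustering mode, set the consumer instance name to the current process ID

The client ID is built from the IP address and the instance name. Replacing the default instance name with the PID therefore keeps two consumer processes on the same host from ending up with the same client ID. The rename happens only while the instance name is still the default.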
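A sketch of why the PID matters. It assumes the client ID has the form `ip@instanceName` and the default instance name is `"DEFAULT"`; newer client versions may append extra parts. `ClientIdSketch` is hypothetical.

```java
/** Hypothetical sketch: deriving a per-process client ID. */
class ClientIdSketch {
    static final String DEFAULT_INSTANCE_NAME = "DEFAULT";

    static String currentPid() {
        // Java 9+: ProcessHandle exposes the current PID directly
        return String.valueOf(ProcessHandle.current().pid());
    }

    /** Replace the default instance name with the PID; keep any name the user set explicitly. */
    static String resolveInstanceName(String configured, String pid) {
        return DEFAULT_INSTANCE_NAME.equals(configured) ? pid : configured;
    }

    static String buildClientId(String ip, String instanceName) {
        return ip + "@" + instanceName;
    }
}
```

Two processes on `10.0.0.1` with the default name get different IDs (for example `10.0.0.1@1234` and `10.0.0.1@5678`), so the broker can tell their connections apart.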

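5. Creating an MQClient instance through MQClientManager

MQClientManager caches MQClientInstance objects by client ID. Producers and consumers in the same process that share a client ID therefore also share one instance.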
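The caching can be sketched with a concurrent map keyed by client ID. This sketch uses `computeIfAbsent` for brevity; the real manager's exact code may differ (for example a get followed by `putIfAbsent`). `ClientManagerSketch` is hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical sketch: one shared client instance per client ID. */
class ClientManagerSketch {
    static final class ClientInstance {
        final String clientId;

        ClientInstance(String clientId) {
            this.clientId = clientId;
        }
    }

    private final ConcurrentMap<String, ClientInstance> factoryTable = new ConcurrentHashMap<>();

    ClientInstance getOrCreate(String clientId) {
        // computeIfAbsent creates at most one instance per clientId, even with concurrent callers
        return factoryTable.computeIfAbsent(clientId, ClientInstance::new);
    }

    int size() {
        return factoryTable.size();
    }
}
```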

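6. Setting the load-balancing strategy

There are many consumer load-balancing (queue allocation) strategies, but the following two are the most commonly used. Suppose there are three brokers. The first two each have 3 message queues and the last has 2, for 8 queues in total, and the consumer group has 3 consumers. Consumers in the same group cannot consume the same queue of a topic, so the 8 queues are divided among the 3 consumers by one of the two strategies below.

Note: because consumers in the same group cannot share a queue of a topic, some consumers get nothing to consume when there are more consumers than message queues. For example, with 8 queues and 10 consumers, 2 consumers are left idle.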

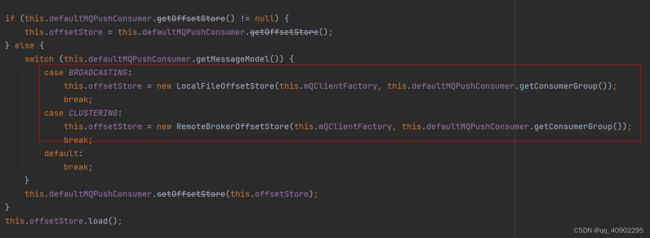
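The two strategies can be reproduced with a small sketch. It is modeled on the logic of AllocateMessageQueueAveragely (contiguous blocks) and AllocateMessageQueueAveragelyByCircle (round-robin), to my understanding of those classes. Queues and consumers are represented by their indexes in sorted lists. `AllocateSketch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of two queue-allocation strategies; returns the queue indexes for one consumer. */
class AllocateSketch {
    /** Averagely: contiguous blocks; the first (queues % consumers) consumers get one extra queue. */
    static List<Integer> averagely(int queueCount, int consumerCount, int index) {
        List<Integer> result = new ArrayList<>();
        int mod = queueCount % consumerCount;
        int averageSize = queueCount <= consumerCount ? 1
                : (mod > 0 && index < mod ? queueCount / consumerCount + 1 : queueCount / consumerCount);
        int startIndex = (mod > 0 && index < mod) ? index * averageSize : index * averageSize + mod;
        int range = Math.min(averageSize, queueCount - startIndex); // negative when nothing is left
        for (int i = 0; i < range; i++) {
            result.add(startIndex + i);
        }
        return result;
    }

    /** ByCircle: deal the queues out one by one, like dealing cards. */
    static List<Integer> byCircle(int queueCount, int consumerCount, int index) {
        List<Integer> result = new ArrayList<>();
        for (int q = 0; q < queueCount; q++) {
            if (q % consumerCount == index) {
                result.add(q);
            }
        }
        return result;
    }
}
```

With 8 queues and 3 consumers, the averaging strategy gives [0, 1, 2], [3, 4, 5], [6, 7], and the circular strategy gives [0, 3, 6], [1, 4, 7], [2, 5]. With 10 consumers, consumers 8 and 9 receive empty lists.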

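7. Loading the last consumed offsets

In broadcasting mode, consume offsets are stored locally (LocalFileOffsetStore). In clustering mode, they are stored remotely on the broker (RemoteBrokerOffsetStore).

In broadcasting mode, the consumer reads the local JSON file that stores the offsets from the resolved path, and loads each MessageQueue's offset into a ConcurrentMap table.

In clustering mode, nothing extra is done locally at this step. When the broker receives a request, it looks up the offset stored on the broker side. The broker side is covered later.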
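A local offset store can be sketched as an in-memory map that is filled from persisted text at startup. The real store persists JSON. To keep the sketch dependency-free, a `queueKey=offset` properties format stands in for it, and queue keys are plain strings. Names are hypothetical; the `increaseOnly` idea reflects how, to my understanding, offset updates are usually only allowed to move forward.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical sketch of a local (broadcasting-mode) offset store. */
class LocalOffsetStoreSketch {
    private final ConcurrentMap<String, Long> offsetTable = new ConcurrentHashMap<>();

    /** Load persisted offsets ("queueKey=offset" lines stand in for the JSON file). */
    void load(String persisted) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(persisted));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        for (String queueKey : props.stringPropertyNames()) {
            offsetTable.put(queueKey, Long.parseLong(props.getProperty(queueKey).trim()));
        }
    }

    /** Returns -1 when no offset is known for the queue. */
    long readOffset(String queueKey) {
        return offsetTable.getOrDefault(queueKey, -1L);
    }

    /** With increaseOnly, a stale update cannot move the offset backwards. */
    void updateOffset(String queueKey, long offset, boolean increaseOnly) {
        offsetTable.merge(queueKey, offset, (current, latest) -> increaseOnly ? Math.max(current, latest) : latest);
    }
}
```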

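8. Starting the message-consumption service

If the listener is a MessageListenerOrderly, an orderly consumption service is created and started. If it is a MessageListenerConcurrently, a concurrent consumption service is created and started. ConsumeMessageService has two implementations for this: one for orderly messages and one for concurrent messages. As the code shows, newer versions also create a matching POP service.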

```java
if (this.getMessageListenerInner() instanceof MessageListenerOrderly) {
    this.consumeOrderly = true;
    this.consumeMessageService =
        new ConsumeMessageOrderlyService(this, (MessageListenerOrderly) this.getMessageListenerInner());
    //POPTODO reuse Executor ?
    this.consumeMessagePopService = new ConsumeMessagePopOrderlyService(this, (MessageListenerOrderly) this.getMessageListenerInner());
} else if (this.getMessageListenerInner() instanceof MessageListenerConcurrently) {
    this.consumeOrderly = false;
    this.consumeMessageService =
        new ConsumeMessageConcurrentlyService(this, (MessageListenerConcurrently) this.getMessageListenerInner());
    //POPTODO reuse Executor ?
    this.consumeMessagePopService =
        new ConsumeMessagePopConcurrentlyService(this, (MessageListenerConcurrently) this.getMessageListenerInner());
}

this.consumeMessageService.start();
// POPTODO
this.consumeMessagePopService.start();
```
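The practical difference between the two services can be sketched in a few lines. The concurrent path splits a pulled batch into chunks that any pool thread may handle. The orderly path keeps a FIFO buffer per queue and drains it under that queue's lock, so only one thread consumes a given queue at a time and order is preserved. This is a simplified illustration under those assumptions, not the real ConsumeMessageConcurrentlyService or ConsumeMessageOrderlyService. All names are hypothetical.

```java
import java.util.*;
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

/** Hypothetical sketch contrasting concurrent and orderly consumption. */
class ConsumeServiceSketch {
    private final ExecutorService pool = Executors.newFixedThreadPool(4);
    // One FIFO buffer per queue; its monitor doubles as the per-queue consume lock
    private final ConcurrentMap<Integer, Deque<String>> queueBuffers = new ConcurrentHashMap<>();

    /** Concurrent: split into batches; any thread may take any batch, so no ordering is guaranteed. */
    void submitConcurrently(List<String> msgs, int batchSize, Consumer<List<String>> listener) {
        for (int i = 0; i < msgs.size(); i += batchSize) {
            List<String> batch = new ArrayList<>(msgs.subList(i, Math.min(i + batchSize, msgs.size())));
            pool.submit(() -> listener.accept(batch));
        }
    }

    /** Orderly: append to the queue's buffer, then drain it under the queue's lock in arrival order. */
    void submitOrderly(int queueId, List<String> msgs, Consumer<String> listener) {
        Deque<String> buffer = queueBuffers.computeIfAbsent(queueId, id -> new ArrayDeque<>());
        synchronized (buffer) {
            buffer.addAll(msgs);
        }
        pool.submit(() -> {
            synchronized (buffer) {
                String msg;
                while ((msg = buffer.pollFirst()) != null) {
                    listener.accept(msg);
                }
            }
        });
    }

    void shutdownAndWait() {
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Draining a shared buffer, instead of consuming the list each task received, keeps per-queue order even if the pool runs the tasks out of submission order.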

9. Registering the consumer with the MQClient instance

```java
boolean registerOK = mQClientFactory.registerConsumer(this.defaultMQPushConsumer.getConsumerGroup(), this);
```
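Registration is keyed by consumer group. If the group is already registered in this client instance, registerOK is false, and start() fails with an error asking for a different group name. A hypothetical sketch of that check:

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical sketch: at most one consumer per group name inside one client instance. */
class ConsumerRegistrySketch {
    private final ConcurrentMap<String, Object> consumerTable = new ConcurrentHashMap<>();

    /** Returns false when the group is already taken, like the registerOK flag above. */
    boolean registerConsumer(String group, Object consumer) {
        if (group == null || consumer == null) {
            return false;
        }
        // putIfAbsent returns the previous value, so null means we won the registration
        return consumerTable.putIfAbsent(group, consumer) == null;
    }
}
```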

10. Starting the client instance

Startup runs inside a synchronized block and starts quite a few components. We'll go through them one by one, skipping how the NameServer address is fetched.

```java
mQClientFactory.start();

// MQClientInstance#start()
public void start() throws MQClientException {
    synchronized (this) {
        switch (this.serviceState) {
            case CREATE_JUST:
                this.serviceState = ServiceState.START_FAILED;
                // If not specified,looking address from name server
                if (null == this.clientConfig.getNamesrvAddr()) {
                    this.mQClientAPIImpl.fetchNameServerAddr();
                }
                // Start request-response channel
                this.mQClientAPIImpl.start();
                // Start various schedule tasks
                this.startScheduledTask();
                // Start pull service
                this.pullMessageService.start();
                // Start rebalance service
                this.rebalanceService.start();
                // Start push service (the client's internal producer)
                this.defaultMQProducer.getDefaultMQProducerImpl().start(false);
                log.info("the client factory [{}] start OK", this.clientId);
                this.serviceState = ServiceState.RUNNING;
                break;
            case START_FAILED:
                throw new MQClientException("The Factory object[" + this.getClientId() + "] has been created before, and failed.", null);
            default:
                break;
        }
    }
}
```
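The synchronized, state-guarded start() pattern is worth isolating. The state is set to START_FAILED before anything is started, and switches to RUNNING only after every step succeeds. A second start() on a running instance is therefore a no-op, while a retry after a failure is rejected. A hypothetical sketch that models only three of the states:

```java
/** Hypothetical sketch of a synchronized, state-guarded start(). */
class StartOnceSketch {
    enum ServiceState { CREATE_JUST, RUNNING, START_FAILED }

    private ServiceState serviceState = ServiceState.CREATE_JUST;
    private int componentsStarted = 0;
    private final boolean failOnStart;

    StartOnceSketch(boolean failOnStart) {
        this.failOnStart = failOnStart;
    }

    void start() {
        synchronized (this) {
            switch (this.serviceState) {
                case CREATE_JUST:
                    // Pessimistic: remain START_FAILED unless every step below succeeds
                    this.serviceState = ServiceState.START_FAILED;
                    if (failOnStart) {
                        throw new IllegalStateException("a component failed to start");
                    }
                    // Stands in for starting the remoting client, scheduled tasks, pull and rebalance services
                    this.componentsStarted++;
                    this.serviceState = ServiceState.RUNNING;
                    break;
                case START_FAILED:
                    throw new IllegalStateException("created before, and failed");
                default:
                    // RUNNING: calling start() again does nothing
                    break;
            }
        }
    }

    synchronized ServiceState state() {
        return serviceState;
    }

    synchronized int componentsStarted() {
        return componentsStarted;
    }
}
```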

11. this.mQClientAPIImpl.start() starts a Netty client that communicates with the broker (and the NameServer).

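12. this.startScheduledTask() starts various scheduled tasks. They periodically fetch the NameServer address, update topic route information, send heartbeats to the brokers, and persist consume offsets.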
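Below is a sketch of the scheduling pattern: several periodic jobs on one scheduled executor. Each job is wrapped in a try/catch, because a task that throws from `scheduleAtFixedRate` has all its later runs cancelled. Task names and periods are supplied by the caller and are only illustrative. As far as I recall, the client defaults are about 30 s for route updates and heartbeats and 5 s for offset persistence (pollNameServerInterval, heartbeatBrokerInterval, persistConsumerOffsetInterval in ClientConfig); check your version. `ScheduledTasksSketch` is hypothetical.

```java
import java.util.*;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical sketch of startScheduledTask(): periodic jobs on one scheduled executor. */
class ScheduledTasksSketch {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();

    void schedule(Map<String, Runnable> tasks, long initialDelayMs, long periodMs) {
        for (Map.Entry<String, Runnable> entry : tasks.entrySet()) {
            final String name = entry.getKey();
            final Runnable task = entry.getValue();
            scheduler.scheduleAtFixedRate(() -> {
                try {
                    task.run();
                } catch (Throwable t) {
                    // Catch everything: an exception escaping a fixed-rate task cancels its future runs
                    System.err.println("scheduled task " + name + " failed: " + t);
                }
            }, initialDelayMs, periodMs, TimeUnit.MILLISECONDS);
        }
    }

    void shutdown() {
        scheduler.shutdownNow();
    }
}
```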

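13. First, this.rebalanceService.start(). RebalanceService is a ServiceThread (a Runnable wrapped around its own thread), so we look at its run() method. It mainly performs rebalancing, that is, redistributing message queues among the consumers of the group. The details are not covered here; feel free to read them yourself. One point matters for the next step: rebalancing creates a PullRequest for each newly assigned queue and hands it to PullMessageService. That is where the pull requests in step 14 come from.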

14. This is the key part: message pulling, started by this.pullMessageService.start(). PullMessageService is also a ServiceThread, so we look at its run() method, which takes a request from the pull request queue:

```java
@Override
public void run() {
    logger.info(this.getServiceName() + " service started");

    while (!this.isStopped()) {
        try {
            MessageRequest messageRequest = this.messageRequestQueue.take();
            if (messageRequest.getMessageRequestMode() == MessageRequestMode.POP) {
                this.popMessage((PopRequest) messageRequest);
            } else {
                // Pull messages
                this.pullMessage((PullRequest) messageRequest);
            }
        } catch (InterruptedException ignored) {
        } catch (Exception e) {
            logger.error("Pull Message Service Run Method exception", e);
        }
    }

    logger.info(this.getServiceName() + " service end");
}
```

PullMessageService.pullMessage() gets the consumer group from the pull request, looks up the corresponding consumer, and calls impl.pullMessage(pullRequest) to start pulling:
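The take / re-enqueue cycle, together with the first flow-control checks in pullMessage(), can be sketched as follows. A request is taken from a blocking queue. If its queue's local cache is over the count or size threshold, it is put back after a short delay instead of being sent to the broker. Thresholds follow the defaults mentioned in the code comments (1000 messages, 100 MiB). The 50 ms delay and all names are assumptions made for this sketch.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch of the pull loop plus cache-based flow control. */
class PullLoopSketch {
    static final class PullRequest {
        final String queue;
        long cachedCount;   // messages still waiting in this queue's ProcessQueue
        long cachedSizeMiB; // their total size in MiB

        PullRequest(String queue, long cachedCount, long cachedSizeMiB) {
            this.queue = queue;
            this.cachedCount = cachedCount;
            this.cachedSizeMiB = cachedSizeMiB;
        }
    }

    static final long PULL_THRESHOLD_FOR_QUEUE = 1000;
    static final long PULL_THRESHOLD_SIZE_MIB = 100;
    static final long FLOW_CONTROL_DELAY_MS = 50;

    private final BlockingQueue<PullRequest> requestQueue = new LinkedBlockingQueue<>();
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();

    void executePullRequestImmediately(PullRequest request) {
        requestQueue.add(request);
    }

    void executePullRequestLater(PullRequest request, long delayMs) {
        scheduler.schedule(() -> executePullRequestImmediately(request), delayMs, TimeUnit.MILLISECONDS);
    }

    /** One loop iteration: "PULL", "FLOW_CONTROL", or null if no request arrived within waitMs. */
    String processOne(long waitMs) {
        PullRequest request;
        try {
            request = requestQueue.poll(waitMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
        if (request == null) {
            return null;
        }
        if (request.cachedCount > PULL_THRESHOLD_FOR_QUEUE || request.cachedSizeMiB > PULL_THRESHOLD_SIZE_MIB) {
            // Too much is still unconsumed: retry this queue later instead of pulling more now
            executePullRequestLater(request, FLOW_CONTROL_DELAY_MS);
            return "FLOW_CONTROL";
        }
        // The real client sends an async pull here; its callback re-queues the request afterwards
        return "PULL";
    }

    void shutdown() {
        scheduler.shutdownNow();
    }
}
```

Because every path puts the request back (immediately, after a delay, or from the pull callback), each assigned queue always has one request circulating until it is dropped.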

```java
public void pullMessage(final PullRequest pullRequest) {
    /**
     * The method first gets the ProcessQueue. Think of this class as a staging area:
     * pulled messages are put into it and then consumed from it.
     * If this queue has been dropped, there is no point in going further.
     */
    final ProcessQueue processQueue = pullRequest.getProcessQueue();
    if (processQueue.isDropped()) {
        log.info("the pull request[{}] is dropped.", pullRequest.toString());
        return;
    }

    pullRequest.getProcessQueue().setLastPullTimestamp(System.currentTimeMillis());

    try {
        this.makeSureStateOK();
    } catch (MQClientException e) {
        log.warn("pullMessage exception, consumer state not ok", e);
        this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
        return;
    }

    if (this.isPause()) {
        log.warn("consumer was paused, execute pull request later. instanceName={}, group={}", this.defaultMQPushConsumer.getInstanceName(), this.defaultMQPushConsumer.getConsumerGroup());
        this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_SUSPEND);
        return;
    }

    long cachedMessageCount = processQueue.getMsgCount().get();
    long cachedMessageSizeInMiB = processQueue.getMsgSize().get() / (1024 * 1024);

    // Flow control by count: if too many unprocessed messages pile up in processQueue
    // (more than pullThresholdForQueue, default 1000), postpone the pull
    if (cachedMessageCount > this.defaultMQPushConsumer.getPullThresholdForQueue()) {
        this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_CACHE_FLOW_CONTROL);
        if ((queueFlowControlTimes++ % 1000) == 0) {
            log.warn(
                "the cached message count exceeds the threshold {}, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",
                this.defaultMQPushConsumer.getPullThresholdForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);
        }
        return;
    }

    // Flow control by size: stop pulling while cached messages exceed
    // pullThresholdSizeForQueue (default 100 MiB)
    if (cachedMessageSizeInMiB > this.defaultMQPushConsumer.getPullThresholdSizeForQueue()) {
        this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_CACHE_FLOW_CONTROL);
        if ((queueFlowControlTimes++ % 1000) == 0) {
            log.warn(
                "the cached message size exceeds the threshold {} MiB, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",
                this.defaultMQPushConsumer.getPullThresholdSizeForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);
        }
        return;
    }

    if (!this.consumeOrderly) {
        // Concurrent mode: also limit the offset span between the first and last cached message
        if (processQueue.getMaxSpan() > this.defaultMQPushConsumer.getConsumeConcurrentlyMaxSpan()) {
            this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_CACHE_FLOW_CONTROL);
            if ((queueMaxSpanFlowControlTimes++ % 1000) == 0) {
                log.warn(
                    "the queue's messages, span too long, so do flow control, minOffset={}, maxOffset={}, maxSpan={}, pullRequest={}, flowControlTimes={}",
                    processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), processQueue.getMaxSpan(),
                    pullRequest, queueMaxSpanFlowControlTimes);
            }
            return;
        }
    } else {
        // Orderly mode: pull only while the queue is locked on the broker;
        // on the first pull after locking, fix the offset from the broker
        if (processQueue.isLocked()) {
            if (!pullRequest.isPreviouslyLocked()) {
                long offset = -1L;
                try {
                    offset = this.rebalanceImpl.computePullFromWhereWithException(pullRequest.getMessageQueue());
                    if (offset < 0) {
                        throw new MQClientException(ResponseCode.SYSTEM_ERROR, "Unexpected offset " + offset);
                    }
                } catch (Exception e) {
                    this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
                    log.error("Failed to compute pull offset, pullResult: {}", pullRequest, e);
                    return;
                }
                boolean brokerBusy = offset < pullRequest.getNextOffset();
                log.info("the first time to pull message, so fix offset from broker. pullRequest: {} NewOffset: {} brokerBusy: {}",
                    pullRequest, offset, brokerBusy);
                if (brokerBusy) {
                    log.info("[NOTIFYME]the first time to pull message, but pull request offset larger than broker consume offset. pullRequest: {} NewOffset: {}",
                        pullRequest, offset);
                }

                pullRequest.setPreviouslyLocked(true);
                pullRequest.setNextOffset(offset);
            }
        } else {
            this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
            log.info("pull message later because not locked in broker, {}", pullRequest);
            return;
        }
    }

    // Get the subscription data for this topic
    final SubscriptionData subscriptionData = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());
    if (null == subscriptionData) {
        this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
        log.warn("find the consumer's subscription failed, {}", pullRequest);
        return;
    }

    final long beginTimestamp = System.currentTimeMillis();

    // Callback invoked when the pull result arrives
    PullCallback pullCallback = new PullCallback() {
        @Override
        public void onSuccess(PullResult pullResult) {
            if (pullResult != null) {
                // Decode into msgFoundList and filter the messages by tag on the client side
                pullResult = DefaultMQPushConsumerImpl.this.pullAPIWrapper.processPullResult(pullRequest.getMessageQueue(), pullResult,
                    subscriptionData);

                switch (pullResult.getPullStatus()) {
                    case FOUND:
                        long prevRequestOffset = pullRequest.getNextOffset();
                        pullRequest.setNextOffset(pullResult.getNextBeginOffset());
                        long pullRT = System.currentTimeMillis() - beginTimestamp;
                        DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullRT(pullRequest.getConsumerGroup(),
                            pullRequest.getMessageQueue().getTopic(), pullRT);

                        long firstMsgOffset = Long.MAX_VALUE;
                        // No messages left (possible after client-side tag filtering): pull again immediately
                        if (pullResult.getMsgFoundList() == null || pullResult.getMsgFoundList().isEmpty()) {
                            DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                        } else {
                            firstMsgOffset = pullResult.getMsgFoundList().get(0).getQueueOffset();

                            DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullTPS(pullRequest.getConsumerGroup(),
                                pullRequest.getMessageQueue().getTopic(), pullResult.getMsgFoundList().size());

                            // Put the messages into processQueue and consume them asynchronously; this is also
                            // where your own consumer logic is hooked in (associated with the consumer at line 953)
                            boolean dispatchToConsume = processQueue.putMessage(pullResult.getMsgFoundList());
                            DefaultMQPushConsumerImpl.this.consumeMessageService.submitConsumeRequest(
                                pullResult.getMsgFoundList(),
                                processQueue,
                                pullRequest.getMessageQueue(),
                                dispatchToConsume);

                            // Schedule the next pull
                            if (DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval() > 0) {
                                DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest,
                                    DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval());
                            } else {
                                DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                            }
                        }

                        if (pullResult.getNextBeginOffset() < prevRequestOffset
                            || firstMsgOffset < prevRequestOffset) {
                            log.warn(
                                "[BUG] pull message result maybe data wrong, nextBeginOffset: {} firstMsgOffset: {} prevRequestOffset: {}",
                                pullResult.getNextBeginOffset(),
                                firstMsgOffset,
                                prevRequestOffset);
                        }

                        break;
                    case NO_NEW_MSG:
                    case NO_MATCHED_MSG:
                        pullRequest.setNextOffset(pullResult.getNextBeginOffset());
                        DefaultMQPushConsumerImpl.this.correctTagsOffset(pullRequest);
                        DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                        break;
                    case OFFSET_ILLEGAL:
                        log.warn("the pull request offset illegal, {} {}",
                            pullRequest.toString(), pullResult.toString());
                        pullRequest.setNextOffset(pullResult.getNextBeginOffset());

                        pullRequest.getProcessQueue().setDropped(true);
                        DefaultMQPushConsumerImpl.this.executeTaskLater(new Runnable() {

                            @Override
                            public void run() {
                                try {
                                    DefaultMQPushConsumerImpl.this.offsetStore.updateOffset(pullRequest.getMessageQueue(),
                                        pullRequest.getNextOffset(), false);

                                    DefaultMQPushConsumerImpl.this.offsetStore.persist(pullRequest.getMessageQueue());

                                    DefaultMQPushConsumerImpl.this.rebalanceImpl.removeProcessQueue(pullRequest.getMessageQueue());

                                    log.warn("fix the pull request offset, {}", pullRequest);
                                } catch (Throwable e) {
                                    log.error("executeTaskLater Exception", e);
                                }
                            }
                        }, 10000);
                        break;
                    default:
                        break;
                }
            }
        }

        @Override
        public void onException(Throwable e) {
            if (!pullRequest.getMessageQueue().getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {
                log.warn("execute the pull request exception", e);
            }

            if (e instanceof MQBrokerException && ((MQBrokerException) e).getResponseCode() == ResponseCode.FLOW_CONTROL) {
                DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_BROKER_FLOW_CONTROL);
            } else {
                DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
            }
        }
    };

    boolean commitOffsetEnable = false;
    long commitOffsetValue = 0L;
    if (MessageModel.CLUSTERING == this.defaultMQPushConsumer.getMessageModel()) {
        commitOffsetValue = this.offsetStore.readOffset(pullRequest.getMessageQueue(), ReadOffsetType.READ_FROM_MEMORY);
        if (commitOffsetValue > 0) {
            commitOffsetEnable = true;
        }
    }

    String subExpression = null;
    boolean classFilter = false;
    SubscriptionData sd = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());
    if (sd != null) {
        if (this.defaultMQPushConsumer.isPostSubscriptionWhenPull() && !sd.isClassFilterMode()) {
            subExpression = sd.getSubString();
        }

        classFilter = sd.isClassFilterMode();
    }

    // Build the pull sysFlag bitmap: whether to commit the consume offset, whether the broker may
    // suspend (long-poll) the request, whether the subscription expression is sent, and class-filter mode
    int sysFlag = PullSysFlag.buildSysFlag(
        commitOffsetEnable, // commitOffset
        true, // suspend
        subExpression != null, // subscription
        classFilter // class filter
    );
    try {
        this.pullAPIWrapper.pullKernelImpl(
            pullRequest.getMessageQueue(),
            subExpression,
            subscriptionData.getExpressionType(),
            subscriptionData.getSubVersion(),
            pullRequest.getNextOffset(),
            // Maximum number of messages per pull, default 32
            this.defaultMQPushConsumer.getPullBatchSize(),
            this.defaultMQPushConsumer.getPullBatchSizeInBytes(),
            sysFlag,
            commitOffsetValue,
            BROKER_SUSPEND_MAX_TIME_MILLIS,
            CONSUMER_TIMEOUT_MILLIS_WHEN_SUSPEND,
            CommunicationMode.ASYNC,
            pullCallback
        );
    } catch (Exception e) {
        log.error("pullKernelImpl exception", e);
        this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
    }
}
```

15. The broker assembles the messages and responds

org.apache.rocketmq.broker.processor.PullMessageProcessor#processRequest(io.netty.channel.Channel, org.apache.rocketmq.remoting.protocol.RemotingCommand, boolean)

private RemotingCommand processRequest(final Channel channel, RemotingCommand request, boolean brokerAllowSuspend)

throws RemotingCommandException {

RemotingCommand response = RemotingCommand.createResponseCommand(PullMessageResponseHeader.class);

final PullMessageResponseHeader responseHeader = (PullMessageResponseHeader) response.readCustomHeader();

final PullMessageRequestHeader requestHeader =

(PullMessageRequestHeader) request.decodeCommandCustomHeader(PullMessageRequestHeader.class);

response.setOpaque(request.getOpaque());

LOGGER.debug("receive PullMessage request command, {}", request);

if (!PermName.isReadable(this.brokerController.getBrokerConfig().getBrokerPermission())) {

response.setCode(ResponseCode.NO_PERMISSION);

response.setRemark(String.format("the broker[%s] pulling message is forbidden",

this.brokerController.getBrokerConfig().getBrokerIP1()));

return response;

}

if (request.getCode() == RequestCode.LITE_PULL_MESSAGE && !this.brokerController.getBrokerConfig().isLitePullMessageEnable()) {

response.setCode(ResponseCode.NO_PERMISSION);

response.setRemark(

"the broker[" + this.brokerController.getBrokerConfig().getBrokerIP1() + "] for lite pull consumer is forbidden");

return response;

}

SubscriptionGroupConfig subscriptionGroupConfig =

this.brokerController.getSubscriptionGroupManager().findSubscriptionGroupConfig(requestHeader.getConsumerGroup());

if (null == subscriptionGroupConfig) {

response.setCode(ResponseCode.SUBSCRIPTION_GROUP_NOT_EXIST);

response.setRemark(String.format("subscription group [%s] does not exist, %s", requestHeader.getConsumerGroup(), FAQUrl.suggestTodo(FAQUrl.SUBSCRIPTION_GROUP_NOT_EXIST)));

return response;

}

if (!subscriptionGroupConfig.isConsumeEnable()) {

response.setCode(ResponseCode.NO_PERMISSION);

responseHeader.setForbiddenType(ForbiddenType.GROUP_FORBIDDEN);

response.setRemark("subscription group no permission, " + requestHeader.getConsumerGroup());

return response;

}

final boolean hasCommitOffsetFlag = PullSysFlag.hasCommitOffsetFlag(requestHeader.getSysFlag());

final boolean hasSubscriptionFlag = PullSysFlag.hasSubscriptionFlag(requestHeader.getSysFlag());

TopicConfig topicConfig = this.brokerController.getTopicConfigManager().selectTopicConfig(requestHeader.getTopic());

if (null == topicConfig) {

LOGGER.error("the topic {} not exist, consumer: {}", requestHeader.getTopic(), RemotingHelper.parseChannelRemoteAddr(channel));

response.setCode(ResponseCode.TOPIC_NOT_EXIST);

response.setRemark(String.format("topic[%s] not exist, apply first please! %s", requestHeader.getTopic(), FAQUrl.suggestTodo(FAQUrl.APPLY_TOPIC_URL)));

return response;

}

if (!PermName.isReadable(topicConfig.getPerm())) {

response.setCode(ResponseCode.NO_PERMISSION);

responseHeader.setForbiddenType(ForbiddenType.TOPIC_FORBIDDEN);

response.setRemark("the topic[" + requestHeader.getTopic() + "] pulling message is forbidden");

return response;

}

TopicQueueMappingContext mappingContext = this.brokerController.getTopicQueueMappingManager().buildTopicQueueMappingContext(requestHeader, false);

{

RemotingCommand rewriteResult = rewriteRequestForStaticTopic(requestHeader, mappingContext);

if (rewriteResult != null) {

return rewriteResult;

}

}

if (requestHeader.getQueueId() < 0 || requestHeader.getQueueId() >= topicConfig.getReadQueueNums()) {

String errorInfo = String.format("queueId[%d] is illegal, topic:[%s] topicConfig.readQueueNums:[%d] consumer:[%s]",

requestHeader.getQueueId(), requestHeader.getTopic(), topicConfig.getReadQueueNums(), channel.remoteAddress());

LOGGER.warn(errorInfo);

response.setCode(ResponseCode.SYSTEM_ERROR);

response.setRemark(errorInfo);

return response;

}

ConsumerManager consumerManager = brokerController.getConsumerManager();

switch (RequestSource.parseInteger(requestHeader.getRequestSource())) {

case PROXY_FOR_BROADCAST:

consumerManager.compensateBasicConsumerInfo(requestHeader.getConsumerGroup(), ConsumeType.CONSUME_PASSIVELY, MessageModel.BROADCASTING);

break;

case PROXY_FOR_STREAM:

consumerManager.compensateBasicConsumerInfo(requestHeader.getConsumerGroup(), ConsumeType.CONSUME_ACTIVELY, MessageModel.CLUSTERING);

break;

default:

consumerManager.compensateBasicConsumerInfo(requestHeader.getConsumerGroup(), ConsumeType.CONSUME_PASSIVELY, MessageModel.CLUSTERING);

break;

}

SubscriptionData subscriptionData = null;

ConsumerFilterData consumerFilterData = null;

if (hasSubscriptionFlag) {

try {

subscriptionData = FilterAPI.build(

requestHeader.getTopic(), requestHeader.getSubscription(), requestHeader.getExpressionType()

);

consumerManager.compensateSubscribeData(requestHeader.getConsumerGroup(), requestHeader.getTopic(), subscriptionData);

if (!ExpressionType.isTagType(subscriptionData.getExpressionType())) {

consumerFilterData = ConsumerFilterManager.build(

requestHeader.getTopic(), requestHeader.getConsumerGroup(), requestHeader.getSubscription(),

requestHeader.getExpressionType(), requestHeader.getSubVersion()

);

assert consumerFilterData != null;

}

} catch (Exception e) {

LOGGER.warn("Parse the consumer's subscription[{}] failed, group: {}", requestHeader.getSubscription(),

requestHeader.getConsumerGroup());

response.setCode(ResponseCode.SUBSCRIPTION_PARSE_FAILED);

response.setRemark("parse the consumer's subscription failed");

return response;

}

} else {

ConsumerGroupInfo consumerGroupInfo =

this.brokerController.getConsumerManager().getConsumerGroupInfo(requestHeader.getConsumerGroup());

if (null == consumerGroupInfo) {

LOGGER.warn("the consumer's group info not exist, group: {}", requestHeader.getConsumerGroup());

response.setCode(ResponseCode.SUBSCRIPTION_NOT_EXIST);

response.setRemark("the consumer's group info not exist" + FAQUrl.suggestTodo(FAQUrl.SAME_GROUP_DIFFERENT_TOPIC));

return response;

}

if (!subscriptionGroupConfig.isConsumeBroadcastEnable()

&& consumerGroupInfo.getMessageModel() == MessageModel.BROADCASTING) {

response.setCode(ResponseCode.NO_PERMISSION);

responseHeader.setForbiddenType(ForbiddenType.BROADCASTING_DISABLE_FORBIDDEN);

response.setRemark("the consumer group[" + requestHeader.getConsumerGroup() + "] can not consume by broadcast way");

return response;

}

boolean readForbidden = this.brokerController.getSubscriptionGroupManager().getForbidden(//

subscriptionGroupConfig.getGroupName(), requestHeader.getTopic(), PermName.INDEX_PERM_READ);

if (readForbidden) {

response.setCode(ResponseCode.NO_PERMISSION);

responseHeader.setForbiddenType(ForbiddenType.SUBSCRIPTION_FORBIDDEN);

response.setRemark("the consumer group[" + requestHeader.getConsumerGroup() + "] is forbidden for topic[" + requestHeader.getTopic() + "]");

return response;

}

subscriptionData = consumerGroupInfo.findSubscriptionData(requestHeader.getTopic());

if (null == subscriptionData) {

LOGGER.warn("the consumer's subscription not exist, group: {}, topic:{}", requestHeader.getConsumerGroup(), requestHeader.getTopic());

response.setCode(ResponseCode.SUBSCRIPTION_NOT_EXIST);

response.setRemark("the consumer's subscription not exist" + FAQUrl.suggestTodo(FAQUrl.SAME_GROUP_DIFFERENT_TOPIC));

return response;

}

if (subscriptionData.getSubVersion() < requestHeader.getSubVersion()) {

LOGGER.warn("The broker's subscription is not latest, group: {} {}", requestHeader.getConsumerGroup(),

subscriptionData.getSubString());

response.setCode(ResponseCode.SUBSCRIPTION_NOT_LATEST);

response.setRemark("the consumer's subscription not latest");

return response;

}

if (!ExpressionType.isTagType(subscriptionData.getExpressionType())) {

consumerFilterData = this.brokerController.getConsumerFilterManager().get(requestHeader.getTopic(),

requestHeader.getConsumerGroup());

if (consumerFilterData == null) {

response.setCode(ResponseCode.FILTER_DATA_NOT_EXIST);

response.setRemark("The broker's consumer filter data is not exist!Your expression may be wrong!");

return response;

}

if (consumerFilterData.getClientVersion() < requestHeader.getSubVersion()) {

LOGGER.warn("The broker's consumer filter data is not latest, group: {}, topic: {}, serverV: {}, clientV: {}",

requestHeader.getConsumerGroup(), requestHeader.getTopic(), consumerFilterData.getClientVersion(), requestHeader.getSubVersion());

response.setCode(ResponseCode.FILTER_DATA_NOT_LATEST);

response.setRemark("the consumer's consumer filter data not latest");

return response;

}

}

}

if (!ExpressionType.isTagType(subscriptionData.getExpressionType())

&& !this.brokerController.getBrokerConfig().isEnablePropertyFilter()) {

response.setCode(ResponseCode.SYSTEM_ERROR);

response.setRemark("The broker does not support consumer to filter message by " + subscriptionData.getExpressionType());

return response;

}

MessageFilter messageFilter;

if (this.brokerController.getBrokerConfig().isFilterSupportRetry()) {

messageFilter = new ExpressionForRetryMessageFilter(subscriptionData, consumerFilterData,

this.brokerController.getConsumerFilterManager());

} else {

messageFilter = new ExpressionMessageFilter(subscriptionData, consumerFilterData,

this.brokerController.getConsumerFilterManager());

}

final MessageStore messageStore = brokerController.getMessageStore();

final boolean useResetOffsetFeature = brokerController.getBrokerConfig().isUseServerSideResetOffset();

String topic = requestHeader.getTopic();

String group = requestHeader.getConsumerGroup();

int queueId = requestHeader.getQueueId();

Long resetOffset = brokerController.getConsumerOffsetManager().queryThenEraseResetOffset(topic, group, queueId);

GetMessageResult getMessageResult = null;

if (useResetOffsetFeature && null != resetOffset) {

getMessageResult = new GetMessageResult();

getMessageResult.setStatus(GetMessageStatus.OFFSET_RESET);

getMessageResult.setNextBeginOffset(resetOffset);

getMessageResult.setMinOffset(messageStore.getMinOffsetInQueue(topic, queueId));

getMessageResult.setMaxOffset(messageStore.getMaxOffsetInQueue(topic, queueId));

getMessageResult.setSuggestPullingFromSlave(false);

} else {

long broadcastInitOffset = queryBroadcastPullInitOffset(topic, group, queueId, requestHeader, channel);

if (broadcastInitOffset >= 0) {

getMessageResult = new GetMessageResult();

getMessageResult.setStatus(GetMessageStatus.OFFSET_RESET);

getMessageResult.setNextBeginOffset(broadcastInitOffset);

} else {

SubscriptionData finalSubscriptionData = subscriptionData;

RemotingCommand finalResponse = response;

// 获取消息

messageStore.getMessageAsync(group, topic, queueId, requestHeader.getQueueOffset(),

requestHeader.getMaxMsgNums(), messageFilter)

.thenApply(result -> {

if (null == result) {

finalResponse.setCode(ResponseCode.SYSTEM_ERROR);

finalResponse.setRemark("store getMessage return null");

return finalResponse;

}

return pullMessageResultHandler.handle(

result,

request,

requestHeader,

channel,

finalSubscriptionData,

subscriptionGroupConfig,

brokerAllowSuspend,

messageFilter,

finalResponse,

mappingContext

);

})

.thenAccept(result -> NettyRemotingAbstract.writeResponse(channel, request, result));

}

}

if (getMessageResult != null) {

return this.pullMessageResultHandler.handle(

getMessageResult,

request,

requestHeader,

channel,

subscriptionData,

subscriptionGroupConfig,

brokerAllowSuspend,

messageFilter,

response,

mappingContext

);

}

return null;

} @Override

public GetMessageResult getMessage(final String group, final String topic, final int queueId, final long offset,

final int maxMsgNums, final int maxTotalMsgSize, final MessageFilter messageFilter) {

if (this.shutdown) {

LOGGER.warn("message store has shutdown, so getMessage is forbidden");

return null;

}

if (!this.runningFlags.isReadable()) {

LOGGER.warn("message store is not readable, so getMessage is forbidden " + this.runningFlags.getFlagBits());

return null;

}

Optional topicConfig = getTopicConfig(topic);

CleanupPolicy policy = CleanupPolicyUtils.getDeletePolicy(topicConfig);

//check request topic flag

if (Objects.equals(policy, CleanupPolicy.COMPACTION) && messageStoreConfig.isEnableCompaction()) {

return compactionStore.getMessage(group, topic, queueId, offset, maxMsgNums, maxTotalMsgSize);

} // else skip

long beginTime = this.getSystemClock().now();

GetMessageStatus status = GetMessageStatus.NO_MESSAGE_IN_QUEUE;

long nextBeginOffset = offset;

long minOffset = 0;

long maxOffset = 0;

GetMessageResult getResult = new GetMessageResult();

final long maxOffsetPy = this.commitLog.getMaxOffset();

ConsumeQueueInterface consumeQueue = findConsumeQueue(topic, queueId);

if (consumeQueue != null) {

minOffset = consumeQueue.getMinOffsetInQueue();

maxOffset = consumeQueue.getMaxOffsetInQueue();

// 表示当前队列中无消息

if (maxOffset == 0) {

status = GetMessageStatus.NO_MESSAGE_IN_QUEUE;

nextBeginOffset = nextOffsetCorrection(offset, 0);

} else if (offset < minOffset) {

status = GetMessageStatus.OFFSET_TOO_SMALL;

nextBeginOffset = nextOffsetCorrection(offset, minOffset);

} else if (offset == maxOffset) {

status = GetMessageStatus.OFFSET_OVERFLOW_ONE;

nextBeginOffset = nextOffsetCorrection(offset, offset);

} else if (offset > maxOffset) {

status = GetMessageStatus.OFFSET_OVERFLOW_BADLY;

nextBeginOffset = nextOffsetCorrection(offset, maxOffset);

} else {

final int maxFilterMessageSize = Math.max(16000, maxMsgNums * consumeQueue.getUnitSize());

final boolean diskFallRecorded = this.messageStoreConfig.isDiskFallRecorded();

long maxPullSize = Math.max(maxTotalMsgSize, 100);

if (maxPullSize > MAX_PULL_MSG_SIZE) {

LOGGER.warn("The max pull size is too large maxPullSize={} topic={} queueId={}", maxPullSize, topic, queueId);

maxPullSize = MAX_PULL_MSG_SIZE;

}

status = GetMessageStatus.NO_MATCHED_MESSAGE;

long maxPhyOffsetPulling = 0;

int cqFileNum = 0;

while (getResult.getBufferTotalSize() <= 0

&& nextBeginOffset < maxOffset

&& cqFileNum++ < this.messageStoreConfig.getTravelCqFileNumWhenGetMessage()) {

ReferredIterator bufferConsumeQueue = consumeQueue.iterateFrom(nextBeginOffset);

if (bufferConsumeQueue == null) {

                    status = GetMessageStatus.OFFSET_FOUND_NULL;
                    nextBeginOffset = nextOffsetCorrection(nextBeginOffset, this.consumeQueueStore.rollNextFile(consumeQueue, nextBeginOffset));
                    LOGGER.warn("consumer request topic: " + topic + "offset: " + offset + " minOffset: " + minOffset + " maxOffset: "
                        + maxOffset + ", but access logic queue failed. Correct nextBeginOffset to " + nextBeginOffset);
                    break;
                }

                try {
                    long nextPhyFileStartOffset = Long.MIN_VALUE;
                    while (bufferConsumeQueue.hasNext()
                        && nextBeginOffset < maxOffset) {
                        CqUnit cqUnit = bufferConsumeQueue.next();
                        long offsetPy = cqUnit.getPos();
                        int sizePy = cqUnit.getSize();

                        boolean isInMem = estimateInMemByCommitOffset(offsetPy, maxOffsetPy);

                        if ((cqUnit.getQueueOffset() - offset) * consumeQueue.getUnitSize() > maxFilterMessageSize) {
                            break;
                        }

                        if (this.isTheBatchFull(sizePy, cqUnit.getBatchNum(), maxMsgNums, maxPullSize, getResult.getBufferTotalSize(), getResult.getMessageCount(), isInMem)) {
                            break;
                        }

                        if (getResult.getBufferTotalSize() >= maxPullSize) {
                            break;
                        }

                        maxPhyOffsetPulling = offsetPy;

                        //Be careful, here should before the isTheBatchFull
                        nextBeginOffset = cqUnit.getQueueOffset() + cqUnit.getBatchNum();

                        if (nextPhyFileStartOffset != Long.MIN_VALUE) {
                            if (offsetPy < nextPhyFileStartOffset) {
                                continue;
                            }
                        }

                        if (messageFilter != null
                            && !messageFilter.isMatchedByConsumeQueue(cqUnit.getValidTagsCodeAsLong(), cqUnit.getCqExtUnit())) {
                            if (getResult.getBufferTotalSize() == 0) {
                                status = GetMessageStatus.NO_MATCHED_MESSAGE;
                            }
                            continue;
                        }

                        SelectMappedBufferResult selectResult = this.commitLog.getMessage(offsetPy, sizePy);
                        if (null == selectResult) {
                            if (getResult.getBufferTotalSize() == 0) {
                                status = GetMessageStatus.MESSAGE_WAS_REMOVING;
                            }
                            nextPhyFileStartOffset = this.commitLog.rollNextFile(offsetPy);
                            continue;
                        }

                        if (messageFilter != null
                            && !messageFilter.isMatchedByCommitLog(selectResult.getByteBuffer().slice(), null)) {
                            if (getResult.getBufferTotalSize() == 0) {
                                status = GetMessageStatus.NO_MATCHED_MESSAGE;
                            }
                            // release...
                            selectResult.release();
                            continue;
                        }

                        this.storeStatsService.getGetMessageTransferredMsgCount().add(cqUnit.getBatchNum());
                        getResult.addMessage(selectResult, cqUnit.getQueueOffset(), cqUnit.getBatchNum());
                        status = GetMessageStatus.FOUND;
                        nextPhyFileStartOffset = Long.MIN_VALUE;
                    }
                } finally {
                    bufferConsumeQueue.release();
                }
            }

            if (diskFallRecorded) {
                long fallBehind = maxOffsetPy - maxPhyOffsetPulling;
                brokerStatsManager.recordDiskFallBehindSize(group, topic, queueId, fallBehind);
            }

            long diff = maxOffsetPy - maxPhyOffsetPulling;
            long memory = (long) (StoreUtil.TOTAL_PHYSICAL_MEMORY_SIZE
                * (this.messageStoreConfig.getAccessMessageInMemoryMaxRatio() / 100.0));
            getResult.setSuggestPullingFromSlave(diff > memory);
        }
    } else {
        status = GetMessageStatus.NO_MATCHED_LOGIC_QUEUE;
        nextBeginOffset = nextOffsetCorrection(offset, 0);
    }

    if (GetMessageStatus.FOUND == status) {
        this.storeStatsService.getGetMessageTimesTotalFound().add(1);
    } else {
        this.storeStatsService.getGetMessageTimesTotalMiss().add(1);
    }

    long elapsedTime = this.getSystemClock().now() - beginTime;
    this.storeStatsService.setGetMessageEntireTimeMax(elapsedTime);

    // lazy init no data found.
    if (getResult == null) {
        getResult = new GetMessageResult(0);
    }

    getResult.setStatus(status);
    getResult.setNextBeginOffset(nextBeginOffset);
    getResult.setMaxOffset(maxOffset);
    getResult.setMinOffset(minOffset);
    return getResult;
}
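The tail of getMessage compares how far this pull lags behind the end of the CommitLog (diff = maxOffsetPy - maxPhyOffsetPulling) with a memory budget of TOTAL_PHYSICAL_MEMORY_SIZE * accessMessageInMemoryMaxRatio / 100. If the lag is larger than the budget, the messages being read are probably no longer in memory, so the broker suggests that the consumer pull from a slave instead. The sketch below isolates only that arithmetic; the class and method names are hypothetical, and the memory size and ratio are passed in as plain parameters rather than read from StoreUtil and MessageStoreConfig.

```java
// Hypothetical standalone sketch of the suggestPullingFromSlave decision at the end of getMessage.
// The broker itself reads the memory size from StoreUtil and the ratio from MessageStoreConfig.
final class PullFromSlaveSketch {

    /**
     * @param maxOffsetPy         max physical offset of the CommitLog
     * @param maxPhyOffsetPulling physical offset of the last message read by this pull
     * @param totalPhysicalMemory total physical memory of the machine, in bytes
     * @param ratioPercent        accessMessageInMemoryMaxRatio, as a percentage
     */
    static boolean suggestPullingFromSlave(long maxOffsetPy, long maxPhyOffsetPulling,
                                           long totalPhysicalMemory, int ratioPercent) {
        long diff = maxOffsetPy - maxPhyOffsetPulling;                        // how far behind the reader is
        long memory = (long) (totalPhysicalMemory * (ratioPercent / 100.0)); // in-memory budget
        return diff > memory;                                                // lag beyond budget: suggest slave
    }

    public static void main(String[] args) {
        long gib = 1024L * 1024 * 1024;
        // 16 GiB of RAM with a 40% ratio gives a budget of about 6.4 GiB.
        System.out.println(suggestPullingFromSlave(10 * gib, 2 * gib, 16 * gib, 40)); // true  (lag 8 GiB)
        System.out.println(suggestPullingFromSlave(10 * gib, 9 * gib, 16 * gib, 40)); // false (lag 1 GiB)
    }
}
```

With 16 GiB of RAM and a 40% ratio the budget is about 6.4 GiB, so an 8 GiB lag triggers the suggestion while a 1 GiB lag does not.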

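16. Next, the consumer receives the broker's response. Let's go straight to the callback mentioned earlier, PullCallback.

The pulled messages are wrapped in pullResult.

As you can see, at this step the messages are put into processQueue and then submitted, together with processQueue, to the consumer's consume thread pool.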

// Result callback
PullCallback pullCallback = new PullCallback() {
    @Override
    public void onSuccess(PullResult pullResult) {
        if (pullResult != null) {
            // Convert the result into msgFoundList and filter the messages by tag
            pullResult = DefaultMQPushConsumerImpl.this.pullAPIWrapper.processPullResult(pullRequest.getMessageQueue(), pullResult,
                subscriptionData);

            switch (pullResult.getPullStatus()) {
                case FOUND:
                    long prevRequestOffset = pullRequest.getNextOffset();
                    pullRequest.setNextOffset(pullResult.getNextBeginOffset());
                    long pullRT = System.currentTimeMillis() - beginTimestamp;
                    DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullRT(pullRequest.getConsumerGroup(),
                        pullRequest.getMessageQueue().getTopic(), pullRT);

                    long firstMsgOffset = Long.MAX_VALUE;
                    // No messages left (possible after client-side tag filtering): pull again immediately
                    if (pullResult.getMsgFoundList() == null || pullResult.getMsgFoundList().isEmpty()) {
                        DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                    } else {
                        firstMsgOffset = pullResult.getMsgFoundList().get(0).getQueueOffset();

                        DefaultMQPushConsumerImpl.this.getConsumerStatsManager().incPullTPS(pullRequest.getConsumerGroup(),
                            pullRequest.getMessageQueue().getTopic(), pullResult.getMsgFoundList().size());

                        // Put the messages into processQueue and consume them asynchronously; this is also where the
                        // user-written consumer logic comes in (the consumer is associated at line 953)
                        boolean dispatchToConsume = processQueue.putMessage(pullResult.getMsgFoundList());
                        DefaultMQPushConsumerImpl.this.consumeMessageService.submitConsumeRequest(
                            pullResult.getMsgFoundList(),
                            processQueue,
                            pullRequest.getMessageQueue(),
                            dispatchToConsume);

                        // Schedule the next pull
                        if (DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval() > 0) {
                            DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest,
                                DefaultMQPushConsumerImpl.this.defaultMQPushConsumer.getPullInterval());
                        } else {
                            DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                        }
                    }

                    if (pullResult.getNextBeginOffset() < prevRequestOffset
                        || firstMsgOffset < prevRequestOffset) {
                        log.warn(
                            "[BUG] pull message result maybe data wrong, nextBeginOffset: {} firstMsgOffset: {} prevRequestOffset: {}",
                            pullResult.getNextBeginOffset(),
                            firstMsgOffset,
                            prevRequestOffset);
                    }

                    break;
                case NO_NEW_MSG:
                case NO_MATCHED_MSG:
                    pullRequest.setNextOffset(pullResult.getNextBeginOffset());
                    DefaultMQPushConsumerImpl.this.correctTagsOffset(pullRequest);
                    DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);
                    break;
                case OFFSET_ILLEGAL:
                    log.warn("the pull request offset illegal, {} {}",
                        pullRequest.toString(), pullResult.toString());
                    pullRequest.setNextOffset(pullResult.getNextBeginOffset());

                    pullRequest.getProcessQueue().setDropped(true);
                    DefaultMQPushConsumerImpl.this.executeTaskLater(new Runnable() {

                        @Override
                        public void run() {
                            try {
                                DefaultMQPushConsumerImpl.this.offsetStore.updateOffset(pullRequest.getMessageQueue(),
                                    pullRequest.getNextOffset(), false);

                                DefaultMQPushConsumerImpl.this.offsetStore.persist(pullRequest.getMessageQueue());

                                DefaultMQPushConsumerImpl.this.rebalanceImpl.removeProcessQueue(pullRequest.getMessageQueue());

                                log.warn("fix the pull request offset, {}", pullRequest);
                            } catch (Throwable e) {
                                log.error("executeTaskLater Exception", e);
                            }
                        }
                    }, 10000);
                    break;
                default:
                    break;
            }
        }
    }

    @Override
    public void onException(Throwable e) {
        if (!pullRequest.getMessageQueue().getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {
            log.warn("execute the pull request exception", e);
        }

        if (e instanceof MQBrokerException && ((MQBrokerException) e).getResponseCode() == ResponseCode.FLOW_CONTROL) {
            DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_BROKER_FLOW_CONTROL);
        } else {
            DefaultMQPushConsumerImpl.this.executePullRequestLater(pullRequest, pullTimeDelayMillsWhenException);
        }
    }
};
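processPullResult, called at the top of onSuccess, also filters the messages by tag on the client. The broker's consume-queue filter only compares tag hash codes, so the client re-checks the actual tag strings, which is why msgFoundList can come back empty and trigger an immediate re-pull. Below is a minimal sketch of that re-check, not the real PullAPIWrapper code: Msg is a hypothetical stand-in for MessageExt, and the subscribed tags are a plain Set<String> in which an empty set means "no tag filtering" (how a "*" subscription is represented).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of client-side tag filtering; Msg stands in for MessageExt.
final class TagFilterSketch {

    static final class Msg {
        final String tags;
        final long queueOffset;

        Msg(String tags, long queueOffset) {
            this.tags = tags;
            this.queueOffset = queueOffset;
        }
    }

    // Keeps only messages whose tag is in the subscribed set.
    // An empty set means no tag filtering (e.g. a "*" subscription).
    static List<Msg> filterByTags(List<Msg> found, Set<String> subscribedTags) {
        if (subscribedTags.isEmpty()) {
            return found;
        }
        List<Msg> kept = new ArrayList<>(found.size());
        for (Msg m : found) {
            if (m.tags != null && subscribedTags.contains(m.tags)) {
                kept.add(m);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Msg> found = List.of(new Msg("TagA", 10), new Msg("TagB", 11), new Msg("TagC", 12));
        for (Msg m : filterByTags(found, Set.of("TagA", "TagC"))) {
            System.out.println(m.tags + "@" + m.queueOffset); // TagA@10, then TagC@12
        }
        // An empty result is the case in which PullCallback re-pulls immediately.
        System.out.println(filterByTags(found, Set.of("TagZ")).isEmpty()); // true
    }
}
```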

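III. Message push

RocketMQ does not actually implement true push: "push" delivery is still built on top of pulling. RocketMQ uses a long-polling mechanism: when a pull request finds no new message, the broker holds it and checks every 5 s whether the messages it needs have arrived. Polling alone is not very efficient, so there is a second mechanism: when a message arrives at the broker and is stored, a listener detects the new message and wakes up the waiting pull requests.

This mechanism is started from DefaultMessageStore.start() in the org.apache.rocketmq.store package.

In doReput(), if the current broker is not a slave (that is, it is the master) and a message has actually been read, the message-arrival listener is triggered.

The pull-message service is then woken up and processes the message; interested readers can follow that code on their own.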
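The hold-and-wake idea can be illustrated with a small, self-contained sketch. It is not RocketMQ's own hold service or message-arrival listener; the LongPollingSketch class, its methods, and the use of CompletableFuture are assumptions made for illustration, and timeouts for held requests are left out. A pull that finds nothing is parked under a topic@queueId key; a notification (from the periodic check or the arrival listener) carrying the queue's new max offset completes every parked request whose requested offset is now readable.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;

// Hypothetical sketch of broker-side long polling: a pull that finds no message
// is parked and completed once a message for its offset arrives.
final class LongPollingSketch {

    private static final class HeldRequest {
        final long pullFromOffset;                                   // offset the consumer asked for
        final CompletableFuture<Long> response = new CompletableFuture<>();

        HeldRequest(long pullFromOffset) {
            this.pullFromOffset = pullFromOffset;
        }
    }

    private final Map<String, List<HeldRequest>> held = new HashMap<>();

    private static String key(String topic, int queueId) {
        return topic + "@" + queueId;
    }

    // A pull found no new message: park it instead of answering "no new message" right away.
    synchronized CompletableFuture<Long> suspend(String topic, int queueId, long pullFromOffset) {
        HeldRequest request = new HeldRequest(pullFromOffset);
        held.computeIfAbsent(key(topic, queueId), k -> new ArrayList<>()).add(request);
        return request.response;
    }

    // Called by the periodic check or the arrival listener with the queue's new max offset.
    // Wakes every parked request whose offset is now readable; returns how many were woken.
    synchronized int notifyMessageArriving(String topic, int queueId, long maxOffset) {
        List<HeldRequest> requests = held.get(key(topic, queueId));
        if (requests == null) {
            return 0;
        }
        int woken = 0;
        Iterator<HeldRequest> it = requests.iterator();
        while (it.hasNext()) {
            HeldRequest request = it.next();
            if (maxOffset > request.pullFromOffset) {                // a message at pullFromOffset now exists
                request.response.complete(maxOffset);                // a real broker would re-run the pull here
                it.remove();
                woken++;
            }
        }
        return woken;
    }

    public static void main(String[] args) {
        LongPollingSketch broker = new LongPollingSketch();
        CompletableFuture<Long> pending = broker.suspend("TopicTest", 0, 100);
        System.out.println(pending.isDone());                                  // false: nothing to read yet
        System.out.println(broker.notifyMessageArriving("TopicTest", 0, 100)); // 0: offset 100 not written yet
        System.out.println(broker.notifyMessageArriving("TopicTest", 0, 101)); // 1: message at offset 100 arrived
        System.out.println(pending.join());                                    // 101
    }
}
```

Completing the future on arrival, instead of waiting for the next periodic check, is what removes the up-to-5 s delay that plain polling would add.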

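IV. Concurrent messages and ordered messages

As covered earlier, the consumer sends pull requests to the broker and registers a callback, PullCallback, with each request. PullCallback takes the messages out of pullResult, puts them into processQueue, and then hands processQueue to consumeMessageService; this is where the messages are actually consumed.

ConsumeMessageService is an interface with two main implementations, ConsumeMessageConcurrentlyService and ConsumeMessageOrderlyService, which handle concurrent messages and ordered messages respectively. Let's go through them one at a time.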

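1. Concurrent messages (ConsumeMessageConcurrentlyService.submitConsumeRequest)

The messages are passed in together with processQueue. The batch size used here is consumeMessageBatchMaxSize (1 by default; not to be confused with pullBatchSize, whose default is 32). If the number of messages is at most this batch size, they are wrapped in a single ConsumeRequest and submitted directly to the consumer's thread pool. Otherwise they are split into pages of consumeMessageBatchMaxSize messages each, and each page is submitted to the thread pool as its own ConsumeRequest.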
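The paging step can be sketched as a plain helper that splits the pulled list into sublists of at most consumeBatchSize messages, one sublist per ConsumeRequest. splitIntoBatches is a hypothetical name: in RocketMQ the loop is written inline in submitConsumeRequest, and each page is wrapped in a ConsumeRequest and submitted to the consume executor rather than returned.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the paging loop in ConsumeMessageConcurrentlyService.submitConsumeRequest.
final class BatchSplitSketch {

    static <T> List<List<T>> splitIntoBatches(List<T> msgs, int consumeBatchSize) {
        if (consumeBatchSize <= 0) {
            throw new IllegalArgumentException("consumeBatchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        if (msgs.size() <= consumeBatchSize) {
            batches.add(msgs);                                       // small pull: one ConsumeRequest
            return batches;
        }
        for (int from = 0; from < msgs.size(); from += consumeBatchSize) {
            int to = Math.min(from + consumeBatchSize, msgs.size());
            batches.add(new ArrayList<>(msgs.subList(from, to)));    // one ConsumeRequest per page
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> pulled = new ArrayList<>();
        for (int i = 0; i < 7; i++) {
            pulled.add(i);
        }
        System.out.println(splitIntoBatches(pulled, 3));        // [[0, 1, 2], [3, 4, 5], [6]]
        System.out.println(splitIntoBatches(pulled, 1).size()); // 7: batch size 1 means one request per message
    }
}
```

With the default batch size of 1, every pulled message becomes its own ConsumeRequest, which is what lets the consume thread pool process the messages of one pull in parallel.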

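Next, look at the run() method of ConsumeRequest, an inner class of ConsumeMessageConcurrentlyService. If processQueue has been dropped, run() returns right away, since all the messages live in that queue. Otherwise it goes on to hand the messages to the consume listener.

If consume hooks were registered earlier, their methods are executed next.

The messages are then passed to the listener (the MessageListenerConcurrently registered by the user) for further processing.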
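Putting these run() steps together, a simplified sketch of the concurrent flow looks like the following. Listener, Hook, Status and the AtomicBoolean "dropped" flag are hypothetical stand-ins for MessageListenerConcurrently, ConsumeMessageHook, ConsumeConcurrentlyStatus and ProcessQueue.isDropped(); the real method also builds a consume context, measures consume time, and then processes the result (for example, sending failed messages back for retry), all of which is left out here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical, simplified version of the concurrent ConsumeRequest.run() flow.
final class ConsumeRequestSketch implements Runnable {

    enum Status { CONSUME_SUCCESS, RECONSUME_LATER }

    interface Listener {
        Status consume(List<String> msgs);
    }

    interface Hook {
        void before(List<String> msgs);
        void after(List<String> msgs, Status status);
    }

    private final List<String> msgs;
    private final AtomicBoolean processQueueDropped;   // stands in for processQueue.isDropped()
    private final Listener listener;
    private final List<Hook> hooks;
    Status result;                                     // stays null if the request was skipped

    ConsumeRequestSketch(List<String> msgs, AtomicBoolean processQueueDropped,
                         Listener listener, List<Hook> hooks) {
        this.msgs = msgs;
        this.processQueueDropped = processQueueDropped;
        this.listener = listener;
        this.hooks = hooks;
    }

    @Override
    public void run() {
        if (processQueueDropped.get()) {
            return;                                    // queue dropped: its messages are no longer ours
        }
        for (Hook h : hooks) {
            h.before(msgs);                            // hooks run before the listener
        }
        Status status;
        try {
            status = listener.consume(msgs);           // user code
        } catch (RuntimeException e) {
            status = null;
        }
        if (status == null) {
            status = Status.RECONSUME_LATER;           // exceptions and null results mean "retry later"
        }
        for (Hook h : hooks) {
            h.after(msgs, status);                     // and again after it
        }
        result = status;
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Hook hook = new Hook() {
            public void before(List<String> m) { log.add("before " + m.size()); }
            public void after(List<String> m, Status s) { log.add("after " + s); }
        };
        ConsumeRequestSketch request = new ConsumeRequestSketch(
                List.of("m1", "m2"), new AtomicBoolean(false),
                m -> { log.add("listener " + m); return Status.CONSUME_SUCCESS; },
                List.of(hook));
        request.run();
        System.out.println(log); // [before 2, listener [m1, m2], after CONSUME_SUCCESS]
    }
}
```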

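2. Ordered messages (ConsumeMessageOrderlyService.submitConsumeRequest)

Ordered consumption usually means partial (per-queue) ordering: the messages of a single queue are consumed in order. So there is no need to worry about the number of messages here: submitConsumeRequest does not page the messages but submits one ConsumeRequest for the processQueue and its message queue, and run() later takes messages out of processQueue in batches.

Next, look at the run() method of ConsumeRequest, here the inner class of ConsumeMessageOrderlyService. As before, if processQueue has been dropped, there is nothing more to do, since all the messages live in that queue.

Here is where ordered and concurrent messages differ: the ordered service takes a lock (one per message queue) around the processing flow, so the messages of a queue can only be consumed one after another. Apart from the lock, message handling follows the same flow as for concurrent messages.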
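The locking idea can be sketched with one lock object per message queue: different queues can still be consumed in parallel, but requests for the same queue take the next batch, in offset order, only while holding that queue's lock. The OrderlySketch class and its methods are hypothetical; they only mimic the role of the per-queue lock object that ConsumeMessageOrderlyService fetches from its MessageQueueLock, and they leave out the broker-side queue locking used in clustering mode. A TreeMap keyed by offset stands in for the messages held in processQueue.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of ordered consumption: one lock object per message queue.
final class OrderlySketch {

    private final ConcurrentMap<String, Object> locks = new ConcurrentHashMap<>();

    // Same queue key -> same lock object; different queues -> different locks.
    Object fetchLockObject(String queueKey) {
        return locks.computeIfAbsent(queueKey, k -> new Object());
    }

    // One consume request: while holding the queue's lock, take the next batch in offset
    // order and "consume" it by appending to consumed. processQueue and consumed must
    // only be touched under this queue's lock.
    void runOnce(String queueKey, TreeMap<Long, String> processQueue, int batchSize, List<String> consumed) {
        synchronized (fetchLockObject(queueKey)) {
            for (int i = 0; i < batchSize && !processQueue.isEmpty(); i++) {
                Map.Entry<Long, String> entry = processQueue.pollFirstEntry(); // smallest offset first
                consumed.add(entry.getValue());
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        OrderlySketch sketch = new OrderlySketch();
        String queueKey = "TopicTest@0";
        TreeMap<Long, String> processQueue = new TreeMap<>();
        for (long offset = 0; offset < 10; offset++) {
            processQueue.put(offset, "msg-" + offset);
        }
        List<String> consumed = new ArrayList<>();

        ExecutorService pool = Executors.newFixedThreadPool(4);
        for (int i = 0; i < 5; i++) {                      // 5 requests x 2 messages = all 10
            pool.execute(() -> sketch.runOnce(queueKey, processQueue, 2, consumed));
        }
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);

        synchronized (sketch.fetchLockObject(queueKey)) {
            System.out.println(consumed);                  // msg-0 ... msg-9 in offset order
        }
    }
}
```

Several threads still run requests for the same queue, but because each one takes the next batch only while holding that queue's lock, the output stays in offset order regardless of thread interleaving.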

————————————————

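Copyright notice: This is an original article by CSDN blogger 「请叫我小叶子」, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.

Original article: https://blog.csdn.net/xiaoye319/article/details/102953592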