OpenStack Mitaka Installation and Deployment


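The setup uses one controller node and one compute node. Both have two NICs: ens33 is the primary NIC, and ens36 needs no IP address.

| Hostname | NICs | NIC type | IP (ens33) |
|---|---|---|---|
| controller | ens33, ens36 | NAT, NAT | 192.168.115.10 |
| compute | ens33, ens36 | NAT, NAT | 192.168.115.20 |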

(1) Building the OpenStack package repository and installing the base services

1. Configure hostname resolution

[root@controller ~]# vim /etc/hosts

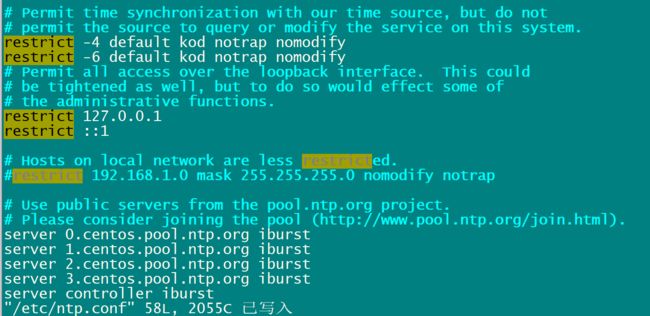
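
The screenshot above shows the edited /etc/hosts. As a minimal sketch, assuming the two hostnames and ens33 addresses listed at the top of this guide, the entries can be appended idempotently. `HOSTS_FILE` and `add_host` are illustrative names, and the default target is a scratch file so the sketch can be tried safely; set `HOSTS_FILE=/etc/hosts` on each node to apply it for real.

```shell
#!/bin/sh
# Sketch: append name-resolution entries only if they are not present yet.
# HOSTS_FILE and add_host are illustrative names, not part of the original guide.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    ip="$1"; name="$2"
    touch "$HOSTS_FILE"
    # -x matches the whole line, -F treats the entry as a literal string
    grep -qxF "$ip $name" "$HOSTS_FILE" || echo "$ip $name" >> "$HOSTS_FILE"
}

add_host 192.168.115.10 controller
add_host 192.168.115.20 compute
cat "$HOSTS_FILE"
```

Running the script a second time leaves the file unchanged, which makes it safe to reuse on both nodes.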

2. Configure time synchronization (here I use the stock ntpd service)

controller:

compute:

Start the NTP service and enable it at boot:

systemctl enable --now ntpd

Verify that time is synchronized

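3. Configure the yum repositories

Extract the archive from this network drive and move it to /opt. Link: https://pan.baidu.com/s/1hseqXZe Password: fi07.

1. mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup (back up the original repo file by renaming it)

2. wget -P /etc/yum.repos.d/ http://mirrors.163.com/.help/CentOS7-Base-163.repo (download the matching mirror repo file)

3. yum -y install epel-release (install the EPEL repository)

4. tar -zxvf mitake.tar.gz (extract the archive into the current directory)

5. mv openstack-mitaka /opt (move the extracted directory into /opt)

6. vim /etc/yum.repos.d/rdo-mitaka-testing.repo (create a new repo file in /etc/yum.repos.d/ to serve as the package source)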
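
Step 6 does not show the contents of the repo file. The following is only a sketch of what such a file could look like, assuming the extracted tree under /opt/openstack-mitaka is a complete yum repository (that is, it contains a repodata/ directory). The repo id, the name, and `gpgcheck=0` are assumptions, and the file is written to a scratch directory by default.

```shell
#!/bin/sh
# Sketch: write a local yum repo definition for the extracted Mitaka packages.
# Assumptions (not from the original guide): the repo id/name, gpgcheck=0, and
# that /opt/openstack-mitaka contains a repodata/ directory.
REPO_DIR="${REPO_DIR:-$(mktemp -d)}"    # use /etc/yum.repos.d on a real node
REPO_FILE="$REPO_DIR/rdo-mitaka-testing.repo"

cat > "$REPO_FILE" <<'EOF'
[openstack-mitaka]
name=OpenStack Mitaka (local)
baseurl=file:///opt/openstack-mitaka
enabled=1
gpgcheck=0
EOF

cat "$REPO_FILE"
```

After the file is in place under /etc/yum.repos.d, `yum clean all` followed by `yum makecache` refreshes the repository metadata.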

4. Install the OpenStack Python client

[root@controller ~]# yum install python-openstackclient -y

5. Install the MariaDB database

[root@controller ~]# yum install -y mariadb mariadb-server python2-PyMySQL

Create and edit /etc/my.cnf.d/openstack.cnf

[root@controller ~]# cat /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 192.168.115.10 # IP address MariaDB listens on

default-storage-engine = innodb # use InnoDB as the default storage engine

innodb_file_per_table = 1

max_connections = 1000 # maximum number of database connections

collation-server = utf8_general_ci # database collation

character-set-server = utf8 # database character set

Finish the installation, start MariaDB, and enable it at boot

[root@controller ~]# systemctl enable --now mariadb

Secure the database installation

[root@controller ~]# mysql_secure_installation

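6. Install the RabbitMQ message queue, which OpenStack's internal components use to communicate with one another

[root@controller ~]# yum install -y rabbitmq-server

Start the rabbitmq service and enable it at boot

[root@controller ~]# systemctl enable --now rabbitmq-server

To secure the message queue, create an openstack user. Any component that uses the queue to talk to other components must then authenticate as this user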

[root@controller ~]# rabbitmqctl add_user openstack redhat

Creating user "openstack" ...

Set permissions for the openstack user, granting it configure, write, and read access to all resources

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

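7. Install memcached, used mainly by Keystone to store user token information for session persistence

[root@controller ~]# yum install -y memcached python-memcached

[root@controller ~]# systemctl enable --now memcached.service

(2) Install and deploy Keystone on the controller node

1. Create the keystone database and grant privileges to its user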

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT all ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON keystone.* TO 'keystone'@'controller' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

2. Generate a random value to use as the administration token during initial configuration

[root@controller ~]# openssl rand -hex 10
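
Because the token is needed again in later steps, it can help to capture it once instead of copying it by hand. This is a minimal sketch; `gen_token` and `ADMIN_TOKEN` are illustrative names, and the `/dev/urandom` fallback is an assumption for systems without openssl.

```shell
#!/bin/sh
# Sketch: keep the generated token in a variable so later steps can reuse it.
# gen_token and ADMIN_TOKEN are illustrative names.
gen_token() {
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex 10            # 10 random bytes -> 20 hex characters
    else
        # Fallback when openssl is missing: read 10 bytes from the kernel RNG
        od -An -N10 -tx1 /dev/urandom | tr -d ' \n'
        echo
    fi
}

ADMIN_TOKEN="$(gen_token)"
echo "admin token: $ADMIN_TOKEN"
```

The variable can then be passed wherever the guide asks for the generated token value.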

3. Install the packages Keystone requires

[root@controller ~]# yum install -y openstack-keystone httpd mod_wsgi

4. Edit the Keystone configuration file

1. cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

2. grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

3. openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token ADMIN_TOKEN (replace ADMIN_TOKEN with the token generated above)

4. openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:redhat@controller/keystone

5. openstack-config --set /etc/keystone/keystone.conf token provider fernet

5. Initialize the Identity service database

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone
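
A note on the comment-stripping filter from step 4: `grep -Ev '^$|#'` drops blank lines and every line that contains a `#` anywhere, so a setting with a trailing comment would be removed too. The sketch below compares it with a stricter pattern on a made-up sample file (the sample contents are hypothetical). For a stock configuration file whose comments sit on their own lines, both filters should typically give the same result.

```shell
#!/bin/sh
# Sketch: compare the guide's filter with a stricter one on a made-up sample file.
SAMPLE="$(mktemp)"
cat > "$SAMPLE" <<'EOF'
[DEFAULT]
# a comment-only line

#admin_token = <None>
debug = false  # inline comment
EOF

# Filter used in the guide: drops blank lines and ANY line containing '#'
loose="$(grep -Ev '^$|#' "$SAMPLE")"

# Stricter variant: drops only blank lines and lines that start with '#'
strict="$(grep -Ev '^[[:space:]]*(#|$)' "$SAMPLE")"

printf 'loose:\n%s\n\nstrict:\n%s\n' "$loose" "$strict"
```

With this sample, the loose filter keeps only the section header, while the strict one also keeps the `debug` line.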

6. Initialize the Fernet keys

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

7. Configure the httpd virtual hosts that serve the Identity API

echo "ServerName controller" >>/etc/httpd/conf/httpd.conf

echo 'Listen 5000
Listen 35357

<VirtualHost *:5000>
    WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
    WSGIProcessGroup keystone-public
    WSGIScriptAlias / /usr/bin/keystone-wsgi-public
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    ErrorLogFormat "%{cu}t %M"
    ErrorLog /var/log/httpd/keystone-error.log
    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>
        Require all granted
    </Directory>
</VirtualHost>

<VirtualHost *:35357>
    WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}
    WSGIProcessGroup keystone-admin
    WSGIScriptAlias / /usr/bin/keystone-wsgi-admin
    WSGIApplicationGroup %{GLOBAL}
    WSGIPassAuthorization On
    ErrorLogFormat "%{cu}t %M"
    ErrorLog /var/log/httpd/keystone-error.log
    CustomLog /var/log/httpd/keystone-access.log combined

    <Directory /usr/bin>
        Require all granted
    </Directory>
</VirtualHost>
' > /etc/httpd/conf.d/wsgi-keystone.conf

[root@controller ~]# systemctl enable --now httpd

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

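8. Define environment variables that hold the Keystone token and endpoint (replace ADMIN_TOKEN with the token generated above)

export OS_TOKEN=ADMIN_TOKEN

export OS_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

9. Create the service entity and API endpoints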

openstack service create \

--name keystone --description "OpenStack Identity" identity

openstack endpoint create --region RegionOne \

identity public http://controller:5000/v3

openstack endpoint create --region RegionOne \

identity internal http://controller:5000/v3

openstack endpoint create --region RegionOne \

identity admin http://controller:35357/v3

Verify the result

[root@controller ~]# openstack endpoint list

+----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------+

| ID                               | Region    | Service Name | Service Type | Enabled | Interface | URL                       |

+----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------+

| 0b0e7ac7f19a465cbeecd9dcb1298830 | RegionOne | keystone     | identity     | True    | admin     | http://controller:5000/v3 |

| 85de47fcf5044742b7290cd41bff531f | RegionOne | keystone     | identity     | True    | public    | http://controller:5000/v3 |

| ea7d565a57334a838a87e421545e9be2 | RegionOne | keystone     | identity     | True    | internal  | http://controller:5000/v3 |

+----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------+

10. Create the domain, projects, users, and roles

openstack domain create --description "Default Domain" default

openstack project create --domain default \

--description "Admin Project" admin

openstack user create --domain default \

--password redhat admin

openstack role create admin

openstack role add --project admin --user admin admin

openstack project create --domain default \

--description "Service Project" service

openstack project create --domain default \

--description "Demo Project" demo

openstack user create --domain default \

--password redhat demo

openstack role create user

openstack role add --project demo --user demo user

Verify the projects, users, and roles created above

[root@controller ~]# openstack project list

+----------------------------------+---------+

| ID | Name |

+----------------------------------+---------+

| 0171ec74e07a48948e3e871bef1327fc | demo |

| 6b1f664532184e829034be563be313b6 | admin |

| a2dd0c374a364d8c90791fe282b1eb4f | service |

+----------------------------------+---------+

[root@controller ~]# openstack user list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| 24968e3a6bd644a69ee058d5c7d55eed | demo |

| 2aa2c376deb6491caafd75e961825ad4 | admin |

+----------------------------------+-------+

[root@controller ~]# openstack role list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| 6a44426bbb41475ea3d4f02197e093a8 | user |

| fa9b6d9a266c49ff9dd8311eee6bd169 | admin |

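+----------------------------------+-------+

11. Disable the temporary token authentication mechanism for security

Edit /etc/keystone/keystone-paste.ini and remove admin_token_auth from the [pipeline:public_api], [pipeline:admin_api], and [pipeline:api_v3] sections.

12. Unset the OS_TOKEN and OS_URL environment variables: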

[root@controller ~]# unset OS_TOKEN OS_URL
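
The removal of admin_token_auth from keystone-paste.ini described above can also be scripted rather than done by hand. The following is a sketch under assumptions: the sample file is an abbreviated, made-up stand-in for the real keystone-paste.ini, and the edit is applied to that scratch copy. On a real node, only the `cp` and `sed` lines would be run against /etc/keystone/keystone-paste.ini.

```shell
#!/bin/sh
# Sketch: strip admin_token_auth from the pipeline lines of a paste config.
# The sample below is an abbreviated, made-up stand-in for keystone-paste.ini.
PASTE_INI="$(mktemp)"
cat > "$PASTE_INI" <<'EOF'
[pipeline:public_api]
pipeline = cors sizelimit admin_token_auth token_auth public_service
[pipeline:admin_api]
pipeline = cors sizelimit admin_token_auth token_auth admin_service
[pipeline:api_v3]
pipeline = cors sizelimit admin_token_auth token_auth service_v3
EOF

# Remove the filter name only on "pipeline =" lines, keeping a backup copy
cp "$PASTE_INI" "$PASTE_INI.bak"
sed '/^pipeline[[:space:]]*=/s/ admin_token_auth//' "$PASTE_INI.bak" > "$PASTE_INI"

# Count the remaining occurrences (grep exits non-zero when there are none)
grep -c admin_token_auth "$PASTE_INI" || true
```

Restricting the substitution to `pipeline =` lines leaves any `[filter:admin_token_auth]` definition untouched, so the change is easy to revert from the backup.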

13. Create the OpenStack client environment scripts

echo 'export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=redhat

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2' >admin-openrc

echo 'export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=redhat

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2' >demo-openrc

Install and deploy the Glance image service, which provides images

1. Prepare the database

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT all ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON glance.* TO 'glance'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

2. Create the glance user, grant it the admin role, and create the glance service and its endpoints

openstack user create --domain default --password redhat glance

openstack role add --project service --user glance admin

openstack service create --name glance \

--description "OpenStack Image" image

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292

3. Install the Glance package

[root@controller ~]# yum install -y openstack-glance
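
Every service in this guide registers the same URL on the public, internal, and admin interfaces, as in step 2 above. A small dry-run helper can print those three commands for review before they are run. This is a sketch; `endpoint_cmds` is an illustrative name, and nothing is sent to Keystone.

```shell
#!/bin/sh
# Sketch: print the three endpoint-create commands for one service (dry run).
# endpoint_cmds is an illustrative helper; nothing is sent to Keystone.
endpoint_cmds() {
    service_type="$1"; url="$2"
    for iface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $service_type $iface $url"
    done
}

endpoint_cmds image http://controller:9292
```

The printed lines can be pasted into a shell where admin-openrc has been sourced. URLs that contain `%(tenant_id)s` need their parentheses escaped or quoted before being executed.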

4. Edit the glance-api configuration file

[root@controller ~]# vim /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:redhat@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = redhat

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

5. Edit the glance-registry.conf file

[root@controller ~]# vim /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:redhat@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = redhat

[paste_deploy]

flavor = keystone

6. Synchronize the database

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

7. Start the Glance services

[root@controller ~]# systemctl enable openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl start openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl is-active openstack-glance-api.service openstack-glance-registry.service

active

active

[root@controller ~]# ss -antp | grep glance

LISTEN 0 128 *:9191 *:* users:(("glance-registry",pid=429,fd=4),("glance-registry",pid=412,fd=4))

LISTEN 0 128 *:9292 *:* users:(("glance-api",pid=430,fd=4),("glance-api",pid=411,fd=4))

8. Download an image and upload it to Glance

[root@controller ~]# source admin-openrc

[root@controller ~]# wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

[root@controller ~]# du -sh cirros-0.3.4-x86_64-disk.img

13M cirros-0.3.4-x86_64-disk.img

[root@controller ~]# openstack image create "cirros-0.3.4" \

> --file cirros-0.3.4-x86_64-disk.img \

> --disk-format qcow2 --container-format bare \

> --public

[root@controller ~]# openstack image list

Install and deploy the Nova compute service

(1) Install Nova on the controller node

1. Create the databases

MariaDB [(none)]> CREATE DATABASE nova_api;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> CREATE DATABASE nova;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT all ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON nova.* TO 'nova'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

2. Create the nova user, the nova service, and the nova service endpoints

openstack user create --domain default \

--password redhat nova

openstack role add --project service --user nova admin

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s

3. Install the Nova packages

[root@controller ~]# yum install -y openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler

4. Edit the Nova configuration file

[root@controller ~]# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis=osapi_compute,metadata

rpc_backend=rabbit

auth_strategy=keystone

my_ip = 192.168.115.10

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:redhat@controller/nova_api

[database]

connection = mysql+pymysql://nova:redhat@controller/nova

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = redhat

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = redhat

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

5. Synchronize the databases

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

6. Start the Nova services

[root@controller ~]# systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

[root@controller ~]# systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

[root@controller ~]# systemctl is-active openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

active

active

active

active

active

(2) Install Nova on the compute node

1. Install the package

[root@compute ~]# yum install -y openstack-nova-compute

2. Edit the Nova configuration file

[root@compute ~]# vim /etc/nova/nova.conf

[DEFAULT]

rpc_backend=rabbit

auth_strategy=keystone

my_ip=192.168.115.20

use_neutron=true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = redhat

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = redhat

[vnc]

enabled = true

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers=http://controller:9292

[oslo_concurrency]

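lock_path=/var/lib/nova/tmp

3. Set the virtualization type used for instances (QEMU here, because the compute node is itself a virtual machine)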

[root@compute ~]# openstack-config --set /etc/nova/nova.conf libvirt virt_type qemu
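
Whether `qemu` or `kvm` is the right value depends on whether the compute node's CPU exposes hardware virtualization. As a sketch, the choice can be derived from the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags. `pick_virt_type` is an illustrative helper name; it accepts a cpuinfo-style file so it can be tried on sample data.

```shell
#!/bin/sh
# Sketch: choose libvirt virt_type from the CPU flags.
# pick_virt_type is an illustrative helper; it reads a cpuinfo-style file.
pick_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # vmx = Intel VT-x, svm = AMD-V; no match means no hardware acceleration
    if grep -Eqw 'vmx|svm' "$cpuinfo" 2>/dev/null; then
        echo kvm
    else
        echo qemu
    fi
}

echo "suggested virt_type: $(pick_virt_type)"
```

The result can be fed into the command above, for example `openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)"`.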

4. Start the compute node services

[root@compute ~]# systemctl enable libvirtd openstack-nova-compute.service

[root@compute ~]# systemctl start libvirtd openstack-nova-compute.service

[root@compute ~]# systemctl is-active libvirtd openstack-nova-compute.service

active

active

5. Verify that the compute service works by running the following command on the controller node

[root@controller ~]# openstack compute service list

Install and deploy the Neutron networking service

(1) Install Neutron on the controller node

1. Create the neutron database

MariaDB [(none)]> CREATE DATABASE neutron;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT all ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

2. Create the neutron user, grant it the admin role, and create the neutron service and its endpoints

openstack user create --domain default --password redhat neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

3. Install the Neutron packages

[root@controller ~]# yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

4. Edit the Neutron configuration file

[root@controller ~]# vim /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:redhat@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

rpc_backend = rabbit

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = redhat

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = redhat

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = redhat

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

5. Configure the Modular Layer 2 (ML2) plug-in

[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

6. Configure the Linux bridge agent

[root@controller ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.115.10

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

7. Configure the Layer 3 agent, which provides routing and NAT

[root@controller ~]# vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

external_network_bridge =

8. Configure the DHCP agent

[root@controller ~]# vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

9. Configure the metadata agent

[root@controller ~]# vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = redhat

10. Edit the Nova configuration file

[root@controller ~]# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = redhat

service_metadata_proxy = true

metadata_proxy_shared_secret = redhat

11. Create a symbolic link to the ML2 plug-in configuration file

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

12. Synchronize the database

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

13. Start the related services

[root@controller ~]# systemctl restart openstack-nova-api.service

[root@controller ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

[root@controller ~]# systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

[root@controller ~]# systemctl is-active neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

active

active

active

active

active

(2) Install Neutron on the compute node

1. Install the packages

[root@compute ~]# yum install -y openstack-neutron-linuxbridge ebtables ipset

2. Edit the Neutron configuration file

[root@compute ~]# vim /etc/neutron/neutron.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = redhat

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = redhat

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

3. Configure the networking options

[root@compute ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.115.20

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

4. Edit the Nova configuration file

[root@compute ~]# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = redhat

5. Start the services

[root@compute ~]# systemctl restart openstack-nova-compute.service

[root@compute ~]# systemctl enable neutron-linuxbridge-agent.service

[root@compute ~]# systemctl start neutron-linuxbridge-agent.service

[root@compute ~]# systemctl is-active neutron-linuxbridge-agent.service

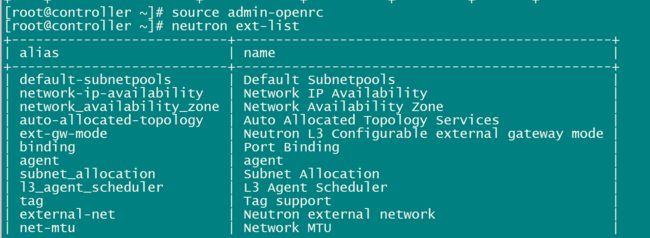

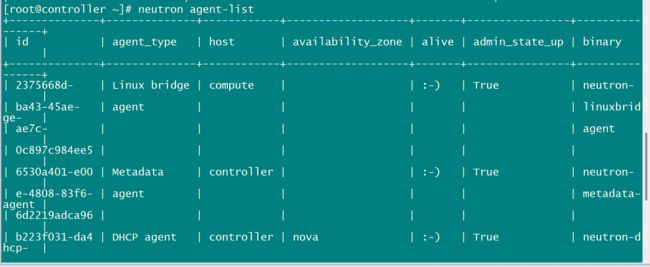

active在控制节点验证neutron配置

安装配置DashBoard

1、安装dashboard软件

[root@controller ~]# yum install -y openstack-dashboard

2.编辑dashboard配置文件

[root@controller ~]# vim /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

},

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'default'

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

TIME_ZONE = "UTC"

3. Edit the memcached configuration file to set the listening IP address

[root@controller ~]# cat /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS="-l 192.168.115.10,::1"

4. Restart the httpd and memcached services

[root@controller ~]# systemctl restart httpd memcached

Open http://192.168.115.10/dashboard in a browser

Install and deploy Cinder to provide the block storage service

1. Create the cinder database

MariaDB [(none)]> CREATE DATABASE cinder;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT all ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON cinder.* TO 'cinder'@'controller' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT all ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'redhat';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

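Query OK, 0 rows affected (0.00 sec)

2. Create the cinder user, the cinder services, and their endpoints

[root@controller ~]# openstack user create --domain default --password redhat cinder

[root@controller ~]# openstack role add --project service --user cinder admin

[root@controller ~]# openstack service create --name cinder \

> --description "OpenStack Block Storage" volume

[root@controller ~]# openstack service create --name cinderv2 \

> --description "OpenStack Block Storage" volumev2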

[root@controller ~]# openstack endpoint create --region RegionOne volume public http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne volume internal http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne volume admin http://controller:8776/v1/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

3. Install the Cinder package

[root@controller ~]# yum install -y openstack-cinder

4. Edit the Cinder configuration file

[root@controller ~]# vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:redhat@controller/cinder

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 192.168.115.10

[oslo_messaging_rabbit]

...

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = redhat

[keystone_authtoken]

...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = redhat

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

5. Initialize the cinder database

[root@controller ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

6. Configure Nova to use Cinder for instance block storage, then restart the Nova services

[root@controller ~]# vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

7. Start the Cinder services

[root@controller ~]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

[root@controller ~]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service
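
The database preparation step has the same shape for every service in this guide (keystone, glance, nova, neutron, cinder): create the database, grant access from localhost and from any host, then flush privileges. As a sketch, a helper can print those statements for review. `db_bootstrap_sql` is an illustrative name, and it only prints SQL.

```shell
#!/bin/sh
# Sketch: print the database bootstrap SQL used for each service in this guide.
# db_bootstrap_sql is an illustrative helper; it only prints statements.
db_bootstrap_sql() {
    db="$1"; user="$2"; pass="$3"
    echo "CREATE DATABASE $db;"
    for host in localhost '%'; do
        echo "GRANT ALL ON $db.* TO '$user'@'$host' IDENTIFIED BY '$pass';"
    done
    echo "FLUSH PRIVILEGES;"
}

db_bootstrap_sql cinder cinder redhat
```

The output can be piped into the client, for example `db_bootstrap_sql cinder cinder redhat | mysql -u root -p`, once the statements look right.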

[root@controller ~]#