openai-gpt

“GPT-3 is not a mind, but it is also not entirely a machine. It’s something else: a statistically abstracted representation of the contents of millions of minds, as expressed in their writing.” — REGINA RINI, PHILOSOPHER

In recent years, the AI circus really has come to town and we’ve been treated to a veritable parade of technical aberrations seeking to dazzle us with their human-like intelligence. Many of these sideshows have been “embodied” AI, where the physical form usually functions as a cunning disguise for a clunky, pre-programmed bot. Like the world’s first “AI anchor”, launched by a Chinese TV network and — how could we ever forget — Sophia, Saudi Arabia’s first robotic citizen.

But last month there was a furore around something altogether more serious: a system The Verge called “an invention that could end up defining the decade to come.” Its name is GPT-3, and it could certainly make our future a lot more complicated.

So, what is all the fuss about? And how might this supposed tectonic shift in technological development change the lives of the rest of us?

An Autocomplete For Thought

The GPT-3 software was built by San Francisco-based OpenAI, and The New York Times has described it as “…by far the most powerful ‘language model’ ever created,” adding:

A language model is an artificial intelligence system that has been trained on an enormous corpus of text; with enough text and enough processing, the machine begins to learn probabilistic connections between words. More plainly: GPT-3 can read and write. And not badly, either…GPT-3 is capable of generating entirely original, coherent and sometimes even factual prose. And not just prose — it can write poetry, dialogue, memes, computer code and who knows what else. — Farhad Manjoo, New York Times

In this case, “enormous” is something of an understatement. Reportedly, the entirety of the English Wikipedia — spanning some 6 million articles — makes up just 0.6 percent of GPT-3’s training data.

In layman’s terms, it is a giant autocomplete program, one that has feasted on the vast texts of the internet: digital books, articles, religious texts, science lectures, message boards, blogs, computing manuals, and just about anything else you could conceive of. Because it can cleverly spot patterns and consistencies in all of this, it can perform a mind-boggling array of tasks.
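To make the autocomplete comparison a little more concrete, here is a minimal sketch in Python of the simplest version of the idea: count which word tends to follow which, then keep suggesting the most likely next word. This is a toy word-pair counter, not how GPT-3 itself is built (GPT-3 is a vastly larger neural network), and the tiny corpus and the function names are made up purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower())
    if not counts:
        return {}
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def autocomplete(model, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most likely next word."""
    words = prompt.lower().split()
    if not words:
        return prompt
    for _ in range(max_words):
        counts = model.get(words[-1])
        if not counts:
            break  # the last word was never followed by anything in training
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus; a real language model trains on billions of words.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "the"))
print(autocomplete(model, "the", max_words=3))
```

In this toy corpus, "the" is followed by "cat" half of the time, so the sketch completes "the" as "the cat sat on". Scale the same intuition up to a sizeable slice of the internet, and swap the lookup table for a neural network that weighs far more context than the single previous word, and you have the rough shape of what the article is describing.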

GPT-3 has been down the familiar Wikipedia rabbit hole and out the other side…

Much of the hullabaloo around the system has been fueled by the sorts of things it has already been used to create, from a chatbot that allows you to converse with historical figures to computer code, but its raw commercial potential is also turning heads.

An article in TechRepublic spoke to the “enticing possibilities” for a number of industries, including the potential for corporate multinationals and media firms to open up access to fresh new audiences in foreign countries using GPT-3 to localize and translate their texts into “virtually any language.”

AI-nxiety

Indeed, GPT-3 has been performing tasks with such deftness that it has caused some spectators to question whether, in fact, we are seeing AI’s first Bambi-esque steps into the world of artificial general intelligence. In other words, is this system the first that could replicate “true” natural, human intelligence?

And, if it is, what does that mean for little old us?

Philosopher Carlos Montemayor articulates our human fears:

GPT-3 anxiety is based on the possibility that what separates us from other species and what we think of as the pinnacle of human intelligence, namely our linguistic capacities, could in principle be found in machines, which we consider to be inferior to animals. — Carlos Montemayor, Philosopher

Nevertheless, it is clear that GPT-3’s impressive dexterity makes it, as David Chalmers has commented, “…instantly one of the most interesting and important AI systems ever produced.”

But while GPT-3 has been quick to impress us, it was also quick to demonstrate its dark side. Any system trained on such huge amounts of human data was always going to take on both the good and the bad that lies therein, as Facebook’s Jerome Pesenti discovered:

OpenAI’s Sam Altman responded to these horrifying results by announcing the company’s experimentation with “toxicity filters” to screen them out. But the possibility of such grotesque output (which was relatively easy to elicit) is not the only sizeable problem with this supposedly “all-knowing” AI.

Though success stories have managed to mostly drown them out, there are many examples of woeful inaccuracies and inconsistencies that are now fueling a counter-wave of caution from those who assert that such systems simply cannot be trusted.

Not fit for commercial use, is the message. Not for a long time.

The smart, clever, viral demonstrations of GPT-3 seeming to jump over Turing Test-style hurdles have been cherry-picked for oxygen and fleeting Twitter fame. The kind of dumb or incomprehensible output that is important for balance is, unfortunately, a lot less sexy.

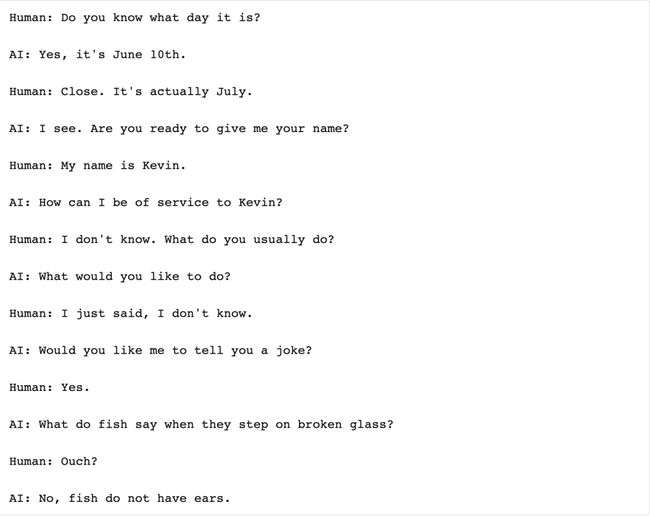

Conversation with GPT-3 from Kevin Lacker’s blog

New (Lower?) Standards

Errors, biases, and “silly mistakes” (to quote OpenAI’s Sam Altman himself) should be deeply concerning when encountered in a system with big ambitions, such as GPT-3. Yet they are not the only problems that will materialize.

Looking into the near-ish future, it is already being forecast that GPT-3 and its descendants will contribute to widespread global joblessness in fields like law, accountancy, and journalism. Other commentators are suggesting more nuanced implications, like the erosion of human standards for things like creativity and art, with the system already having amassed an enviable portfolio of creative fiction.

Dr. C. Thi Nguyen, a professor at the University of Utah, worries that algorithmically guided art-creation generally privileges, measures, and reproduces elements of what is popular — as understood through clicks, upvotes and likes. This means it can overlook art that has “profound artistic impact or depth of emotional investment”, failing to “see” valuable artistic qualities that aren’t so easily captured and quantified.

Consequently, evaluative standards for creation could become “thin” and basic, losing important nuance in the battle to make art something that can be interpreted and imitated roughly.

AI-generated artwork Edmond de Belamy

And if the power of GPT-3 threatens the integrity of artistic standards, it poses an even greater danger to communications. Even its own creators say that the system is capable of “misinformation, spam, phishing, abuse of legal and governmental processes, fraudulent academic essay writing and social engineering pretexting.”

In an age where fake news, deepfakes, and misinformation continue to (quite understandably) cause serious issues with public trust, yet another potent mechanism that facilitates deception and confusion could be the unwelcome herald of a new information dystopia.

The New York Times reported that OpenAI prohibits GPT-3 from impersonating humans, and that text produced by the software must disclose that it was written by a bot. But the genie is out of the bottle, and malicious actors aren’t known for paying heed to such rules once they have their hands on powerful technology…

Melancholy?

Given that GPT-3 gains strength and wins acclaim by feeding on the breadth and depth of human knowledge, it will be interesting to see how fascinating we find it in the long run. As its content mirrors our own with increasing accuracy, producing pitch-perfect text, how will we feel as humans?

Regina Rini speculates:

It’s marvelous. Then it’s mundane. And then it’s melancholy. Because eventually we will turn the interaction around and ask: what does it mean that other people online can’t distinguish you from a linguo-statistical firehose? What will it feel like — alienating? liberating? Annihilating?

Winston Churchill once said, “We shape our buildings; thereafter they shape us.” The quote is sometimes updated with “technology” exchanged for “buildings.” With GPT-3 we have a technology that could deliver new, world-changing conveniences by lifting away a great number of text-based chores. But in creating these “efficiencies”, we must wonder where this will leave us as a species that has depended upon its unique mastery of language for millennia.

How will GPT-3 and its ilk shape us? Does this even matter, so long as things get done?

In the end… what is all the fuss about?

Translated from: https://medium.com/swlh/gpt-3-what-is-all-the-fuss-about-3427befd19a1
