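Android Volume Adjustment

For Android volume adjustment, the following articles are useful references:

1. Analysis of the volume adjustment flow

Android Audio System (4): Volume Management in AudioService (renshuguo123723's blog, CSDN)

Analysis and Understanding of Audio in the Android Java Layer (2): Volume Control (u012440406's column, CSDN)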

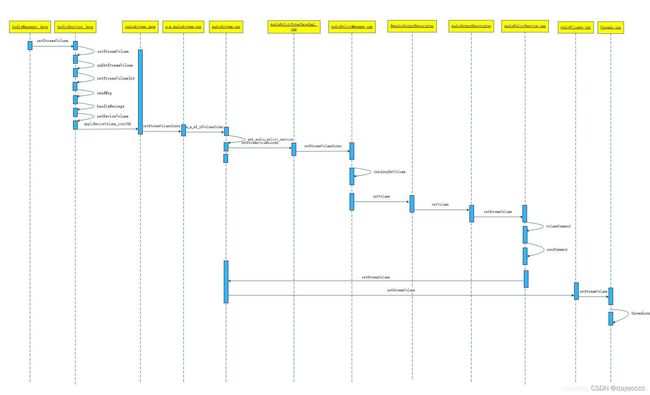

2. The setStreamVolume call flow in AudioManager

Android Audio: The setStreamVolume() Volume Adjustment Process (Good Good Study Day Day Up!!! blog, CSDN)

3. Flow chart and volume initialization

Android Audio (8): Volume (tudouhuashengmi's blog, CSDN)

4. AudioTrack volume

Android Volume Adjustment (renshuguo123723's blog, CSDN)

5. Saving the volume to the database

Android Audio Volume Persistence (xlnaan's blog, CSDN)

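Key points:

(1) alias: stream aliases

Android defines around ten stream types (the exact number depends on the version), but giving every stream its own volume slider would be impractical. Streams with the same function are therefore grouped together: each stream type is mapped to an alias stream, and all streams that share an alias share one volume setting.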
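To make the alias idea concrete, here is a minimal Java sketch. The constant values follow the classic AudioSystem stream types 0 to 9, and the alias table is a hypothetical example modeled on a typical phone configuration; a real device may use a different table (AudioService keeps its own in mStreamVolumeAlias). The helper streamsWithAlias() is an illustrative name, not an AudioService method.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch of stream aliasing; the alias table is an assumed example.
class StreamAliasDemo {
    static final int STREAM_VOICE_CALL = 0;
    static final int STREAM_SYSTEM = 1;
    static final int STREAM_RING = 2;
    static final int STREAM_MUSIC = 3;
    static final int STREAM_ALARM = 4;
    static final int STREAM_NOTIFICATION = 5;
    static final int STREAM_BLUETOOTH_SCO = 6;
    static final int STREAM_SYSTEM_ENFORCED = 7;
    static final int STREAM_DTMF = 8;
    static final int STREAM_TTS = 9;

    // ALIAS[streamType] = the stream whose volume slider controls streamType
    static final int[] ALIAS = {
        STREAM_VOICE_CALL,  // VOICE_CALL
        STREAM_RING,        // SYSTEM
        STREAM_RING,        // RING
        STREAM_MUSIC,       // MUSIC
        STREAM_ALARM,       // ALARM
        STREAM_RING,        // NOTIFICATION
        STREAM_VOICE_CALL,  // BLUETOOTH_SCO
        STREAM_RING,        // SYSTEM_ENFORCED
        STREAM_RING,        // DTMF
        STREAM_MUSIC,       // TTS
    };

    // Returns every stream type that shares the given alias, i.e. one volume slider.
    static List<Integer> streamsWithAlias(int alias) {
        List<Integer> result = new ArrayList<>();
        for (int stream = 0; stream < ALIAS.length; stream++) {
            if (ALIAS[stream] == alias) {
                result.add(stream);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println("RING slider controls streams " + streamsWithAlias(STREAM_RING));
    }
}
```

With this table, the RING slider controls SYSTEM, RING, NOTIFICATION, SYSTEM_ENFORCED and DTMF, so ten stream types collapse into four sliders.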

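(2) streamState

To manage this, AudioService provides the VolumeStreamState class and allocates one VolumeStreamState object per stream type; each object holds all the volume-related information for its stream type. The objects are stored in an array named mStreamStates, indexed by the stream type value.

For example:

a. Look up the state object of an alias stream and read its index for the device the stream is currently routed to:

```java
VolumeStreamState streamState = mStreamStates[streamTypeAlias];
final int device = getDeviceForStream(streamTypeAlias);
int aliasIndex = streamState.getIndex(device);
```

b. Read the current index of a stream type on a given device:

```java
int oldIndex = mStreamStates[streamType].getIndex(device);
```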
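The mStreamStates layout can be modeled with a small, hypothetical Java class: one state object per stream type, each holding a device-to-index map. The device constants are placeholders rather than the real AudioSystem.DEVICE_OUT_* values, and the fallback to the default device's index in getIndex() is an assumption about how a device without a stored value is handled.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model of VolumeStreamState storage; device values are placeholders.
class StreamStatesDemo {
    static final int DEVICE_OUT_DEFAULT = 0;        // placeholder value
    static final int DEVICE_OUT_SPEAKER = 1;        // placeholder value
    static final int DEVICE_OUT_WIRED_HEADSET = 2;  // placeholder value

    static class VolumeStreamState {
        final int streamType;
        // device -> volume index for this stream type
        final Map<Integer, Integer> indexMap = new HashMap<>();

        VolumeStreamState(int streamType, int defaultIndex) {
            this.streamType = streamType;
            indexMap.put(DEVICE_OUT_DEFAULT, defaultIndex);
        }

        boolean hasIndexForDevice(int device) {
            return indexMap.containsKey(device);
        }

        // Assumed behavior: fall back to the default device's index if none is stored.
        int getIndex(int device) {
            Integer index = indexMap.get(device);
            return index != null ? index : indexMap.get(DEVICE_OUT_DEFAULT);
        }

        void setIndex(int index, int device) {
            indexMap.put(device, index);
        }
    }

    // mStreamStates[streamType] holds the state object for that stream type.
    final VolumeStreamState[] mStreamStates;

    StreamStatesDemo(int numStreamTypes, int defaultIndex) {
        mStreamStates = new VolumeStreamState[numStreamTypes];
        for (int i = 0; i < numStreamTypes; i++) {
            mStreamStates[i] = new VolumeStreamState(i, defaultIndex);
        }
    }

    public static void main(String[] args) {
        StreamStatesDemo demo = new StreamStatesDemo(10, 50);
        demo.mStreamStates[3].setIndex(100, DEVICE_OUT_WIRED_HEADSET);
        System.out.println(demo.mStreamStates[3].getIndex(DEVICE_OUT_SPEAKER));        // 50
        System.out.println(demo.mStreamStates[3].getIndex(DEVICE_OUT_WIRED_HEADSET));  // 100
    }
}
```

The same stream type (index 3 here) ends up with one value for the headset and falls back to the default for the speaker, which is why the lookups above always pass a device.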

(3) adjustIndex

```java
public boolean adjustIndex(int deltaIndex, int device, String caller) {
    // Add the delta to the current index, then store the result via setIndex()
    return setIndex(getIndex(device) + deltaIndex, device, caller);
}

public boolean setIndex(int index, int device, String caller) {
    boolean changed = false;
    int oldIndex;
    synchronized (VolumeStreamState.class) {
        oldIndex = getIndex(device);
        index = getValidIndex(index);
        synchronized (mCameraSoundForced) {
            // When camera sound is forced, STREAM_SYSTEM_ENFORCED always uses the max index
            if ((mStreamType == AudioSystem.STREAM_SYSTEM_ENFORCED) && mCameraSoundForced) {
                index = mIndexMax;
            }
        }
        // Save the new index in a Map keyed by device
        mIndexMap.put(device, index);
        changed = oldIndex != index;
        // Also update every other stream type whose alias is this stream type
        final boolean currentDevice = (device == getDeviceForStream(mStreamType));
        final int numStreamTypes = AudioSystem.getNumStreamTypes();
        for (int streamType = numStreamTypes - 1; streamType >= 0; streamType--) {
            final VolumeStreamState aliasStreamState = mStreamStates[streamType];
            if (streamType != mStreamType &&
                    mStreamVolumeAlias[streamType] == mStreamType &&
                    (changed || !aliasStreamState.hasIndexForDevice(device))) {
                // Convert the index to the aliased stream's index range
                final int scaledIndex = rescaleIndex(index, mStreamType, streamType);
                aliasStreamState.setIndex(scaledIndex, device, caller);
                if (currentDevice) {
                    aliasStreamState.setIndex(scaledIndex,
                            getDeviceForStream(streamType), caller);
                }
            }
        }
    }
    if (changed) {
        // Internal indices are 10x the UI step: adding 5 before dividing by 10 rounds to the nearest step
        oldIndex = (oldIndex + 5) / 10;
        index = (index + 5) / 10;
        // Broadcast the volume change
        mVolumeChanged.putExtra(AudioManager.EXTRA_VOLUME_STREAM_VALUE, index);
        mVolumeChanged.putExtra(AudioManager.EXTRA_PREV_VOLUME_STREAM_VALUE, oldIndex);
        mVolumeChanged.putExtra(AudioManager.EXTRA_VOLUME_STREAM_TYPE_ALIAS,
                mStreamVolumeAlias[mStreamType]);
        sendBroadcastToAll(mVolumeChanged);
    }
    return changed;
}
```

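This function does two things:

1) It saves the new index in a map (mIndexMap) keyed by output device. Because the volume is tied to the device, the same stream type can have a different volume on each audio device.

2) It handles stream aliasing. If stream A is aliased to stream B (A→B), then setting B's volume must also change A's volume, with the index rescaled to A's range.

Apart from sending the broadcast, VolumeStreamState.adjustIndex() does nothing beyond updating the volume values it stores.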
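The two steps (store the index, then propagate it to aliased streams) can be sketched in plain Java. This is a simplified single-device model, not AudioService code: the rescaleIndex() formula (proportional scaling between the two streams' maximum indices, with rounding) is an assumption, and the example uses RING (7 steps, internal max 70) with DTMF (15 steps, internal max 150) aliased to it.

```java
// Hypothetical single-device sketch of setIndex() with alias propagation.
class AliasPropagationDemo {
    final int[] maxIndex;  // internal max index per stream type (UI max * 10)
    final int[] alias;     // alias[streamType] = stream type that controls it
    final int[] index;     // current index per stream type (one device only)

    AliasPropagationDemo(int[] maxIndex, int[] alias) {
        this.maxIndex = maxIndex;
        this.alias = alias;
        this.index = new int[maxIndex.length];
    }

    // Assumed rescaling: proportional to the max indices, rounded to nearest.
    int rescaleIndex(int idx, int srcStream, int dstStream) {
        return (idx * maxIndex[dstStream] + maxIndex[srcStream] / 2) / maxIndex[srcStream];
    }

    // Stores the clamped index and copies a rescaled value to every aliased stream.
    boolean setIndex(int streamType, int newIndex) {
        newIndex = Math.max(0, Math.min(newIndex, maxIndex[streamType]));  // like getValidIndex()
        boolean changed = index[streamType] != newIndex;
        index[streamType] = newIndex;
        if (changed) {
            for (int other = 0; other < index.length; other++) {
                if (other != streamType && alias[other] == streamType) {
                    index[other] = rescaleIndex(newIndex, streamType, other);
                }
            }
        }
        return changed;
    }

    // Mirrors adjustIndex(): current index plus delta, then setIndex().
    boolean adjustIndex(int streamType, int deltaIndex) {
        return setIndex(streamType, index[streamType] + deltaIndex);
    }

    public static void main(String[] args) {
        // stream 0 = RING (max 70), stream 1 = DTMF (max 150), DTMF aliased to RING
        AliasPropagationDemo demo = new AliasPropagationDemo(new int[] {70, 150}, new int[] {0, 0});
        demo.setIndex(0, 35);
        System.out.println("RING=" + demo.index[0] + " DTMF=" + demo.index[1]);  // RING=35 DTMF=75
    }
}
```

Setting RING to half of its range moves DTMF to half of its own, larger range; the real code additionally tracks a separate index per device.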

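(4) Saving the volume to the database

persistVolume() writes each stream/device index to the Settings database; SettingsProvider keeps these values in data/system/users/userid/settings_system.xml.

```java
private void persistVolume(VolumeStreamState streamState, int device) {
    if (mUseFixedVolume) {
        return;  // fixed-volume devices do not persist volume
    }
    if (mIsSingleVolume && (streamState.mStreamType != AudioSystem.STREAM_MUSIC)) {
        return;  // single-volume devices only persist STREAM_MUSIC
    }
    if (streamState.hasValidSettingsName()) {
        // Write the volume to SettingsProvider, converting the internal x10 index to the UI index
        System.putIntForUser(mContentResolver,
                streamState.getSettingNameForDevice(device),
                (streamState.getIndex(device) + 5) / 10,
                UserHandle.USER_CURRENT);
    }
}
```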

Reading a device's volume back from the database:

```java
public void readSettings() {
    // Take the lock first to avoid races
    synchronized (VolumeStreamState.class) {
        // ...
        String name = getSettingNameForDevice(device);
        // Only DEVICE_OUT_DEFAULT gets a built-in default; other devices default to -1 (not set)
        int defaultIndex = (device == AudioSystem.DEVICE_OUT_DEFAULT) ?
                AudioSystem.DEFAULT_STREAM_VOLUME[mStreamType] : -1;
        // Read the value from the Settings database
        int index = Settings.System.getIntForUser(
                mContentResolver, name, defaultIndex, UserHandle.USER_CURRENT);
        // ...
    }
}
```
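The round trip between the internal index (10 times the UI step) and the value stored in the database can be sketched as follows. A HashMap stands in for Settings.System, the setting-name format "<stream>_<device>" (for example "volume_music_speaker") is an assumption for illustration, and persist(), read() and settingName() are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of persisting and reading volume indices.
class VolumePersistenceDemo {
    // In-memory stand-in for Settings.System (assumption)
    final Map<String, Integer> settings = new HashMap<>();

    static String settingName(String streamSetting, String deviceName) {
        return streamSetting + "_" + deviceName;
    }

    // Like persistVolume(): store the UI index, rounding the internal x10 index.
    void persist(String name, int internalIndex) {
        settings.put(name, (internalIndex + 5) / 10);
    }

    // Like readSettings(): read the UI index (or the default) and scale it back up by 10.
    // Returns -1 when nothing is stored and the default is -1.
    int read(String name, int defaultUiIndex) {
        int uiIndex = settings.getOrDefault(name, defaultUiIndex);
        return uiIndex == -1 ? -1 : uiIndex * 10;
    }

    public static void main(String[] args) {
        VolumePersistenceDemo store = new VolumePersistenceDemo();
        String name = settingName("volume_music", "speaker");
        store.persist(name, 96);
        System.out.println(store.settings.get(name));              // 10
        System.out.println(store.read(name, -1));                  // 100
        System.out.println(store.read("volume_music_hdmi", -1));   // -1
    }
}
```

Note that the round trip is lossy by design: an internal index of 96 is stored as 10 and comes back as 100, so only whole UI steps survive a reboot.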

(5) The call flow of the setStreamVolume API is shown in the figure below:

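(6) Volume adjustment flow in the native layer

The volume has three parts: the master volume (the hardware volume, controlling the sound card), the stream volume, and the track volume (the app's volume).

The effective volume of an app's audio is:

app_mix = master_volume * stream_volume * track_volume;

where master_volume, stream_volume and track_volume are linear gains between 0 and 1, with 1 meaning maximum volume.

The maximum volume corresponds to 0 dB, meaning no attenuation: the output is at the level of the source audio.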
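A quick Java sketch of the formula above: the three factors multiply as linear gains, and converting the product with the standard amplitude formula 20 * log10(gain) shows why full volume is 0 dB and anything lower is a negative (attenuating) value. The class and method names are illustrative.

```java
// Illustrative sketch of app_mix = master_volume * stream_volume * track_volume.
class AppMixDemo {
    // All three inputs are linear gains in [0, 1].
    static float appMix(float masterVolume, float streamVolume, float trackVolume) {
        return masterVolume * streamVolume * trackVolume;
    }

    // Amplitude gain to decibels; gain 1.0 is 0 dB, gain 0 gives -Infinity (silence).
    static double gainToDb(double gain) {
        return 20.0 * Math.log10(gain);
    }

    public static void main(String[] args) {
        float gain = appMix(1.0f, 0.5f, 0.5f);
        System.out.println(gain);                           // 0.25
        System.out.printf("%.2f dB%n", gainToDb(gain));     // -12.04 dB
    }
}
```

Halving the stream volume and halving the track volume each cost about 6 dB, so together they attenuate the output by about 12 dB.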

The call chain is as follows (indentation shows nesting):

```cpp
// Java layer: AudioSystem.java
AudioSystem.setStreamVolumeIndex()

// AudioSystem.cpp
AudioSystem::setStreamVolumeIndex()

// AudioPolicyManager.cpp
AudioPolicyManager::setStreamVolumeIndex()
    for (size_t i = 0; i < mOutputs.size(); i++) {
        checkAndSetVolume();  // set the volume on each output
            float volumeDb = computeVolume(stream, index, device);  // convert the index to a dB value
                volumeDB = mVolumeCurves->volIndexToDb(stream, Volume::getDeviceCategory(device), index);
                    // VolumeCurve.cpp
                    VolumeCurve::volIndexToDb()  // compute the dB value from the volume curve
            outputDesc->setVolume(volumeDb, stream, device, delayMs, force);
                // AudioOutputDescriptor.cpp
                SwAudioOutputDescriptor::setVolume()
                    bool changed = AudioOutputDescriptor::setVolume(volume, stream, device, delayMs, force);
                        AudioOutputDescriptor::setVolume()
                            mCurVolume[stream] = volume;
                    mClientInterface->setStreamVolume(stream, volume, mIoHandle, delayMs);
    }

// AudioPolicyService.cpp
AudioPolicyService::setStreamVolume()
    mAudioCommandThread->volumeCommand();  // AudioPolicyService::AudioCommandThread::volumeCommand()
        sp<AudioCommand> command = new AudioCommand();
        command->mCommand = SET_VOLUME;
        sendCommand(command, delayMs);  // AudioPolicyService::AudioCommandThread::sendCommand()
            insertCommand_l(command, delayMs);  // queue the command; AudioCommandThread::threadLoop() executes it

// Executed in AudioPolicyService::AudioCommandThread::threadLoop():
AudioSystem::setStreamVolume();
    // AudioSystem.cpp
    af->setStreamVolume(stream, value, output);  // AudioFlinger::setStreamVolume()
        // AudioFlinger.cpp
        VolumeInterface *volumeInterface = getVolumeInterface_l(output);
        volumeInterface->setStreamVolume(stream, value);  // AudioFlinger::PlaybackThread::setStreamVolume()
            // Threads.cpp
            mStreamTypes[stream].volume = value;  // save the volume value
            broadcast_l();  // wake up the PlaybackThread

AudioFlinger::PlaybackThread::threadLoop()
    mMixerStatus = prepareTracks_l(&tracksToRemove);  // each Thread type implements prepareTracks_l differently
        AudioFlinger::PlaybackThread::mixer_state AudioFlinger::MixerThread::prepareTracks_l()
            // analyzed in the track volume section
            ......
```
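computeVolume() turns a volume index into decibels via a volume curve, and the stream volume that AudioFlinger multiplies in prepareTracks_l() is a linear gain. The Java sketch below illustrates both conversions under stated assumptions: the curve points are example values, the UI index is mapped linearly onto the curve's 0 to 100 axis with linear interpolation of dB between points, index 0 is treated as mute, and MUTE_DB is a placeholder. It is not the exact VolumeCurve::volIndexToDb() logic. The dB-to-linear step uses the standard relation gain = 10^(dB/20).

```java
// Simplified volume-curve sketch; curve points and mute handling are assumptions.
class VolumeCurveDemo {
    // {position on a 0..100 axis, attenuation in dB} (example values)
    static final double[][] CURVE = {
        {1, -58.0}, {20, -40.0}, {60, -17.0}, {100, 0.0}
    };
    static final double MUTE_DB = -758.0;  // placeholder "silence" level

    // Maps a UI index in [0, indexMax] to dB by piecewise-linear interpolation.
    static double volIndexToDb(int index, int indexMax) {
        if (index <= 0) {
            return MUTE_DB;  // index 0 treated as mute in this sketch
        }
        double pos = 100.0 * Math.min(index, indexMax) / indexMax;
        if (pos <= CURVE[0][0]) {
            return CURVE[0][1];
        }
        for (int i = 1; i < CURVE.length; i++) {
            if (pos <= CURVE[i][0]) {
                double x0 = CURVE[i - 1][0], y0 = CURVE[i - 1][1];
                double x1 = CURVE[i][0], y1 = CURVE[i][1];
                return y0 + (y1 - y0) * (pos - x0) / (x1 - x0);
            }
        }
        return CURVE[CURVE.length - 1][1];
    }

    // dB to linear amplitude gain: 10^(dB / 20); the mute level maps to 0.
    static double dbToAmpl(double db) {
        return db <= MUTE_DB ? 0.0 : Math.pow(10.0, db / 20.0);
    }

    public static void main(String[] args) {
        for (int index = 0; index <= 15; index += 5) {
            double db = volIndexToDb(index, 15);
            System.out.printf("index %2d -> %8.2f dB -> gain %.4f%n", index, db, dbToAmpl(db));
        }
    }
}
```

The curve is deliberately non-linear in index: the lower steps cover a wide dB range so each step sounds roughly equally loud to the ear.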

========================================================================================================

Track volume (the volume an app sets on its AudioTrack)

```cpp
// AudioTrack.cpp
AudioTrack::setVolume(float left, float right)
    // store the incoming values in the mVolume array
    mVolume[AUDIO_INTERLEAVE_LEFT] = left;
    mVolume[AUDIO_INTERLEAVE_RIGHT] = right;
    // pack the left and right channel gains into a single value
    mProxy->setVolumeLR(gain_minifloat_pack(gain_from_float(left), gain_from_float(right)));
        // AudioTrackShared.h
        // mCblk is the header (control block) of the shared memory, so the volume is stored there
        mCblk->mVolumeLR = volumeLR;
```
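The packing done by setVolumeLR() can be illustrated in Java. Assumption: a simple unsigned 4.12 fixed-point format (0x1000 = gain 1.0) replaces the real gain_minifloat encoding, so only the idea of two 16-bit gains in one 32-bit word carries over; the layout assumed here puts the left gain in the low 16 bits and the right gain in the high 16 bits.

```java
// Sketch of packing left/right gains into one 32-bit word (4.12 fixed point, assumed).
class VolumeLRDemo {
    static final float UNITY = 0x1000;  // 4096 represents gain 1.0

    // Converts a gain to 16-bit fixed point, clamped to the representable range.
    static int toFixed(float gain) {
        int v = Math.round(gain * UNITY);
        return Math.max(0, Math.min(v, 0xFFFF));
    }

    // Left in the low 16 bits, right in the high 16 bits.
    static int pack(float left, float right) {
        return (toFixed(right) << 16) | toFixed(left);
    }

    static float unpackLeft(int packed) {
        return (packed & 0xFFFF) / UNITY;
    }

    static float unpackRight(int packed) {
        return ((packed >>> 16) & 0xFFFF) / UNITY;
    }

    public static void main(String[] args) {
        int packed = pack(1.0f, 0.5f);
        System.out.printf("0x%08X%n", packed);  // 0x08001000
        System.out.println(unpackLeft(packed) + " " + unpackRight(packed));  // 1.0 0.5
    }
}
```

Packing both channels into one word lets the client update the shared-memory header with a single 32-bit store, so the mixer never sees a left gain from one call paired with a right gain from another.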

During playback, AudioMixer mixes the tracks together. Continuing with AudioFlinger::MixerThread::prepareTracks_l():

```cpp
// Threads.cpp
AudioFlinger::PlaybackThread::mixer_state AudioFlinger::MixerThread::prepareTracks_l()
    // read the master (hardware) volume
    float masterVolume = mMasterVolume;
    bool masterMute = mMasterMute;
    // iterate over all active tracks
    for (size_t i = 0; i < mActiveTracks.size(); i++) {
        // ... (track is the i-th active track; surrounding code elided)
        // this is the stream volume
        float typeVolume = mStreamTypes[track->streamType()].volume;
        float v = masterVolume * typeVolume;
        // read back the packed volume stored in the shared-memory header; it holds both channel gains
        gain_minifloat_packed_t vlr = proxy->getVolumeLR();
        // extract the left and right channel values
        vlf = float_from_gain(gain_minifloat_unpack_left(vlr));   // vlf equals the AudioTrack's left value
        vrf = float_from_gain(gain_minifloat_unpack_right(vlr));  // vrf equals the AudioTrack's right value
        const float vh = track->getVolumeHandler()->getVolume(
                track->mAudioTrackServerProxy->framesReleased()).first;
        // multiply both by the v computed above (and by vh)
        vlf *= v * vh;
        vrf *= v * vh;
        // pass vlf and vrf to AudioMixer
        mAudioMixer->setParameter(name, param, AudioMixer::VOLUME0, &vlf);
        mAudioMixer->setParameter(name, param, AudioMixer::VOLUME1, &vrf);
    }
```
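The per-track arithmetic in prepareTracks_l() boils down to a few multiplications. The Java sketch below reproduces it under these assumptions: a master mute forces the master volume to 0, the track gains read from shared memory are clamped to 1.0 because the client could write anything there, and vh (assumed here to be the VolumeShaper gain) is 1.0 when no shaper is active. channelGains() is a hypothetical helper, not an AudioFlinger function.

```java
// Hypothetical sketch of the per-channel gain computed for each active track.
class TrackGainDemo {
    // Returns {left, right} gains, i.e. what would be passed as VOLUME0 / VOLUME1.
    static float[] channelGains(float masterVolume, boolean masterMute, float streamVolume,
                                float trackLeft, float trackRight, float vh) {
        if (masterMute) {
            masterVolume = 0f;  // assumed: a master mute silences every track
        }
        float v = masterVolume * streamVolume;  // master volume x stream volume
        float vlf = Math.min(trackLeft, 1.0f);  // clamp untrusted shared-memory values
        float vrf = Math.min(trackRight, 1.0f);
        vlf *= v * vh;
        vrf *= v * vh;
        return new float[] {vlf, vrf};
    }

    public static void main(String[] args) {
        float[] g = channelGains(1.0f, false, 0.5f, 1.0f, 0.5f, 1.0f);
        System.out.println(g[0] + " " + g[1]);  // 0.5 0.25
    }
}
```

This is the app_mix formula from the native-layer section applied per channel: master volume, stream volume and the AudioTrack's left/right values all multiply into the final gain handed to AudioMixer.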