PyTorch Study Notes: DRL

PyTorch: Advanced CNN

- Convolutional Neural Network

- Inception Module

- 1X1 convolution

- Implementation of Inception Module

- Deep Residual Learning

- Implementation of simple Residual Network

- Reference

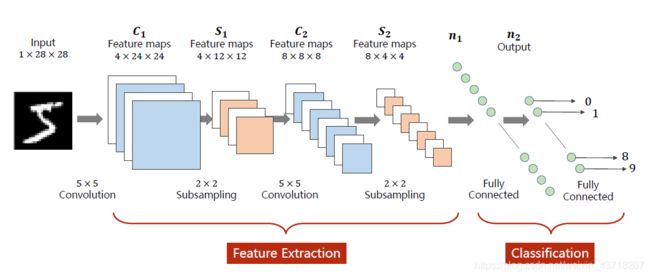

Convolutional Neural Network

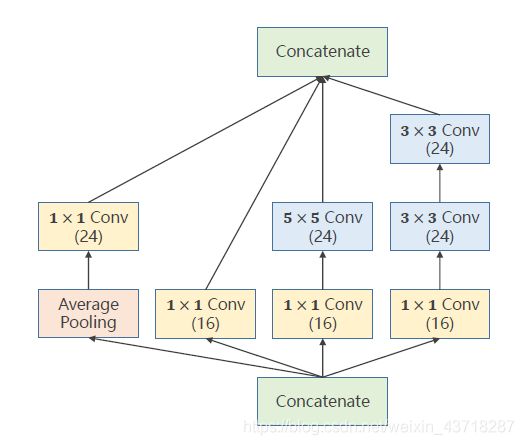

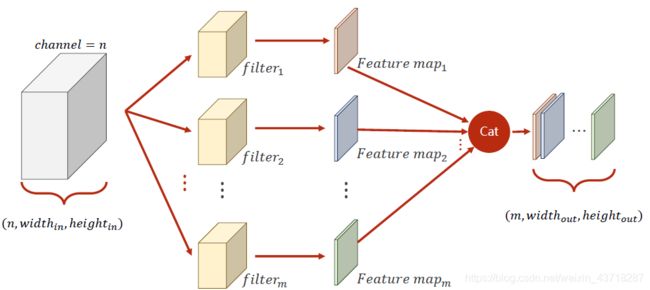

Inception Module

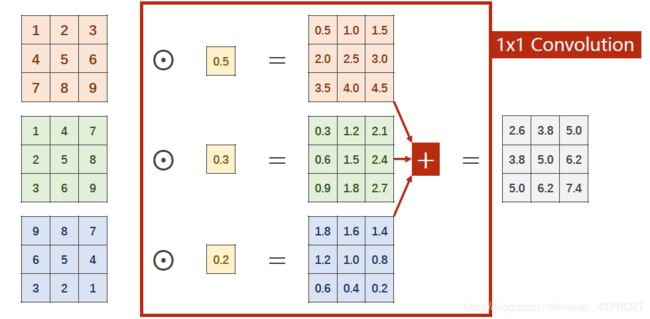

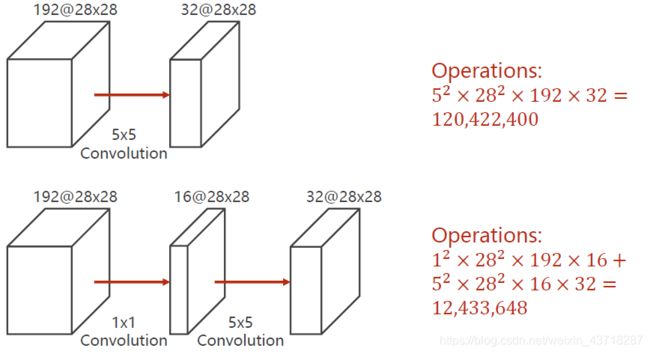

1X1 convolution

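Each 1x1 kernel has as many channels as the input tensor (C), and the number of kernels sets the number of output channels. A 1x1 convolution therefore fuses information across channels at each pixel and can change the channel count.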
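A minimal sketch of the point above, assuming illustrative sizes (192 input channels, 16 output channels, 28x28 feature maps) that are not taken from these notes. It checks that a 1x1 convolution changes only the channel count, and that it is the same linear map over channels applied at every pixel.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch, c_in, c_out, h, w = 2, 192, 16, 28, 28
x = torch.randn(batch, c_in, h, w)

# c_out kernels, each of shape (c_in, 1, 1).
conv1x1 = nn.Conv2d(c_in, c_out, kernel_size=1)
y = conv1x1(x)

print(conv1x1.weight.shape)  # torch.Size([16, 192, 1, 1])
print(y.shape)               # torch.Size([2, 16, 28, 28])

# Equivalent view: one (c_out x c_in) matrix applied to the channel vector of each pixel.
weight = conv1x1.weight.view(c_out, c_in)
bias = conv1x1.bias.view(1, c_out, 1, 1)
y_manual = torch.einsum("oc,bchw->bohw", weight, x) + bias
same = torch.allclose(y, y_manual, atol=1e-4)
print(same)
```

Width and height are untouched; only the channel dimension goes from 192 to 16.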

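Performance comparison:

In every branch, padding and stride are chosen so that w and h of the (b, c, w, h) tensor stay unchanged. Placing a 1x1 convolution in front of a larger kernel shrinks the channel count first, which reduces the number of operations.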

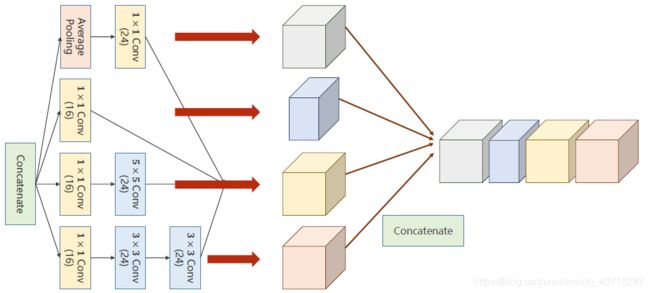
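The saving can be estimated with a few lines of arithmetic. The sketch below counts multiplications only, and the sizes (192 input channels, 28x28 maps, 32 output channels, a 16-channel bottleneck) are assumptions picked for illustration; `conv_mults` is a hypothetical helper, not a PyTorch function.

```python
def conv_mults(c_in, c_out, k, h, w):
    """Multiplications for a k x k convolution with an h x w output (stride 1, size-preserving padding)."""
    return k * k * c_in * c_out * h * w


# Hypothetical sizes for illustration.
c_in, c_out, mid, h, w = 192, 32, 16, 28, 28

# 5x5 convolution applied directly to all 192 channels.
direct = conv_mults(c_in, c_out, 5, h, w)

# 1x1 convolution down to 16 channels, then the 5x5 convolution.
bottleneck = conv_mults(c_in, mid, 1, h, w) + conv_mults(mid, c_out, 5, h, w)

print(direct)                         # 120422400
print(bottleneck)                     # 12443648
print(round(direct / bottleneck, 2))  # 9.68
```

Under these assumptions the 1x1 bottleneck cuts the multiplication count by roughly a factor of ten while producing an output of the same shape.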

Implementation of Inception Module

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision


class InceptionA(nn.Module):
    def __init__(self, in_channels):
        super(InceptionA, self).__init__()
        # Branch 1: a single 1x1 convolution
        self.branch1x1 = nn.Conv2d(in_channels, 16, kernel_size=1)

        # Branch 2: 1x1 then 5x5; kernel_size=5 with padding=2 keeps w and h unchanged
        self.branch5x5_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch5x5_2 = nn.Conv2d(16, 24, kernel_size=5, padding=2, stride=1)

        # Branch 3: 1x1 then two 3x3; kernel_size=3 with padding=1 keeps w and h unchanged
        self.branch3x3_1 = nn.Conv2d(in_channels, 16, kernel_size=1)
        self.branch3x3_2 = nn.Conv2d(16, 24, kernel_size=3, padding=1)
        self.branch3x3_3 = nn.Conv2d(24, 24, kernel_size=3, padding=1)

        # Branch 4: average pooling (applied in forward) followed by a 1x1 convolution
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch5x5, branch3x3, branch_pool]
        return torch.cat(outputs, dim=1)  # concatenate along the channel axis of (b, c, w, h)
```
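Concatenating along the channel axis only works if all four branches produce the same width and height. A small sketch of that check, using the standard output-size formula floor((n + 2p - k) / s) + 1; `conv_out_size` is a hypothetical helper and the 28-pixel input is an assumed example value.

```python
def conv_out_size(n, kernel_size, padding=0, stride=1):
    """Output length along one spatial axis for a convolution or pooling layer."""
    return (n + 2 * padding - kernel_size) // stride + 1


n = 28  # hypothetical input height/width

sizes = {
    "1x1 conv": conv_out_size(n, kernel_size=1),
    "3x3 conv, padding=1": conv_out_size(n, kernel_size=3, padding=1),
    "5x5 conv, padding=2": conv_out_size(n, kernel_size=5, padding=2),
    "3x3 avg pool, padding=1": conv_out_size(n, kernel_size=3, padding=1),
}
for name, size in sizes.items():
    print(name, size)  # every branch keeps 28

# Output channels of the four branches: 1x1, 5x5, 3x3, pool.
branch_channels = [16, 24, 24, 24]
total_channels = sum(branch_channels)
print(total_channels)  # 88
```

Since every branch preserves the spatial size, the concatenated output has 88 channels regardless of `in_channels`.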

Building the network:

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)

        self.incep1 = InceptionA(in_channels=10)
        self.incep2 = InceptionA(in_channels=20)

        self.mp = nn.MaxPool2d(2)  # halves the feature-map height and width
        # Tip: when defining the module, leave this layer out at first, print
        # x.size() in forward(), then fill in the input size from the actual value.
        self.fc = nn.Linear(1408, 10)  # MNIST: 88 * 4 * 4 = 1408

    def forward(self, x):
        in_size = x.size(0)
        x = F.relu(self.mp(self.conv1(x)))  # 10 output channels
        x = self.incep1(x)                  # 16 + 24 + 24 + 24 = 88 output channels
        x = F.relu(self.mp(self.conv2(x)))  # 20 output channels
        x = self.incep2(x)                  # 88 output channels
        # Same tip as above: print x.size() here before fixing the fc input size.
        x = x.view(in_size, -1)
        x = self.fc(x)                      # fully connected layer
        return x
```
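The tip in the comments (print the size first, then fill in the fully connected layer) can be automated by pushing a dummy input through the convolutional part. The sketch below uses a simplified stand-in feature extractor without Inception modules, so its names and the resulting 320 are illustrative assumptions, not the 1408 of the network above.

```python
import torch
import torch.nn as nn

# Stand-in feature extractor (hypothetical, simpler than the Net above).
features = nn.Sequential(
    nn.Conv2d(1, 10, kernel_size=5),
    nn.MaxPool2d(2),
    nn.ReLU(),
    nn.Conv2d(10, 20, kernel_size=5),
    nn.MaxPool2d(2),
    nn.ReLU(),
)

# Run one MNIST-sized dummy image through it and read off the flattened size.
with torch.no_grad():
    dummy = torch.zeros(1, 1, 28, 28)
    out = features(dummy)

flat_features = out.view(1, -1).size(1)
print(out.shape)      # torch.Size([1, 20, 4, 4])
print(flat_features)  # 320

# The measured size then becomes the input size of the classifier layer.
fc = nn.Linear(flat_features, 10)
```

The same approach applies to the Inception-based network: running the dummy image up to the `view` call would report 88 * 4 * 4 = 1408.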

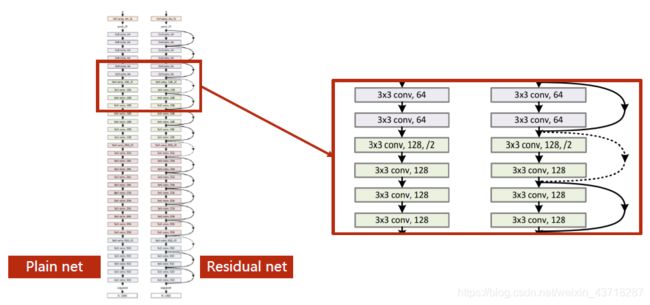

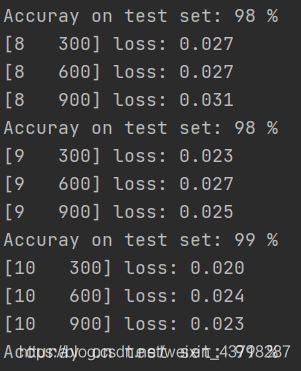

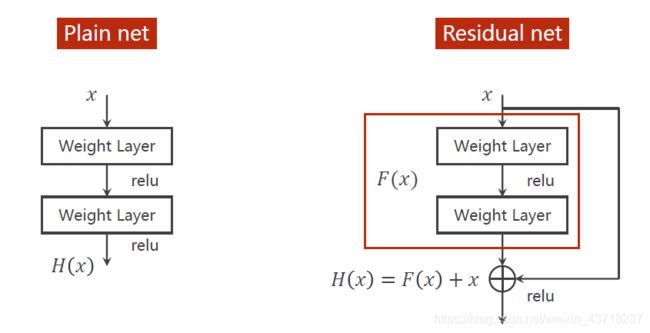

Deep Residual Learning

[Addressing the vanishing-gradient problem: Residual Net]

A Residual Block's output tensor has the same shape as its input tensor, so the two can be added element-wise.
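Why the skip connection helps with vanishing gradients can be seen in a toy scalar model. This is only a sketch under strong assumptions: each "layer" is a multiplication by a small hypothetical weight of 0.1, and `input_grad` is an illustrative helper, not part of the network in these notes.

```python
import torch


def input_grad(depth, weight, residual):
    """Gradient of the final output w.r.t. the input after `depth` toy layers."""
    x = torch.tensor(1.0, requires_grad=True)
    h = x
    for _ in range(depth):
        f = weight * h                # stand-in for a layer F(h) with a small weight
        h = h + f if residual else f  # the residual form adds the input back
    h.backward()
    return x.grad.item()


plain_grad = input_grad(depth=20, weight=0.1, residual=False)
residual_grad = input_grad(depth=20, weight=0.1, residual=True)

print(plain_grad)     # about 1e-20: a product of twenty factors of 0.1
print(residual_grad)  # about 6.7: a product of twenty factors of 1.1
```

Without the skip connection each layer multiplies the gradient by 0.1, so it collapses toward zero. With it, each factor becomes 1 + 0.1, so the gradient reaching the early layers stays usable.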

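```python
import torch
import torch.nn.functional as F


class ResidualBlock(torch.nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # kernel_size=3 with padding=1 keeps the channel count, w and h unchanged
        self.conv1 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)  # add the skip connection first, then activate
```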

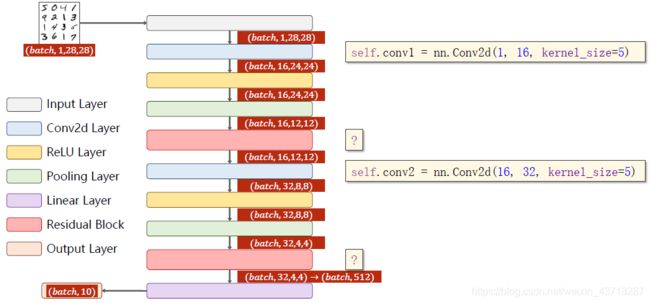

Implementation of simple Residual Network

```python
import torch
import torch.nn.functional as F


class ResidualBlock(torch.nn.Module):
    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.channels = channels
        # kernel_size=3 with padding=1 keeps the channel count, w and h unchanged
        self.conv1 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        y = F.relu(self.conv1(x))
        y = self.conv2(y)
        return F.relu(x + y)  # add the skip connection first, then activate


class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(16, 32, kernel_size=5)

        self.rblock1 = ResidualBlock(16)
        self.rblock2 = ResidualBlock(32)

        self.mp = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(512, 10)  # MNIST: 32 * 4 * 4 = 512

    def forward(self, x):
        in_size = x.size(0)
        x = self.mp(F.relu(self.conv1(x)))
        x = self.rblock1(x)
        x = self.mp(F.relu(self.conv2(x)))  # fixed: the original used `==` (a comparison) instead of `=`
        x = self.rblock2(x)
        x = x.view(in_size, -1)
        x = self.fc(x)
        return x  # no final activation: the cross-entropy loss takes raw logits
```
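The last comment reflects how PyTorch's cross-entropy loss works: it applies log-softmax internally, so the network should return raw scores. A minimal sketch of that equivalence, using random logits and made-up labels as stand-ins for the network output and targets:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(4, 10)           # stand-in for the network output: 4 samples, 10 classes
targets = torch.tensor([3, 0, 7, 9])  # hypothetical class labels

# cross_entropy consumes raw logits directly.
loss = F.cross_entropy(logits, targets)

# It matches log-softmax followed by the negative log-likelihood loss.
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)

matches = torch.allclose(loss, manual)
print(matches)  # True
```

Adding a softmax or ReLU before this loss would apply the normalization twice or discard negative scores, which is why the forward pass ends at the linear layer.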

Reference

Bilibili: PyTorch deep learning course by Liu, Hebei University of Technology