Seatunnel Source Code Analysis (6) - Launching Seatunnel from a Web Interface

Seatunnel Source Code Analysis (6) - Launching a Seatunnel Spark Application with SparkLauncher

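Requirements

Our company uses Seatunnel and plans to integrate it into an internal platform that offers a visual interface.

This leads to the following requirements:

- Launch a Seatunnel application through a Web interface, passing the job parameters in the request.

- Customize logging to collect metrics. So far these include the application's inbound and outbound traffic, its start time, and its end time.

- After a job finishes, automatically collect its logs from YARN by applicationId. Collecting them by hand is tedious, and the logs are gone after a while.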

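Materials

- Seatunnel: 2.0.5

The official 2.x version has not been formally released yet, so the source has to be downloaded and compiled locally.

Clone the official source from GitHub and open it in IntelliJ IDEA.

GitHub: https://github.com/apache/incubator-seatunnel

Official site: http://seatunnel.incubator.apache.org/

In the IDEA Terminal, build the package with Maven: mvn clean install -Dmaven.test.skip=true

The build takes a little over ten minutes. When it finishes, the packaged *.tar.gz installation archive is in the target directory of the seatunnel-dist module.

- Spark: 2.4.8

- Hadoop: 2.7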

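Quick links

Seatunnel Source Code Analysis (1) - Starting the Application

Seatunnel Source Code Analysis (2) - Loading the Configuration File

Seatunnel Source Code Analysis (3) - Loading Plugins

Seatunnel Source Code Analysis (4) - Starting the Spark/Flink Program

Seatunnel Source Code Analysis (5) - Changing the Startup Logo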

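Overview

This chapter modifies the source code to change how the application configuration is passed in, wraps SparkLauncher, and starts a Seatunnel Spark application through the Java API.

Changing how the configuration is parsed and loaded

- The custom plugin configuration format is:

module:plugin:key=value

module: one of env, source, transform, sink

Examples: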

- env:spark:spark.app.name=seatunnel

- source:Fake:result_table_name=source

- transform:sql:sql=select id,name from source where age=20

- sink:Console:limit=10

- sink:Console

- A module that has no plugins can be left out entirely.

- The incoming configuration is parsed and organized into the following Java data structure:

Map<String, Object> configMap = new HashMap<>();

Map<String, Object> envMap = new HashMap<>();

List<Map<String, Object>> sourceList = new ArrayList<>();

List<Map<String, Object>> transformList = new ArrayList<>();

List<Map<String, Object>> sinkList = new ArrayList<>();

configMap.put("env", envMap);

configMap.put("source", sourceList);

configMap.put("transform", transformList);

configMap.put("sink", sinkList);
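To make the target layout concrete, here is a minimal, self-contained sketch that groups a few sample lines into the env/source/transform/sink structure. `ConfigLayoutDemo` is a hypothetical class written for illustration only; it uses plain string splitting rather than the regular expressions of the class shown next, and it keeps plugins in the order they were passed.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical demo class for illustration; not part of Seatunnel.
class ConfigLayoutDemo {

    /** Groups "module:plugin[:key=value]" lines into the env/source/transform/sink layout. */
    static Map<String, Object> build(String... lines) {
        Map<String, Object> envMap = new LinkedHashMap<>();
        Map<String, Map<String, Map<String, Object>>> plugins = new LinkedHashMap<>();
        for (String module : new String[] {"source", "transform", "sink"}) {
            plugins.put(module, new LinkedHashMap<>());
        }
        for (String line : lines) {
            // At most three parts: module, plugin, optional "key=value"
            String[] head = line.split(":", 3);
            if (head.length < 2) {
                throw new IllegalArgumentException("Expected module:plugin[:key=value], got: " + line);
            }
            String module = head[0];
            String plugin = head[1];
            String key = null;
            String value = null;
            if (head.length == 3) {
                // Split on the first '=' only, the value may contain '=' itself
                String[] kv = head[2].split("=", 2);
                key = kv[0];
                value = kv.length == 2 ? kv[1] : "";
            }
            if ("env".equals(module)) {
                if (key != null) {
                    envMap.put(key, value);
                }
                continue;
            }
            Map<String, Map<String, Object>> byPlugin = plugins.get(module);
            if (byPlugin == null) {
                throw new IllegalArgumentException("Unknown module: " + module);
            }
            Map<String, Object> pluginMap = byPlugin.computeIfAbsent(plugin, name -> {
                Map<String, Object> created = new LinkedHashMap<>();
                created.put("plugin_name", name);
                return created;
            });
            if (key != null) {
                pluginMap.put(key, value);
            }
        }
        Map<String, Object> configMap = new LinkedHashMap<>();
        configMap.put("env", envMap);
        plugins.forEach((module, byPlugin) -> configMap.put(module, new ArrayList<>(byPlugin.values())));
        return configMap;
    }

    public static void main(String[] args) {
        System.out.println(build(
            "env:spark:spark.app.name=seatunnel",
            "source:Fake:result_table_name=source",
            "transform:sql:sql=select id,name from source where age=20",
            "sink:Console:limit=10"));
    }
}
```

Each plugin becomes one map that carries a plugin_name entry plus its options, and a module with no lines ends up as an empty list.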

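- Parse the arguments

// JDK and Lombok imports; Seatunnel's own imports (CommandLineArgs, DeployMode) are omitted here
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import lombok.Getter;
import lombok.Setter;

public class CommandMapArgs extends CommandLineArgs{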

private Map<String, Object> envMap;

private List<Map<String, Object>> sourceList;

private List<Map<String, Object>> transformList;

private List<Map<String, Object>> sinkList;

public CommandMapArgs(String deployMode, String configFile, boolean testConfig) {

super(deployMode, configFile, testConfig);

}

public CommandMapArgs(String[] args){

super(DeployMode.CLIENT.getName(), "", false);

List<ConfigLine> configLines = Arrays.stream(args)

.map(this::parseConfigLine)

.collect(Collectors.toList());

envMap = initEnvConfig(configLines);

sourceList = initPluginConfig("source", configLines);

transformList = initPluginConfig("transform", configLines);

sinkList = initPluginConfig("sink", configLines);

}

public Map<String, Object> initEnvConfig(List<ConfigLine> configLines){

List<ConfigLine> moduleConfigLines = configLines.stream().filter(line -> line.isType("env")).collect(Collectors.toList());

Map<String, Object> envMap = new HashMap<>();

moduleConfigLines.forEach(line -> envMap.put(line.key, line.value));

return envMap;

}

public List<Map<String, Object>> initPluginConfig(String module, List<ConfigLine> configLines) {

List<ConfigLine> moduleConfigLines = configLines.stream().filter(line -> line.isType(module)).collect(Collectors.toList());

List<Map<String, Object>> moduleConfigList = new ArrayList<>();

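// Group by plugin in a LinkedHashMap so plugins keep the order in which they were passed

Map<String, List<ConfigLine>> plugins = moduleConfigLines.stream().collect(Collectors.groupingBy(ConfigLine::getPlugin, LinkedHashMap::new, Collectors.toList()));

plugins.forEach((plugin, lines) -> {

Map<String, Object> pluginMap = new HashMap<>();

pluginMap.put("plugin_name", plugin);

// A plugin-only line such as sink:Console has no key; skip it so no null key is put into the map

lines.stream().filter(line -> line.key != null).forEach(line -> pluginMap.put(line.key, line.value));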

moduleConfigList.add(pluginMap);

});

return moduleConfigList;

}

public ConfigLine parseConfigLine(String line){

ConfigLine configLine = new ConfigLine();

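// Use [\s\S]* for the value, like the extraction pattern below, so empty and multi-line values are accepted

String format = ".+?:.+?:.+?=[\\s\\S]*";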

try {

if (Pattern.matches(format, line)){

// env[source/transform/sink]:plugin:key=value

String format1 = "(.+?):(.+?):(.+?)=([\\s\\S]*)";

Pattern pattern = Pattern.compile(format1);

Matcher m = pattern.matcher(line);

if (m.find()){

configLine.setModule(m.group(1));

configLine.setPlugin(m.group(2));

configLine.setKey(m.group(3));

configLine.setValue(m.group(4));

}else {

throw new RuntimeException("config format error, please input correct format: env[source/transform/sink]:plugin:key=value or env[source/transform/sink]:plugin");

}

}else {

// env[source/transform/sink]:plugin

String format1 = "(.+?):(.+)";

Pattern pattern = Pattern.compile(format1);

Matcher m = pattern.matcher(line);

if (m.find()){

configLine.setModule(m.group(1));

configLine.setPlugin(m.group(2));

}else {

throw new RuntimeException("config format error, please input correct format: env[source/transform/sink]:plugin:key=value or env[source/transform/sink]:plugin");

}

}

}catch (Exception e){

String errMsg = e.getMessage();

// Pass the original exception as the cause so its stack trace is not lost

throw new RuntimeException("parseConfigLine error! Line:" + line + "\nerrorMsg:" + errMsg, e);

}

return configLine;

}

public Map<String, Object> getAppConfig(){

Map<String, Object> appConfig = new HashMap<>();

appConfig.put("env", envMap);

appConfig.put("source", sourceList);

appConfig.put("transform", transformList);

appConfig.put("sink", sinkList);

return appConfig;

}

@Getter

@Setter

static class ConfigLine {

private String module;

private String plugin;

private String key;

private String value;

public boolean isType(String type){

return type.equals(module);

}

}

}
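As an alternative to the two-step matching above, both line forms can be recognized with one pattern. This is only a sketch of that idea, not the code used in this series: `ConfigLinePatternDemo` is a hypothetical class, and the jdbc sample line is made up to show a value that contains colons.

```java
import java.util.Arrays;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of Seatunnel.
class ConfigLinePatternDemo {

    // module:plugin, optionally followed by :key=value.
    // DOTALL lets the value span several lines (for example a multi-line SQL statement).
    private static final Pattern LINE =
        Pattern.compile("([^:]+):([^:=]+)(?::([^=]+)=(.*))?", Pattern.DOTALL);

    /** Returns {module, plugin, key, value}; key and value are null for plugin-only lines. */
    static String[] parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            throw new IllegalArgumentException(
                "Expected module:plugin:key=value or module:plugin, got: " + line);
        }
        return new String[] {m.group(1), m.group(2), m.group(3), m.group(4)};
    }

    public static void main(String[] args) {
        String[] samples = {
            "sink:Console",
            "sink:Console:limit=",
            "source:jdbc:url=jdbc:mysql://host/db",
            "transform:sql:sql=select id\nfrom source where age=20"
        };
        for (String sample : samples) {
            System.out.println(Arrays.toString(parse(sample)));
        }
    }
}
```

Because the whole line must match, a malformed line such as a bare word is rejected instead of being silently treated as a plugin name.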

- Modify the ConfigBuilder class by adding a method that loads the configuration from the map

private Config loadByMap() {

Map<String, Object> configMap = ((CommandMapArgs) commandLineArgs).getAppConfig();

if (Objects.isNull(configMap) || configMap.isEmpty()) {

throw new ConfigRuntimeException("Please specify config arguments");

}

LOGGER.info("Loading config map: {}", configMap);

// variables substitution / variables resolution order:

// config file --> system environment --> java properties

Config config = ConfigFactory

.parseMap(configMap)

.resolve(ConfigResolveOptions.defaults().setAllowUnresolved(true))

.resolveWith(ConfigFactory.systemProperties(),

ConfigResolveOptions.defaults().setAllowUnresolved(true));

ConfigRenderOptions options = ConfigRenderOptions.concise().setFormatted(true);

LOGGER.info("parsed config map: {}", config.root().render(options));

return config;

}

- Create a new Seatunnel entry class

public class SeatunnelSpark2 {

public static void main(String[] args) throws Exception {

CommandLineArgs sparkArgs = new CommandMapArgs(args);

Seatunnel.run(sparkArgs, SPARK);

}

}

Creating the SparkLauncher launcher classes

Goal: use the SparkLauncher utility to start a sample Seatunnel job.

- SparkLauncher code

Add the dependency:

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-launcher_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

public class SeatunnelSparkLauncher {

private SparkLauncher launcher;

public SeatunnelSparkLauncher(String SPARK_HOME, String APP_RESOURCE, String MAIN_CLASS, String MASTER, String DEPLOY_MODE, String APP_NAME, Map<String, String> config, String[] args, boolean verbose) {

launcher = new SparkLauncher()

.setSparkHome(SPARK_HOME)

.setAppResource(APP_RESOURCE)

.setMainClass(MAIN_CLASS)

.setMaster(MASTER)

.setDeployMode(DEPLOY_MODE)

.setAppName(APP_NAME)

.addAppArgs(args)

.setVerbose(verbose);

config.forEach((key, value) -> launcher.setConf(key, value));

}

public void startApplication() throws IOException {

SparkAppHandle handler = this.launcher.startApplication(new SparkAppHandle.Listener(){

@Override

public void stateChanged(SparkAppHandle handle) {

System.out.println("********** state changed **********");

String name = handle.getState().name();

System.out.println("state:" + name);

}

@Override

public void infoChanged(SparkAppHandle handle) {

System.out.println("********** info changed **********");

// infoChanged reports changes other than the state, such as the application ID

System.out.println("applicationId:" + handle.getAppId());

}

});

// Poll until the handle reaches a final state (FINISHED, FAILED, KILLED or LOST)

while(!handler.getState().isFinal()){

System.out.println("Application is running");

System.out.println("applicationId:"+handler.getAppId());

System.out.println("state:"+handler.getState());

try {

Thread.sleep(10000);

} catch (InterruptedException e) {

// Restore the interrupt flag and stop polling

Thread.currentThread().interrupt();

break;

}

}

System.out.println("Application Execution End, state:"+handler.getState());

}

}
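The requirements mention collecting logs from YARN by applicationId once a job has finished. One possible way to do that, sketched here under assumptions, is to call the `yarn logs -applicationId` command from Java. `YarnLogCollector` is a hypothetical class; the sketch assumes the `yarn` CLI is on the PATH of the launching machine and that log aggregation is enabled on the cluster.

```java
import java.io.File;
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for illustration; not part of Seatunnel or Spark.
class YarnLogCollector {

    /** Builds the CLI invocation; kept separate so it can be inspected without running it. */
    static List<String> command(String applicationId) {
        if (applicationId == null || !applicationId.startsWith("application_")) {
            throw new IllegalArgumentException("Not a YARN application id: " + applicationId);
        }
        return Arrays.asList("yarn", "logs", "-applicationId", applicationId);
    }

    /** Runs the command, writes stdout and stderr to the target file, and returns the exit code. */
    static int collect(String applicationId, File target) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command(applicationId))
            .redirectErrorStream(true)   // merge stderr into stdout
            .redirectOutput(target)      // write everything to the target file
            .start();
        return process.waitFor();
    }
}
```

A natural place to call `collect` would be after the polling loop in startApplication, passing the value of `handler.getAppId()`, since the application ID is only known once YARN has accepted the job.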

- Create the launcher entry class

public class SeatunnelSparkSDK {

public static void main(String[] args) throws IOException {

String sparkHome = null;

String jar = null;

String mainClass = "org.apache.seatunnel.SeatunnelSpark2";

String master = "yarn";

String deployMode = "client";

String appName = "seatunnel" + System.currentTimeMillis();

Map<String, String> sparkConf = new HashMap<>();

List<String> appConf = new ArrayList<>();

for (int i = 0; i < args.length;) {

String key = args[i];

String value;

if (key.startsWith("--") && (i+1) < args.length){

value = args[i+1];

i+=2;

}else{

value = key;

i++;

}

switch (key){

case "--spark":

sparkHome = value;

break;

case "--jar":

jar = value;

break;

case "--class":

mainClass = value;

break;

case "--master":

master = value;

break;

case "--deploy-mode":

deployMode = value;

break;

case "--name":

appName = value;

break;

case "--conf":

// Split on the first '=' only, because the value itself may contain '='

String[] split = value.split("=", 2);

String confKey = split[0];

String confValue = split.length >=2 ? split[1] : "";

sparkConf.put(confKey, confValue);

break;

default:

appConf.add(value);

}

}

SeatunnelSparkLauncher launcher = new SeatunnelSparkLauncher(

sparkHome,

jar,

mainClass,

master,

deployMode,

appName,

sparkConf,

appConf.toArray(new String[0]),

true

);

launcher.startApplication();

}

}
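The argument handling in main can be isolated into a small, testable unit. The following is a simplified sketch under assumptions rather than a drop-in replacement: `LauncherArgsDemo` is a hypothetical class, it stores every `--flag value` pair in one map instead of separate variables, and it rejects a flag that has no value.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of Seatunnel.
class LauncherArgsDemo {
    final Map<String, String> options = new LinkedHashMap<>();   // --spark, --jar, --class, ...
    final Map<String, String> sparkConf = new LinkedHashMap<>(); // --conf key=value
    final List<String> appArgs = new ArrayList<>();              // module:plugin:key=value lines

    static LauncherArgsDemo parse(String... args) {
        LauncherArgsDemo parsed = new LauncherArgsDemo();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            if (!arg.startsWith("--")) {
                // Anything that is not a flag is passed through to the Seatunnel application
                parsed.appArgs.add(arg);
                continue;
            }
            if (i + 1 >= args.length) {
                throw new IllegalArgumentException("Missing value for " + arg);
            }
            String value = args[++i];
            if ("--conf".equals(arg)) {
                // Split on the first '=' only, the value may contain '=' itself
                String[] kv = value.split("=", 2);
                parsed.sparkConf.put(kv[0], kv.length == 2 ? kv[1] : "");
            } else {
                parsed.options.put(arg, value);
            }
        }
        return parsed;
    }

    public static void main(String[] args) {
        LauncherArgsDemo parsed = parse(
            "--master", "yarn",
            "--conf", "spark.driver.extraJavaOptions=-Dfoo=bar",
            "source:Fake:result_table_name=source",
            "sink:Console:limit=100");
        System.out.println(parsed.options);
        System.out.println(parsed.sparkConf);
        System.out.println(parsed.appArgs);
    }
}
```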

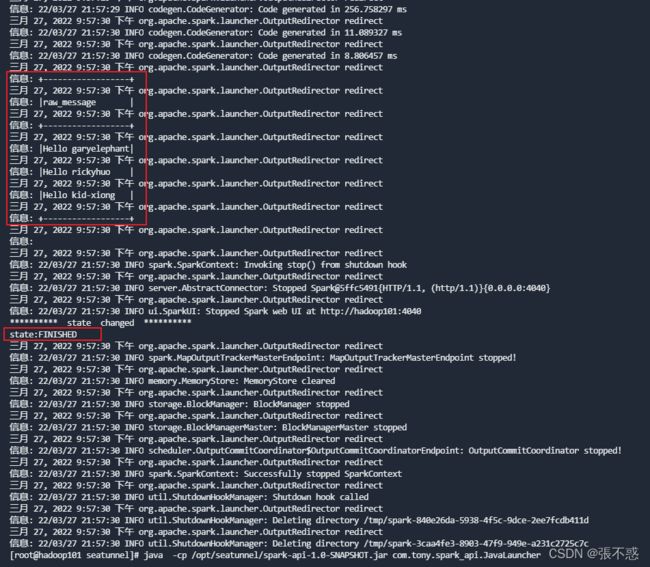

Testing

- Launch script

#!/bin/bash

JAVA_HOME=/usr/local/jdk1.8.0_102

JRE_HOME=/usr/local/jdk1.8.0_102/jre

CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

export PATH JAVA_HOME CLASSPATH JRE_HOME

home='/opt/seatunnel'

jar='seatunnel-core-spark.jar'

mainClass='org.apache.seatunnel.SeatunnelSparkSDK'

java -cp ${home}/lib/${jar} ${mainClass} \

--spark /opt/spark \

--jar ${home}/lib/${jar} \

--class org.apache.seatunnel.SeatunnelSpark2 \

--master yarn \

--deploy-mode client \

env:spark:spark.app.name=Seatunnel \

env:spark:spark.executor.cores=1 \

env:spark:spark.executor.instances=2 \

env:spark:spark.executor.memory=1g \

source:Fake:result_table_name=source \

sink:Console:limit=100
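When the job is started from a Web interface rather than a shell script, the same argument lines have to be assembled in code. A minimal sketch of such a builder follows. `JobArgsBuilder` is a hypothetical name, and how the request parameters reach it (controller, JSON body, and so on) is left open here.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of Seatunnel.
class JobArgsBuilder {
    private final List<String> args = new ArrayList<>();

    /** Adds one env entry, using the env:spark:key=value form from the launch script. */
    JobArgsBuilder env(String key, String value) {
        args.add("env:spark:" + key + "=" + value);
        return this;
    }

    /** Adds a plugin; a plugin without options becomes a bare module:plugin line. */
    JobArgsBuilder plugin(String module, String plugin, Map<String, String> options) {
        if (options.isEmpty()) {
            args.add(module + ":" + plugin);
        }
        options.forEach((key, value) -> args.add(module + ":" + plugin + ":" + key + "=" + value));
        return this;
    }

    String[] build() {
        return args.toArray(new String[0]);
    }

    public static void main(String[] ignored) {
        Map<String, String> sourceOptions = new LinkedHashMap<>();
        sourceOptions.put("result_table_name", "source");
        Map<String, String> sinkOptions = new LinkedHashMap<>();
        sinkOptions.put("limit", "100");

        String[] jobArgs = new JobArgsBuilder()
            .env("spark.app.name", "Seatunnel")
            .env("spark.executor.cores", "1")
            .plugin("source", "Fake", sourceOptions)
            .plugin("sink", "Console", sinkOptions)
            .build();
        for (String arg : jobArgs) {
            System.out.println(arg);
        }
    }
}
```

The resulting array has the same shape as the trailing arguments of the launch script, so it could be handed to the launcher as the application arguments.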