Installing and deploying Kubernetes v1.23 from binaries: step by step

Installing a k8s environment from binaries


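- Installing a k8s environment from binaries

- Prerequisites and network planning

- I. Preparing the base k8s environment

- 1.1 Configure a static IP

- 1.2 Configure hostnames

- 1.3 Configure the hosts file

- 1.4 Passwordless SSH login

- 1.5 Disable the firewalld firewall

- 1.6 Disable SELinux

- 1.7 Disable the swap partition

- 1.8 Tune kernel parameters

- 1.9 Configure the Aliyun repo

- 1.10 Configure time synchronization

- 1.11 Install iptables

- 1.12 Install base packages

- 1.13 Install Docker

- 1.14 Configure Docker registry mirrors

- II. Deploying the k8s environment

- 2.1 Build the etcd cluster

- 2.1.1 Create the etcd working directories

- 2.1.2 Install the certificate-signing tool cfssl

- 2.1.3 Configure the CA certificate

- 2.1.4 Generate the etcd certificate

- 2.1.5 Deploy the etcd cluster

- 2.2 Deploy the apiserver component

- How TLS bootstrapping works

- 2.3 Deploy the kubectl component

- 2.3.1 About kubectl

- 2.3.2 Create the CSR file

- 2.3.2 Generate the client certificate

- 2.3.3 Configure the security context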


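- Check the cluster component status

- Configure kubectl subcommand completion

- 2.4 Deploy the kube-controller-manager component

- 2.4.1 Create the kube-controller-manager CSR file

- 2.4.2 Generate the kube-controller-manager certificate

- 2.4.3 Create the kube-controller-manager kubeconfig

- 2.4.4 Create the kube-controller-manager configuration file

- 2.4.5 Create the kube-controller-manager service unit file

- 2.4.6 Start the kube-controller-manager service

- 2.5 Deploy the kube-scheduler component

- 2.5.1 Create the kube-scheduler CSR

- 2.5.2 Generate the kube-scheduler certificate

- 2.5.3 Create the kube-scheduler kubeconfig file

- 2.5.4 Create the kube-scheduler configuration file

- 2.5.5 Create the kube-scheduler service unit file

- 2.5.6 Copy the files to master2 and start the service

- 2.6 Deploy the kubelet component

- 2.6.1 Create kubelet-bootstrap.kubeconfig

- 2.6.2 Create the configuration file kubelet.json

- 2.6.3 Create the kubelet service unit file

- 2.6.4 Copy the kubelet certificates and configuration files to the node

- 2.6.5 Start the kubelet service on the node

- 2.6.6 Approve the node's request on the master

- 2.7 Deploy the kube-proxy component

- 2.7.1 Create the kube-proxy CSR

- 2.7.2 Generate the certificate

- 2.7.3 Create the kubeconfig file

- 2.7.4 Create the kube-proxy configuration file

- 2.7.5 Create the kube-proxy service unit file

- 2.7.6 Copy the kube-proxy files to the node

- 2.7.7 Start the kube-proxy service

- 2.8 Deploy the calico component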


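- Extract the offline image archive

- Upload calico.yaml to /data/work on k8s-master1

- 2.9 Deploy the coredns component

- 2.10 Check the cluster status

- III. Verifying cluster component functionality

- 3.1 Grant permissions to the system user kubernetes

- 3.2 Test deploying a tomcat service on the k8s cluster

- 3.3 Verify that coredns works

- IV. Making the k8s apiserver highly available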

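Prerequisites and network planning

*k8s environment plan:*

*Pod CIDR: 10.0.0.0/16*

*Service CIDR: 10.255.0.0/16*

*Lab environment plan:*

*Operating system: CentOS 7.6*

Per-node spec: 4 GiB RAM / 4 vCPU / 100 GB disk, with virtualization enabled for the VMs: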

| Cluster role | IP | Hostname | Installed components |
|---|---|---|---|
| Control plane node | 192.168.7.10 | k8s-master1 | apiserver, controller-manager, scheduler, etcd, docker, keepalived, nginx |
| Control plane node | 192.168.7.11 | k8s-master2 | apiserver, controller-manager, scheduler, etcd, docker, keepalived, nginx |
| Worker node | 192.168.7.13 | k8s-node1 | kubelet, kube-proxy, docker, calico, coredns |
| VIP | 192.168.7.99 | | |

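I. Preparing the base k8s environment

1.1 Configure a static IP

Give each virtual or physical machine a static IP address so that its address does not change after a reboot.

The example below configures a static IP on k8s-master1.

Edit /etc/sysconfig/network-scripts/ifcfg-ens33 so that it reads as follows (DEVICE must match the machine's actual interface name; the example uses eth0, so adjust it, and ideally the file name, to your interface):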

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

IPADDR=192.168.7.10

NETMASK=255.255.255.0

GATEWAY=192.168.7.2

DNS1=192.168.7.2

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth0

DEVICE=eth0

ONBOOT=yes

1.2 Configure hostnames

#Set the hostname:

On 192.168.7.10, run:

hostnamectl set-hostname k8s-master1 && bash

On 192.168.7.11, run:

hostnamectl set-hostname k8s-master2 && bash

On 192.168.7.13, run:

hostnamectl set-hostname k8s-node1 && bash

1.3 Configure the hosts file

#Edit /etc/hosts on k8s-master1, k8s-master2 and k8s-node1 and add the following three lines:

192.168.7.10 k8s-master1 master1

192.168.7.11 k8s-master2 master2

192.168.7.13 k8s-node1 node1

1.4 Passwordless SSH login

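Configure passwordless login between the hosts. Do the following on every machine:

#Generate an SSH key pair

ssh-keygen -t rsa #press Enter at every prompt and leave the passphrase empty

#Install the local SSH public key into the corresponding account on each remote host

ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-master1

ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-master2

ssh-copy-id -i ~/.ssh/id_rsa.pub k8s-node1

1.5 Disable the firewalld firewall

Run on k8s-master1, k8s-master2 and k8s-node1:

systemctl stop firewalld && systemctl disable firewalld && systemctl status firewalld

1.6 Disable SELinux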

Run on k8s-master1, k8s-master2 and k8s-node1:

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

#The SELinux change takes permanent effect only after the machine is rebooted. After the reboot, log in and verify:

getenforce

#If it prints Disabled, SELinux is off

1.7 Disable the swap partition

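Run on k8s-master1, k8s-master2 and k8s-node1:

Disable swap temporarily:

swapoff -a

Disable it permanently: comment out the swap mount in /etc/fstab by adding a # at the start of the swap line: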
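As a non-interactive alternative to the manual vim edit that follows, the fstab change can be scripted. This is a sketch, not part of the original procedure: `comment_out_swap` is a hypothetical helper, it assumes the swap entry has `swap` as its third field (as in the default CentOS 7 layout), and it keeps a `.bak` copy of the file before rewriting it.

```shell
#!/usr/bin/env bash
# Sketch: comment out active swap entries in an fstab-style file.
# Usage: comment_out_swap [FSTAB]   (defaults to /etc/fstab)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup copy, then rewrite the file from it.
  cp -p "$fstab" "$fstab.bak" || return 1
  # Prefix "#" to non-comment lines whose third field (fs type) is "swap";
  # lines that are already commented are left alone, so re-running is harmless.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Editing fstab only affects the next boot, so `swapoff -a` is still needed to turn swap off right away.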

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

1.8 Tune kernel parameters

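Run on k8s-master1, k8s-master2 and k8s-node1:

# Load the br_netfilter module

modprobe br_netfilter

# Verify that the module is loaded:

lsmod |grep br_netfilter

# Set the kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

Why run modprobe br_netfilter?

If you skip that step, running sysctl -p /etc/sysctl.d/k8s.conf after creating /etc/sysctl.d/k8s.conf fails with the following errors:

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

The fix is to load the module beforehand:

modprobe br_netfilter

net.ipv4.ip_forward controls packet forwarding:

For security reasons, Linux disables packet forwarding by default. Forwarding means that when a host has more than one network interface and one of them receives a packet, the host sends the packet out through another of its interfaces according to the packet's destination IP address, and that interface keeps forwarding it according to the routing table. This is normally a router's job.

To give a Linux system routing (forwarding) capability, set the kernel parameter net.ipv4.ip_forward. It reflects whether the system currently forwards IP packets: 0 means IP forwarding is disabled, 1 means it is enabled.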
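`modprobe br_netfilter` only loads the module until the next reboot. A minimal sketch, assuming a systemd-based host such as CentOS 7 where files in `/etc/modules-load.d/` are read at boot, writes both the module list and the sysctl file in one step. `write_k8s_kernel_conf` is a hypothetical helper; its optional argument is a root prefix, so the file layout can be tried in a scratch directory first.

```shell
#!/usr/bin/env bash
# Sketch: persist the br_netfilter module and the k8s sysctl settings.
# Usage: write_k8s_kernel_conf [ROOT]   (ROOT defaults to /)
write_k8s_kernel_conf() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d" || return 1
  # systemd-modules-load reads one module name per line from this directory at boot.
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # The bridge-netfilter keys named in the errors above, plus ip_forward.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
# On a real node, apply immediately afterwards:
#   modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf
```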

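1.9 Configure the Aliyun repo

Run on k8s-master1, k8s-master2 and k8s-node1:

Install lrzsz (rz/sz), openssh-clients (scp) and yum-utils (yum-config-manager):

yum install lrzsz openssh-clients yum-utils -y

#Add Aliyun's mirror of the docker-ce repo

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.10 Configure time synchronization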

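#Either ntpdate or chrony works; ntpdate is used here

yum install ntpdate -y

#Synchronize with a network time source

ntpdate cn.pool.ntp.org

#Run the synchronization as a cron job

crontab -e

#The entry below runs once an hour, at minute 0

0 */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

#Restart the crond service

service crond restart

1.11 Install iptables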

If you prefer iptables to firewalld, install iptables-services. Run on k8s-master1, k8s-master2 and k8s-node1:

#Install iptables

yum install iptables-services -y

#Stop and disable the iptables service

service iptables stop && systemctl disable iptables

#Flush the firewall rules

iptables -F

1.12 Install base packages

Install with yum:

yum install -y device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet rsync

1.13 Install Docker

Run on k8s-master1, k8s-master2 and k8s-node1:

yum install docker-ce -y

systemctl start docker && systemctl enable docker.service && systemctl status docker

1.14 Configure Docker registry mirrors

Run on k8s-master1, k8s-master2 and k8s-node1:

tee /etc/docker/daemon.json << 'EOF'

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com","http://qtid6917.mirror.aliyuncs.com", "https://rncxm540.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload && systemctl restart docker && systemctl status docker

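Active: active (running) since Tue 2022-08-09 23:55:58 CST; 4ms ago

#The "exec-opts" entry above changes Docker's cgroup driver to systemd (the default is cgroupfs). The kubelet's cgroup driver must match Docker's, so the kubelet must be configured with systemd as well.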
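To double-check which cgroup driver is configured before moving on, a small sketch can read it back from daemon.json. `configured_cgroup_driver` is a hypothetical helper; it assumes the option is written on one line as in the file above and prints `cgroupfs`, the default mentioned above, when the option is missing. On a running host, `docker info --format '{{.CgroupDriver}}'` reports the driver the daemon actually uses.

```shell
#!/usr/bin/env bash
# Sketch: print the cgroup driver configured in a Docker daemon.json file.
# Usage: configured_cgroup_driver [FILE]   (defaults to /etc/docker/daemon.json)
configured_cgroup_driver() {
  local file="${1:-/etc/docker/daemon.json}" drv
  # Extract the value after "native.cgroupdriver=" on the first matching line.
  drv=$(sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$file" 2>/dev/null | head -n1)
  # No explicit setting: Docker falls back to its default driver.
  echo "${drv:-cgroupfs}"
}
```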

二、k8s环境部署

2.1 搭建 etcd 集群

2.1.1 配置 etcd 工作目录

#创建配置文件和证书文件存放目录(master1和2同时操作)

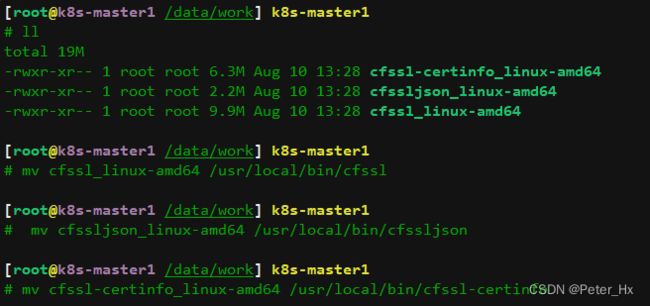

[root@k8s-master1 ~]# mkdir -p /etc/etcd/ssl2.1.2 安装签发证书工具 cfssl

在master1操作

[root@k8s-master1 ~]# mkdir /data/work -p

[root@k8s-master1 ~]# cd /data/work/

#Upload cfssl-certinfo_linux-amd64, cfssljson_linux-amd64 and cfssl_linux-amd64 to /data/work/

[root@k8s-master1 work]# ls

cfssl-certinfo_linux-amd64 cfssljson_linux-amd64 cfssl_linux-amd64

#Make the files executable

[root@k8s-master1 work]# chmod +x *

[root@k8s-master1 work]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8s-master1 work]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@k8s-master1 work]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2.1.3 Configure the CA certificate

#Create the CA certificate signing request (CSR) file

[root@k8s-master1 work]# vim ca-csr.json

# cat ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "k8s",

"OU": "system"

}

],

"ca": {

"expiry": "87600h"

}

}

[root@k8s-master1 work]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2022/08/10 13:37:04 [INFO] generating a new CA key and certificate from CSR

2022/08/10 13:37:04 [INFO] generate received request

2022/08/10 13:37:04 [INFO] received CSR

2022/08/10 13:37:04 [INFO] generating key: rsa-2048

2022/08/10 13:37:04 [INFO] encoded CSR

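2022/08/10 13:37:04 [INFO] signed certificate with serial number 277004694657827998333236437935739196848568561695

Notes:

CN: Common Name. kube-apiserver extracts this field from the certificate and uses it as the requesting user name (User Name). Browsers use it to verify that a website is legitimate. For an SSL certificate it is usually the site's domain name; for a code-signing certificate it is the name of the applying organization; for a client certificate it is the name of the applicant.

O: Organization. kube-apiserver extracts this field and uses it as the group (Group) the requesting user belongs to. For SSL and code-signing certificates it is usually the name of the applying organization; for a client certificate it is the name of the organization the applicant belongs to.

L field: city

ST field: province or state

C field: must be a two-letter country code, e.g. CN for China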
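Every leaf certificate in this guide uses the same `names` block and differs only in CN, O and hosts. Below is a sketch of a helper that prints such a CSR. `make_csr` is a hypothetical name; it assumes the Hubei/Wuhan values used here and values without embedded double quotes, and it does not cover the CA CSR above, which has a `ca` block instead of `hosts`.

```shell
#!/usr/bin/env bash
# Sketch: print a cfssl CSR JSON with this guide's fixed "names" block.
# Usage: make_csr CN O [HOST ...] > NAME-csr.json
make_csr() {
  local cn="$1" o="$2"; shift 2
  local hosts="" h
  # Build a comma-separated, quoted host list; empty when no hosts are given.
  for h in "$@"; do
    hosts+="${hosts:+, }\"$h\""
  done
  cat <<EOF
{
  "CN": "$cn",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Hubei", "L": "Wuhan", "O": "$o", "OU": "system" }
  ]
}
EOF
}
```

For example, `make_csr etcd k8s 127.0.0.1 192.168.7.10 192.168.7.11 192.168.7.13 192.168.7.99 > etcd-csr.json` carries the same fields as the etcd CSR used in 2.1.4, and `make_csr admin system:masters > admin-csr.json` the same fields as the admin CSR in 2.3.2.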

#Create the CA signing policy file ca-config.json (used when signing the certificates below)

# cat ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

2.1.4 Generate the etcd certificate

#Create the etcd CSR; change the IPs under hosts to the IPs of your own etcd nodes

# cat etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.7.10",

"192.168.7.11",

"192.168.7.13",

"192.168.7.99"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "k8s",

"OU": "system"

}]

}

#The hosts field above lists the internal IPs that all etcd nodes use for cluster communication. You can reserve a few extra IPs for future scale-out.

[root@k8s-master1 work]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2022/08/10 13:49:29 [INFO] generate received request

2022/08/10 13:49:29 [INFO] received CSR

2022/08/10 13:49:29 [INFO] generating key: rsa-2048

2022/08/10 13:49:29 [INFO] encoded CSR

2022/08/10 13:49:29 [INFO] signed certificate with serial number 580147585393953277974184358784294478670114176750

2022/08/10 13:49:29 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# Check the generated files

[root@k8s-master1 work]# ls etcd*.pem

etcd-key.pem etcd.pem

2.1.5 Deploy the etcd cluster

Upload etcd-v3.4.13-linux-amd64.tar.gz to /data/work:

[root@k8s-master1 work]# pwd

/data/work

[root@k8s-master1 work]# tar -xf etcd-v3.4.13-linux-amd64.tar.gz

[root@k8s-master1 work]# cp -ar etcd-v3.4.13-linux-amd64/etcd* /usr/local/bin/

[root@k8s-master1 work]# chmod +x /usr/local/bin/etcd*

# Copy the binaries to master2 as well

[root@k8s-master1 work]# scp -r etcd-v3.4.13-linux-amd64/etcd* k8s-master2:/usr/local/bin/

[root@k8s-master2]# chmod +x /usr/local/bin/etcd*

#Create the configuration file

[root@k8s-master1 /data/work] k8s-master1

# cat etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.7.10:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.7.10:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.7.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.7.10:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.7.10:2380,etcd2=https://192.168.7.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

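ETCD_INITIAL_CLUSTER_STATE="new"

Notes:

ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: address to listen on for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: address to listen on for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the other members

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: peer addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing one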
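Because the two members' files differ only in ETCD_NAME and the local IP, the file can also be generated per node instead of being copied and then edited by hand on master2. A sketch under that assumption; `gen_etcd_conf` is a hypothetical helper, and the data directory, ports and token are the ones used in this section.

```shell
#!/usr/bin/env bash
# Sketch: print etcd.conf for one member of the cluster described above.
# Usage: gen_etcd_conf NAME IP INITIAL_CLUSTER > etcd.conf
gen_etcd_conf() {
  local name="$1" ip="$2" cluster="$3"
  cat <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

With `c="etcd1=https://192.168.7.10:2380,etcd2=https://192.168.7.11:2380"`, running `gen_etcd_conf etcd2 192.168.7.11 "$c" > etcd2.conf` and copying etcd2.conf to /etc/etcd/etcd.conf on k8s-master2 gives that node its own settings.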

#Create the systemd service file

[root@k8s-master1 work]# vim etcd.service

# cat etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

--trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-cert-file=/etc/etcd/ssl/etcd.pem \

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \

--peer-client-cert-auth \

--client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Copy the etcd certificates into the certificate directory created at the start, and sync them to master2 as well:

[root@k8s-master1 work]# cp ca*.pem /etc/etcd/ssl/

[root@k8s-master1 work]# cp etcd*.pem /etc/etcd/ssl/

[root@k8s-master1 work]# cp etcd.conf /etc/etcd/

[root@k8s-master1 work]# cp etcd.service /usr/lib/systemd/system/

[root@k8s-master1 work]# for i in k8s-master2 ;do rsync -vaz etcd.conf $i:/etc/etcd/;done

[root@k8s-master1 work]# for i in k8s-master2 ;do rsync -vaz etcd*.pem ca*.pem $i:/etc/etcd/ssl/;done

[root@k8s-master1 work]# for i in k8s-master2;do rsync -vaz etcd.service $i:/usr/lib/systemd/system/;done

#Start the etcd cluster: first create the data directory on both masters

[root@k8s-master1 work]# mkdir -p /var/lib/etcd/default.etcd

[root@k8s-master2 work]# mkdir -p /var/lib/etcd/default.etcd

Edit the etcd configuration file on master2:

[root@k8s-master2 ~]# vim /etc/etcd/etcd.conf

# cat /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.7.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.7.11:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.7.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.7.11:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.7.10:2380,etcd2=https://192.168.7.11:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

Start the etcd service

[root@k8s-master1 work]# systemctl daemon-reload && systemctl enable etcd.service && systemctl start etcd.service

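[root@k8s-master2 work]# systemctl daemon-reload && systemctl enable etcd.service && systemctl start etcd.service

Note:

Start etcd on k8s-master1 first. Its start command hangs in the starting state until etcd on k8s-master2 is started as well; only then does etcd on k8s-master1 come up normally. This is expected: in a new two-member cluster a quorum requires both members, so neither can finish starting on its own. Run the k8s-master2 command promptly, for example from a second terminal.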

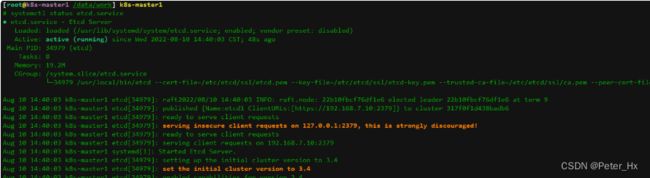

[root@k8s-master1]# systemctl status etcd

[root@k8s-master2]# systemctl status etcd

#Check the etcd cluster

[root@k8s-master1 work]#

ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://192.168.7.10:2379,https://192.168.7.11:2379 endpoint health

+---------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+---------------------------+--------+-------------+-------+

| https://192.168.7.10:2379 | true | 8.808558ms | |

| https://192.168.7.11:2379 | true | 10.902347ms | |

+---------------------------+--------+-------------+-------+

2.2 Install the Kubernetes components

Download the installation package

The binary packages are linked from the changelogs on GitHub:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/

#Upload kubernetes1.23-server-linux-amd64.tar.gz to /data/work on k8s-master1:

[root@k8s-master1 work]# tar zxvf kubernetes1.23-server-linux-amd64.tar.gz

[root@k8s-master1 work]# cd kubernetes/server/bin/

[root@k8s-master1 bin]# cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

[root@k8s-master1 bin]# rsync -vaz kube-apiserver kube-controller-manager kube-scheduler kubectl k8s-master2:/usr/local/bin/

[root@k8s-master1 bin]# scp kubelet kube-proxy k8s-node1:/usr/local/bin/

[root@k8s-master1 bin]# cd /data/work/

[root@k8s-master1 work]# mkdir -p /etc/kubernetes/ssl

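[root@k8s-master1 work]# mkdir /var/log/kubernetes

2.2 Deploy the apiserver component

Enable the TLS bootstrapping mechanism

Once TLS authentication is enabled on the master's apiserver, the kubelet on every node needs a valid certificate signed by the CA that the apiserver uses before it can communicate with the apiserver. With many nodes, issuing these client certificates by hand takes a lot of work and makes scaling the cluster more complex.

To simplify this, Kubernetes provides the TLS bootstrapping mechanism to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is then signed dynamically (by kube-controller-manager, using the cluster signing CA configured for it in 2.4).

Bootstrapping exists in many systems, Linux included. A bootstrap configuration is usually preconfigured and loaded at power-on or system start-up, and is used to set up a specific environment. The Kubernetes kubelet can likewise load such a configuration file at start-up; its content looks like this: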

apiVersion: v1

clusters: null

contexts:

- context:

    cluster: kubernetes

    user: kubelet-bootstrap

  name: default

current-context: default

kind: Config

preferences: {}

users:

- name: kubelet-bootstrap

  user: {}

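How TLS bootstrapping works

1. What TLS does

TLS encrypts the communication and prevents eavesdropping by a man in the middle. In addition, if the certificate is not trusted, no connection to the apiserver can be established at all, let alone any check of whether the client may request a given resource.

2. What RBAC does

Once TLS has solved the communication problem, authorization is handled by RBAC (other authorization models, such as ABAC, can also be used). RBAC defines which APIs a user or group (subject) may call. Combined with TLS, the apiserver reads the CN field of the client certificate as the user name and the O field as the group.

In short: first, to talk to the apiserver you must use a certificate signed by the apiserver's CA, so that a trust relationship and a TLS connection can be established; second, the CN and O fields of the certificate supply the user and group that RBAC needs.

The kubelet's first start

TLS bootstrapping lets the kubelet request a certificate from the apiserver and then use it to connect to the apiserver. But how does the kubelet connect the first time, when it has no certificate yet?

The apiserver configuration points to a token.csv file that defines a preset user. That user's token, together with the apiserver's CA certificate, is written into the bootstrap.kubeconfig file used by the kubelet. On its first request, the kubelet uses the CA in bootstrap.kubeconfig to establish a trusted TLS connection with the apiserver, and the user token in bootstrap.kubeconfig to declare its RBAC identity to the apiserver.

token.csv format:

3940fd7fbb391d1b4d861ad17a1f0613,kubelet-bootstrap,10001,"system:kubelet-bootstrap"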
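A quick check of that format can catch a malformed token.csv before kube-apiserver reads it. The sketch assumes a 32-character lowercase hex token (what the `head -c 16 /dev/urandom | od -An -t x` command used in this section produces), a numeric UID and a double-quoted group; `check_token_csv` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: validate token.csv lines of the form
#   <32 lowercase hex chars>,<user>,<numeric uid>,"<group>"
# Returns non-zero and reports the first malformed line on stderr.
check_token_csv() {
  local file="$1" n=0 line
  # Keeping the regex in a variable avoids bash quoting pitfalls inside [[ =~ ]].
  local re='^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
  while IFS= read -r line || [ -n "$line" ]; do
    n=$((n + 1))
    if ! [[ $line =~ $re ]]; then
      echo "line $n is malformed: $line" >&2
      return 1
    fi
  done < "$file"
}
```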

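On its first start, the kubelet may be refused by the apiserver. By default the kubelet declares its identity with the preset user token in bootstrap.kubeconfig and then creates a CSR, but unless we grant it something, this user has no permissions at all, not even to create a CSR (an RBAC denial is reported as 403 Forbidden). A ClusterRoleBinding is therefore needed that binds the preset user kubelet-bootstrap to the built-in ClusterRole system:node-bootstrapper, so that it can submit CSRs. This is demonstrated later when the kubelet is installed.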

#Create the token.csv file

[root@k8s-master1 ~]# cd /data/work/

[root@k8s-master1 work]# cat > token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

# Format: token,username,UID,group

#Create the CSR file; replace the IPs with your own machines' IPs

[root@k8s-master1 work]# vim kube-apiserver-csr.json

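#Note: if the hosts field is not empty, it must list the IPs or domain names authorized to use this certificate. Because this certificate is used by the Kubernetes master nodes, include the IPs of all master nodes as well as the first IP of the service network (normally the first IP of the service-cluster-ip-range passed to kube-apiserver, e.g. 10.255.0.1).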
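The first Service IP can be derived from the CIDR instead of worked out by hand: it is the network address plus one. A pure-bash sketch for IPv4 only; `first_service_ip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the first usable IPv4 address of a CIDR (network address + 1).
# Usage: first_service_ip 10.255.0.0/16
first_service_ip() {
  local cidr="$1" ip prefix a b c d n mask
  ip=${cidr%/*}; prefix=${cidr#*/}
  IFS=. read -r a b c d <<< "$ip"
  # Pack the four octets into one integer.
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Netmask with the top $prefix bits set, truncated to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

`first_service_ip 10.255.0.0/16` prints 10.255.0.1, the address listed in hosts in the CSR below.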

# cat kube-apiserver-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.7.10",

"192.168.7.11",

"192.168.7.12",

"192.168.7.13",

"192.168.7.99",

"10.255.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "k8s",

"OU": "system"

}

]

}

#Generate the certificate

[root@k8s-master1 work]#

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

2022/08/10 16:34:32 [INFO] generate received request

2022/08/10 16:34:32 [INFO] received CSR

2022/08/10 16:34:32 [INFO] generating key: rsa-2048

2022/08/10 16:34:32 [INFO] encoded CSR

2022/08/10 16:34:32 [INFO] signed certificate with serial number 477144676195233738114152251729193994277731824828

2022/08/10 16:34:32 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Create the apiserver configuration file

#Create the kube-apiserver configuration file; replace the IPs with your own

[root@k8s-master1 work]# vim kube-apiserver.conf

# cat kube-apiserver.conf

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=192.168.7.10 \

--secure-port=6443 \

--advertise-address=192.168.7.10 \

--insecure-port=0 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth \

--service-cluster-ip-range=10.255.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://192.168.7.10:2379,https://192.168.7.11:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"

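# Notes

--logtostderr: log to standard error instead of to files (set to false here, so logs go to --log-dir)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster addresses

--bind-address: listen address

--secure-port: HTTPS (secure) port

--advertise-address: address advertised to the rest of the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables Node authorization and RBAC

--enable-bootstrap-token-auth: enable bootstrap token authentication (used for TLS bootstrapping)

--token-auth-file: bootstrap token file

--service-node-port-range: default port range for NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings

#Create the service unit file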

[root@k8s-master1 work]# vim kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

# Copy the certificates and files to their target directories, and to the master2 node as well

[root@k8s-master1 work]# cp ca*.pem /etc/kubernetes/ssl

cp kube-apiserver*.pem /etc/kubernetes/ssl/

cp token.csv /etc/kubernetes/

cp kube-apiserver.conf /etc/kubernetes/

cp kube-apiserver.service /usr/lib/systemd/system/

[root@k8s-master1 work]# rsync -vaz token.csv k8s-master2:/etc/kubernetes/

[root@k8s-master1 work]# rsync -vaz kube-apiserver*.pem k8s-master2:/etc/kubernetes/ssl/

[root@k8s-master1 work]# rsync -vaz ca*.pem k8s-master2:/etc/kubernetes/ssl/

rsync -vaz kube-apiserver.conf k8s-master2:/etc/kubernetes/

rsync -vaz kube-apiserver.service k8s-master2:/usr/lib/systemd/system/

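Note: on k8s-master2, change the IP addresses in kube-apiserver.conf (--bind-address and --advertise-address) to k8s-master2's own IP (192.168.7.11). Also run mkdir -p /var/log/kubernetes on k8s-master2, because --log-dir points there and that directory was only created on k8s-master1.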
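The edit on k8s-master2 can be done with sed instead of an editor. A sketch assuming GNU sed (as shipped with CentOS 7) and the option layout of the kube-apiserver.conf above; `set_apiserver_ip` is a hypothetical helper. It deliberately leaves --etcd-servers alone, because that flag must keep listing both etcd members.

```shell
#!/usr/bin/env bash
# Sketch: point the per-host apiserver addresses at another master's IP.
# Usage: set_apiserver_ip FILE IP
set_apiserver_ip() {
  local file="$1" ip="$2"
  # Only --bind-address and --advertise-address are host-specific.
  sed -i -E \
    -e "s#--bind-address=[0-9.]+#--bind-address=${ip}#" \
    -e "s#--advertise-address=[0-9.]+#--advertise-address=${ip}#" \
    "$file"
}
# On k8s-master2: set_apiserver_ip /etc/kubernetes/kube-apiserver.conf 192.168.7.11
```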

Start kube-apiserver

[root@k8s-master1 work]# systemctl daemon-reload && systemctl enable kube-apiserver && systemctl start kube-apiserver

[root@k8s-master2 work]# systemctl daemon-reload && systemctl enable kube-apiserver && systemctl start kube-apiserver

[root@k8s-master1 work]# systemctl status kube-apiserver

Active: active (running) since Wed 2022-08-10 16:58:26 CST; 15s ago

[root@k8s-master1 work]#

curl --insecure https://192.168.7.10:6443/

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}

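The 401 above is expected: the request has not been authenticated yet (anonymous access is disabled with --anonymous-auth=false).

2.3 Deploy the kubectl component

2.3.1 About kubectl

kubectl is the client tool for operating on k8s resources, e.g. creating, reading, updating and deleting them.

To know which cluster to connect to, kubectl needs a configuration file such as /etc/kubernetes/admin.conf, and accesses k8s resources according to it.

/etc/kubernetes/admin.conf records the k8s cluster to access and the certificates to use. You can point the KUBECONFIG environment variable at it:

[root@k8s-master1 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

kubectl then loads KUBECONFIG automatically to decide which cluster's resources to manage. Alternatively, use the method that kubeadm suggests after initializing a cluster:

[root@k8s-master1 ~]# cp /etc/kubernetes/admin.conf /root/.kube/config

kubectl then loads /root/.kube/config to operate on k8s resources.

If KUBECONFIG is set, kubectl uses it; if not, kubectl uses /root/.kube/config to decide which cluster to manage. Note: admin.conf does not exist yet; it is created in the steps below.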
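The lookup order just described can be summarized in a small sketch. It ignores the `--kubeconfig` command-line flag, which takes precedence over both, and it reflects the fact that KUBECONFIG may hold several colon-separated paths that kubectl merges. `kubeconfig_files` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print the kubeconfig file(s) kubectl would load, one per line,
# when no --kubeconfig flag is given.
kubeconfig_files() {
  if [ -n "${KUBECONFIG:-}" ]; then
    # Colon-separated list; kubectl merges these files.
    tr ':' '\n' <<< "$KUBECONFIG"
  else
    echo "$HOME/.kube/config"
  fi
}
```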

2.3.2 Create the CSR file

[root@k8s-master1 work]# vim admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "system:masters",

"OU": "system"

}

]

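}

Explanation: kube-apiserver later uses RBAC to authorize client requests (from kubelet, kube-proxy, Pods and so on). kube-apiserver predefines some ClusterRoleBindings for RBAC; for example, cluster-admin binds the group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API. O sets the group of this certificate to system:masters. When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA, and because the certificate's group is the pre-authorized system:masters, it is granted access to all APIs.

Note: this admin certificate is used later to generate the administrator's kubeconfig file. RBAC is the recommended way to control roles and permissions in Kubernetes; Kubernetes uses the CN field of the certificate as the User and the O field as the Group. "O": "system:masters" must be exactly system:masters, otherwise the kubectl create clusterrolebinding command later fails.

With O set to system:masters, the built-in cluster-admin ClusterRoleBinding binds the system:masters group to the cluster-admin ClusterRole.

2.3.2 Generate the client certificate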

[root@k8s-master1 work]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2022/08/10 17:14:04 [INFO] generate received request

2022/08/10 17:14:04 [INFO] received CSR

2022/08/10 17:14:04 [INFO] generating key: rsa-2048

2022/08/10 17:14:04 [INFO] encoded CSR

2022/08/10 17:14:04 [INFO] signed certificate with serial number 116808315728556635428311377758058019811028156802

2022/08/10 17:14:04 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

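[root@k8s-master1 work]# cp admin*.pem /etc/kubernetes/ssl/

2.3.3 Configure the security context

#Create the kubeconfig file (an important step)

A kubeconfig is kubectl's configuration file. It contains everything needed to access the apiserver, such as the apiserver address, the CA certificate and the client's own certificate. (If a command reports that it cannot find the kubeconfig path, copy the file to that path manually; otherwise ignore this.)

1. Set the cluster parameters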

[root@k8s-master1 /data/work] k8s-master1 #

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.7.10:6443 --kubeconfig=kube.config

Cluster "kubernetes" set.

#View the contents of kube.config

cat kube.config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR0akNDQXA2Z0F3SUJBZ0lVTUlWUjhrM0haSHhmb0tPZmNrSE9hU05nY0I4d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lURUxNQWtHQTFVRUJoTUNRMDR4RGpBTUJnTlZCQWdUQlVoMVltVnBNUTR3REFZRFZRUUhFd1ZYZFdoaApiakVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WnplWE4wWlcweEV6QVJCZ05WQkFNVENtdDFZbVZ5CmJtVjBaWE13SGhjTk1qSXdPREV3TURVek1qQXdXaGNOTXpJd09EQTNNRFV6TWpBd1dqQmhNUXN3Q1FZRFZRUUcKRXdKRFRqRU9NQXdHQTFVRUNCTUZTSFZpWldreERqQU1CZ05WQkFjVEJWZDFhR0Z1TVF3d0NnWURWUVFLRXdOcgpPSE14RHpBTkJnTlZCQXNUQm5ONWMzUmxiVEVUTUJFR0ExVUVBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQU1PSlFwMDBtZ1AzZkUvMzQwN0ZqTUhWYWVGd2FGQWwKeXJTU1lqT1h0emlkdC9DSTRiVTU4TERQbEFqM1FrYUJQOEZnc3podnJxY0s0aGEydUNDTG93dWFzYkJVR2RsNgp0ejRyY2w3TWFuNzFEWDVXaWVKOWxQVWxlbWRKblFwc3NlR3puRmZtU1NSMHFqTVNtbXlMSzRvci81OW1NVkxvCk1sL0JNd2pMSzk3Q1NQdHhDL2g5ODBydDhWbENsNWxGWnBYbmJJWW1FbkhaakxGa2xnRzVLTktvMm54MGhUNk4KTzd4M0FtYTBzb0VjUVgxWXBtYy82ZHBFcXRnZXE1Ry9GajJkanZud2o4R09aSEJhVmNLNFBWWG9pYi81U3JmTgppamR1S2dBeis3Q1MxRGFYcytocndEMjNBRm1FelJNZVF3aG0yK0ZWVjFkRXBVRDlkMFg0UWE4Q0F3RUFBYU5tCk1HUXdEZ1lEVlIwUEFRSC9CQVFEQWdFR01CSUdBMVVkRXdFQi93UUlNQVlCQWY4Q0FRSXdIUVlEVlIwT0JCWUUKRkNIN3dmRUVQWG1EY2RxT25wcUdad2JjSGptVU1COEdBMVVkSXdRWU1CYUFGQ0g3d2ZFRVBYbURjZHFPbnBxRwpad2JjSGptVU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQ2ZLWUhCZ2JwSkZMMkxKT2FOeGNCWDEwYlMvbkJECnI5VTJSRGdtRkNSVXQ0LzRMT25BaU9QTUpvZ3UxQndWZ0lLc2UrS1NSVFVnMjc4S1lBc002QzBkeW9mM2dVN0sKdWpMYWV4NDZQNXJvTXJINEY3N2xtL2FqaUdSS1lNRTQvNjV0clF3VDJtenBQYVhUZFZGb3grRUVsbTZnNU5wSApKYWk1L0QzSzNYRUdDL1lMYmdaUFpUYVRCSDN6bUlkV2hNQXo1N1U0U1FVak0vRTdIN09rcDRiTEQzM2pVRitSCndDL1dTWTVvenM2SkVqZ1V3SGpjR2o4MFpTdnZLcU1OTXplUjdMQmVVUXRQY20wU1ppQlp1VE1HL0l4aFFLN0sKeU1DVzhieXlUM3EzNFUrN1pYNGE4cDZRd04xVURSb3gxZ3lyajJaYUo2aHFWeTR0c1VLMTl2RTgKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://192.168.7.10:6443

name: kubernetes

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

2. Set the client credentials

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

User "admin" set.

3. Set the context parameters

[root@k8s-master1 work]#

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

Context "kubernetes" created.

4. Set the current context

[root@k8s-master1 /data/work] k8s-master1

# kubectl config use-context kubernetes --kubeconfig=kube.config

Switched to context "kubernetes".

[root@k8s-master1 /data/work] k8s-master1

# mkdir ~/.kube -p

[root@k8s-master1 /data/work] k8s-master1

# cp kube.config ~/.kube/config

[root@k8s-master1 /data/work] k8s-master1

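# cp kube.config /etc/kubernetes/admin.conf

5. Grant the user kubernetes access to the kubelet API (kubernetes is the CN of the kube-apiserver certificate, which kube-apiserver presents to kubelets via --kubelet-client-certificate)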

[root@k8s-master1 work]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

clusterrolebinding.rbac.authorization.k8s.io/kube-apiserver:kubelet-apis created

Check the cluster component status

[root@k8s-master1 work]# kubectl cluster-info

# kubectl cluster-info

Kubernetes control plane is running at https://192.168.7.10:6443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

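Run the following command to check the component status. At this point controller-manager and scheduler are reported as Unhealthy because they have not been deployed yet (they are deployed in 2.4 and 2.5); both etcd members should be Healthy.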

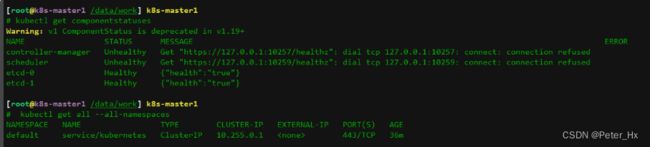

[root@k8s-master1 /data/work] k8s-master1

# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "https://127.0.0.1:10257/healthz": dial tcp 127.0.0.1:10257: connect: connection refused

scheduler Unhealthy Get "https://127.0.0.1:10259/healthz": dial tcp 127.0.0.1:10259: connect: connection refused

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

[root@k8s-master1 /data/work] k8s-master1

# kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.255.0.1 <none> 443/TCP 36m

Sync the kubectl configuration file to the other master node:

[root@k8s-master2 ~]# mkdir /root/.kube/

[root@k8s-master1 work]# rsync -vaz /root/.kube/config k8s-master2:/root/.kube/

Configure kubectl subcommand completion by running the following commands in order:

[root@k8s-master1 work]# yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

source '/root/.kube/completion.bash.inc'

source $HOME/.bash_profile

Official kubectl cheat sheet (covers autocompletion):

kubectl Cheat Sheet | Kubernetes
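The `source` commands above only affect the current shell. A sketch for making completion available in new shells too, assuming bash-completion is installed as above; `persist_kubectl_completion` is a hypothetical helper that appends one line to `~/.bashrc` (or another file passed as its argument) only if that line is not already there.

```shell
#!/usr/bin/env bash
# Sketch: enable kubectl completion for future bash sessions.
# Usage: persist_kubectl_completion [RC_FILE]   (defaults to ~/.bashrc)
persist_kubectl_completion() {
  local rc="${1:-$HOME/.bashrc}"
  local line='source <(kubectl completion bash)'
  # Exact, fixed-string match so repeated runs do not add duplicates.
  grep -qxF "$line" "$rc" 2>/dev/null || echo "$line" >> "$rc"
}
```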

2.4 Deploy the kube-controller-manager component

2.4.1 Create the kube-controller-manager CSR file

[root@k8s-master1 /data/work] k8s-master1

# cat kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"192.168.7.10",

"192.168.7.11",

"192.168.7.13",

"192.168.7.99"

],

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

# Note: the hosts list contains the IPs of all kube-controller-manager nodes;

CN is system:kube-controller-manager;

O is system:kube-controller-manager;

the built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs to work.

2.4.2 Generate the kube-controller-manager certificate

[root@k8s-master1 work]#

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2022/08/10 17:46:58 [INFO] generate received request

2022/08/10 17:46:58 [INFO] received CSR

2022/08/10 17:46:58 [INFO] generating key: rsa-2048

2022/08/10 17:46:58 [INFO] encoded CSR

2022/08/10 17:46:58 [INFO] signed certificate with serial number 402122087182562792164197882344665077108635062015

2022/08/10 17:46:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2.4.3 Create the kube-controller-manager kubeconfig
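The four `kubectl config` steps below repeat the sequence already used for admin in 2.3.3, only with different names. A sketch of wrapping them in one function follows. `make_kubeconfig` is hypothetical; it assumes it is run from /data/work, where ca.pem and the `<prefix>.pem`/`<prefix>-key.pem` pairs live, and it names the context after the user (the admin kubeconfig in 2.3.3 used the context name `kubernetes` instead, which does not change how the file works).

```shell
#!/usr/bin/env bash
# Sketch: build a component kubeconfig with the four "kubectl config" steps.
# Usage: make_kubeconfig OUT_FILE USER CERT_PREFIX [SERVER]
make_kubeconfig() {
  local out="$1" user="$2" cert="$3"
  local server="${4:-https://192.168.7.10:6443}"
  # 1. cluster entry with the CA embedded
  kubectl config set-cluster kubernetes --certificate-authority=ca.pem \
    --embed-certs=true --server="$server" --kubeconfig="$out" &&
  # 2. client credentials from the component's certificate and key
  kubectl config set-credentials "$user" --client-certificate="$cert.pem" \
    --client-key="$cert-key.pem" --embed-certs=true --kubeconfig="$out" &&
  # 3. context tying the cluster and the user together
  kubectl config set-context "$user" --cluster=kubernetes --user="$user" \
    --kubeconfig="$out" &&
  # 4. make that context the default for this file
  kubectl config use-context "$user" --kubeconfig="$out"
}
```

For example, `make_kubeconfig kube-controller-manager.kubeconfig system:kube-controller-manager kube-controller-manager` runs the same four commands as the steps below.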

1. Set the cluster parameters

[root@k8s-master1 work]#

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.7.10:6443 --kubeconfig=kube-controller-manager.kubeconfig

Cluster "kubernetes" set.

2. Set the client credentials

[root@k8s-master1 work]# kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

User "system:kube-controller-manager" set.

3. Set the context parameters

[root@k8s-master1 work]#

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Context "system:kube-controller-manager" created.

4. Set the current context

[root@k8s-master1 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

Switched to context "system:kube-controller-manager".

2.4.4 Create the kube-controller-manager configuration file

[root@k8s-master1 work]# cat kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--port=0 \

--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.255.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.0.0.0/16 \

--experimental-cluster-signing-duration=87600h \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

2.4.5 Create the kube-controller-manager systemd unit file

# cat kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

2.4.6 Start the kube-controller-manager service

[root@k8s-master1 work]# cp kube-controller-manager*.pem /etc/kubernetes/ssl/

[root@k8s-master1 work]# cp kube-controller-manager.kubeconfig /etc/kubernetes/

[root@k8s-master1 work]# cp kube-controller-manager.conf /etc/kubernetes/

[root@k8s-master1 work]# cp kube-controller-manager.service /usr/lib/systemd/system/

[root@k8s-master1 work]# rsync -vaz kube-controller-manager*.pem k8s-master2:/etc/kubernetes/ssl/

[root@k8s-master1 work]# rsync -vaz kube-controller-manager.kubeconfig kube-controller-manager.conf k8s-master2:/etc/kubernetes/

[root@k8s-master1 work]# rsync -vaz kube-controller-manager.service k8s-master2:/usr/lib/systemd/system/

Start the service on k8s-master1, then run the same command on k8s-master2:

[root@k8s-master1 work]# systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl start kube-controller-manager && systemctl status kube-controller-manager

Active: active (running) since Wed 2022-08-10 18:18:05 CST; 51ms ago

2.5 Deploy the kube-scheduler component

2.5.1 Create the kube-scheduler CSR file

# cat kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.7.10",

"192.168.7.11",

"192.168.7.13",

"192.168.7.99"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

Note: the hosts list contains the IPs of all kube-scheduler nodes. CN and O are both system:kube-scheduler, and the built-in Kubernetes ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs to work.

2.5.2 Generate the kube-scheduler certificate

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2022/08/10 20:47:45 [INFO] generate received request

2022/08/10 20:47:45 [INFO] received CSR

2022/08/10 20:47:45 [INFO] generating key: rsa-2048

2022/08/10 20:47:46 [INFO] encoded CSR

2022/08/10 20:47:46 [INFO] signed certificate with serial number 579944385128158770562264376350906952867199765265

2022/08/10 20:47:46 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2.5.3 Create the kube-scheduler kubeconfig file

1. Set the cluster parameters

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.7.10:6443 --kubeconfig=kube-scheduler.kubeconfig

Cluster "kubernetes" set.

2. Set the client credentials

# kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

User "system:kube-scheduler" set.

3. Set the context parameters

# kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Context "system:kube-scheduler" created.

4. Set the current context

# kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

Switched to context "system:kube-scheduler".

2.5.4 Create the kube-scheduler configuration file

cat kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

2.5.5 Create the kube-scheduler systemd unit file

# cat kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

2.5.6 Copy the files to k8s-master2 and start the service

[root@k8s-master1 work]# cp kube-scheduler*.pem /etc/kubernetes/ssl/

[root@k8s-master1 work]# cp kube-scheduler.kubeconfig /etc/kubernetes/

[root@k8s-master1 work]# cp kube-scheduler.conf /etc/kubernetes/

[root@k8s-master1 work]# cp kube-scheduler.service /usr/lib/systemd/system/

[root@k8s-master1 work]# rsync -vaz kube-scheduler*.pem k8s-master2:/etc/kubernetes/ssl/

[root@k8s-master1 work]# rsync -vaz kube-scheduler.kubeconfig kube-scheduler.conf k8s-master2:/etc/kubernetes/

[root@k8s-master1 work]# rsync -vaz kube-scheduler.service k8s-master2:/usr/lib/systemd/system/

[root@k8s-master1 work]# systemctl daemon-reload && systemctl enable kube-scheduler && systemctl start kube-scheduler && systemctl status kube-scheduler

Active: active (running) since Wed 2022-08-10 21:10:28 CST; 52ms ago

[root@k8s-master2 ~]# systemctl daemon-reload && systemctl enable kube-scheduler && systemctl start kube-scheduler && systemctl status kube-scheduler

2.6 Deploy the kubelet component

kubelet: the kubelet on every node periodically calls the API Server's REST API to report the node's status, and the API Server writes that status to etcd. The kubelet also watches Pod information through the API Server and manages the Pods on its node accordingly, for example by creating, deleting, and updating them.

First, import the offline image archive:

# Upload pause-cordns.tar.gz to k8s-node1 and load it manually

[root@k8s-node1 ~]# docker load -i pause-cordns.tar.gz

The following steps are performed on k8s-master1.

2.6.1 Create kubelet-bootstrap.kubeconfig

[root@k8s-master1 work]# cd /data/work/

[root@k8s-master1 work]# BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

[root@k8s-master1 work]# rm -f kubelet-bootstrap.kubeconfig

[root@k8s-master1 work]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.7.10:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master1 work]# kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

[root@k8s-master1 work]# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

[root@k8s-master1 work]# kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

[root@k8s-master1 work]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

2.6.2 Create the configuration file kubelet.json

"cgroupDriver": "systemd" must match Docker's cgroup driver.

Set address to the IP address of your own k8s-node1.
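Once kubelet.json is in place on a node, you can check the cgroup-driver requirement. The following is a minimal sketch that assumes bash and GNU sed. The function names are hypothetical. It also assumes that the cgroupDriver key and its value sit on one line, as they do in the file below. `docker info --format '{{.CgroupDriver}}'` is a standard Docker CLI call:

```shell
#!/usr/bin/env bash
# kubelet_cgroup_driver (hypothetical helper): print the cgroupDriver value
# from a kubelet.json file; assumes key and value are on one line.
kubelet_cgroup_driver() {
  sed -n 's/.*"cgroupDriver"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# cgroup_drivers_match (hypothetical helper): return 0 when the driver in
# kubelet.json equals the Docker driver passed as the second argument.
cgroup_drivers_match() {
  local kubelet_json="$1" docker_driver="$2"
  [ "$(kubelet_cgroup_driver "$kubelet_json")" = "$docker_driver" ]
}

# Usage on a node:
#   cgroup_drivers_match /etc/kubernetes/kubelet.json \
#     "$(docker info --format '{{.CgroupDriver}}')" || echo "cgroup driver mismatch"
```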

# cat kubelet.json

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "192.168.7.13",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"featureGates": {

"RotateKubeletServerCertificate": true

},

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.255.0.2"]

}

2.6.3 Create the kubelet systemd unit file

[root@k8s-master1 work]# vim kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet.json \

--network-plugin=cni \

--pod-infra-container-image=k8s.gcr.io/pause:3.2 \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

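# Notes: --hostname-override: the node's display name, which must be unique in the cluster (not set in the unit above, so the kubelet uses the host name)

--network-plugin: enables CNI

--kubeconfig: this file does not exist yet; it is generated automatically after bootstrapping and is then used to connect to the apiserver

--bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

--config: the configuration parameter file

--cert-dir: the directory where the kubelet's certificates are generated

--pod-infra-container-image: the image of the infrastructure (pause) container that holds each Pod's network namespace

# Note: in kubelet.json, set address to each node's own IP address, then start the service on every worker node.

2.6.4 Copy the kubelet certificate and configuration files to the node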

[root@k8s-node1 ~]# mkdir /etc/kubernetes/ssl -p

[root@k8s-master1 work]# scp kubelet-bootstrap.kubeconfig kubelet.json k8s-node1:/etc/kubernetes/

[root@k8s-master1 work]# scp ca.pem k8s-node1:/etc/kubernetes/ssl/

[root@k8s-master1 work]# scp kubelet.service k8s-node1:/usr/lib/systemd/system/

2.6.5 Start the kubelet service on the node

[root@k8s-node1 ~]# mkdir /var/lib/kubelet

[root@k8s-node1 ~]# mkdir /var/log/kubernetes

[root@k8s-node1 ~]# systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet确认 kubelet 服务启动成功后,接着到 k8s-master1 节点上 Approve 一下 bootstrap 请求。

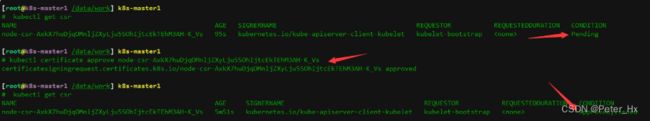

执行如下命令可以看到一个 worker 节点发送了一个 CSR 请求:

[root@k8s-master1 work]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-AxkX7huDjqOMnljZXyLju5SOhIjtcEkTEhM3AH-K_Vs 95s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

2.6.6 Approve the node's request on the master

[root@k8s-master1 work]# kubectl certificate approve node-csr-AxkX7huDjqOMnljZXyLju5SOhIjtcEkTEhM3AH-K_Vs

certificatesigningrequest.certificates.k8s.io/node-csr-AxkX7huDjqOMnljZXyLju5SOhIjtcEkTEhM3AH-K_Vs approved
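Approving each CSR by name gets tedious when several nodes bootstrap at once. The following is a minimal sketch that assumes bash and awk. pending_csrs is a hypothetical helper. It reads `kubectl get csr` table output and prints the names whose CONDITION (the last column) is Pending:

```shell
#!/usr/bin/env bash
# pending_csrs (hypothetical helper): read `kubectl get csr` output on stdin,
# skip the header row, and print the NAME of every CSR whose last column
# (CONDITION) is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on k8s-master1 (xargs -r: run nothing if there is no pending CSR, GNU xargs):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

This approves every pending request, so only use it when you know that all pending CSRs come from nodes you are adding.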

# Check the requests again

[root@k8s-master1 /data/work] k8s-master1

# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-AxkX7huDjqOMnljZXyLju5SOhIjtcEkTEhM3AH-K_Vs 5m51s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

On the master, check whether the node has joined the cluster:

[root@k8s-master1 /data/work] k8s-master1

# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady 8s v1.23.1 # Note: a STATUS of NotReady means the network plugin has not been installed yet
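The node should switch to Ready after the network plugin is deployed in 2.8. The following is a minimal sketch that assumes bash and awk. not_ready_nodes is a hypothetical helper that lists nodes whose STATUS column is anything other than exactly Ready. For a blocking wait, `kubectl wait --for=condition=Ready node/k8s-node1 --timeout=300s` does the same job with kubectl alone:

```shell
#!/usr/bin/env bash
# not_ready_nodes (hypothetical helper): read `kubectl get nodes` output on
# stdin, skip the header row, and print the NAME of every node whose STATUS
# (second column) is not exactly "Ready" (e.g. NotReady, Ready,SchedulingDisabled).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on k8s-master1:
#   kubectl get nodes | not_ready_nodes
```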

2.7 Deploy the kube-proxy component

2.7.1 Create the kube-proxy CSR file

[root@k8s-master1 work]#

# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hubei",

"L": "Wuhan",

"O": "k8s",

"OU": "system"

}

]

}

2.7.2 Generate the certificate

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2022/08/10 21:45:19 [INFO] generate received request

2022/08/10 21:45:19 [INFO] received CSR

2022/08/10 21:45:19 [INFO] generating key: rsa-2048

2022/08/10 21:45:19 [INFO] encoded CSR

2022/08/10 21:45:19 [INFO] signed certificate with serial number 418084057922153637657451382122276535476972007518

2022/08/10 21:45:19 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2.7.3 Create the kubeconfig file

[root@k8s-master1 work]# kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://192.168.7.10:6443 --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master1 work]# kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master1 work]# kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master1 work]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

2.7.4 Create the kube-proxy configuration file

[root@k8s-master1 work]# vim kube-proxy.yaml

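apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 192.168.7.13

clientConnection:

  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig

clusterCIDR: 10.0.0.0/16

healthzBindAddress: 192.168.7.13:10256

kind: KubeProxyConfiguration

metricsBindAddress: 192.168.7.13:10249

mode: "ipvs"

Note: clusterCIDR is the Pod CIDR (10.0.0.0/16, the same value as --cluster-cidr of kube-controller-manager), not the node network. bindAddress, healthzBindAddress, and metricsBindAddress use the node's own IP and must be changed on each node.

2.7.5 Create the kube-proxy systemd unit file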

[root@k8s-master1 work]#

# cat kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2.7.6 Copy the kube-proxy files to the node

[root@k8s-master1 work]# scp kube-proxy.kubeconfig kube-proxy.yaml k8s-node1:/etc/kubernetes/

[root@k8s-master1 work]# scp kube-proxy.service k8s-node1:/usr/lib/systemd/system/

2.7.7 Start the kube-proxy service

[root@k8s-node1 ~]# mkdir -p /var/lib/kube-proxy

[root@k8s-node1 ~]# systemctl daemon-reload && systemctl enable kube-proxy && systemctl start kube-proxy && systemctl status kube-proxy

Active: active (running) since Wed 2022-08-10 21:53:44 CST; 36ms ago

2.8 Deploy the Calico component

Import the offline image archives

# Upload cni.tar.gz and node.tar.gz to k8s-node1 and load them manually

[root@k8s-node1 ~]# docker load -i calico.tar.gz

Upload the calico.yaml file to the /data/work directory on k8s-master1.
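In the upstream calico.yaml, the default IP pool comes from the CALICO_IPV4POOL_CIDR environment variable of the calico-node container. This variable is commented out by default, and the manifest's own comment says the pool should fall within --cluster-cidr. Before applying the file, it is worth checking that it matches the Pod CIDR 10.0.0.0/16.

The following is a minimal sketch that assumes bash, awk, and the usual `- name:` / `value:` layout. calico_pool_cidr is a hypothetical helper. It prints the value of the first uncommented CALICO_IPV4POOL_CIDR entry, and empty output means the variable is not set:

```shell
#!/usr/bin/env bash
# calico_pool_cidr (hypothetical helper): print the value that follows the
# first uncommented "- name: CALICO_IPV4POOL_CIDR" line in a manifest.
# Commented-out entries (lines starting with '#') are ignored.
calico_pool_cidr() {
  awk '
    /^[ \t]*-[ \t]*name:[ \t]*CALICO_IPV4POOL_CIDR[ \t]*$/ { found = 1; next }
    found && /value:/ {
      v = $0
      sub(/.*value:[ \t]*/, "", v)   # drop everything up to "value:"
      gsub(/"/, "", v)               # strip the quotes
      gsub(/[ \t]+$/, "", v)         # strip trailing blanks
      print v
      exit
    }
  ' "$1"
}

# Usage on k8s-master1, before kubectl apply:
#   [ "$(calico_pool_cidr calico.yaml)" = "10.0.0.0/16" ] || echo "check CALICO_IPV4POOL_CIDR"
```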

[root@k8s-master1 work]# kubectl apply -f calico.yaml

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready 30m v1.23.1

[root@k8s-master1 /data/k8s/init-k8s] k8s-master1

# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-677cd97c8d-jmtsh 1/1 Running 0 40s

calico-node-vc8gz 1/1 Running 0 40s

2.9 Deploy the CoreDNS component

Create the CoreDNS resources:

[root@k8s-master1 work]# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created
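The kubelet gives Pods the DNS server listed in clusterDNS of kubelet.json (10.255.0.2 here), so the kube-dns Service created by coredns.yaml must use that same clusterIP. The following is a minimal sketch that assumes bash and GNU sed. kubelet_cluster_dns is a hypothetical helper that prints the first address in clusterDNS. It assumes the key and its list sit on one line, as they do in kubelet.json above. The jsonpath query in the usage line is standard kubectl:

```shell
#!/usr/bin/env bash
# kubelet_cluster_dns (hypothetical helper): print the first address in the
# "clusterDNS" list of a kubelet.json file; assumes the list is on one line.
kubelet_cluster_dns() {
  sed -n 's/.*"clusterDNS"[[:space:]]*:[[:space:]]*\[[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Usage: run the first command on k8s-node1 and the second on k8s-master1;
# both should print the same address (10.255.0.2 in this guide).
#   kubelet_cluster_dns /etc/kubernetes/kubelet.json
#   kubectl -n kube-system get svc kube-dns -o jsonpath='{.spec.clusterIP}'
```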

# Check the Pods again

[root@k8s-master1 /data/k8s/init-k8s] k8s-master1

# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-677cd97c8d-jmtsh 1/1 Running 0 3m44s

calico-node-vc8gz 1/1 Running 0 3m44s

coredns-86d879486-9sgwk 1/1 Running 0 43s

2.10 Check the cluster status

With the deployment complete, check the node status again:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready 41m v1.23.1

III. Cluster component verification tests

3.1 Grant permissions to the system user kubernetes

Grant them with a clusterrolebinding:

[root@k8s-master1 ~]# kubectl create clusterrolebinding kubernetes-kubectl --clusterrole=cluster-admin --user=kubernetes

3.2 Test deploying a Tomcat service on the k8s cluster

# Upload tomcat.tar.gz and busybox-1-28.tar.gz to k8s-node1 and load them manually

Upload and load:

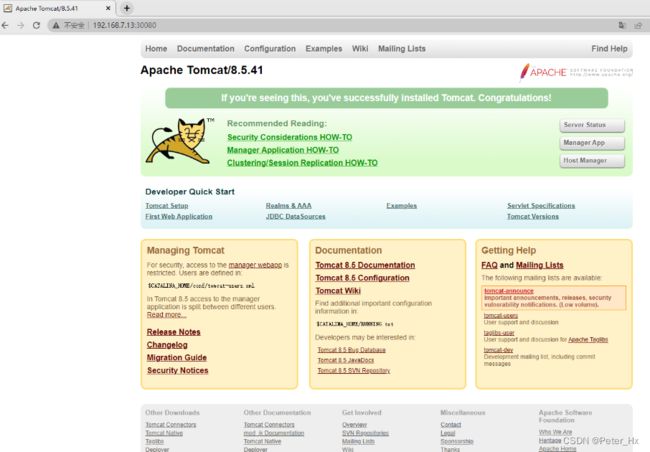

[root@k8s-node1 ~]# docker load -i tomcat.tar.gz && docker load -i busybox-1-28.tar.gz

Create the Tomcat resources:

[root@k8s-master1 ~]# kubectl apply -f tomcat.yaml

# kubectl get pods

NAME READY STATUS RESTARTS AGE

demo-pod 2/2 Running 0 24s

[root@k8s-master1 /data/k8s/init-k8s] k8s-master1

# kubectl apply -f tomcat-service.yaml

service/tomcat created

[root@k8s-master1 /data/k8s/init-k8s] k8s-master1

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.255.0.1 443/TCP 5h46m

tomcat NodePort 10.255.185.15 8080:30080/TCP 12s

In a browser, open port 30080 on k8s-node1's IP address to reach the Tomcat page.

3.3 Verify that CoreDNS works

Start a temporary busybox container for the test:

[root@k8s-master1 ~]# kubectl run busybox --image=busybox:1.28 --restart=Never --rm -it -- sh

/ # ping www.baidu.com

PING www.baidu.com (39.156.66.18): 56 data bytes

64 bytes from 39.156.66.14: seq=2 ttl=127 time=6.409 ms

# The output above shows that the container can reach the network

/ # nslookup kubernetes.default.svc.cluster.local

Server: 10.255.0.2

Address 1: 10.255.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default.svc.cluster.local

Address 1: 10.255.0.1 kubernetes.default.svc.cluster.local

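# Notes:

busybox must be the specified 1.28 version, not the latest one; with the latest version, nslookup fails to resolve the DNS name and IP.

10.255.0.2 is the clusterIP of CoreDNS, which shows that CoreDNS is configured correctly: the names of in-cluster Services are resolved by CoreDNS.

IV. Make the k8s apiserver highly available

Install keepalived + nginx

1. Install the nginx primary/backup pair:

Install nginx on k8s-master1 (primary) and k8s-master2 (backup); the same command also installs keepalived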

[root@k8s-master1 ~]# yum install nginx keepalived -y

[root@k8s-master2 ~]# yum install nginx keepalived -y

2. Edit the nginx configuration file; master1 and master2 use the same configuration

Add the 12 lines marked below to /etc/nginx/nginx.conf on k8s-master1:

# cat /etc/nginx/nginx.conf

# For more information on configuration, see:

# * Official English Documentation: http://nginx.org/en/docs/

# * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# ------------------ add the following 12-line stream block ------------------

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.7.10:6443;

server 192.168.7.11:6443;

}

server {

listen 16443;

proxy_pass k8s-apiserver;

}

}

# ------------------------------------------------------------------------------

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 4096;

include /etc/nginx/mime.types;

default_type application/octet-stream;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

include /etc/nginx/conf.d/*.conf;

server {

listen 80;

listen [::]:80;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

error_page 404 /404.html;

location = /404.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

}

After editing, check the syntax. If you get the error below, the stream module is missing; install it with yum:

# nginx -t

nginx: [emerg] unknown directive "stream" in /etc/nginx/nginx.conf:17

nginx: configuration file /etc/nginx/nginx.conf test failed

# Install the module with yum

yum -y install nginx-mod-stream   # or: yum -y install nginx-all-modules.noarch

3. keepalived configuration

Primary keepalived:

[root@k8s-master1 ~]# cat /etc/keepalived/keepalived.conf

# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.7.99/24

}

track_script {

check_nginx

}

}

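# vrrp_script: the script that checks whether nginx is working (keepalived decides whether to fail over based on nginx's state)

# virtual_ipaddress: the virtual IP (VIP)

# interface: change eth0 to the name of this node's actual network interface

[root@k8s-master1 ~]# cat /etc/keepalived/check_nginx.sh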

#!/bin/bash

count=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

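[root@k8s-master1 ~]# chmod +x /etc/keepalived/check_nginx.sh

k8s-master2 needs the same check_nginx.sh, also made executable, because the backup configuration below references it.

Backup keepalived: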

[root@k8s-master2 ~]# cat /etc/keepalived/keepalived.conf

# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.7.99/24

}

track_script {

check_nginx

}

}

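# Note: keepalived decides whether to fail over based on the script's exit code (0 means healthy, non-zero means unhealthy). The check_nginx.sh above triggers the failover by stopping keepalived itself when no nginx process is found.

4. Start the services: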

[root@k8s-master1 ~]# systemctl start nginx && systemctl start keepalived && systemctl enable nginx keepalived

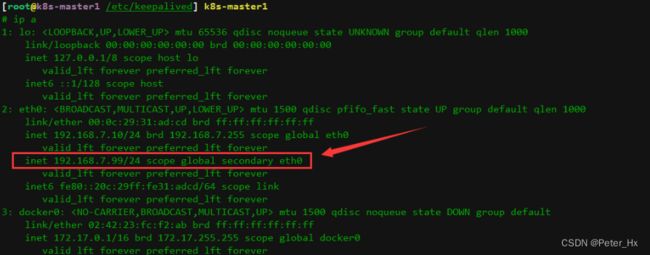

[root@k8s-master2 ~]# systemctl daemon-reload && systemctl start nginx && systemctl start keepalived && systemctl enable nginx keepalived

Be sure to check that keepalived is running normally and that the VIP has been bound.

5. Test whether the VIP is bound

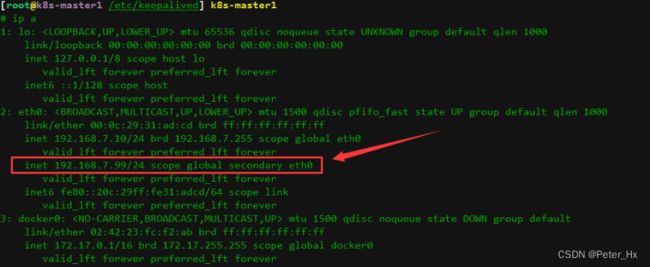

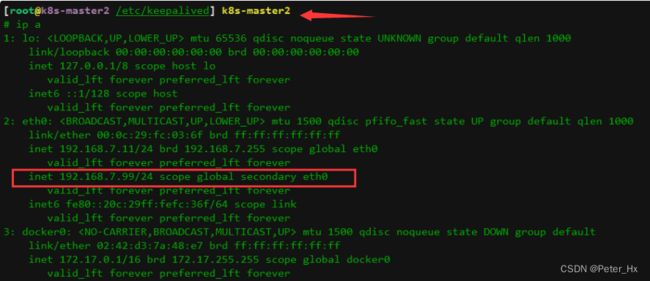
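Besides checking the screenshots, you can script the VIP check. The following is a minimal sketch that assumes bash, awk, and iproute2. has_vip is a hypothetical helper. It reads `ip -4 addr show` output and succeeds only if the given address is one of the listed inet addresses:

```shell
#!/usr/bin/env bash
# has_vip (hypothetical helper): read `ip -4 addr show` output on stdin and
# exit 0 only if the given IPv4 address appears on an "inet" line.
has_vip() {
  awk -v vip="$1" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }
  '
}

# Usage on k8s-master1 and k8s-master2 (only the current MASTER should succeed):
#   ip -4 addr show eth0 | has_vip 192.168.7.99 && echo "VIP is bound here"
```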

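6. Test keepalived:

Stop nginx on k8s-master1; the VIP should move to k8s-master2.

[root@k8s-master1 ~]# service nginx stop

Then check on k8s-master2 that the VIP is now bound there.

At this point, all Worker Node components still connect to k8s-master1. Unless they connect through the VIP and the load balancer, the master remains a single point of failure.

So the next step is to edit the component configuration files on every Worker Node (the nodes listed by kubectl get node), replacing the original 192.168.7.10:6443 with 192.168.7.99:16443 (the VIP and the nginx listening port).

Run on every Worker Node: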

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet-bootstrap.kubeconfig

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet.json

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet.kubeconfig

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kube-proxy.yaml

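[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kube-proxy.kubeconfig

kubelet.json and kube-proxy.yaml do not contain the apiserver address, so the sed commands on those two files change nothing; the kubeconfig files are what matter. Finally, restart kubelet and kube-proxy: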

[root@k8s-node1 ~]# systemctl restart kubelet kube-proxy

With this, high availability for the master kube-apiserver is in place.
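To confirm that the sed commands took effect, list the server entries in the node's kubeconfig files. Each entry should now read https://192.168.7.99:16443. The following is a minimal sketch that assumes bash and awk. kubeconfig_servers is a hypothetical helper:

```shell
#!/usr/bin/env bash
# kubeconfig_servers (hypothetical helper): for each kubeconfig file given,
# print "<file>: <URL>" for every "server:" line it contains.
kubeconfig_servers() {
  local f
  for f in "$@"; do
    awk -v f="$f" '$1 == "server:" { print f ": " $2 }' "$f"
  done
}

# Usage on every worker node:
#   kubeconfig_servers /etc/kubernetes/kubelet-bootstrap.kubeconfig \
#     /etc/kubernetes/kubelet.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig
```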

erver 192.168.200.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.7.99/24

}

track_script {

check_nginx

}

}

# 注:keepalived 根据脚本返回状态码(0 为工作正常,非 0 不正常)判断是否故障转移。4、启动服务:

[root@k8s-master1 ~]# systemctl start nginx && systemctl start keepalived && systemctl enable nginx keepalived

[root@k8s-master2 ~]# systemctl daemon-reload && systemctl start nginx && systemctl start keepalived && systemctl enable nginx keepalived一定要查看一下keepalived服务是否正常,是否有vip地址绑定成功

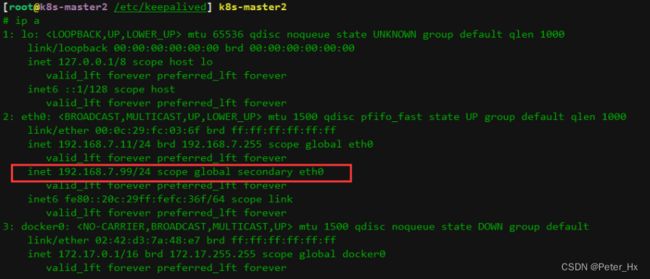

5、测试 vip 是否绑定成功

① 先查看是否在master1节点上绑定了

6、测试 keepalived:

停掉 k8s-master1 上的 nginx。vip 会漂移到 k8s-master2

[root@k8s-master1 ~]# service nginx stop

目前所有的 Worker Node 组件连接都还是 k8s-master1 Node,如果不改为连接 VIP 走负载均衡器,那么 Master 还是单点故障。

因此接下来就是要改所有 Worker Node(kubectl get node 命令查看到的节点)组件配置文件,由原来 192.168.7.10 修改为 192.168.7.99(VIP)。

在所有 Worker Node 执行:

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet-bootstrap.kubeconfig

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet.json

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kubelet.kubeconfig

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kube-proxy.yaml

[root@k8s-node1 ~]# sed -i 's#192.168.7.10:6443#192.168.7.99:16443#' /etc/kubernetes/kube-proxy.kubeconfig最后需要重启一下kube-proxy组件

[root@k8s-node1 ~]# systemctl restart kubelet kube-proxy这样master的kube-apiserver高可用就安装好了