(8) The Ultimate ELK Cluster Setup Series: One-Command Deployment with docker-compose (Final Part)

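Introduction

Earlier installments each covered one part of the ELK cluster in detail. This final installment brings everything together: every service is defined in a single docker-compose configuration file, so the whole stack can be started and managed with docker-compose commands. We use recent images, with the ELK components at version 7.12.0. This installment covers almost every service from the ELK architecture described in part one, and you can add or remove services to suit your needs. For network security, avoid exposing the ports of core services. For example, you can publish only the nginx port and let nginx forward requests, leaving every other service reachable only on the internal network. I do not walk through that hardening here; configure it as your requirements dictate. With that, let's begin the final build of our ELK cluster. I hope you find it helpful.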

Main Content

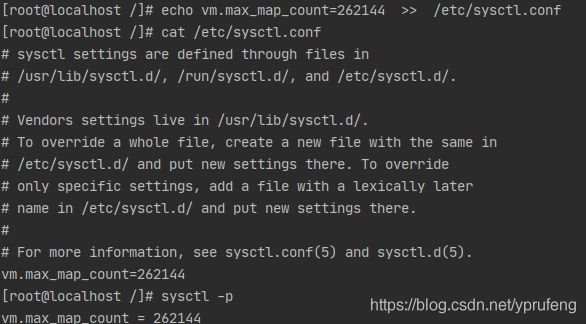

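- Raise the kernel's vm.max_map_count limit

Note: vm.max_map_count caps the number of memory-mapped areas a process may use. The Linux default is 65530, but Elasticsearch requires at least 262144, so the ES cluster will not start until we raise it.

Commands: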

echo vm.max_map_count=262144 >> /etc/sysctl.conf

sysctl -p
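The echo command above appends a new line on every run. A minimal sketch of an idempotent variant follows; the function name `ensure_sysctl_setting` and its optional file argument are illustrative assumptions, not part of the original steps.

```shell
#!/bin/sh
# Hypothetical helper: make sure "KEY=VALUE" appears in a sysctl config file,
# appending it only when that exact line is not already present.
# Usage: ensure_sysctl_setting KEY VALUE [FILE]
ensure_sysctl_setting() {
    key="$1"
    value="$2"
    file="${3:-/etc/sysctl.conf}"

    # -x matches the whole line, -F treats the pattern as a fixed string,
    # -q and -s keep grep silent (including when the file does not exist yet).
    if grep -qsxF -- "${key}=${value}" "$file"; then
        echo "already set: ${key}=${value}"
    else
        echo "${key}=${value}" >> "$file"
        echo "added: ${key}=${value}"
    fi
}
```

Run as root, `ensure_sysctl_setting vm.max_map_count 262144 && sysctl -p` applies the setting, and `sysctl -n vm.max_map_count` can then be used to confirm the value the kernel reports.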

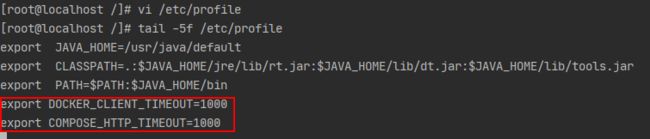

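- Increase the docker-compose startup timeout

Note: When docker-compose starts many containers at once, they may not all come up within the default 60-second timeout, and the whole startup then fails. Here we raise the timeout to 1000 seconds. Edit the system environment file /etc/profile with vi, append the two variables below to the end of the file, then run source /etc/profile so that the new settings take effect.

Variables: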

export DOCKER_CLIENT_TIMEOUT=1000

export COMPOSE_HTTP_TIMEOUT=1000
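If you would rather not change /etc/profile, the same two variables can be set for a single invocation. The sketch below assumes you run it from the directory that holds elk-cluster.yml; the wrapper name `elk_compose` is hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper: run docker-compose against elk-cluster.yml with both
# timeouts raised to 1000 seconds for this one invocation only.
elk_compose() {
    DOCKER_CLIENT_TIMEOUT=1000 COMPOSE_HTTP_TIMEOUT=1000 \
        docker-compose -f elk-cluster.yml "$@"
}
```

With this in place, `elk_compose up -d` and `elk_compose ps` behave like the plain docker-compose commands used later in this post, without touching the system-wide environment.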

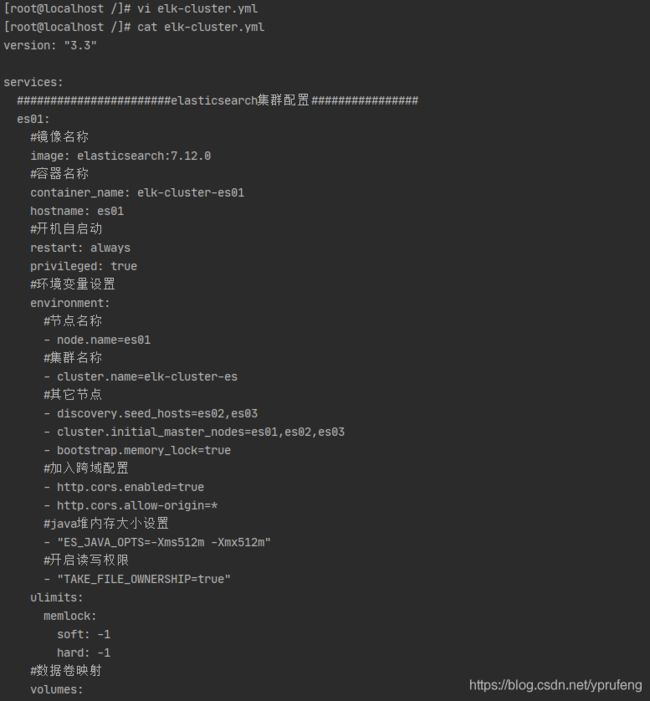

- Create the elk-cluster.yml configuration file

Note: Use vi to create a file named elk-cluster.yml in the root directory with the following content:

    # elk-cluster configuration file
    version: "3.3"

    services:
      ####################### elasticsearch cluster ################
      es01:
        # image name
        image: elasticsearch:7.12.0
        # container name
        container_name: elk-cluster-es01
        hostname: es01
        # restart automatically, including at boot
        restart: always
        privileged: true
        # environment variables
        environment:
          # node name
          - node.name=es01
          # cluster name
          - cluster.name=elk-cluster-es
          # other nodes
          - discovery.seed_hosts=es02,es03
          - cluster.initial_master_nodes=es01,es02,es03
          - bootstrap.memory_lock=true
          # enable CORS
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          # JVM heap size
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          # grant read/write access to the mounted directories
          - "TAKE_FILE_OWNERSHIP=true"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        # volume mappings
        volumes:
          - /elk/elasticsearch/01/data:/usr/share/elasticsearch/data
          - /elk/elasticsearch/01/logs:/usr/share/elasticsearch/logs
        # port mappings
        ports:
          - 9200:9200
        # network
        networks:
          - elk
      es02:
        image: elasticsearch:7.12.0
        container_name: elk-cluster-es02
        hostname: es02
        restart: always
        privileged: true
        environment:
          - node.name=es02
          - cluster.name=elk-cluster-es
          - discovery.seed_hosts=es01,es03
          - cluster.initial_master_nodes=es01,es02,es03
          - bootstrap.memory_lock=true
          # enable CORS
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - "TAKE_FILE_OWNERSHIP=true"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - /elk/elasticsearch/02/data:/usr/share/elasticsearch/data
          - /elk/elasticsearch/02/logs:/usr/share/elasticsearch/logs
        # network
        networks:
          - elk
      es03:
        image: elasticsearch:7.12.0
        container_name: elk-cluster-es03
        hostname: es03
        restart: always
        privileged: true
        environment:
          - node.name=es03
          - cluster.name=elk-cluster-es
          - discovery.seed_hosts=es01,es02
          - cluster.initial_master_nodes=es01,es02,es03
          - bootstrap.memory_lock=true
          # enable CORS
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - "TAKE_FILE_OWNERSHIP=true"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        volumes:
          - /elk/elasticsearch/03/data:/usr/share/elasticsearch/data
          - /elk/elasticsearch/03/logs:/usr/share/elasticsearch/logs
        # network
        networks:
          - elk

      ##################### kibana ####################################
      kibana:
        image: kibana:7.12.0
        container_name: elk-cluster-kibana
        hostname: kibana
        restart: always
        environment:
          # Elasticsearch address
          ELASTICSEARCH_HOSTS: "http://es01:9200"
          # Kibana UI language: en, zh-CN, ja-JP
          I18N_LOCALE: "en"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        # port mappings
        ports:
          - 5601:5601
        networks:
          - elk
        depends_on:
          - es01
          - es02
          - es03

      ##################### nginx ####################################
      nginx:
        image: nginx:stable-alpine-perl
        container_name: elk-cluster-nginx
        hostname: nginx
        restart: always
        ulimits:
          memlock:
            soft: -1
            hard: -1
        # port mappings
        ports:
          - 80:80
        networks:
          - elk
        depends_on:
          - kibana

      ##################### logstash ####################################
      logstash01:
        image: logstash:7.12.0
        container_name: elk-cluster-logstash01
        hostname: logstash01
        restart: always
        environment:
          # Elasticsearch address
          - monitoring.elasticsearch.hosts="http://es01:9200"
        ports:
          - 9600:9600
          - 5044:5044
        networks:
          - elk
        depends_on:
          - es01
          - es02
          - es03
      logstash02:
        image: logstash:7.12.0
        container_name: elk-cluster-logstash02
        hostname: logstash02
        restart: always
        environment:
          # Elasticsearch address
          - monitoring.elasticsearch.hosts="http://es01:9200"
        ports:
          - 9601:9600
          - 5045:5044
        networks:
          - elk
        depends_on:
          - es01
          - es02
          - es03
      logstash03:
        image: logstash:7.12.0
        container_name: elk-cluster-logstash03
        hostname: logstash03
        restart: always
        environment:
          # Elasticsearch address
          - monitoring.elasticsearch.hosts="http://es01:9200"
        ports:
          - 9602:9600
          - 5046:5044
        networks:
          - elk
        depends_on:
          - es01
          - es02
          - es03

      ##################### kafka cluster ####################################
      # zookeeper cluster
      zk01:
        image: zookeeper:3.7.0
        restart: always
        container_name: elk-cluster-zk01
        hostname: zk01
        ports:
          - 2181:2181
        networks:
          - elk
        volumes:
          - "/elk/zookeeper/zk01/data:/data"
          - "/elk/zookeeper/zk01/logs:/datalog"
        environment:
          ZOO_MY_ID: 1
          ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zk02:2888:3888;2181 server.3=zk03:2888:3888;2181
        depends_on:
          - es01
          - es02
          - es03
      zk02:
        image: zookeeper:3.7.0
        restart: always
        container_name: elk-cluster-zk02
        hostname: zk02
        ports:
          - 2182:2181
        networks:
          - elk
        volumes:
          - "/elk/zookeeper/zk02/data:/data"
          - "/elk/zookeeper/zk02/logs:/datalog"
        environment:
          ZOO_MY_ID: 2
          ZOO_SERVERS: server.1=zk01:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zk03:2888:3888;2181
        depends_on:
          - es01
          - es02
          - es03
      zk03:
        image: zookeeper:3.7.0
        restart: always
        container_name: elk-cluster-zk03
        hostname: zk03
        ports:
          - 2183:2181
        networks:
          - elk
        volumes:
          - "/elk/zookeeper/zk03/data:/data"
          - "/elk/zookeeper/zk03/logs:/datalog"
        environment:
          ZOO_MY_ID: 3
          ZOO_SERVERS: server.1=zk01:2888:3888;2181 server.2=zk02:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
        depends_on:
          - es01
          - es02
          - es03
      # kafka cluster
      kafka01:
        image: wurstmeister/kafka:2.13-2.7.0
        restart: always
        container_name: elk-cluster-kafka01
        hostname: kafka01
        ports:
          - "9091:9092"
          - "9991:9991"
        networks:
          - elk
        depends_on:
          - zk01
          - zk02
          - zk03
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ADVERTISED_HOST_NAME: kafka01
          KAFKA_ADVERTISED_PORT: 9091
          KAFKA_HOST_NAME: kafka01
          KAFKA_ZOOKEEPER_CONNECT: zk01:2181,zk02:2181,zk03:2181
          KAFKA_LISTENERS: PLAINTEXT://kafka01:9092
          # replace 192.168.23.134 with your own host IP
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.23.134:9091
          JMX_PORT: 9991
          KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka01 -Dcom.sun.management.jmxremote.port=9991 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"
        volumes:
          - "/elk/kafka/kafka01/:/kafka"
      kafka02:
        image: wurstmeister/kafka:2.13-2.7.0
        restart: always
        container_name: elk-cluster-kafka02
        hostname: kafka02
        ports:
          - "9092:9092"
          - "9992:9992"
        networks:
          - elk
        depends_on:
          - zk01
          - zk02
          - zk03
        environment:
          KAFKA_BROKER_ID: 2
          KAFKA_ADVERTISED_HOST_NAME: kafka02
          KAFKA_ADVERTISED_PORT: 9092
          KAFKA_HOST_NAME: kafka02
          KAFKA_ZOOKEEPER_CONNECT: zk01:2181,zk02:2181,zk03:2181
          KAFKA_LISTENERS: PLAINTEXT://kafka02:9092
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.23.134:9092
          JMX_PORT: 9992
          KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka02 -Dcom.sun.management.jmxremote.port=9992 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"
        volumes:
          - "/elk/kafka/kafka02/:/kafka"
      kafka03:
        image: wurstmeister/kafka:2.13-2.7.0
        restart: always
        container_name: elk-cluster-kafka03
        hostname: kafka03
        ports:
          - "9093:9092"
          - "9993:9993"
        networks:
          - elk
        depends_on:
          - zk01
          - zk02
          - zk03
        environment:
          KAFKA_BROKER_ID: 3
          KAFKA_ADVERTISED_HOST_NAME: kafka03
          KAFKA_ADVERTISED_PORT: 9093
          KAFKA_HOST_NAME: kafka03
          KAFKA_ZOOKEEPER_CONNECT: zk01:2181,zk02:2181,zk03:2181
          KAFKA_LISTENERS: PLAINTEXT://kafka03:9092
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.23.134:9093
          JMX_PORT: 9993
          KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka03 -Dcom.sun.management.jmxremote.port=9993 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"
        volumes:
          - "/elk/kafka/kafka3/:/kafka"
      # kafka management tool
      'kafka-manager':
        container_name: elk-cluster-kafka-manager
        image: sheepkiller/kafka-manager:stable
        restart: always
        ports:
          - 9000:9000
        networks:
          - elk
        depends_on:
          - kafka01
          - kafka02
          - kafka03
        environment:
          KM_VERSION: 1.3.3.18
          ZK_HOSTS: zk01:2181,zk02:2181,zk03:2181
      # kafka monitoring tool
      'kafka-offset-monitor':
        container_name: elk-cluster-kafka-offset-monitor
        image: 564239555/kafkaoffsetmonitor:latest
        restart: always
        volumes:
          - /elk/kafkaoffsetmonitor/conf:/kafkaoffsetmonitor
        ports:
          - 9001:8080
        networks:
          - elk
        depends_on:
          - kafka01
          - kafka02
          - kafka03
        environment:
          ZK_HOSTS: zk01:2181,zk02:2181,zk03:2181
          KAFKA_BROKERS: kafka01:9092,kafka02:9092,kafka03:9092
          REFRESH_SECENDS: 10
          RETAIN_DAYS: 2

      ####################### filebeat ################
      filebaet:
        # image name
        image: elastic/filebeat:7.12.0
        # container name
        container_name: elk-cluster-filebaet
        hostname: filebaet
        # restart automatically, including at boot
        restart: always
        volumes:
          - /elk/filebeat/data:/elk/logs
        # privileges
        privileged: true
        # user
        user: root
        # environment variables
        environment:
          # grant read/write access to the mounted directories
          - "TAKE_FILE_OWNERSHIP=true"
        ulimits:
          memlock:
            soft: -1
            hard: -1
        # network
        networks:
          - elk
        depends_on:
          - kafka01
          - kafka02
          - kafka03

    networks:
      elk:
        driver: bridge
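Because a file this long is easy to mis-indent, it can help to let docker-compose parse it before starting anything. The following is a minimal sketch; the function name `check_compose_file` is hypothetical, while `config -q` is the docker-compose subcommand that validates a file without printing it.

```shell
#!/bin/sh
# Hypothetical pre-flight check: parse the Compose file without starting
# any container, and report whether docker-compose accepted it.
check_compose_file() {
    file="${1:-elk-cluster.yml}"
    if docker-compose -f "$file" config -q; then
        echo "OK: $file parses cleanly"
    else
        echo "ERROR: $file failed validation" >&2
        return 1
    fi
}
```

After a successful check, `docker-compose -f elk-cluster.yml config --services` lists the service names, which is a quick way to confirm that none were dropped by an indentation slip.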

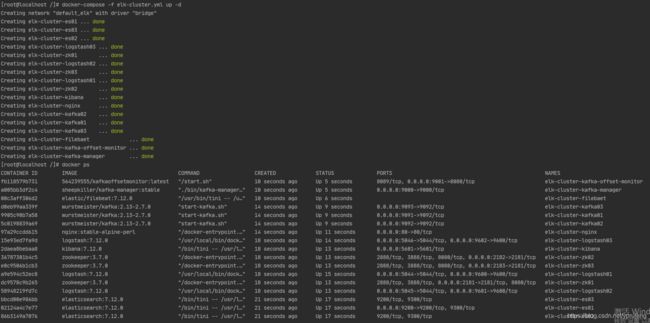

- Start the ELK cluster

Command: docker-compose -f elk-cluster.yml up -d

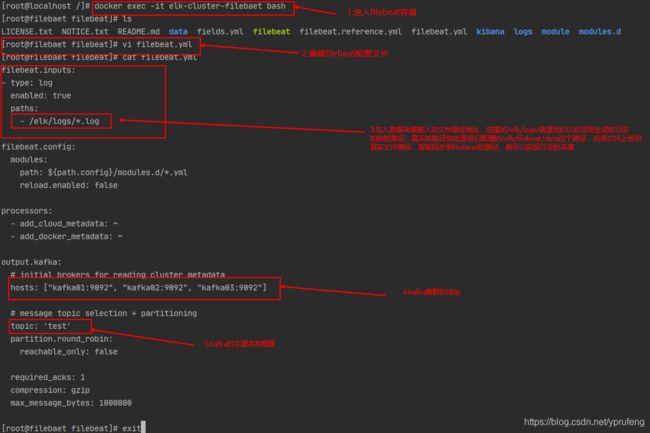

- Edit the filebeat configuration file

    filebeat.inputs:
      - type: log
        enabled: true
        paths:
          - /elk/logs/*.log

    filebeat.config:
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    processors:
      - add_cloud_metadata: ~
      - add_docker_metadata: ~

    output.kafka:
      # initial brokers for reading cluster metadata
      hosts: ["kafka01:9092", "kafka02:9092", "kafka03:9092"]
      # message topic selection + partitioning
      topic: 'test'
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      compression: gzip
      max_message_bytes: 1000000
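One possible way to get this file into the container is to edit it on the host and copy it in. This is only a sketch under assumptions: the local file name filebeat.yml and the helper name `push_filebeat_config` are hypothetical, and /usr/share/filebeat/filebeat.yml is the config path used by the official Filebeat image. The container name keeps the spelling used in elk-cluster.yml.

```shell
#!/bin/sh
# Hypothetical helper: copy a local filebeat.yml into the Filebeat container
# and restart it so the new configuration is read.
# Usage: push_filebeat_config [LOCAL_FILE] [CONTAINER]
push_filebeat_config() {
    src="${1:-filebeat.yml}"
    container="${2:-elk-cluster-filebaet}"   # spelled as in elk-cluster.yml

    # Filebeat rejects a config file that group or others may write to.
    chmod go-w "$src" || return 1
    docker cp "$src" "${container}:/usr/share/filebeat/filebeat.yml" || return 1
    docker restart "$container"
}
```

Afterwards, `docker logs elk-cluster-filebaet` is a reasonable place to look for configuration errors.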

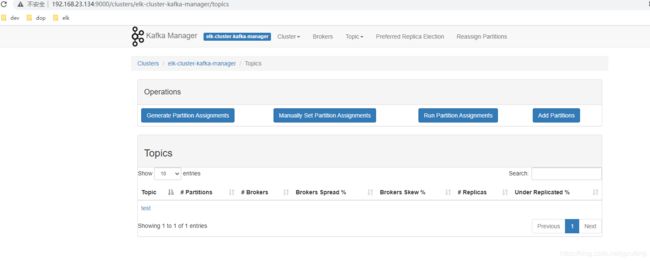

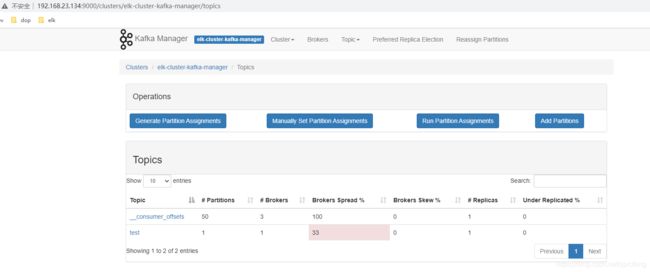

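- Create the Kafka topic test

Note: For the detailed steps, see the topic-creation section of my earlier post, (6) The Ultimate ELK Cluster Setup Series: Kafka Cluster Setup.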
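For readers without part six at hand, here is one command-line route, offered as a sketch rather than as the method used in that post. The helper name `create_kafka_topic` and the choice of 3 partitions with a replication factor of 3 are assumptions. The two JMX variables are unset first because the broker container defines them, and a CLI tool that inherits them would try to bind the broker's JMX port.

```shell
#!/bin/sh
# Hypothetical helper: create a topic by running kafka-topics.sh inside one
# of the broker containers defined in elk-cluster.yml.
# Usage: create_kafka_topic [TOPIC] [CONTAINER]
create_kafka_topic() {
    topic="${1:-test}"
    container="${2:-elk-cluster-kafka01}"

    # Partition and replica counts are illustrative; adjust them as needed.
    cmd="kafka-topics.sh --create --zookeeper zk01:2181,zk02:2181,zk03:2181"
    cmd="$cmd --replication-factor 3 --partitions 3 --topic $topic"

    # Clear the inherited JMX settings so the tool does not clash with the broker.
    docker exec "$container" sh -c "unset JMX_PORT KAFKA_JMX_OPTS; $cmd"
}
```

Calling `create_kafka_topic test` matches the topic name that the filebeat and logstash configurations in this post expect.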

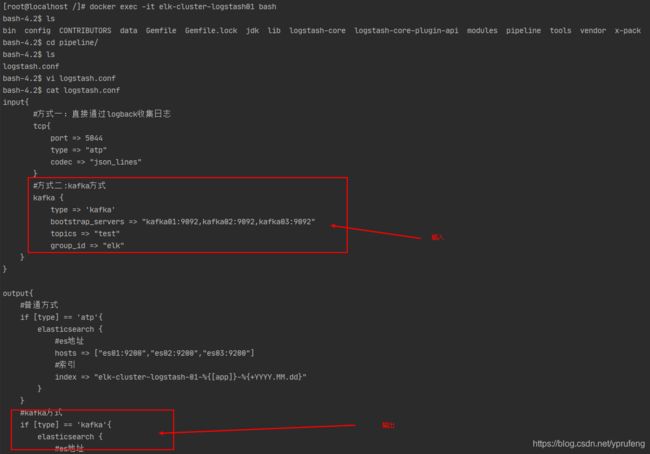

- Edit the logstash configuration file

Note: We use elk-cluster-logstash01 as the example here; the other logstash instances are configured the same way.

    input {
      # Option 1: receive logs directly from logback
      tcp {
        port => 5044
        type => "atp"
        codec => "json_lines"
      }
      # Option 2: consume from Kafka
      kafka {
        type => 'kafka'
        bootstrap_servers => "kafka01:9092,kafka02:9092,kafka03:9092"
        topics => "test"
        group_id => "elk"
      }
    }

    output {
      # events received directly over tcp
      if [type] == 'atp' {
        elasticsearch {
          # Elasticsearch addresses
          hosts => ["es01:9200","es02:9200","es03:9200"]
          # index name
          index => "elk-cluster-logstash-01-%{[app]}-%{+YYYY.MM.dd}"
        }
      }
      # events consumed from Kafka
      if [type] == 'kafka' {
        elasticsearch {
          # Elasticsearch addresses
          hosts => ["es01:9200","es02:9200","es03:9200"]
          # index name
          index => "elk-atp-%{+YYYY.MM.dd}"
        }
      }
    }
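To exercise the tcp input without a logback application, a single JSON line can be sent by hand. This is a rough sketch that assumes nc (netcat) is installed on the host; the function name `send_test_event` and the sample field values are made up for illustration. The event carries an app field because the first index pattern above interpolates it.

```shell
#!/bin/sh
# Hypothetical smoke test: send one newline-terminated JSON event to the
# Logstash tcp input (the json_lines codec expects one JSON object per line).
# Usage: send_test_event [HOST] [PORT] [APP]
send_test_event() {
    host="${1:-localhost}"
    port="${2:-5044}"
    app="${3:-demo}"

    # -w 2 makes nc give up after two idle seconds instead of hanging.
    printf '{"app":"%s","message":"hello from nc"}\n' "$app" | nc -w 2 "$host" "$port"
}
```

If the pipeline is active, an event sent with the defaults should end up in an index whose name starts with elk-cluster-logstash-01-demo-.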

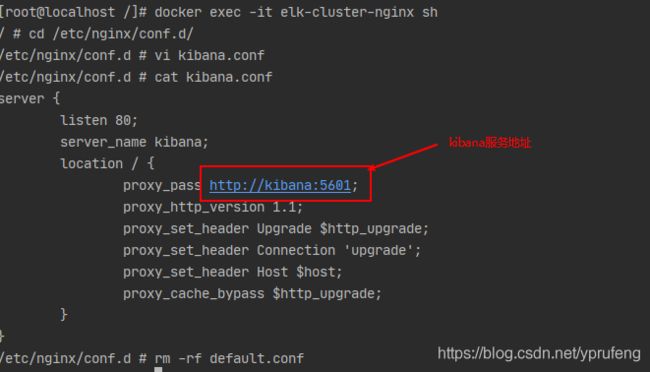

- Edit the nginx configuration

    server {
        listen 80;
        server_name kibana;

        location / {
            proxy_pass http://kibana:5601;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
        }
    }
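Before relying on the proxy, it is worth letting nginx check the file. The sketch below assumes the server block has already been saved inside the container (for example under /etc/nginx/conf.d/, the usual include directory of the official image); the helper name `reload_nginx` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: validate the nginx configuration inside the container
# and reload nginx only if the syntax check passes.
# Usage: reload_nginx [CONTAINER]
reload_nginx() {
    container="${1:-elk-cluster-nginx}"

    # nginx -t tests the configuration files without applying them.
    docker exec "$container" nginx -t || return 1
    # nginx -s reload asks the running master process to re-read its config.
    docker exec "$container" nginx -s reload
}
```

Stopping at a failed syntax check keeps a typo in the server block from taking the proxy down.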

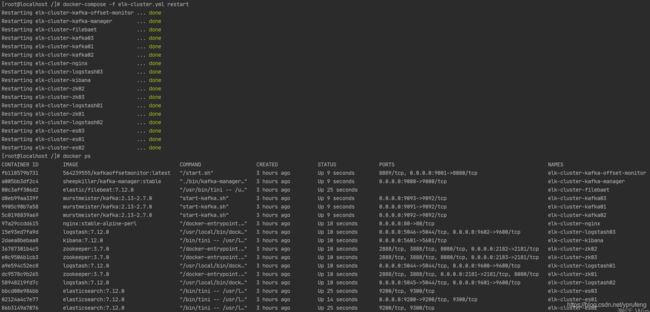

- Restart the services

Command: docker-compose -f elk-cluster.yml restart

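- Verification

Note: Here we collect logs through filebeat to demonstrate the whole collection pipeline end to end. We also write to the logstash collector directly through the logback plugin, to confirm that data from both paths reaches the ES cluster.

(1) Verify service status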

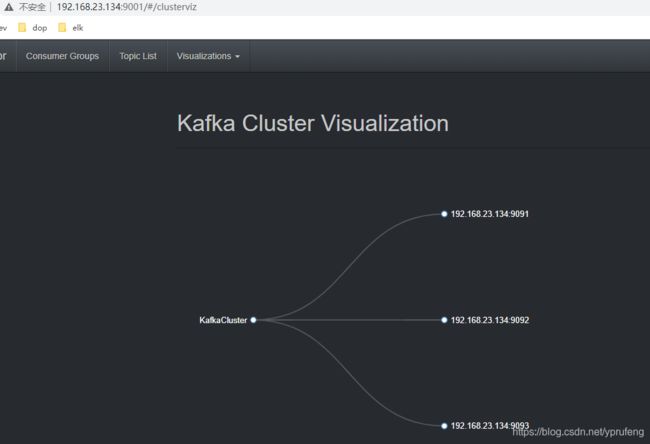

Verify Kafka

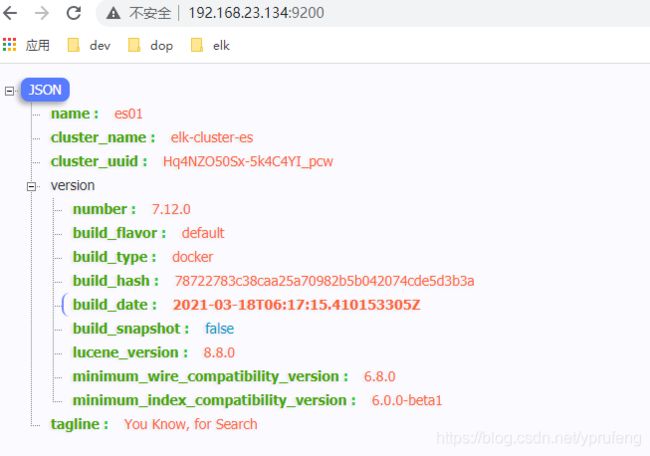

Verify the Elasticsearch cluster

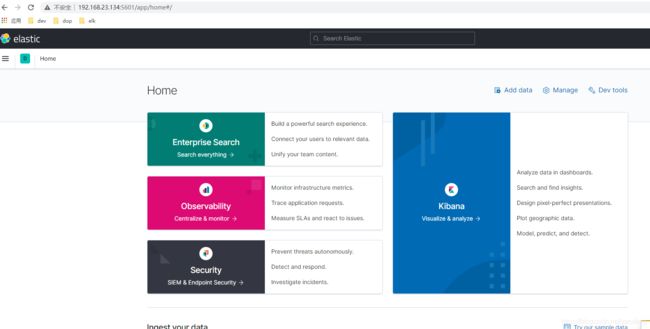
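The same cluster check can be scripted from the host through the published port 9200. A minimal sketch, assuming curl and awk are available; the function name `es_cluster_ok` is hypothetical, and the `_cat/health` columns `status` and `node.total` are selected with the `h` parameter.

```shell
#!/bin/sh
# Hypothetical check: succeed only when the cluster reports green status
# with all three Elasticsearch nodes joined.
# Usage: es_cluster_ok [BASE_URL]
es_cluster_ok() {
    url="${1:-http://localhost:9200}"

    # Ask for just two columns, e.g. "green 3".
    health=$(curl -s "${url}/_cat/health?h=status,node.total") || return 1
    status=$(echo "$health" | awk '{print $1}')
    nodes=$(echo "$health" | awk '{print $2}')

    echo "status=$status nodes=$nodes"
    [ "$status" = "green" ] && [ "$nodes" = "3" ]
}
```

A non-zero exit status means the cluster is reachable but not yet healthy, or not reachable at all, so the function can be polled in a loop right after startup.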

Verify the Kibana service

Verify the nginx service

(2) Verify that logstash collects logback logs directly

Logstash receives logback logs in real time

(3) Verify the full filebeat log collection flow
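The full flow can be triggered by hand: append a line to a .log file in the host directory that elk-cluster.yml mounts at /elk/logs inside the filebeat container, then look for it in Elasticsearch. The sketch below covers the first half; the function name `write_probe_log` and the file name probe.log are illustrative assumptions.

```shell
#!/bin/sh
# Hypothetical end-to-end probe: append a uniquely marked line to a .log file
# that Filebeat tails, and print the marker so it can be searched for later.
# Usage: write_probe_log [HOST_LOG_DIR]
write_probe_log() {
    dir="${1:-/elk/filebeat/data}"   # host side of the /elk/logs mount
    marker="elk-probe-$(date +%s)"

    echo "$marker" >> "${dir}/probe.log"
    echo "$marker"
}
```

Once filebeat, Kafka, and logstash have passed the line along, `curl -s 'http://localhost:9200/_cat/indices/elk-atp-*?v'` should list the daily elk-atp index, and the marker can be searched for in Kibana.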

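Conclusion

That wraps up the whole ELK cluster setup series. Exciting, wasn't it? A perfect start and a perfect finish. Writing all this took real effort, so I hope it helps you. See you next time.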