Highly Available Kubernetes (k8s) Installation on ARM

Installing a highly available multi-master k8s cluster on ARM

I. Initialization

1. Configure the k8s yum repository

# The Huawei mirror only carries old versions, so use the Aliyun mirror instead

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Refresh the yum metadata cache

yum makecache -y
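The repository above (`kubernetes-el7-aarch64`) and every image used later in this guide assume a 64-bit ARM host. A minimal guard, sketched here with a hypothetical function name, can be run on each node before going further:

```shell
# Succeeds only on 64-bit ARM. An optional argument replaces `uname -m`,
# which makes the function easy to try out on any machine.
is_arm64_host() {
  case "${1:-$(uname -m)}" in
    aarch64|arm64) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a node:
# is_arm64_host || { echo "not an arm64 host: $(uname -m)" >&2; exit 1; }
```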

2. Install the base packages

yum -y install wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate

3. Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

4. Install and disable iptables

# Optional: if you prefer iptables to firewalld, install iptables-services; skip this step if you do not need it

# Install

yum install iptables-services -y

# Disable

service iptables stop

systemctl disable iptables

5. Configure time synchronization

ntpdate cn.pool.ntp.org

crontab -e

# Add the following entry (runs ntpdate at minute 0 of every hour)

0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

# Alternatively, point ntpdate at an NTP server of your own

systemctl restart crond.service
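`crontab -e` is interactive, which is awkward when the same entry has to go onto every node. A non-interactive, idempotent alternative is to pipe the current crontab through a filter and load the result back. The helper below is a hypothetical sketch, not a standard tool:

```shell
# Reads a crontab on stdin and prints it with ENTRY appended,
# unless an identical line is already present.
ensure_cron_entry() {
  local entry="$1" current
  current=$(cat)
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  # -x: whole-line match, -F: treat the '*' characters literally
  if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
    printf '%s\n' "$entry"
  fi
}

# Usage on a node (hourly sync at minute 0):
# crontab -l 2>/dev/null | ensure_cron_entry '0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org' | crontab -
```

Running the usage line twice still leaves a single entry, because the line is only appended when it is not already there.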

6. Disable SELinux

# Edit /etc/selinux/config directly; /etc/sysconfig/selinux is only a symlink to it, and sed -i on the symlink would replace it with a plain copy

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

setenforce 0

reboot

# Check the status after the reboot (it should report disabled)

sestatus

7. Disable swap

swapoff -a

# To disable swap permanently, comment out the swap line in /etc/fstab

sed -i 's/.*swap.*/#&/' /etc/fstab
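The `sed` above comments out every line that merely contains the word "swap", and re-running it adds another `#` each time. A more targeted sketch (function name hypothetical) comments out only active entries whose third field, the filesystem type, is `swap`, so it is safe to run repeatedly:

```shell
# Comments out active fstab entries whose filesystem type (3rd field) is "swap".
# Comment lines, blank lines and all other entries are left untouched.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp=$(mktemp)
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Usage on a node:
# swapoff -a && comment_swap_entries /etc/fstab
```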

8. Set kernel parameters

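# The bridge-nf-call keys only exist once the br_netfilter module is loaded

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf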

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
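The two `bridge-nf-call` keys only exist while the `br_netfilter` module is loaded, so they cannot be applied at boot unless the module is loaded too. One way to do that, an addition to the original steps that assumes a systemd-based OS such as CentOS 7, is a `modules-load.d` drop-in. The sketch below (hypothetical function name) writes both files under a configurable root so it can be tried in a scratch directory first:

```shell
# Writes the module-load and sysctl drop-ins under ROOT (default: /).
write_k8s_kernel_config() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Read by systemd-modules-load at boot
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  # Applied by `sysctl --system`
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage on a node:
# write_k8s_kernel_config / && modprobe br_netfilter && sysctl --system
```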

9. Set the hostnames and /etc/hosts

# Run the matching command on each node

hostnamectl set-hostname master1

hostnamectl set-hostname master2

hostnamectl set-hostname master3

hostnamectl set-hostname node1

# On every node, add the following entries to /etc/hosts

vim /etc/hosts

192.167.0.51 master1

192.167.0.52 master2

192.167.0.53 master3

192.167.0.55 node1
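Editing /etc/hosts by hand on four nodes is error-prone. The sketch below (hypothetical helper) appends each IP/name pair only if that exact pair is not already present, so it can be re-run safely; the names must match the ones set with `hostnamectl`:

```shell
# Usage: ensure_hosts_entries FILE IP NAME [IP NAME ...]
ensure_hosts_entries() {
  local hosts_file="$1" ip name
  shift
  while [ "$#" -ge 2 ]; do
    ip=$1 name=$2
    shift 2
    # awk exits 0 only if some line has exactly this IP as field 1 and NAME as field 2
    if ! awk -v ip="$ip" -v n="$name" '$1 == ip && $2 == n { found = 1 } END { exit !found }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage on every node:
# ensure_hosts_entries /etc/hosts 192.167.0.51 master1 192.167.0.52 master2 \
#   192.167.0.53 master3 192.167.0.55 node1
```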

10. Configure passwordless SSH login (on master1)

ssh-keygen -t rsa

# Press Enter at every prompt to accept the defaults

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@master3

II. Install the highly available k8s cluster

1. Install Docker (all nodes)

1.1 Download the package

Official download location: https://download.docker.com/linux/static/stable/aarch64/

wget https://download.docker.com/linux/static/stable/aarch64/docker-19.03.8.tgz

1.2 Unpack the Docker static binaries

# Confirm the OS release

cat /etc/redhat-release

# Extract the archive

tar -xvf docker-19.03.8.tgz

# Copy everything in the extracted docker/ directory to /usr/bin

cp -p docker/* /usr/bin

1.3 Prepare the environment

# Disable SELinux and the firewall (already done in steps 3 and 6 of Part I; repeat only if those were skipped)

setenforce 0

systemctl stop firewalld

systemctl disable firewalld

# Edit /etc/selinux/config directly; /etc/sysconfig/selinux is only a symlink to it

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

1.4 Create and start the docker.service unit

# Run the following block in one go to create the docker.service unit file
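The `cat` command below needs the unit file contents as its heredoc body. As a hedged sketch, assuming the upstream Docker systemd unit as a template and the binaries copied to /usr/bin in step 1.2, a minimal unit could be written by a function like this one (the function name and the directory argument are hypothetical; dockerd launches the bundled containerd on its own):

```shell
# Writes a minimal systemd unit for the static dockerd binary in /usr/bin.
write_docker_unit() {
  local dir="${1:-/usr/lib/systemd/system}"
  mkdir -p "$dir"
  # Quoted 'EOF' keeps $MAINPID literal for systemd to expand
  cat > "$dir/docker.service" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
}

# Usage on a node:
# write_docker_unit && systemctl daemon-reload && systemctl enable --now docker
```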

cat > /usr/lib/systemd/system/docker.service <<EOF

1.5 Configure a Docker registry mirror

mkdir -p /etc/docker

vim /etc/docker/daemon.json

# Add the following content; use the mirror address assigned to your own Aliyun account

{

"registry-mirrors": ["https://qc17bin4.mirror.aliyuncs.com"]

}
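kubeadm's preflight checks warn when Docker uses the `cgroupfs` cgroup driver and recommend `systemd`, which the file above does not set. A hedged sketch (hypothetical function name; the mirror URL is passed in as an argument) that writes both settings; apply it before running `kubeadm init` or `join`, so that the kubelet configured by kubeadm uses the same driver as Docker:

```shell
# Writes daemon.json with a registry mirror and the systemd cgroup driver.
write_docker_daemon_json() {
  local mirror="$1" dir="${2:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Usage on a node (replace the URL with your own mirror), then restart Docker:
# write_docker_daemon_json https://<your-id>.mirror.aliyuncs.com
```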

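1.6 Restart Docker to apply the configuration

systemctl daemon-reload && systemctl enable docker && systemctl restart docker

# Check the result (Registry Mirrors should list your mirror)

docker info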

1.7 Configure kernel parameters for iptables

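# Let bridged traffic pass through iptables and enable IP forwarding, then make the settings persistent

modprobe br_netfilter

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables

# Write a drop-in file instead of overwriting /etc/sysctl.conf

cat <<EOF > /etc/sysctl.d/k8s.conf
vm.swappiness = 0
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

sysctl --system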
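To confirm the values actually took effect (for example after a reboot), they can be read back from `/proc/sys`; a missing bridge key usually means `br_netfilter` is not loaded. A sketch with a hypothetical function name; the root argument exists only so it can be tried against a scratch directory:

```shell
# Reports each kernel setting Kubernetes needs; returns non-zero if any is absent or not 1.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" key path rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # sysctl names map to /proc/sys paths by replacing dots with slashes
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ -r "$path" ] && [ "$(cat "$path")" = 1 ]; then
      echo "ok $key"
    else
      echo "FAIL $key"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a node:
# check_k8s_sysctls || echo "kernel settings incomplete" >&2
```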

1.8 Enable IPVS

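# Without IPVS, kube-proxy falls back to iptables mode, which is less efficient with many Services, so loading the IPVS kernel modules is recommended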

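cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'
#!/bin/bash
# Kernel modules needed by kube-proxy in IPVS mode
ipvs_modules="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
    fi
done
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs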
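`lsmod | grep ip_vs` only shows that some IPVS module is loaded. To see exactly which required modules are missing, the sketch below (hypothetical function name) checks `lsmod` output against a list based on kube-proxy's IPVS documentation (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack); treat the list as an assumption to adjust for your kernel:

```shell
# Reads `lsmod`-style output on stdin and prints each required module that is not loaded.
missing_ipvs_modules() {
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')   # skip the header, keep the module-name column
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
  done
}

# Usage on a node (no output means everything is loaded):
# lsmod | missing_ipvs_modules
```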

2. Install Kubernetes 1.18.17

2.1 Install the k8s components

yum install kubeadm-1.18.17 kubelet-1.18.17 kubectl-1.18.17 kubernetes-cni -y

# The version is pinned by the package-name suffix; to install a different version, change the suffix, for example: yum install -y kubelet-1.18.0

2.2 Enable kubelet at boot

systemctl enable kubelet

2.3 Verify the installation

rpm -qa | grep kubelet

rpm -qa | grep kubeadm

rpm -qa | grep kubectl

rpm -qa | grep kubernetes-cni

2.4 Download the images needed for initialization

- Method 1

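# On a server that can reach Google's registry, pull the required arm64 images, save them as tar archives (docker save), and copy the archives to this node

# Load each archive with docker load -i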

docker load -i kube-apiserver-v1.18.17.tar

docker load -i kube-scheduler-v1.18.17.tar

docker load -i kube-controller-manager-v1.18.17.tar

docker load -i pause-3.2.tar

docker load -i coredns-1.6.7.tar

docker load -i etcd-3.4.3-0.tar

docker load -i kube-proxy-v1.18.17.tar

# List the k8s Docker images

docker images | grep k8s

- Method 2

1. List the required images

kubeadm config images list --kubernetes-version v1.18.17

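# The required image versions can change, so use this list to decide which Docker images to pull; the versions in this document are for reference only.

Never copy images from a non-ARM (for example x86_64) machine; they are built for a different architecture and will not run here.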

2. Pull the images from Docker Hub

docker pull docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.18.17

docker pull docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.18.17

docker pull docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.18.17

docker pull docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.18.17

docker pull docker.io/mirrorgooglecontainers/pause-arm64:3.2

docker pull docker.io/mirrorgooglecontainers/etcd-arm64:3.4.3-0

docker pull docker.io/coredns/coredns:1.6.7

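If Docker can reach k8s.gcr.io (for example through a registry proxy), pull the images directly under the names kubeadm expects and skip the retagging and cleanup steps (3 and 5), for example:

docker pull k8s.gcr.io/kube-apiserver:v1.18.17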

3. Retag the downloaded images

docker tag docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.18.17 k8s.gcr.io/kube-apiserver:v1.18.17

docker tag docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.18.17 k8s.gcr.io/kube-controller-manager:v1.18.17

docker tag docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.18.17 k8s.gcr.io/kube-scheduler:v1.18.17

docker tag docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.18.17 k8s.gcr.io/kube-proxy:v1.18.17

docker tag docker.io/mirrorgooglecontainers/pause-arm64:3.2 k8s.gcr.io/pause:3.2

docker tag docker.io/mirrorgooglecontainers/etcd-arm64:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag docker.io/coredns/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7

# The repository and tag must match the image names listed by kubeadm exactly.

4. Check that the k8s images match the list from step 1

docker images | grep k8s
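Comparing the `docker images` output with the kubeadm list by eye is easy to get wrong. A small sketch (hypothetical helper) prints every image kubeadm needs that is not present locally, using `docker images --format` to produce the same `name:tag` form that kubeadm prints:

```shell
# Prints every line of REQUIRED_FILE (one name:tag per line) that is absent from PRESENT_FILE.
missing_images() {
  local required="$1" present="$2"
  # -x: whole-line match, -F: fixed strings, -v: keep lines NOT found in the present list
  grep -vxF -f "$present" "$required"
}

# Usage on a node (no output means every required image is present):
# kubeadm config images list --kubernetes-version v1.18.17 > /tmp/required.txt
# docker images --format '{{.Repository}}:{{.Tag}}' > /tmp/present.txt
# missing_images /tmp/required.txt /tmp/present.txt
```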

5. Remove the old tags (the image data stays referenced by the k8s.gcr.io tags)

docker rmi docker.io/mirrorgooglecontainers/kube-apiserver-arm64:v1.18.17

docker rmi docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:v1.18.17

docker rmi docker.io/mirrorgooglecontainers/kube-scheduler-arm64:v1.18.17

docker rmi docker.io/mirrorgooglecontainers/kube-proxy-arm64:v1.18.17

docker rmi docker.io/mirrorgooglecontainers/pause-arm64:3.2

docker rmi docker.io/mirrorgooglecontainers/etcd-arm64:3.4.3-0

docker rmi coredns/coredns:1.6.7
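Steps 2, 3 and 5 can be driven from one source-to-target mapping, so the pull, tag and rmi names cannot drift apart. The sketch below only prints the commands; the source names are copied from step 2 and should be checked against `kubeadm config images list` before use (function names are hypothetical):

```shell
K8S_VERSION=v1.18.17

# One line per image: <source on Docker Hub> <name expected by kubeadm>
image_map() {
  cat <<EOF
docker.io/mirrorgooglecontainers/kube-apiserver-arm64:${K8S_VERSION} k8s.gcr.io/kube-apiserver:${K8S_VERSION}
docker.io/mirrorgooglecontainers/kube-controller-manager-arm64:${K8S_VERSION} k8s.gcr.io/kube-controller-manager:${K8S_VERSION}
docker.io/mirrorgooglecontainers/kube-scheduler-arm64:${K8S_VERSION} k8s.gcr.io/kube-scheduler:${K8S_VERSION}
docker.io/mirrorgooglecontainers/kube-proxy-arm64:${K8S_VERSION} k8s.gcr.io/kube-proxy:${K8S_VERSION}
docker.io/mirrorgooglecontainers/pause-arm64:3.2 k8s.gcr.io/pause:3.2
docker.io/mirrorgooglecontainers/etcd-arm64:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0
docker.io/coredns/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7
EOF
}

# Prints the pull / tag / rmi commands for every mapped image.
print_image_commands() {
  image_map | while read -r src dst; do
    echo "docker pull $src"
    echo "docker tag $src $dst"
    echo "docker rmi $src"
  done
}

# Review the output first, then run it:
# print_image_commands | sh
```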

2.5 Deploy keepalived + LVS (on the masters)

# Makes the control plane highly available by load-balancing the apiserver behind a virtual IP

1. Install keepalived and the LVS tools on each master node

yum install -y socat keepalived ipvsadm conntrack

2. Edit /etc/keepalived/keepalived.conf

On master1, keepalived.conf should look like this after editing:

cp /etc/keepalived/keepalived.conf{,.bak}

vim /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP

nopreempt # non-preemptive mode

interface eth0 # network interface

virtual_router_id 80

priority 100 # priority (100 on master1, 50 on master2, 30 on master3)

advert_int 1

authentication {

auth_type PASS

auth_pass x1ysD!

}

virtual_ipaddress {

192.167.0.199 # virtual IP; adjust to your environment

}

}

virtual_server 192.167.0.199 6443 { # same address as virtual_ipaddress

delay_loop 6

lb_algo rr # round-robin scheduling

lb_kind DR

net_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.167.0.51 6443 { #master1 ip

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.52 6443 { #master2 ip

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.53 6443 { #master3 ip

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# Edit /etc/keepalived/keepalived.conf

On master2, keepalived.conf should look like this after editing:

vim /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface eth0

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass x1ysD!

}

virtual_ipaddress {

192.167.0.199

}

}

virtual_server 192.167.0.199 6443 {

delay_loop 6

lb_algo rr

lb_kind DR

net_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.167.0.51 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.52 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.53 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# Edit /etc/keepalived/keepalived.conf

On master3, keepalived.conf should look like this after editing:

vim /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface eth0

virtual_router_id 80

priority 30 # must differ from the other masters

advert_int 1

authentication {

auth_type PASS

auth_pass x1ysD!

}

virtual_ipaddress {

192.167.0.199

}

}

virtual_server 192.167.0.199 6443 {

delay_loop 6

lb_algo rr

lb_kind DR

net_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.167.0.51 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.52 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.167.0.53 6443 {

weight 1

SSL_GET {

url {

path /healthz

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
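The three files above are meant to differ only in their VRRP priority, so generating them from one template avoids copy-paste drift between masters. A hedged sketch with hypothetical variable and function names; it uses `lb_algo rr`, since `loadbalance` is not one of keepalived's `lb_algo` values, and the master1 netmask for all three:

```shell
# Values taken from this guide; adjust to your network.
VIP=192.167.0.199
IFACE=eth0
MASTERS="192.167.0.51 192.167.0.52 192.167.0.53"

# Prints keepalived.conf for one master; PRIORITY is the only per-node input.
render_keepalived_conf() {
  local priority="$1" ip
  cat <<EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state BACKUP
    nopreempt
    interface ${IFACE}
    virtual_router_id 80
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass x1ysD!
    }
    virtual_ipaddress {
        ${VIP}
    }
}

virtual_server ${VIP} 6443 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    net_mask 255.255.255.0
    persistence_timeout 0
    protocol TCP
EOF
  # One health-checked real server per master apiserver
  for ip in $MASTERS; do
    cat <<EOF
    real_server ${ip} 6443 {
        weight 1
        SSL_GET {
            url {
                path /healthz
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
EOF
  done
  echo "}"
}

# Usage: run the matching line on master1 / master2 / master3
# render_keepalived_conf 100 > /etc/keepalived/keepalived.conf
# render_keepalived_conf 50  > /etc/keepalived/keepalived.conf
# render_keepalived_conf 30  > /etc/keepalived/keepalived.conf
```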

# Notes

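1. keepalived must run in state BACKUP with the non-preemptive option nopreempt. If master1 goes down and later comes back up, the VIP does not automatically move back to it. This keeps the cluster available: right after master1 restarts, its apiserver and other components are not yet running, so if the VIP moved back immediately, the whole cluster would become unreachable.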

2. Start keepalived in the order master1 -> master2 -> master3, running the following command on each in turn:

systemctl enable keepalived && systemctl start keepalived && systemctl status keepalived

3. Once keepalived is running, ip addr on master1 should show the VIP bound to the interface set in keepalived.conf (eth0 here).

2.6 Initialize the cluster

- Initialize

# Run on master1

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.17 # exact version of the images
controlPlaneEndpoint: 192.167.0.199:6443
apiServer:
  certSANs:
  - 192.167.0.51
  - 192.167.0.52
  - 192.167.0.53
  - 192.167.0.55
  - 192.167.0.56
  - 192.167.0.57
  - 192.167.0.199
networking:
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

# controlPlaneEndpoint: the virtual IP (VIP) and apiserver port

# certSANs: the IPs of the masters, the nodes, and the VIP
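Deriving `controlPlaneEndpoint` and the last `certSANs` entry from one variable keeps them from disagreeing (a mismatch such as 192.168.0.199 vs 192.167.0.199 is easy to make by hand). A hedged sketch with hypothetical variable and function names, rendering the same configuration:

```shell
# Inputs from this guide: the VIP and the other IPs clients may use to reach the apiserver.
VIP=192.167.0.199
SAN_IPS="192.167.0.51 192.167.0.52 192.167.0.53 192.167.0.55 192.167.0.56 192.167.0.57"

render_kubeadm_config() {
  local ip
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.17
controlPlaneEndpoint: ${VIP}:6443
apiServer:
  certSANs:
EOF
  # Every node IP plus the VIP goes into the apiserver certificate
  for ip in $SAN_IPS $VIP; do
    echo "  - ${ip}"
  done
  cat <<'EOF'
networking:
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on master1:
# render_kubeadm_config > kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml
```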

# Initialization command

kubeadm init --config kubeadm-config.yaml

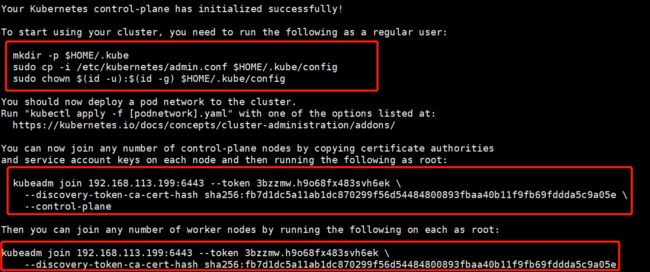

- Initialization output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.113.199:6443 --token 3bzzmw.h9o68fx483svh6ek \

--discovery-token-ca-cert-hash sha256:fb7d1dc5a11ab1dc870299f56d54484800893fbaa40b11f9fb69fddda5c9a05e \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.113.199:6443 --token 3bzzmw.h9o68fx483svh6ek \

--discovery-token-ca-cert-hash sha256:fb7d1dc5a11ab1dc870299f56d54484800893fbaa40b11f9fb69fddda5c9a05e

# The output above varies with the environment; use the join commands printed by your own kubeadm init

- On master1

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the result; output like the screenshot below means everything is fine

kubectl get nodes

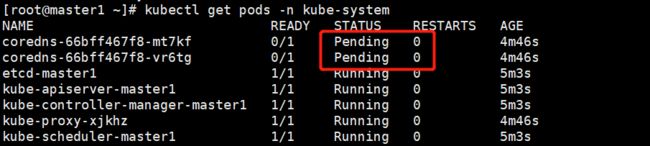

- Check coredns

kubectl get pods -n kube-system

# The STATUS above is NotReady and coredns is Pending because no network plugin is installed yet; install calico or flannel.

2.7 Copy the master1 certificates to master2 and master3

# On master2 and master3, create the target directories

cd /root && mkdir -p /etc/kubernetes/pki/etcd &&mkdir -p ~/.kube/

# Copy the certificates (on master1)

scp /etc/kubernetes/pki/ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master2:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master2:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/sa.pub master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.crt master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/front-proxy-ca.key master3:/etc/kubernetes/pki/

scp /etc/kubernetes/pki/etcd/ca.crt master3:/etc/kubernetes/pki/etcd/

scp /etc/kubernetes/pki/etcd/ca.key master3:/etc/kubernetes/pki/etcd/
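The sixteen `scp` lines above are the same eight files sent to two hosts, so a loop expresses them more compactly. A sketch with a hypothetical function name; `DRY_RUN=1` prints the commands instead of running them:

```shell
# Copies the shared control-plane certificates from this node (master1) to each host given.
copy_control_plane_certs() {
  local host f dest run=""
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for host in "$@"; do
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key; do
      # etcd CA files go into the etcd subdirectory, everything else into pki/
      case "$f" in
        etcd/*) dest=/etc/kubernetes/pki/etcd/ ;;
        *) dest=/etc/kubernetes/pki/ ;;
      esac
      $run scp "/etc/kubernetes/pki/$f" "$host:$dest"
    done
  done
}

# Usage on master1 (the target directories must already exist):
# DRY_RUN=1 copy_control_plane_certs master2 master3   # review
# copy_control_plane_certs master2 master3             # copy
```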

2.8 Join the cluster

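# Run on master2 and master3, using the control-plane join command printed by your own kubeadm init (bootstrap tokens expire after 24 hours by default; kubeadm token create --print-join-command on master1 prints a fresh one)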

kubeadm join 192.167.0.199:6443 --token r24pp2.ioo01ts437a7hydf \

--discovery-token-ca-cert-hash sha256:7eb8e79c35e89e8c8b91f4091e7f41691dbf50f3ed681130ca54d149ca596099 \

--control-plane

# Then run the following on each of them

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

2.9 Install calico

# Download

wget https://docs.projectcalico.org/v3.15/manifests/calico.yaml

# Install

kubectl apply -f calico.yaml

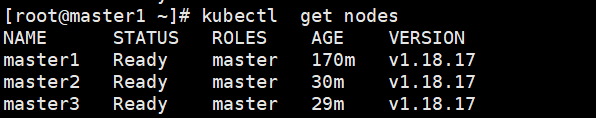

2.10 Check the cluster

kubectl get nodes

# All nodes are now Ready

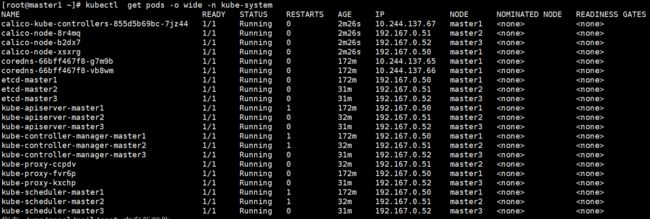

kubectl get pods -o wide -n kube-system

# The pods in the kube-system namespace are running normally as well

2.11 Join the worker nodes

# Run on node1 and node2

kubeadm join 192.167.0.199:6443 --token r24pp2.ioo01ts437a7hydf \

--discovery-token-ca-cert-hash sha256:7eb8e79c35e89e8c8b91f4091e7f41691dbf50f3ed681130ca54d149ca596099

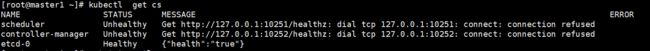

2.12 Verify the components

kubectl get nodes

kubectl get cs

kubectl get pods -n kube-system

kubectl get daemonsets -n kube-system

kubectl get deployments -n kube-system

2.13 Command completion (masters)

# Install bash-completion

yum install -y bash-completion

# Preview the generated completion script

kubectl completion bash

# Save it as a profile script

kubectl completion bash > /etc/profile.d/kubectl.sh

# Load it into the current shell

source /etc/profile.d/kubectl.sh

# Load it in every new shell by adding the following line to /root/.bashrc

vim /root/.bashrc

source /etc/profile.d/kubectl.sh

# Tab completion for kubectl now works

2.14 Troubleshooting

- Problem 1

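# If the scheduler and controller-manager are reported as unhealthy (for example by kubectl get cs), the cause is that kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests set --port=0 by default, which disables the port the health check uses; comment that line out in both files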

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Comment out the line - --port=0 (line 27 in the author's environment)

vim /etc/kubernetes/manifests/kube-scheduler.yaml

Comment out the line - --port=0 (line 19 in the author's environment)
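Line numbers differ between versions, so matching the flag itself is more robust. A sketch with a hypothetical function name; it deliberately writes no backup file, because the kubelet treats other files in /etc/kubernetes/manifests as pod manifests too:

```shell
# Turns "    - --port=0" into "    # - --port=0" in each file, preserving indentation.
# Already-commented lines no longer match, so running it twice is harmless.
comment_insecure_port() {
  local f
  for f in "$@"; do
    sed -i 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$f"
  done
}

# Usage on each master:
# comment_insecure_port /etc/kubernetes/manifests/kube-controller-manager.yaml \
#   /etc/kubernetes/manifests/kube-scheduler.yaml
```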

- Problem 2

# Search Docker Hub for official images only

docker search nginx --filter "is-official=true"

3. Install the Dashboard

3.1 Download the kubernetes-dashboard manifest and apply the YAML resource definitions

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.5/aio/deploy/recommended.yaml

docker pull kubernetesui/metrics-scraper:v1.0.6

docker pull kubernetesui/dashboard:v2.0.5

kubectl apply -f recommended.yaml

3.2 Expose the Dashboard as a NodePort Service for external access

kubectl edit service -n kubernetes-dashboard kubernetes-dashboard

# Change type from ClusterIP to NodePort

type: NodePort

3.3 Log in

- Create a user and bind it to the cluster-admin ClusterRole

vim dashboard-rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

# Create the user and the binding

kubectl apply -f dashboard-rbac.yaml

# List the service accounts

kubectl get serviceaccounts -n kubernetes-dashboard

- Find the login token (stored in the admin-user-token-* secret)

kubectl get secrets -n kubernetes-dashboard -o yaml

- Decode the token (the token field is base64-encoded, not encrypted)

echo 'ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklrZzVjWE5uUW1wQmVITlhZM2MxWlVGbmNFNTBNVnBYYkdOeU1VWjVRbXBIYnpGNllrdG1Wak5VZWtFaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUpyZFdKbGNtNWxkR1Z6TFdSaGMyaGliMkZ5WkNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZqY21WMExtNWhiV1VpT2lKcmRXSmxjbTVsZEdWekxXUmhjMmhpYjJGeVpDMTBiMnRsYmkxNmMyMTNOQ0lzSW10MVltVnlibVYwWlhNdWFXOHZjMlZ5ZG1salpXRmpZMjkxYm5RdmMyVnlkbWxqWlMxaFkyTnZkVzUwTG01aGJXVWlPaUpyZFdKbGNtNWxkR1Z6TFdSaGMyaGliMkZ5WkNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZ5ZG1salpTMWhZMk52ZFc1MExuVnBaQ0k2SW1Fd1pUUm1PREprTFRVNE9XRXRORE5rTUMwNFl6ZzRMV1E0WmpnMU5EUmpNRFl5WVNJc0luTjFZaUk2SW5ONWMzUmxiVHB6WlhKMmFXTmxZV05qYjNWdWREcHJkV0psY201bGRHVnpMV1JoYzJoaWIyRnlaRHByZFdKbGNtNWxkR1Z6TFdSaGMyaGliMkZ5WkNKOS5iS0FvWDZJUElIUkFfNTF6c1B1c043NXgybFE0dTZ3eEdQWlVtaU5KMnR0cXB2cGJHc1N0Rmp0dmhiUHhITDluaVFTMTV6SWg1X2ZCN2dPX2NzX3NIam9Id1plYlpPZHo1OC0zOVAyMU9zRjZnMGtuZ2QzS3hOcnlqeEUwS0hHUGdnbzVyNm1yblNZV29fbUpGUjFKaGdHdE96c0o4end1NVZWLVFhRnViaGF0cWhQOFhVRUc3Rmd5V0M0MzZVTUZLWm9jWjhDZlpVaE1lTWViOElFVHFkMGY4a21IUjFWSEV3MkpCejFtSnVDNEozMHBYYVBMQzhjNEJXM3cxZFRESU1kLV9OQmYtdlQwUVdQenVpd0R0RGV6VHhDb1ZXMl84NVp3WEpMM1RNRTFCYnNzS01jS2NGZWRZem9Pcmp4RU5OUl95dERpZ2tfczlKbDNEQTYtY2c=' |base64 -d
