Creating and Destroying a kmem_cache in the SLUB Allocator

Contents

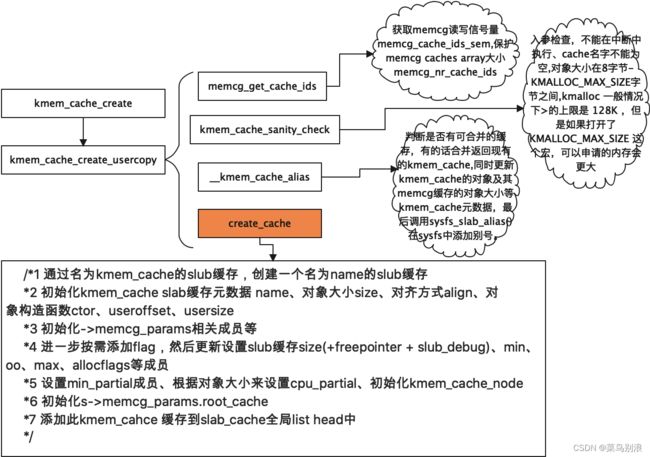

kmem_cache_create

kmem_cache_create_usercopy

__kmem_cache_alias

find_mergeable

create_cache

__kmem_cache_create

kmem_cache_open

kmem_cache_destroy

shutdown_cache

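Kernel version: kernel-4.19

A kmem_cache is the foundation of SLUB allocation: it is the SLUB cache itself. It describes how a pool of objects is managed, in other words the layout of its slabs. Every object in a cache has the same fixed size, and object addresses are aligned to at least sizeof(void *) (8 bytes on a 64-bit kernel). A cache is created with kmem_cache_create and destroyed with kmem_cache_destroy. Caches the kernel creates by default include kmem_cache_node, kmalloc-128, kmalloc-256, net_namespace and pid_namespace. You can also define your own kmem_cache, as many drivers do, and cat /proc/slabinfo shows how each cache is being used. Note that kmem_cache_create only builds the data structure that describes the cache layout; it does not request any slab pages for objects from the buddy system. That memory is requested later, in kmem_cache_alloc.

This article walks through how kmem_cache_create and kmem_cache_destroy are implemented.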
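To make the "create only describes the layout, alloc obtains the memory" point concrete, here is a minimal user-space sketch. It is a toy model, not kernel code: struct toy_cache and the toy_cache_* functions are hypothetical names invented for this illustration, and malloc stands in for the buddy system.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical user-space model; none of these names are kernel APIs. */
struct toy_cache {
    const char *name;
    size_t object_size;    /* fixed size of every object */
    size_t per_slab;       /* objects carved out of one backing block */
    char *slab;            /* backing memory, NULL until the first alloc */
    size_t next;           /* index of the next unused object */
    unsigned slabs_taken;  /* how many backing blocks were requested */
};

/* "Create": record the layout only, request no backing memory. */
static void toy_cache_create(struct toy_cache *c, const char *name,
                             size_t object_size, size_t per_slab)
{
    c->name = name;
    c->object_size = object_size;
    c->per_slab = per_slab;
    c->slab = NULL;
    c->next = 0;
    c->slabs_taken = 0;
}

/* "Alloc": the first call is what actually obtains memory. */
static void *toy_cache_alloc(struct toy_cache *c)
{
    if (!c->slab) {
        c->slab = malloc(c->object_size * c->per_slab);
        if (!c->slab)
            return NULL;
        c->slabs_taken++;
    }
    if (c->next == c->per_slab)
        return NULL;  /* the toy model never grows past one slab */
    return c->slab + c->object_size * c->next++;
}

/* "Destroy": give the backing block back. */
static void toy_cache_destroy(struct toy_cache *c)
{
    free(c->slab);
    c->slab = NULL;
}
```

Right after toy_cache_create the slab pointer is still NULL and slabs_taken is 0; only the first toy_cache_alloc call changes that. The real allocator is far more involved (per-cpu slabs, partial lists, multiple slabs per cache), but the split of responsibilities is the same.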

kmem_cache_create

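Let's start from a concrete example, inode_cache. The code below creates a kmem_cache named inode_cache, the SLUB cache from which inodes are allocated. The arguments show that the object size is sizeof(struct inode), so this cache can hand out inodes efficiently; the remaining arguments are the slab flags and the object constructor init_once.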

    static struct kmem_cache *inode_cachep __read_mostly;

    inode_cachep = kmem_cache_create("inode_cache",
                     sizeof(struct inode),
                     0,
                     (SLAB_RECLAIM_ACCOUNT|SLAB_PANIC|
                     SLAB_MEM_SPREAD|SLAB_ACCOUNT),
                     init_once);

First comes the wrapping and export of the function: kmem_cache_create is just a thin wrapper around kmem_cache_create_usercopy.

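    struct kmem_cache *
    kmem_cache_create(const char *name, unsigned int size, unsigned int align,
            slab_flags_t flags, void (*ctor)(void *))
    {
        return kmem_cache_create_usercopy(name, size, align, flags, 0, 0,
                          ctor);
    }
    EXPORT_SYMBOL(kmem_cache_create);

The core call chain is: kmem_cache_create -> kmem_cache_create_usercopy -> __kmem_cache_alias (which calls find_mergeable) or, when nothing can be merged, create_cache -> __kmem_cache_create -> kmem_cache_open.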

kmem_cache_create_usercopy

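Now let's look at kmem_cache_create_usercopy in detail. Its main jobs are:

1) Check the arguments, mainly the object size and the cache name.

2) Try to merge the request into an existing SLUB cache.

3) Otherwise allocate and initialize the three management structures: kmem_cache, kmem_cache_node and kmem_cache_cpu.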

    /*
     * kmem_cache_create_usercopy - Create a cache.
     * @name: A string which is used in /proc/slabinfo to identify this cache. // name of the kmem_cache
     * @size: The size of objects to be created in this cache. // size of each object in the cache
     * @align: The required alignment for the objects. // object alignment; 0 in the inode_cache example
     * @flags: SLAB flags
     * @useroffset: Usercopy region offset // 0 when called through kmem_cache_create
     * @usersize: Usercopy region size // 0 when called through kmem_cache_create
     * @ctor: A constructor for the objects. // object constructor
     *
     * // returns a pointer to the kmem_cache on success, NULL on failure
     * Returns a ptr to the cache on success, NULL on failure.
     * Cannot be called within a interrupt, but can be interrupted.
     * // when the cache allocates a new page, ctor is used to construct the objects
     * The @ctor is run when new pages are allocated by the cache.
     *
     * The flags are
     *
     * %SLAB_POISON - Poison the slab with a known test pattern (a5a5a5a5)
     * to catch references to uninitialised memory.
     *
     * %SLAB_RED_ZONE - Insert `Red' zones around the allocated memory to check
     * for buffer overruns.
     *
     * %SLAB_HWCACHE_ALIGN - Align the objects in this cache to a hardware
     * cacheline. This can be beneficial if you're counting cycles as closely
     * as davem.
     */

    struct kmem_cache *
    kmem_cache_create_usercopy(const char *name,
              unsigned int size, unsigned int align,
              slab_flags_t flags,
              unsigned int useroffset, unsigned int usersize,
              void (*ctor)(void *))
    {
        struct kmem_cache *s = NULL;
        const char *cache_name;
        int err;

        // take cpu_hotplug_lock so CPU hotplug cannot change cpu_online_map
        get_online_cpus();
        // take mem_hotplug_lock to block memory hotplug
        get_online_mems();
        // take the memcg_cache_ids_sem rwsem, which protects memcg_nr_cache_ids
        // (the size of the memcg caches arrays)
        memcg_get_cache_ids();

        mutex_lock(&slab_mutex); // take the slab_mutex mutex

        // Argument check: not in interrupt context, name not NULL, and size between
        // sizeof(void *) and KMALLOC_MAX_SIZE. KMALLOC_MAX_SIZE is derived from
        // MAX_ORDER and PAGE_SHIFT, so the upper bound depends on the kernel configuration.
        err = kmem_cache_sanity_check(name, size);
        if (err) {
            goto out_unlock;
        }

        /* Refuse requests with allocator specific flags */
        if (flags & ~SLAB_FLAGS_PERMITTED) { // only the permitted flags are accepted
            err = -EINVAL;
            goto out_unlock;
        }

        /*
         * Some allocators will constraint the set of valid flags to a subset
         * of all flags. We expect them to define CACHE_CREATE_MASK in this
         * case, and we'll just provide them with a sanitized version of the
         * passed flags.
         */
        // keep only the flags in CACHE_CREATE_MASK
        // (SLAB_CORE_FLAGS | SLAB_DEBUG_FLAGS | SLAB_CACHE_FLAGS)
        flags &= CACHE_CREATE_MASK;

        /* Fail closed on bad usersize of useroffset values. */
        // validate usersize/useroffset; on a bad combination reset both to 0
        if (WARN_ON(!usersize && useroffset) ||
            WARN_ON(size < usersize || size - usersize < useroffset))
            usersize = useroffset = 0;

        // With no usercopy region, look for a mergeable cache. On a hit the existing
        // kmem_cache is returned after its object-size metadata (and that of its memcg
        // caches) has been updated and sysfs_slab_alias() has added an alias in sysfs.
        if (!usersize)
            s = __kmem_cache_alias(name, size, align, flags, ctor);
        if (s)
            goto out_unlock;

        // duplicate name unless it already lives in read-only data
        cache_name = kstrdup_const(name, GFP_KERNEL);
        if (!cache_name) {
            err = -ENOMEM;
            goto out_unlock;
        }

        /*
         * 1 Allocate a new cache descriptor for name from the SLUB cache called kmem_cache
         * 2 Initialize its metadata: name, object size, align, ctor, useroffset, usersize
         * 3 Initialize the ->memcg_params members
         * 4 Add further flags as needed, then set size (+ free pointer + slub_debug data),
         *   min, oo, max, allocflags and related members
         * 5 Set min_partial, set cpu_partial according to the object size, initialize kmem_cache_node
         * 6 For a memcg child cache, propagate the sysfs attribute settings of its root cache
         * 7 Add this kmem_cache to the global list slab_caches
         */
        s = create_cache(cache_name, size,
                 calculate_alignment(flags, align, size),
                 flags, useroffset, usersize, ctor, NULL, NULL);
        if (IS_ERR(s)) {
            err = PTR_ERR(s);
            kfree_const(cache_name);
        }

        // Below: release the locks and handle errors. On success the kmem_cache s is
        // returned. At this point no slab has been allocated and no object constructed;
        // only the metadata has been initialized.
    out_unlock:
        mutex_unlock(&slab_mutex);

        memcg_put_cache_ids();
        put_online_mems();
        put_online_cpus(); // release the CPU hotplug lock

        if (err) {
            if (flags & SLAB_PANIC)
                panic("kmem_cache_create: Failed to create slab '%s'. Error %d\n",
                    name, err);
            else {
                pr_warn("kmem_cache_create(%s) failed with error %d\n",
                    name, err);
                dump_stack();
            }
            return NULL;
        }
        return s;
    }
    EXPORT_SYMBOL(kmem_cache_create_usercopy);

memcg_get_cache_ids and kmem_cache_sanity_check are simple and are not covered here. Next comes __kmem_cache_alias.
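Looking back at the usersize/useroffset check in kmem_cache_create_usercopy, the arithmetic can be tried out in user space. The sketch below is only a model: sanitize_usercopy is a hypothetical helper name, and WARN_ON is left out so that only the condition itself remains.

```c
#include <assert.h>

/*
 * Model of the "fail closed" check. Returns 1 if the region was kept,
 * 0 if it was rejected and both values were reset to 0.
 * The short-circuit order matters: size - *usersize is only evaluated
 * once size >= *usersize is known, so the unsigned subtraction cannot wrap.
 */
static int sanitize_usercopy(unsigned int size,
                             unsigned int *useroffset,
                             unsigned int *usersize)
{
    int bad = (!*usersize && *useroffset) ||
              size < *usersize ||
              size - *usersize < *useroffset;

    if (bad) {
        *usersize = *useroffset = 0;
        return 0;
    }
    return 1;
}
```

For a 128-byte object, a region of 32 bytes at offset 16 is accepted, while the same 32 bytes at offset 100 would run past the end of the object and is reset to "no usercopy region". An offset without a size is rejected as well.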

__kmem_cache_alias

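Key call chain: __kmem_cache_alias -> find_mergeable, followed by sysfs_slab_alias when a match is found.

What it does:

1) It calls find_mergeable to look for a mergeable SLUB cache. On a hit it updates the object size s->object_size and the word-aligned object size s->inuse, and applies the same update to every memcg cache whose root cache is s. The existing cache is then returned and an alias is added in sysfs, so no new SLUB cache has to be created.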

    struct kmem_cache *
    __kmem_cache_alias(const char *name, unsigned int size, unsigned int align,
               slab_flags_t flags, void (*ctor)(void *))
    {
        struct kmem_cache *s, *c;

        s = find_mergeable(size, align, flags, name, ctor); // look for a mergeable kmem_cache
        if (s) {
            s->refcount++; // take a reference: the alias is one more user of this cache

            /*
             * Adjust the object sizes so that we clear
             * the complete object on kzalloc.
             */
            // new object size = max of the existing and the requested size
            s->object_size = max(s->object_size, size);
            // likewise for the word-aligned object size
            s->inuse = max(s->inuse, ALIGN(size, sizeof(void *)));

            // update the metadata of every memcg cache whose root cache is s
            for_each_memcg_cache(c, s) {
                c->object_size = s->object_size;
                c->inuse = max(c->inuse, ALIGN(size, sizeof(void *)));
            }

            // add the alias in sysfs; on failure undo the reference and give up the merge
            if (sysfs_slab_alias(s, name)) {
                s->refcount--;
                s = NULL;
            }
        }

        return s;
    }

Next, the implementation of find_mergeable, which looks for a mergeable SLUB cache.
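To make the size adjustment in __kmem_cache_alias concrete, here is a small user-space model of the three updates it performs on a merge. The struct and function names are hypothetical, and WORD is an assumption standing in for sizeof(void *) on a 64-bit kernel.

```c
#include <assert.h>

#define WORD 8u  /* assumption: sizeof(void *) on a 64-bit kernel */
#define ALIGN_UP(x, a) (((x) + (a) - 1) & ~((a) - 1))  /* a must be a power of two */

/* Only the fields touched on the alias path; not the real struct kmem_cache. */
struct cache_meta {
    unsigned int object_size;
    unsigned int inuse;
    unsigned int refcount;
};

static unsigned int max_u(unsigned int a, unsigned int b)
{
    return a > b ? a : b;
}

/* Mirror of the merge bookkeeping: one more user, sizes only ever grow. */
static void alias_onto(struct cache_meta *s, unsigned int size)
{
    s->refcount++;
    s->object_size = max_u(s->object_size, size);
    s->inuse = max_u(s->inuse, ALIGN_UP(size, WORD));
}
```

Merging a 60-byte request into a cache whose object_size and inuse are both 56 raises object_size to 60 and inuse to 64. A later, smaller request leaves both values alone and only bumps the reference count. Taking the maximum is what lets a zeroing allocation clear the complete object for every user of the shared cache.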

find_mergeable

    struct kmem_cache *find_mergeable(unsigned int size, unsigned int align,
            slab_flags_t flags, const char *name, void (*ctor)(void *))
    {
        struct kmem_cache *s;

        // merging can be switched off globally (slab_nomerge)
        if (slab_nomerge)
            return NULL;

        // a cache with a constructor is never merged
        if (ctor)
            return NULL;

        // round size up to a word boundary first
        size = ALIGN(size, sizeof(void *));
        // work out the effective alignment, taking hardware cache-line alignment into account
        align = calculate_alignment(flags, align, size);
        // round size up to that alignment
        size = ALIGN(size, align);
        // add slub_debug flags if the kernel command line asks for them
        flags = kmem_cache_flags(size, flags, name, NULL);

        if (flags & SLAB_NEVER_MERGE)
            return NULL;

        // walk slab_root_caches, the list of all root slab caches;
        // root_caches_node is really memcg_params.__root_caches_node
        list_for_each_entry_reverse(s, &slab_root_caches, root_caches_node) {
            if (slab_unmergeable(s))
                continue;

            if (size > s->size)
                continue;

            // skip this cache if its merge-relevant flags differ from the request
            if ((flags & SLAB_MERGE_SAME) != (s->flags & SLAB_MERGE_SAME))
                continue;
            /*
             * Check if alignment is compatible.
             * Courtesy of Adrian Drzewiecki
             */
            // skip unless s->size is a multiple of align
            if ((s->size & ~(align - 1)) != s->size)
                continue;

            // skip if the candidate would waste a word or more per object
            if (s->size - size >= sizeof(void *))
                continue;

            // CONFIG_SLAB only: s->align must be at least align and a multiple of it
            if (IS_ENABLED(CONFIG_SLAB) && align &&
                (align > s->align || s->align % align))
                continue;

            // same merge flags, sizes within one word, compatible alignment:
            // mergeable, so return the existing cache
            return s;
        }
        return NULL;
    }

If no reusable SLUB cache is found, create_cache is called to create a new one.
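The size and alignment part of the find_mergeable test is easy to experiment with outside the kernel. The sketch below models only those three checks; flags, constructors and slab_nomerge are deliberately left out, size_compatible is a hypothetical name, and WORD is an assumed sizeof(void *) of 8. The align argument is taken to be the already-computed effective alignment (a power of two, at least one word).

```c
#include <assert.h>

#define WORD 8u  /* assumption: sizeof(void *) on a 64-bit kernel */
#define ALIGN_UP(x, a) (((x) + (a) - 1) & ~((a) - 1))  /* a must be a power of two */

/*
 * Returns 1 if a request of req_size bytes with effective alignment `align`
 * could share a cache whose per-object size is cand_size, else 0.
 */
static int size_compatible(unsigned int req_size, unsigned int align,
                           unsigned int cand_size)
{
    unsigned int size = ALIGN_UP(req_size, WORD);

    size = ALIGN_UP(size, align);

    if (size > cand_size)
        return 0;  /* candidate objects are too small */
    if ((cand_size & ~(align - 1)) != cand_size)
        return 0;  /* candidate size is not a multiple of align */
    if (cand_size - size >= WORD)
        return 0;  /* would waste a word or more per object */
    return 1;
}
```

A 60-byte request with 8-byte alignment is rounded up to 64, so it fits a cache with 64-byte objects but neither a 56-byte cache (too small) nor a 72-byte cache (a full word wasted per object). A request that needs 64-byte alignment cannot share a cache with 96-byte objects, because 96 is not a multiple of 64.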

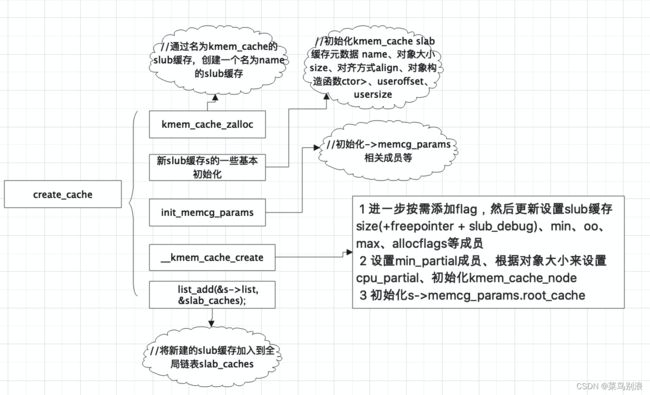

create_cache

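Core call chain: create_cache -> kmem_cache_zalloc, init_memcg_params, __kmem_cache_create (-> kmem_cache_open), then list_add and memcg_link_cache.

The function does the following:

/*1 Allocate a new cache descriptor for name from the SLUB cache called kmem_cache

*2 Initialize its metadata: name, object size, align, object constructor ctor, useroffset, usersize

*3 Initialize the ->memcg_params members

*4 Add further flags as needed, then set size (+ free pointer + slub_debug data), min, oo, max, allocflags and related members

*5 Set min_partial, set cpu_partial according to the object size, initialize kmem_cache_node

*6 For a memcg child cache, propagate the sysfs attribute settings of its root cache

*7 Add this kmem_cache to the global list slab_caches

*/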

    static struct kmem_cache *create_cache(const char *name,
            unsigned int object_size, unsigned int align,
            slab_flags_t flags, unsigned int useroffset,
            unsigned int usersize, void (*ctor)(void *),
            struct mem_cgroup *memcg, struct kmem_cache *root_cache)
    {
        struct kmem_cache *s;
        int err;

        if (WARN_ON(useroffset + usersize > object_size))
            useroffset = usersize = 0;

        err = -ENOMEM;
        // allocate the new cache descriptor from the SLUB cache called kmem_cache
        s = kmem_cache_zalloc(kmem_cache, GFP_KERNEL);
        if (!s)
            goto out;

        // initialize the metadata: name, object size, align, ctor, useroffset, usersize
        s->name = name;
        s->size = s->object_size = object_size;
        s->align = align;
        s->ctor = ctor;
        s->useroffset = useroffset;
        s->usersize = usersize;

        // initialize the ->memcg_params members
        err = init_memcg_params(s, memcg, root_cache);
        if (err)
            goto out_free_cache;

        /*
         * 1 Add further flags as needed, then set size (+ free pointer + slub_debug data),
         *   min, oo, max, allocflags and related members
         * 2 Set min_partial, set cpu_partial according to the object size, initialize kmem_cache_node
         * 3 For a memcg child cache, propagate the sysfs attribute settings of its root cache
         */
        err = __kmem_cache_create(s, flags);
        if (err)
            goto out_free_cache;

        // initial reference; kmem_cache_destroy tears the cache down only when this drops to 0
        s->refcount = 1;
        // add the new SLUB cache to the global list slab_caches
        list_add(&s->list, &slab_caches);
        memcg_link_cache(s);
    out:
        if (err)
            return ERR_PTR(err);
        return s;

    out_free_cache:
        destroy_memcg_params(s);
        kmem_cache_free(kmem_cache, s);
        goto out;
    }

Next, __kmem_cache_create, which initializes the core structure members.
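One detail of create_cache worth a closer look is how it reports failure: it returns ERR_PTR(err), and its caller tests the result with IS_ERR and recovers the code with PTR_ERR. The sketch below is a user-space stand-in for that convention; the lower-case names are hypothetical, and the real helpers live in the kernel's include/linux/err.h.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

#define MAX_ERRNO 4095  /* error codes occupy the last 4095 values of the address space */

/* Encode a negative errno value as a pointer. */
static void *err_ptr(long error)
{
    return (void *)(intptr_t)error;
}

/* Decode the errno value again. */
static long ptr_err(const void *ptr)
{
    return (long)(intptr_t)ptr;
}

/* A pointer is an error if it falls into the top MAX_ERRNO addresses. */
static int is_err(const void *ptr)
{
    return (uintptr_t)ptr >= (uintptr_t)-MAX_ERRNO;
}
```

This is why a single return value can carry either a valid struct kmem_cache pointer or an error such as -ENOMEM. Note that NULL is not an error pointer under this scheme, which is why kmem_cache_create_usercopy converts the failure into a NULL return itself after checking IS_ERR(s).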

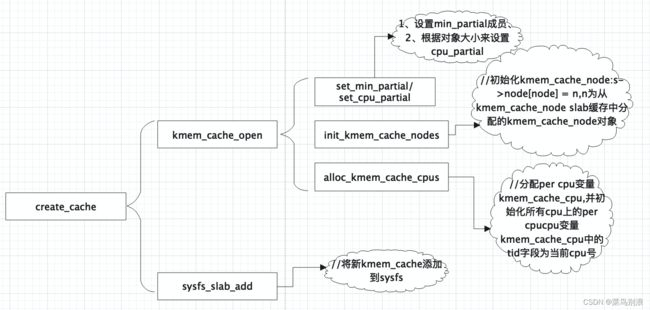

__kmem_cache_create

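Main call chain: __kmem_cache_create -> kmem_cache_open, then memcg_propagate_slab_attrs and sysfs_slab_add.

What it does:

*1 Add further flags as needed, then set size (+ free pointer + slub_debug data), min, oo, max, allocflags and related members

*2 Set min_partial, set cpu_partial according to the object size, initialize kmem_cache_node

*3 For a memcg child cache, propagate the sysfs attribute settings of its root cache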

    int __kmem_cache_create(struct kmem_cache *s, slab_flags_t flags)
    {
        int err;

        /*
         * 1 Add further flags as needed, then set size (+ free pointer + slub_debug data),
         *   min, oo, max, allocflags and related members
         * 2 Set min_partial, set cpu_partial according to the object size, initialize kmem_cache_node
         */
        err = kmem_cache_open(s, flags);
        if (err)
            return err;

        /* Mutex is not taken during early boot */
        if (slab_state <= UP)
            return 0;

        // for a memcg child cache, propagate the root cache's sysfs attribute settings
        memcg_propagate_slab_attrs(s);
        // add the kmem_cache to sysfs
        err = sysfs_slab_add(s);
        if (err)
            __kmem_cache_release(s);

        return err;
    }

kmem_cache_open
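Two of the values kmem_cache_open sets, min_partial and cpu_partial, follow simple size-based rules. The sketch below models them in user space. The clamp limits (5 and 10) and the cpu_partial thresholds are recalled from the 4.19 mm/slub.c rather than quoted in this article, so treat them as assumptions and check the source; the function names and TOY_PAGE_SIZE are hypothetical, and the CONFIG_SLUB_CPU_PARTIAL and debug-cache special cases are ignored.

```c
#include <assert.h>

#define MIN_PARTIAL 5          /* assumed lower clamp for min_partial */
#define MAX_PARTIAL 10         /* assumed upper clamp for min_partial */
#define TOY_PAGE_SIZE 4096u    /* assumption: 4 KiB pages */

/* floor(log2(v)) for v > 0, standing in for the kernel's ilog2(). */
static unsigned int ilog2_u(unsigned int v)
{
    unsigned int r = 0;

    while (v >>= 1)
        r++;
    return r;
}

/* Model of set_min_partial(s, ilog2(s->size) / 2): clamp into [5, 10]. */
static unsigned long min_partial_for(unsigned int size)
{
    unsigned long min = ilog2_u(size) / 2;

    if (min < MIN_PARTIAL)
        min = MIN_PARTIAL;
    else if (min > MAX_PARTIAL)
        min = MAX_PARTIAL;
    return min;
}

/* Model of set_cpu_partial(s): larger objects get a smaller per-cpu budget. */
static unsigned int cpu_partial_for(unsigned int size)
{
    if (size >= TOY_PAGE_SIZE)
        return 2;
    if (size >= 1024)
        return 6;
    if (size >= 256)
        return 13;
    return 30;
}
```

Under these assumptions a 64-byte cache keeps at least 5 partial slabs per node and allows up to 30 free objects on the per-cpu partial list, while a 4096-byte cache gets 6 and 2 respectively. The two values pull in opposite directions on purpose: larger objects keep more partial slabs per node to avoid hammering the page allocator, but fewer objects per CPU to limit memory held idle.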

    static int kmem_cache_open(struct kmem_cache *s, slab_flags_t flags)
    {
        // compute the final flags; slub_debug flags are added here when enabled
        s->flags = kmem_cache_flags(s->size, flags, s->name, s->ctor);
    #ifdef CONFIG_SLAB_FREELIST_HARDENED
        s->random = get_random_long();
    #endif

        // set size (object size adjusted for alignment, plus free pointer and slub_debug data),
        // min, oo, max, allocflags and related members
        if (!calculate_sizes(s, -1))
            goto error;
        if (disable_higher_order_debug) {
            /*
             * Disable debugging flags that store metadata if the min slab
             * order increased.
             */
            if (get_order(s->size) > get_order(s->object_size)) {
                s->flags &= ~DEBUG_METADATA_FLAGS;
                s->offset = 0;
                if (!calculate_sizes(s, -1))
                    goto error;
            }
        }

    #if defined(CONFIG_HAVE_CMPXCHG_DOUBLE) && \
        defined(CONFIG_HAVE_ALIGNED_STRUCT_PAGE)
        if (system_has_cmpxchg_double() && (s->flags & SLAB_NO_CMPXCHG) == 0)
            /* Enable fast mode */
            s->flags |= __CMPXCHG_DOUBLE;
    #endif

        /*
         * The larger the object size is, the more pages we want on the partial
         * list to avoid pounding the page allocator excessively.
         */
        // min_partial is the number of partial slabs kept on each kmem_cache_node partial
        // list: once a node already holds at least min_partial partial slabs, a slab that
        // becomes empty is freed back to the page allocator instead of being kept.
        set_min_partial(s, ilog2(s->size) / 2);

        // Set cpu_partial from the object size. It caps the number of free objects held
        // in the per-cpu partial slabs; when the cap is exceeded, those slabs are moved
        // to the kmem_cache_node partial lists.
        set_cpu_partial(s);

    #ifdef CONFIG_NUMA
        s->remote_node_defrag_ratio = 1000;
    #endif

        /* Initialize the pre-computed randomized freelist if slab is up */
        // if the slab allocator is up, initialize the pre-computed randomized freelist
        if (slab_state >= UP) {
            if (init_cache_random_seq(s))
                goto error;
        }

        // initialize the per-node structures: s->node[node] = n, where n is a
        // kmem_cache_node object allocated from the kmem_cache_node slab cache
        if (!init_kmem_cache_nodes(s))
            goto error;

        // allocate the per-cpu kmem_cache_cpu and set its tid field on every CPU
        // to that CPU's number
        if (alloc_kmem_cache_cpus(s))
            return 0;

        // the per-cpu allocation failed, so free the kmem_cache_node objects allocated above
        free_kmem_cache_nodes(s);
    error:
        if (flags & SLAB_PANIC)
            panic("Cannot create slab %s size=%u realsize=%u order=%u offset=%u flags=%lx\n",
                  s->name, s->size, s->size,
                  oo_order(s->oo), s->offset, (unsigned long)flags);
        return -EINVAL;
    }

init_kmem_cache_nodes

This function initializes the per-node structures: s->node[node] = n, where n is a kmem_cache_node object allocated from the kmem_cache_node slab cache.

    static int init_kmem_cache_nodes(struct kmem_cache *s)
    {
        int node;

        for_each_node_state(node, N_NORMAL_MEMORY) {
            struct kmem_cache_node *n;

            if (slab_state == DOWN) {
                // During early boot, before SLUB is up (slab_state == DOWN), the
                // kmem_cache_node structure comes from early_kmem_cache_node_alloc.
                // The first SLUB cache the system sets up is "kmem_cache_node" itself.
                early_kmem_cache_node_alloc(node);
                continue;
            }
            // allocate a kmem_cache_node object from the kmem_cache_node slab cache
            n = kmem_cache_alloc_node(kmem_cache_node,
                            GFP_KERNEL, node);

            if (!n) {
                free_kmem_cache_nodes(s);
                return 0;
            }

            // initialize n (nr_partial, the partial slab list, the list_lock spinlock)
            // and store it in s->node[node]
            init_kmem_cache_node(n);
            s->node[node] = n;
        }
        return 1;
    }

early_kmem_cache_node_alloc
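early_kmem_cache_node_alloc takes its object straight off page->freelist and then advances the list with get_freepointer. The sketch below models that mechanism in user space: each free object stores, at a fixed offset inside itself, a pointer to the next free object. All names here are hypothetical, and the model ignores freelist hardening and randomization.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Store the "next free object" pointer inside a free object. */
static void set_fp(void *object, size_t offset, void *next)
{
    memcpy((char *)object + offset, &next, sizeof(next));
}

/* Read the "next free object" pointer back out of a free object. */
static void *get_fp(void *object, size_t offset)
{
    void *next;

    memcpy(&next, (char *)object + offset, sizeof(next));
    return next;
}

/* Thread n objects of obj_size bytes into a list and return its head. */
static void *build_freelist(char *slab, size_t obj_size, size_t n, size_t offset)
{
    for (size_t i = 0; i < n; i++) {
        void *next = (i + 1 < n) ? slab + (i + 1) * obj_size : NULL;

        set_fp(slab + i * obj_size, offset, next);
    }
    return n ? slab : NULL;
}

/* Pop the head: take the first object, then advance to its successor. */
static void *pop_object(void **freelist, size_t offset)
{
    void *obj = *freelist;

    if (obj)
        *freelist = get_fp(obj, offset);
    return obj;
}
```

Popping from a freshly built list returns the first object in the buffer and leaves the list head pointing at the second one, which is the same two-step pattern as n = page->freelist followed by page->freelist = get_freepointer(kmem_cache_node, n). No separate bookkeeping memory is needed, because the free objects themselves hold the links.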

    static void early_kmem_cache_node_alloc(int node)
    {
        struct page *page;
        struct kmem_cache_node *n;

        BUG_ON(kmem_cache_node->size < sizeof(struct kmem_cache_node));

        // allocate the first slab of the cache
        page = new_slab(kmem_cache_node, GFP_NOWAIT, node);

        BUG_ON(!page);
        if (page_to_nid(page) != node) {
            pr_err("SLUB: Unable to allocate memory from node %d\n", node);
            pr_err("SLUB: Allocating a useless per node structure in order to be able to continue\n");
        }

        // the first object of the slab becomes the kmem_cache_node structure
        // of the "kmem_cache_node" cache
        n = page->freelist;
        BUG_ON(!n);
        // get_freepointer reads the pointer stored at object + s->offset (a plain
        // *(void **)(object + s->offset) when freelist hardening is off), so this
        // advances the freelist to the next free object
        page->freelist = get_freepointer(kmem_cache_node, n);
        // one object of this slab is now in use
        page->inuse = 1;
        // the slab is not a per-cpu slab (it is not in s->cpu_slab)
        page->frozen = 0;
        // kmem_cache_node is a global pointer to the kmem_cache of the "kmem_cache_node" cache
        kmem_cache_node->node[node] = n;
    #ifdef CONFIG_SLUB_DEBUG
        init_object(kmem_cache_node, n, SLUB_RED_ACTIVE);
        init_tracking(kmem_cache_node, n);
    #endif
        kasan_kmalloc(kmem_cache_node, n, sizeof(struct kmem_cache_node),
                  GFP_KERNEL);
        // initialize the members of the kmem_cache_node
        init_kmem_cache_node(n);
        inc_slabs_node(kmem_cache_node, node, page->objects);

        /*
         * No locks need to be taken here as it has just been
         * initialized and there is no concurrent access.
         */
        // add the slab to the node's partial list
        __add_partial(n, page, DEACTIVATE_TO_HEAD);
    }

alloc_kmem_cache_cpus

This function allocates the per-cpu variable kmem_cache_cpu and initializes its tid field on every CPU to that CPU's number.
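Why start tid at the CPU number? A minimal sketch of the idea, under stated assumptions: on a preemptible kernel the transaction id is advanced in steps of the CPU count rounded up to a power of two, so the low bits keep identifying the CPU while the high bits count operations. The helper names below follow my recollection of mm/slub.c and are not shown in this article; TOY_NR_CPUS is a hypothetical constant.

```c
#include <assert.h>

#define TOY_NR_CPUS 8UL        /* assumption: CPU count, already a power of two */
#define TID_STEP TOY_NR_CPUS   /* each operation advances tid by this much */

/* Initial tid of a CPU is simply its number. */
static unsigned long init_tid(int cpu)
{
    return (unsigned long)cpu;
}

/* Every allocation or free on the fast path moves to the next tid. */
static unsigned long next_tid(unsigned long tid)
{
    return tid + TID_STEP;
}

/* The low part still names the CPU the tid belongs to. */
static unsigned int tid_to_cpu(unsigned long tid)
{
    return (unsigned int)(tid % TID_STEP);
}

/* The high part counts how many operations have happened. */
static unsigned long tid_to_event(unsigned long tid)
{
    return tid / TID_STEP;
}
```

Because a tid is unique per CPU and per operation, a lockless compare-and-exchange on the pair (freelist, tid) can detect both a concurrent operation on the same CPU and a migration to another CPU between reading the per-cpu data and updating it.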

    static inline int alloc_kmem_cache_cpus(struct kmem_cache *s)
    {
        BUILD_BUG_ON(PERCPU_DYNAMIC_EARLY_SIZE <
                KMALLOC_SHIFT_HIGH * sizeof(struct kmem_cache_cpu));

        /*
         * Must align to double word boundary for the double cmpxchg
         * instructions to work; see __pcpu_double_call_return_bool().
         */
        // allocate the per-cpu kmem_cache_cpu (s->cpu_slab), aligned to a double word
        s->cpu_slab = __alloc_percpu(sizeof(struct kmem_cache_cpu),
                         2 * sizeof(void *));

        if (!s->cpu_slab)
            return 0;

        // set the tid field of kmem_cache_cpu on every CPU to that CPU's number
        init_kmem_cache_cpus(s);

        return 1;
    }

At this point the kmem_cache has been created successfully.

kmem_cache_destroy
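Destruction is driven by the reference count that create_cache sets to 1 and __kmem_cache_alias increments on every merge. The following user-space sketch models only that counting; the toy_* names are hypothetical and the real teardown work is reduced to a flag.

```c
#include <assert.h>

/* Only the bookkeeping relevant to destruction; not the real struct kmem_cache. */
struct toy_cache {
    int refcount;
    int torn_down;
};

/* A freshly created cache starts with one reference. */
static void toy_create(struct toy_cache *s)
{
    s->refcount = 1;
    s->torn_down = 0;
}

/* Merging another user into the cache takes one more reference. */
static void toy_alias(struct toy_cache *s)
{
    s->refcount++;
}

/* Returns 1 if this call tore the cache down, 0 if other users remain. */
static int toy_destroy(struct toy_cache *s)
{
    s->refcount--;
    if (s->refcount)
        return 0;
    s->torn_down = 1;
    return 1;
}
```

With one alias in place, the first destroy call only drops a reference and the cache stays usable for the remaining user; the second call brings the count to zero and performs the teardown. This is why destroying a merged cache from one driver does not pull the memory out from under another.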

    void kmem_cache_destroy(struct kmem_cache *s)
    {
        int err;

        if (unlikely(!s))
            return;

        flush_memcg_workqueue(s);

        get_online_cpus();
        get_online_mems();

        mutex_lock(&slab_mutex);

        // drop one reference; if the count is still non-zero the cache has other
        // users, so just return
        s->refcount--;
        if (s->refcount)
            goto out_unlock;

        // the count reached zero: shut down the memcg caches first (err is 0 on success),
        // then destroy the cache itself with shutdown_cache
        err = shutdown_memcg_caches(s);
        if (!err)
            err = shutdown_cache(s);

        if (err) {
            pr_err("kmem_cache_destroy %s: Slab cache still has objects\n",
                   s->name);
            dump_stack();
        }
    out_unlock:
        mutex_unlock(&slab_mutex);

        put_online_mems();
        put_online_cpus();
    }
    EXPORT_SYMBOL(kmem_cache_destroy);

shutdown_cache

    static int shutdown_cache(struct kmem_cache *s)
    {
        /* free asan quarantined objects */
        kasan_cache_shutdown(s);

        // release all resources held by the cache's slabs
        if (__kmem_cache_shutdown(s) != 0)
            return -EBUSY;

        // unlink the memcg-related list entries
        memcg_unlink_cache(s);
        // remove s from the slab_caches list
        list_del(&s->list);

        if (s->flags & SLAB_TYPESAFE_BY_RCU) {
    #ifdef SLAB_SUPPORTS_SYSFS
            sysfs_slab_unlink(s);
    #endif
            list_add_tail(&s->list, &slab_caches_to_rcu_destroy);
            schedule_work(&slab_caches_to_rcu_destroy_work);
        } else {
    #ifdef SLAB_SUPPORTS_SYSFS
            // remove the sysfs entry
            sysfs_slab_unlink(s);
            sysfs_slab_release(s);
    #else
            slab_kmem_cache_release(s);
    #endif
        }

        return 0;
    }