SLAB缓存管理之创建使用

注:本文分析基于linux-4.18.0-193.14.2.el8_2内核版本,即CentOS 8.2

1、关于slab

slab本身就是缓存,伙伴系统以页作为管理单位,对小内存块不友好,浪费严重,从而出现slab,将不规则的各个内存块分门别类,进程需要使用时直接在对应slab管理区获取,不用再从伙伴系统中获取和初始化,提高效率的同时也减少内存碎片。针对slab缓存管理的各个结构关系,可参考《SLAB缓存管理各结构关系》,了解了各个结构体之间的关系,对于slab的管理逻辑也就清楚了大半,再来实际看看slab的创建就事半功倍了。

2、创建slab管理结构

只要是有经常申请释放相同大小内存的场景小,都可以创建自己的slab管理区,slab的创建通过kmem_cache_create_usercopy,我们以ext4_inode_cache这个slab管理区为例,

static int __init init_inodecache(void)

{

//创建名为ext4_inode_cache的slab管理区

ext4_inode_cachep = kmem_cache_create_usercopy("ext4_inode_cache",

sizeof(struct ext4_inode_info), 0,

(SLAB_RECLAIM_ACCOUNT|SLAB_MEM_SPREAD|

SLAB_ACCOUNT),

offsetof(struct ext4_inode_info, i_data),

sizeof_field(struct ext4_inode_info, i_data),

init_once);

if (ext4_inode_cachep == NULL)

return -ENOMEM;

return 0;

}

这里ext4_inode_cache就是我们在/proc/slabinfo中看到的slab名称,

[root@localhost ~]# cat /proc/slabinfo | grep "ext4_inode_cache"

ext4_inode_cache 57789 59265 1176 27 8 : tunables 0 0 0 : slabdata 2195 2195 0

kmem_cache_create_usercopy实际创建的是kmem_cache的管理结构,用来统管ext4_inode_cache这个slab管理区,创建这个结构时也会查看是否系统中有可复用的结构,没有才会真正的去系统内存中分配,

struct kmem_cache *

kmem_cache_create_usercopy(const char *name,

unsigned int size, unsigned int align,

slab_flags_t flags,

unsigned int useroffset, unsigned int usersize,

void (*ctor)(void *))

{

struct kmem_cache *s = NULL;

...

if (!usersize)

//尝试复用slab中已经存在的kmem_cache,复用的基本条件是

//创建size与已存在的kmem_cache的size比较接近,且小于等于后者

s = __kmem_cache_alias(name, size, align, flags, ctor);

if (s)

goto out_unlock;

cache_name = kstrdup_const(name, GFP_KERNEL);

if (!cache_name) {

err = -ENOMEM;

goto out_unlock;

}

//没有可复用的,需要新分配一个

s = create_cache(cache_name, size,

calculate_alignment(flags, align, size),

flags, useroffset, usersize, ctor, NULL, NULL);

...

return s;

}

static struct kmem_cache *create_cache(const char *name,

unsigned int object_size, unsigned int align,

slab_flags_t flags, unsigned int useroffset,

unsigned int usersize, void (*ctor)(void *),

struct mem_cgroup *memcg, struct kmem_cache *root_cache)

{

struct kmem_cache *s;

int err;

if (WARN_ON(useroffset + usersize > object_size))

useroffset = usersize = 0;

err = -ENOMEM;

//通过zalloc分配一个kmem_cache

s = kmem_cache_zalloc(kmem_cache, GFP_KERNEL);

if (!s)

goto out;

s->name = name;

s->size = s->object_size = object_size;

s->align = align;

s->ctor = ctor;

s->useroffset = useroffset;

s->usersize = usersize;

err = init_memcg_params(s, root_cache);

if (err)

goto out_free_cache;

//初始化kmem_cache中其他变量

err = __kmem_cache_create(s, flags);

if (err)

goto out_free_cache;

s->refcount = 1;

//系统上所有的slab管理区都挂到slab_caches链表

list_add(&s->list, &slab_caches);

memcg_link_cache(s, memcg);

out:

if (err)

return ERR_PTR(err);

return s;

out_free_cache:

destroy_memcg_params(s);

kmem_cache_free(kmem_cache, s);

goto out;

}

分配kmem_cache结构后,需要做一些初始化,然后将该结构挂载到slab_caches链表,这样系统中所有的slab管理区就形成了一个链表,这个链表上就是/proc/slabinfo中包含的所有slab。

3、创建slab对象

创建kmem_cache结构后,实际需要使用的slab对象并没有分配,需要等到进程实际需要用对象时才会从伙伴系统中分配内存,并初始化为slab对象,挂载到slab管理结构上。

3.1 kmem_cache_alloc

slab对象的分配通过kmem_cache_alloc实现,我们还是以ext4_inode_cache为例,

static struct inode *ext4_alloc_inode(struct super_block *sb)

{

struct ext4_inode_info *ei;

ei = kmem_cache_alloc(ext4_inode_cachep, GFP_NOFS);

...

}

kmem_cache_alloc的第一个入参就是上面步骤中创建的ext4 slab管理区,

kmem_cache_alloc -> slab_alloc -> __do_cache_alloc

kmem_cache_alloc经过一些列的调用走到__do_cache_alloc,进行真正的slab对象分配。

3.2 __do_cache_alloc

主要逻辑:

- 从当前node上获取空闲缓存对象

- 当前node内存不够,到其他node获取空闲slab对象

static __always_inline void *

__do_cache_alloc(struct kmem_cache *cache, gfp_t flags)

{

void *objp;

if (current->mempolicy || cpuset_do_slab_mem_spread()) {

objp = alternate_node_alloc(cache, flags);

if (objp)

goto out;

}

//从当前node上获取空闲缓存对象

objp = ____cache_alloc(cache, flags);

/*

* We may just have run out of memory on the local node.

* ____cache_alloc_node() knows how to locate memory on other nodes

*/

if (!objp)

//当前node内存耗尽,尝试到另一个node上获取空闲对象

objp = ____cache_alloc_node(cache, flags, numa_mem_id());

out:

return objp;

}

3.3 ____cache_alloc

主要逻辑:

- 从本地CPU高速缓存结构中获取对象

- 本地CPU高速缓无可用对象,要从其他地方填充

static inline void *____cache_alloc(struct kmem_cache *cachep, gfp_t flags)

{

void *objp;

struct array_cache *ac;

check_irq_off();

//从本地CPU高速缓存结构中获取对象

ac = cpu_cache_get(cachep);

if (likely(ac->avail)) {

ac->touched = 1;

//如果从本地CPU高速缓存结构中还有可用对象,直接获取并返回

objp = ac->entry[--ac->avail];

STATS_INC_ALLOCHIT(cachep);

goto out;

}

STATS_INC_ALLOCMISS(cachep);

//本地CPU高速缓无可用对象,要填充

objp = cache_alloc_refill(cachep, flags);

/*

* the 'ac' may be updated by cache_alloc_refill(),

* and kmemleak_erase() requires its correct value.

*/

ac = cpu_cache_get(cachep);

out:

/*

* To avoid a false negative, if an object that is in one of the

* per-CPU caches is leaked, we need to make sure kmemleak doesn't

* treat the array pointers as a reference to the object.

*/

if (objp)

kmemleak_erase(&ac->entry[ac->avail]);

return objp;

}

3.4 cache_alloc_refill

主要逻辑:

- 先尝试从node共享缓存中获取空闲slab对象填充到本地CPU缓存

- node共享缓存不行,则尝试从slabs_partial和slabs_free上获取空闲对象填充

- 以上都不行,就只能找上级伙伴系统分配页框,并初始化为slab对象并填充

static void *cache_alloc_refill(struct kmem_cache *cachep, gfp_t flags)

{

int batchcount;

struct kmem_cache_node *n;

struct array_cache *ac, *shared;

int node;

void *list = NULL;

struct page *page;

check_irq_off();

node = numa_mem_id();

ac = cpu_cache_get(cachep);

batchcount = ac->batchcount;

if (!ac->touched && batchcount > BATCHREFILL_LIMIT) {

//touched的值在获取对象时置1,如果为0,说明最近都没有使用,不需要填充太多

batchcount = BATCHREFILL_LIMIT;

}

//获取当前node的kmem_cache_node结构,后面需要检查三个slab链表的状态

n = get_node(cachep, node);

BUG_ON(ac->avail > 0 || !n);

//获取node共享高速缓存

shared = READ_ONCE(n->shared);

//如果当前node上没有可用对象,且(不存在节点共享缓存或者共享缓存被用完)

//这时需要去上一级内存系统,即伙伴系统里获取空闲页面并填充到slab管理结构中

if (!n->free_objects && (!shared || !shared->avail))

goto direct_grow;

spin_lock(&n->list_lock);

shared = READ_ONCE(n->shared);

//如果有node共享缓存,尝试从这里获取空闲对象填充

if (shared && transfer_objects(ac, shared, batchcount)) {

shared->touched = 1;

goto alloc_done;

}

//node共享缓存没戏,换个路径

while (batchcount > 0) {

//尝试从slabs_partial和slabs_free上获取空闲对象

page = get_first_slab(n, false);

if (!page)

goto must_grow;

check_spinlock_acquired(cachep);

batchcount = alloc_block(cachep, ac, page, batchcount);

//调整slab链表,我们刚获取的这个page如果里面的对象用完了就挂到slabs_full

//如果没用完就挂到slabs_partial链表

fixup_slab_list(cachep, n, page, &list);

}

must_grow:

//node上空闲对象更新,减去放到本地CPU缓存的数量

n->free_objects -= ac->avail;

alloc_done:

spin_unlock(&n->list_lock);

fixup_objfreelist_debug(cachep, &list);

direct_grow:

//各种路径都没有空闲对象了,只能找上一级boss——buddy system要资源了

if (unlikely(!ac->avail)) {

/* Check if we can use obj in pfmemalloc slab */

if (sk_memalloc_socks()) {

void *obj = cache_alloc_pfmemalloc(cachep, n, flags);

if (obj)

return obj;

}

//从伙伴系统分配页框,并初始化为slab管理对象

page = cache_grow_begin(cachep, gfp_exact_node(flags), node);

/*

* cache_grow_begin() can reenable interrupts,

* then ac could change.

*/

//再次确定本地CPU缓存是否可用,防止分配页框是其他进程也做了相同操作

ac = cpu_cache_get(cachep);

if (!ac->avail && page)

//填充batchcount个空闲对象回本地CPU缓存中,剩下的才放回结点slab链表上

alloc_block(cachep, ac, page, batchcount);

//做些善后工作,比如把页框挂载到对应的slab链表,以及更新统计信息

cache_grow_end(cachep, page);

if (!ac->avail)

return NULL;

}

ac->touched = 1;

return ac->entry[--ac->avail];

}

3.5 cache_grow_begin

主要逻辑:

- 从伙伴系统获取页框

- 分配空闲对象数组

- 初始化slab对象

static struct page *cache_grow_begin(struct kmem_cache *cachep,

gfp_t flags, int nodeid)

{

void *freelist;

size_t offset;

gfp_t local_flags;

int page_node;

struct kmem_cache_node *n;

struct page *page;

...

//从上级伙伴管理系统上获取一个空闲slab对象,页面数为cachep->gfporder

page = kmem_getpages(cachep, local_flags, nodeid);

if (!page)

goto failed;

page_node = page_to_nid(page);

n = get_node(cachep, page_node);

/* Get colour for the slab, and cal the next value. */

n->colour_next++;

if (n->colour_next >= cachep->colour)

n->colour_next = 0;

offset = n->colour_next;

if (offset >= cachep->colour)

offset = 0;

offset *= cachep->colour_off;

/* Get slab management. */

//分配空闲对象数组

freelist = alloc_slabmgmt(cachep, page, offset,

local_flags & ~GFP_CONSTRAINT_MASK, page_node);

if (OFF_SLAB(cachep) && !freelist)

goto opps1;

slab_map_pages(cachep, page, freelist);

kasan_poison_slab(page);

//初始化slab上的空闲对象,包括空闲对象数组

cache_init_objs(cachep, page);

if (gfpflags_allow_blocking(local_flags))

local_irq_disable();

return page;

opps1:

kmem_freepages(cachep, page);

failed:

if (gfpflags_allow_blocking(local_flags))

local_irq_disable();

return NULL;

}

3.6 alloc_slabmgmt

主要逻辑:

- 针对空闲对象放置在页框外,需要额外引入一个微型kmem_cache,需要分配地址

- 针对空闲对象放置在页框内,则使用页框最后的几个字节作为存放地址

static void *alloc_slabmgmt(struct kmem_cache *cachep,

struct page *page, int colour_off,

gfp_t local_flags, int nodeid)

{

void *freelist;

//获取页面的虚拟地址

void *addr = page_address(page);

//设置首个对象的地址

page->s_mem = addr + colour_off;

page->active = 0;

if (OBJFREELIST_SLAB(cachep))

freelist = NULL;

else if (OFF_SLAB(cachep)) {

//空闲对象索引数组在slab外,则需要从freelist_cache中分配对象

//相当于嵌入了另一个微型的kmem_cache结构

freelist = kmem_cache_alloc_node(cachep->freelist_cache,

local_flags, nodeid);

if (!freelist)

return NULL;

} else {

//如果在slab内部,则使用slab最后的空间来存放空闲对象索引数组

freelist = addr + (PAGE_SIZE << cachep->gfporder) -

cachep->freelist_size;

}

return freelist;

}

3.7 cache_grow_end

主要逻辑:

- 更新各个统计值

- 将新分配得来的slab按照实际使用情况挂载到slabs_free、slabs_full或者slabs_partial链表

static void cache_grow_end(struct kmem_cache *cachep, struct page *page)

{

struct kmem_cache_node *n;

void *list = NULL;

...

spin_lock(&n->list_lock);

//更新当前node上的slab数量

n->total_slabs++;

//如果当前slab所有对象都是空闲,加入slabs_free链表

if (!page->active) {

list_add_tail(&page->slab_list, &n->slabs_free);

n->free_slabs++;

} else

//否则加入slabs_full或者slabs_partial链表

fixup_slab_list(cachep, n, page, &list);

STATS_INC_GROWN(cachep);

//更新当前node上的可用的对象数量

n->free_objects += cachep->num - page->active;

spin_unlock(&n->list_lock);

fixup_objfreelist_debug(cachep, &list);

}

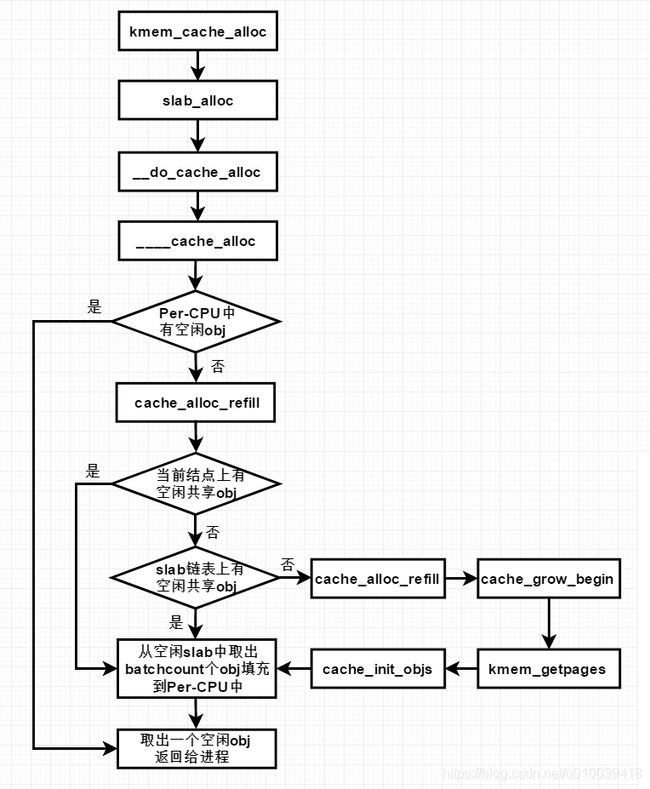

4、slab对象分配流程

整个slab分配流程可以大概用以下流程图描述,总的来说就是有这几级缓存,

- 本地Per-CPU高速缓存

- 当前节点共享slab对象

- slab链表

- 上级伙伴系统

越往后,获取空闲slab对象的代价越大,效率越低,其实和硬件的L1、L2缓存类似。