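Deploying a TiDB 4.0 cluster and monitoring on k8s

This follows on from the previous post, K8S Volume [local disks]. Here I use the Provisioner created in that post to run the TiDB database. My k8s cluster already has helm and persistent storage, so let's get straight to it.

Preparation:

1. Install the helm client (master node; my machine already had an older version and I did not upgrade it, so some of the later commands differ slightly between the old and new versions)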

wget -c https://get.helm.sh/helm-v3.4.2-linux-amd64.tar.gz

tar -xf helm-v3.4.2-linux-amd64.tar.gz

cd linux-amd64/

chmod +x helm

cp -a helm /usr/local/bin/

helm version -c

2. Create the persistent storage. The content of local-volume-provisioner.yaml is as follows:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "local-storage"
provisioner: "kubernetes.io/no-provisioner"
volumeBindingMode: "WaitForFirstConsumer"

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: local-provisioner-config
  namespace: kube-system
data:
  setPVOwnerRef: "true"
  nodeLabelsForPV: |
    - kubernetes.io/hostname
  storageClassMap: |
    local-storage:
      hostDir: /mnt/disks
      mountDir: /mnt/disks

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: local-volume-provisioner
  namespace: kube-system
  labels:
    app: local-volume-provisioner
spec:
  selector:
    matchLabels:
      app: local-volume-provisioner
  template:
    metadata:
      labels:
        app: local-volume-provisioner
    spec:
      serviceAccountName: local-storage-admin
      containers:
        - image: "quay.io/external_storage/local-volume-provisioner:v2.3.4"
          name: provisioner
          securityContext:
            privileged: true
          env:
            - name: MY_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: MY_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: JOB_CONTAINER_IMAGE
              value: "quay.io/external_storage/local-volume-provisioner:v2.3.4"
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - mountPath: /etc/provisioner/config
              name: provisioner-config
              readOnly: true
            # mounting /dev in DinD environment would fail
            # - mountPath: /dev
            #   name: provisioner-dev
            - mountPath: /mnt/disks
              name: local-disks
              mountPropagation: "HostToContainer"
      volumes:
        - name: provisioner-config
          configMap:
            name: local-provisioner-config
        # - name: provisioner-dev
        #   hostPath:
        #     path: /dev
        - name: local-disks
          hostPath:
            path: /mnt/disks

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-storage-admin
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-pv-binding
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    name: local-storage-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:persistent-volume-provisioner
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-storage-provisioner-node-clusterrole
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-node-binding
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    name: local-storage-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: local-storage-provisioner-node-clusterrole
  apiGroup: rbac.authorization.k8s.io

3. Mount the disks. The Provisioner itself does not provide local volumes. Instead, the provisioner running on each node dynamically "discovers" mount points in the discovery directory. When a node's provisioner finds a mount point under /mnt/disks, it creates a PV whose local.path is that mount point and sets the PV's nodeAffinity to that node.

lvp.sh

#!/bin/bash
for i in $(seq 1 10); do
  mkdir -p /mnt/disks-bind/vol${i}
  mkdir -p /mnt/disks/vol${i}
  mount --bind /mnt/disks-bind/vol${i} /mnt/disks/vol${i}
done

Create the PVs:

# Create the mount directories; run this on every node

sh ./lvp.sh
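Bind mounts created with `mount --bind` do not survive a reboot. The following is a minimal sketch, assuming the same ten directories that lvp.sh creates, of how matching /etc/fstab entries could be generated. The helper name `lvp_fstab_lines` is made up for this illustration; check the output before appending it to /etc/fstab.

```shell
#!/bin/bash
# Print one /etc/fstab bind-mount entry per volume directory used by lvp.sh.
# lvp_fstab_lines is a hypothetical helper, not part of any tool.
lvp_fstab_lines() {
    local count="${1:-10}"
    local i
    for i in $(seq 1 "$count"); do
        printf '/mnt/disks-bind/vol%s /mnt/disks/vol%s none bind 0 0\n' "$i" "$i"
    done
}

# Usage (as root, on every node), after reviewing the output:
#   lvp_fstab_lines 10 >> /etc/fstab
```

Each generated line has the form `/mnt/disks-bind/volN /mnt/disks/volN none bind 0 0`, which mirrors the `mount --bind` calls in the script.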

# Pull the image

docker pull quay.io/external_storage/local-volume-provisioner:v2.3.4

#docker save -o local-volume-provisioner-v2.3.4.tar quay.io/external_storage/local-volume-provisioner:v2.3.4

#docker load -i local-volume-provisioner-v2.3.4.tar

kubectl apply -f local-volume-provisioner.yaml
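Once the provisioner is running, every mount point it discovers under /mnt/disks becomes a PV pinned to its node. As a rough sketch of what such an object looks like, the helper below prints an approximate manifest. The PV name, the node name `k8s-node`, and the capacity are placeholders: the real provisioner generates the name itself and reads the capacity from the mounted filesystem.

```shell
#!/bin/bash
# Print an approximation of the PV the provisioner creates for one mount point.
# Name and capacity are placeholders chosen for illustration only.
example_local_pv() {
    local node="$1"
    local path="$2"
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-example
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: local-storage
  local:
    path: ${path}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
}

# Print the sketch for vol1 on a node called k8s-node (hypothetical name).
example_local_pv k8s-node /mnt/disks/vol1
```

To compare this with the real objects, `kubectl get pv -o yaml` shows the PVs that were actually created.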

kubectl get po -n kube-system -l app=local-volume-provisioner && kubectl get pv | grep local-storage

4. Create the TiDB CRD (master node)

wget -c https://raw.githubusercontent.com/pingcap/tidb-operator/v1.1.7/manifests/crd.yaml

kubectl apply -f ./crd.yaml

kubectl get crd

5. Install the operator (master node)

wget -c http://charts.pingcap.org/tidb-operator-v1.1.7.tgz

tar -xf tidb-operator-v1.1.7.tgz

kubectl create namespace tidb

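# Helm 2 syntax (the old client used here); the release name is generated automatically
helm install --namespace tidb tidb-operator -f ./tidb-operator/values.yaml --version v1.1.7

# Helm 3 requires a release name or --generate-name
#helm install --namespace tidb tidb-operator -f ./tidb-operator/values.yaml --version v1.1.7 --generate-name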
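Because the install syntax differs between the old and new helm clients, the choice can be scripted. This is only a sketch: the helper names are invented here, the release name `tidb-operator` in the Helm 3 branch is an assumption, and the parser expects the usual `helm version --short` output, such as `v3.4.2+gd878d4d` or `Client: v2.16.7+g5f2584f`.

```shell
#!/bin/bash
# Extract the major version from the output of `helm version --short`.
helm_major() {
    printf '%s\n' "$1" | sed -n 's/^[^v]*v\([0-9][0-9]*\)\..*$/\1/p' | head -n 1
}

# Print the operator install command that matches the client version.
# The chart is the ./tidb-operator directory unpacked from the tgz above.
operator_install_cmd() {
    case "$(helm_major "$1")" in
        2) echo "helm install ./tidb-operator --namespace tidb -f ./tidb-operator/values.yaml" ;;
        3) echo "helm install tidb-operator ./tidb-operator --namespace tidb -f ./tidb-operator/values.yaml" ;;
        *) echo "unsupported helm version: $1" >&2; return 1 ;;
    esac
}

# Usage:
#   operator_install_cmd "$(helm version --short -c)"
```

The function only prints the command, so it can be reviewed before it is run.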

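kubectl get pods -n tidb

6. Deploy the TiDB cluster (master node)

vim ./tidb-cluster.yaml [This is a test environment with only one k8s-master and one k8s-node, so the replica count is 2; production should use an odd number >= 3]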

apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: tidb
  namespace: tidb
spec:
  version: "v4.0.8"
  timezone: UTC
  configUpdateStrategy: RollingUpdate
  hostNetwork: false
  imagePullPolicy: IfNotPresent
  helper:
    image: busybox:1.26.2
  enableDynamicConfiguration: true
  pd:
    enableDashboardInternalProxy: true
    baseImage: pingcap/pd
    config: {}
    replicas: 2
    requests:
      cpu: "100m"
      storage: 1Gi
    mountClusterClientSecret: false
    storageClassName: "local-storage"
  tidb:
    baseImage: pingcap/tidb
    replicas: 2
    requests:
      cpu: "100m"
    config: {}
    service:
      type: NodePort
      externalTrafficPolicy: Cluster
      mysqlNodePort: 30011
      statusNodePort: 30012
  tikv:
    baseImage: pingcap/tikv
    config: {}
    replicas: 2
    requests:
      cpu: "100m"
      storage: 1Gi
    mountClusterClientSecret: false
    storageClassName: "local-storage"
  tiflash:
    baseImage: pingcap/tiflash
    maxFailoverCount: 3
    replicas: 1
    storageClaims:
      - resources:
          requests:
            storage: 1Gi
        storageClassName: local-storage
  pump:
    baseImage: pingcap/tidb-binlog
    replicas: 1
    storageClassName: local-storage
    requests:
      storage: 1Gi
    schedulerName: default-scheduler
    config:
      addr: 0.0.0.0:8250
      gc: 7
      heartbeat-interval: 2
  ticdc:
    baseImage: pingcap/ticdc
    replicas: 2
    config:
      logLevel: info
  enablePVReclaim: false
  pvReclaimPolicy: Retain
  tlsCluster: {}

kubectl apply -f ./tidb-cluster.yaml -n tidb
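The note in step 6 recommends an odd replica count of at least 3 for production. A small worked calculation shows why, assuming majority-based (Raft-style) consensus, which PD uses through its embedded etcd: a group of n members needs n/2+1 of them alive, so 2 replicas tolerate no failure at all and 4 tolerate no more than 3 do.

```shell
#!/bin/bash
# Majority needed for a group of n members (integer division).
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a majority is still available.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 2 3 4 5; do
    echo "replicas=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
# replicas=2 quorum=2 tolerated_failures=0
# replicas=3 quorum=2 tolerated_failures=1
# replicas=4 quorum=3 tolerated_failures=1
# replicas=5 quorum=3 tolerated_failures=2
```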

# kubectl delete tc tidb -n tidb   # destroy the cluster

kubectl get pod -n tidb

At this point the TiDB installation is basically complete, and you can connect to the DB with a client.
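A quick connectivity check can go through the NodePort declared in tidb-cluster.yaml (mysqlNodePort: 30011). This is a sketch that assumes a mysql command-line client is installed; the helper name and the address 192.168.0.10 are placeholders for illustration.

```shell
#!/bin/bash
# Run a trivial query through the TiDB NodePort (30011 in tidb-cluster.yaml).
# $1 is the IP of any cluster node; extra arguments (for example -p) go to mysql.
tidb_query_version() {
    local node_ip="$1"
    shift
    mysql -h "$node_ip" -P 30011 -u root "$@" -e 'SELECT tidb_version();'
}

# Usage:
#   tidb_query_version 192.168.0.10       # while root still has no password
#   tidb_query_version 192.168.0.10 -p    # once a root password is set; prompts for it
```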

7. Initialize the TiDB cluster and set passwords (master node)

# Create a Secret that sets the root account password

#kubectl create secret generic tidb-secret --from-literal=root=123456 --namespace=tidb

# Create a Secret that sets the root account password and automatically creates other users

kubectl create secret generic tidb-secret --from-literal=root=123456 --from-literal=developer=123456 --namespace=tidb

# cat ./tidb-initializer.yaml

---
apiVersion: pingcap.com/v1alpha1
kind: TidbInitializer
metadata:
  name: tidb
  namespace: tidb
spec:
  image: tnir/mysqlclient
  # imagePullPolicy: IfNotPresent
  cluster:
    namespace: tidb
    name: tidb
  initSql: |-
    create database app;
  # initSqlConfigMap: tidb-initsql
  passwordSecret: tidb-secret
  # permitHost: 172.6.5.8
  # resources:
  #   limits:
  #     cpu: 1000m
  #     memory: 500Mi
  #   requests:
  #     cpu: 100m
  #     memory: 50Mi
  # timezone: "Asia/Shanghai"

# kubectl apply -f ./tidb-initializer.yaml --namespace=tidb

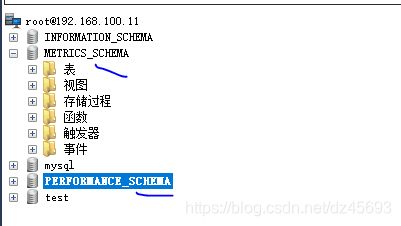

Once this succeeds, clients need a password to connect to TiDB. [For this test I created an empty database named app]

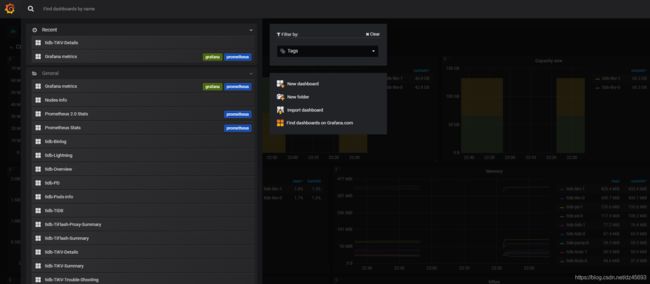

8. Deploy monitoring (master node)

# cat ./tidb-monitor.yaml

apiVersion: pingcap.com/v1alpha1
kind: TidbMonitor
metadata:
  name: tidb
spec:
  clusters:
    - name: tidb
  prometheus:
    baseImage: prom/prometheus
    version: v2.18.1
  grafana:
    baseImage: grafana/grafana
    version: 6.0.1
  initializer:
    baseImage: pingcap/tidb-monitor-initializer
    version: v4.0.8
  reloader:
    baseImage: pingcap/tidb-monitor-reloader
    version: v1.0.1
  imagePullPolicy: IfNotPresent

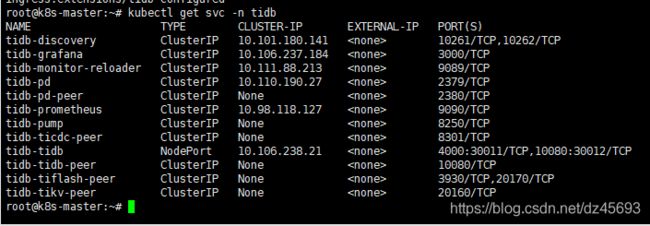

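# kubectl -n tidb apply -f ./tidb-monitor.yaml

By default the Grafana service here is of type ClusterIP, which is hard to reach from outside. I tried switching it to NodePort, but the nodePort I set did not take effect and a random port was assigned instead [it is still reachable that way].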
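For a quick look at Grafana without changing the service type, `kubectl port-forward` is one option. A minimal sketch, assuming the service is named tidb-grafana and listens on port 3000 (the values used in the Ingress of step 9); the helper name is made up here.

```shell
#!/bin/bash
# Forward a local port on this machine to the Grafana service of the TidbMonitor.
# --address 0.0.0.0 makes the forwarded port reachable from other hosts as well.
grafana_port_forward() {
    local local_port="${1:-3000}"
    kubectl -n tidb port-forward --address 0.0.0.0 svc/tidb-grafana "${local_port}:3000"
}

# Usage (runs in the foreground until interrupted):
#   grafana_port_forward 3000
```

This is meant for temporary access only; the forward ends when the command is stopped.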

9. Use an ingress for access. Create tidb-ingress.yaml as follows: [kubectl -n tidb apply -f ./tidb-ingress.yaml, then configure the domain names]

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: tidb

namespace: tidb

spec:

rules:

- host: k8s.grafana.com

http:

paths:

- path: /

backend:

serviceName: tidb-grafana

servicePort: 3000

- host: k8s.tidb.com

http:

paths:

- path: /

backend:

serviceName: tidb-pd

servicePort: 2379

- host: k8s.prometheus.com

http:

paths:

- path: /

backend:

serviceName: tidb-prometheus

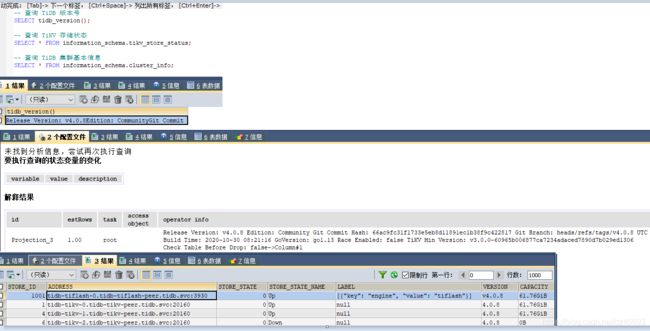

servicePort: 9090访问http://k8s.tidb.com/dashboard 用户名root 密码 空

Visit http://k8s.grafana.com/login (user name and password are both admin)

Visit http://k8s.prometheus.com/
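The three host names in the Ingress are not real DNS records, so "configure the domain names" in practice means pointing them at the ingress controller, for example through /etc/hosts on the client machine. A sketch, where the helper name and the address are placeholders and the correct IP depends on how your ingress controller is exposed:

```shell
#!/bin/bash
# Print an /etc/hosts line mapping the three Ingress hosts to one address.
# $1 is the IP where the ingress controller is reachable (environment-specific).
ingress_hosts_line() {
    printf '%s k8s.grafana.com k8s.tidb.com k8s.prometheus.com\n' "$1"
}

# Usage on the client machine (as root), after checking the output:
#   ingress_hosts_line 192.168.0.10 >> /etc/hosts
```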

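The services (svc) referenced by the ingress above must be adjusted to match your actual setup:

DB query:

Notes:

# Commands for viewing TiDB cluster information

# kubectl get pods -n tidb -o wide

# kubectl get all -n tidb

# kubectl get svc -n tidb

# Command for changing the TiDB cluster configuration

# kubectl edit tc tidb -n tidb

Images used: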

docker pull quay.io/external_storage/local-volume-provisioner:v2.3.4

docker pull pingcap/tidb-operator:v1.1.7

docker pull pingcap/pd:v4.0.8

docker pull pingcap/tikv:v4.0.8

docker pull pingcap/tidb:v4.0.8

docker pull pingcap/tidb-binlog:v4.0.8

docker pull pingcap/ticdc:v4.0.8

docker pull pingcap/tiflash:v4.0.8

docker pull pingcap/tidb-monitor-reloader:v1.0.1

docker pull pingcap/tidb-monitor-initializer:v4.0.8

docker pull grafana/grafana:6.0.1

docker pull prom/prometheus:v2.18.1

docker pull busybox:1.26.2

docker pull pingcap/tidb-backup-manager:v1.1.7

docker pull pingcap/advanced-statefulset:v0.3.3

##### Export images

docker save -o local-volume-provisioner-v2.3.4.tar quay.io/external_storage/local-volume-provisioner:v2.3.4

docker save -o tidb-operator-v1.1.7.tar pingcap/tidb-operator:v1.1.7

docker save -o tidb-backup-manager-v1.1.7.tar pingcap/tidb-backup-manager:v1.1.7

docker save -o advanced-statefulset-v0.3.3.tar pingcap/advanced-statefulset:v0.3.3

docker save -o pd-v4.0.8.tar pingcap/pd:v4.0.8

docker save -o tikv-v4.0.8.tar pingcap/tikv:v4.0.8

docker save -o tidb-v4.0.8.tar pingcap/tidb:v4.0.8

docker save -o tidb-binlog-v4.0.8.tar pingcap/tidb-binlog:v4.0.8

docker save -o ticdc-v4.0.8.tar pingcap/ticdc:v4.0.8

docker save -o tiflash-v4.0.8.tar pingcap/tiflash:v4.0.8

docker save -o tidb-monitor-reloader-v1.0.1.tar pingcap/tidb-monitor-reloader:v1.0.1

docker save -o tidb-monitor-initializer-v4.0.8.tar pingcap/tidb-monitor-initializer:v4.0.8

docker save -o grafana-6.0.1.tar grafana/grafana:6.0.1

docker save -o prometheus-v2.18.1.tar prom/prometheus:v2.18.1

docker save -o busybox-1.26.2.tar busybox:1.26.2
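The export commands above all follow one naming rule: drop everything up to the last "/" of the image reference and replace ":" with "-". A sketch of the same export as a loop, assuming the image list is kept in a text file with one image per line; the file name images.txt and both helper names are made up for this example.

```shell
#!/bin/bash
# Derive the tar file name used above from an image reference,
# e.g. pingcap/pd:v4.0.8 -> pd-v4.0.8.tar
image_tar_name() {
    local image="$1"
    local base="${image##*/}"
    printf '%s.tar\n' "$(printf '%s' "$base" | tr ':' '-')"
}

# Save every image listed in a file (one image per line) to its own tar.
save_images() {
    local list="$1"
    local image
    while IFS= read -r image; do
        [ -n "$image" ] || continue
        docker save -o "$(image_tar_name "$image")" "$image"
    done < "$list"
}

# Usage:
#   save_images images.txt
```

On the target machine the archives can then be loaded in one go with `for f in *.tar; do docker load -i "$f"; done`.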

##### Import images

docker load -i local-volume-provisioner-v2.3.4.tar

docker load -i tidb-operator-v1.1.7.tar

docker load -i advanced-statefulset-v0.3.3.tar

docker load -i busybox-1.26.2.tar

docker load -i grafana-6.0.1.tar

docker load -i kube-scheduler-v1.15.9.tar

docker load -i kube-scheduler-v1.16.9.tar

docker load -i mysqlclient-latest.tar

docker load -i pd-v4.0.8.tar

docker load -i prometheus-v2.18.1.tar

docker load -i ticdc-v4.0.8.tar

docker load -i tidb-backup-manager-v1.1.7.tar

docker load -i tidb-binlog-v4.0.8.tar

docker load -i tidb-monitor-initializer-v4.0.8.tar

docker load -i tidb-monitor-reloader-v1.0.1.tar

docker load -i tidb-v4.0.8.tar

docker load -i tiflash-v4.0.8.tar

docker load -i tikv-v4.0.8.tar

docker load -i tiller-v2.16.7.tar

References:

https://github.com/kubernetes-sigs/sig-storage-local-static-provisioner/blob/master/docs/operations.md

https://docs.pingcap.com/zh/tidb-in-kubernetes/stable/get-started#%E9%83%A8%E7%BD%B2-tidb-operator

https://docs.pingcap.com/zh/tidb-in-kubernetes/stable/configure-storage-class

https://www.cnblogs.com/zhouwanchun/p/14139413.html

https://blog.csdn.net/allensandy/article/details/105270559