【电商数仓】数仓搭建之明细数据(data warehouse detail -- DWD)层(用户行为数据之曝光、错误日志表、数据加载脚本,业务数据之评价、订单事实表)

文章目录

- 一 曝光日志表

-

-

- 1 思路解析

- 2 建表语句

- 3 数据导入

- 4 查看数据

-

- 二 错误日志表

-

- 1 思路解析

- 2 建表语句

- 3 数据导入

- 4 查看数据

- 三 DWD层用户行为数据加载脚本

- 四 DWD层业务数据概述

- 五 评价事实表(事务型事实表)

-

- 1 分区设计

- 2 建表语句

- 3 数据导入

-

- (1)首日导入

- (2)每日导入

- 六 订单明细事实表(事务型事实表)

-

- 1 分区设计

- 2 建模语句

- 3 数据导入

-

- (1)首日装载

- (2)每日装载

一 曝光日志表

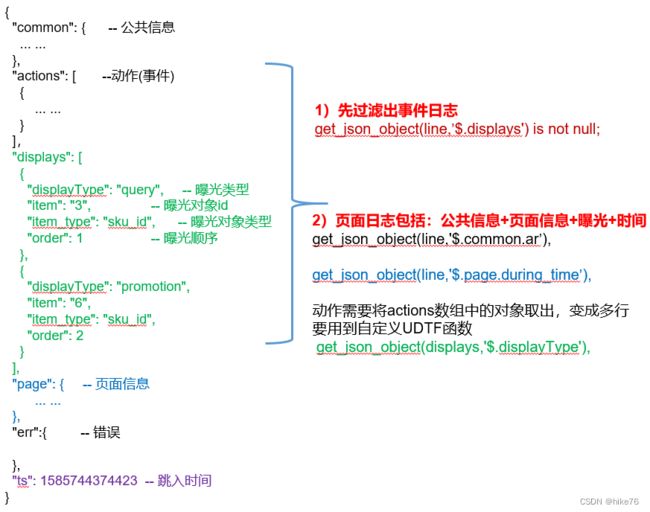

**曝光日志解析思路:**曝光日志表中每行数据对应一个曝光记录,一个曝光记录应当包含公共信息、页面信息以及曝光信息。先将包含display字段的日志过滤出来,然后通过UDTF函数,将display数组“炸开”(类似于explode函数的效果),然后使用get_json_object函数解析每个字段。

1 思路解析

2 建表语句

DROP TABLE IF EXISTS dwd_display_log;

CREATE EXTERNAL TABLE dwd_display_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`during_time` BIGINT COMMENT 'app版本号',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面ID ',

`source_type` STRING COMMENT '来源类型',

`ts` BIGINT COMMENT 'app版本号',

`display_type` STRING COMMENT '曝光类型',

`item` STRING COMMENT '曝光对象id ',

`item_type` STRING COMMENT 'app版本号',

`order` BIGINT COMMENT '曝光顺序',

`pos_id` BIGINT COMMENT '曝光位置'

) COMMENT '曝光日志表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dwd/dwd_display_log'

TBLPROPERTIES ("orc.compress"="snappy");

3 数据导入

insert overwrite table dwd_display_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts'),

get_json_object(display,'$.display_type'),

get_json_object(display,'$.item'),

get_json_object(display,'$.item_type'),

get_json_object(display,'$.order'),

get_json_object(display,'$.pos_id')

from ods_log

lateral view explode_json_array(get_json_object(line,'$.displays')) tmp as display

where dt='2020-06-14'

and get_json_object(line,'$.displays') is not null;

4 查看数据

select * from dwd_display_log where dt='2020-06-14' limit 2;

二 错误日志表

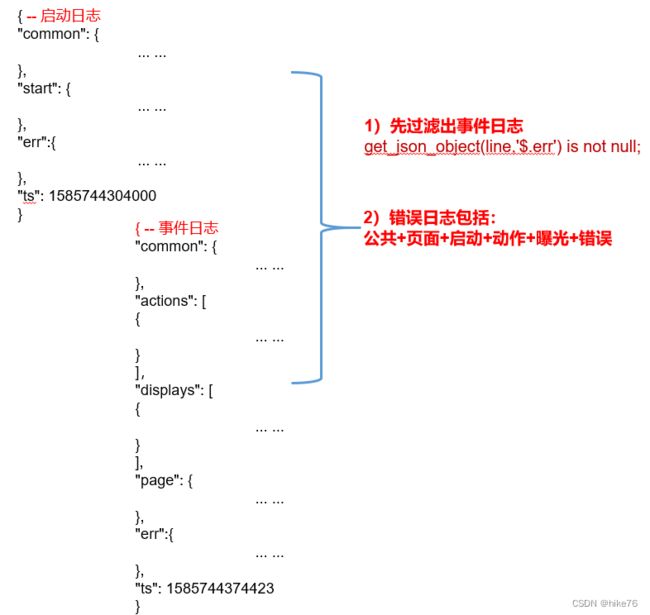

**错误日志解析思路:**错误日志表中每行数据对应一个错误记录,为方便定位错误,一个错误记录应当包含与之对应的公共信息、页面信息、曝光信息、动作信息、启动信息以及错误信息。先将包含err字段的日志过滤出来,然后使用get_json_object函数解析所有字段。

1 思路解析

2 建表语句

DROP TABLE IF EXISTS dwd_error_log;

CREATE EXTERNAL TABLE dwd_error_log(

`area_code` STRING COMMENT '地区编码',

`brand` STRING COMMENT '手机品牌',

`channel` STRING COMMENT '渠道',

`is_new` STRING COMMENT '是否首次启动',

`model` STRING COMMENT '手机型号',

`mid_id` STRING COMMENT '设备id',

`os` STRING COMMENT '操作系统',

`user_id` STRING COMMENT '会员id',

`version_code` STRING COMMENT 'app版本号',

`page_item` STRING COMMENT '目标id ',

`page_item_type` STRING COMMENT '目标类型',

`last_page_id` STRING COMMENT '上页类型',

`page_id` STRING COMMENT '页面ID ',

`source_type` STRING COMMENT '来源类型',

`entry` STRING COMMENT ' icon手机图标 notice 通知 install 安装后启动',

`loading_time` STRING COMMENT '启动加载时间',

`open_ad_id` STRING COMMENT '广告页ID ',

`open_ad_ms` STRING COMMENT '广告总共播放时间',

`open_ad_skip_ms` STRING COMMENT '用户跳过广告时点',

`actions` STRING COMMENT '动作',

`displays` STRING COMMENT '曝光',

`ts` STRING COMMENT '时间',

`error_code` STRING COMMENT '错误码',

`msg` STRING COMMENT '错误信息'

) COMMENT '错误日志表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dwd/dwd_error_log'

TBLPROPERTIES ("orc.compress"="snappy");

说明:此处为对动作数组和曝光数组做处理,如需分析错误与单个动作或曝光的关联,可先使用explode_json_array函数将数组“炸开”,再使用get_json_object函数获取具体字段。

3 数据导入

insert overwrite table dwd_error_log partition(dt='2020-06-14')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.actions'),

get_json_object(line,'$.displays'),

get_json_object(line,'$.ts'),

get_json_object(line,'$.err.error_code'),

get_json_object(line,'$.err.msg')

from ods_log

where dt='2020-06-14'

and get_json_object(line,'$.err') is not null;

4 查看数据

select * from dwd_error_log where dt='2020-06-14' limit 2;

表格重要性排名:action = page > display = start > 其他

三 DWD层用户行为数据加载脚本

# 编写脚本

# 在hadoop101的/home/hzy/bin目录下创建脚本

vim ods_to_dwd_log.sh

# 在脚本中编写如下内容

#!/bin/bash

APP=gmall

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

dwd_start_log="

insert overwrite table ${APP}.dwd_start_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.start') is not null;"

dwd_page_log="

insert overwrite table ${APP}.dwd_page_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.page') is not null;"

dwd_action_log="

insert overwrite table ${APP}.dwd_action_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(action,'$.action_id'),

get_json_object(action,'$.item'),

get_json_object(action,'$.item_type'),

get_json_object(action,'$.ts')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.actions')) tmp as action

where dt='$do_date'

and get_json_object(line,'$.actions') is not null;"

dwd_display_log="

insert overwrite table ${APP}.dwd_display_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.during_time'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.ts'),

get_json_object(display,'$.display_type'),

get_json_object(display,'$.item'),

get_json_object(display,'$.item_type'),

get_json_object(display,'$.order'),

get_json_object(display,'$.pos_id')

from ${APP}.ods_log lateral view ${APP}.explode_json_array(get_json_object(line,'$.displays')) tmp as display

where dt='$do_date'

and get_json_object(line,'$.displays') is not null;"

dwd_error_log="

insert overwrite table ${APP}.dwd_error_log partition(dt='$do_date')

select

get_json_object(line,'$.common.ar'),

get_json_object(line,'$.common.ba'),

get_json_object(line,'$.common.ch'),

get_json_object(line,'$.common.is_new'),

get_json_object(line,'$.common.md'),

get_json_object(line,'$.common.mid'),

get_json_object(line,'$.common.os'),

get_json_object(line,'$.common.uid'),

get_json_object(line,'$.common.vc'),

get_json_object(line,'$.page.item'),

get_json_object(line,'$.page.item_type'),

get_json_object(line,'$.page.last_page_id'),

get_json_object(line,'$.page.page_id'),

get_json_object(line,'$.page.source_type'),

get_json_object(line,'$.start.entry'),

get_json_object(line,'$.start.loading_time'),

get_json_object(line,'$.start.open_ad_id'),

get_json_object(line,'$.start.open_ad_ms'),

get_json_object(line,'$.start.open_ad_skip_ms'),

get_json_object(line,'$.actions'),

get_json_object(line,'$.displays'),

get_json_object(line,'$.ts'),

get_json_object(line,'$.err.error_code'),

get_json_object(line,'$.err.msg')

from ${APP}.ods_log

where dt='$do_date'

and get_json_object(line,'$.err') is not null;"

case $1 in

dwd_start_log )

hive -e "$dwd_start_log"

;;

dwd_page_log )

hive -e "$dwd_page_log"

;;

dwd_action_log )

hive -e "$dwd_action_log"

;;

dwd_display_log )

hive -e "$dwd_display_log"

;;

dwd_error_log )

hive -e "$dwd_error_log"

;;

all )

hive -e "$dwd_start_log$dwd_page_log$dwd_action_log$dwd_display_log$dwd_error_log"

;;

esac

chmod 777 ods_to_dwd_log.sh

ods_to_dwd_log.sh all 2020-06-14

# 查询导入结果

注意:先关闭hiveserver2再执行脚本,防止出现内存不足的错误

四 DWD层业务数据概述

业务数据方面DWD层的搭建主要注意点在于维度建模。

业务数据中事实表分为三类

-

事务型事实表:表格的数据每天只新增,不修改,当天的数据当天处理,彼此独立,互不影响。

导入数据的思路:增量导入,所有这种表格都是按照日期分区,与ods层一致,所以直接将ods层的数据直接拿过来就可以。但是这种表格首日导入与每日导入不一样,第一天导入的时候包括所有的历史数据,在导入历史数据的时候需要按照事务完成的日期去动态分区。

-

周期型快照事实表:每日全量更新,记录的是某一个时刻关注的某一个业务是什么状态,不会在一天的任何时间都去追踪,以天为单位,如每天的零点。不记录变化,只记录状态,且是周期型的记录,周期就是一天。

导入数据的思路:当天ods层的数据之间拿过来就是快照,如果差一些维度,就补一点维度。

-

累积型快照事实表:数据量大,每天导入的数据都是新增及变化的,导入这些数据就需要处理成累积型快照事实表,用于追踪业务事实的变化。处理第二天的数据需要依赖第一天的数据,记录数据的变化。处理方式类似于拉链表。

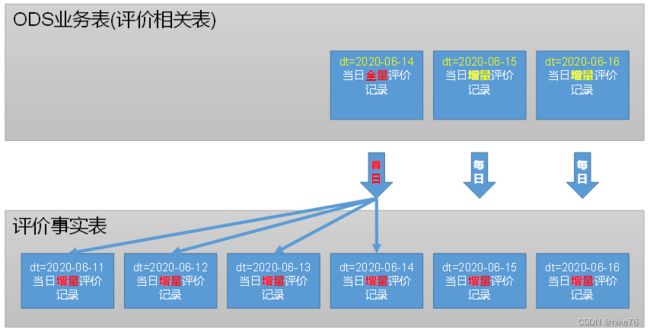

五 评价事实表(事务型事实表)

1 分区设计

2 建表语句

DROP TABLE IF EXISTS dwd_comment_info;

CREATE EXTERNAL TABLE dwd_comment_info(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户ID',

`sku_id` STRING COMMENT '商品sku',

`spu_id` STRING COMMENT '商品spu',

`order_id` STRING COMMENT '订单ID',

`appraise` STRING COMMENT '评价(好评、中评、差评、默认评价)',

`create_time` STRING COMMENT '评价时间'

) COMMENT '评价事实表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dwd/dwd_comment_info/'

TBLPROPERTIES ("orc.compress"="snappy");

3 数据导入

(1)首日导入

insert overwrite table dwd_comment_info partition (dt)

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time,

date_format(create_time,'yyyy-MM-dd')

from ods_comment_info

where dt='2020-06-14';

(2)每日导入

insert overwrite table dwd_comment_info partition(dt='2020-06-15')

select

id,

user_id,

sku_id,

spu_id,

order_id,

appraise,

create_time

from ods_comment_info where dt='2020-06-15';

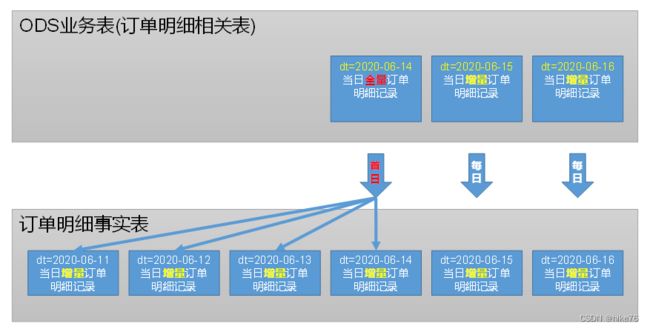

六 订单明细事实表(事务型事实表)

1 分区设计

2 建模语句

DROP TABLE IF EXISTS dwd_order_detail;

CREATE EXTERNAL TABLE dwd_order_detail (

`id` STRING COMMENT '订单编号',

`order_id` STRING COMMENT '订单号',

`user_id` STRING COMMENT '用户id',

`sku_id` STRING COMMENT 'sku商品id',

`province_id` STRING COMMENT '省份ID',

`activity_id` STRING COMMENT '活动ID',

`activity_rule_id` STRING COMMENT '活动规则ID',

`coupon_id` STRING COMMENT '优惠券ID',

`create_time` STRING COMMENT '创建时间',

`source_type` STRING COMMENT '来源类型',

`source_id` STRING COMMENT '来源编号',

`sku_num` BIGINT COMMENT '商品数量',

`original_amount` DECIMAL(16,2) COMMENT '原始价格',

`split_activity_amount` DECIMAL(16,2) COMMENT '活动优惠分摊',

`split_coupon_amount` DECIMAL(16,2) COMMENT '优惠券优惠分摊',

`split_final_amount` DECIMAL(16,2) COMMENT '最终价格分摊'

) COMMENT '订单明细事实表表'

PARTITIONED BY (`dt` STRING)

STORED AS ORC

LOCATION '/warehouse/gmall/dwd/dwd_order_detail/'

TBLPROPERTIES ("orc.compress"="snappy");

3 数据导入

(1)首日装载

insert overwrite table dwd_order_detail partition(dt)

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount,

date_format(create_time,'yyyy-MM-dd')

from

(

select

*

from ods_order_detail

where dt='2020-06-14'

)od

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-14'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ods_order_detail_activity

where dt='2020-06-14'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ods_order_detail_coupon

where dt='2020-06-14'

)odc

on od.id=odc.order_detail_id;

(2)每日装载

insert overwrite table dwd_order_detail partition(dt='2020-06-15')

select

od.id,

od.order_id,

oi.user_id,

od.sku_id,

oi.province_id,

oda.activity_id,

oda.activity_rule_id,

odc.coupon_id,

od.create_time,

od.source_type,

od.source_id,

od.sku_num,

od.order_price*od.sku_num,

od.split_activity_amount,

od.split_coupon_amount,

od.split_final_amount

from

(

select

*

from ods_order_detail

where dt='2020-06-15'

)od

left join

(

select

id,

user_id,

province_id

from ods_order_info

where dt='2020-06-15'

)oi

on od.order_id=oi.id

left join

(

select

order_detail_id,

activity_id,

activity_rule_id

from ods_order_detail_activity

where dt='2020-06-15'

)oda

on od.id=oda.order_detail_id

left join

(

select

order_detail_id,

coupon_id

from ods_order_detail_coupon

where dt='2020-06-15'

)odc

on od.id=odc.order_detail_id;