Installing Kubeflow


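- Install kustomize
- Install Kubeflow
- Download the manifests
- Set the default Kubernetes StorageClass
- Run the installation
- Install nvidia-docker2 on every machine
- Install the NVIDIA GPU plugin
- Install the NVIDIA driver on each machine
- Expose the Kubeflow service
- Add LDAP support
- Namespaces for LDAP users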

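These notes were written up over several rounds of installation, so the driver-installation and Kubernetes-setup parts are somewhat out of order; you will have to work out the sequence yourself.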

Install kustomize

```shell
curl -Lo ./kustomize https://github.com/kubernetes-sigs/kustomize/releases/download/v3.2.0/kustomize_3.2.0_linux_amd64
chmod +x ./kustomize
sudo mv kustomize /usr/local/bin
```
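The download above pins kustomize 3.2.0. As far as I recall, that is the version the Kubeflow 1.5 manifests README asks for, and kustomize 4.x is not supported there. So it is worth checking which kustomize is actually first on `PATH` before running the install loop.

Below is a minimal sketch. It assumes that `kustomize version` prints the version as a dotted x.y.z number somewhere in its output. The exact wording differs between kustomize releases, so the regex is deliberately loose.

```python
import re
import shutil
import subprocess

# The version pinned by the download URL above.
REQUIRED = (3, 2, 0)


def parse_version(text):
    """Return the first x.y.z version number in `text` as a tuple of ints, or None."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    return tuple(int(part) for part in match.groups()) if match else None


def installed_kustomize_version():
    """Run `kustomize version` and parse its output; None if kustomize is not on PATH."""
    exe = shutil.which("kustomize")
    if exe is None:
        return None
    result = subprocess.run([exe, "version"], capture_output=True, text=True)
    return parse_version(result.stdout + result.stderr)


if __name__ == "__main__":
    found = installed_kustomize_version()
    if found is None:
        print("kustomize not found on PATH (or its version could not be parsed)")
    elif found != REQUIRED:
        print("kustomize %s found, expected %s"
              % (".".join(map(str, found)), ".".join(map(str, REQUIRED))))
    else:
        print("kustomize 3.2.0 found")
```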

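Install Kubeflow

Experienced users all install straight from https://github.com/kubeflow/manifests, haha.

Download the manifests

Release page: https://github.com/kubeflow/manifests/releases/tag/v1.5.1 (the shell session further down was run in the extracted `manifests-1.5.0` directory)

Set the default Kubernetes StorageClass

For installing the Ceph CSI plugin, see my earlier blog post:

Set the default StorageClass: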

```yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 365b02aa-db0c-11ec-b243-525400ce981f
  pool: k8s_rbd  # name of the pool created earlier
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard
```
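As far as I can tell, several Kubeflow components create PVCs without naming a StorageClass. Those PVCs can only bind if the cluster has a default StorageClass, which is why this step matters. Kubernetes treats a StorageClass as the default when it carries the `storageclass.kubernetes.io/is-default-class: "true"` annotation, and having more than one default is best avoided.

Below is a minimal sketch that lists the classes currently marked as default. It assumes the output of `kubectl get storageclass -o json` has been saved to a file. The file name in the usage comment is only an example.

```python
import json
import sys

# GA annotation; very old clusters may use storageclass.beta.kubernetes.io/is-default-class instead.
DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_classes(sc_list):
    """Return names of StorageClasses annotated as the cluster default.

    `sc_list` is the parsed output of `kubectl get storageclass -o json`.
    """
    defaults = []
    for sc in sc_list.get("items", []):
        metadata = sc.get("metadata", {})
        annotations = metadata.get("annotations") or {}
        if annotations.get(DEFAULT_ANNOTATION) == "true":
            defaults.append(metadata.get("name"))
    return defaults


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get storageclass -o json > sc.json && python3 default_sc.py sc.json
    with open(sys.argv[1]) as f:
        names = default_storage_classes(json.load(f))
    if len(names) == 1:
        print("default StorageClass:", names[0])
    else:
        print("expected exactly one default StorageClass, found:", names)
```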

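Run the installation

```shell
# after extracting the archive, enter the directory
[root@master1 kubeflow]# cd manifests-1.5.0/
[root@master1 manifests-1.5.0]# ls
apps    contrib       docs     go.mod  hack     OWNERS     prow_config.yaml  tests
common  develop.yaml  example  go.sum  LICENSE  proposals  README.md
[root@master1 manifests-1.5.0]# while ! kustomize build example | kubectl apply -f -; do echo "Retrying to apply resources"; sleep 10; done
```

Almost all of the images are hosted outside China. Installing Kubeflow therefore requires either a proxy that can reach them, or some other way of getting the images onto the local machines. Otherwise, be prepared to wait a very long time.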
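While the retry loop runs, and especially while images are being pulled slowly, it helps to see which pods are still not up. For example, a pod stuck in `ImagePullBackOff` usually points to an image that cannot be downloaded.

Below is a minimal sketch that reports every pod whose phase is not `Running`/`Succeeded`, or that has a container which is not ready. It assumes the JSON from `kubectl get pods -A -o json` has been saved to a file.

```python
import json
import sys


def waiting_reason(status):
    """Return the waiting reason of the first not-ready container, or None if all are ready."""
    for container in status.get("containerStatuses") or []:
        if not container.get("ready", False):
            waiting = container.get("state", {}).get("waiting", {})
            return waiting.get("reason", "NotReady")
    return None


def unhealthy_pods(pod_list):
    """Return (namespace, name, reason) for every pod that is not up yet."""
    problems = []
    for pod in pod_list.get("items", []):
        metadata = pod.get("metadata", {})
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        if phase == "Succeeded":
            continue  # finished Job pods are fine
        reason = waiting_reason(status)
        if phase != "Running" or reason is not None:
            problems.append((metadata.get("namespace"), metadata.get("name"), reason or phase))
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get pods -A -o json > pods.json && python3 pending_pods.py pods.json
    with open(sys.argv[1]) as f:
        for namespace, name, reason in unhealthy_pods(json.load(f)):
            print(f"{namespace}/{name}: {reason}")
```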

Install nvidia-docker2 on every machine

Reference: Installing nvidia-docker2

2.1 Set up the repository

```shell
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.repo | \
  sudo tee /etc/yum.repos.d/nvidia-docker.repo
```

2.2 Update the repository keys

```shell
DIST=$(sed -n 's/releasever=//p' /etc/yum.conf)
DIST=${DIST:-$(. /etc/os-release; echo $VERSION_ID)}
sudo yum makecache
```

2.3 Install nvidia-docker2

```shell
sudo yum install nvidia-docker2
```

2.4 Reload the Docker daemon configuration

```shell
sudo pkill -SIGHUP dockerd
```

2.5 Test the installation

```shell
docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi
```

The first run takes a few minutes to download the image. Output like the following means the installation succeeded:

```text
$ docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi
Wed Mar 25 04:58:46 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.87.00    Driver Version: 418.87.00    CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce RTX 208...  Off  | 00000000:1A:00.0 Off |                  N/A |
| 16%   26C    P8     1W / 250W |      0MiB / 10989MiB |      0%      Default |
```

Install the NVIDIA GPU plugin

Reference: the NVIDIA device plugin page on GitLab

Quoted from:

https://www.csdn.net/tags/MtTaMgxsMjE4ODA4LWJsb2cO0O0O.html
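The NVIDIA k8s-device-plugin README asks Docker-based nodes to use `nvidia` as the *default* Docker runtime. As far as I know, nvidia-docker2 only registers the runtime and does not make it the default. The `docker run --runtime=nvidia` test above selects it explicitly, but containers started by the kubelet do not.

Below is a minimal sketch that merges the runtime settings into `/etc/docker/daemon.json` and keeps any keys already there (registry mirrors, log options, and so on). The runtime path `/usr/bin/nvidia-container-runtime` is the one shown in the plugin README; check it on your nodes. Note that this makes every container on the node use the nvidia runtime. Restart Docker afterwards with `systemctl restart docker`.

```python
import copy
import json
import os
import sys

# Runtime entry as shown in the NVIDIA k8s-device-plugin README; check the path on your nodes.
NVIDIA_RUNTIME = {"path": "/usr/bin/nvidia-container-runtime", "runtimeArgs": []}


def with_nvidia_default(config):
    """Return a copy of a daemon.json dict with nvidia registered and set as default."""
    merged = copy.deepcopy(config)
    runtimes = merged.setdefault("runtimes", {})
    runtimes.setdefault("nvidia", copy.deepcopy(NVIDIA_RUNTIME))  # keep an existing entry
    merged["default-runtime"] = "nvidia"
    return merged


def update_daemon_json(path="/etc/docker/daemon.json"):
    """Rewrite daemon.json in place, preserving any keys that are already there."""
    config = {}
    if os.path.exists(path):
        with open(path) as f:
            text = f.read().strip()
        config = json.loads(text) if text else {}
    with open(path, "w") as f:
        json.dump(with_nvidia_default(config), f, indent=4)
        f.write("\n")


if __name__ == "__main__" and sys.argv[1:] == ["--apply"]:
    # Run as root on every GPU node, then: systemctl restart docker
    update_daemon_json()
```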

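Install the NVIDIA driver on each machine

Following NVIDIA's official installation notes: https://docs.nvidia.com/datacenter/tesla/tesla-installation-notes/index.html#centos7

Installing with yum failed every time I tried, always with the error shown below.

Along the way, yum-config-manager turned out to be missing:

```text
-bash: yum-config-manager: command not found
```

Installing yum-utils provides it:

```shell
yum -y install yum-utils
```

The yum installation went as follows: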

```text
yum install -y nvidia-driver-latest-dkms
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
 * centos-sclo-rh: mirrors.bupt.edu.cn
 * centos-sclo-sclo: mirrors.bupt.edu.cn
 * elrepo: mirrors.tuna.tsinghua.edu.cn
Resolving Dependencies
--> Running transaction check
---> Package nvidia-driver-latest-dkms.x86_64.3.515.65.01-1.el7 will be installed
...
---> Package libmodman.x86_64.0.2.0.1-8.el7 will be installed
---> Package nettle.x86_64.0.2.7.1-9.el7_9 will be installed
---> Package trousers.x86_64.0.0.3.14-2.el7 will be installed
--> Processing conflict between 3:nvidia-driver-latest-dkms-libs-515.65.01-1.el7.x86_64 and nvidia-x11-drv-libs
--> Finished Dependency Resolution
Error: nvidia-driver-latest-dkms-libs conflicts with nvidia-x11-drv-libs-515.65.01-1.el7_9.elrepo.x86_64
 You could try using --skip-broken to work around the problem
 You could try running: rpm -Va --nofiles --nodigest
```

So I switched to the .run file installer.

Following the official docs: https://docs.nvidia.com/datacenter/tesla/tesla-installation-notes/index.html#runfile

NVIDIA drivers are available as .run installer packages for use with Linux distributions from the NVIDIA driver downloads site. Select the .run package for your GPU product.

This page also includes links to all the current and previous driver releases: https://www.nvidia.com/en-us/drivers/unix.

The .run can also be downloaded using wget or curl as shown in the example below:

```shell
$ BASE_URL=https://us.download.nvidia.com/tesla
$ DRIVER_VERSION=450.80.02
$ wget $BASE_URL/$DRIVER_VERSION/NVIDIA-Linux-x86_64-$DRIVER_VERSION.run
```

Once the .run installer has been downloaded, the NVIDIA driver can be installed:

```shell
$ sudo sh NVIDIA-Linux-x86_64-$DRIVER_VERSION.run
```

Follow the prompts on the screen during the installation. For more advanced options on using the .run installer, see the --help option or refer to the README.

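The existing graphics driver has to be disabled first:

The kernel also needs to be upgraded:

Note: kernel-headers and kernel-devel must match the kernel version exactly.

Download the kernel packages: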

```shell
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-headers-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-tools-libs-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-tools-libs-devel-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/python-perf-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/perf-5.4.219-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-tools-5.4.219-1.el7.elrepo.x86_64.rpm

yum remove kernel-headers-3.10.0-1160.76.1.el7.x86_64
yum install kernel-lt-headers-5.4.219-1.el7.elrepo.x86_64.rpm
yum remove kernel-tools-3.10.0-1160.76.1.el7.x86_64
yum remove kernel-tools
yum install kernel-lt-tools-libs-5.4.219-1.el7.elrepo.x86_64.rpm
yum install kernel-lt-tools-5.4.219-1.el7.elrepo.x86_64.rpm
```

```text
(base) [root@node3 ~]# rpm -qa|grep kernel
kernel-ml-tools-libs-devel-5.19.4-1.el7.elrepo.x86_64
kernel-ml-5.19.4-1.el7.elrepo.x86_64
kernel-ml-tools-5.19.4-1.el7.elrepo.x86_64
kernel-ml-headers-5.19.4-1.el7.elrepo.x86_64
kernel-ml-tools-libs-5.19.4-1.el7.elrepo.x86_64
kernel-ml-devel-5.19.4-1.el7.elrepo.x86_64
```
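The driver build needs kernel-headers and kernel-devel packages that match the running kernel exactly, so a quick check before running the installer can save a failed build. It compares the `rpm -qa | grep kernel` output above with `uname -r`.

Below is a minimal sketch of that check. It assumes stock package names (`kernel-headers-…`, `kernel-devel-…`) or elrepo-style ones (`kernel-ml-…` / `kernel-lt-…`), and it only looks at the `-headers` and `-devel` packages.

```python
import platform
import re
import shutil
import subprocess

# Matches kernel-headers-<ver>, kernel-devel-<ver>, and the elrepo kernel-ml-/kernel-lt- variants.
PKG_RE = re.compile(r"^kernel(?:-(?:ml|lt))?-(headers|devel)-(\d.*)$")


def header_devel_problems(rpm_names, running_release):
    """List problems; an empty list means headers and devel both match running_release."""
    found = {"headers": set(), "devel": set()}
    for name in rpm_names:
        match = PKG_RE.match(name.strip())
        if match:
            found[match.group(1)].add(match.group(2))
    problems = []
    for kind, versions in found.items():
        if running_release not in versions:
            problems.append(
                f"no kernel {kind} package for {running_release} "
                f"(installed: {sorted(versions) or 'none'})"
            )
    return problems


if __name__ == "__main__" and shutil.which("rpm"):
    rpm_out = subprocess.run(["rpm", "-qa"], capture_output=True, text=True).stdout
    release = platform.release()  # same string as `uname -r`
    issues = header_devel_problems(rpm_out.splitlines(), release)
    print("\n".join(issues) if issues else f"headers and devel match {release}")
```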

During the installation, gcc also had to be upgraded.

Upgrading gcc on CentOS 7.9:

```shell
sudo yum install -y http://mirror.centos.org/centos/7/extras/x86_64/Packages/centos-release-scl-rh-2-3.el7.centos.noarch.rpm
sudo yum install -y http://mirror.centos.org/centos/7/extras/x86_64/Packages/centos-release-scl-2-3.el7.centos.noarch.rpm
sudo yum install devtoolset-9-gcc-c++
source /opt/rh/devtoolset-9/enable
```
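Here is one plausible reason the gcc upgrade is needed; this is my reading, not something the original steps state. The NVIDIA .run installer compiles a kernel module and checks whether the current compiler matches the one that built the running kernel. The upgraded elrepo kernels are not built with the system gcc 4.8.5.

Below is a minimal sketch that compares the gcc major version recorded in `/proc/version` with the `gcc` currently on `PATH`. Run it in the same shell after `source /opt/rh/devtoolset-9/enable`. It assumes the two `/proc/version` wordings commonly seen on EL7 (`gcc version X.Y.Z` and `gcc (GCC) X.Y.Z`); other distributions may word it differently, in which case it reports `None`.

```python
import os
import re
import shutil
import subprocess

# /proc/version wording differs between toolchains: "gcc version 4.8.5 ..." or "gcc (GCC) 9.3.1 ...".
KERNEL_GCC_RE = re.compile(r"gcc(?: version| \(GCC\)) (\d+)\.\d+")


def kernel_gcc_major(proc_version):
    """Major version of the gcc that built the running kernel, or None if not found."""
    match = KERNEL_GCC_RE.search(proc_version)
    return int(match.group(1)) if match else None


def gcc_major(gcc_version_output):
    """Major version from the first x.y.z in `gcc --version` output, or None."""
    match = re.search(r"(\d+)\.\d+\.\d+", gcc_version_output)
    return int(match.group(1)) if match else None


if __name__ == "__main__" and os.path.exists("/proc/version") and shutil.which("gcc"):
    with open("/proc/version") as f:
        built_with = kernel_gcc_major(f.read())
    current = gcc_major(subprocess.run(["gcc", "--version"], capture_output=True, text=True).stdout)
    if built_with is not None and built_with != current:
        print(f"kernel was built with gcc {built_with}, but gcc on PATH is {current}")
    else:
        print(f"gcc major versions: kernel={built_with}, PATH={current}")
```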

Expose the Kubeflow service

References: https://www.jianshu.com/p/6f7aeecada67

https://www.cnblogs.com/boshen-hzb/p/10679863.html

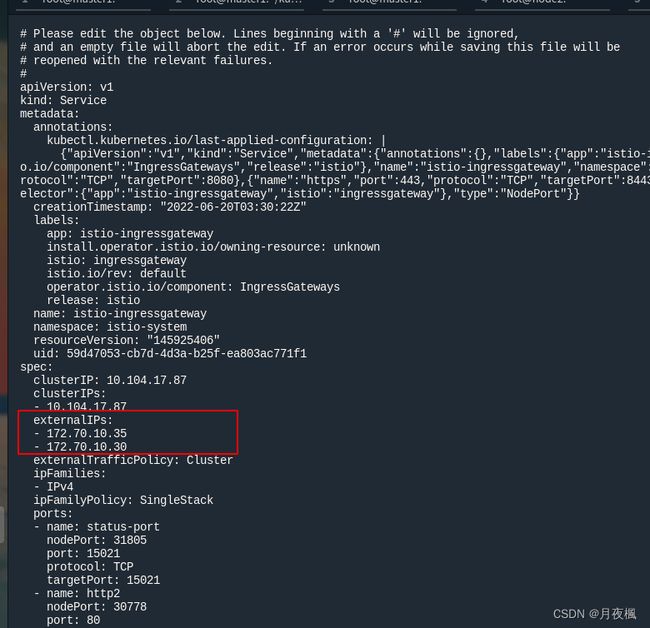

1. Check whether istio-ingressgateway has an external IP:

```text
(base) [root@node3 ~]# kubectl get service istio-ingressgateway -n istio-system
NAME                   TYPE       CLUSTER-IP     EXTERNAL-IP                 PORT(S)                                                                      AGE
istio-ingressgateway   NodePort   10.104.17.87   172.70.10.35,172.70.10.30   15021:31805/TCP,80:30778/TCP,443:31460/TCP,31400:30593/TCP,15443:31113/TCP   71d
```

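If EXTERNAL-IP has a value (an IP address or hostname), your environment has an external load balancer that can be used for the ingress gateway. If the value is `<none>` (or stays `<pending>`), your environment probably does not provide an external load balancer for the ingress gateway.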
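The same check can be scripted against the JSON form of the Service rather than the table. The script collects addresses from two places: `status.loadBalancer.ingress`, which a load balancer fills in, and `spec.externalIPs`, which is set by hand with `kubectl edit`. It also returns the NodePort mapped to port 80, which works on any node IP when there is no external address.

Below is a minimal sketch. It assumes the output of `kubectl get service istio-ingressgateway -n istio-system -o json` has been saved to a file.

```python
import json
import sys


def ingress_endpoints(service):
    """Return (external addresses, nodePort mapped to port 80) for a Service object."""
    spec = service.get("spec", {})
    lb_ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    # Addresses assigned by an external load balancer, if the environment has one ...
    addresses = [entry.get("ip") or entry.get("hostname") for entry in lb_ingress]
    # ... plus addresses added by hand under spec.externalIPs.
    addresses += spec.get("externalIPs") or []
    node_port = next(
        (port.get("nodePort") for port in spec.get("ports", []) if port.get("port") == 80),
        None,
    )
    return addresses, node_port


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get service istio-ingressgateway -n istio-system -o json > gw.json
    #        python3 gateway_ips.py gw.json
    with open(sys.argv[1]) as f:
        addresses, node_port = ingress_endpoints(json.load(f))
    if addresses:
        print("external addresses:", ", ".join(addresses))
    else:
        print(f"no external address; try http://<node-ip>:{node_port}")
```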

External IPs can be added by editing the Service (under `spec.externalIPs`):

```shell
kubectl edit service istio-ingressgateway -n istio-system
```

My Kubernetes cluster has 3 machines, so I added 2 external IPs (leaving out the master node). Either of these 2 IPs can then serve traffic, on the default port 80.
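The same change can be made non-interactively with `kubectl patch` instead of `kubectl edit`. Below is a minimal sketch that validates the addresses and builds the patch body for `spec.externalIPs`. The two IPs in the usage comment are this cluster's worker nodes; replace them with your own. As far as I know, a strategic merge patch replaces the whole `externalIPs` list rather than appending to it, so pass every address you want to keep.

```python
import ipaddress
import json
import subprocess
import sys


def external_ips_patch(ips):
    """Build a patch body that sets spec.externalIPs, rejecting malformed addresses."""
    for ip in ips:
        ipaddress.ip_address(ip)  # raises ValueError for anything that is not an IP
    return json.dumps({"spec": {"externalIPs": list(ips)}})


def apply_external_ips(ips, service="istio-ingressgateway", namespace="istio-system"):
    """Run `kubectl patch` with the body above (strategic merge, the kubectl default)."""
    subprocess.run(
        ["kubectl", "patch", "service", service, "-n", namespace, "-p", external_ips_patch(ips)],
        check=True,
    )


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 set_external_ips.py 172.70.10.35 172.70.10.30
    apply_external_ips(sys.argv[1:])
```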


Open http://172.70.10.35 or http://172.70.10.30 in a browser.

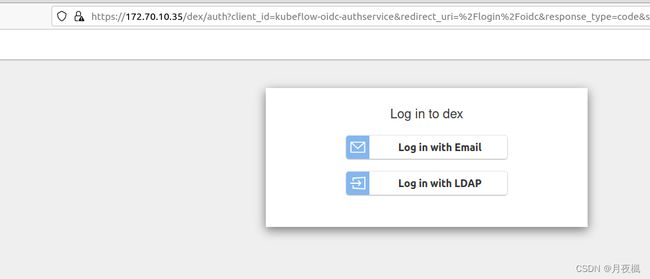

Add LDAP support

See the Dex documentation: https://dexidp.io/docs/connectors/ldap/

Find the dex service in Kubernetes:


```text
(base) [root@node3 ~]# kubectl get pods -n auth
NAME                   READY   STATUS    RESTARTS   AGE
dex-5ddf47d88d-jg2kf   1/1     Running   0          4h2m
(base) [root@node3 ~]# kubectl get pod/dex-5ddf47d88d-jg2kf -n auth -o yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    cni.projectcalico.org/containerID: a4b63ccd8259f2a57bc9019aaf49a15008d1da121835dfd6282bb6acfda531f0
    cni.projectcalico.org/podIP: 10.244.135.31/32
    cni.projectcalico.org/podIPs: 10.244.135.31/32
  creationTimestamp: "2022-08-30T03:48:54Z"
  generateName: dex-5ddf47d88d-
  labels:
    app: dex
    pod-template-hash: 5ddf47d88d
  name: dex-5ddf47d88d-jg2kf
  namespace: auth
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: dex-5ddf47d88d
    uid: 115ce097-69e4-4228-ba06-206908416093
  resourceVersion: "145713807"
  uid: 1a26c0e9-60fe-4b13-a0be-26089005bbdc
spec:
  containers:
  - command:
    - dex
    - serve
    - /etc/dex/cfg/config.yaml
    env:
    - name: KUBERNETES_POD_NAMESPACE
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.namespace
    envFrom:
    - secretRef:
        name: dex-oidc-client
    image: quay.io/dexidp/dex:v2.24.0
    imagePullPolicy: IfNotPresent
    name: dex
    ports:
    - containerPort: 5556
      name: http
      protocol: TCP
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /etc/dex/cfg
      name: config
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-qw5j7
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node3
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: dex
  serviceAccountName: dex
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - configMap:
      defaultMode: 420
      items:
      - key: config.yaml
        path: config.yaml
      name: dex
    name: config
  - name: kube-api-access-qw5j7
    projected:
      defaultMode: 420
      sources:
      - serviceAccountToken:
          expirationSeconds: 3607
          path: token
      - configMap:
          items:
          - key: ca.crt
            path: ca.crt
          name: kube-root-ca.crt
      - downwardAPI:
          items:
          - fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
            path: namespace
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T03:48:54Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T03:48:56Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T03:48:56Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2022-08-30T03:48:54Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://c66373a4e4dc033444cf9e8aee9ec446ed3e5b54c53b42d68f6b9cf50ca46886
    image: quay.io/dexidp/dex:v2.24.0
    imageID: docker-pullable://quay.io/dexidp/dex@sha256:c9b7f6d0d9539bc648e278d64de46eb45f8d1e984139f934ae26694fe7de6077
    lastState: {}
    name: dex
    ready: true
    restartCount: 0
    started: true
    state:
      running:
        startedAt: "2022-08-30T03:48:56Z"
  hostIP: 172.70.10.30
  phase: Running
  podIP: 10.244.135.31
  podIPs:
  - ip: 10.244.135.31
  qosClass: BestEffort
  startTime: "2022-08-30T03:48:54Z"
```

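The `volumes` section of this pod spec shows that `/etc/dex/cfg/config.yaml` comes from the `dex` ConfigMap, which can be found like this: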

```text
(base) [root@node3 ~]# kubectl get cm -n auth
NAME                 DATA   AGE
dex                  1      71d
istio-ca-root-cert   1      71d
kube-root-ca.crt     1      71d
(base) [root@node3 ~]# kubectl get cm/dex -n auth -o yaml
```

```yaml
apiVersion: v1
data:
  config.yaml: |
    issuer: http://dex.auth.svc.cluster.local:5556/dex
    storage:
      type: kubernetes
      config:
        inCluster: true
    web:
      http: 0.0.0.0:5556
    logger:
      level: "debug"
      format: text
    oauth2:
      skipApprovalScreen: true
    enablePasswordDB: true
    staticPasswords:
    - email: user@example.com
      hash: $2y$12$4K/VkmDd1q1Orb3xAt82zu8gk7Ad6ReFR4LCP9UeYE90NLiN9Df72
      # https://github.com/dexidp/dex/pull/1601/commits
      # FIXME: Use hashFromEnv instead
      username: user
      userID: "15841185641784"
    staticClients:
    # https://github.com/dexidp/dex/pull/1664
    - idEnv: OIDC_CLIENT_ID
      redirectURIs: ["/login/oidc"]
      name: 'Dex Login Application'
      secretEnv: OIDC_CLIENT_SECRET
```

Append an LDAP connector to the config.yaml in this ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: dex
  namespace: auth
data:
  config.yaml: |
    issuer: http://dex.auth.svc.cluster.local:5556/dex
    storage:
      type: kubernetes
      config:
        inCluster: true
    web:
      http: 0.0.0.0:5556
    logger:
      level: "debug"
      format: text
    oauth2:
      skipApprovalScreen: true
    enablePasswordDB: true
    staticPasswords:
    - email: user@example.com
      hash: $2y$12$4K/VkmDd1q1Orb3xAt82zu8gk7Ad6ReFR4LCP9UeYE90NLiN9Df72
      # https://github.com/dexidp/dex/pull/1601/commits
      # FIXME: Use hashFromEnv instead
      username: user
      userID: "15841185641784"
    staticClients:
    # https://github.com/dexidp/dex/pull/1664
    - idEnv: OIDC_CLIENT_ID
      redirectURIs: ["/login/oidc"]
      name: 'Dex Login Application'
      secretEnv: OIDC_CLIENT_SECRET
    connectors:
    - type: ldap
      # Required field for connector id.
      id: ldap
      # Required field for connector name.
      name: LDAP
      config:
        # Host and optional port of the LDAP server in the form "host:port".
        # If the port is not supplied, it will be guessed based on "insecureNoSSL",
        # and "startTLS" flags. 389 for insecure or StartTLS connections, 636
        # otherwise.
        host: 192.70.30.2:15007
        # Following field is required if the LDAP host is not using TLS (port 389).
        # Because this option inherently leaks passwords to anyone on the same network
        # as dex, THIS OPTION MAY BE REMOVED WITHOUT WARNING IN A FUTURE RELEASE.
        #
        insecureNoSSL: true
        # If a custom certificate isn't provide, this option can be used to turn on
        # TLS certificate checks. As noted, it is insecure and shouldn't be used outside
        # of explorative phases.
        #
        insecureSkipVerify: true
        # When connecting to the server, connect using the ldap:// protocol then issue
        # a StartTLS command. If unspecified, connections will use the ldaps:// protocol
        #
        startTLS: false
        # Path to a trusted root certificate file. Default: use the host's root CA.
        # rootCA: /etc/dex/ldap.ca
        # A raw certificate file can also be provided inline.
        # rootCAData: ( base64 encoded PEM file )
        # The DN and password for an application service account. The connector uses
        # these credentials to search for users and groups. Not required if the LDAP
        # server provides access for anonymous auth.
        # Please note that if the bind password contains a `$`, it has to be saved in an
        # environment variable which should be given as the value to `bindPW`.
        bindDN: cn=*****,cn=BDIT,ou=Group,dc=******,dc=com
        bindPW: *****
        # The attribute to display in the provided password prompt. If unset, will
        # display "Username"
        usernamePrompt: Email Address
        # User search maps a username and password entered by a user to a LDAP entry.
        userSearch:
          # BaseDN to start the search from. It will translate to the query
          # "(&(objectClass=person)(uid=<username>))".
          baseDN: ou=Group,dc=*****,dc=com
          # Optional filter to apply when searching the directory.
          #filter: "(objectClass=person)"
          #filter: "(&(cn=%s)(cn=%s))"
          # username attribute used for comparing user entries. This will be translated
          # and combined with the other filter as "(<attr>=<username>)".
          #username: userPrincipalName
          username: cn
          # The following three fields are direct mappings of attributes on the user entry.
          # String representation of the user.
          idAttr: DN
          # Required. Attribute to map to Email.
          #emailAttr: userPrincipalName
          emailAttr: cn
          # Maps to display name of users. No default value.
          nameAttr: cn
        # Group search queries for groups given a user entry.
        # groupSearch:
        #   # BaseDN to start the search from. It will translate to the query
        #   # "(&(objectClass=group)(member=<user uid>))".
        #   baseDN: ou=Group,dc=knowdee,dc=com
        #   # Optional filter to apply when searching the directory.
        #   filter: "(&(cn=%s)(cn=%s))"
        #   #filter: "(objectClass=group)"
        #
        #   # Following list contains field pairs that are used to match a user to a group. It adds an additional
        #   # requirement to the filter that an attribute in the group must match the user's
        #   # attribute value.
        #   userMatchers:
        #   - userAttr: DN
        #     groupAttr: member
        #
        #   # Represents group name.
        #   nameAttr: cn
```
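The `userSearch` block above works by turning the name typed at the login prompt into an LDAP search under `baseDN`. According to the Dex LDAP documentation, the optional `filter` is combined with `(<username attr>=<typed name>)`, and the typed name is escaped first.

Below is a minimal sketch of that combination using RFC 4515 escaping of the filter special characters. It mirrors the documented behaviour rather than Dex's actual Go code, and can be used to reproduce the same filter by hand with an LDAP client. The user name `alice` in the tests is hypothetical.

```python
# Characters that RFC 4515 requires to be escaped inside a filter assertion value.
RFC4515_SPECIALS = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}


def escape_filter_value(value):
    """Escape the characters RFC 4515 reserves in filter assertion values."""
    return "".join(RFC4515_SPECIALS.get(ch, ch) for ch in value)


def user_search_filter(username_attr, typed_name, base_filter=""):
    """Combine an optional base filter with (<username_attr>=<typed_name>)."""
    match = f"({username_attr}={escape_filter_value(typed_name)})"
    return f"(&{base_filter}{match})" if base_filter else match
```

With the config above (`username: cn`, no `filter`), a login as `alice` would search under `baseDN` for `(cn=alice)`. The same search can be checked by hand with something like `ldapsearch -x -H ldap://192.70.30.2:15007 -D "<bindDN>" -W -b "<baseDN>" "(cn=alice)"`.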

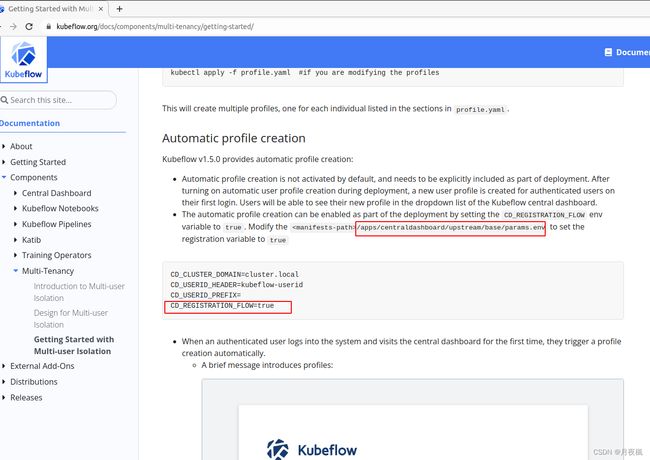

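Namespaces for LDAP users

By default, LDAP users have no namespace of their own, so they cannot request resources in Kubeflow. A namespace configuration therefore has to be added, as follows.

Find the parameter to change in the Kubeflow documentation:

Change the parameter, then run kustomize: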


```shell
kubectl apply -f …
```
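Another way to give an individual LDAP user a namespace is to create a Kubeflow `Profile` object for them; the profile controller then creates a namespace owned by that user. This is a separate route from changing the parameter.

Below is a minimal sketch that prints Profile manifests as a JSON `List` (which `kubectl apply -f -` accepts) for a list of users. It makes a few assumptions:

- It uses `apiVersion: kubeflow.org/v1`. Older releases used `v1beta1`, so check with `kubectl api-resources | grep -i profile`.
- The namespace-naming rule in `namespace_for` is my own hypothetical choice.
- `spec.owner.name` must equal the identity Kubeflow sees after login. With `emailAttr: cn` in the connector config, that should be the user's LDAP `cn` rather than a real e-mail address.

```python
import json
import re
import sys


def namespace_for(user):
    """Hypothetical naming rule: a DNS-1123 label (lowercase, digits, '-', max 63 chars)."""
    name = re.sub(r"[^a-z0-9-]+", "-", user.lower()).strip("-")
    return name[:63].rstrip("-") or "user"


def profile_manifest(user):
    """Build a Kubeflow Profile owned by `user` (must match the logged-in identity)."""
    return {
        "apiVersion": "kubeflow.org/v1",  # assumption: check what your cluster serves
        "kind": "Profile",
        "metadata": {"name": namespace_for(user)},
        "spec": {"owner": {"kind": "User", "name": user}},
    }


def profiles_as_list(users):
    """Wrap several Profiles in a v1 List so one `kubectl apply` creates them all."""
    return {"apiVersion": "v1", "kind": "List", "items": [profile_manifest(u) for u in users]}


if __name__ == "__main__":
    # Usage: python3 make_profiles.py <cn1> <cn2> ... | kubectl apply -f -
    print(json.dumps(profiles_as_list(sys.argv[1:]), indent=2))
```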