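Microservice Architecture Series (Part 1): Setting Up a Virtualization Platform, Distributed Storage, and a Highly Available k8s Cluster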

I. Setting up the virtualization platform on the physical machines

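1. Physical-to-virtual architecture diagram (each physical machine needs two disks, one for the system and one for distributed storage; mine are 10-year-old machines, so the hardware requirements are modest):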

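2. System installation: download the Proxmox VE 7.x ISO Installer from the official site (choose the version you need) and a USB writing tool; in the tool's final dialog, choose DD mode. On newer machines you can use whichever USB writing or installation tool you prefer. (To return the USB stick to everyday use afterward, reformat it by hand on Windows: cmd --> diskpart --> list disk --> select disk 1 (use the disk number that actually corresponds to your USB stick) --> clean --> create partition primary --> active --> format fs=ntfs label="my u pan" quick --> assign)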

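3. Installation: follow the installer screens step by step; there is nothing special to watch for. Ideally each machine has two disks, one for the system and a larger one kept in reserve for distributed storage. Just make sure you select the right target disk.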

II. Setting up the Ceph distributed storage environment (at least three machines; install on every one of them)

1. Run nano /etc/apt/sources.list and replace its contents with the sources below, then save and exit (Ctrl+O to save --> Enter to keep the same file name --> Ctrl+X to exit)

#deb http://ftp.debian.org/debian bullseye main contrib

#deb http://ftp.debian.org/debian bullseye-updates main contrib

# security updates

#deb http://security.debian.org bullseye-security main contrib

# debian aliyun source

deb https://mirrors.aliyun.com/debian/ bullseye main non-free contrib

deb-src https://mirrors.aliyun.com/debian/ bullseye main non-free contrib

deb https://mirrors.aliyun.com/debian-security/ bullseye-security main

deb-src https://mirrors.aliyun.com/debian-security/ bullseye-security main

deb https://mirrors.aliyun.com/debian/ bullseye-updates main non-free contrib

deb-src https://mirrors.aliyun.com/debian/ bullseye-updates main non-free contrib

deb https://mirrors.aliyun.com/debian/ bullseye-backports main non-free contrib

deb-src https://mirrors.aliyun.com/debian/ bullseye-backports main non-free contrib

# proxmox source

# deb http://download.proxmox.com/debian/pve bullseye pve-no-subscription

deb https://mirrors.ustc.edu.cn/proxmox/debian/pve bullseye pve-no-subscription

2. Run nano /etc/apt/sources.list.d/pve-enterprise.list, comment out the existing enterprise repository line, then save and exit

# deb https://enterprise.proxmox.com/debian/pve buster pve-enterprise

3. Run nano /etc/apt/sources.list.d/ceph.list, replace the Ceph source with the line below, then save and exit

deb http://mirrors.ustc.edu.cn/proxmox/debian/ceph-pacific bullseye main

4. Update the package sources

root@masterxxx:~# apt-get update

root@masterxxx:~# apt-get upgrade

root@masterxxx:~# apt-get dist-upgrade

5. Check whether /etc/apt/sources.list.d/ceph.list was changed by the upgrade; if it was, repeat step 3
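The check in step 5 can also be done mechanically. This is only a sketch: it assumes you want exactly the USTC line from step 3, and `ceph_list_ok` is a helper name made up for this example, not a Proxmox tool.

```shell
#!/bin/sh
# Expected repository line from step 3 (assumption: you use the same mirror).
EXPECTED='deb http://mirrors.ustc.edu.cn/proxmox/debian/ceph-pacific bullseye main'

# ceph_list_ok FILE: succeed only if FILE contains the expected line
# as an active (uncommented) entry, matched as a whole line.
ceph_list_ok() {
    grep -Fxq "$EXPECTED" "$1"
}
```

For example: `ceph_list_ok /etc/apt/sources.list.d/ceph.list || echo "ceph.list changed, repeat step 3"`.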

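6. Configure the Proxmox 7.1 cluster: I created it while logged in to master001, and 002 and 003 simply joined it

7. Click the Ceph entry below the cluster entry; a dialog pops up with a configure button. Click it, set the public and internal (cluster) network IPs, and that's it (I use master001 as the management node)

8. Then log in to every Ceph node (mine are also the Proxmox nodes), inspect the disks, and bind the spare disk. Mine is /dev/sda; check each node and bind whatever disk it actually has.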

root@masterxxx:~# fdisk -l

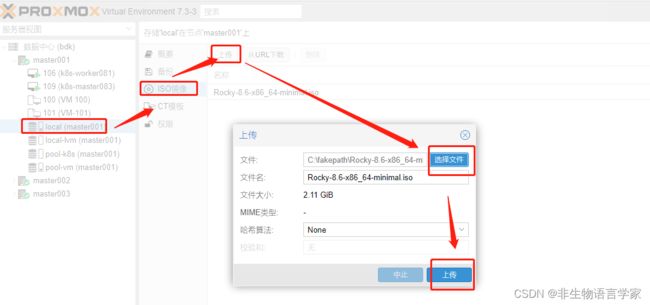

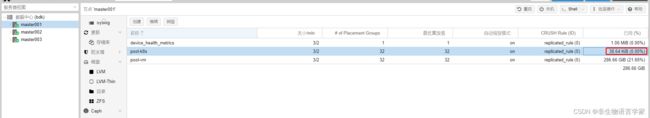

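root@masterxxx:~# pveceph osd create /dev/sda

9. Create the Ceph pools pool-k8s and pool-vm for later use. If you change the size, click Advanced and change the PG count (pgs) to a matching value; I created them with the defaults. Finally, upload the system ISO image.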
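If you do change the size, a common rule of thumb from the Ceph community is a total of roughly (OSDs × 100) / size placement groups, rounded up to a power of two and shared among all pools. The sketch below only does that arithmetic; `pg_hint` is a name made up here, and the PG autoscaler on your Ceph release may pick different values on its own.

```shell
#!/bin/sh
# pg_hint OSDS SIZE: print (OSDS * 100) / SIZE rounded up to a power of two.
# This is a rule-of-thumb total for the cluster, not a per-pool guarantee.
pg_hint() {
    target=$(( $1 * 100 / $2 ))
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}
```

With three OSDs and size 3 (my setup: one spare disk per node), `pg_hint 3 3` prints 128.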

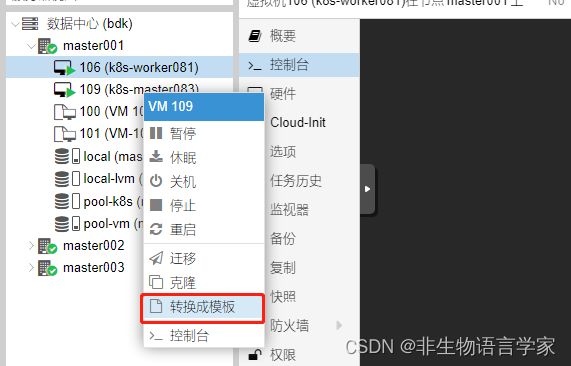

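10. Install the base system for k8s (for the disk and the image, select the pool created and the ISO uploaded in the previous step; follow the prompts, wait for the VM to be created, click Start, and finish the base OS installation in the console on the right). Finally, remove the CD/DVD drive (migration fails if it stays attached) and convert the VM to a template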

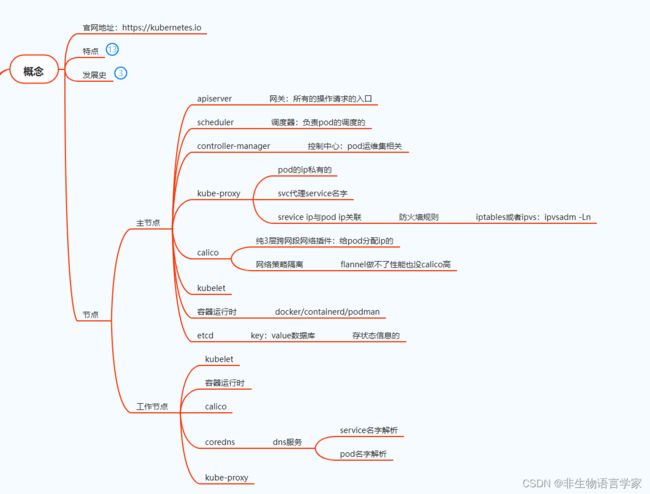

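III. Building a k8s cluster with 3 control-plane nodes and 3 workers

1. Environment overview

OS: Rocky Linux 8.6 (4 cores, 8 GB) × 6 VMs; with the base systems all running at once, actual memory use is only about 3.5 GB

High-availability middleware: nginx + Keepalived (makes the control-plane nodes highly available)

Network plugin: Calico

Time synchronization: chrony

Node details diagram:

2. k8s installation diagram. My physical machines are on the 172.16.0 subnet and the VMs are on the 172.16.1 subnet, and the two can reach each other; you can put everything on the same subnet if that suits your setup

3. Make a full clone (not a linked clone) of the template as one VM (this becomes the k8s base environment)

3.1. Install base tools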

[root@anonymous ~]# yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo libaio-devel wget vim ncurses-devel autoconf automake zlib-devel epel-release openssh-server socat ipvsadm conntrack telnet

3.2. Configure time synchronization

[root@anonymous ~]# yum -y install chrony

[root@anonymous ~]# systemctl enable chronyd --now

[root@anonymous ~]# vim /etc/chrony.conf

Delete:

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

In the same place, insert NTP servers located in China:

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp1.tencent.com iburst

server ntp2.tencent.com iburst
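The manual edit above can also be scripted. A minimal sketch, assuming GNU sed; `set_ntp_servers` is a helper name invented for this example. Unlike the manual edit, it appends the new entries at the end of the file rather than in the original position, which chrony does not care about.

```shell
#!/bin/sh
# set_ntp_servers FILE SERVER...: drop the existing "server"/"pool" lines
# from FILE and append one "server <name> iburst" line per argument.
set_ntp_servers() {
    conf=$1
    shift
    # Remove the current time sources (some distributions ship "pool" lines
    # instead of the "server" lines shown above).
    sed -i -E '/^(server|pool)[[:space:]]/d' "$conf"
    for s in "$@"; do
        printf 'server %s iburst\n' "$s" >> "$conf"
    done
}
```

For example: `set_ntp_servers /etc/chrony.conf ntp1.aliyun.com ntp2.aliyun.com ntp1.tencent.com ntp2.tencent.com`.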

[root@anonymous ~]# systemctl restart chronyd

3.3. Disable the firewall, SELinux, and swap

[root@anonymous ~]# systemctl stop firewalld ; systemctl disable firewalld

# Permanently disable SELinux (takes effect only after a reboot)

[root@anonymous ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Temporarily disable SELinux

[root@anonymous ~]# setenforce 0

# Temporarily disable swap

[root@anonymous ~]# swapoff -a
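The permanent change that follows is made by hand in vim. As a non-interactive alternative, here is a sketch that comments out every active swap entry in an fstab-style file; it assumes GNU sed, and `comment_out_swap` is a name made up for this example.

```shell
#!/bin/sh
# comment_out_swap FILE: prefix with "#" every uncommented line that has
# "swap" as a whitespace-delimited field (the filesystem type column).
# Lines that are already commented out are left untouched.
comment_out_swap() {
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```

For example: `comment_out_swap /etc/fstab` (keep a backup copy of the file first).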

# Permanently disable swap: comment out the swap mount line in /etc/fstab (for example: /dev/mapper/centos-swap swap)

[root@anonymous ~]# vim /etc/fstab

3.4. Adjust kernel parameters

[root@anonymous ~]# modprobe br_netfilter

[root@anonymous ~]# lsmod | grep br_netfilter
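The body of the sysctl file written in this step is cut short in the text. The sketch below is an assumption, not a recovery of the original: it writes the three settings usually listed as kubeadm prerequisites once br_netfilter is loaded. `write_k8s_sysctl` is a helper name invented here.

```shell
#!/bin/sh
# write_k8s_sysctl FILE: write bridge-netfilter and IP forwarding settings.
# Assumption: these three keys are the commonly documented kubeadm
# prerequisites; adjust them if your original file differed.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

For example: `write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system` to write the file and load it.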

[root@anonymous ~]# cat > /etc/sysctl.d/k8s.conf <

3.5. Configure the package repositories

# Docker repository:

[root@anonymous ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Kubernetes repository:

[root@anonymous ~]# tee /etc/yum.repos.d/kubernetes.repo <<-'EOF'

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

3.6. Install k8s and the container runtime (I chose Docker)

# Install Docker

[root@anonymous ~]# yum install docker-ce -y

[root@anonymous ~]# systemctl start docker && systemctl enable docker.service

[root@anonymous ~]# tee /etc/docker/daemon.json << 'EOF'

{

"registry-mirrors":["https://vh3bm52y.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

[root@anonymous ~]# systemctl daemon-reload

[root@anonymous ~]# systemctl restart docker

[root@anonymous ~]# systemctl enable docker

[root@anonymous ~]# systemctl status docker

# Install the k8s components

[root@anonymous ~]# yum install -y kubelet-1.23.1 kubeadm-1.23.1 kubectl-1.23.1

[root@anonymous ~]# systemctl enable kubelet

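# kubelet: runs on every node in the cluster and starts Pods and containers

# kubeadm: command-line tool that initializes and bootstraps the cluster

# kubectl: command-line client for talking to the cluster; use it to deploy and manage applications, inspect resources, and create, delete, and update components

3.7. Shut down this VM and convert it to a template, then right-click --> Clone --> Full Clone, repeating until you have 6 VMs in total

3.8. Configure a static IP and a hostname on each VM cloned from the template

# Set a static IP (my NIC is eth0; change this to your actual NIC name)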

[root@192 network-scripts]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

# Reference configuration; replace X with the last octet of the host's actual IP

BOOTPROTO=static

IPADDR=172.16.1.X

NETMASK=255.255.255.0

GATEWAY=172.16.1.1

DNS1=172.16.1.1

# Set the hostname

[root@anonymous ~]# hostnamectl set-hostname master083 && bash

[root@master083 ~]# vim /etc/hosts

# Host entries to add

172.16.1.83 master083

172.16.1.78 master078

172.16.1.79 master079

172.16.1.80 worker080

172.16.1.81 worker081

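172.16.1.82 worker082

4. Control-plane node configuration (master083, master078, master079)

4.1. Pick one VM as the primary control-plane node (I chose 172.16.1.83 master083)

# Press Enter at every prompt; do not set a passphrase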

[root@master083 ~]# ssh-keygen

# Install the local SSH public key into the matching account on each remote host; answer yes, then enter the remote host's password

[root@master083 ~]# ssh-copy-id master078

[root@master083 ~]# ssh-copy-id master079

[root@master083 ~]# ssh-copy-id master083

[root@master083 ~]# ssh-copy-id worker080

[root@master083 ~]# ssh-copy-id worker081

[root@master083 ~]# ssh-copy-id worker082

4.2. Install nginx + Keepalived (on all 3 control-plane nodes)

[root@master083 ~]# yum install nginx keepalived nginx-mod-stream -y

4.3. Edit or replace the nginx configuration (on all 3 control-plane nodes; file: /etc/nginx/nginx.conf)

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 172.16.1.83:6443 weight=5 max_fails=3 fail_timeout=30s;

server 172.16.1.78:6443 weight=5 max_fails=3 fail_timeout=30s;

server 172.16.1.79:6443 weight=5 max_fails=3 fail_timeout=30s;

}

server {

listen 16443; # nginx shares the host with the apiserver, so this port must not be 6443 or the two will conflict

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}
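If your control-plane IPs differ from mine, the three upstream lines in the stream block can be generated rather than typed. A small sketch; `upstream_servers` is a name made up here, and the port and failure settings are simply copied from the configuration above.

```shell
#!/bin/sh
# upstream_servers IP...: print one stream-upstream "server" line per
# control-plane IP, with the same port and failure settings as above.
upstream_servers() {
    for ip in "$@"; do
        printf 'server %s:6443 weight=5 max_fails=3 fail_timeout=30s;\n' "$ip"
    done
}
```

For example: `upstream_servers 172.16.1.83 172.16.1.78 172.16.1.79`. After editing the file, `nginx -t` checks the syntax before you start the service.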

4.4. Edit or create the Keepalived configuration (on all 3 control-plane nodes; file: /etc/keepalived/keepalived.conf)

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

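state MASTER # use BACKUP on the standby servers (with several MASTERs the nodes cannot ping each other); my .83 node is the MASTER

interface ens18 # change to your actual NIC name

virtual_router_id 83 # VRRP router ID; unique per VRRP instance, identical on all three nodes

priority 100 # priority; on the standbys (78 and 79) use lower, distinct values such as 90 and 80

advert_int 1 # VRRP advertisement (heartbeat) interval; the default is 1 second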

authentication {

auth_type PASS

auth_pass 1111

}

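# Virtual IP

virtual_ipaddress {

172.16.1.46/24 # one virtual IP that fronts the 3 control-plane nodes; pick an address that is unused on your network and use the same one as controlPlaneEndpoint in step 4.7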

}

track_script {

check_nginx

}

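}

4.5. Reference check_nginx.sh (remember to make it executable)

#!/bin/bash
# 1. Check whether nginx is alive
counter=$(ps -C nginx --no-header | wc -l)
if [ "$counter" -eq 0 ]; then
    # 2. If it is not, try to start nginx
    service nginx start
    sleep 2
    # 3. After waiting 2 seconds, check the nginx state again
    counter=$(ps -C nginx --no-header | wc -l)
    # 4. If nginx is still down, stop Keepalived so the virtual IP fails over
    if [ "$counter" -eq 0 ]; then
        service keepalived stop
    fi
fi

4.6. Start nginx and Keepalived on all 3 control-plane nodes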

[root@master083 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@master083 ~]# systemctl daemon-reload

[root@master083 ~]# systemctl enable nginx keepalived

[root@master083 ~]# systemctl start nginx

[root@master083 ~]# systemctl start keepalived

4.7. Create the k8s cluster configuration file kubeadm-config.yaml (on the node I chose as the Keepalived MASTER, master083)

# Reference YAML
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.23.1
# The virtual IP configured in Keepalived above
controlPlaneEndpoint: 172.16.1.46:16443
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 172.16.1.83
  - 172.16.1.78
  - 172.16.1.79
  - 172.16.1.80
  - 172.16.1.81
  - 172.16.1.82
  - 172.16.1.46
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.10.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
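The configuration lists the virtual IP twice, once as controlPlaneEndpoint and once under certSANs, so a typo in either place is easy to miss. Below is a sketch of a consistency check; both function names are made up for this example, and the parsing assumes the simple one-value-per-line layout used above rather than arbitrary YAML.

```shell
#!/bin/sh
# endpoint_host FILE: print the host part of "controlPlaneEndpoint: host:port".
endpoint_host() {
    sed -n -E 's/^controlPlaneEndpoint:[[:space:]]*"?([^":]+):[0-9]+"?[[:space:]]*$/\1/p' "$1"
}

# endpoint_in_sans FILE: succeed if that host also appears as a "- host"
# list item somewhere in FILE (in this config, the certSANs list).
endpoint_in_sans() {
    host=$(endpoint_host "$1")
    [ -n "$host" ] || return 1
    awk -v h="$host" '$1 == "-" && $2 == h { found = 1 } END { exit !found }' "$1"
}
```

For example: `endpoint_in_sans kubeadm-config.yaml || echo "virtual IP missing from certSANs"`.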

4.8. Initialize the cluster from the configuration file (on the Keepalived MASTER, master083)

# Initialize

[root@master083 ~]# kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=SystemVerification

# Copy the admin kubeconfig

[root@master083 ~]# mkdir -p $HOME/.kube

[root@master083 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master083 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

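# List the nodes

[root@master083 ~]# kubectl get nodes

5. Join the remaining nodes to the cluster

5.1. Join the control-plane nodes, using master079 as the example (repeat the same steps for master078)

# Create the certificate directories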

[root@master079 ~]# cd /root && mkdir -p /etc/kubernetes/pki/etcd &&mkdir -p ~/.kube/

# Copy the certificates

[root@master083 ~]# scp /etc/kubernetes/pki/ca.crt master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/ca.key master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/sa.key master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/sa.pub master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/front-proxy-ca.key master079:/etc/kubernetes/pki/

[root@master083 ~]# scp /etc/kubernetes/pki/etcd/ca.crt master079:/etc/kubernetes/pki/etcd/

[root@master083 ~]# scp /etc/kubernetes/pki/etcd/ca.key master079:/etc/kubernetes/pki/etcd/
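Since the same eight files go to each additional control-plane node, the copy can be expressed as a loop. A sketch that only prints the scp commands so you can review them first; both function names are made up for this example.

```shell
#!/bin/sh
# control_plane_certs: print the shared certificate files, relative to
# /etc/kubernetes/pki, that the scp commands above copy.
control_plane_certs() {
    printf '%s\n' ca.crt ca.key sa.key sa.pub \
        front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key
}

# copy_certs_cmds HOST: print (do not run) one scp command per file.
copy_certs_cmds() {
    control_plane_certs | while read -r f; do
        echo "scp /etc/kubernetes/pki/$f $1:/etc/kubernetes/pki/$f"
    done
}
```

For example, `copy_certs_cmds master078` lists the commands for master078, and `copy_certs_cmds master078 | sh` runs them once the target directories from the previous step exist.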

# Print the join command with its token and sha256 hash

[root@master083 ~]# kubeadm token create --print-join-command

# Replace the endpoint and xxx with the values printed by the previous command (rolling a VM back can leave its clock wrong; you can force a time sync by hand with: chronyc -a makestep)

[root@master079 ~]# kubeadm join 172.16.1.46:16443 --token xxx --discovery-token-ca-cert-hash sha256:xxx --control-plane --ignore-preflight-errors=SystemVerification
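The control-plane join is just the printed join command with two flags appended, so it can be assembled instead of retyped. A tiny sketch; `control_plane_join` is a name made up for this example, and it does nothing beyond string handling.

```shell
#!/bin/sh
# control_plane_join "JOIN COMMAND": strip trailing whitespace from the
# command printed by kubeadm and append the flags used in this article
# for a control-plane node.
control_plane_join() {
    base=$(printf '%s' "$1" | sed -E 's/[[:space:]]+$//')
    echo "$base --control-plane --ignore-preflight-errors=SystemVerification"
}
```

For example, on master083: `control_plane_join "$(kubeadm token create --print-join-command)"`, then run the printed line on the node that is joining.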

# Copy the admin kubeconfig

[root@master079 ~]# mkdir -p $HOME/.kube

[root@master079 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master079 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# View the node information

[root@master079 ~]# kubectl get nodes

5.2. Join the worker nodes

[root@worker080 ~]# kubeadm join 172.16.1.46:16443 --token *** --discovery-token-ca-cert-hash sha256:****** --ignore-preflight-errors=SystemVerification

[root@worker081 ~]# kubeadm join 172.16.1.46:16443 --token *** --discovery-token-ca-cert-hash sha256:****** --ignore-preflight-errors=SystemVerification

[root@worker082 ~]# kubeadm join 172.16.1.46:16443 --token *** --discovery-token-ca-cert-hash sha256:****** --ignore-preflight-errors=SystemVerification

# Label the worker nodes

[root@master083 ~]# kubectl label node worker080 node-role.kubernetes.io/worker=worker

[root@master083 ~]# kubectl label node worker081 node-role.kubernetes.io/worker=worker

[root@master083 ~]# kubectl label node worker082 node-role.kubernetes.io/worker=worker

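[root@master083 ~]# kubectl get nodes

6. Install and verify the network plugin; after this the basic k8s deployment is complete

6.1. Install Calico (calico.yaml download: https://docs.projectcalico.org/manifests/calico.yaml). The manifest pulls from Google-hosted sources, which can make the installation fail, and it needs some customization; the calico file used for this article can serve as a reference, or configure your own if you know Calico well

# Install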

[root@master083 ~]# kubectl apply -f calico.yaml

# Wait for the automatic configuration to finish, then check that networking is up

[root@master083 ~]# kubectl get nodes -owide

NAME        STATUS   ROLES                  AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                           KERNEL-VERSION              CONTAINER-RUNTIME
master078   Ready    control-plane,master   37h   v1.23.1   172.16.1.78   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
master079   Ready    control-plane,master   37h   v1.23.1   172.16.1.79   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
master083   Ready    control-plane,master   37h   v1.23.1   172.16.1.83   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
worker080   Ready    worker                 37h   v1.23.1   172.16.1.80   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
worker081   Ready    worker                 37h   v1.23.1   172.16.1.81   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
worker082   Ready    worker                 37h   v1.23.1   172.16.1.82   <none>        Rocky Linux 8.6 (Green Obsidian)   4.18.0-372.9.1.el8.x86_64   docker://20.10.21
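With six nodes it is easy to overlook one that has not come up yet. A sketch that filters the output of kubectl get nodes; `not_ready_nodes` is a name made up for this example and it only parses text from standard input.

```shell
#!/bin/sh
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is reported as well.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

For example: `kubectl get nodes | not_ready_nodes` prints nothing once Calico is running and every node is Ready.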

6.2. Create a pod and open a shell in it to check that DNS and external connectivity work

[root@master083 ~]# docker pull busybox:1.28

# External network check

[root@master083 ~]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

/ # ping www.baidu.com

# DNS check

[root@master083 ~]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

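/ # nslookup kubernetes.default.svc.cluster.local

7. Set up distributed storage for k8s (skip this step if you use shared NFS storage instead)

7.1. On the Ceph management node, create the account kube for operating the distributed storage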

root@master001:~# ceph auth get-or-create client.kube mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=pool-k8s' -o ceph.client.kube.keyring

# View the keys of the kube and admin users

root@master001:~# ceph auth get-key client.admin

root@master001:~# ceph auth get-key client.kube

7.2. On the k8s control-plane node, register the Ceph account credentials

# 1. Create the dev namespace

[root@master083 ~]# kubectl create ns dev

# 2. Create the admin secret; replace the key with the client.admin value obtained in step 7.1

[root@master083 ~]# kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" \

--from-literal=key='AQDSdZBjX15VFBAA+zJDZ8reSLCm2UAxtEW+Gw==' \

--namespace=kube-system

# 3. In the dev namespace, create the secret that PVCs use to access Ceph; replace the key with the client.kube value obtained in step 7.1

[root@master083 ~]# kubectl create secret generic ceph-user-secret --type="kubernetes.io/rbd" \

--from-literal=key='AQCizZJjB19ADxAAmx0yYeL2QDJ5j3WsN/jyGA==' \

--namespace=dev

# 4. View the secrets

[root@master083 ~]# kubectl get secret ceph-user-secret -o yaml -n dev

[root@master083 ~]# kubectl get secret ceph-secret -o yaml -n kube-system

7.3. Create a service account that governs the permissions the Ceph provisioner runs with in the k8s cluster (rbac-ceph.yaml)

apiVersion: v1
kind: ServiceAccount # account that governs the permissions the Ceph provisioner runs with in the k8s cluster
metadata:
  name: rbd-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system

7.4. Create the Ceph provisioner (provisioner-ceph.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rbd-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
        - name: rbd-provisioner
          image: "quay.io/external_storage/rbd-provisioner:latest"
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: ceph-conf
              mountPath: /etc/ceph
          env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
      serviceAccount: rbd-provisioner
      volumes:
        - name: ceph-conf
          hostPath:
            path: /etc/ceph
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-rbd
provisioner: ceph.com/rbd
parameters:
  monitors: 172.16.0.143:6789,172.16.0.211:6789,172.16.0.212:6789
  adminId: admin # Ceph user that k8s uses for admin access
  adminSecretName: ceph-secret # name of the admin secret
  adminSecretNamespace: kube-system # namespace of the admin secret
  pool: pool-k8s # Ceph RBD pool
  userId: kube # Ceph user that k8s uses to access the pool
  userSecretName: ceph-user-secret # name of the user secret; no namespace needed
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
reclaimPolicy: Retain
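The monitors value is a comma-separated list of your Ceph monitor addresses (on the Ceph side, `ceph mon dump` shows them). A sketch that builds the string from plain IPs, assuming every monitor listens on port 6789 as in the StorageClass above; `monitors_list` is a name made up for this example.

```shell
#!/bin/sh
# monitors_list IP...: join the monitor IPs as "ip:6789,ip:6789,...".
monitors_list() {
    out=""
    for ip in "$@"; do
        # Add a comma separator only when something is already collected.
        out="${out:+$out,}$ip:6789"
    done
    echo "$out"
}
```

For example: `monitors_list 172.16.0.143 172.16.0.211 172.16.0.212` reproduces the value used above.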

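7.5. Copy the files under /etc/ceph on the Ceph server (for me, the physical machine master001) to every worker node. The resulting /etc/ceph layout on worker node worker081 is shown below for reference

7.6. On the k8s control-plane node master083, apply the manifests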

[root@master083 ~]# kubectl apply -f rbac-ceph.yaml

[root@master083 ~]# kubectl apply -f provisioner-ceph.yaml

# Check which node the provisioner started on

[root@master083 ~]# kubectl get pod -n kube-system -owide

................ ... ....... ........ ...

kube-proxy-s5mfz 1/1 Running 4 (22h ago) 37h 172.16.1.81 worker081

kube-proxy-tqksh 1/1 Running 4 38h 172.16.1.79 master079

kube-proxy-w4h57 1/1 Running 4 (22h ago) 37h 172.16.1.82 worker082

kube-scheduler-master078 1/1 Running 30 38h 172.16.1.78 master078

kube-scheduler-master079 1/1 Running 24 38h 172.16.1.79 master079

kube-scheduler-master083 1/1 Running 18 (127m ago) 38h 172.16.1.83 master083

rbd-provisioner-579d59bb7b-ssd8b 1/1 Running 6 3h45m 10.244.129.207 worker081

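7.7. Troubleshooting notes (you can skip this and go straight to 7.8)

7.7.1. If the provisioner is not Running the first time, pull the image on the node it was scheduled to (for me, that is worker081)

[root@worker081 ~]# docker pull quay.io/external_storage/rbd-provisioner:latest

7.7.2. After copying the files, it is best to upgrade Ceph inside the provisioner container

# Enter the container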

[root@master083 ~]# kubectl exec -it rbd-provisioner-579d59bb7b-ssd8b -c rbd-provisioner -n kube-system -- sh

# Check the version; it is too old to be compatible

sh-4.2# ceph -v

# Update the container's yum repository

sh-4.2# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# Configure the Ceph repository
sh-4.2# cat >>/etc/yum.repos.d/ceph.repo<< eof
[ceph]
name=ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
gpgcheck=0
priority=1
enabled=1
[ceph-noarch]
name=cephnoarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
gpgcheck=0
priority=1
enabled=1
[ceph-source]
name=Ceph source packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS/
gpgcheck=0
priority=1
enabled=1
eof

# Update

sh-4.2# yum -y update

# List the installable versions

sh-4.2# yum list ceph-common --showduplicates | sort -r

ceph-common.x86_64 2:14.2.9-0.el7 ceph

ceph-common.x86_64 2:14.2.8-0.el7 ceph

ceph-common.x86_64 2:14.2.7-0.el7 ceph

ceph-common.x86_64 2:14.2.6-0.el7 ceph

ceph-common.x86_64 2:14.2.5-0.el7 ceph

ceph-common.x86_64 2:14.2.4-0.el7 ceph

ceph-common.x86_64 2:14.2.3-0.el7 ceph

ceph-common.x86_64 2:14.2.22-0.el7 ceph

ceph-common.x86_64 2:14.2.22-0.el7 @ceph

ceph-common.x86_64 2:14.2.21-0.el7 ceph

ceph-common.x86_64 2:14.2.20-0.el7 ceph

ceph-common.x86_64 2:14.2.2-0.el7 ceph

.................. ............... ....
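Note that plain `sort -r` compares text, which is why 14.2.9 appears above 14.2.22 in the listing. A sketch that picks the highest version with GNU sort's version ordering instead; `latest_version` is a name made up for this example.

```shell
#!/bin/sh
# latest_version: read version strings on stdin (one per line) and print
# the highest one according to GNU sort's version ordering (-V).
latest_version() {
    sort -V | tail -n 1
}
```

For example: `yum list ceph-common --showduplicates | awk '$1 ~ /^ceph-common/ { print $2 }' | latest_version`.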

# Install the latest version

sh-4.2# yum install -y ceph-common-14.2.22-0.el7

sh-4.2# ceph -v

# On worker081, look up the running container and commit it as a new image (9fb54e49f9bf is the ID of the running container, not of the image; docker images and docker ps let you match the running container to its image)

[root@worker081 ~]# sudo docker commit -m "update ceph-common 14.2.22 " -a "morik" 9fb54e49f9bf provisioner/ceph:14.2.22

[root@worker081 ~]# docker save -o ceph_provisioner_14.2.22.tar.gz provisioner/ceph:14.2.22

# Delete the Deployment on master083 first, then the old Docker image on worker081

[root@master083 ~]# kubectl delete deployment rbd-provisioner -n kube-system

[root@worker081 ~]# docker rmi -f quay.io/external_storage/rbd-provisioner:latest

# Copy the newly created ceph_provisioner_14.2.22.tar.gz image archive to each worker node and load it

[root@worker080 ~]# docker load -i /home/ceph_provisioner_14.2.22.tar.gz

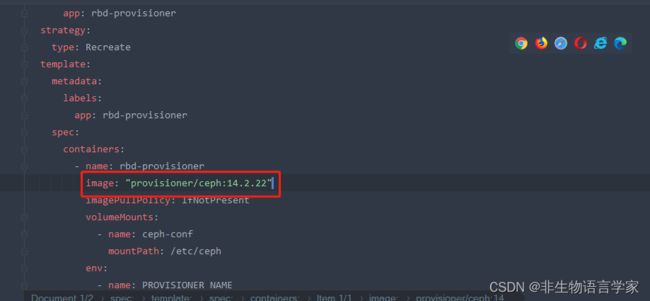

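[root@worker082 ~]# docker load -i /home/ceph_provisioner_14.2.22.tar.gz

7.8. Change the image name used by the provisioner Deployment (you can use the Docker image provided with this article directly, or build your own as described in 7.7.2)

7.9. Test a PVC (test-ceph.yaml)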

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-sc-claim
  namespace: dev
spec:
  storageClassName: ceph-rbd
  accessModes:
    - ReadWriteOnce
    - ReadOnlyMany
  resources:
    requests:
      storage: 500Mi

7.10. Run the test and check the result (initially 12k --> 38k after formatting)

[root@master083 ~]# kubectl apply -f test-ceph.yaml

[root@master083 ~]# kubectl get pvc -n dev

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

ceph-sc-claim Bound pvc-406871be-069e-4ac1-84c9-ccc1589fd880 500Mi RWO,ROX ceph-rbd 4h35m

[root@master083 ~]# kubectl get pv -n dev

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-406871be-069e-4ac1-84c9-ccc1589fd880 500Mi RWO,ROX Retain Bound dev/ceph-sc-claim ceph-rbd 4h35m
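To check the claim from a script rather than by eye, the STATUS column can be pulled out of the same output. A sketch; `pvc_status` is a name made up for this example and it only parses text from standard input.

```shell
#!/bin/sh
# pvc_status NAME: read `kubectl get pvc` output on stdin and print the
# STATUS column of the claim called NAME (prints nothing if it is absent).
pvc_status() {
    awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}
```

For example: `[ "$(kubectl get pvc -n dev | pvc_status ceph-sc-claim)" = "Bound" ] && echo "claim is bound"`.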
