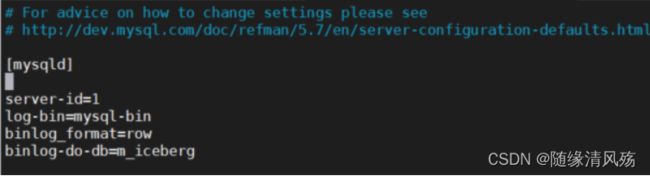

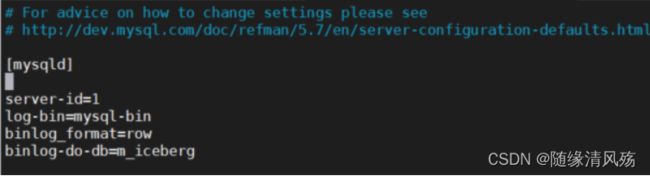

1、开启binlog日志

1.1、在mysql中对需要进行实时数据监测的库开启binlog同步

sudo vim /etc/my.cnf

server-id=1

log-bin=mysql-bin

binlog_format=row

binlog-do-db=m_iceberg

sudo systemctl restart mysqld

1.2、查询是否开启

cd /var/lib/mysql

2、实时写入前提

- 实时写入必要配置:在flink-conf.yaml中配置checkpoints相关参数

execution.checkpointing.interval: 3min

execution.checkpointing.externalized-checkpoint-retention: RETAIN_ON_CANCELLATION

execution.checkpointing.max-concurrent-checkpoints: 1

execution.checkpointing.min-pause: 0

execution.checkpointing.mode: EXACTLY_ONCE

execution.checkpointing.timeout: 10min

execution.checkpointing.tolerable-failed-checkpoints: 0

execution.checkpointing.unaligned: false

state.backend: filesystem

state.checkpoints.dir: hdfs:///flink/flink-checkpoints

state.savepoints.dir: hdfs:///flink/flink-savepoints

flink-sql-connector-kafka_2.11-1.13.5.jar

flink-sql-connector-mysql-cdc-1.3.0.jar

3、数据源创建

CREATE TABLE `product_view` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`user_id` int(11) NOT NULL,

`product_id` int(11) NOT NULL,

`server_id` int(11) NOT NULL,

`duration` int(11) NOT NULL,

`times` varchar(11) NOT NULL,

`time` datetime NOT NULL,

PRIMARY KEY (`id`),

KEY `time` (`time`),

KEY `user_product` (`user_id`,`product_id`) USING BTREE,

KEY `times` (`times`) USING BTREE

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

INSERT INTO `product_view` VALUES ('1', '1', '1', '1', '120', '120', '2020-04-24 13:14:00');

INSERT INTO `product_view` VALUES ('2', '1', '1', '1', '120', '120', '2020-04-24 13:14:00');

INSERT INTO `product_view` VALUES ('3', '1', '1', '3', '120', '120', '2020-04-24 13:14:00');

INSERT INTO `product_view_sink` VALUES ('4', '1', '1', '2', '120', '120', '2020-04-24 13:14:00');

INSERT INTO `product_view` VALUES ('5', '8', '1', '1', '120', '120', '2020-05-14 13:14:00');

INSERT INTO `product_view` VALUES ('6', '8', '1', '2', '120', '120', '2020-05-13 13:14:00');

INSERT INTO `product_view` VALUES ('7', '8', '1', '3', '120', '120', '2020-04-24 13:14:00');

INSERT INTO `product_view` VALUES ('8', '8', '1', '3', '120', '120', '2020-04-23 13:14:00');

INSERT INTO `product_view` VALUES ('9', '8', '1', '2', '120', '120', '2020-05-13 13:14:00');

INSERT INTO `product_view` VALUES (10, 8, 1, 2, 120, '120', '2020-05-13 13:14:00');

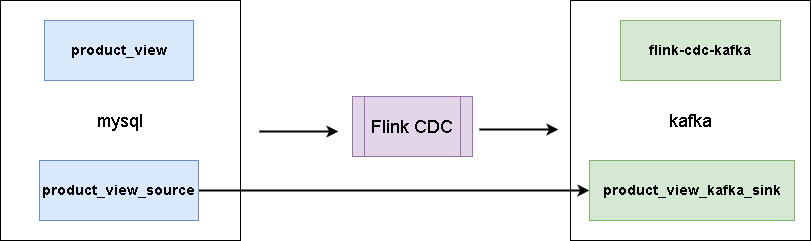

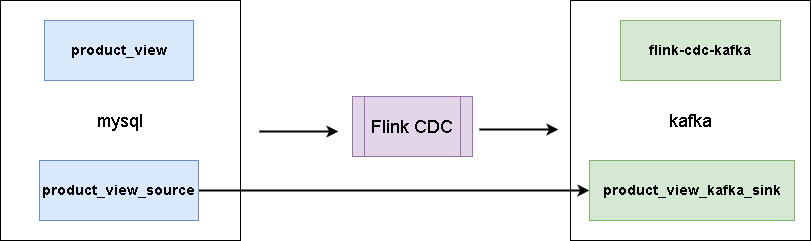

4、创建数据表关联Mysql

- 含义:我们在flink sql client操作这个表相当于操作mysql里面的对应表。

CREATE TABLE `default_catalog`.`default_database`.`product_view_source` (

`id` int,

`user_id` int,

`product_id` int,

`server_id` int,

`duration` int,

`times` string,

`time` timestamp,

PRIMARY KEY (`id`) NOT ENFORCED

) WITH (

'connector' = 'mysql-cdc',

'hostname' = '192.168.6.102',

'port' = '3306',

'username' = 'root',

'password' =、,

'database-name' = 'm_iceberg',

'table-name' = 'product_view'

);

5、Mysql表入湖

CREATE EXTERNAL TABLE product_view_sink (

`id` int,

`user_id` int,

`product_id` int,

`server_id` int,

`duration` int,

`times` string,

`time` timestamp,

PRIMARY KEY (`id`) NOT ENFORCED

) STORED BY 'org.apache.iceberg.mr.hive.HiveIcebergStorageHandler'

TBLPROPERTIES ( 'write.metadata.delete-after-commit.enabled'='true',

'write.metadata.previous-versions-max'='5',

'format-version'='2');

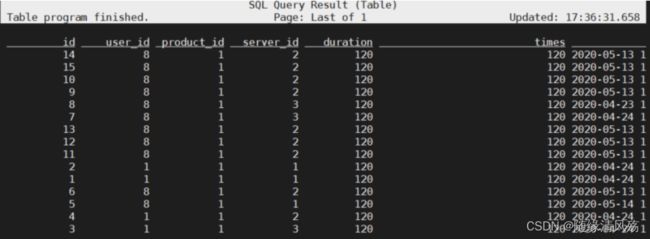

insert into product_view_sink select * from default_catalog.default_database.product_view_source;

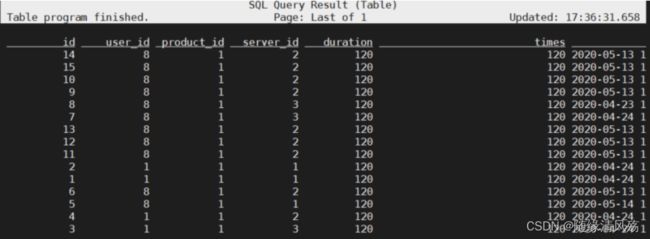

- 验证结果:Source表(在default_catalog)可实时查看,Sink表(在hive_Iceberg)无法实时查看。

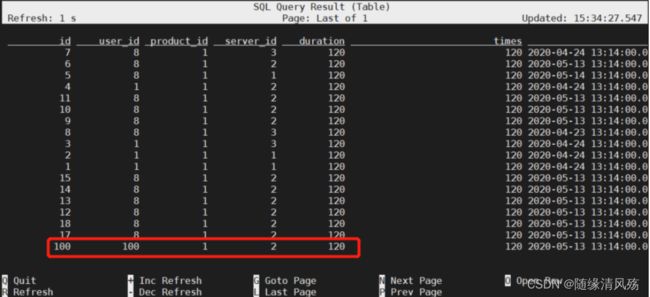

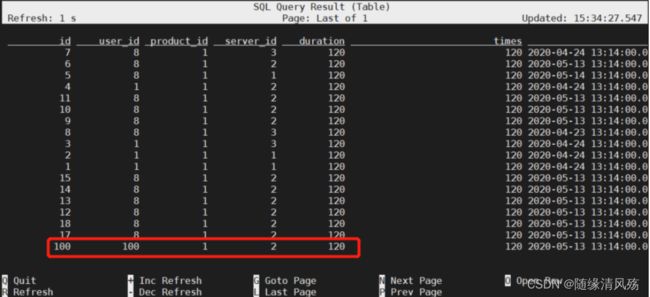

6、数据的增删改同步

- 验证结果:Flink1.14.5支持数据增删改同步

- hive建表配置

TBLPROPERTIES ( 'write.metadata.delete-after-commit.enabled'='true',

'write.metadata.previous-versions-max'='5',

'format-version'='2');

6.1、数据增加验证

INSERT INTO `product_view` VALUES ('100', '8', '1', '2', '120', '120', '2020-05-13 13:14:00');

6.2、数据更新验证

UPDATE product_view SET user_id = 100 WHERE id = 100;

6.3、数据删除验证

delete from product_view where id = 100;