class torch.optim.lr_scheduler.StepLR

Reference link: class torch.optim.lr_scheduler.StepLR(optimizer, step_size, gamma=0.1, last_epoch=-1, verbose=False)
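StepLR multiplies the learning rate of every parameter group by `gamma` once every `step_size` calls to `scheduler.step()`. When the scheduler starts from `last_epoch=-1` and is stepped once per epoch, the rate used during epoch `e` follows the closed form `initial_lr * gamma ** (e // step_size)`. The pure-Python sketch below reproduces that formula without PyTorch; `step_lr` is a hypothetical helper for illustration, not part of `torch.optim`.

```python
def step_lr(initial_lr: float, step_size: int, gamma: float, epoch: int) -> float:
    """Hypothetical helper: learning rate StepLR would give during `epoch`,
    assuming scheduler.step() is called exactly once per epoch."""
    if step_size <= 0:
        raise ValueError("step_size must be positive")
    # The rate is multiplied by gamma once per completed block of step_size epochs
    return initial_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Settings used in the experiment below: lr=0.001, step_size=500, gamma=0.9
    for epoch in (0, 499, 500, 999, 1000):
        print(epoch, step_lr(0.001, 500, 0.9, epoch))
```

With the experiment's settings the rate stays at 0.001 for epochs 0 to 499, drops to 0.0009 at epoch 500 and to 0.00081 at epoch 1000.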

Companion code download link: 测试学习率调度器.zip

Experiment code:

# torch.optim.lr_scheduler.StepLR

import matplotlib.pyplot as plt

import numpy as np

import torch

from torch.utils.data import Dataset, DataLoader

from torch import nn

from torch.autograd import Function

import random

import os

seed = 20200910

os.environ['PYTHONHASHSEED'] = str(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

torch.cuda.manual_seed_all(seed) # if you are using multi-GPU.

np.random.seed(seed) # Numpy module.

random.seed(seed) # Python random module.

torch.manual_seed(seed)

torch.backends.cudnn.benchmark = False

torch.backends.cudnn.deterministic = True

class Dataset4cxq(Dataset):

def __init__(self, length):

self.length = length

def __len__(self):

return self.length

def __getitem__(self, index):

if type(index) != type(2) and type(index) != (slice):

raise TypeError('索引类型错误,程序退出...')

# index 是单个数

if type(index) == type(2):

if index >= self.length or index < -1 * self.length:

# print("索引越界,程序退出...")

raise IndexError("索引越界,程序退出...")

elif index < 0:

index = index + self.length

Celsius = torch.randn(1,1,dtype=torch.float).item()

Fahrenheit = 32.0 + 1.8 * Celsius

return Celsius, Fahrenheit

def collate_fn4cxq(batch):

list_c = []

list_f = []

for c, f in batch:

list_c.append(c)

list_f.append(f)

list_c = torch.tensor(list_c)

list_f = torch.tensor(list_f)

return list_c, list_f

if __name__ == "__main__":

my_dataset = Dataset4cxq(32)

# for c,f in my_dataset:

# print(type(c),type(f))

dataloader4cxq = torch.utils.data.DataLoader(

dataset=my_dataset,

batch_size=8,

# batch_size=2,

drop_last=True,

# drop_last=False,

shuffle=True, # True False

# shuffle=False, # True False

collate_fn=collate_fn4cxq,

# collate_fn=None,

)

# for cnt, data in enumerate(dataloader4cxq, 0):

# # pass

# sample4cxq, label4cxq = data

# print('sample4cxq的类型: ',type(sample4cxq),'\tlabel4cxq的类型: ',type(label4cxq))

# print('迭代次数:', cnt, ' sample4cxq:', sample4cxq, ' label4cxq:', label4cxq)

print('开始创建模型'.center(80,'-'))

model = torch.nn.Linear(in_features=1, out_features=1, bias=True) # True # False

model.cuda()

# optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# 模拟华氏度与摄氏度之间的转换

# Fahrenheit = 32 + 1.8 * Celsius

model.train()

cost_function = torch.nn.MSELoss()

epochs = 100001 # 100001

epochs = 10001 # 100001

print('\n')

print('开始训练模型'.center(80,'-'))

list4delta = list()

list4epoch = list()

# scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=(lambda epoch: 0.99 ** (epoch//1000)))

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=500, gamma=0.9)

for epoch in range(epochs):

# with torch.no_grad():

# Celsius = torch.randn(10,1,dtype=torch.float).cuda()

# Fahrenheit = 32.0 + 1.8 * Celsius

# Fahrenheit = Fahrenheit.cuda()

# Celsius = torch.randn(1,1,dtype=torch.float,requires_grad=False).cuda() # requires_grad=False True

# Fahrenheit = 32.0 + 1.8 * Celsius

# Fahrenheit = Fahrenheit.cuda() # requires_grad=False

total_loss = 0.0

for cnt, data in enumerate(dataloader4cxq, 0):

Celsius, Fahrenheit = data

Celsius, Fahrenheit = Celsius.cuda().view(-1,1), Fahrenheit.cuda().view(-1,1)

output = model(Celsius)

loss = cost_function(output, Fahrenheit)

total_loss += loss.item()

optimizer.zero_grad()

loss.backward()

optimizer.step()

scheduler.step()

if epoch % 100 == 0: # if epoch % 1000 == 0:

list4delta.append(total_loss)

list4epoch.append(epoch)

if epoch % 500 == 0:

info = '\nepoch:{0:>6}/{1:<6}\t'.format(epoch,epochs)

for k, v in model.state_dict().items():

info += str(k)+ ':' + '{0:<.18f}'.format(v.item()) + '\t'

# info += str(k)+ ':' + str(v.item()) + '\t'

print(info)

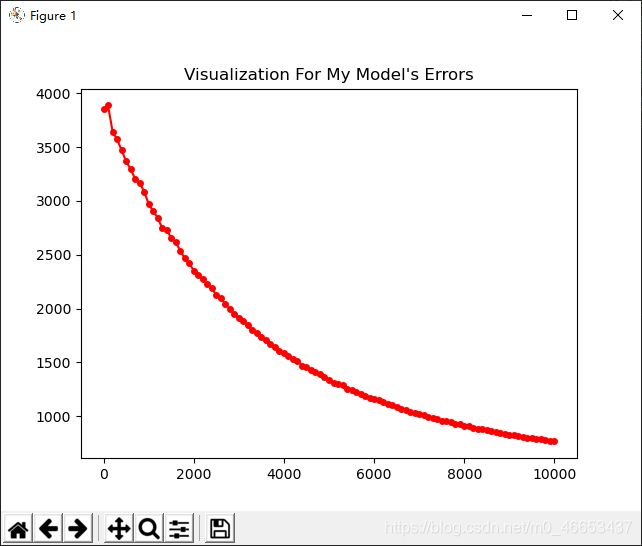

fig, ax = plt.subplots()

# ax.plot(10*np.random.randn(100),10*np.random.randn(100),'o')

ax.plot(list4epoch, list4delta, 'r.-', markersize=8)

ax.set_title("Visualization For My Model's Errors")

plt.show()
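The console output below is consistent with `scheduler.step()` being called once per epoch, after the inner batch loop, so that `step_size=500` counts epochs. Where the call sits matters: 32 samples with `batch_size=8` and `drop_last=True` give 4 optimizer steps per epoch, so calling `scheduler.step()` after every `optimizer.step()` would advance StepLR's counter 4 times per epoch and decay the rate every 125 epochs instead of every 500. The sketch below evaluates the StepLR closed form for both placements without needing PyTorch or a GPU; the helper `lr_during_epoch` and its `steps_per_epoch` parameter are hypothetical, introduced only for this illustration.

```python
def lr_during_epoch(initial_lr, step_size, gamma, epoch, steps_per_epoch=1):
    """Hypothetical helper: StepLR learning rate in effect at the start of `epoch`.

    `steps_per_epoch` is how many times scheduler.step() is called per epoch:
    1 when it is called after the batch loop, or the number of batches when it
    is called after every optimizer.step().
    """
    calls_so_far = epoch * steps_per_epoch  # scheduler.step() calls made before this epoch
    return initial_lr * gamma ** (calls_so_far // step_size)


if __name__ == "__main__":
    for epoch in (124, 125, 499, 500):
        per_epoch = lr_during_epoch(0.001, 500, 0.9, epoch)                     # once per epoch
        per_batch = lr_during_epoch(0.001, 500, 0.9, epoch, steps_per_epoch=4)  # once per batch
        print(f"epoch {epoch:>3}: per-epoch {per_epoch:.7f}  per-batch {per_batch:.7f}")
```

Per-epoch stepping keeps 0.001 until epoch 499, whereas per-batch stepping has already decayed four times by epoch 500 (0.001 × 0.9⁴ = 0.0006561).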

Console output:


```text
(base) PS C:\Users\chenxuqi\Desktop\News4cxq\测试学习率调度器> conda activate pytorch_1.7.1_cu102
(pytorch_1.7.1_cu102) PS C:\Users\chenxuqi\Desktop\News4cxq\测试学习率调度器> & 'D:\Anaconda3\envs\pytorch_1.7.1_cu102\python.exe' 'c:\Users\chenxuqi\.vscode\extensions\ms-python.python-2021.1.502429796\pythonFiles\lib\python\debugpy\launcher' '49758' '--' 'c:\Users\chenxuqi\Desktop\News4cxq\测试学习率调度器\test08.py'
----------------------------Start creating the model----------------------------
----------------------------Start training the model----------------------------
epoch: 0/10001 weight:0.962383031845092773 bias:0.980020046234130859
epoch: 500/10001 weight:1.129075884819030762 bias:2.954752445220947266
epoch: 1000/10001 weight:1.238264322280883789 bias:4.707053184509277344
epoch: 1500/10001 weight:1.298225045204162598 bias:6.279161930084228516
epoch: 2000/10001 weight:1.386581063270568848 bias:7.694698333740234375
epoch: 2500/10001 weight:1.414126753807067871 bias:8.970099449157714844
epoch: 3000/10001 weight:1.462724089622497559 bias:10.119626998901367188
epoch: 3500/10001 weight:1.554199934005737305 bias:11.155819892883300781
epoch: 4000/10001 weight:1.609407067298889160 bias:12.089537620544433594
epoch: 4500/10001 weight:1.631286978721618652 bias:12.930944442749023438
epoch: 5000/10001 weight:1.631085872650146484 bias:13.689365386962890625
epoch: 5500/10001 weight:1.646203756332397461 bias:14.372797966003417969
epoch: 6000/10001 weight:1.667070984840393066 bias:14.988748550415039062
epoch: 6500/10001 weight:1.690698742866516113 bias:15.543690681457519531
epoch: 7000/10001 weight:1.677183747291564941 bias:16.043390274047851562
epoch: 7500/10001 weight:1.694453477859497070 bias:16.493432998657226562
epoch: 8000/10001 weight:1.713040113449096680 bias:16.898756027221679688
epoch: 8500/10001 weight:1.715337395668029785 bias:17.264890670776367188
epoch: 9000/10001 weight:1.727315664291381836 bias:17.592933654785156250
epoch: 9500/10001 weight:1.720250487327575684 bias:17.890419006347656250
epoch: 10000/10001 weight:1.725156664848327637 bias:18.157394409179687500
```
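The log shows the bias climbing steadily but reaching only about 18.16 after 10,000 epochs, well short of the true value 32, while the weight sits near 1.73 rather than 1.8. One rough way to see how much of this comes from the schedule: while a parameter's gradient keeps the same sign, each Adam step moves it by at most roughly the current learning rate, so its total travel is bounded by approximately the sum of the learning rates over all optimizer steps. The sketch below computes that budget for the schedule used here and compares it with the logged bias. The helper names are hypothetical, and the Adam step-size behaviour is a stated heuristic, not a guarantee.

```python
def step_lr(initial_lr, step_size, gamma, epoch):
    # StepLR closed form, assuming scheduler.step() is called once per epoch
    return initial_lr * gamma ** (epoch // step_size)


def adam_travel_budget(initial_lr, step_size, gamma, first_epoch, last_epoch, steps_per_epoch):
    """Heuristic upper estimate of how far one parameter can move under Adam.

    Assumes each optimizer step moves the parameter by at most about the
    current learning rate, which roughly holds while its gradient keeps the
    same sign. This is a back-of-the-envelope estimate, not an exact bound.
    """
    total = 0.0
    for epoch in range(first_epoch, last_epoch + 1):
        total += steps_per_epoch * step_lr(initial_lr, step_size, gamma, epoch)
    return total


if __name__ == "__main__":
    start_bias = 0.980020046234130859    # logged bias after epoch 0
    logged_bias = 18.157394409179687500  # logged bias after epoch 10000
    # Epochs 1..10000, 4 optimizer steps per epoch (32 samples, batch_size=8, drop_last=True)
    budget = adam_travel_budget(0.001, 500, 0.9, 1, 10000, 4)
    no_decay = adam_travel_budget(0.001, 500, 1.0, 1, 10000, 4)  # gamma=1.0 means a constant lr
    print(f"StepLR budget:   {budget:.3f} (bias ceiling about {start_bias + budget:.3f})")
    print(f"logged bias:     {logged_bias:.3f}")
    print(f"no-decay budget: {no_decay:.3f}")
```

Under this heuristic the bias could move by about 17.56 between epoch 1 and epoch 10000, putting its ceiling near 18.5, just above the logged 18.157; for the first 500 epochs alone the budget is 1.9996 and the logged bias rose by 2.955 − 0.980 ≈ 1.975. With a constant learning rate the same estimate is 40, enough to reach 32. Since each 500-epoch block contributes 2 × 0.9ᵏ and 2 × (1 + 0.9 + 0.9² + …) = 20, the estimate also suggests the bias would level off around 21 however long training runs, so reaching 32 would likely need a larger `step_size`, a `gamma` closer to 1, or a higher initial learning rate.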