
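I. Kubernetes Overview

Docs: https://kuboard.cn/learning/

Kubernetes deprecated dockershim (its built-in Docker Engine support) in 1.20 and removed it in 1.24. Since then, clusters use containerd or another CRI-compatible runtime instead of Docker.

1. Problems Docker runs into when working as a cluster

Orchestrating a cluster raises the following questions: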

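1. How do containers communicate across hosts?

2. How are multiple containers deployed across hosts?

3. How are containers released, upgraded, and rolled back?

4. When a container dies, how is the service brought back up automatically?

5. When existing container resources run short, can capacity be scaled out automatically?

6. Can container health be checked, and can unhealthy containers recover automatically?

7. How are containers scheduled onto specific nodes of the cluster?

8. How are containers evicted from a node, and how is a node taken offline?

9. How is the cluster itself scaled out?

10. How is the cluster monitored?

11. How are cluster logs collected?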

…

Early container orchestration tools: Docker Inc.'s Swarm, Apache Mesos with Marathon, and Google's Kubernetes (K8S for short).

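2. Kubernetes overview

1) History of k8s

2014: the project is launched as an orchestration tool for Docker containers

July 2015: Kubernetes 1.0 is released and the project joins the CNCF for incubation

2016: Kubernetes pulls ahead of its two rivals, Docker Swarm and Mesos Marathon (version 1.2)

2017: 1.5 to 1.9

2018: k8s graduates from the CNCF; 1.10, 1.11, 1.12, 1.13

2019: 1.14, 1.15, 1.16, 1.17

2020: 1.18, 1.19, 1.20

2021: 1.21, 1.22, 1.23

2022: 1.24, 1.25, 1.26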

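CNCF:

The Cloud Native Computing Foundation, where Kubernetes (k8s) was incubated.

The name Kubernetes comes from the Greek word for helmsman or pilot. In the container orchestration space it builds on Google's 15 years of experience running containers on its Borg cluster management platform; Kubernetes is essentially Borg rebuilt in Go.

Recommended reading:

https://kubernetes.io/releases/patch-releases/#1-22

https://github.com/kubernetes/kubernetes

https://kubernetes.io/releases/release/

Tip:

The abbreviation k8s is not arbitrary: the 8 stands for the eight letters between "k" and "s" in "kubernetes", the same convention as "i18n" for "internationalization".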

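2) Introduction to Kubernetes

Kubernetes grew out of Google's internal **Borg** system and provides an application-oriented system for deploying and managing container clusters. Its goal is to remove the burden of orchestrating physical/virtual compute, network, and storage infrastructure, so that application operators and developers can focus entirely on container-centric primitives for self-service operations. Kubernetes also provides a stable, compatible foundation (platform) for building custom workflows and more advanced automation.

Kubernetes has complete cluster management capabilities: multi-level security and admission control, multi-tenant application support, transparent service registration and discovery, a built-in load balancer, failure detection and self-healing, rolling upgrades and online scale-out, an extensible automatic resource scheduling mechanism, and resource quota management at multiple granularities.

Kubernetes also provides a full set of management tools covering development, deployment and testing, and operations and monitoring.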

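Main K8S features:

K8s is a tool for managing and orchestrating containers (originally mostly Docker containers). It is a scheduling service that provides a suite of features such as resource scheduling, load balancing and disaster recovery, service registration, and dynamic scaling. Its main capabilities are:

(1) Volumes: containers in a Pod can share data through volumes.

(2) Application health checks: a service inside a container can fail and become unavailable; health-check policies keep the application robust.

(3) Replicated application instances: a controller maintains the Pod replica count so that a Pod, or a group of identical Pods, is always available.

(4) Autoscaling: the number of Pods is scaled automatically based on configured metrics (CPU utilization, etc.).

(5) Load balancing: a group of Pod replicas is assigned a private cluster IP address, and requests are load-balanced to the backend containers. Inside the cluster, other Pods reach this group of Pods through that Cluster IP.

(6) Rolling updates: services are updated without interruption, one Pod at a time, instead of deleting the whole service at once.

(7) Service orchestration: deployments are described in files, which makes deploying applications more efficient.

(8) Resource monitoring: the Node components include the cAdvisor resource collector; Heapster aggregated resource data from all nodes, stored it in the InfluxDB time-series database, and Grafana displayed it. (Heapster has since been retired; metrics-server and Prometheus are the usual replacements.)

(9) Authentication and authorization: supports attribute-based access control (ABAC), role-based access control (RBAC), and other policies.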

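3) K8S characteristics

- Portable: supports public, private, and hybrid clouds

- Extensible: modular, pluggable, mountable, composable

- Automated: automatic deployment, restarts, replication, and scaling

3. Kubernetes architecture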

| Hostname | IP address | Resources |
|---|---|---|
| k8s151.com | 10.0.0.151 | 2C4G |
| k8s152.com | 10.0.0.152 | 1C2G |
| k8s153.com | 10.0.0.153 | 1C2G |
| k8s154.com | 10.0.0.154 | 1C2G |

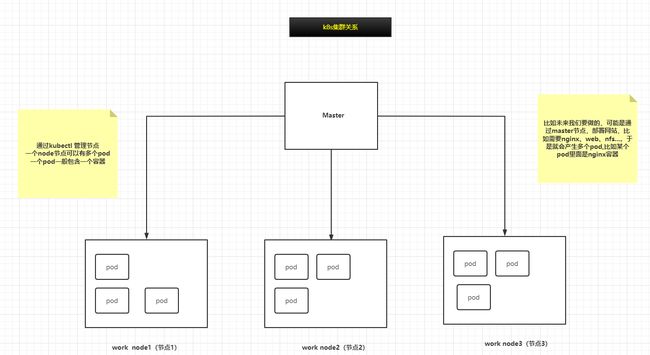

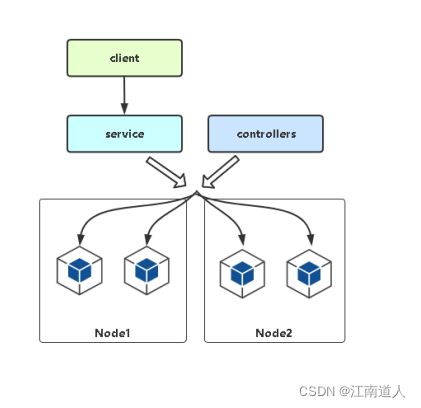

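1) Two main roles

K8S has two main roles: the Master node and the Worker node.

The Master manages and schedules cluster resources; the Workers provide those resources.

In a highly available cluster, both roles usually consist of several nodes, which can be virtual machines or physical machines.

Workers run Pods. Roughly speaking, a Pod is the "virtual machine" that the K8S platform provides, and application containers (for example Docker containers) live inside it.

Most Pods contain a single container; some contain several, one main container plus auxiliary containers.

As an analogy: the Master is the supervisor who assigns and dispatches the work, and the Workers are the people in the department who actually do it.

K8S mainly solves the problem of scheduling cluster resources. When a request to release an application arrives, K8S looks at which cluster resources are free and places the Pods onto suitable Worker nodes. K8S also monitors the cluster: when a node or Pod fails, it reconciles and restarts Pods so that the application stays highly available, which is called self-healing. Finally, K8S manages the cluster network so that Pods and Services can reach each other.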

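2) A K8S cluster usually consists of two parts:

2.1) Master node

The Master handles management and control. It runs the etcd, kube-apiserver, kube-controller-manager, and kube-scheduler components, which do the following: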

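- Scheduler: kube-scheduler makes the cluster's scheduling decisions. When a new application request arrives, it decides which available nodes the corresponding Pods should run on: based on its scheduling algorithm it selects a Node for each newly created Pod, and Pods may end up on the same node or on different nodes. (It schedules resources, placing Pods on suitable machines according to the configured scheduling policy.)

  In other words, the Scheduler decides which Node a Pod should go to and passes that decision on to the kubelet, which then starts the Pod on that Node. Put simply, the Scheduler is the boss and the kubelet is the worker, and they exchange information only through the API server.

- Controller Manager: kube-controller-manager handles the cluster's routine background tasks. There is one controller per resource type, and the Controller Manager runs and manages these controllers. (It maintains cluster state: failure detection, autoscaling, rolling updates, and so on.)

  The controller manager keeps the cluster eventually consistent. It watches the actual state of the cluster through the API server and works to make it match the desired state. If an application asks for 10 Pods, the controller manager makes sure 10 Pods eventually run; if a Pod dies, it starts a replacement; if too many Pods are running, it shuts down the extras. In other words, K8S uses an eventually consistent reconciliation strategy, and this is the mechanism behind the cluster's self-healing.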

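- etcd: etcd is a distributed key-value database responsible for storing state: all cluster state, such as nodes, Pod deployments, and configuration. A highly available etcd cluster usually has three members. etcd can be deployed on its own or alongside the Master nodes. (It is the database that holds the state of the entire cluster.)

- API Server: kube-apiserver is the single entry point of the cluster and the coordinator of all components. It serves a RESTful API; all create, read, update, delete, and watch operations on resource objects go through the API server and are then persisted to etcd. (It is the only entry point for resource operations and provides authentication, authorization, access control, API registration, and discovery.)

  The API server is the cluster's interface and communication bus: kubectl, kubelet, kube-proxy, the dashboard, and SDKs all talk to the cluster through it. It can be thought of as a proxy for etcd, since it is the only component that accesses etcd directly; every other component reads and writes etcd indirectly through the API server. It also acts as the cluster's event bus: other components can watch it and are notified when events they care about occur.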

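2.2) Worker (Node) nodes

Besides the Master, the cluster has a group of Node (compute) nodes, which run kubelet, kube-proxy, and other components:

- kubelet:

The Master's agent on each worker node. It manages the lifecycle of the containers running on that machine: creating containers, mounting volumes for Pods, downloading Secrets, reporting container and node status, and so on. The kubelet turns each Pod into a set of containers. (It watches the Pods assigned to its Node and creates, updates, monitors, and deletes them.)

- kube-proxy:

Implements the Pod network proxy on worker nodes and maintains network rules and layer-4 load balancing. In other words, it handles routing traffic to Pods and makes Services reachable, so that a request finds the Pod that serves it. (It provides in-cluster service discovery and load balancing for Services.)

Cloud Controller Manager:

The controller-manager variant used on cloud platforms. If you deploy directly on physical machines, you can do without it.

Pod:

The smallest unit into which containers are grouped; a Pod can contain several containers.

docker / rocket (rkt, no longer supported):

The container engine that runs the containers.

Reference: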

https://kubernetes.io/zh/docs/concepts/overview/components/

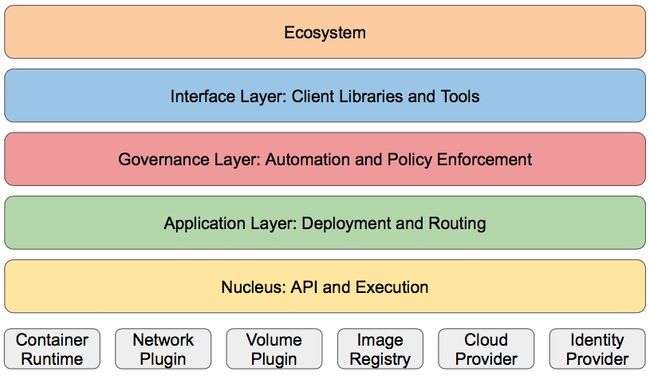

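Layered architecture (for reference)

The design and functionality of Kubernetes follow a layered architecture similar to Linux.

The layers are:

Core layer: Kubernetes' most central functionality; externally it provides APIs for building higher-level applications, internally it provides a pluggable application execution environment

Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

Management layer: system metrics (infrastructure, container, and network metrics), automation (autoscaling, dynamic provisioning, etc.), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

Interface layer: the kubectl command-line tool, client SDKs, and cluster federation

Ecosystem: the large container cluster management and scheduling ecosystem above the interface layer, which falls into two categories

Outside Kubernetes: logging, monitoring, configuration management, CI, CD, workflow, FaaS, OTS applications, ChatOps, etc.

Inside Kubernetes: CRI, CNI, CVI (the volume interface, today CSI), image registries, Cloud Provider, configuration and management of the cluster itself, etc.

Overall architecture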

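II. Kubernetes cluster deployment methods and considerations for large clusters

1. Ways to deploy a Kubernetes cluster

yum installation:

Pros:

Installation and configuration are simple; good for beginners.

Cons:

No customized installation.

kind:

kind lets you run Kubernetes on your local computer. It requires Docker to be installed and configured.

Recommended reading:

https://kind.sigs.k8s.io/docs/user/quick-start/

minikube:

minikube is a tool that lets you run Kubernetes locally.

minikube runs a single-node Kubernetes cluster on your personal computer (Windows, macOS, or Linux) so that you can try Kubernetes or use it for daily development work, which makes it a good way for developers to get hands-on with K8S.

Recommended reading:

https://minikube.sigs.k8s.io/docs/start/

kubeadm:

You can use the kubeadm tool to create and manage Kubernetes clusters; it is suitable for production deployments.

It performs the necessary actions to bring up a working, secure cluster in a user-friendly way.

Recommended reading:

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

Binary installation:

The steps are tedious, but you learn the details better. Suitable for operations engineers in production.

Building from source:

The hardest option; be prepared to troubleshoot all kinds of failures. For people doing secondary development on K8S, though, it should not be very difficult.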

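2. kubectl is the command-line tool for Kubernetes clusters

kubectl lets you run commands against Kubernetes clusters. You can use it to deploy applications, inspect and manage cluster resources, and view logs.

Recommended reading:

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

3. Considerations for large clusters

Recommended reading:

https://kubernetes.io/zh/docs/setup/best-practices/cluster-large/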

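III. Deploying a Kubernetes cluster

For common errors when deploying k8s, look up the solutions on this site

1. Installing K8S

Run these steps on every machine in the cluster.

1.1 yum installation

# 1. Environment preparation

(1) Prepare the VM operating system environment

Reference:

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

(2) Disable the swap partition (on every cluster machine)

1) Disable it temporarily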

swapoff -a && sysctl -w vm.swappiness=0 # disable swap temporarily

2) Disable it in the configuration file

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab # disable swap permanently by commenting out the swap entries

cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Tue Sep 27 23:52:08 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=731074a1-55a3-436a-8c7d-9b6e4f5acba9 / xfs defaults 0 0

UUID=f98df919-9080-4b39-a153-b4c8a68ff8a0 /boot xfs defaults 0 0

#UUID=ee7f7341-64b9-4f47-9aaf-9d0615b65b4d swap swap defaults 0 0
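Before running the `sed` command above on the real `/etc/fstab`, you can preview its effect on a scratch copy. The sketch below wraps the same expression in a hypothetical helper, `comment_swap_lines`, and runs it on made-up sample entries; it prints the result instead of editing in place.

```bash
#!/usr/bin/env bash
# Print a file with every non-comment line that mentions "swap" prefixed by '#'.
# Same expression as `sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab`, minus -i,
# so the input file is left untouched.
comment_swap_lines() {
  sed -r '/^[^#]*swap/s@^@#@' "$1"
}

# Made-up sample fstab for illustration.
sample=$(mktemp)
cat > "$sample" <<'EOF'
UUID=1111 / xfs defaults 0 0
UUID=2222 swap swap defaults 0 0
#UUID=3333 swap swap defaults 0 0
EOF

comment_swap_lines "$sample"
# prints:
# UUID=1111 / xfs defaults 0 0
# #UUID=2222 swap swap defaults 0 0
# #UUID=3333 swap swap defaults 0 0
rm -f "$sample"
```

Lines that are already commented out are left alone, because `[^#]*` cannot match past a leading `#`. After `swapoff -a`, `swapon --show` should print nothing.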

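(3) Make sure every node's MAC address and product_uuid are unique

ifconfig eth0 | grep ether | awk '{print $2}'

cat /sys/class/dmi/id/product_uuid

Tip:

Hardware devices normally have unique addresses, but some virtual machines can end up with duplicates.

Kubernetes uses these values to identify the nodes in the cluster uniquely. If they are not unique across nodes, installation may fail.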
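To compare the values across machines, collect one `hostname value` pair per node (for example over ssh) and look for repeats. The helper `find_duplicate_ids` below is a hypothetical sketch, and the UUIDs in the example are made up.

```bash
#!/usr/bin/env bash
# Read "hostname value" pairs on stdin and print every value (product_uuid or
# MAC address) that appears on more than one host, followed by those hosts.
find_duplicate_ids() {
  awk '{ hosts[$2] = (count[$2] ? hosts[$2] " " : "") $1; count[$2]++ }
       END { for (id in count) if (count[id] > 1) print id ": " hosts[id] }'
}

printf '%s\n' \
  "k8s151.com 4C4C4544-0001" \
  "k8s152.com 4C4C4544-0002" \
  "k8s153.com 4C4C4544-0001" | find_duplicate_ids
# prints: 4C4C4544-0001: k8s151.com k8s153.com
```

For example: `for h in k8s151.com k8s152.com k8s153.com; do echo "$h $(ssh "$h" cat /sys/class/dmi/id/product_uuid)"; done | find_duplicate_ids`. No output means every value is unique.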

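(4) Check that the nodes can reach each other

In short, check that every node in your k8s cluster can reach the others, for example with ping.

(5) Let iptables see bridged traffic

cat <

(6) Check whether required ports are already in use

Reference: https://kubernetes.io/zh/docs/reference/ports-and-protocols/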

ss -ntl
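Scanning `ss -ntl` output by eye is error-prone, so here is a small sketch that reads it and reports which ports already have a listener. The function name `ports_in_use` is hypothetical, and the example port list (6443 for the API server, 2379-2380 for etcd, 10250 for the kubelet) is an assumption based on kubeadm defaults; check the ports-and-protocols page above for your version.

```bash
#!/usr/bin/env bash
# Read `ss -ntl` output on stdin; print "<port> in use" for every port given
# as an argument that already has a listening socket.
ports_in_use() {
  local out port
  out=$(cat)
  for port in "$@"; do
    # Column 4 is "Local Address:Port"; compare its suffix with ":<port>" so
    # that port 22 does not match 2222 and IPv6 forms like [::]:6443 work.
    if awk -v p=":$port" '
         NR > 1 && substr($4, length($4) - length(p) + 1) == p { found = 1 }
         END { exit !found }' <<<"$out"; then
      echo "$port in use"
    fi
  done
}

# Usage on a node: ss -ntl | ports_in_use 6443 2379 2380 10250
```

Empty output means none of the listed ports is taken yet.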

(7) Prepare the Docker environment

Reference:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.15.md#unchanged

- 1) Configure the Docker yum repositories

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum list docker-ce --showduplicates

- 2) Install a specific Docker version

yum -y install docker-ce-18.09.9 docker-ce-cli-18.09.9

yum -y install bash-completion

source /usr/share/bash-completion/bash_completion

- 3) Package the downloaded RPMs and distribute them to other nodes to install Docker there (optional)

mkdir docker-rpm-18-09 && find /var/cache/yum -name "*.rpm" |xargs mv -t docker-rpm-18-09/

- 4) Tune the Docker configuration

cat > /etc/docker/daemon.json <<'EOF'

{

"registry-mirrors": ["https://hzow8dfk.mirror.aliyuncs.com"],

"insecure-registries": ["k8s151.oldboyedu.com:5000"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

- 5) Enable Docker at boot and start it

systemctl enable --now docker && systemctl restart docker

(8) Disable the firewall

systemctl disable --now firewalld

echo 1 >/proc/sys/net/ipv4/ip_forward # in /proc/sys/net/ipv4/ip_forward, 0 disables packet forwarding and 1 enables it; this change lasts only until the next reboot
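Writing to `/proc/sys/net/ipv4/ip_forward` only lasts until the next reboot. A common way to persist the setting is a drop-in file under `/etc/sysctl.d/` that `sysctl --system` loads. The helper below is a hypothetical sketch; the default file name `/etc/sysctl.d/k8s.conf` is an assumption, and any `*.conf` name in that directory works.

```bash
#!/usr/bin/env bash
# Set "key = value" in a sysctl drop-in file, replacing any existing line for
# the same key, so repeated runs leave exactly one entry.
set_sysctl_persistent() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.d/k8s.conf} tmp
  tmp=$(mktemp)
  if [[ -f $file ]]; then
    # Keep every line whose key (text before "=", spaces removed) differs.
    awk -F= -v k="$key" '{ x = $1; gsub(/[[:space:]]/, "", x) } x != k' "$file" > "$tmp"
  fi
  echo "$key = $value" >> "$tmp"
  cat "$tmp" > "$file"   # rewrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Usage (as root): set_sysctl_persistent net.ipv4.ip_forward 1 && sysctl --system
```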

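(9) Disable SELinux

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

grep ^SELINUX= /etc/selinux/config

The config file change takes effect after a reboot; `setenforce 0` switches SELinux to permissive mode immediately.

(10) Configure hosts resolution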

cat >> /etc/hosts <<'EOF'

10.0.0.151 k8s151.com

10.0.0.152 k8s152.com

10.0.0.153 k8s153.com

10.0.0.154 k8s154.com

EOF

cat /etc/hosts
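Because `cat >> /etc/hosts` appends on every run, repeating this step leaves duplicate entries. A hedged alternative is to add each line only when it is missing; `add_hosts_entries` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Append each "IP hostname" entry to the given hosts file unless that exact
# line is already present, so the step can be re-run safely.
add_hosts_entries() {
  local file=$1 entry
  shift
  for entry in "$@"; do
    # -x: match the whole line, -F: treat the entry as a fixed string.
    grep -qxF -- "$entry" "$file" || echo "$entry" >> "$file"
  done
}

# Usage on each node (as root):
# add_hosts_entries /etc/hosts "10.0.0.151 k8s151.com" "10.0.0.152 k8s152.com" \
#   "10.0.0.153 k8s153.com" "10.0.0.154 k8s154.com"
```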

(11) Run a private Docker registry on the k8s151.oldboyedu.com node

[root@k8s151 ~]$ docker run -dp 5000:5000 --restart always --name oldboyedu-registry registry:2

[root@docker151 ~]$ cat > /etc/docker/daemon.json <<'EOF'

{

"registry-mirrors": ["https://hzow8dfk.mirror.aliyuncs.com"],

"insecure-registries": ["k8s151.oldboyedu.com:5000"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

[root@docker101 ~]$ systemctl restart docker

Check the registry by visiting http://10.0.0.151:5000/v2/_catalog

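(12) Resetting nodes

If you run into too many problems while practicing and want to start over, you can reset the nodes.

Run on all nodes:

kubeadm reset # reset the node

rm -rf /etc/cni/net.d

# Remove the previously installed k8s version (for example 1.22.0), then reinstall k8s following the earlier steps (skip this if you are not changing versions)

yum -y remove kubeadm-1.22.0 kubelet-1.22.0 kubectl-1.22.0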

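# 2. What the packages do

You need to install the following packages on every machine:

kubeadm:

The command that bootstraps the cluster.

kubelet:

Runs on every node in the cluster and starts Pods and containers.

kubectl:

The command-line tool for talking to the cluster.

kubeadm will not install or manage kubelet or kubectl for you, so you must make sure their versions match the control plane (master) installed by kubeadm. Otherwise you risk version skew, which can lead to unexpected errors and problems.

That said, a skew of one minor version between the control plane and the kubelet is supported, but the kubelet's version must never be newer than the API server's. For example, a 1.7.0 kubelet is fully compatible with a 1.8.0 API server, but not the other way round.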
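The rule in the previous paragraph can be written down as a small check. This is a sketch under the assumptions stated in the text (same major version, the kubelet at most one minor version behind and never ahead); the allowed gap is a parameter because the official version-skew policy has changed across releases. The function names `minor_of` and `kubelet_skew_ok` are hypothetical.

```bash
#!/usr/bin/env bash
# Extract the minor version: "v1.20.0" or "1.20.0" -> 20 (assumes major 1).
minor_of() {
  local v=${1#v}
  v=${v#*.}
  echo "${v%%.*}"
}

# kubelet may lag the API server by at most MAX minor versions (default 1,
# as in the text above) and must never be newer.
kubelet_skew_ok() {
  local kubelet=$1 apiserver=$2 max=${3:-1} k a
  k=$(minor_of "$kubelet")
  a=$(minor_of "$apiserver")
  if (( k > a )); then
    echo "unsupported: kubelet newer than apiserver"
  elif (( a - k > max )); then
    echo "unsupported: kubelet too old"
  else
    echo "ok"
  fi
}

kubelet_skew_ok 1.7.0 1.8.0   # prints: ok
kubelet_skew_ok 1.8.0 1.7.0   # prints: unsupported: kubelet newer than apiserver
```

The kubelet version of each node appears in the VERSION column of `kubectl get nodes`.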

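# 3. Install kubeadm, kubelet, and kubectl on all nodes

(1) Configure the package repository

cat > /etc/yum.repos.d/kubernetes.repo <

(2) Install the kubeadm, kubelet, and kubectl packages

yum -y install kubeadm-1.20.0 kubelet-1.20.0 kubectl-1.20.0

(3) List the available kubeadm versions (when installing K8S, keep all components on the same version!)

yum -y list kubeadm --showduplicates | sort -r

(4) Start the kubelet service (it is normal for it to fail at this point: it keeps restarting because its configuration file is missing, and recovers once the cluster is initialized. This step can be skipped.)

systemctl enable --now kubelet && systemctl status kubelet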

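(5) Tip: you can package the k8s RPMs and install them on other nodes, provided the yum RPM cache is enabled (keepcache=1 in /etc/yum.conf).

mkdir k8s-rpm && find /var/cache/yum -name "*.rpm" | xargs mv -t k8s-rpm

Reference:

https://kubernetes.io/zh/docs/tasks/tools/install-kubectl-linux/

Recommendation: after completing the steps above, take a snapshot of every machine so that you can quickly roll back to this base configuration if something goes wrong.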

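# 4. Initialize the master node

Run this only on the master machine, 151.

Think of the k8s cluster as a factory. Initializing the master node appoints the factory manager: the master. The factory has many large warehouses, and each warehouse is a node. In each warehouse the manager appoints a deputy, the kubelet, who runs it on the manager's behalf; a warehouse joins with `kubeadm join` and comes under the manager's control. Each warehouse may have several employees, the Pods. How can the manager reach every employee? The network add-on Flannel solves that: it gives every Pod an internal number (an IP address) so the manager can reach it.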

(1) Initialize the master node with kubeadm

[root@k8s151 ~]$ kubeadm init --kubernetes-version=v1.20.0 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --service-cidr=10.254.0.0/16

....

kubeadm join 10.0.0.151:6443 --token 7enaea.6bk11es4yzx03vu5 \

--discovery-token-ca-cert-hash sha256:72953dbe7918dc60edae342bd3096f85ccd4b0ceb410ac9ee28f38fc5b5d0ba1

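Parameters:

--apiserver-advertise-address: the address the API server advertises, i.e. the internal address other nodes use to connect (not set in the command above, so kubeadm uses the address of the default network interface).

--kubernetes-version: the version of the K8S master components.

--image-repository: the registry from which the master component images are pulled.

--pod-network-cidr: the Pod network range.

--service-cidr: the Service (SVC) network range.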
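The Pod CIDR and the Service CIDR must not overlap with each other (or with the node network, here 10.0.0.0/24). The following pure-bash sketch checks two IPv4 CIDRs for overlap; `cidrs_overlap` and its helpers are hypothetical names, and IPv6 is not handled.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  local ip=${1%/*} bits=${1#*/} base size
  size=$(( 1 << (32 - bits) ))
  base=$(( $(ip_to_int "$ip") & ~(size - 1) & 0xFFFFFFFF ))
  echo "$base $(( base + size - 1 ))"
}

# Two ranges overlap when each one starts before the other ends.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<<"$(cidr_range "$1")"
  read -r s2 e2 <<<"$(cidr_range "$2")"
  if (( s1 <= e2 && s2 <= e1 )); then echo "overlap"; else echo "no overlap"; fi
}

cidrs_overlap 10.244.0.0/16 10.254.0.0/16   # prints: no overlap
cidrs_overlap 10.0.0.0/24 10.244.0.0/16     # prints: no overlap
```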

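When initializing the cluster with kubeadm, you may see output like the following:

[init]

The K8S version used for initialization.

[preflight]

Pre-installation work for the K8S cluster, such as pulling images; how long it takes depends on your network speed.

[certs]

Generates the certificate files, stored under "/etc/kubernetes/pki" by default.

[kubeconfig]

Generates the cluster's kubeconfig files, stored under "/etc/kubernetes" by default.

[kubelet-start]

Starts the kubelet.

Environment variables are written to "/var/lib/kubelet/kubeadm-flags.env" by default.

The configuration file is written to "/var/lib/kubelet/config.yaml" by default.

[control-plane]

Uses the static Pod manifest directory; the manifests are stored in "/etc/kubernetes/manifests" by default.

This step creates the static Pods "kube-apiserver", "kube-controller-manager", and "kube-scheduler".

[etcd]

Creates the etcd static Pod; its manifest is also stored in "/etc/kubernetes/manifests".

[wait-control-plane]

Waits for the kubelet to start the static Pods from the manifest directory "/etc/kubernetes/manifests".

[apiclient]

Waits until all master components are running.

[upload-config]

Creates a ConfigMap named "kubeadm-config" in the "kube-system" namespace.

[kubelet]

Creates a ConfigMap named "kubelet-config-1.X" (for example "kubelet-config-1.20" for v1.20) in the "kube-system" namespace, containing the kubelet configuration for the cluster.

[upload-certs]

Skipped on this node; see "--upload-certs" for details.

[mark-control-plane]

Marks the node as part of the control plane by adding a label and a taint, identifying it as a master node.

[bootstrap-token]

Creates a bootstrap token, for example "kbkgsa.fc97518diw8bdqid".

You need this token later when joining nodes to the cluster, and it is also relevant to RBAC.

[kubelet-finalize]

Updates the kubelet's certificate information.

[addons]

Installs add-ons such as "CoreDNS" and "kube-proxy".

Common problems during initialization

If the first initialization fails and you run it again, kubeadm may report that some files already exist or that ports are in use. That is because the previous attempt left files behind; remove them (for example with `kubeadm reset`) before initializing again.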

(2) Copy the admin kubeconfig file so you can manage the K8S cluster

[root@k8s151 ~]$ mkdir -p $HOME/.kube

[root@k8s151 ~]$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s151 ~]$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

(3) View the cluster nodes

[root@k8s151 ~]$ kubectl get no

NAME STATUS ROLES AGE VERSION

k8s151 NotReady master 4m34s v1.15.12

[root@k8s151 ~]$ kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8s151 ~]$ kubectl get no,cs

NAME STATUS ROLES AGE VERSION

node/k8s151 NotReady master 5m47s v1.15.12

NAME STATUS MESSAGE ERROR

componentstatus/scheduler Healthy ok

componentstatus/controller-manager Healthy ok

componentstatus/etcd-0 Healthy {"health":"true"}

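If the scheduler and controller-manager are instead reported as Unhealthy (typically "connection refused" on newer versions, where their manifests disable the insecure port), comment out `--port=0` in both static Pod manifests and restart the kubelet:

[root@k8s151 ~]$ vim /etc/kubernetes/manifests/kube-scheduler.yaml

...

# - --port=0 # comment out this line, then restart

[root@k8s151 ~]$ vim /etc/kubernetes/manifests/kube-controller-manager.yaml

......

# - --port=0 # find the port=0 line and comment it out

[root@k8s151 ~]$ systemctl restart kubelet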
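Instead of editing the two manifests by hand in vim, the same change can be made with `sed`. This is a sketch; `comment_port_zero` is a hypothetical helper. Keep any backup copy outside `/etc/kubernetes/manifests`, since the kubelet may try to run every manifest file it finds in that directory.

```bash
#!/usr/bin/env bash
# Turn a "- --port=0" argument line into "# - --port=0" in the given static
# Pod manifest, preserving the original indentation. Edits the file in place.
comment_port_zero() {
  sed -i -E 's@^([[:space:]]*)- --port=0[[:space:]]*$@\1# - --port=0@' "$1"
}

# Usage on the master (as root), then restart the kubelet:
#   for f in kube-scheduler kube-controller-manager; do
#     cp "/etc/kubernetes/manifests/$f.yaml" "/root/$f.yaml.bak"
#     comment_port_zero "/etc/kubernetes/manifests/$f.yaml"
#   done
#   systemctl restart kubelet
```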

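# 5. Join all worker nodes to the k8s cluster

Every worker node except the master (151) must join the cluster (154 is left out for now).

Run the following on 152 and 153, using the token and hash printed by your own `kubeadm init`: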

kubeadm join 10.0.0.151:6443 --token a3fndb.oitexxftm0xpx90j \

--discovery-token-ca-cert-hash sha256:ef830cffbeb332ae329af30a9257fd7584e034d524bc5cb2cd6e9bcfe6307f10
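If you lost the hash from the `kubeadm init` output, it can be recomputed from the cluster CA certificate with the openssl pipeline given in the kubeadm join documentation. The sketch below wraps it in a hypothetical function, `ca_cert_hash`, and assumes the default RSA CA key that kubeadm generates.

```bash
#!/usr/bin/env bash
# Print the SHA-256 of the CA certificate's public key (DER-encoded
# SubjectPublicKeyInfo), i.e. the value after "sha256:" in
# --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master: echo "sha256:$(ca_cert_hash)"
```

Bootstrap tokens expire after 24 hours by default; on the master, `kubeadm token create --print-join-command` prints a complete new join command.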

After all nodes have joined, check the result:

[root@k8s151 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s151.oldboyedu.com NotReady master 10m v1.18.0

k8s152.oldboyedu.com NotReady 60s v1.18.0

k8s153.oldboyedu.com NotReady 38s v1.18.0

How to rejoin a node (run this when joining fails)

swapoff -a

kubeadm reset -f # reset the node; run this first whenever a worker or the master needs to join or initialize again

rm /etc/cni/net.d/* -f

systemctl daemon-reload

systemctl restart kubelet

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

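# 6. Install the network add-on

Flannel can provide the underlying network for Kubernetes. Its main job is to help Kubernetes give every Pod on every Node an IP address that does not conflict with any other.

Reference:

https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/

https://github.com/flannel-io/flannel/blob/master/Documentation/kubernetes.md

Online installation (not recommended)

[root@k8s151 /app/tools]$ kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# The images referenced by the online kube-flannel.yml are hosted abroad, so pulling them is often slow or fails outright

Local installation: first upload kube-flannel.yml and the flannel and flannel-cni-plugin image archives to /app/tools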

[root@k8s151 /app/tools]$ docker load -i flannel-cni-plugin-v1.1.2.tar.gz

[root@k8s151 /app/tools]$ docker load -i flannel-v0.21.2.tar.gz

# Note: load these two images on every node

[root@k8s151 /app/tools]$ kubectl apply -f kube-flannel.yml

[root@k8s151 /app/tools]$

Verify that the flannel add-on deployed successfully (wait a moment until the STATUS column changes to Ready):

[root@k8s151 ~]$ kubectl get nodes # note that the version is v1.20.0

NAME STATUS ROLES AGE VERSION

k8s151.oldboyedu.com Ready control-plane,master 14m v1.20.0

k8s152.oldboyedu.com Ready 9m41s v1.20.0

k8s153.oldboyedu.com Ready 9m38s v1.20.0

Check whether flannel deployed successfully; if it did not, the nodes may never reach the Ready status

[root@k8s151 ~]$ kubectl get pods -A -o wide | grep flannel

kube-system kube-flannel-ds-amd64-5gbnq 1/1 Running 0 4m43s 10.0.0.153 k8s153

kube-system kube-flannel-ds-amd64-8qmwf 1/1 Running 0 4m43s 10.0.0.210 k8s151

kube-system kube-flannel-ds-amd64-xvrxb 1/1 Running 0 4m43s 10.0.0.152 k8s152

[root@k8s151 ~]$ kubectl get ds -A

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system kube-flannel-ds 0 0 0 0 0 beta.kubernetes.io/arch=amd64 2m52s

kube-system kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 26m

View the kube-flannel-ds logs

This is very important: when a Pod has problems, use this command to view its error logs.

Format:

kubectl -n <namespace> logs <pod-name>

Example: kubectl logs -n kube-flannel kube-flannel-ds-jwnwm

If initialization goes wrong, you can initialize again:

https://blog.csdn.net/one2threexm/article/details/107735228

kubeadm reset -f

rm -rf /etc/kubernetes/*

rm -rf ~/.kube/*

rm -rf /var/lib/etcd/*

# 7. Enable shell auto-completion

Run this on every node where you use kubectl:

echo "source <(kubectl completion bash)" >> ~/.bashrc && source ~/.bashrc

# 8. Test network connectivity (beginners can skip this; it follows the instructor's classroom demo)

(1) Test that the network works

[root@k8s151 ~]$ kubectl run oldboyedu-nginx --image=nginx:1.20.1 --replicas=3

[root@k8s151 ~]$ kubectl run oldboyedu-linux --image=alpine --replicas=3 -- sleep 300

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

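deployment.apps/oldboyedu-linux created

Tip: this output comes from an older kubectl. Starting with kubectl 1.18, `kubectl run` only creates a single Pod and no longer creates a replicated Deployment; to get three replicas, use something like `kubectl create deployment oldboyedu-nginx --image=nginx:1.20.1 --replicas=3` instead.

(2) Check that the Pods are Running: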

[root@k8s151 ~]$ kubectl get pods

NAME READY STATUS RESTARTS AGE

oldboyedu-linux 1/1 Running 0 4m29s

oldboyedu-nginx 1/1 Running 0 29s

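(3) Test that Pods can reach each other

Using a Pod based on the alpine image for the test is enough.

View a Pod's details

- Format: kubectl describe pod <pod-name>

Example: kubectl describe pod oldboyedu-linux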

# 9. Bonus tips

(1) Change the terminal prompt colors

[root@k8s151 ~]$ cat <<'EOF' >> ~/.bashrc

PS1='[\[\e[34;1m\]\u@\[\e[0m\]\[\e[32;1m\]\h\[\e[0m\]\[\e[31;1m\] \w\[\e[0m\]]$ '

EOF

[root@k8s151 ~]$ source ~/.bashrc

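(2) A trick for reclaiming memory

echo 3 > /proc/sys/vm/drop_caches

Tip:

Reading drop_caches returns 0; the values you can write are:

1: free the page cache

2: free dentries and inodes

3: free both the page cache and dentries/inodes

The kernel only drops clean caches, so run `sync` first to write dirty pages back.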
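A slightly safer wrapper validates the value and runs `sync` before writing. This is a sketch; `drop_caches` is a hypothetical helper name, it must run as root, and the target path is a parameter only so the function can be exercised against a scratch file.

```bash
#!/usr/bin/env bash
# Flush dirty pages, then ask the kernel to drop clean caches.
# level: 1 = page cache, 2 = dentries and inodes, 3 = both.
drop_caches() {
  local level=$1 target=${2:-/proc/sys/vm/drop_caches}
  case $level in
    1|2|3) ;;
    *) echo "invalid level: $level (use 1, 2 or 3)" >&2; return 1 ;;
  esac
  sync
  echo "$level" > "$target"
}

# Usage (as root): drop_caches 3
```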

# 10. Highly available etcd cluster [to be updated]

Coming soon…

Reference:

https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/setup-ha-etcd-with-kubeadm/

https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/high-availability/

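2. Troubleshooting (very important)

Common errors and their solutions: see here

describe

Very important

When you hit a problem in k8s, your first reaction should be to find out what actually went wrong. For example, if a Pod's status is not Running, describe shows you why and where it failed. describe is not limited to Pods; Services (svc) and other resources support it too.

Format: kubectl describe <resource-type> <name>

With a namespace: kubectl -n <namespace> describe <resource-type> <name>

Examples:

To view the details of a Pod:

kubectl describe pod <pod-name>; if something is wrong, the error messages are usually in the last few lines (the Events section)

To view the details of a Service:

kubectl describe svc <svc-name>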

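logs

Format: kubectl logs [-f] [-p] POD [-c CONTAINER]

Sometimes describe shows nothing wrong even though there is still a problem; in that case, check the logs.

-c, --container="": container name

-f, --follow[=false]: keep streaming the logs

--interactive[=true]: if true, prompt the user for input when needed. Default true

--limit-bytes=0: maximum number of bytes of logs to output. Unlimited by default

-p, --previous[=false]: if true, print the logs of a previous, now-terminated instance of a container in the Pod

--since=0: only return logs newer than a relative duration such as 5s, 2m, or 3h. Returns all logs by default. Only one of since and since-time may be used

--since-time="": only return logs after the given time (RFC3339 format). Returns all logs by default. Only one of since and since-time may be used

--tail=-1: number of most recent log lines to show. The default, -1, shows all lines

--timestamps[=false]: include timestamps in the log output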

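1) View the logs of the Pod named nginx

kubectl logs nginx

2) View the logs of the previous, terminated instance of the container java in the Pod web-m (-c specifies the container name)

kubectl logs -p -c java web-m

3) Stream the logs of the container java in the Pod web-m

kubectl logs -f -c java web-m

4) Show only the 20 most recent log lines of the Pod nginx

kubectl logs --tail=20 nginx

5) Show all logs the Pod nginx produced in the last hour

kubectl logs --since=1h nginx

6) View the logs of a given Pod

kubectl logs <pod-name>

kubectl logs -f <pod-name> # follow the output, like tail -f does for a log file

7) View the logs of a given container in a given Pod

kubectl logs <pod-name> -c <container-name>

One-off view:

kubectl logs pod_name -c container_name -n namespace

Live view in tail -f style:

kubectl logs -f <pod-name> -n namespace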

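IV. Pod basics

1. What is a Pod

A Pod is the smallest deployable unit in a Kubernetes cluster. It consists of one or more containers, which can share resources such as the network and storage.

Pods have the following characteristics:

(1) A Pod can be thought of as one application instance that provides a service;

(2) The containers of a Pod are always deployed on the same Node;

(3) The containers of a Pod share network and storage resources;

(4) The Kubernetes cluster manages Pods directly, not containers;

2. Example: a Pod running a single container

SHORTNAMES: short names

APIGROUP: the API group the resource belongs to

Note: different k8s versions use different apiVersion values for resources, so keep this in mind at work. You can check with kubectl api-resources:

[root@k8s151 /k8s-manifests/pods]$ kubectl api-resources

NAME SHORTNAMES APIGROUP NAMESPACED KIND

....

configmaps cm true ConfigMap

endpoints ep true Endpoints

events ev true Event

namespaces ns false Namespace

nodes no false Node

pods po true Pod

replicationcontrollers rc true ReplicationController

secrets true Secret

daemonsets ds apps true DaemonSet

deployments deploy apps true Deployment

replicasets rs apps true ReplicaSet

kubectl api-versions lists the versions available for each API group; use the two outputs together to write apiVersion:

[root@k8s151 /k8s-manifests/pods]$ kubectl api-versions

......

apps/v1

batch/v1

batch/v1beta1

v1

.....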

(1) Write the manifest

[root@k8s151 ~]$ mkdir -p /k8s-manifests/pods

[root@k8s151 /k8s-manifests/pods]$ cat > 01-pod-nginx.yaml <<'EOF'

# Resource type to deploy

kind: Pod

# API version

apiVersion: v1

# Metadata

metadata:

  # Name of the resource

  name: oldboyedu-linux80-web

# Pod spec

spec:

  # Container definitions

  containers:

  # Container name

  - name: linux80-web

    # Image the container starts from

    image: nginx:1.18

EOF

(2) Create the resource

[root@k8s151 /k8s-manifests/podes]$ kubectl create -f 01-pod-nginx.yaml

pod/oldboyedu-linux80-web created

(3) View the Pod

[root@k8s151 ~]$ kubectl get pods

NAME READY STATUS RESTARTS AGE

oldboyedu-linux80-web 0/1 ContainerCreating 0 6s

[root@k8s151 ~]$ kubectl get pods -o wide # mainly to see the IP address

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-linux80-web 0/1 ContainerCreating 0 17s k8s152.oldboyedu.com

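Field descriptions:

NAME

The name of the resource.

READY

Whether the resource is ready. For example, 0/1 means the Pod has one container and it is not running successfully yet.

STATUS

The Pod's running status.

RESTARTS

How many times the Pod's containers have been restarted.

AGE

How long the Pod has been running.

IP

The Pod's IP address.

NODE

The node the Pod was scheduled to.

Other:

Ignore "NOMINATED NODE" and "READINESS GATES" for now.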

3. Example: a Pod running multiple containers

Basic format of a k8s YAML manifest

(1) Write the manifest

[root@k8s151 /k8s-manifests/podes]$ cat > 02-pod-nginx-alpine.yaml <<'EOF'

# Resource type to deploy

kind: Pod

# API version

apiVersion: v1

# Metadata

metadata:

  # Name of the resource

  name: oldboyedu-linux80-nginx-alpine

# Pod spec

spec:

  # Container definitions

  containers:

  # Container name

  - name: linux80-web

    # Image the container starts from

    image: nginx:1.18

  - name: linux80-alpine

    image: alpine

    # Keep stdin open so the container's shell blocks and the container does not exit

    stdin: true

EOF

(2) Create the resource

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 02-pod-nginx-alpine.yaml

(3) View the Pod

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods

NAME READY STATUS RESTARTS AGE

oldboyedu-linux80-nginx-alpine 2/2 Running 0 4m16

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide # mainly to see the IP address (the Pod landed on the 153 machine)

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-linux80-nginx-alpine 2/2 Running 0 4m39s 10.244.2.5 k8s153.oldboyedu.com

# As the output shows, we created a Pod resource and it was scheduled to the k8s153.oldboyedu.com node

Running docker on the 153 machine shows the two containers that were created

[root@k8s153 ~]$ docker ps -a | grep oldboyedu-linux80-nginx-alpine

Exec into the nginx container: the Pod's container is an ordinary Docker container running nginx

[root@k8s153 ~]$ docker exec -it 3fcdeaff8089 /bin/bash

root@oldboyedu-linux80-nginx-alpine:/# nginx -v

nginx version: nginx/1.18.0

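Pod phases and container states

| STATUS | Description |
|---|---|
| Pending | The Pod has been accepted by the Kubernetes system, but one or more of its containers have not been created or started yet. This includes the time spent waiting to be scheduled and the time spent downloading images over the network. |
| Running | The Pod has been bound to a node and all of its containers have been created. At least one container is still running, or is starting or restarting. |
| Succeeded | All containers in the Pod have terminated successfully and will not be restarted. |
| Failed | All containers in the Pod have terminated, and at least one of them terminated in failure, i.e. exited with a non-zero status or was terminated by the system. |
| Unknown | The Pod's state could not be obtained for some reason, typically because communication with the Pod's host failed. |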

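The table above lists the Pod phases. A Pod follows a defined lifecycle: it starts in the Pending phase, moves to Running once at least one of its primary containers has started successfully, and then moves to Succeeded or Failed depending on whether any container in the Pod terminated in failure.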
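In scripts it is often handy to wait until a Pod reaches a given phase. A sketch: the phase is read with `kubectl get pod NAME -o jsonpath='{.status.phase}'`, and `wait_for_phase` and `get_phase` are hypothetical helper names (`get_phase` is a separate function so it can be swapped out in tests). For readiness rather than phase, kubectl's built-in `kubectl wait --for=condition=Ready pod/NAME` is an alternative.

```bash
#!/usr/bin/env bash
# Print the Pod's current phase (Pending, Running, Succeeded, Failed, Unknown).
get_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}

# Poll until the Pod reaches the wanted phase. Returns 1 on timeout, or early
# if the Pod ends in a terminal phase (Succeeded/Failed) other than the wanted one.
wait_for_phase() {
  local pod=$1 want=$2 timeout=${3:-60} phase
  local deadline=$(( SECONDS + timeout ))
  while (( SECONDS < deadline )); do
    phase=$(get_phase "$pod")
    [[ $phase == "$want" ]] && return 0
    if [[ $phase == Succeeded || $phase == Failed ]]; then
      echo "pod $pod ended in phase $phase" >&2
      return 1
    fi
    sleep 2
  done
  echo "timed out waiting for $pod to reach $want" >&2
  return 1
}

# Usage: wait_for_phase oldboyedu-linux80-web Running 120
```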

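A container inside a Pod can be in one of three states:

Waiting

If a container is not in the Running or Terminated state, it is Waiting. A container in the Waiting state is still running the operations it needs to complete start-up, such as pulling the container image from a registry or applying Secret data. When you use kubectl to query a Pod with a container in the Waiting state, you also see a Reason field explaining why the container is waiting.

Running

The Running state means the container is executing without issues. If a postStart hook was configured, it has already run and finished. When you use kubectl to query a Pod with a container in the Running state, you also see when the container entered the Running state.

Terminated

A container in the Terminated state began execution and then either ran to completion or failed for some reason. When you use kubectl to query a Pod with a container in the Terminated state, you see the reason, the exit code, and the start and finish times of the container's execution.

If a container has a preStop hook configured, that hook runs before the container enters the Terminated state.

Recommended reading: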

https://kubernetes.io/zh/docs/concepts/workloads/pods/pod-lifecycle/

https://kubernetes.io/zh/docs/concepts/containers/container-lifecycle-hooks/

4. Hands-on: connecting to a Pod and verifying the shared network

(1) Run a command in the Pod with exec

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -- nginx -t

[root@k8s151 /k8s-manifests/podes]$ kubectl exec po/oldboyedu-linux80-nginx-alpine -- nginx -t


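(2) Connect to a container

This works much like connecting to a container with docker

kubectl exec po/oldboyedu-linux80-web -it -- bash

kubectl exec oldboyedu-linux80-nginx-alpine -c linux80-alpine -it -- sh # connect to a specific container

(3) Containers in the same Pod share the network namespace by default

The test is demonstrated in the course video.

Key command: wget 127.0.0.1:80 # run it in the container based on the alpine image; it reaches nginx in the other container over localhost, which shows the network is shared

Tip:

"kubectl api-resources" lists all resources in the cluster; the fields mean:

NAME

The name of the resource.

SHORTNAMES: the abbreviated form of the resource name.

APIGROUP: the API group the resource belongs to.

NAMESPACED: whether the resource belongs to a namespace.

KIND: the resource type.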

5. Creating, reading, updating, and deleting resources

5.1 Read

View Pod and node information (important)

[root@k8s151 ~]$ kubectl get po,no

NAME READY STATUS RESTARTS AGE

pod/oldboyedu-linux80-nginx-alpine 2/2 Running 0 3h59m

pod/oldboyedu-linux81-homework 1/1 Running 0 3m9s

NAME STATUS ROLES AGE VERSION

node/k8s151.oldboyedu.com Ready master 21d v1.18.0

node/k8s152.oldboyedu.com Ready 21d v1.18.0

node/k8s153.oldboyedu.com Ready 21d v1.18.0

View the node each Pod was scheduled to, its IP address, and other details. (important)

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-linux80-nginx-alpine 2/2 Running 0 3h59m 10.244.2.5 k8s153.oldboyedu.com

oldboyedu-linux81-homework 1/1 Running 0 3m44s 10.244.1.11 k8s152.oldboyedu.com

View the YAML of the Pod resources; fields the user did not define are shown with their default values. (for reference)

kubectl get pods -o yaml

View Pods together with their labels. (for reference)

kubectl get pods --show-labels

Custom column output: (for reference)

Example 1:

[root@k8s151 ~]$ kubectl get pod -o custom-columns=CONTAINER:.spec.containers[0].name

Example 2:

[root@k8s151 ~]$ kubectl get pod -o custom-columns=CONTAINER:.spec.containers[0].name,IMAGE:.spec.containers[0].image

Example 3:

[root@k8s151 ~]$ kubectl get pods oldboyedu-linux80-nginx-alpine -o custom-columns=oldboyedu-container-name:.spec.containers[0].name,oldboyedu-stdin:.spec.containers[1].stdin,oldboyedu-image01:.spec.containers[0].image,oldboyedu-image02:.spec.containers[1].image

Reference:

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#get

https://kubernetes.io/docs/reference/kubectl/#custom-columns

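5.2 Delete

Delete resources based on a file. (important)

[root@k8s151 ~]$ kubectl delete -f 02-pod-nginx-alpine.yaml

Delete Pods by name. (important)

[root@k8s151 ~]$ kubectl delete pods oldboyedu-linux80-web

Delete by label, i.e. every Pod carrying the label "apps=myweb". (for reference)

[root@k8s151 ~]$ kubectl delete pods -l apps=myweb

Delete all Pods in the current namespace. (for reference)

[root@k8s151 ~]$ kubectl delete pod --all

5.3 Update

apply is a higher-level command: it creates the resource if it does not exist and updates it if it does. (important)

[root@k8s151 ~]$ kubectl apply -f 01-pod-nginx.yaml

5.4 Create

Creates the resources defined in the file. It cannot be run repeatedly: a second run fails with "AlreadyExists". It is gradually being replaced by apply. (for reference)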

[root@k8s151 ~]$ kubectl create -f 01-pod-nginx.yaml

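5.5 Label management

5.5.1 Declarative

Pro: the label configuration is persisted in the manifest

Con: you have to edit the manifest and apply it

[root@k8s151 /k8s-manifests/pods]$ cat > 03-pod-nginx-labels.yaml <<'EOF'

# API version

apiVersion: v1

# Resource type to deploy

kind: Pod

# Metadata

metadata:

  # Name of the resource

  name: oldboyedu-linux81-labels

  # Labels for the resource; both keys and values are user-defined

  labels:

    apps: web

    class: linux81

    school: oldboyedu

    address: shahe

# Pod spec

spec:

  # Container definitions

  containers:

  # Container name

  - name: linux81-web

    image: nginx:1.18

EOF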

[root@k8s151 /k8s-manifests/podes]$ kubectl create -f 03-pod-nginx-labels.yaml

pod/oldboyedu-linux81-labels created

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

oldboyedu-linux80-nginx-alpine 2/2 Running 0 5h46m class=linux81,school=oldboyedu

oldboyedu-linux81-homework 1/1 Running 1 109m

oldboyedu-linux81-labels 1/1 Running 0 9s address=shahe,apps=web,class=linux81,school=oldboyedu
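Label selectors such as `kubectl get pods -l apps=web,class=linux81` or `kubectl delete pods -l apps=myweb` select a Pod only when every comma-separated `key=value` requirement matches its labels (a logical AND). The toy function below models just that equality-based case; `labels_match` is a hypothetical name, and this is not how kubectl itself is implemented.

```bash
#!/usr/bin/env bash
# Return 0 if every "key=value" in the selector appears in the label list.
# Both arguments are comma-separated, as in the LABELS column above.
labels_match() {
  local labels=",$1," selector=$2 req
  local IFS=,
  for req in $selector; do
    [[ $labels == *",$req,"* ]] || return 1
  done
  return 0
}

pod_labels="address=shahe,apps=web,class=linux81,school=oldboyedu"
labels_match "$pod_labels" "apps=web,class=linux81" && echo "selected"   # prints: selected
labels_match "$pod_labels" "apps=myweb" || echo "not selected"          # prints: not selected
```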

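5.5.2 Imperative

[For quick tests]

- Pro: no need to edit the manifest; takes effect immediately

- Con: not recorded in the manifest, so the labels are lost when the resource is recreated from the file

- Add labels by resource name

[root@k8s151 /k8s-manifests/podes]$ kubectl label pods oldboyedu-linux81-labels school=oldboyedu class=linux81

- Add labels based on a file

[root@k8s151 /k8s-manifests/podes]$ kubectl label -f 01-pod-nginx.yaml address=ShaHe

pod/oldboyedu-linux80-web labeled

5.5.3 Removing labels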

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

oldboyedu-linux80-nginx-alpine 2/2 Running 0 6h8m class=linux81,school=oldboyedu

oldboyedu-linux80-web 1/1 Running 0 16m address=ShaHe

oldboyedu-linux81-homework 1/1 Running 2 132m

oldboyedu-linux81-labels 1/1 Running 0 22m address=shahe,apps=web,class=linux81,school=oldboyedu

Example: remove the address label from oldboyedu-linux80-web

[root@k8s151 /k8s-manifests/podes]$ kubectl label pods oldboyedu-linux80-web address-

pod/oldboyedu-linux80-web labeled

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

oldboyedu-linux80-nginx-alpine 2/2 Running 0 6h9m class=linux81,school=oldboyedu

oldboyedu-linux80-web 1/1 Running 0 17m

oldboyedu-linux81-homework 1/1 Running 2 133m

oldboyedu-linux81-labels 1/1 Running 0 23m address=shahe,apps=web,class=linux81,school=oldboyedu

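5.6 Troubleshooting commands

5.6.1 The describe command

Very important

When you hit a problem in k8s, your first reaction should be to find out what actually went wrong. For example, if a Pod's status is not Running, describe shows you why and where it failed. describe is not limited to Pods; Services (svc) and other resources support it too.

Format:

kubectl describe <resource-type> <name>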

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

oldboyedu-linux80-nginx-alpine 2/2 Running 0 6h9m class=linux81,school=oldboyedu

oldboyedu-linux80-web 1/1 Running 0 17m

oldboyedu-linux81-homework 1/1 Running 2 133m

oldboyedu-linux81-labels 1/1 Running 0 23m address=shahe,apps=web,class=linux81,school=oldboyedu

[root@k8s151 /k8s-manifests/podes]$ kubectl delete pods --all

pod "oldboyedu-linux80-nginx-alpine" deleted

pod "oldboyedu-linux80-web" deleted

pod "oldboyedu-linux81-homework" deleted

pod "oldboyedu-linux81-labels" deleted

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 02-pod-nginx-alpine.yaml

pod/oldboyedu-linux80-nginx-alpine created

- View the details of a Pod

[root@k8s151 /k8s-manifests/podes]$ kubectl describe pods/oldboyedu-linux80-web

Name: oldboyedu-linux80-web # resource name

Namespace: default # namespace

Priority: 0

Node: k8s152.oldboyedu.com/10.0.0.152 # node it was scheduled to

Start Time: Sat, 19 Nov 2022 17:33:09 +0800

Labels: # labels

Annotations: # annotations

Status: Running # status

IP: 10.244.1.14 # IP address

IPs:

IP: 10.244.1.14

Containers:

linux80-web: # container name

Container ID: docker://defc257ef21874dc68d190f4d1803245374bde3f0b60dbc18e65589a839b28d7 # container ID

Image: nginx:1.18 # image

Image ID: docker-pullable://nginx@sha256:e90ac5331fe095cea01b121a3627174b2e33e06e83720e9a934c7b8ccc9c55a0

Port: # port

Host Port: # host port

State: Running # state

Started: Sat, 19 Nov 2022 17:33:11 +0800

Ready: True # whether the container is ready

Restart Count: 0

Environment: # environment variables

Mounts: # mounts

/var/run/secrets/kubernetes.io/serviceaccount from default-token-c5lh2 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True


Volumes:

default-token-c5lh2:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-c5lh2

Optional: false

QoS Class: BestEffort

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled default-scheduler Successfully assigned default/oldboyedu-linux80-nginx-alpine to k8s152.oldboyedu.com # scheduled to node 152

Normal Pulled 69s kubelet, k8s152.oldboyedu.com Container image "nginx:1.18" already present on machine # the image is already present locally, so nothing is pulled

Normal Created 69s kubelet, k8s152.oldboyedu.com Created container linux80-web # created the linux80-web container

Normal Started 68s kubelet, k8s152.oldboyedu.com Started container linux80-web # started the linux80-web container

Normal Pulling 68s kubelet, k8s152.oldboyedu.com Pulling image "alpine" # pulling the image

Normal Pulled 53s kubelet, k8s152.oldboyedu.com Successfully pulled image "alpine"

Normal Created 52s kubelet, k8s152.oldboyedu.com Created container linux80-alpine # created the container

Normal Started 52s kubelet, k8s152.oldboyedu.com Started container linux80-alpine # started the container

- View Pods whose names start with a given prefix (for reference)

[root@k8s151 /k8s-manifests/podes]$ kubectl describe pods o # matches every Pod whose name starts with "o", e.g. oldboyedu-linux80-nginx-alpine

- View the details of the resources defined in a manifest (for reference)

[root@k8s151 /k8s-manifests/podes]$ kubectl describe -f 02-pod-nginx-alpine.yaml

.....

- View details by label (for reference)

[root@k8s151 /k8s-manifests/podes]$ kubectl label -f 02-pod-nginx-alpine.yaml apps=myweb

pod/oldboyedu-linux80-nginx-alpine labeled

[root@k8s151 /k8s-manifests/podes]$ kubectl describe po -l apps=myweb

....

5.6.2 The logs command

kubectl logs [-f] [-p] POD [-c CONTAINER]

[root@k8s151 /k8s-manifests/podes]$ kubectl delete pods --all

pod "oldboyedu-linux80-nginx-alpine" deleted

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 01-pod-nginx.yaml

pod/oldboyedu-linux80-web created

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 02-pod-nginx-alpine.yaml

pod/oldboyedu-linux80-nginx-alpine created

- View Pod logs

- If a Pod has only one container, the container name can be omitted.

oldboyedu-linux80-web has only one container, linux80-web:

[root@k8s151 /k8s-manifests/podes]$ kubectl logs oldboyedu-linux80-web

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

- If a Pod has more than one container, the container name must be specified.

oldboyedu-linux80-nginx-alpine has two containers, linux80-web and linux80-alpine; without a container name, the command fails:

[root@k8s151 /k8s-manifests/podes]$ kubectl logs -f oldboyedu-linux80-nginx-alpine

error: a container name must be specified for pod oldboyedu-linux80-nginx-alpine, choose one of: [linux80-web linux80-alpine]

[root@k8s151 /k8s-manifests/podes]$ kubectl logs oldboyedu-linux80-nginx-alpine linux80-alpine

error: a container name must be specified for pod oldboyedu-linux80-nginx-alpine, choose one of: [linux80-web linux80-alpine]

The "-c" option is mainly used when a Pod runs more than one container:

[root@k8s151 /k8s-manifests/podes]$ kubectl logs -f oldboyedu-linux80-nginx-alpine -c linux80-alpine

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.244.1.16 oldboyedu-linux80-nginx-alpine

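- View logs from a recent time window (for reference only)

[root@k8s151 /k8s-manifests/podes]$ kubectl logs -f oldboyedu-linux80-nginx-alpine -c linux80-web --timestamps --since=10m

--since=10m

Show only the log entries from the last 10 minutes.

--timestamps

Prefix each log line with its timestamp.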

5.6.3 The cp command

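- Copy from the local host into a container

Copy the local /etc directory into the linux80-alpine container of the Pod oldboyedu-linux80-nginx-alpine (without -c, the first container of the Pod is used):

[root@k8s151 /k8s-manifests/podes]$ kubectl cp /etc/ oldboyedu-linux80-nginx-alpine:/oldboyedu-etc-2022 -c linux80-alpine

This copies a local directory to a path inside the specified container of the Pod.

Connect to the linux80-alpine container and check the result: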

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -c linux80-alpine -it -- sh

/ #

/ # cd /opt/

/opt # ls

etc

/opt #

[root@k8s151 /k8s-manifests/podes]$ kubectl -n oldboyedu-linux80 cp /etc/ oldboyedu-linux80-nginx-alpine:/opt

Copy a local directory into a Pod in the given namespace; without -n, kubectl looks for the Pod in the default namespace (for reference only).

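Tips:

(1) Copying data from a Pod to the local host requires the "tar" binary in the container image; if "tar" is not present, "kubectl cp" fails.

(2) I tried creating a tar archive manually inside the Pod and copying it out, but that still failed (tested on version 1.15.12).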

- Copy from a container to the local host

Copy the /etc directory of the linux80-alpine container in oldboyedu-linux80-nginx-alpine to the local host as /opt/01:

[root@k8s151 /k8s-manifests/podes]$ kubectl cp oldboyedu-linux80-nginx-alpine:/etc -c linux80-alpine /opt/01

[root@k8s151 /k8s-manifests/podes]$ ll /opt/01/

total 96

-rw-r--r-- 1 root root 7 Nov 20 12:35 alpine-release

drwxr-xr-x 4 root root 88 Nov 20 12:35 apk

drwxr-xr-x 2 root root 6 Nov 20 12:35 conf.d

drwxr-xr-x 2 root root 18 Nov 20 12:35 crontabs

-rw-r--r-- 1 root root 89 Nov 20 12:35 fstab

-rw-r--r-- 1 root root 697 Nov 20 12:35 group

-rw-r--r-- 1 root root 31 Nov 20 12:35 hostname

-rw-r--r-- 1 root root 226 Nov 20 12:35 hosts

.....

Copy the /etc/hosts file to the local host as /opt/02:

[root@k8s151 /k8s-manifests/podes]$ kubectl cp oldboyedu-linux80-nginx-alpine:/etc/hosts -c linux80-alpine /opt/02

[root@k8s151 /k8s-manifests/podes]$ cat /opt/02

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.244.1.16 oldboyedu-linux80-nginx-alpine

5.6.4 The exec command

- Open a shell in a container

If a Pod has several containers, kubectl exec enters the first one by default:

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -it -- sh

Defaulting container name to linux80-web.

Use 'kubectl describe pod/oldboyedu-linux80-nginx-alpine -n default' to see all of the containers in this pod.

#

Use -c to choose the container:

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -c linux80-alpine -it -- sh

/ #

- Run a command in a container

Run cat /etc/hosts in the Pod oldboyedu-linux80-web:

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-web -- cat /etc/hosts

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.244.2.6 oldboyedu-linux80-web

If a Pod has several containers, the command runs in the first one by default:

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -- cat /etc/hosts

Defaulting container name to linux80-web.

Use 'kubectl describe pod/oldboyedu-linux80-nginx-alpine -n default' to see all of the containers in this pod.

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.244.1.16 oldboyedu-linux80-nginx-alpine

Target the linux80-alpine container of oldboyedu-linux80-nginx-alpine:

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-nginx-alpine -c linux80-alpine -- cat /etc/hosts

# Kubernetes-managed hosts file.

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

fe00::0 ip6-mcastprefix

fe00::1 ip6-allnodes

fe00::2 ip6-allrouters

10.244.1.16 oldboyedu-linux80-nginx-alpine

6. args and command

[root@k8s151 /k8s-manifests/podes]$ cat > 05-pod-command-args.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-command-args
  labels:
    apps: myweb
spec:
  containers:
  - name: linux80-web
    image: nginx:1.18
    # command overrides the image's ENTRYPOINT
    command:
    - "tail"
    # args overrides the image's CMD
    args:
    - "-f"
    - "/etc/hosts"
EOF
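The override rules in the comments above can be modelled in a few lines. The sketch below is a simplified Python model (not Kubernetes code) of how a container's final argv follows from the image defaults and the Pod's command/args fields. The nginx:1.18 ENTRYPOINT and CMD values are assumptions taken from the upstream image; check them with docker inspect nginx:1.18.

```python
from typing import List, Optional


def effective_command(image_entrypoint: List[str], image_cmd: List[str],
                      command: Optional[List[str]] = None,
                      args: Optional[List[str]] = None) -> List[str]:
    """Return the argv a container runs, given image defaults and Pod fields."""
    if command is None and args is None:
        # Neither field set: the image's ENTRYPOINT + CMD run unchanged.
        return image_entrypoint + image_cmd
    if command is not None:
        # command replaces ENTRYPOINT, and the image CMD is ignored too;
        # only the Pod's own args (if any) are appended.
        return command + (args or [])
    # Only args set: the image ENTRYPOINT runs with the new arguments.
    return image_entrypoint + args


# Assumed defaults of the nginx:1.18 image (check with: docker inspect nginx:1.18).
NGINX_ENTRYPOINT = ["/docker-entrypoint.sh"]
NGINX_CMD = ["nginx", "-g", "daemon off;"]

if __name__ == "__main__":
    # The 05-pod-command-args.yaml case: both command and args are set.
    print(effective_command(NGINX_ENTRYPOINT, NGINX_CMD, ["tail"], ["-f", "/etc/hosts"]))
```

With both fields set, the image's ENTRYPOINT and CMD play no part, which is why this Pod runs tail instead of nginx.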

7. Resource limits

7.1 limits example

[root@k8s151 /k8s-manifests/podes]$ cat 03-pods-limits.yaml
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-resources-limits
  labels:
    apps: myweb
spec:
  containers:
  - name: linux80-web
    image: nginx:1.18
    # resource settings for the container
    resources:
      # upper bounds on resource usage
      limits:
        # memory limit
        memory: "200Mi"
        # CPU limit; conversion: 1 core = 1000m
        cpu: "500m"
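To make the unit conversion concrete, here is a minimal Python sketch that turns the quantity strings used in these manifests into millicores and bytes. It only handles the common suffixes (m for CPU; Ki/Mi/Gi/Ti and k/M/G/T for memory) and is an illustration, not a complete parser of the Kubernetes quantity format.

```python
from decimal import Decimal

# Binary (powers of 1024) and decimal (powers of 1000) memory suffixes.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_to_millicores(q: str) -> int:
    """'500m' -> 500, '1' -> 1000, '0.5' -> 500 (1 core = 1000m)."""
    if q.endswith("m"):
        return int(Decimal(q[:-1]))
    return int(Decimal(q) * 1000)


def memory_to_bytes(q: str) -> int:
    """'200Mi' -> 209715200, '100M' -> 100000000, plain numbers are bytes."""
    for suffix, factor in _BINARY.items():  # check two-letter suffixes first
        if q.endswith(suffix):
            return int(Decimal(q[:-2]) * factor)
    for suffix, factor in _DECIMAL.items():
        if q.endswith(suffix):
            return int(Decimal(q[:-1]) * factor)
    return int(Decimal(q))


if __name__ == "__main__":
    print(cpu_to_millicores("500m"), memory_to_bytes("200Mi"))
```

Note that "200Mi" (mebibytes) and "200M" (megabytes) are different amounts; the manifests in this section use the binary Mi/Gi forms.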

7.2 limits and requests example

kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-resources-limits-requests
  labels:
    apps: myweb
spec:
  containers:
  - name: linux80-web
    image: nginx:1.18
    # resource settings for the container
    resources:
      # upper bounds on resource usage
      limits:
        # memory limit
        memory: "200Mi"
        # CPU limit; conversion: 1 core = 1000m
        cpu: "500m"
      # resources the container expects; if no node can satisfy them, the Pod cannot be scheduled
      requests:
        memory: "100Mi"
        cpu: "500m"
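The scheduling rule in the requests comment can be sketched as a simple check. This is a simplified model that assumes the scheduler compares the Pod's requests against the node's allocatable capacity minus the requests of Pods already placed there (actual usage is not considered); the node figures below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu_millicores: int
    memory_bytes: int


def fits(allocatable: Resources, already_requested: Resources, pod_requests: Resources) -> bool:
    """Simplified resource-fit check: requests vs. unrequested allocatable capacity."""
    free_cpu = allocatable.cpu_millicores - already_requested.cpu_millicores
    free_mem = allocatable.memory_bytes - already_requested.memory_bytes
    return pod_requests.cpu_millicores <= free_cpu and pod_requests.memory_bytes <= free_mem


MI = 1024 ** 2
node = Resources(cpu_millicores=2000, memory_bytes=2048 * MI)  # hypothetical 2-core node
used = Resources(cpu_millicores=1700, memory_bytes=512 * MI)   # hypothetical existing requests
pod = Resources(cpu_millicores=500, memory_bytes=100 * MI)     # requests from the manifest above

print(fits(node, used, pod))  # False: only 300m CPU is still unrequested
```

Limits do not enter this check at all; they only cap the container once it is running.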

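Interview question: How do you limit the resources a Pod can use, and what are limits and requests for?

Request: the minimum amount of resources a container needs, used by the scheduler when placing the container. A container is only scheduled onto a node whose allocatable resources not yet requested by other Pods are at least the requested amount. A request does not cap how much the container may actually use.

Limit: the maximum amount of a resource the container may use. If no limit is set, usage is not capped (unless a LimitRange in the namespace supplies a default).

Requests guarantee that a Pod has enough resources to run, while limits prevent one Pod from consuming resources without bound and starving other Pods. When both are set, they must satisfy 0 <= Request <= Limit.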
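Requests and limits also determine a Pod's QoS class, the field shown as "QoS Class: BestEffort" in the describe output at the top of this section. The sketch below is a simplified Python model of that classification that looks only at cpu and memory; the dictionary layout for a container's resources is a hypothetical representation, not the Kubernetes API types.

```python
from typing import Dict, List

# One container's resources, e.g. {"limits": {"cpu": "500m"}, "requests": {"cpu": "200m"}}
ContainerResources = Dict[str, Dict[str, str]]


def qos_class(containers: List[ContainerResources]) -> str:
    """Simplified QoS classification over cpu and memory.
    Quantities are compared as strings, so write them in the same notation."""
    any_set = False
    guaranteed = True
    for c in containers:
        limits = c.get("limits", {})
        # When only limits are given, Kubernetes defaults requests to the limits.
        requests = {**limits, **c.get("requests", {})}
        if any(r in requests for r in ("cpu", "memory")):
            any_set = True
        for r in ("cpu", "memory"):
            if r not in limits or requests.get(r) != limits[r]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    print(qos_class([{}]))  # no requests or limits at all
    print(qos_class([{"limits": {"cpu": "500m", "memory": "200Mi"},
                      "requests": {"cpu": "500m", "memory": "100Mi"}}]))  # the 7.2 Pod
```

Under this model the 7.1 Pod (limits only) is Guaranteed, because its requests default to its limits, while the 7.2 Pod is Burstable since its memory request is lower than its limit.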

7.3 Combined example

(1) Write the manifest

[root@k8s151 /k8s-manifests/pods]$ cat > 07-pods-resources-stress.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-stress
  labels:
    apps: myweb
spec:
  containers:
  - name: linux80-web
    image: jasonyin2020/oldboyedu-linux-tools:v0.1
    resources:
      limits:
        memory: "1Gi"
        cpu: "500m"
      requests:
        memory: "100Mi"
        cpu: "200m"
    command:
    - "tail"
    args:
    - "-f"
    - "/etc/hosts"
EOF

(2) Create the Pod

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 07-pods-resources-stress.yaml

(3) Run the stress test

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-linux80-stress -- stress --cpu 8 --io 4 --vm 7 --vm-bytes 128M --timeout 10m --vm-keep

(4) Observe the Pod's resource usage, as shown in the figure above.

8. Image pull policy

- Scheduling to a specific node

[root@k8s151 /k8s-manifests/podes]$ cat > 04-pods-imagePullPolicy.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-imagepullpolicy-02
  labels:
    apps: myweb
spec:
  # schedule the Pod onto the named node
  # note: the node name must be one listed by "kubectl get nodes"
  nodeName: k8s153.oldboyedu.com
  hostNetwork: true  # share the host's network
  containers:
  - name: myweb
    image: nginx:1.18
    resources:
      limits:
        memory: "1Gi"
        cpu: "500m"
      requests:
        memory: "100Mi"
        cpu: "500m"
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 04-pods-imagePullPolicy.yaml

pod/oldboyedu-linux80-imagepullpolicy-02 created

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-linux80-imagepullpolicy-02 1/1 Running 0 31s 10.0.0.153 k8s153.oldboyedu.com

oldboyedu-linux80-nginx-alpine 2/2 Running 0 19h 10.244.1.16 k8s152.oldboyedu.com

oldboyedu-linux80-web 1/1 Running 0 19h 10.244.2.6 k8s153.oldboyedu.com

http://10.0.0.153/


Push an image to the registry

[root@k8s153 ~]$ docker run nginx:1.18

[root@k8s153 ~]$ docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0da5feb95874 nginx:1.18 "/docker-entrypoint.…" 12 seconds ago Up 8 seconds 80/tcp optimistic_banach

Commit the newly started nginx container and push it to the registry. First change its home page:

[root@k8s153 ~]$ docker exec -it optimistic_banach bash

root@0da5feb95874:/# echo 2222222222222 > /usr/share/nginx/html/index.html

# optimistic_banach is the name of the nginx:1.18 container started above; commit it as the image k8s151.oldboyedu.com:5000/myweb:v0.1

[root@k8s153 ~]$ docker commit optimistic_banach k8s151.oldboyedu.com:5000/myweb:v0.1

sha256:7657735b87ebf45a5f9d48dbfdc37856db9fab14b06d17b7817d6026148fa77c

Check the committed image:

[root@k8s153 ~]$ docker images | grep k8s151.oldboyedu.com

k8s151.oldboyedu.com:5000/myweb v0.1 b85fe5f2830b 9 minutes ago 133MB

Push it to the registry:

[root@k8s153 ~]$ docker push k8s151.oldboyedu.com:5000/myweb

The registry catalog at http://10.0.0.151:5000/v2/_catalog now lists the repository:

{"repositories":["myweb"]}
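The same catalog can be queried from a script. A minimal sketch using only the Python standard library, assuming the registry at 10.0.0.151:5000 is reachable over plain HTTP without authentication, as in this lab. /v2/_catalog and /v2/<name>/tags/list are endpoints of the Docker Registry HTTP API V2; the helper names are hypothetical.

```python
import json
import urllib.request
from typing import List

REGISTRY = "http://10.0.0.151:5000"  # lab registry; plain HTTP, no auth assumed


def parse_catalog(body: str) -> List[str]:
    """Extract repository names from a /v2/_catalog response body."""
    return json.loads(body).get("repositories", [])


def fetch_catalog(registry: str = REGISTRY) -> List[str]:
    """List all repositories stored in the registry."""
    with urllib.request.urlopen(f"{registry}/v2/_catalog", timeout=5) as resp:
        return parse_catalog(resp.read().decode("utf-8"))


def fetch_tags(repo: str, registry: str = REGISTRY) -> List[str]:
    """List the tags of one repository (the field may be null after deletions)."""
    with urllib.request.urlopen(f"{registry}/v2/{repo}/tags/list", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8")).get("tags") or []


if __name__ == "__main__":
    for repo in fetch_catalog():
        print(repo, fetch_tags(repo))
```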

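- Image pull policy (concepts only; no hands-on steps needed)

imagePullPolicy specifies when the image is pulled. Valid values: Always, Never, IfNotPresent.

Always:

Always check the registry for the image. This is the default when the image tag is :latest or no tag is given; for any other tag, the default is IfNotPresent.

If a local image with the same name and tag exists, its RepoDigests (image digest) are compared with the digest in the remote registry.

If they match, the locally cached image is used; if they differ, the latest image is pulled from the registry.

Never:

If the image exists locally, the container is started from it.

If the image does not exist locally, it is never pulled, and the container fails to start (ErrImageNeverPull).

IfNotPresent:

If the image exists locally, the container is started from it without pulling.

If the image does not exist locally, it is pulled.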
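The defaulting rule and the three policies can be summarised in code. This is a sketch under stated assumptions: the helper names are hypothetical, and the image-reference parsing is simplified (a ':' counts as a tag separator only after the last '/', so the registry port in k8s151.oldboyedu.com:5000/myweb is not mistaken for a tag).

```python
from typing import Optional, Tuple


def split_image(image: str) -> Tuple[str, Optional[str], Optional[str]]:
    """Split an image reference into (name, tag, digest)."""
    name, digest = image, None
    if "@" in name:
        name, digest = name.split("@", 1)
    tag = None
    # A ':' is a tag separator only if it comes after the last '/';
    # otherwise it belongs to a registry host:port.
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)
    return name, tag, digest


def default_pull_policy(image: str) -> str:
    """Policy used when imagePullPolicy is omitted from the container spec."""
    _, tag, digest = split_image(image)
    if tag == "latest" or (tag is None and digest is None):
        return "Always"
    return "IfNotPresent"


def contacts_registry(policy: str, present_locally: bool) -> bool:
    """Whether the kubelet goes to the registry before starting the container."""
    if policy == "Always":
        return True  # digest is compared; an unchanged image is not re-downloaded
    if policy == "Never":
        return False  # a missing image leaves the container in ErrImageNeverPull
    if policy == "IfNotPresent":
        return not present_locally
    raise ValueError(f"unknown imagePullPolicy: {policy}")


if __name__ == "__main__":
    for img in ("nginx:1.18", "alpine", "k8s151.oldboyedu.com:5000/myweb:v0.1"):
        print(img, default_pull_policy(img))
```

This is why "alpine" (no tag) in the describe output earlier was pulled even though nginx:1.18 was taken from the local cache.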

(1) Write the manifest

[root@k8s151 /k8s-manifests/podes]$ cat > 04-pods-imagePullPolicy.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  # names must not contain uppercase letters
  name: imagepullpolicy-ifnotpresent
  labels:
    apps: myweb
spec:
  # schedule the Pod onto the named node
  # note: the node name must be one listed by "kubectl get nodes"
  nodeName: k8s152.oldboyedu.com
  hostNetwork: true  # share the host's network
  containers:
  - name: myweb
    image: nginx:1.18
    imagePullPolicy: Always  # image pull policy
EOF
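The comment about uppercase letters reflects the naming rule for Pod names, which must be valid RFC 1123 DNS subdomain names. A minimal validator sketch, assuming that rule: lowercase alphanumerics, '-' and '.', starting and ending with an alphanumeric, at most 253 characters. The function name is hypothetical.

```python
import re

# RFC 1123 subdomain: dot-separated labels of lowercase alphanumerics and '-',
# each label starting and ending with an alphanumeric character.
_DNS1123_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)


def is_valid_pod_name(name: str) -> bool:
    """Check a Pod name against the DNS subdomain naming rule."""
    return len(name) <= 253 and _DNS1123_SUBDOMAIN.fullmatch(name) is not None


if __name__ == "__main__":
    for n in ("imagepullpolicy-ifnotpresent", "imagePullPolicy-IfNotPresent"):
        print(n, is_valid_pod_name(n))
```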

(2) Create the resources

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 04-pods-imagePullPolicy.yaml

(3) Verify

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

imagepullpolicy-ifnotpresent 1/1 Running 0 85s 10.244.2.11 k8s152.oldboyedu.com <none> <none>

[root@k8s152 ~]$ docker run -it --name "nginx-test" --rm nginx:1.18 bash

root@e3c4006be96f:/# cd /usr/share/nginx/html/

root@e3c4006be96f:/usr/share/nginx/html# ls

50x.html index.html

root@e3c4006be96f:/usr/share/nginx/html# echo " oldboyedu 1111

" > index.html

Find the test container started above:

[root@k8s152 ~]$ docker ps |grep nginx-test

94118ecf543a nginx:1.18 "/docker-entrypoint.…" 59 seconds ago Up 57 seconds 80/tcp nginx-test

[root@k8s152 ~]$ docker commit 94118ecf543a myweb:v0.1

sha256:19141695fd72f4e48bae58911c18d3fc8aefb16b36d3d5d9a052df32684c59f5

[root@k8s152 ~]$ docker images |grep myweb

myweb v0.1 19141695fd72 43 seconds ago 133MB

Start a new nginx container:

[root@k8s152 ~]$ docker run -d --name myweb nginx:1.18

0da5feb95874e976acb280b215db03703a1578dd1ea5cfb713a31f4aea8f44c3

[root@k8s152 ~]$ docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0da5feb95874 nginx:1.18 "/docker-entrypoint.…" 12 seconds ago Up 8 seconds 80/tcp optimistic_banach

Commit the newly started nginx container and push it to the registry. First change its home page:

[root@k8s152 ~]$ docker exec -it myweb bash

root@0da5feb95874:/# echo 2222222222222 > /usr/share/nginx/html/index.html

[root@k8s153 ~]$ docker commit myweb k8s151.oldboyedu.com:5000/myweb:v0.1

sha256:7657735b87ebf45a5f9d48dbfdc37856db9fab14b06d17b7817d6026148fa77c

Check the committed image:

[root@k8s153 ~]$ docker images | grep k8s151.oldboyedu.com

k8s151.oldboyedu.com:5000/myweb v0.1 b85fe5f2830b 9 minutes ago 133MB

Push it to the registry:

[root@k8s153 ~]$ docker push k8s151.oldboyedu.com:5000/myweb:v0.1

The registry catalog at http://10.0.0.151:5000/v2/_catalog now lists the repository:

{"repositories":["myweb"]}

[root@k8s151 /k8s-manifests/podes]$ cat > 04-pods-imagePullPolicy.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  # names must not contain uppercase letters
  name: imagepullpolicy-ifnotpresent-004
  labels:
    apps: myweb
spec:
  hostNetwork: true  # share the host's network
  # schedule the Pod onto the named node
  # note: the node name must be one listed by "kubectl get nodes"
  nodeName: k8s152.oldboyedu.com
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    imagePullPolicy: IfNotPresent  # image pull policy
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 04-pods-imagePullPolicy.yaml

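Note:

When deploying containers in production, avoid the :latest tag: it makes it hard to track which version of the image is running and hard to roll back correctly.

Instead, specify a meaningful tag such as v1.42.0.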

Delete an image from the registry

1) Delete the repository metadata

[root@k8s151 /k8s-manifests/podes]$ docker exec oldboyedu-registry rm -rf /var/lib/registry/docker/registry/v2/repositories/nginx

2) Reclaim the storage (run garbage collection)

[root@k8s151 /k8s-manifests/podes]$ docker exec oldboyedu-registry registry garbage-collect /etc/docker/registry/config.yml

References:

https://kubernetes.io/zh/docs/concepts/containers/images/

http://10.0.0.152/


9. Passing environment variables

(1) Write the manifest

[root@k8s151 /k8s-manifests/podes]$ cat > 05-pods-env.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-pods-env
  labels:
    apps: myweb
spec:
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    # pass environment variables to the container
    env:
    # name of the variable
    - name: SCHOOL
      # value of the variable
      value: oldboyedu
    - name: CLASS
      value: linux81
  - name: test01
    image: nginx:1.18
    command:
    - tail
    - -f
    - /etc/hosts
    env:
    - name: ADDRESS
      value: 河南驻马店
EOF

(2) Create the resources

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 05-pods-env.yaml

(3) Verify

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-pods-env -c myweb -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=oldboyedu-pods-env-01

SCHOOL=oldboyedu

CLASS=linux81

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_SERVICE_HOST=10.254.0.1

KUBERNETES_SERVICE_PORT=443

NGINX_VERSION=1.18.0

NJS_VERSION=0.4.4

PKG_RELEASE=2~buster

HOME=/root

[root@k8s151 /k8s-manifests/podes]$ kubectl exec oldboyedu-pods-env-01 -c test01 -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=oldboyedu-pods-env-01

ADDRESS=河南驻马店

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_SERVICE_HOST=10.254.0.1

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

NGINX_VERSION=1.18.0

NJS_VERSION=0.4.4

PKG_RELEASE=2~buster

HOME=/root
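The output above shows that env is set per container: ADDRESS appears only in test01, while SCHOOL and CLASS belong to myweb (the KUBERNETES_* variables are injected into every container by Kubernetes itself). A small sketch that reads a Pod spec, written here as a Python dict mirroring 05-pods-env.yaml, and returns the literal env values one container receives; the helper name is hypothetical.

```python
from typing import Dict


def container_env(pod_spec: Dict, container_name: str) -> Dict[str, str]:
    """Literal env values declared for one container of a Pod spec."""
    for container in pod_spec["containers"]:
        if container["name"] == container_name:
            return {e["name"]: e["value"] for e in container.get("env", []) if "value" in e}
    raise KeyError(f"no container named {container_name!r}")


# Mirrors the spec of 05-pods-env.yaml (images and command omitted).
spec = {
    "containers": [
        {"name": "myweb", "env": [{"name": "SCHOOL", "value": "oldboyedu"},
                                  {"name": "CLASS", "value": "linux81"}]},
        {"name": "test01", "env": [{"name": "ADDRESS", "value": "河南驻马店"}]},
    ]
}

print(container_env(spec, "myweb"))   # {'SCHOOL': 'oldboyedu', 'CLASS': 'linux81'}
print(container_env(spec, "test01"))  # {'ADDRESS': '河南驻马店'}
```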

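10. Volumes

10.1 Why volumes are needed

Files in a container are stored on disk only temporarily, which causes problems for important applications running in containers.

• Problem 1: when a container is upgraded or crashes, the kubelet recreates it, and the files inside the container are lost.

• Problem 2: multiple containers running in one Pod need to share files.

The Kubernetes Volume abstraction solves both problems.

Container deployments typically involve three kinds of data:

(1) Initial data needed at startup, such as configuration files, e.g. for WordPress or Zabbix.

(2) Temporary data produced at runtime that must be shared between containers, e.g. nginx + filebeat.

(3) Persistent data produced by the container, e.g. MySQL data.

In short, volumes provide the way to keep and share the data produced in all three cases.

References: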

https://kubernetes.io/zh/docs/concepts/storage/volumes/

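10.2 emptyDir examples

10.2.1 emptyDir overview

An emptyDir volume is created when the Pod is assigned to a node. Kubernetes allocates a directory on that node automatically, so there is no need to specify a host directory. The directory starts out empty, and when the Pod is removed from the node, the data in the emptyDir is deleted permanently.

What is emptyDir:

A temporary volume bound to the Pod's lifecycle: if the Pod is deleted, its data is deleted with it.

What emptyDir provides:

(1) Data survives container restarts (but not Pod deletion);

(2) Containers in the same Pod can share data; different Pods cannot share data through it;

(3) It exists for the lifetime of the Pod; when the Pod is deleted, its data is deleted too.

Use cases for emptyDir:

(1) Scratch space, e.g. for a disk-based merge sort;

(2) Checkpoints for long-running computations, so a task can resume from its pre-crash state;

(3) Storing web access logs, error logs, and similar data.

Pros and cons of emptyDir:

Pros:

(1) Containers in the same Pod can share data;

(2) If one container in the Pod is forcibly deleted, the data is not lost, because the Pod still exists.

Cons:

(1) When the Pod is deleted, the data is deleted too;

(2) Different Pods cannot share data.

References: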

https://kubernetes.io/docs/concepts/storage/volumes#emptydir

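Tips:

1) Once a Pod is running, the data of its emptyDir volumes is stored under the Pod's directory (named after the Pod UID) in "/var/lib/kubelet/pods":

/var/lib/kubelet/pods/${POD_ID}/volumes/kubernetes.io~empty-dir/

2) Try inspecting the directory found in the previous step, and think about the real reason the data disappears when the Pod is deleted (covered in the video).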
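To locate this directory for a specific Pod, get the Pod UID (for example with kubectl get pod <name> -o jsonpath='{.metadata.uid}') and look on the node where the Pod runs. The sketch below builds the emptyDir path and lists the emptyDir volumes found there; it assumes the kubelet's default root directory /var/lib/kubelet, and the helper names are hypothetical.

```python
from pathlib import Path
from typing import List

# Default kubelet root; differs if the kubelet runs with a custom --root-dir.
KUBELET_PODS_DIR = Path("/var/lib/kubelet/pods")
EMPTY_DIR_PLUGIN = "kubernetes.io~empty-dir"


def empty_dir_path(pod_uid: str, volume_name: str, pods_dir: Path = KUBELET_PODS_DIR) -> Path:
    """Host directory backing one emptyDir volume of a Pod."""
    return pods_dir / pod_uid / "volumes" / EMPTY_DIR_PLUGIN / volume_name


def list_empty_dirs(pod_uid: str, pods_dir: Path = KUBELET_PODS_DIR) -> List[str]:
    """Names of the emptyDir volumes present on this node for a Pod (empty if none)."""
    base = pods_dir / pod_uid / "volumes" / EMPTY_DIR_PLUGIN
    return sorted(p.name for p in base.iterdir()) if base.is_dir() else []


if __name__ == "__main__":
    uid = "ebde8b2e-87ec-4fe2-a928-99d0e6d1ba21"  # a Pod UID from the ls output later in this section
    print(empty_dir_path(uid, "data01"))
    print(list_empty_dirs(uid))
```

Because the directory lives under the Pod's own UID, the kubelet removes it when it cleans up the deleted Pod, which is why emptyDir data does not outlive the Pod.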

10.2.2 Mounting a single volume

First delete all Pods, so that no running nginx is still holding port 80 and keeping the new containers from starting:

[root@k8s151 /k8s-manifests/pods]$ kubectl delete pod --all

[root@k8s151 /k8s-manifests/pods]$ cat > 06-pods-volume-emptyDir.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-pods-emptydir
  labels:
    apps: myweb
spec:
  volumes:
  - name: data01
    emptyDir: {}
  - name: data02
    emptyDir: {}
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: data01
      mountPath: /oldboyedu-linux81  # mount volume data01 at /oldboyedu-linux81
  - name: test01
    image: nginx:1.18
    command:
    - tail
    - -f
    - /etc/hosts
    volumeMounts:
    - name: data02
      mountPath: /data-linux81  # mount volume data02 at /data-linux81
EOF

[root@k8s151 /k8s-manifests/pods]$ kubectl apply -f 06-pods-volume-emptyDir.yaml

pod/oldboyedu-pods-emptydir-001 created

Open a shell in the Pod. Without a container name, kubectl enters the first container, myweb:

[root@k8s151 /k8s-manifests/pods]$ kubectl exec oldboyedu-pods-emptydir -it -- bash

Defaulting container name to myweb.

Use 'kubectl describe pod/oldboyedu-pods-emptydir-001 -n default' to see all of the containers in this pod.

root@oldboyedu-pods-emptydir-001:/# cat /usr/share/nginx/html/index.html

2222222222222   # the nginx home page currently shows 2222222222222

root@oldboyedu-pods-emptydir-001:/# echo AAAAAAAAAAA > /usr/share/nginx/html/index.html   # change the nginx page to AAAAAAAAAAA

The Pod is running on node k8s152:

[root@k8s151 /k8s-manifests/pods]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-pods-emptydir 2/2 Running 0 6m41s 10.244.1.23 k8s152.oldboyedu.com

On node k8s152, delete the myweb container (the kubelet recreates it immediately):

[root@k8s152 ~]$ docker ps | grep myweb

0f91e4e67302 b85fe5f2830b "/docker-entrypoint.…" 3 minutes ago Up 3 minutes k8s_myweb_oldboyedu-pods-emptydir_default_6b012714-7a95-42c1-9fd4-7f5361b2c423_0

[root@k8s152 ~]$ docker rm -f 0f91e4e67302

36efbb657af2

Then access nginx with curl again: the home page is back to 2222222222222. The change was not persisted, so it was lost when the container was recreated.

[root@k8s151 /k8s-manifests/pods]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-pods-emptydir 2/2 Running 0 6m41s 10.244.1.23 k8s152.oldboyedu.com

# The change was lost because the file we edited is not inside the mounted directory /oldboyedu-linux81

[root@k8s151 /k8s-manifests/pods]$ curl 10.244.1.23

2222222222222

Once the Pod is running, emptyDir data is stored under the Pod's UID directory in "/var/lib/kubelet/pods": /var/lib/kubelet/pods/${POD_ID}/volumes/kubernetes.io~empty-dir/

[root@k8s153 ~]$ ll /var/lib/kubelet/pods/

total 0

drwxr-x--- 5 root root 71 Oct 29 11:30 6cc3a87e-627b-40b3-8f64-c26da3378a86

drwxr-x--- 5 root root 71 Oct 29 11:30 be543b8b-f713-459f-be14-5faa4709bd4b

drwxr-x--- 5 root root 71 Nov 22 14:20 ebde8b2e-87ec-4fe2-a928-99d0e6d1ba21

The modified content has already been synced to the directory on the host:

[root@k8s153 ~]$ cat /var/lib/kubelet/pods/ebde8b2e-87ec-4fe2-a928-99d0e6d1ba21/volumes/kubernetes.io~empty-dir/data01/index.html

AAAAAAAAAAA

[root@k8s151 /k8s-manifests/podes]$ cat > 06-pods-volume-emptyDir.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-pods-emptydir
  labels:
    apps: myweb
spec:
  volumes:
  - name: data01
    emptyDir: {}
  - name: data02
    emptyDir: {}
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: data01
      # mount data01 at /usr/share/nginx/html to share the data; changes made here now survive container restarts
      mountPath: /usr/share/nginx/html
  - name: test01
    image: nginx:1.18
    command:
    - tail
    - -f
    - /etc/hosts
    volumeMounts:
    - name: data01
      mountPath: /data-linux81
EOF

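Repeat the steps above. This time, after the container is deleted, the modified content is not reset: /usr/share/nginx/html is now mounted from volume data01, so the data outlives the container (it is still deleted together with the Pod).

10.2.3 Mounting multiple volumes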

kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-volume-001
  labels:
    apps: myweb
spec:
  nodeName: k8s202.oldboyedu.com
  volumes:
  - emptyDir: {}
    name: data01
  - name: data02
    emptyDir: {}
  - name: data03
    emptyDir: {}
  containers:
  - name: linux80-web
    image: k8s201.oldboyedu.com:5000/nginx:1.20.1
    volumeMounts:
    - name: data01
      mountPath: /oldboyedu-linux80-data
    - name: data02
      mountPath: /oldboyedu-linux80-data002
    - name: data03
      mountPath: /oldboyedu-linux80-data003
  - name: linux80-linux
    image: k8s201.oldboyedu.com:5000/alpine
    command:
    - tail
    - -f
    - /etc/hosts
    stdin: true
    volumeMounts:
    - name: data01
      mountPath: /oldboyedu-linux-data-001
    - name: data02
      mountPath: /oldboyedu-linux-data-002
    - name: data03
      mountPath: /oldboyedu-linux-data-003

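Note: to use an image from the private registry, push it to the registry first. Also, many of these examples use the nginx image, so watch out for port conflicts.

10.3 hostPath examples

10.3.1 hostPath overview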

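hostPath volume:

Mounts a file or directory from the filesystem of the node the Pod runs on into the Pod's containers. If the Pod is deleted, the data on the host is not deleted.

Use case:

Containers in the Pod need to access files on the host.

Pros and cons of hostPath:

Pros:

(1) Containers in the same Pod can share data;

(2) Pods on the same node can share data.

Cons:

Pods on different nodes cannot share data.

Recommended reading: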

https://kubernetes.io/docs/concepts/storage/volumes/#hostpath
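The sharing rules described for emptyDir and hostPath, plus NFS from the next subsection, can be summarised as a small model. This is an illustrative simplification, not Kubernetes code; the PodRef type is hypothetical, and the Pod and node names are chosen to match this lab.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodRef:
    name: str
    node: str


def can_share(volume_type: str, a: PodRef, b: PodRef) -> bool:
    """Whether two Pods can see the same data through a volume of this type."""
    if volume_type == "emptyDir":
        return a == b  # private to a single Pod; only its containers share it
    if volume_type == "hostPath":
        return a.node == b.node  # the same host directory exists only on one node
    if volume_type == "nfs":
        return True  # the same export can be mounted from any node
    raise ValueError(f"unknown volume type: {volume_type}")


web1 = PodRef("oldboyedu-volume-hostpath-01", "k8s153.oldboyedu.com")
web2 = PodRef("oldboyedu-volume-hostpath-02", "k8s153.oldboyedu.com")
web3 = PodRef("oldboyedu-volume-nfs-01", "k8s152.oldboyedu.com")

print(can_share("hostPath", web1, web2))  # True: both on k8s153
print(can_share("hostPath", web1, web3))  # False: different nodes
```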

10.3.2 Mounting a file

[root@k8s151 /k8s-manifests/podes]$ cat > 07-pods-volume-hostPath.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volume-hostpath-01
  labels:
    apps: myweb
spec:
  nodeName: k8s153.oldboyedu.com
  # declare the volume types and names
  volumes:
  - name: data01
    emptyDir: {}
  - name: data02
    hostPath:
      # path on the host (the source data; a file when mounting a single file)
      path: /data-linux81
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: data02
      mountPath: /usr/share/nginx/html
---
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volume-hostpath-02
  labels:
    apps: myweb
spec:
  nodeName: k8s153.oldboyedu.com
  volumes:
  - name: data01
    hostPath:
      path: /data-linux81
  containers:
  - name: test01
    image: nginx:1.18
    volumeMounts:
    - name: data01
      mountPath: /usr/share/nginx/html
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 07-pods-volume-hostPath.yaml

pod/oldboyedu-volume-hostpath-01 created

pod/oldboyedu-volume-hostpath-02 created

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide |grep host

oldboyedu-volume-hostpath-01 1/1 Running 0 38s 10.244.2.24 k8s153.oldboyedu.com <none> <none>

oldboyedu-volume-hostpath-02 1/1 Running 0 38s 10.244.2.23 k8s153.oldboyedu.com <none> <none>

Both Pods run on node k8s153, so create the page in the host directory there:

[root@k8s153 ~]$ mkdir -p /data-linux81 && cd /data-linux81

[root@k8s153 /data-linux81]$ echo ">oldboyedu linux81 >" > index.html

Because both Pods mount the same host directory, they serve the same page:

[root@k8s151 /k8s-manifests/podes]$ curl 10.244.2.42

>oldboyedu linux81 >

[root@k8s151 /k8s-manifests/podes]$ curl 10.244.2.41

>oldboyedu linux81 >

kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volume-hostpath-01
  labels:
    apps: myweb
spec:
  nodeName: k8s152.oldboyedu.com
  # declare the volume types and names
  volumes:
  - name: data01
    hostPath:
      # path on the host (the source data; a file when mounting a single file)
      path: /data-linux81
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    command: ["sleep","3600"]
    volumeMounts:
    - name: data01
      # if the source is a file, the mount point must also be a file
      mountPath: /usr/share/nginx/html
      # mount read-only; the default is false
      # readOnly: true

10.3.3 Mounting a directory

kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-volume-hostpath-03
  labels:
    apps: myweb
spec:
  nodeName: k8s153.oldboyedu.com
  volumes:
  - name: data01
    hostPath:
      # the source data is a directory
      path: /data-linux81
  containers:
  - name: linux80-web
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    command: ["sleep","3600"]
    volumeMounts:
    - name: data01
      # so the mount point should also be a directory
      mountPath: /etc/nginx/
      # readOnly: true

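10.4 NFS examples

10.4.1 NFS overview

NFS volume:

Provides NFS mount support: an NFS share is mounted into the Pod automatically.

NFS:

Short for "Network File System", a UNIX presentation-layer protocol developed by Sun Microsystems that lets users access files elsewhere on the network as if they were on their own computer.

NFS is a mainstream file-sharing server, but it is a single point of failure, so back up its data; if necessary, use a distributed file system instead.

Recommended reading: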

https://kubernetes.io/docs/concepts/storage/volumes/#nfs

10.4.2 Deploying the NFS server

(1) Install the NFS packages on all nodes

yum -y install nfs-utils

(2) Configure the shared directory on k8s151

mkdir -pv /oldboyedu/data/kubernetes

cat > /etc/exports <<'EOF'

/oldboyedu/data/kubernetes *(rw,no_root_squash)

EOF

(3) Start the NFS service and enable it at boot

systemctl enable --now nfs

(4) Check the exported directories on the server (as shown in the figure above):

exportfs

(5) Test a manual mount on a client node (k8s152 or k8s153)

mount -t nfs k8s151.oldboyedu.com:/oldboyedu/data/kubernetes /mnt/

umount /mnt

10.4.3 Example

[root@k8s151 /oldboyedu/data/kubernetes]$ cat > 08-pods-volume-nfs.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volume-nfs-01
  labels:
    apps: myweb
spec:
  nodeName: k8s152.oldboyedu.com
  # declare the volume types and names
  volumes:
  - name: data01
    # an NFS volume
    nfs:
      # NFS server
      server: 10.0.0.151
      # exported path on the NFS server
      path: /oldboyedu/data/kubernetes
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: data01
      mountPath: /usr/share/nginx/html
---
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volume-nfs-02
  labels:
    apps: myweb
spec:
  nodeName: k8s153.oldboyedu.com
  volumes:
  - name: data01
    nfs:
      server: 10.0.0.151
      path: /oldboyedu/data/kubernetes
  containers:
  - name: test01
    image: nginx:1.18
    volumeMounts:
    - name: data01
      mountPath: /usr/share/nginx/html
EOF

[root@k8s151 /oldboyedu/data/kubernetes]$ kubectl apply -f 08-pods-volume-nfs.yaml

pod/oldboyedu-volume-nfs-01 created

pod/oldboyedu-volume-nfs-02 created

[root@k8s151 /oldboyedu/data/kubernetes]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

oldboyedu-volume-nfs-01 1/1 Running 0 37s 10.244.1.27 k8s152.oldboyedu.com <none> <none>

oldboyedu-volume-nfs-02 1/1 Running 0 36s 10.244.2.43 k8s153.oldboyedu.com <none> <none>

[root@k8s151 /oldboyedu/data/kubernetes]$ echo "AAAAAAAA" >/oldboyedu/data/kubernetes/index.html

# Tip: if something goes wrong here, try deleting the Pods and creating them again

[root@k8s151 /oldboyedu/data/kubernetes]$ curl 10.244.2.44

AAAAAAAAA

[root@k8s151 /oldboyedu/data/kubernetes]$ curl 10.244.1.28

AAAAAAAAA

10.4.4 Another example

cat 15-pod-volume-nfs-02.yaml
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-linux80-volume-nfs-02
  labels:
    apps: myweb
spec:
  nodeName: k8s152.oldboyedu.com
  volumes:
  - name: myweb
    # NFS mount configuration
    nfs:
      server: 10.0.0.151
      path: /oldboyedu/data/kubernetes
  containers:
  - name: linux80-web
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: myweb
      mountPath: /usr/share/nginx/html

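10.5 ConfigMap resources

10.5.1 ConfigMap overview

ConfigMap data is stored in the etcd database; ConfigMaps are mainly used for application configuration.

Data formats supported by a ConfigMap:

(1) key-value pairs;

(2) multi-line data.

A Pod commonly consumes a ConfigMap in one of two ways:

(1) injected as environment variables;

(2) mounted as a volume.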

Recommended reading:

https://kubernetes.io/docs/concepts/storage/volumes/#configmap

https://kubernetes.io/docs/concepts/configuration/configmap/

10.5.2 Creating ConfigMaps

10.5.2.1 Example 1

[root@k8s151 ~]$ mkdir -p /k8s-manifests/configMap

[root@k8s151 /k8s-manifests/configMap]$ cat > 01.cm-configMap.yaml <<"EOF"
apiVersion: v1
kind: ConfigMap
metadata:
  name: oldboyedu-cm-linux81
data:
  # single-line values
  school: "清华"
  class: "云计算"
  # multi-line value
  student.txt: |
    姓名: 张三
    年龄: 25
    民族: 汉
EOF
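A ConfigMap can also be written as JSON, which kubectl apply -f accepts as well as YAML. A sketch that builds the same oldboyedu-cm-linux81 object with Python's json module; the helper name is hypothetical. For quick cases, kubectl create configmap with --from-literal or --from-file is an alternative.

```python
import json


def configmap_manifest(name: str, data: dict, namespace: str = "default") -> str:
    """Build a ConfigMap manifest as a JSON string."""
    if not all(isinstance(v, str) for v in data.values()):
        raise TypeError("ConfigMap data values must be strings")
    manifest = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }
    # ensure_ascii=False keeps the Chinese values readable in the output file
    return json.dumps(manifest, ensure_ascii=False, indent=2)


doc = configmap_manifest("oldboyedu-cm-linux81", {
    "school": "清华",
    "class": "云计算",
    "student.txt": "姓名: 张三\n年龄: 25\n民族: 汉\n",
})
print(doc)  # save as e.g. 01.cm-configMap.json, then: kubectl apply -f 01.cm-configMap.json
```

The three keys correspond to the DATA count of 3 reported by kubectl get cm.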

[root@k8s151 /k8s-manifests/configMap]$ kubectl apply -f 01.cm-configMap.yaml

configmap/oldboyedu-cm-linux81 created

Look up the short name of the configmaps resource:

[root@k8s151 /oldboyedu/data/kubernetes]$ kubectl api-resources |grep configmaps

configmaps cm true ConfigMap

[root@k8s151 /k8s-manifests/configMap]$ kubectl get configmaps

NAME DATA AGE

oldboyedu-cm-linux81 3 33s

The short name cm can be used instead:

[root@k8s151 /k8s-manifests/configMap]$ kubectl get cm

NAME DATA AGE

oldboyedu-cm-linux81 3 33s

[root@k8s151 /k8s-manifests/configMap]$ kubectl get cm -o yaml
apiVersion: v1
items:
- apiVersion: v1
  data:
    class: 云计算
    school: 清华
    student.txt: |
      姓名: 张三
      年龄: 25
      民族: 汉
  kind: ConfigMap
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"v1","data":{"class":"云计算","school":"清华","student.txt":"姓名: 张三\n年龄: 25\n民族: 汉\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"oldboyedu-cm-linux81","namespace":"default"}}
    creationTimestamp: "2022-11-23T02:26:08Z"
    managedFields:
    - apiVersion: v1
      fieldsType: FieldsV1
      fieldsV1:
        f:data:
          .: {}
          f:class: {}
          f:school: {}
          f:student.txt: {}
        f:metadata:
          f:annotations:
            .: {}
            f:kubectl.kubernetes.io/last-applied-configuration: {}
      manager: kubectl
      operation: Update
      time: "2022-11-23T02:26:08Z"
    name: oldboyedu-cm-linux81
    namespace: default
    resourceVersion: "1059853"
    selfLink: /api/v1/namespaces/default/configmaps/oldboyedu-cm-linux81
    uid: f2a2e989-bae1-4c34-9751-22a495b51fc1
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

[root@k8s151 /k8s-manifests/configMap]$ kubectl describe cm oldboyedu-cm-linux81

Name: oldboyedu-cm-linux81

Namespace: default

Labels:

Annotations:

Data

====

school:

----

清华

student.txt:

----

姓名: 张三

年龄: 25

民族: 汉

class:

----

云计算

Events:

10.5.2.2 Example 2

Consuming the ConfigMap from a Pod:

[root@k8s151 /k8s-manifests/configMap]$ cat > 01-pods-myweb-configMap.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: oldboyedu-volumes-cm-02
  labels:
    apps: myweb
spec:
  nodeName: k8s152.oldboyedu.com
  volumes:
  - name: data01
    # the volume type is configMap
    configMap:
      # name of the ConfigMap to reference
      name: oldboyedu-cm-linux81
      # which keys of the ConfigMap to use
      items:
      # the key name; it must exist in the ConfigMap
      - key: student.txt
        # for now, think of this as the file name inside the mount directory
        path: oldboyedu-student.txt
      - key: class
        path: oldboyedu-class
      - key: school
        path: oldboyedu-school
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: data01
      mountPath: /oldboyedu-configMap/
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 01-pods-myweb-configMap.yaml

pod/oldboyedu-volumes-cm-01 created

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cm-school-01 0/1 ContainerCreating 0 39s <none> k8s152.oldboyedu.com <none> <none>

[root@k8s151 /k8s-manifests/podes]$ kubectl exec -it oldboyedu-volumes-cm-01 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.

root@oldboyedu-volumes-cm-01:/# ls /oldboyedu-configMap/

oldboyedu-class oldboyedu-school oldboyedu-student.txt

root@oldboyedu-volumes-cm-01:/# cat /oldboyedu-configMap/oldboyedu-student.txt

姓名: 张三

年龄: 25

民族: 汉

cat > 02-cm-configMap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: linux81-config
data:
  # single-line values
  name: "清华"
  class: "云计算"
  # multi-line values
  student.txt: |
    姓名: 张三
    年龄: 25
    民族: 汉
  my.cnf: |
    host: 10.0.0.201
    port: 13306
    socket: /tmp/mysql.sock
    username: root
    password: oldboyedu
  redis.conf: |
    host: 10.0.0.293
    port: 6379
    requirepass: oldboyedu
  nginx.conf: |
    worker_processes 1;
    events {
        worker_connections 1024;
    }
    http {
        include mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        # include /usr/local/nginx/conf/conf.d/*.conf;
        server {
            listen 81;
            root /usr/local/nginx/html/bird/;
            server_name game01.oldboyedu.com;
        }
        server {
            listen 82;
            root /usr/local/nginx/html/pinshu/;
            server_name game02.oldboyedu.com;
        }
        server {
            listen 83;
            root /usr/local/nginx/html/tanke/;
            server_name game03.oldboyedu.com;
        }
        server {
            listen 84;
            root /usr/local/nginx/html/pingtai/;
            server_name game04.oldboyedu.com;
        }
        server {
            listen 85;
            root /usr/local/nginx/html/chengbao/;
            server_name game05.oldboyedu.com;
        }
    }
EOF

[root@k8s151 /k8s-manifests/configMap]$ kubectl apply -f 02-cm-configMap.yaml

configmap/linux81-config created
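You can also build the same multi-line entries from local files instead of a hand-written manifest. The sketch below makes several assumptions. It needs kubectl 1.18 or later for --dry-run=client. The ConfigMap name linux81-config-demo and the local directory cm-files/ are both hypothetical. With --from-file, each file's base name becomes the key. You can add single-line entries with --from-literal=key=value.

```shell
# Hypothetical local directory: one file per multi-line key (contents copied from the manifest above).
mkdir -p cm-files
printf 'host: 10.0.0.201\nport: 13306\nsocket: /tmp/mysql.sock\nusername: root\npassword: oldboyedu\n' > cm-files/my.cnf
printf 'host: 10.0.0.293\nport: 6379\nrequirepass: oldboyedu\n' > cm-files/redis.conf

# Print the ConfigMap kubectl would create without sending it to the cluster.
# Each key defaults to the file's base name (my.cnf, redis.conf).
# The step is skipped when kubectl is not installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl create configmap linux81-config-demo \
    --from-file=cm-files/my.cnf \
    --from-file=cm-files/redis.conf \
    --dry-run=client -o yaml
fi
```

If you drop --dry-run=client -o yaml, kubectl creates the object directly. To see what the apply above actually stored, run kubectl get configmap linux81-config -o yaml.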

10.5.3 Using ConfigMap resources

10.5.3.1 Mounting as a volume

[root@k8s151 /k8s-manifests/podes]$ cat > 09-pods-conf-configMap.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: volumes-cm-conf-01
spec:
  nodeName: k8s152.oldboyedu.com
  volumes:
  - name: oldboyedu-myweb
    configMap:
      name: linux81-config
      # Project only the listed keys; each key becomes a file named by "path".
      items:
      - key: student.txt
        path: oldboyedu-student.txt
      - key: class
        path: oldboyedu-class
  - name: oldboyedu-nginx
    configMap:
      name: linux81-config
      items:
      - key: nginx.conf
        path: nginx.conf
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: oldboyedu-myweb
      mountPath: /oldboyedu-configMap/
    - name: oldboyedu-nginx
      mountPath: /oldboyedu-nginx/
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 09-pods-conf-configMap.yaml

pod/volumes-cm-conf-01 created

[root@k8s151 /k8s-manifests/podes]$ kubectl exec -it volumes-cm-conf-01 -- bash

root@volumes-cm-conf-01:/oldboyedu-nginx# ls /oldboyedu-nginx/

nginx.conf

root@volumes-cm-conf-01:/oldboyedu-nginx# ls /oldboyedu-configMap/

oldboyedu-class oldboyedu-student.txt
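A ConfigMap volume mounted at mountPath replaces the whole directory, so anything the image already had at that path is hidden. That is harmless for new directories like /oldboyedu-nginx/, but it is a problem for a directory like /etc/nginx/. To place a single key as one file inside an existing directory, you can use subPath.

The sketch below makes a few assumptions:
- The Pod name volumes-cm-subpath-01 and the manifest file name are hypothetical.
- The myweb image is based on the official nginx image; its environment shows NGINX_VERSION=1.18.0.
- The image reads its main config from /etc/nginx/nginx.conf.

```shell
# Hypothetical manifest and Pod name; the ConfigMap and image are the ones used above.
cat > 10-pods-subpath-configMap.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: volumes-cm-subpath-01
spec:
  nodeName: k8s152.oldboyedu.com
  volumes:
  - name: oldboyedu-nginx
    configMap:
      name: linux81-config
      items:
      - key: nginx.conf
        path: nginx.conf
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    volumeMounts:
    - name: oldboyedu-nginx
      # Assumption: the image reads its main config from this path.
      mountPath: /etc/nginx/nginx.conf
      # Mount only this one file from the volume, not the whole directory.
      subPath: nginx.conf
EOF
```

Apply it with kubectl apply -f 10-pods-subpath-configMap.yaml. This has one trade-off. The kubelet eventually refreshes a whole-directory ConfigMap volume when the ConfigMap changes, but it does not refresh a file mounted through subPath.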

10.5.3.2 Injecting as environment variables

[root@k8s151 /k8s-manifests/podes]$ cat > 09-pods-env-configMap.yaml <<'EOF'
kind: Pod
apiVersion: v1
metadata:
  name: volumes-cm-env-01
spec:
  nodeName: k8s152.oldboyedu.com
  volumes:
  - name: oldboyedu-myweb
    configMap:
      name: linux81-config
      items:
      - key: student.txt
        path: oldboyedu-student.txt
      - key: class
        path: oldboyedu-class
  - name: oldboyedu-nginx
    configMap:
      name: linux81-config
      items:
      - key: nginx.conf
        path: nginx.conf
  containers:
  - name: myweb
    image: k8s151.oldboyedu.com:5000/myweb:v0.1
    env:
    # Custom variable name
    - name: CLASS
      # Literal value
      value: linux81
    - name: MYSQL_CONFIG
      # Where the value comes from
      valueFrom:
        # Reference data stored in a ConfigMap
        configMapKeyRef:
          # Name of the ConfigMap to read from
          name: linux81-config
          # Key within the ConfigMap, i.e. which entry to reference
          key: my.cnf
    volumeMounts:
    - name: oldboyedu-myweb
      mountPath: /oldboyedu-configMap/
    - name: oldboyedu-nginx
      mountPath: /oldboyedu-nginx/
EOF

[root@k8s151 /k8s-manifests/podes]$ kubectl apply -f 09-pods-env-configMap.yaml

pod/volumes-cm-env-01 created

[root@k8s151 /k8s-manifests/podes]$ kubectl get pods

NAME READY STATUS RESTARTS AGE

volumes-cm-conf-01 1/1 Running 0 47m

volumes-cm-env-01 1/1 Running 0 10s

[root@k8s151 /k8s-manifests/podes]$ kubectl exec -it volumes-cm-env-01 -- bash

root@volumes-cm-env-01:/# env

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_SERVICE_PORT=443

HOSTNAME=volumes-cm-env-01

PWD=/

PKG_RELEASE=2~buster

HOME=/root

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

NJS_VERSION=0.4.4

TERM=xterm

CLASS=linux81 # the custom variable and its value

SHLVL=1

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_SERVICE_HOST=10.254.0.1

KUBERNETES_PORT=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

MYSQL_CONFIG=host: 10.0.0.201 # value referenced from the ConfigMap (key my.cnf); the following lines belong to this same value

port: 13306

socket: /tmp/mysql.sock

username: root

password: oldboyedu

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

NGINX_VERSION=1.18.0

_=/usr/bin/env
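In the env output above, the value of MYSQL_CONFIG spans several lines because the my.cnf key is a multi-line block. Lines such as port: 13306 are part of that one variable, not separate variables. The sketch below shows how a script inside the container might read it. Outside a Pod, the variable is simulated locally; inside volumes-cm-env-01, the kubelet sets it from the ConfigMap. The helper variable MYSQL_PORT is hypothetical.

```shell
# Simulate the injected variable (value copied from the my.cnf key, which ends with a newline).
MYSQL_CONFIG='host: 10.0.0.201
port: 13306
socket: /tmp/mysql.sock
username: root
password: oldboyedu
'
export MYSQL_CONFIG

# printenv prints only this variable's value, newlines included.
printenv MYSQL_CONFIG

# Pull a single field (here the port) out of the multi-line value.
MYSQL_PORT=$(printf '%s\n' "$MYSQL_CONFIG" | awk -F': ' '$1 == "port" {print $2}')
echo "$MYSQL_PORT"
```

Against the real Pod, kubectl exec volumes-cm-env-01 -- printenv MYSQL_CONFIG shows the same value without the rest of the environment.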

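10.6 Secret resources

10.6.1 Secret overview

A Secret is similar to a ConfigMap. The difference is that a Secret holds sensitive data, and every value under its data field must be base64-encoded. Base64 is only an encoding, not encryption: anyone allowed to read the Secret can decode it.

Secrets are mainly used to store credentials such as passwords and tokens.

Reference: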

https://kubernetes.io/zh/docs/concepts/configuration/secret/#secret-types
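The values in data are plain base64, and one detail is worth checking: plain echo appends a newline, and base64 encodes that newline too. The values used in the next section, YWRtaW4K and b2xkYm95ZWR1Cg==, decode to admin and oldboyedu each followed by a newline. That is exactly what echo without -n produces. The sketch below assumes the GNU coreutils base64 tool; on other platforms the decode flag may differ.

```shell
# Plain echo appends a newline, and the newline is encoded as well.
echo admin | base64              # YWRtaW4K          (decodes to "admin\n")
echo oldboyedu | base64          # b2xkYm95ZWR1Cg==  (decodes to "oldboyedu\n")

# echo -n (or printf '%s') encodes only the characters themselves.
echo -n admin | base64           # YWRtaW4=
printf '%s' oldboyedu | base64   # b2xkYm95ZWR1

# Decode a value to check exactly what the Secret will hold;
# od -c makes a trailing newline (\n) visible.
echo 'YWRtaW4K' | base64 -d | od -c
```

For a Secret that already exists in the cluster, kubectl get secret db-user-passwd -o jsonpath='{.data.password}' | base64 -d prints the stored value.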

10.6.2 Creating a Secret

[root@k8s151 /k8s-manifests/secret]$ cat > 01.linux81-secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: db-user-passwd
# Opaque is the type for arbitrary user-defined data.
type: Opaque
data:
  # Two entries; the values must be base64-encoded, otherwise creation fails.
  username: YWRtaW4K
  password: b2xkYm95ZWR1Cg==
EOF

[root@k8s151 /k8s-manifests/secret]$ kubectl apply -f 01.linux81-secret.yaml

secret/db-user-passwd created
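Instead of encoding values by hand, a Secret can also take plain strings in its stringData field. The API server encodes them and stores the result under data; if a key appears in both fields, the stringData value wins. The file and Secret names in the sketch below are hypothetical.

```shell
# Hypothetical file and Secret names; the values are written in plain text.
cat > 02.linux81-secret-stringdata.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: db-user-passwd-plain
type: Opaque
# stringData takes plain strings; they are stored base64-encoded under data.
stringData:
  username: admin
  password: oldboyedu
EOF
```

After kubectl apply -f 02.linux81-secret-stringdata.yaml, kubectl get secret db-user-passwd-plain -o yaml should show username: YWRtaW4= and password: b2xkYm95ZWR1. Because the strings are taken as written, no trailing newline gets encoded.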

[root@k8s151 /k8s-manifests/podes]$ kubectl get secrets

NAME TYPE DATA AGE

db-user-passwd Opaque 2 44s

default-token-c5lh2 kubernetes.io/service-account-token 3 25d # stores the ServiceAccount token

[root@k8s151 /k8s-manifests/podes]$ kubectl describe secrets db-user-passwd

Name: db-user-passwd