Optimizers for Deep Learning

文章目录

- 一、Some Notations

-

- What is Optimization about?

- 二、SGD

-

- SGD with Momentum(SGDM)

- Why momentum?

- 三、Adagrad

-

- RMSProp

- 四、Adam

-

- SWATS [Keskar, et al., arXiv’17]

- Towards Improving Adam

- Towards Improving SGDM

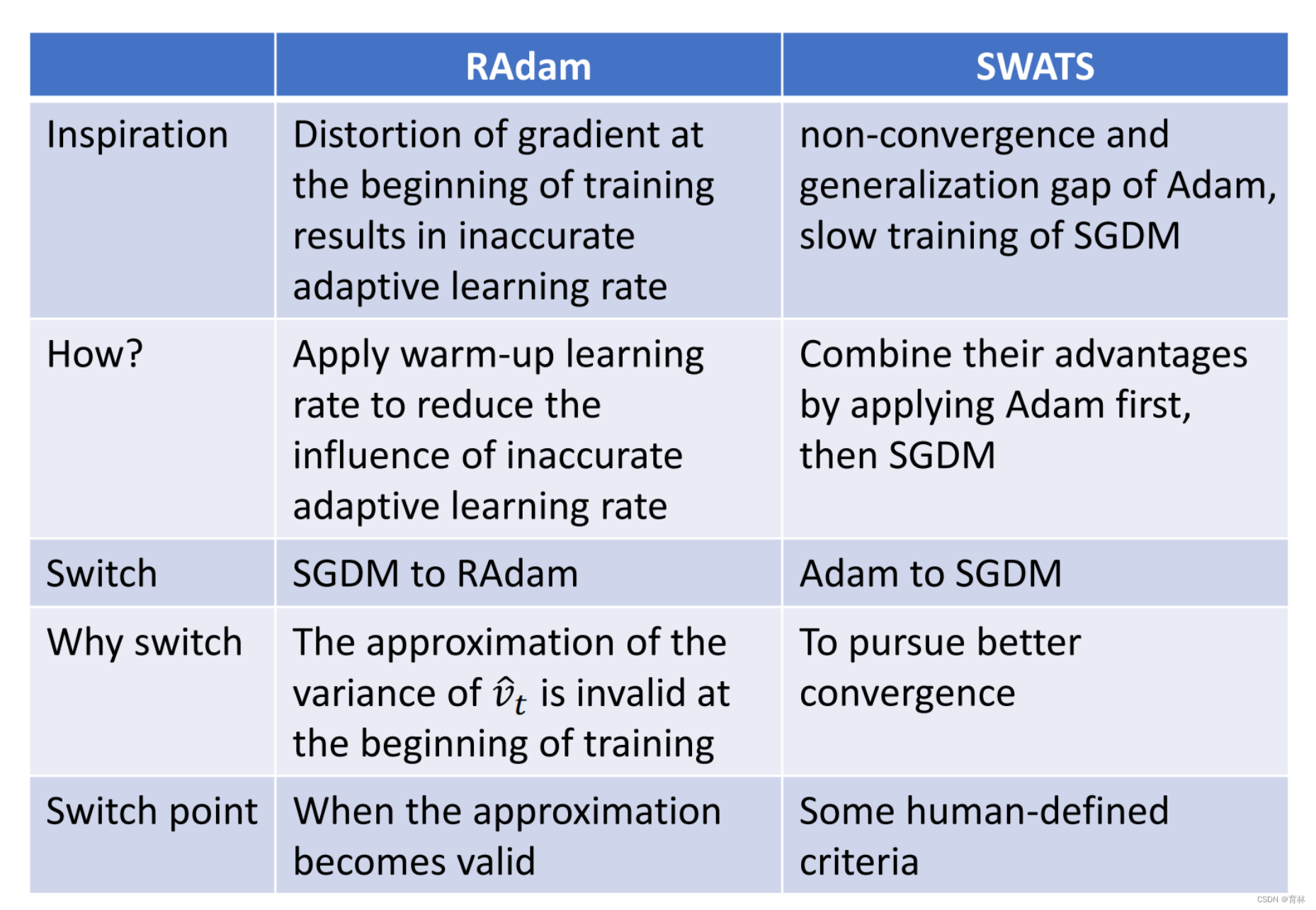

- RAdam vs SWATS

- Lookahead [Zhang, et al., arXiv’19]

- Momentum recap

- Can we look into the future

- 五、optimizer

-

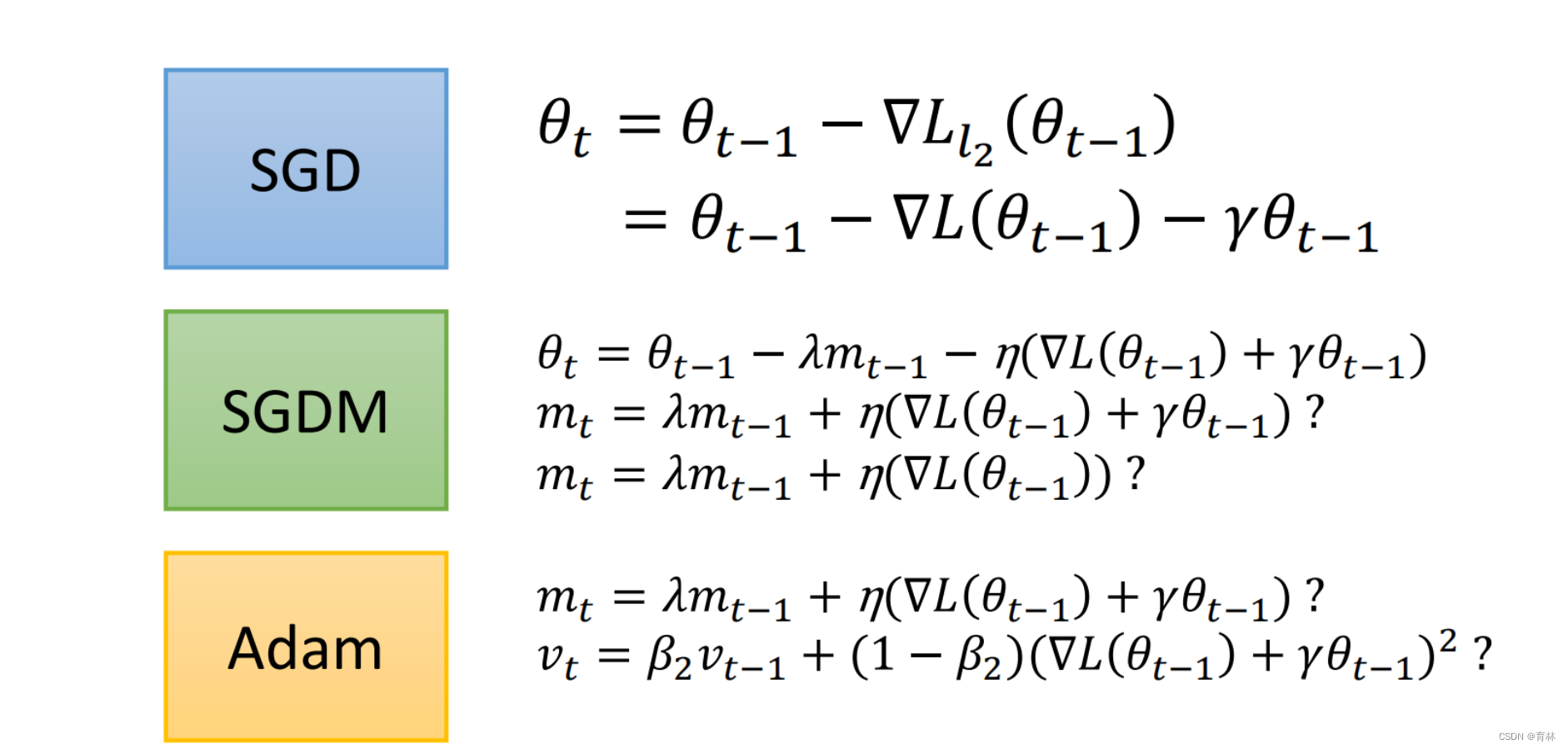

- L2

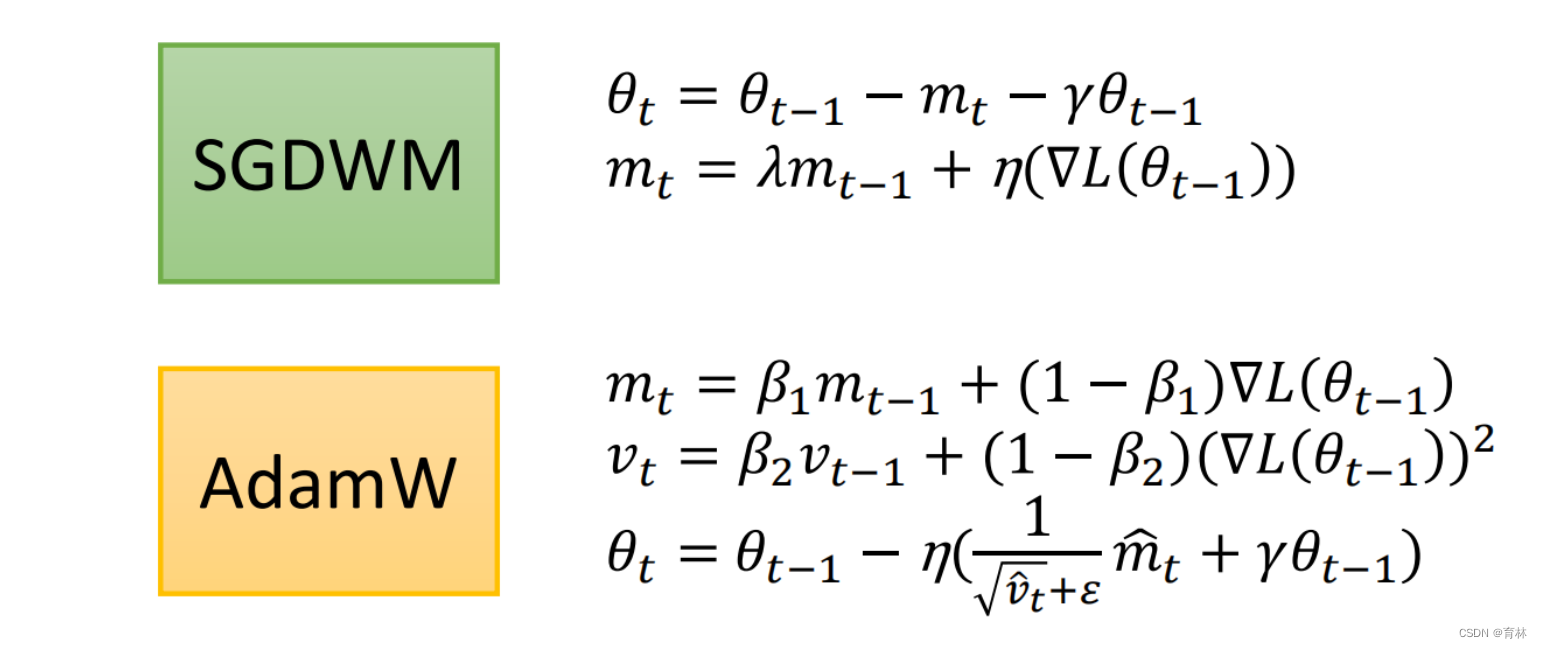

- AdamW & SGDW with momentum

- Something helps optimization

- 总结

-

- Advices:

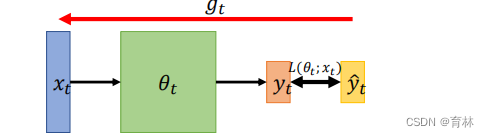

1. Some Notations

• $\theta_t$: model parameters at time step $t$

• $\nabla L(\theta_t)$ or $g_t$: gradient at $\theta_t$, used to compute $\theta_{t+1}$

• $m_{t+1}$: momentum accumulated from time step 0 to time step $t$, used to compute $\theta_{t+1}$

What is Optimization about?

Find a $\theta$ that gives the lowest $\sum_x L(\theta; x)$

Or, find a $\theta$ that gives the lowest $L(\theta)$

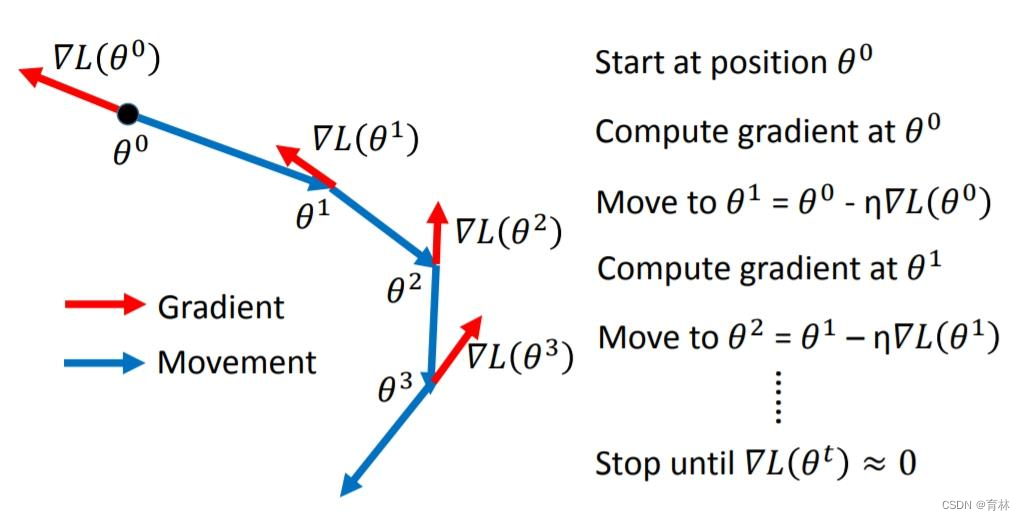

2. SGD

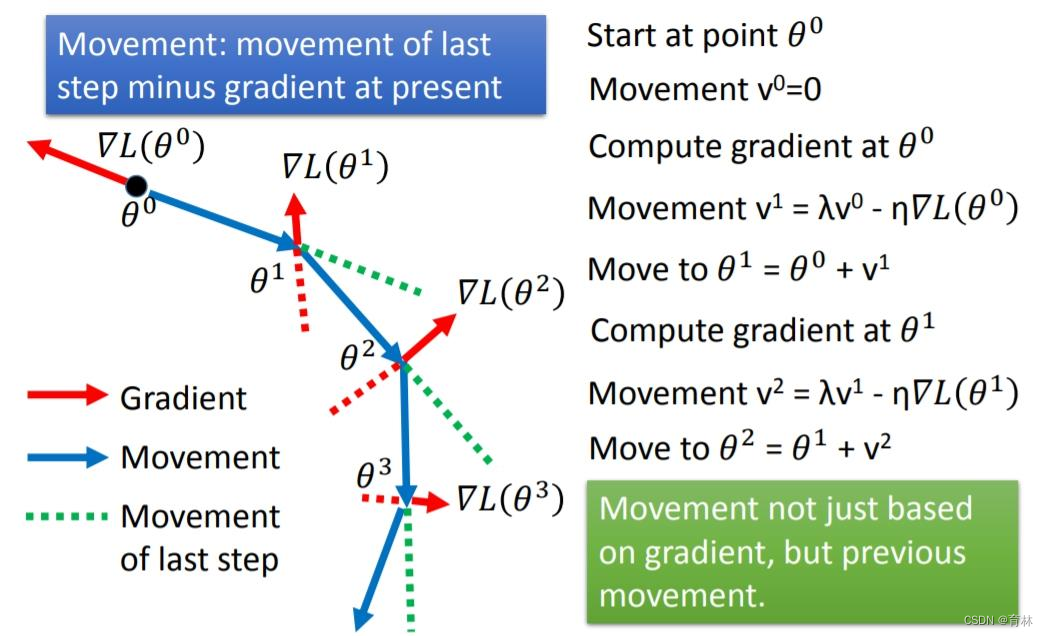

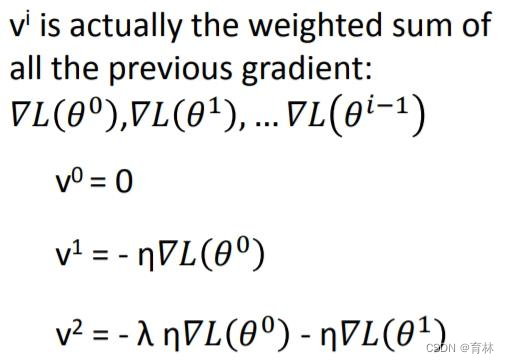

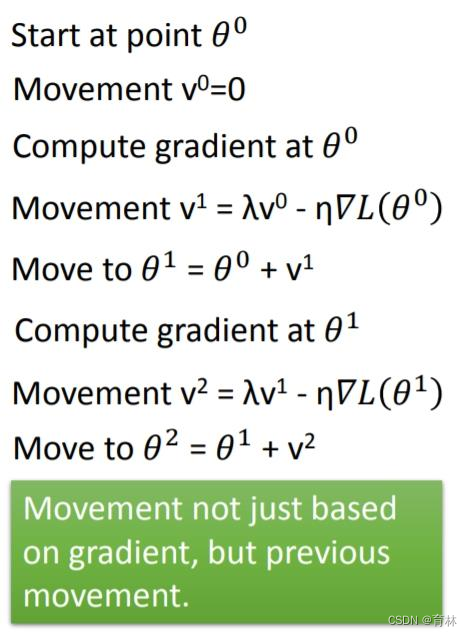

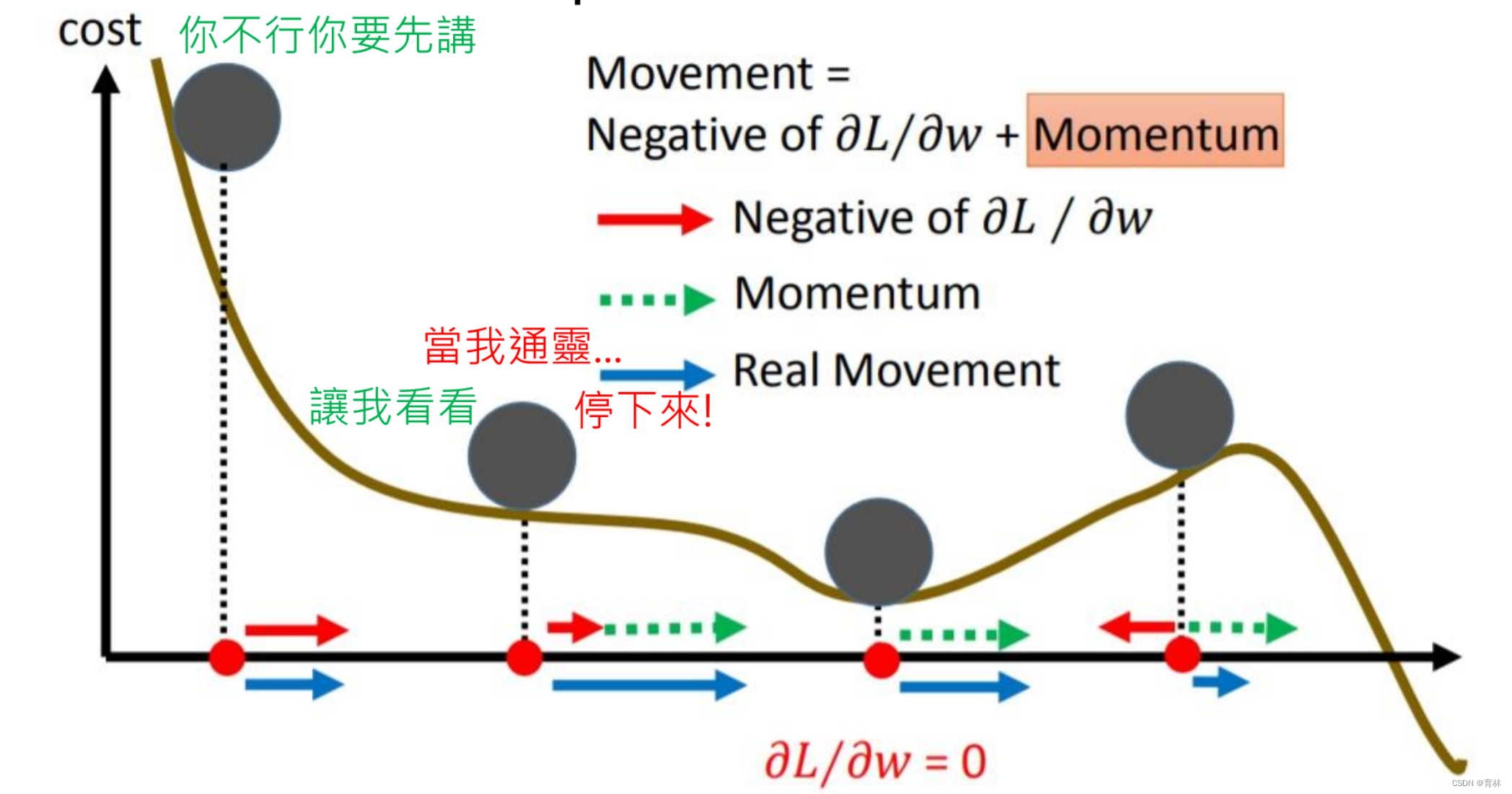

SGD with Momentum (SGDM)

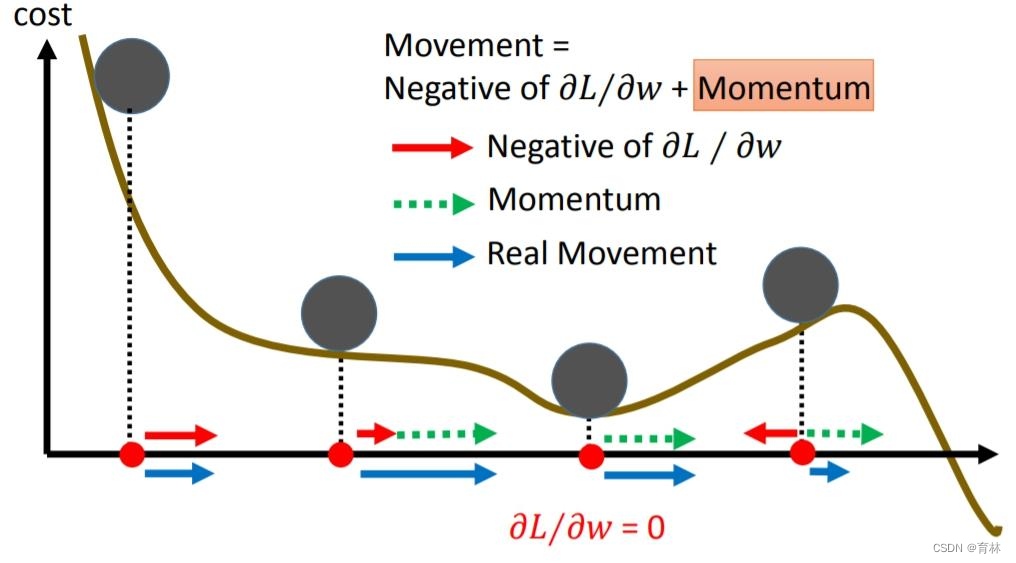

Why momentum?

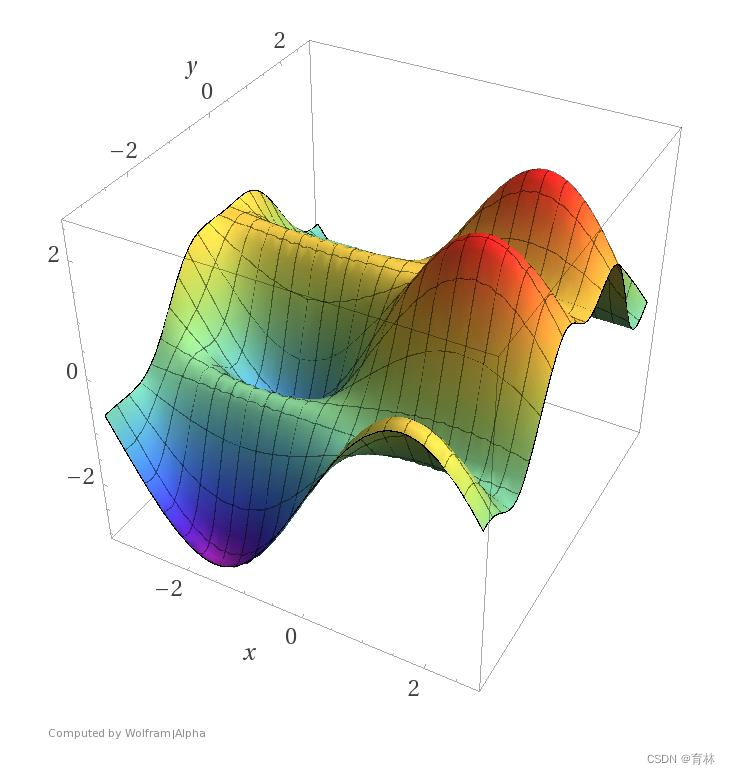

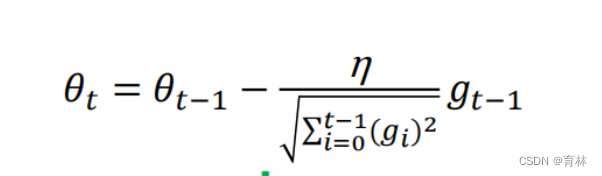

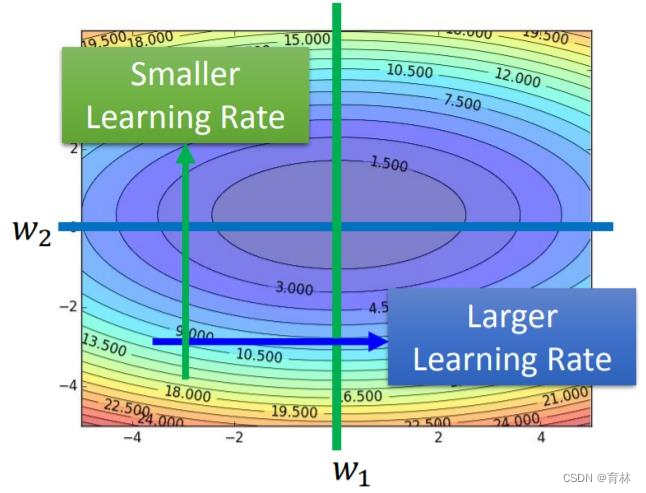

3. Adagrad

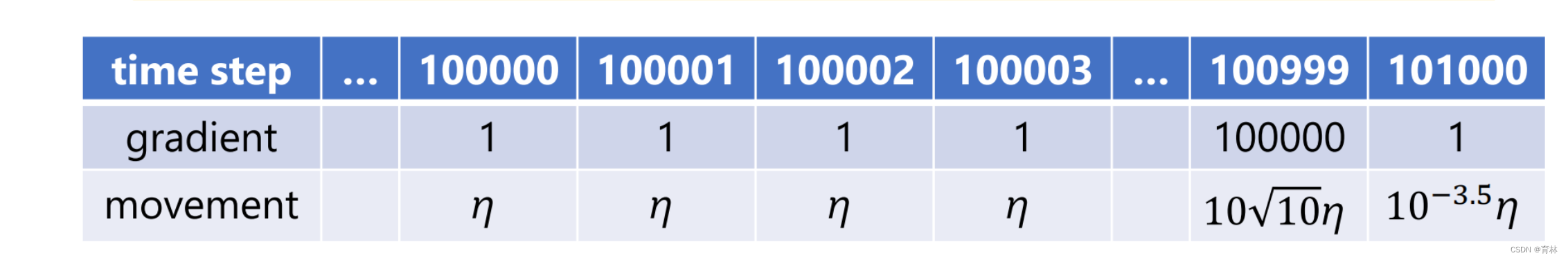

What if the gradients at the first few time steps are extremely large…

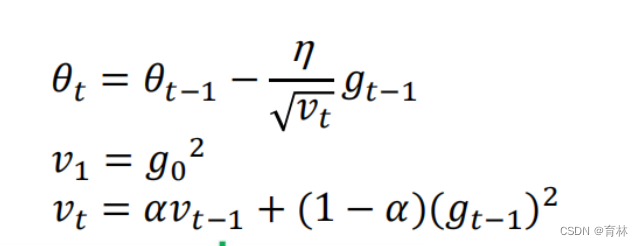

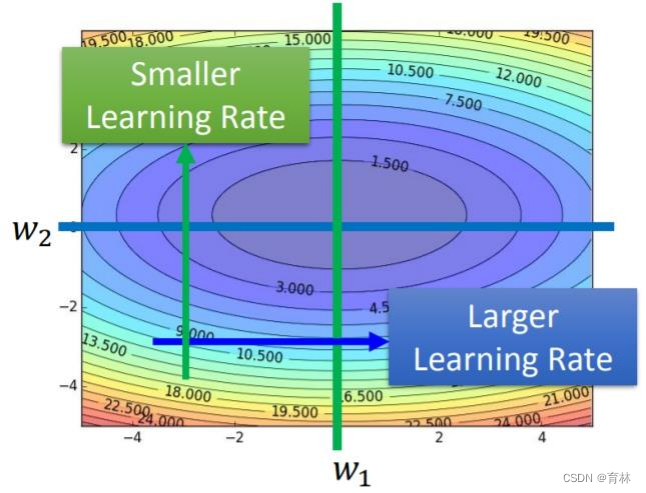

RMSProp

The exponential moving average (EMA) of squared gradients is not monotonically increasing, so the step size does not keep shrinking the way it does under Adagrad's running sum.
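To make the contrast concrete, here is a minimal sketch that tracks only the denominator each method divides the learning rate by. The function names, the decay `alpha=0.9`, and the toy gradient sequence are assumptions chosen for illustration, not values taken from the slides.

```python
import math

def adagrad_denominators(grads, eps=1e-8):
    """Return sqrt(sum of squared gradients) + eps after each step."""
    total = 0.0
    out = []
    for g in grads:
        total += g * g                      # running sum can only grow
        out.append(math.sqrt(total) + eps)
    return out

def rmsprop_denominators(grads, alpha=0.9, eps=1e-8):
    """Return sqrt(EMA of squared gradients) + eps after each step."""
    v = 0.0
    out = []
    for g in grads:
        v = alpha * v + (1.0 - alpha) * g * g   # old gradients decay away
        out.append(math.sqrt(v) + eps)
    return out

# One extremely large gradient at the first step, then small ones.
grads = [100.0] + [0.1] * 50
ada = adagrad_denominators(grads)
rms = rmsprop_denominators(grads)
print(f"Adagrad denominator: first={ada[0]:.3f}, last={ada[-1]:.3f}")
print(f"RMSProp denominator: first={rms[0]:.3f}, last={rms[-1]:.3f}")
```

With Adagrad the early spike stays in the denominator for the rest of training, so later steps remain tiny; with the EMA the spike fades and the step size recovers.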

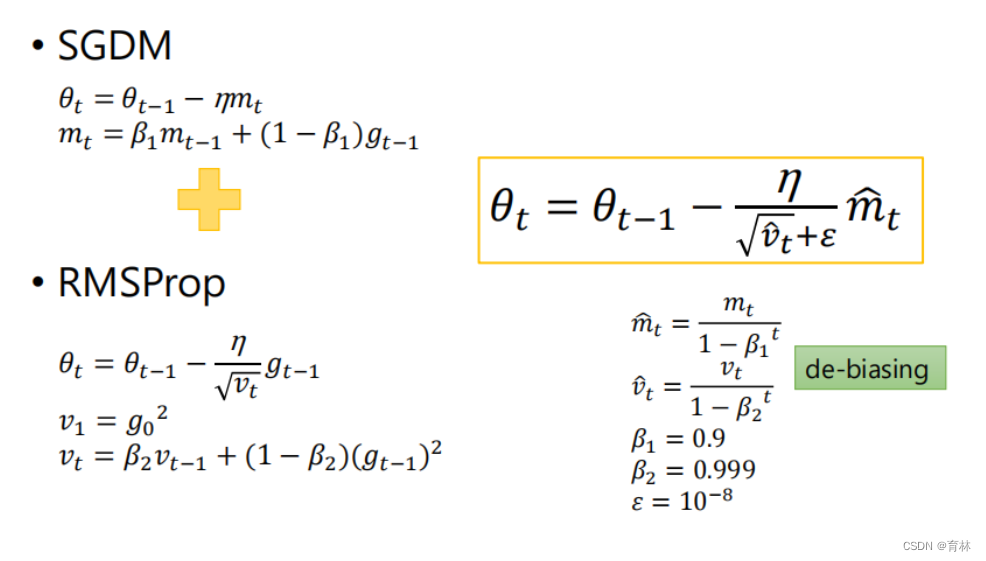

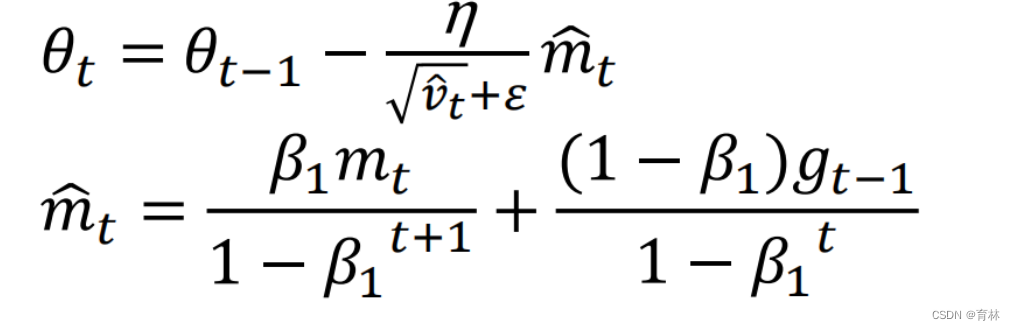

4. Adam

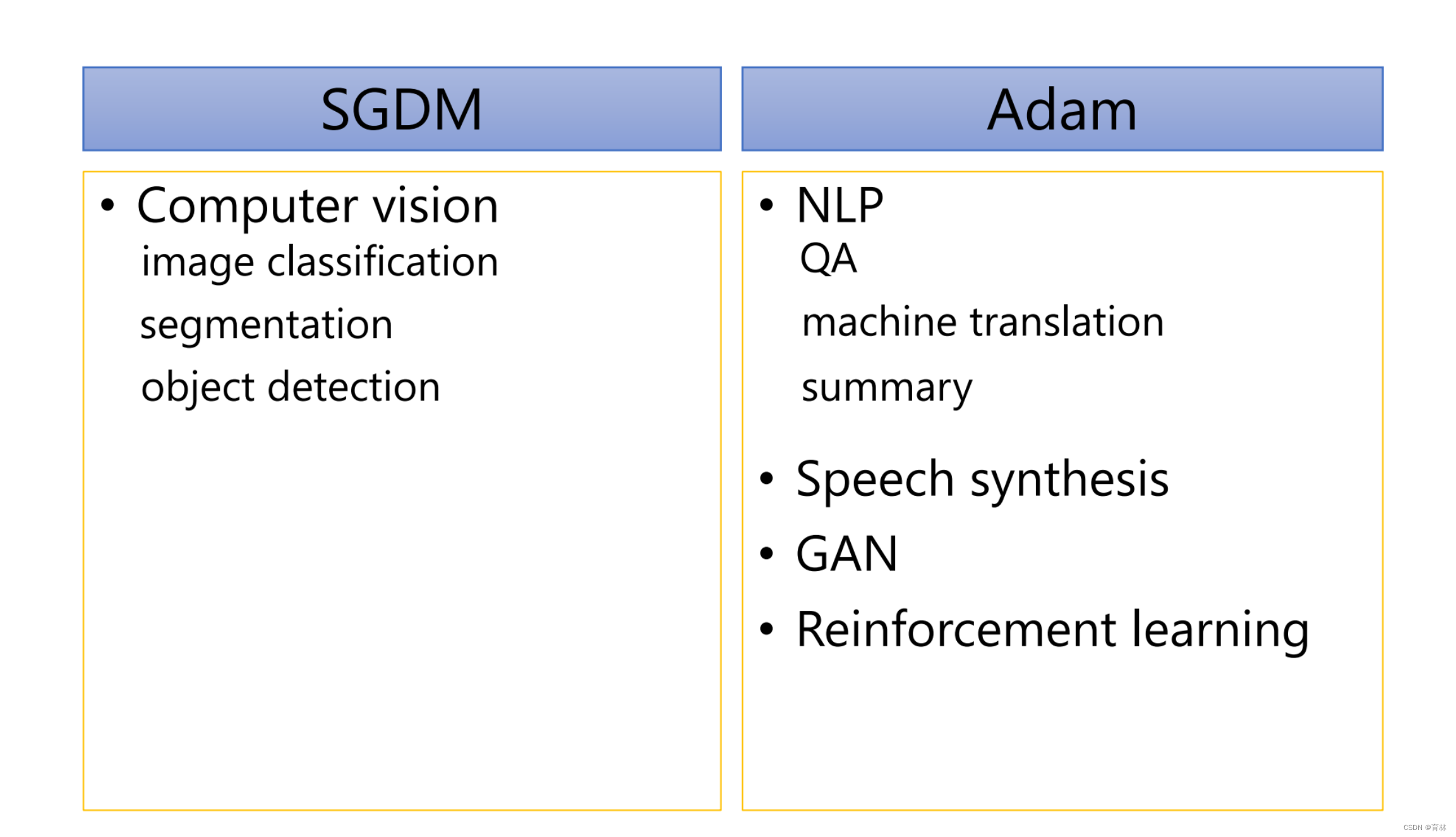

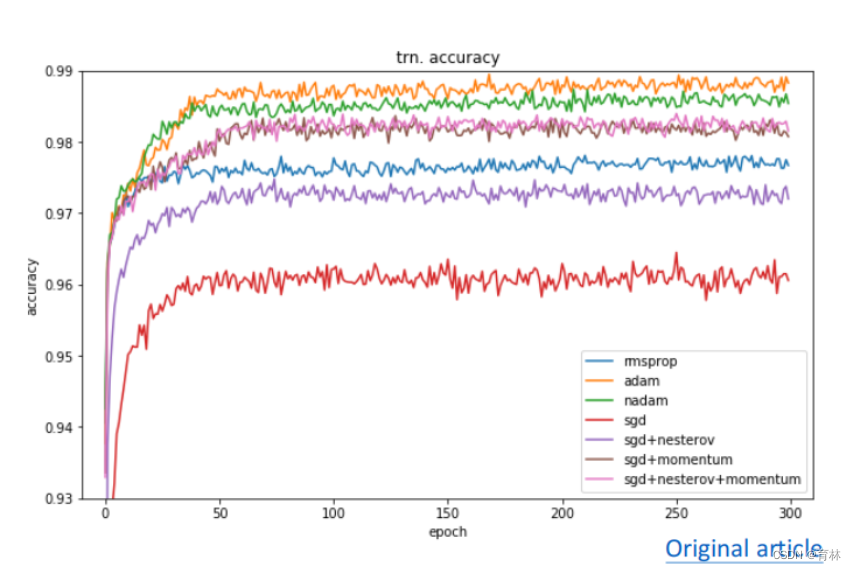

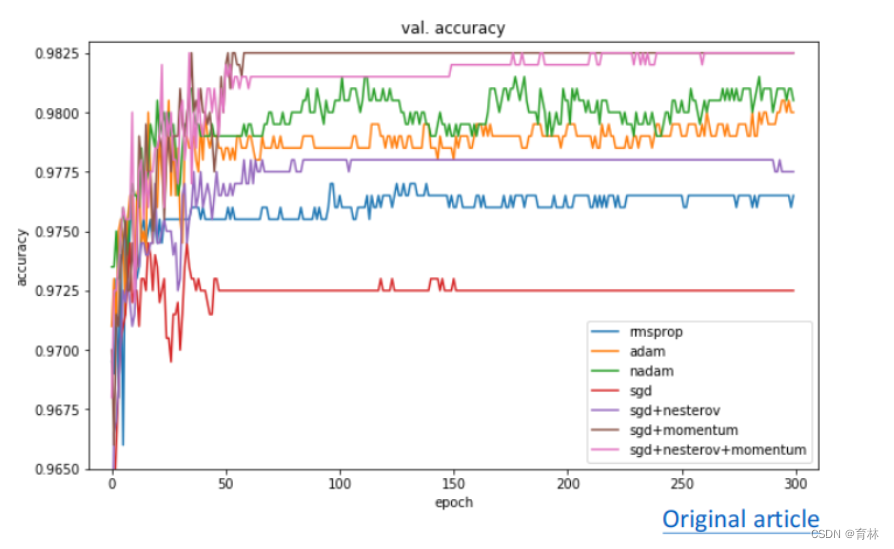

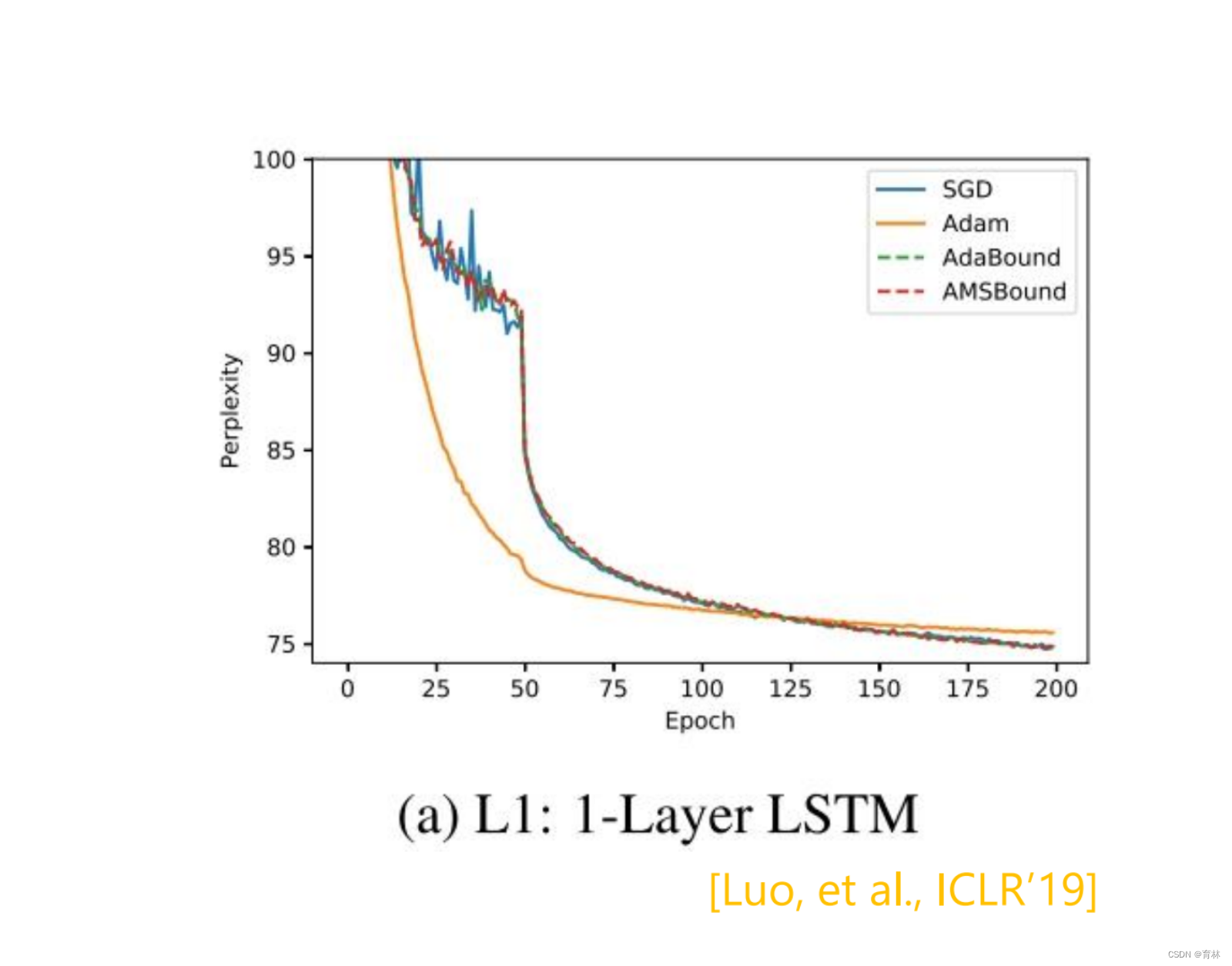

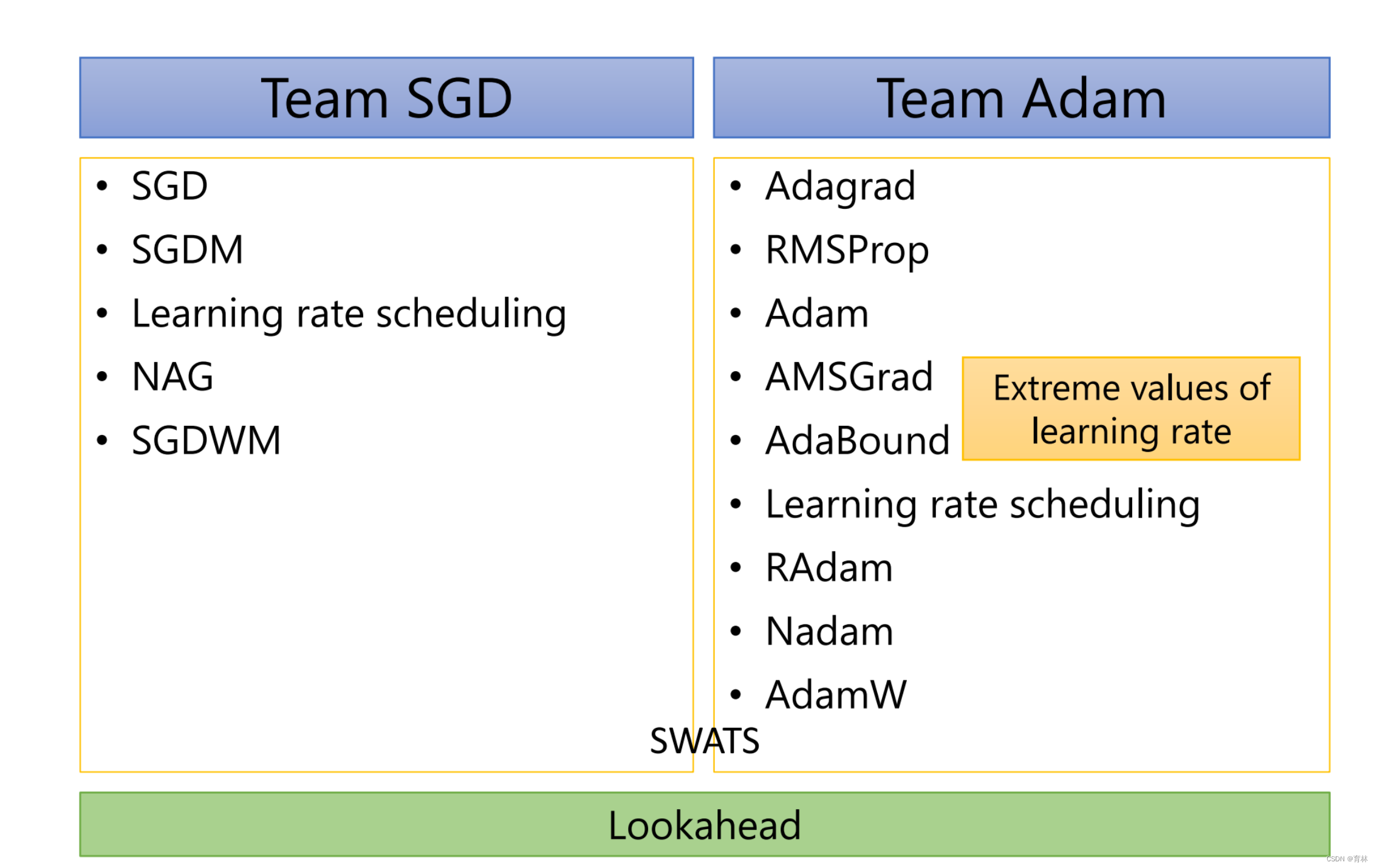

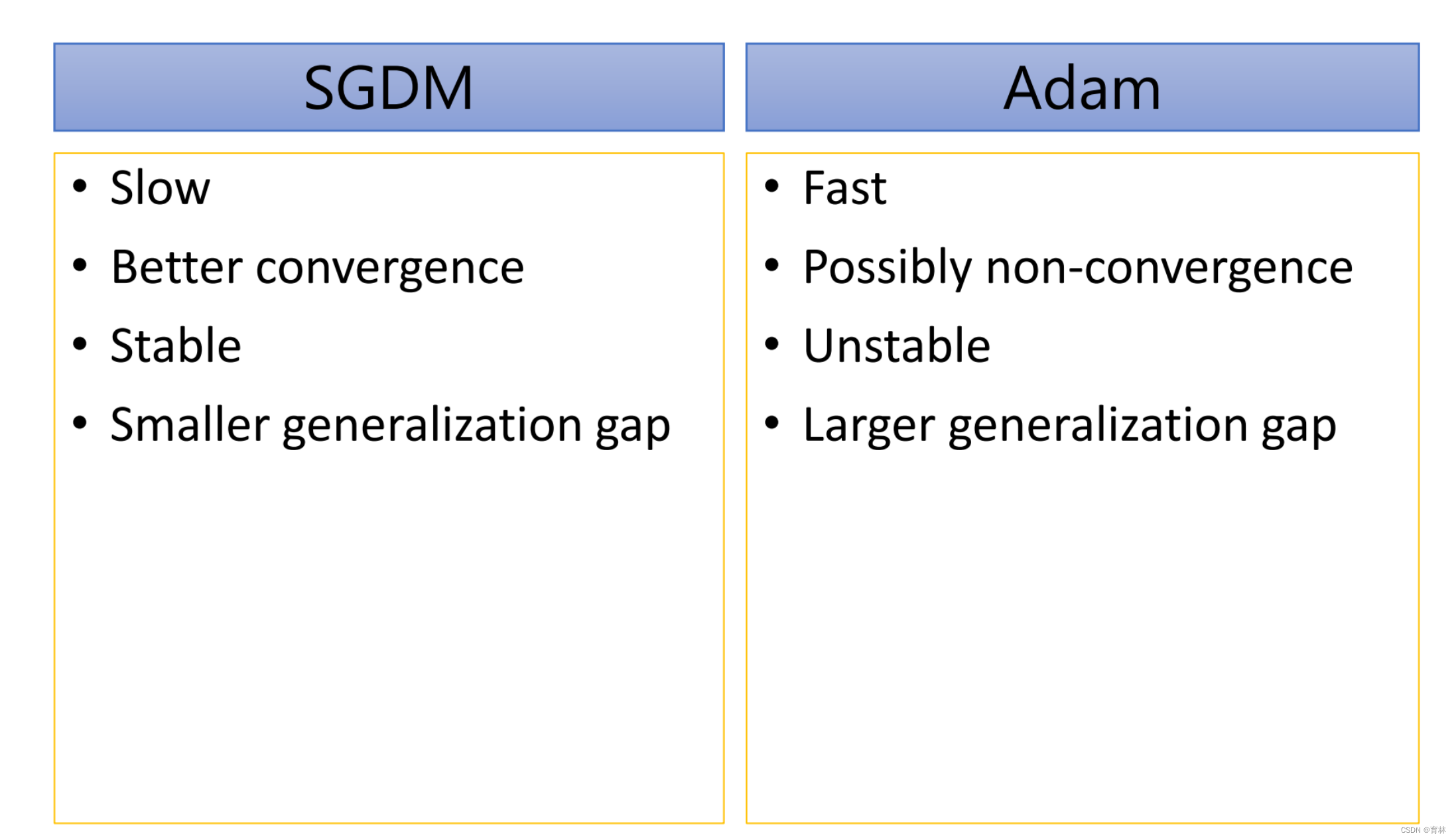
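As a rough companion to the figures above, the following sketch implements the Adam update for a single scalar parameter, following the form in the original Adam paper (momentum EMA, squared-gradient EMA, bias correction). The function name `adam_minimize`, the toy quadratic loss, and the choice `lr=0.1` are assumptions made only for this illustration.

```python
import math

def adam_minimize(grad_fn, theta, lr=0.001, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=1000):
    """Run `steps` Adam updates on a scalar parameter and return it."""
    m = 0.0   # EMA of gradients (momentum part, as in SGDM)
    v = 0.0   # EMA of squared gradients (adaptive part, as in RMSProp)
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        m_hat = m / (1.0 - beta1 ** t)      # bias correction for m
        v_hat = v / (1.0 - beta2 ** t)      # bias correction for v
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Toy loss L(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta = adam_minimize(lambda th: 2.0 * (th - 3.0), theta=0.0, lr=0.1, steps=500)
print(f"theta after 500 steps: {theta:.4f}")
```

The bias-correction terms matter mostly in the first steps, when `m` and `v` are still close to their zero initialization.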

Adam vs SGDM


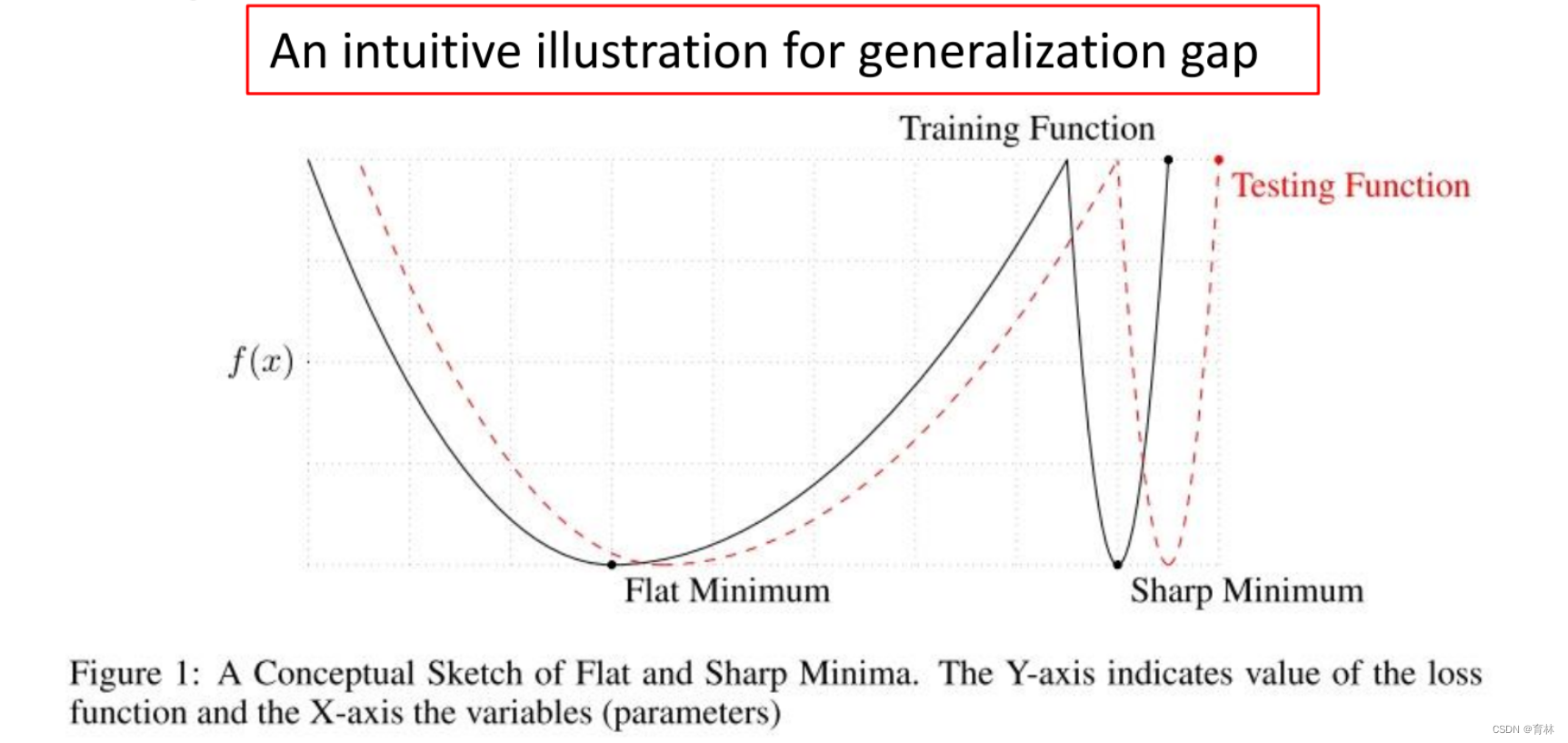

• Adam: fast training, large generalization gap, unstable

• SGDM: stable, small generalization gap, better convergence(?)

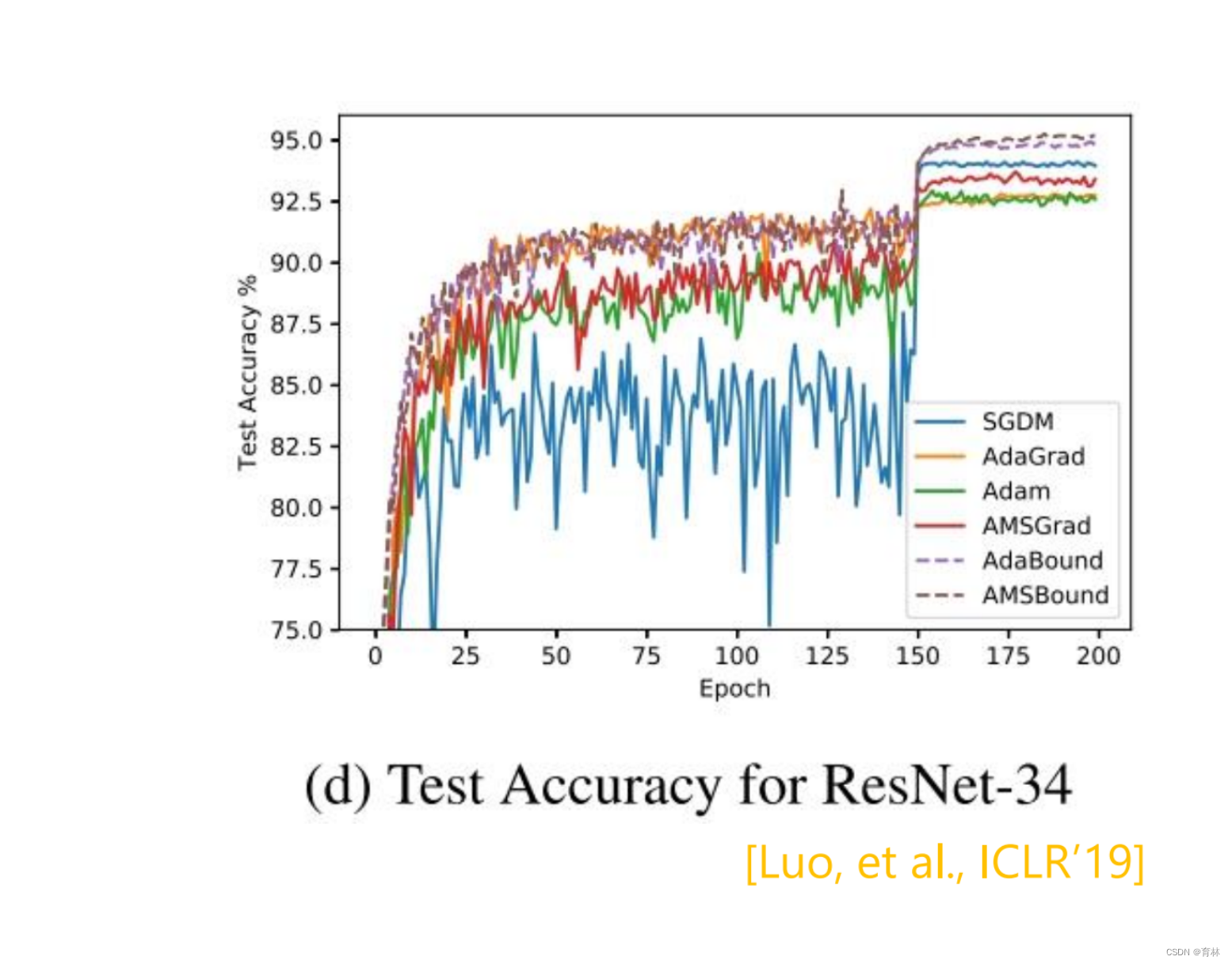

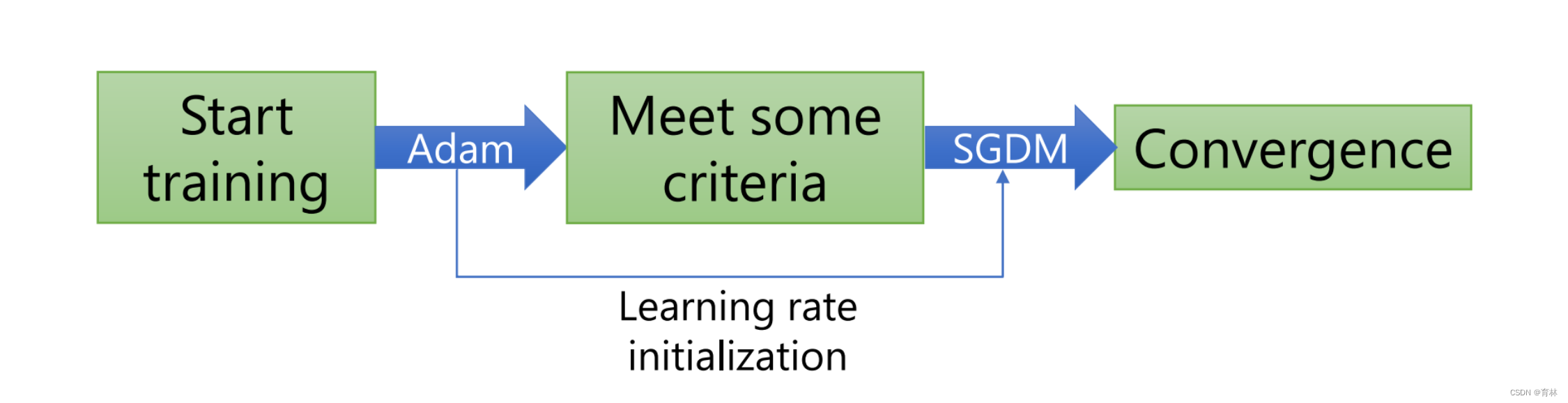

SWATS [Keskar, et al., arXiv’17]

Begin with Adam (fast), end with SGDM

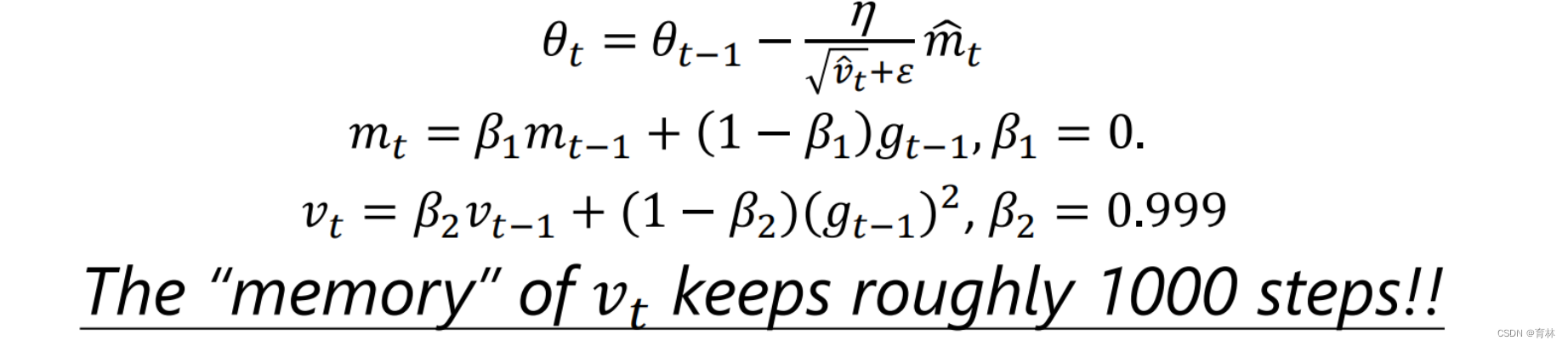

Towards Improving Adam

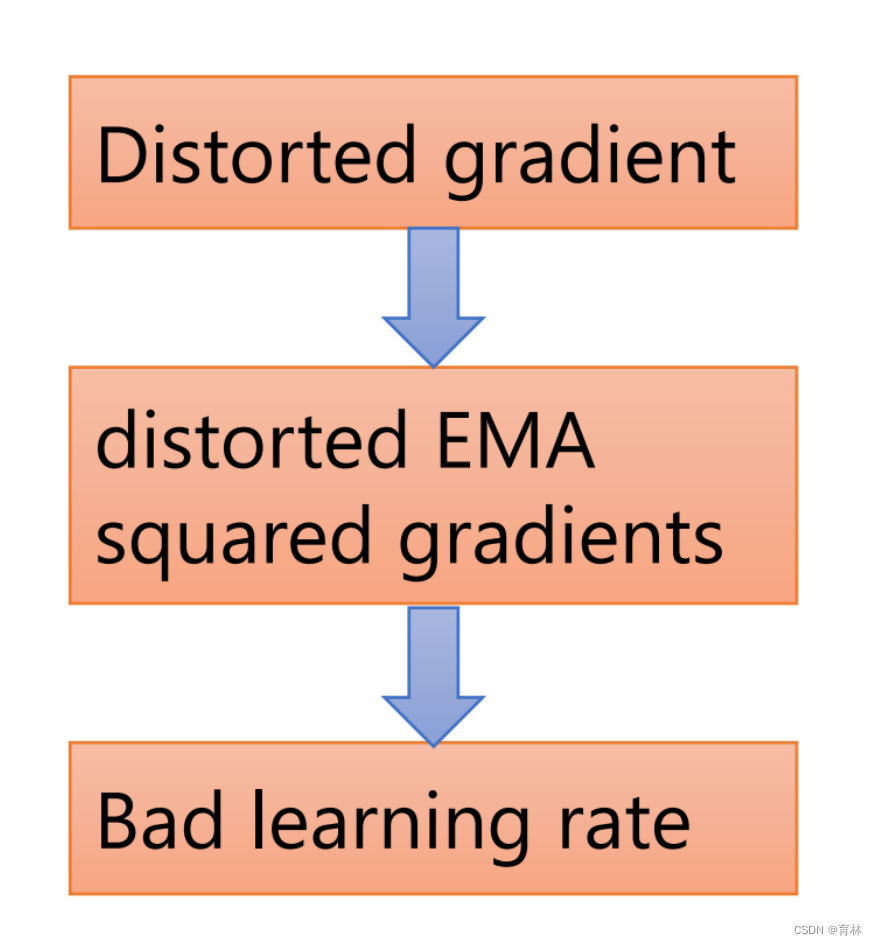

Troubleshooting:

With $\beta_2 = 0.999$, the “memory” of $v_t$ spans roughly 1000 steps!!

In the final stage of training, most gradients are small and non-informative, while a few mini-batches occasionally provide large, informative gradients.
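The "roughly 1000 steps" figure can be checked with a few lines of arithmetic. This sketch assumes Adam's default `beta2 = 0.999` and uses `1 / (1 - beta2)` as the rough window length of the EMA; the helper name `ema_weight` is hypothetical.

```python
beta2 = 0.999                      # Adam's default, assumed here
window = 1.0 / (1.0 - beta2)       # rough "memory" length of the EMA

def ema_weight(age, beta2=beta2):
    """Weight that v_t assigns to a squared gradient seen `age` steps ago."""
    return (1.0 - beta2) * beta2 ** age

# Total weight carried by the most recent 1000 squared gradients.
mass_recent = sum(ema_weight(k) for k in range(1000))

print(f"window ~ {window:.0f} steps")
print(f"share of v_t from the last 1000 steps: {mass_recent:.3f}")
print(f"weight of a 1000-step-old gradient relative to a fresh one: {beta2 ** 1000:.3f}")
```

Because a single large gradient is averaged with about a thousand small ones, its influence on the step size is diluted, which is the problem the fixes in this section try to address.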

Towards Improving SGDM

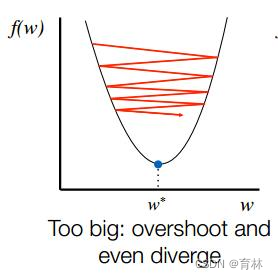

Adaptive learning rate algorithms: dynamically adjust the learning rate over time

SGD-type algorithms: use a fixed learning rate for all updates… too slow with a small learning rate, poor results with a large one

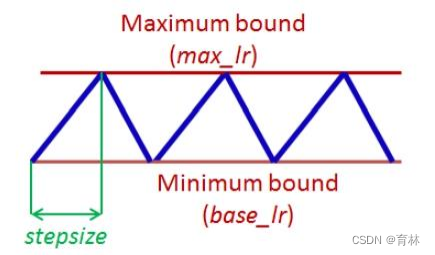

Cyclical LR [Smith, WACV’17]

• learning rate: bounds decided by an LR range test

• stepsize: several epochs

• avoids getting stuck in local minima by varying the learning rate

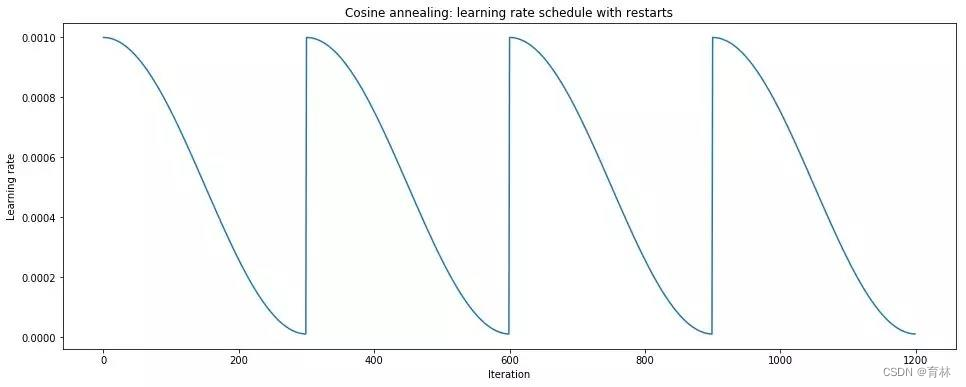
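A minimal sketch of the triangular cyclical schedule follows. The function and parameter names are hypothetical; `base_lr` and `max_lr` stand for the bounds one would pick from an LR range test, and `step_size` is the half-cycle length in iterations.

```python
def triangular_clr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate.

    The rate rises linearly from base_lr to max_lr over `step_size`
    iterations, then falls back to base_lr over the next `step_size`.
    """
    cycle_pos = iteration % (2 * step_size)
    # Distance from the peak of the triangle, scaled to [0, 1].
    x = abs(cycle_pos / step_size - 1.0)
    return base_lr + (max_lr - base_lr) * (1.0 - x)

# Example: one full cycle sampled at a few points.
for it in (0, 1000, 2000, 3000, 4000):
    print(it, triangular_clr(it, base_lr=0.001, max_lr=0.006, step_size=2000))
```

In practice `step_size` would be set to the number of iterations in several epochs, matching the guideline above.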

SGDR [Loshchilov, et al., ICLR’17]

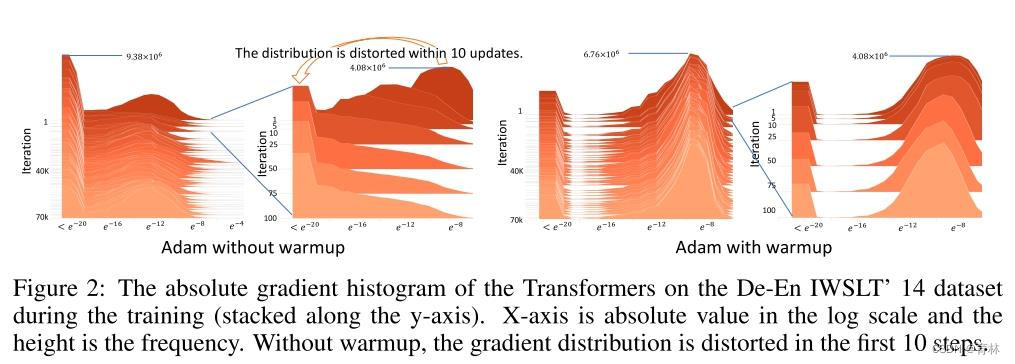
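SGDR anneals the learning rate along a cosine curve and periodically restarts it at the maximum. The sketch below is one way to write that schedule; the names `eta_min`, `eta_max`, `t0`, and `t_mult` are assumptions of this example rather than notation from the slides.

```python
import math

def sgdr_lr(epoch, eta_min, eta_max, t0, t_mult=1):
    """Cosine-annealed learning rate with warm restarts.

    t0 is the length of the first cycle in epochs; each later cycle is
    t_mult times longer than the previous one. `epoch` may be fractional.
    """
    t_cur, t_i = epoch, t0
    while t_cur >= t_i:          # skip over completed cycles
        t_cur -= t_i
        t_i *= t_mult
    cosine = math.cos(math.pi * t_cur / t_i)
    return eta_min + 0.5 * (eta_max - eta_min) * (1.0 + cosine)

# Example: cycles of length 10, 20, 40, ... epochs.
for e in (0, 5, 9.9, 10, 20, 30):
    print(e, round(sgdr_lr(e, eta_min=0.0, eta_max=0.1, t0=10, t_mult=2), 5))
```

Each restart jumps the rate back to `eta_max`, which plays a similar role to the rising half of a cyclical schedule.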

Adam needs warm-up

Experiments show that the gradient distribution is distorted in the first 10 steps

Keeping the step size small at the beginning of training helps to reduce the variance of the gradients
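One simple way to keep the step size small early on is a linear warm-up. This is only a sketch: the linear shape, the function name, and the `warmup_steps` parameter are assumptions, and other warm-up shapes are also used in practice.

```python
def warmup_lr(step, base_lr, warmup_steps):
    """Linearly ramp the learning rate up to base_lr, then hold it there.

    `step` counts optimizer updates starting from 0.
    """
    if warmup_steps <= 0 or step >= warmup_steps:
        return base_lr
    return base_lr * (step + 1) / warmup_steps

# Example: the first updates use only a small fraction of base_lr.
for s in (0, 9, 49, 99, 500):
    print(s, warmup_lr(s, base_lr=0.001, warmup_steps=100))
```

The returned value would be passed to the optimizer as its learning rate at each step.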

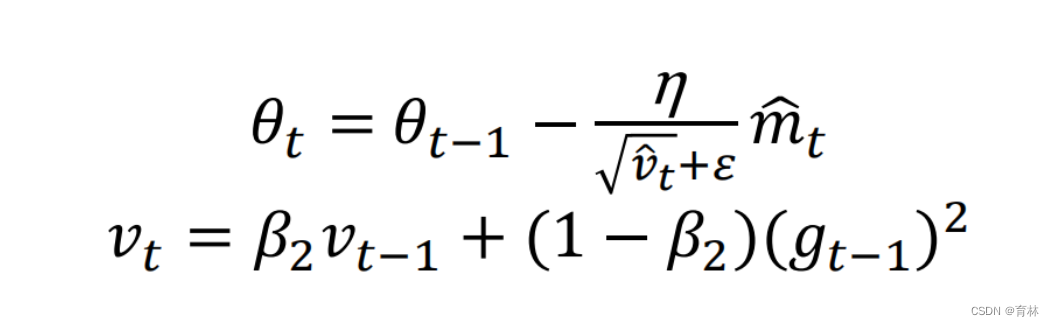

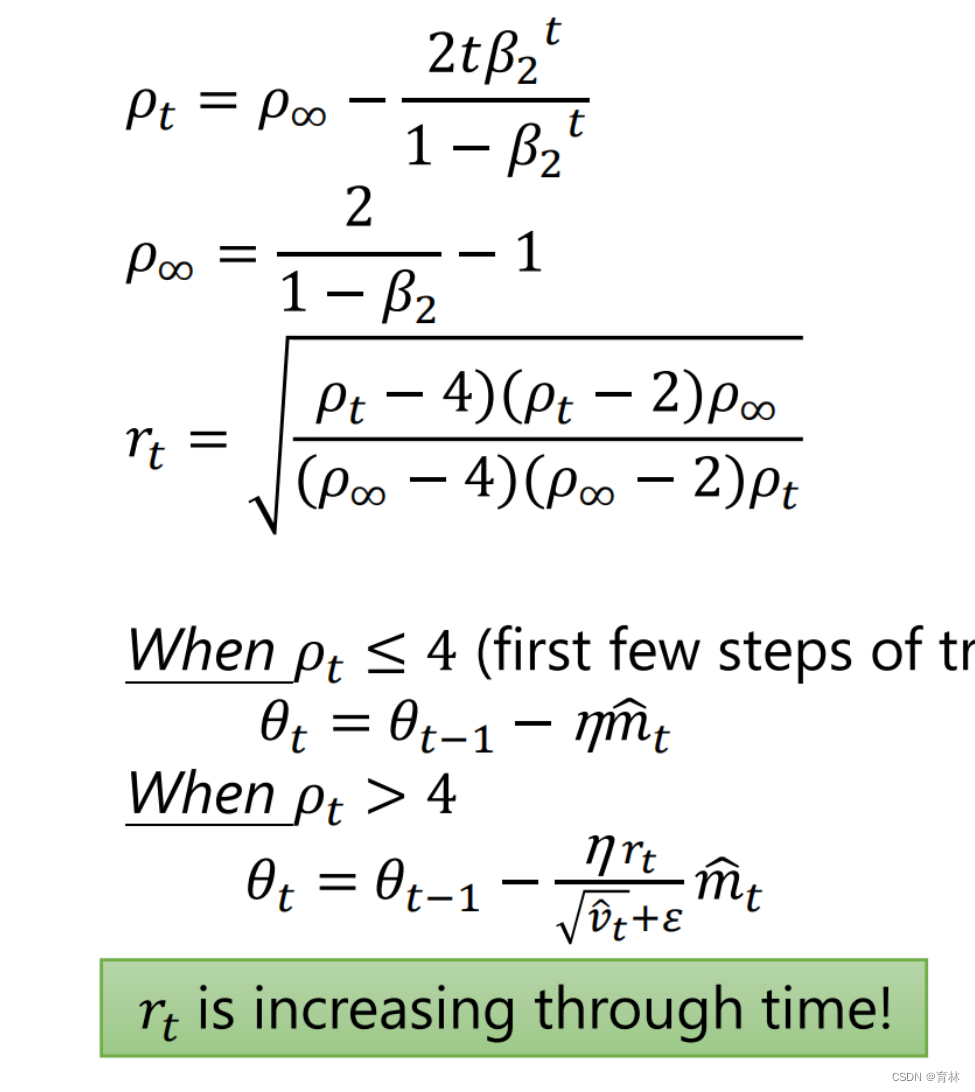

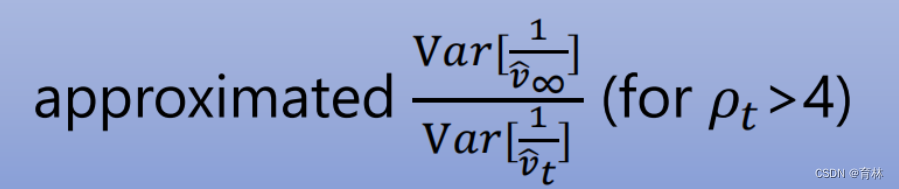

RAdam [Liu, et al., ICLR’20]

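1. $\rho_t = \rho_\infty - \frac{2t\beta_2^t}{1-\beta_2^t}$: effective memory size of EMA

2. $\rho_\infty = \frac{2}{1-\beta_2} - 1$: max memory size ($t \to \infty$)

3. $r_t = \sqrt{\frac{(\rho_t-4)(\rho_t-2)\rho_\infty}{(\rho_\infty-4)(\rho_\infty-2)\rho_t}}$: variance rectification term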
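The quantities RAdam uses can be computed directly. The sketch below follows the formulas in the RAdam paper: the effective EMA memory size $\rho_t$, its limit $\rho_\infty$, and the rectification factor $r_t$, which is only applied once $\rho_t > 4$. The function name and return convention are assumptions of this example.

```python
import math

def radam_rectification(t, beta2=0.999):
    """Return (rho_t, rho_inf, r_t) for step t >= 1.

    r_t is None while rho_t <= 4; in that regime RAdam skips the adaptive
    denominator and takes a plain momentum step instead.
    """
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    rho_t = rho_inf - 2.0 * t * beta2 ** t / (1.0 - beta2 ** t)
    if rho_t <= 4.0:
        return rho_t, rho_inf, None
    r_t = math.sqrt(((rho_t - 4.0) * (rho_t - 2.0) * rho_inf)
                    / ((rho_inf - 4.0) * (rho_inf - 2.0) * rho_t))
    return rho_t, rho_inf, r_t

for t in (1, 10, 100, 1000, 100000):
    rho_t, rho_inf, r_t = radam_rectification(t)
    print(t, round(rho_t, 2), r_t)
```

The factor starts near zero and approaches 1 as training proceeds, so it behaves like an automatically derived warm-up.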

RAdam vs SWATS

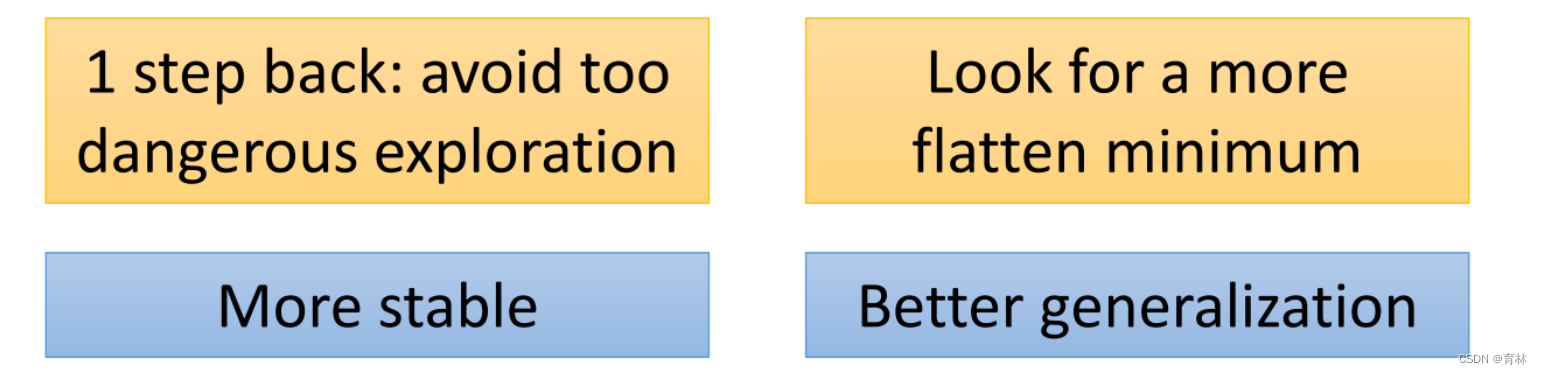

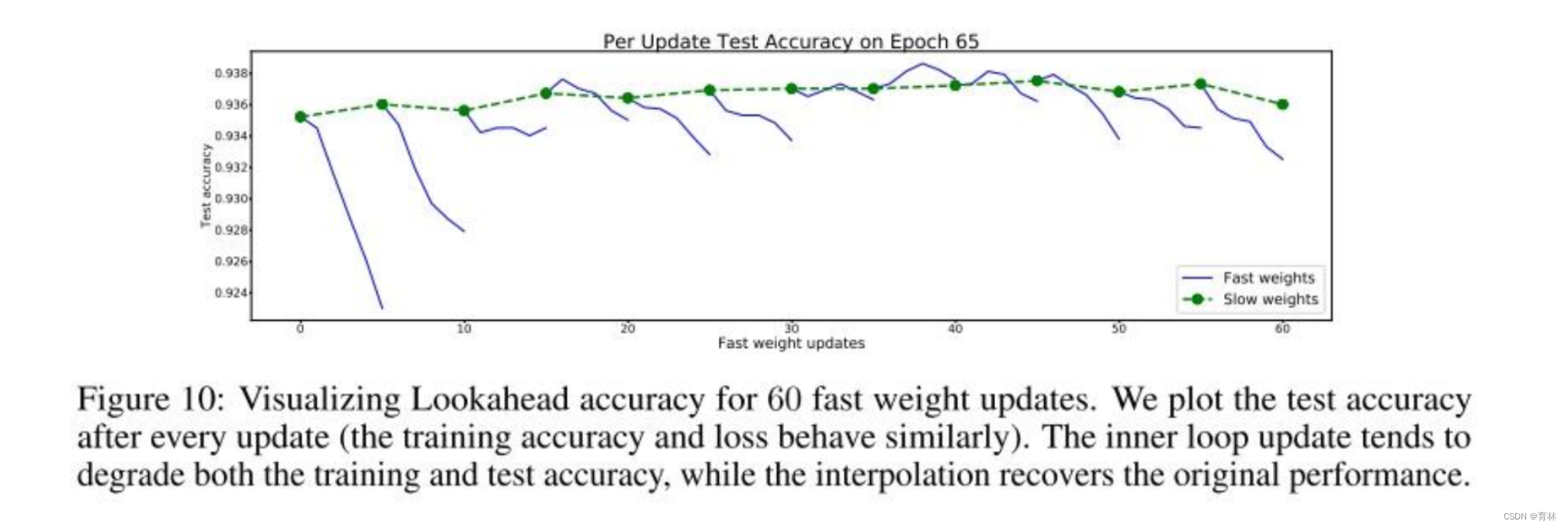

Lookahead [Zhang, et al., arXiv’19]

Momentum recap

Can we look into the future?

Nesterov accelerated gradient (NAG) [Nesterov, Dokl. Akad. Nauk SSSR’83]

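Below, $\lambda$ is the momentum coefficient and $\eta$ is the learning rate.

SGDM:

$$\theta_t = \theta_{t-1} - m_t$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1})$$

Look into the future:

$$\theta_t = \theta_{t-1} - m_t$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1} - \lambda m_{t-1})$$

Nesterov accelerated gradient (NAG):

$$\theta_t = \theta_{t-1} - m_t$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1} - \lambda m_{t-1})$$

Let

$$\theta_t' = \theta_t - \lambda m_t$$

$$= \theta_{t-1} - m_t - \lambda m_t$$

$$= \theta_{t-1} - \lambda m_t - \lambda m_{t-1} - \eta \nabla L(\theta_{t-1} - \lambda m_{t-1})$$

$$= \theta_{t-1}' - \lambda m_t - \eta \nabla L(\theta_{t-1}')$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1}')$$

SGDM:

$$\theta_t = \theta_{t-1} - m_t$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1})$$

or

$$\theta_t = \theta_{t-1} - \lambda m_{t-1} - \eta \nabla L(\theta_{t-1})$$

$$m_t = \lambda m_{t-1} + \eta \nabla L(\theta_{t-1})$$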
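The SGDM and NAG updates, and the shifted form of NAG that avoids evaluating the gradient at a look-ahead point, can be sketched as follows. Here `lam` is the momentum coefficient and `lr` the learning rate; these names, their values, and the toy loss $L(\theta) = \theta^2$ are assumptions of the example.

```python
def sgdm_step(theta, m, grad_fn, lr=0.1, lam=0.9):
    """SGDM: gradient evaluated at the current parameters."""
    m = lam * m + lr * grad_fn(theta)
    return theta - m, m

def nag_step(theta, m, grad_fn, lr=0.1, lam=0.9):
    """NAG: gradient evaluated at the look-ahead point theta - lam * m."""
    m = lam * m + lr * grad_fn(theta - lam * m)
    return theta - m, m

def nag_step_shifted(theta_p, m, grad_fn, lr=0.1, lam=0.9):
    """NAG rewritten in terms of theta' = theta - lam * m.

    The gradient is now taken at the stored variable itself, so no extra
    copy of the parameters is needed.
    """
    g = grad_fn(theta_p)
    m_new = lam * m + lr * g
    return theta_p - lam * m_new - lr * g, m_new

grad = lambda th: 2.0 * th          # gradient of the toy loss theta^2

theta, m = 1.0, 0.0                 # original NAG variables
theta_p, m_p = 1.0, 0.0             # shifted variables (theta' = theta when m = 0)
for _ in range(20):
    theta, m = nag_step(theta, m, grad)
    theta_p, m_p = nag_step_shifted(theta_p, m_p, grad)

# The two forms track each other: theta' equals theta - lam * m at every step.
print(theta_p, theta - 0.9 * m)
```

The final line prints two numbers that agree up to floating-point error, which is the equivalence the derivation establishes.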

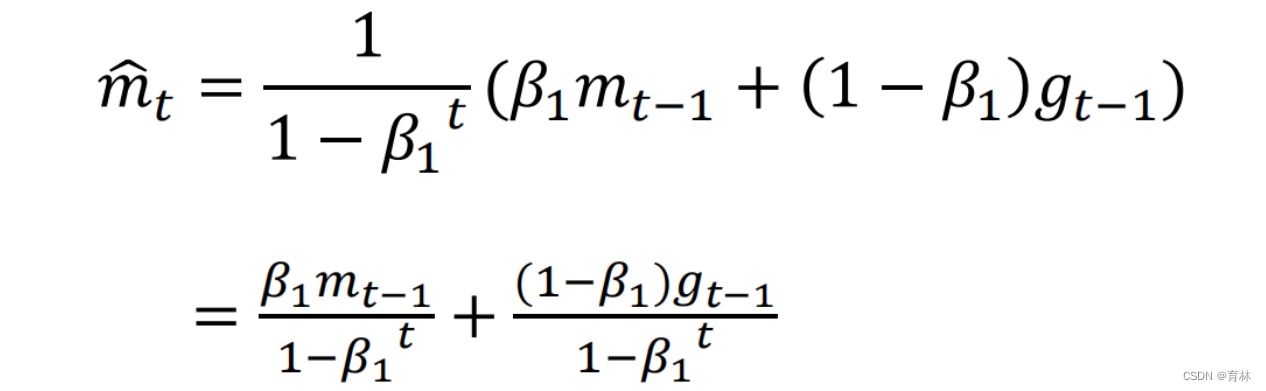

Nadam [Dozat, ICLR workshop’16]

5. Optimizer

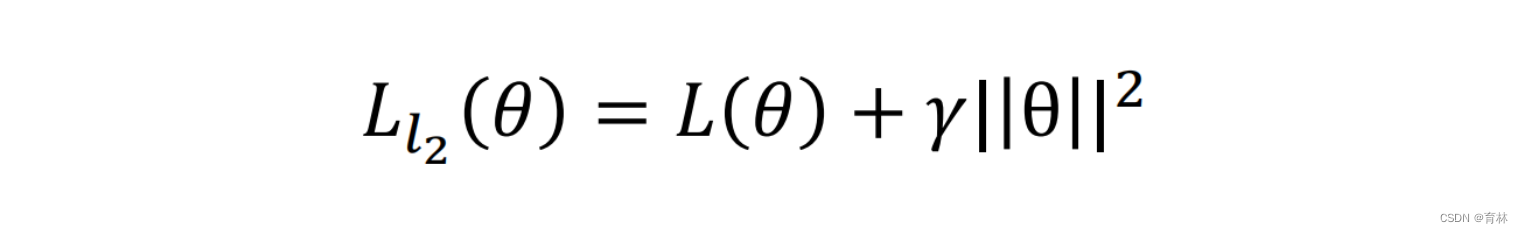

L2

AdamW & SGDW with momentum

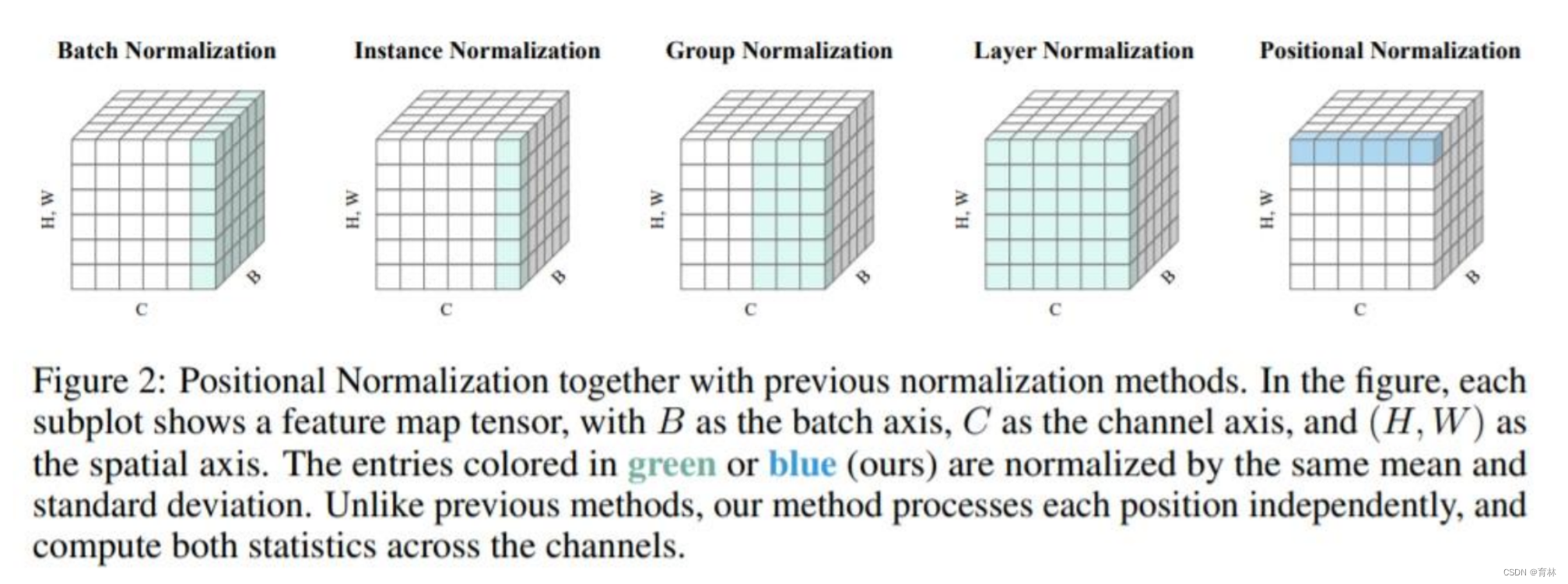
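The difference between L2 regularization and decoupled weight decay is easiest to see in SGD with momentum. The sketch below is an illustration under stated assumptions: `wd` is a hypothetical weight-decay coefficient, `lam` the momentum coefficient, and scaling the decoupled decay by `lr` is a choice made for this example.

```python
def sgdm_l2_step(theta, m, grad, lr=0.1, lam=0.9, wd=0.01):
    """L2 regularization: the penalty gradient wd * theta enters the momentum."""
    g = grad + wd * theta
    m = lam * m + lr * g
    return theta - m, m

def sgdw_step(theta, m, grad, lr=0.1, lam=0.9, wd=0.01):
    """Decoupled weight decay: momentum sees only the loss gradient."""
    m = lam * m + lr * grad
    return theta - m - lr * wd * theta, m

# With a zero loss gradient, only the regularizer moves the parameter.
th_l2, m_l2 = 1.0, 0.0
th_w, m_w = 1.0, 0.0
for step in range(2):
    th_l2, m_l2 = sgdm_l2_step(th_l2, m_l2, grad=0.0)
    th_w, m_w = sgdw_step(th_w, m_w, grad=0.0)
    print(step, th_l2, m_l2, th_w, m_w)
```

Under L2 regularization the decay accumulates in the momentum buffer; with decoupled decay the buffer stays at zero here. In Adam the gap is larger, because the L2 term would also be rescaled by the adaptive denominator while decoupled decay is not.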

Something helps optimization

Normalization

Summary

Advice: