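Quickly Building a k8s Cluster from 0 to 1 (One Master, Multiple Workers)

Preface

Kubernetes is a general-purpose container orchestration tool. It handles the relationships between containers automatically, according to the user's intent and the rules of the overall system, and it provides the basic building blocks for constructing distributed systems on top of containers. Using Kubernetes 1.15.3 as the example, this article walks through building a complete Kubernetes cluster from scratch. The cluster is set up with kubeadm, and the application (worker) nodes are highly available.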

(一)服务器准备

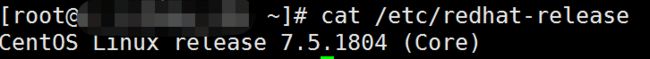

1. Linux版本:CentOS 7.5

通过 cat /etc/redhat-release 列出版本信息。

2. 准备三个节点

需要一个主节点,至少两个从节点。

3. 主节点的配置要求

需要有2个CPU,至少2G内存。

4.k8s版本

本次k8s部署的版本为1.15.3。
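The minimums above can be checked before going any further. The following is a minimal sketch and not part of the original procedure: the function names are hypothetical, and the default threshold of 1700 MB is an assumption, chosen because a machine sold as 2 GB usually reports somewhat less usable memory.

```shell
# Hypothetical helper (not from the original article): succeed only if the
# given CPU count and memory size in MB meet the master node minimums.
# The 1700 MB default is an assumption; pass a third argument to change it.
meets_master_requirements() {
  local cpus="$1" mem_mb="$2" min_mem_mb="${3:-1700}"
  [ "$cpus" -ge 2 ] && [ "$mem_mb" -ge "$min_mem_mb" ]
}

# Print this machine's CPU count and total memory in MB (Linux only).
local_resources() {
  local cpus mem_mb
  cpus="$(nproc)"
  mem_mb="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)"
  echo "$cpus $mem_mb"
}
```

On a candidate master node it could be used as meets_master_requirements $(local_resources) && echo "ok for a master node".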

(2) Environment pre-installation (required on all nodes)

1. Switch the yum repository

<1> Create a file named Centos-7.repo with the following content:

# CentOS-Base.repo

#

# The mirror system uses the connecting IP address of the client and the

# update status of each mirror to pick mirrors that are updated to and

# geographically close to the client. You should use this for CentOS updates

# unless you are manually picking other mirrors.

#

# If the mirrorlist= does not work for you, as a fall back you can try the

# remarked out baseurl= line instead.

#

#

[base]

name=CentOS-$releasever - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

<2> 将 Centos-7.repo 放到 /etc/yum.repos.d/ 里面去。

2. 关闭swap分区

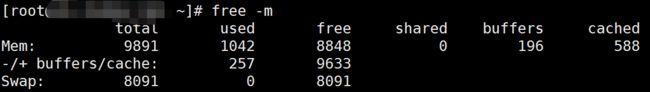

<1> 查看分区情况

通过 free -m 查看分区情况,此时分区已打开;

<2> 关闭分区

vim /etc/fstab,将swap的那一行注释(用#号注释)。

保存,退出。

<3> 重启

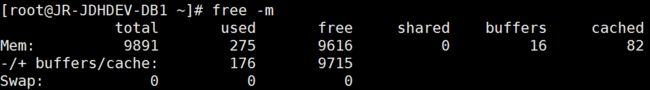

通过reboot重启服务器,最后用free -m验证。

此时swap都为0,证明swap分区已关闭。

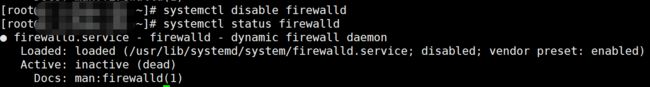
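The manual edit of /etc/fstab described above can also be scripted. The following is a minimal sketch under stated assumptions: the function name comment_out_swap is hypothetical, GNU sed is assumed, and the file path is a parameter so that the edit can be tried on a copy first.

```shell
# Hypothetical helper (not from the original article): comment out every
# active swap entry in an fstab-style file. Lines that are already comments
# are left untouched. Assumes GNU sed (for -i without a suffix).
comment_out_swap() {
  local fstab="$1"
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

For example, cp /etc/fstab /tmp/fstab.test followed by comment_out_swap /tmp/fstab.test shows the result without touching the real file. Running swapoff -a turns swap off for the current boot, so the fstab change is what keeps it off after a reboot.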

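3. Disable the firewall

Run systemctl stop firewalld and systemctl disable firewalld to stop the firewall and keep it from starting at boot, then check its state with systemctl status firewalld.

4. Change the hostname

Run hostname XXX.

For example, hostname zz-node-1 sets the hostname to zz-node-1. This setting does not survive a reboot; hostnamectl set-hostname zz-node-1 makes it permanent.

5. Synchronize the time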

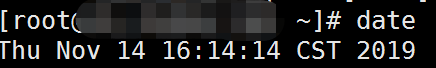

Run ntpdate 0.asia.pool.ntp.org to synchronize the time. When it finishes, check the time with date.

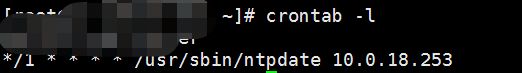

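Note: if a time synchronization service is available, the nodes can sync with it instead. The steps are as follows (assuming the time server address is 10.0.18.253):

Run crontab -e, then enter the following line and save:

*/1 * * * * /usr/sbin/ntpdate 10.0.18.253

Run crontab -l to confirm that the entry is in place.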
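Because this step is repeated on every node, the cron entry can be added non-interactively instead of through crontab -e. The following is a minimal sketch: the function name ensure_cron_line is hypothetical, and it works on a plain file holding the crontab text so that running it twice does not duplicate the entry.

```shell
# Hypothetical helper (not from the original article): append a line to a
# crontab text file only if that exact line is not already present.
ensure_cron_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" || echo "$line" >> "$file"
}
```

One possible way to use it is to dump the current crontab with crontab -l > /tmp/cron.txt, call ensure_cron_line /tmp/cron.txt '*/1 * * * * /usr/sbin/ntpdate 10.0.18.253', and load the result back with crontab /tmp/cron.txt.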

6. Install docker

Reference: https://blog.csdn.net/li1325169021/article/details/90780627

7. Install kubeadm

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kube*

EOF

# Set SELinux to permissive mode (effectively disabling it)

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

yum install -y kubelet-1.15.3 kubeadm-1.15.3 kubectl-1.15.3 --disableexcludes=kubernetes

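systemctl enable kubelet && systemctl start kubelet

8. Pull the k8s images

This article uses Kubernetes 1.15.3. The installation needs to pull the required images from the k8s.gcr.io registry, but that registry is not reachable from every network, so pulls from k8s.gcr.io may fail. A workaround is to pull the images manually from a registry that is reachable and then retag them.

<1> Pull the k8s images from the docker.io registry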

docker pull mirrorgooglecontainers/kube-apiserver-amd64:v1.15.3

docker pull mirrorgooglecontainers/kube-controller-manager-amd64:v1.15.3

docker pull mirrorgooglecontainers/kube-scheduler-amd64:v1.15.3

docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.15.3

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd-amd64:3.3.10

docker pull coredns/coredns:1.3.1

The versions above can be changed to suit the deployment.

<2> Retag the images with docker tag

docker tag docker.io/mirrorgooglecontainers/kube-proxy-amd64:v1.15.3 k8s.gcr.io/kube-proxy:v1.15.3

docker tag docker.io/mirrorgooglecontainers/kube-scheduler-amd64:v1.15.3 k8s.gcr.io/kube-scheduler:v1.15.3

docker tag docker.io/mirrorgooglecontainers/kube-apiserver-amd64:v1.15.3 k8s.gcr.io/kube-apiserver:v1.15.3

docker tag docker.io/mirrorgooglecontainers/kube-controller-manager-amd64:v1.15.3 k8s.gcr.io/kube-controller-manager:v1.15.3

docker tag docker.io/mirrorgooglecontainers/etcd-amd64:3.3.10 k8s.gcr.io/etcd:3.3.10

docker tag docker.io/mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
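The four control plane images share one version and one naming pattern, so their pull and tag commands can be generated in a loop. The following is a minimal sketch: the function name print_retag_commands is hypothetical, and it only prints the commands so they can be reviewed before anything is run.

```shell
# Hypothetical helper (not from the original article): print the docker pull
# and docker tag commands for the control plane images of one version.
# Nothing is executed here; the output is plain text.
print_retag_commands() {
  local version="$1"
  local mirror="mirrorgooglecontainers" target="k8s.gcr.io"
  local name
  for name in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    echo "docker pull ${mirror}/${name}-amd64:${version}"
    echo "docker tag ${mirror}/${name}-amd64:${version} ${target}/${name}:${version}"
  done
}
```

Calling print_retag_commands v1.15.3 lists the eight commands, and piping that output to sh runs them. The pause, etcd, and coredns images carry their own version numbers, so they are still handled individually as listed above.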

(三)安装k8s集群

- 安装主节点

1. 初始化k8s集群

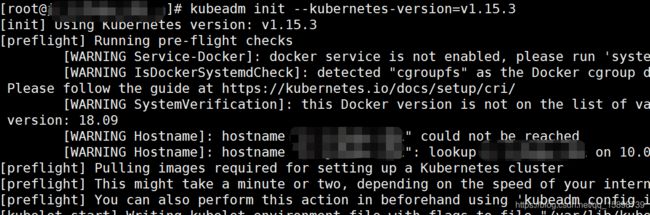

<1> 通过 kubeadm init 命令初始化k8s集群

kubeadm init --kubernetes-version=v1.15.3初始化成功的结果如下所示:

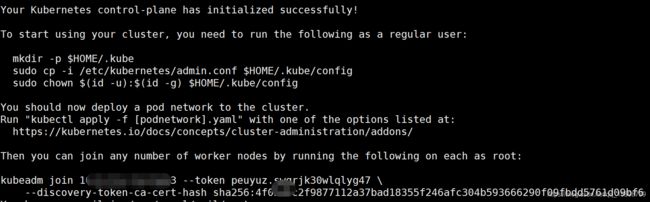

<2> 按照初始化完成提示,初始化配置

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config并保存 kubeadm join 的提示信息:

kubeadm join 10.0.112.63:6443 --token peuyuz.swqrjk30wlqlyg47 \

--discovery-token-ca-cert-hash sha256:4f05d1c2f9877112a37bad18355f246afc304b593666290f09fbdd5761d09bf62. 部署网络插件

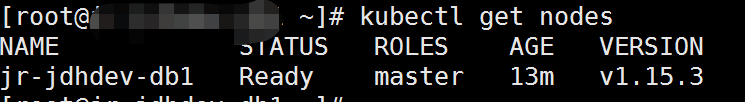

kubectl apply -f https://git.io/weave-kube-1.63. 通过 kubectl get nodes 查看主节点状态

可以看到STATUS状态为Ready,证明k8s集群初始化成功。

- 安装从节点

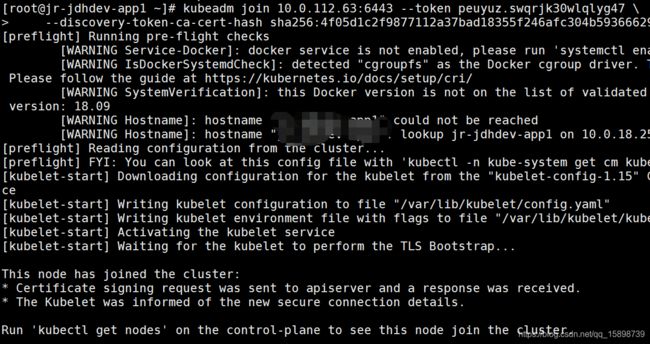

1. 加入k8s集群

执行上面保存的kubeadm join。

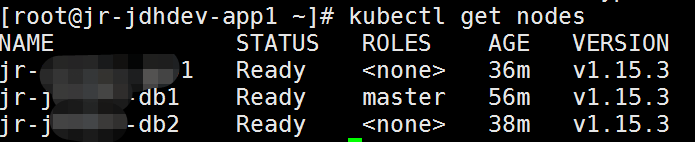

2. 通过 kubectl get nodes 查看节点状态

如果STATUS状态都为Ready,证明集群安装成功
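The Ready check above can be automated by reading the kubectl get nodes table. The following is a minimal sketch: the function name all_nodes_ready is hypothetical, and it assumes the default table layout in which STATUS is the second column.

```shell
# Hypothetical helper (not from the original article): read the output of
# "kubectl get nodes" on stdin and succeed only if at least one node is
# listed and the STATUS column of every node is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { seen = 1; if ($2 != "Ready") bad = 1 }
       END { exit (seen && !bad) ? 0 : 1 }'
}
```

A possible use is kubectl get nodes | all_nodes_ready && echo "cluster ready". A node shown as NotReady, or as Ready,SchedulingDisabled, makes the check fail.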

(四)部署Rook插件

插件下载地址:https://github.com/rook/rook/tree/release-1.0

拷贝cluster/examples/kubernetes/ceph下的common.yaml,operator.yaml,cluster.yaml到主节点上,然后执行如下命令:

kubectl apply -f common.yaml

kubectl apply -f operator.yaml

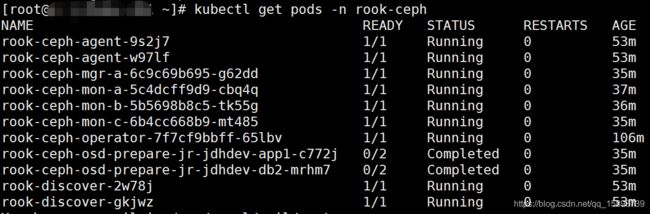

kubectl apply -f cluster.yaml通过 kubectl get pods -n rook-ceph 查看部署情况:

如图所示,证明部署成功。
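Instead of reading the pod list by eye, the pods that are not yet healthy can be filtered out of the table. The following is a minimal sketch: the function name list_unhealthy_pods is hypothetical, and it assumes the default kubectl get pods layout in which STATUS is the third column.

```shell
# Hypothetical helper (not from the original article): read the output of
# "kubectl get pods" on stdin and print the name of every pod whose STATUS
# is neither "Running" nor "Completed".
list_unhealthy_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

Running kubectl get pods -n rook-ceph | list_unhealthy_pods prints nothing once all pods have settled.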

(5) Deploy the Dashboard

1. Pull the image

Pull the kubernetes-dashboard (1.8.3) image.

docker pull docker.io/siriuszg/kubernetes-dashboard-amd64:v1.8.3

2. Create the kubernetes-dashboard.yaml file

Create kubernetes-dashboard.yaml with the following content:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Configuration to deploy release version of the Dashboard UI compatible with
# Kubernetes 1.8.
#
# Example usage: kubectl create -f

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: docker.io/siriuszg/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:

    k8s-app: kubernetes-dashboard

3. Run kubectl apply

Use kubectl apply to create the pod object.

kubectl apply -f kubernetes-dashboard.yaml

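4. Configure the proxy

<1> Start the proxy with kubectl proxy

nohup kubectl proxy --address='0.0.0.0' --accept-hosts='^*$' &

Here nohup keeps the process running after the terminal session ends, and the trailing & runs it in the background.

<2> Verify

Open the following URL to verify:

http://<master-node-IP>:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

When this page appears, the dashboard has started successfully.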
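The verification URL follows a fixed pattern, so it can be built from the master node IP and probed from a script. The following is a minimal sketch: the function name dashboard_url is hypothetical, and the default port 8001 is the kubectl proxy default used above.

```shell
# Hypothetical helper (not from the original article): build the dashboard
# URL served through kubectl proxy for a given master node IP.
dashboard_url() {
  local master_ip="$1" port="${2:-8001}"
  echo "http://${master_ip}:${port}/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy"
}
```

For instance, curl -s -o /dev/null -w '%{http_code}\n' "$(dashboard_url 10.0.112.63)" prints the HTTP status code returned by the proxy. Note that --address='0.0.0.0' together with --accept-hosts='^*$' lets any host that can reach port 8001 use the proxy, so this setup suits a trusted test network only.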

5. Configure permissions

<1> Create the dashboard-admin.yml file

Open dashboard-admin.yml with vim, enter the following content, and save:

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

<2> Run kubectl apply

Use kubectl apply to create the object.

kubectl apply -f dashboard-admin.yml

<3> Verify

Click Skip on the login page.

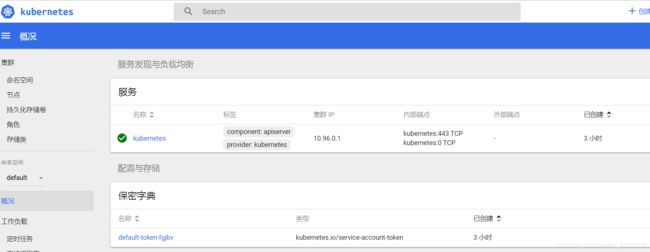

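The following page appears, and the dashboard now displays correctly.

Summary

This article covered how to build a k8s cluster from 0 to 1. Starting with the next installment, I will work through the features of k8s one by one in practice, to pave the way for readers deploying k8s in production. Stay tuned.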

References

1. Matching docker and k8s versions

https://blog.csdn.net/cdbdqn001/article/details/88183777

https://blog.csdn.net/CSDN_duomaomao/article/details/79171027

2. How to fix image pull failures from k8s.gcr.io

https://blog.csdn.net/jinguangliu/article/details/82792617

3. curl : Depends: libcurl3-gnutls (= 7.47.0-1ubuntu2.12) but 7.58.0-2ubuntu3.6 is to be installed

https://blog.csdn.net/wanttifa/article/details/88965082

4. gpg: no valid OpenPGP data found

https://blog.csdn.net/wiborgite/article/details/52840371

5. Kubernetes kubectl error: The connection to the server localhost:8080 was refused - did you specify the right host or port?

https://www.jianshu.com/p/6fa06b9bbf6a