python共词矩阵分析结果一步到位

import os

import re

import pandas as pd

from PyPDF2 import PdfFileReader

import string

import yake

if __name__ == '__main__':

# 运行第一部分代码

pdf_files_path = 'C:/Users/win10/Documents/美国智库/pdf_files'

# 定义一个函数,用于读取PDF文件并将其转化成文本

def read_pdf(filename):

filepath = os.path.join(pdf_files_path, filename)

with open(filepath, 'rb') as f:

pdf = PdfFileReader(f)

text = ''

for i in range(pdf.getNumPages()):

page = pdf.getPage(i)

text += page.extractText()

return text

# 读取Excel文件

df = pd.read_excel(r'C:\Users\win10\Desktop\2022.xlsx')

# 循环遍历每行数据

for index, row in df.iterrows():

# 判断是否有PDF文件名

if pd.notna(row['pdf_filename']):

try:

# 尝试读取PDF文件并识别成文字

text = read_pdf(row['pdf_filename'])

except:

# 读取失败则跳过

continue

# 替换art_content列的值为PDF中的文字

df.at[index, 'art_content'] = text

# 对art_content列进行分词

stop_words = ["d"]

def phrase_extract(text):

text = text.lower()

custom_kw_extractor = yake.KeywordExtractor(top=10, lan="en")

keywords = custom_kw_extractor.extract_keywords(text)

phrase_list = []

for keyword, score in keywords:

if len(keyword.split(' ')) > 1:

phrase_list.append(keyword.lower())

phrases_list = []

for phrase in phrase_list:

for i in range(0, len(text.split(phrase)) - 1):

phrases_list.append(phrase)

return phrases_list

def segment(text):

phrases_list = phrase_extract(text)

# word_tokens = nltk.tokenize.word_tokenize(text.strip())

word_list = []

for i in text.split(' '):

word_list.append(i)

table = str.maketrans('', '', string.punctuation)

tokens = [w.translate(table) for w in word_list]

# tokens = [w.translate(table) for w in word_tokens]

tokens = [word for word in tokens if word.isalpha()]

tokens = [w for w in tokens if not w in stop_words]

tokens = [word for word in tokens if len(word) > 1]

tokens = list(set(tokens+phrases_list))

return tokens

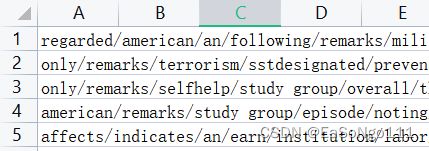

df['col'] = df['art_content'].apply(lambda x: '/'.join(segment(x)).lower())

df['col'].to_excel(r'C:\Users\win10\Desktop\结果P1.xlsx', index=False, header=False)

# -*- coding: utf-8 -*-

import numpy as np

import time

from pprint import pprint as p

import pandas as pd

def log(func):

def wrapper(*args, **kwargs):

now_time = str(time.strftime('%Y-%m-%d %X', time.localtime()))

print('------------------------------------------------')

print('%s func [%s] called' % (now_time, func.__name__))

print('# %s' % func.__doc__)

print('%s returns:' % func.__name__)

re = func(*args, **kwargs)

p(re)

return re

return wrapper

def readxls(path):

import xlrd

xl = xlrd.open_workbook(path)

sheet = xl.sheets()[0]

data = []

for i in range(0, sheet.ncols):

data.append(list(sheet.col_values(i)))

return (data[0])

@log

def get_set_key(data, threshold=2):

'''选取频数大于等于Threshold的关键词构建一个集合,用于作为共现矩阵的首行和首列'''

all_key = '/'.join(data)

key_list = [it.strip() for it in all_key.strip().split('/')]

keys = set(key_list)

dic = dict(zip(keys, [key_list.count(k) for k in keys]))

wf = {k: v for k, v in dic.items() if k != '' and v >= threshold}

set_key_list = []

for a in sorted(wf.items(), key=lambda item: item[1], reverse=True):

set_key_list.append(a[0])

return set_key_list

@log

def format_data(data, set_key_list):

'''格式化需要计算的数据,将原始数据格式转换成二维数组'''

formated_data = []

for ech in data:

ech_line = str(ech).split('/')

temp = [] # 筛选出format_data中属于关键词集合的词

for e in ech_line:

if e in set_key_list:

temp.append(e)

ech_line = temp

ech_line = list(set(filter(lambda x: x != '', ech_line))) # set去掉重复数据

formated_data.append(ech_line)

return formated_data

# @log

def build_matirx(set_key_list):

'''建立矩阵,矩阵的高度和宽度为关键词集合的长度+1'''

edge = len(set_key_list) + 1

# matrix = np.zeros((edge, edge), dtype=str)

matrix = [[0 for j in range(edge)] for i in range(edge)]

return matrix

@log

def init_matrix(matrix, set_key_list):

'''初始化矩阵,将关键词集合赋值给第一列和第二列'''

matrix[0][1:] = np.array(set_key_list)

matrix = list(map(list, zip(*matrix)))

matrix[0][1:] = np.array(set_key_list)

return matrix

@log

def count_matrix(matrix, formated_data):

'''计算各个关键词共现次数'''

keywordlist = matrix[0][1:] # 列出所有关键词

appeardict = {} # 每个关键词与 [出现在的行(formated_data)的list] 组成的dictionary

for w in keywordlist:

appearlist = []

i = 0

for each_line in formated_data:

if w in each_line:

appearlist.append(i)

i += 1

appeardict[w] = appearlist

for row in range(1, len(matrix)):

# 遍历矩阵第一行,跳过下标为0的元素

for col in range(1, len(matrix)):

# 遍历矩阵第一列,跳过下标为0的元素

# 实际上就是为了跳过matrix中下标为[0][0]的元素,因为[0][0]为空,不为关键词

if col >= row:

# 仅计算上半个矩阵

if matrix[0][row] == matrix[col][0]:

# 如果取出的行关键词和取出的列关键词相同,则其对应的共现次数为0,即矩阵对角线为0

matrix[col][row] = 0

else:

counter = len(set(appeardict[matrix[0][row]]) & set(appeardict[matrix[col][0]]))

matrix[col][row] = counter

else:

matrix[col][row] = matrix[row][col]

return matrix

def main():

keyword_path = r'C:\Users\win10\Desktop\结果P1.xlsx'

output_path = r'C:\Users\win10\Desktop\结果P2.xlsx'

data = readxls(keyword_path)

set_key_list = get_set_key(data)

formated_data = format_data(data, set_key_list)

matrix = build_matirx(set_key_list)

matrix = init_matrix(matrix, set_key_list)

result_matrix = count_matrix(matrix, formated_data)

print(result_matrix)

pd.DataFrame(result_matrix).to_excel(output_path,index=False)

# np.savetxt(output_path, result_matrix, fmt=('%s,' * len(matrix))[:-1])

if __name__ == '__main__':

main()

import pandas as pd

if __name__ == '__main__':

# 读取 Excel 文件并将每个单元格转换为整数类型

df = pd.read_excel(r'C:\Users\win10\Desktop\结果P2.xlsx', index_col=0)

# df = df.astype(int)

# 定义一个空字典用于存储词组搭配及其出现次数

co_occurrence_dict = {}

# 遍历共现矩阵中每个单元格,并将词组搭配及其出现次数存储到字典中

for i in range(df.shape[0]):

for j in range(i, df.shape[1]): # 只遍历矩阵对角线以上的所有元素

# 获取当前单元格的值

count = df.iloc[i, j]

# 获取当前单元格所对应的行和列的单词

word1 = df.index[i]

word2 = df.columns[j]

# 构造词组搭配

collocation = (word1, word2)

# 更新字典中的词组搭配及其出现次数

if collocation in co_occurrence_dict:

co_occurrence_dict[collocation] += count

else:

co_occurrence_dict[collocation] = count

# 将词组搭配及其出现次数转换为 DataFrame 类型

result = pd.DataFrame([(collocation[0], collocation[1], count) for collocation, count in co_occurrence_dict.items()], columns=["Word1", "Word2", "Count"])

# 将 DataFrame 写入到新的 Excel 表中

result.to_excel(r'C:\Users\win10\Desktop\结果P3.xlsx', index=False)

每个函数的作用:

- read_pdf():读取 PDF 文件并将其转换成文本,返回字符串类型的文本。

- phrase_extract():利用 YAKE 模型提取出文本中的关键词短语。默认提取前 10 个得分最高的关键短语,返回一个列表。

- segment():将原始文本分成单词和短语,返回一个列表。

- get_set_key():将所有输入的文本中出现频数大于等于 threshold 的单词构建成一个集合,用于作为共现矩阵的首行和首列。返回一个列表。

- format_data():将原始数据格式转换成二维数组。返回一个列表。

- build_matrix():建立矩阵,矩阵的高度和宽度为关键词集合的长度+1。返回一个二维列表。

- init_matrix():初始化矩阵,将关键词集合赋值给第一列和第二列。返回一个二维列表。

- count_matrix():计算各个关键词共现次数,生成一个共现矩阵,返回一个二维列表。

- main():主函数,将以上函数调用整合在一起,并将结果写入 Excel 文件。

- readxls():读取 Excel 文件数据并返回一个列表,每个列表项为单元格中的字符串。

- log():一个装饰器函数,用于记录函数调用情况并输出结果。

- pd.read_excel():读取 Excel 文件,返回一个 Pandas DataFrame。

- np.array():将输入转换为 NumPy 数组。

- np.zeros():生成一个全 0 矩阵。

- map():对序列中的每个元素都执行相同的操作,返回一个可迭代对象。

- set():创建一个无序不重复元素集合。

- dict():创建一个字典。

- filter():过滤掉不符合规则的元素,返回一个可迭代对象。

- list():将输入转换为列表类型。

- zip():将多个序列压缩成一个元组列表。

- sorted():对列表进行排序。

- pd.DataFrame():将输入转换为 Pandas DataFrame 类型。

- to_excel():将数据保存为 Excel 格式。

思路过程:

1.首先,使用 PyPDF2 库读取 PDF 文件并将其转化为文本。

2.读取 Excel 文件,并循环遍历每行数据,尝试读取 PDF 文件并识别成文字,并将 art_content 列的值替换为 PDF 中的文字。

3.对 art_content 列进行分词处理,使用 yake 库提取关键词,并将处理结果写入到结果P1.xlsx文件中。

4.读取 P1 文件中的关键词,并将其转换为矩阵。

5.定义词组搭配的字典 co_occurrence_dict,遍历共现矩阵中每个单元格,并将词组搭配及其出现次数存储到字典中。

6.将得到的词组搭配及其出现次数转换为 DataFrame 类型

结果