A Simple k8s Setup

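Preface

I have been learning k8s recently and, following various online tutorials, built a simple cluster with one master node and two worker nodes. I am recording the steps here so I do not forget them.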

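Steps

- Prepare the environment

- Use Oracle VM VirtualBox to create three virtual machines: one master node and two worker nodes. Each runs CentOS 7.9 with 2 GB of memory, 2 CPU cores, and a 10 GB disk.

- Use bridged networking and assign the following static IPs: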

| Node | IP |
|---|---|
| master | 192.168.1.41 |
| node1 | 192.168.1.42 |
| node2 | 192.168.1.43 |

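- System initialization (run on all nodes)

- Rename each host and update the hosts file

This step is required: if two machines share the same hostname, joining the worker nodes to the master later fails with an error.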

[root@localhost ~] cat >> /etc/hosts << EOF
> 192.168.1.41 k8s-master
> 192.168.1.42 k8s-node1
> 192.168.1.43 k8s-node2
> EOF
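The hosts entries only map names to addresses; each machine's own hostname still has to be changed. A minimal sketch, assuming the names k8s-master, k8s-node1, and k8s-node2 from the hosts file. `hostname_for_ip` is a hypothetical helper, not part of any tool:

```shell
# Hypothetical helper: map a node IP to the hostname listed in /etc/hosts.
hostname_for_ip() {
  case "$1" in
    192.168.1.41) echo "k8s-master" ;;
    192.168.1.42) echo "k8s-node1" ;;
    192.168.1.43) echo "k8s-node2" ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
}

# Usage on each node (as root), for example on 192.168.1.42:
#   hostnamectl set-hostname "$(hostname_for_ip 192.168.1.42)"
```

A new login shell is needed before the prompt shows the new name.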

- Adjust kernel parameters

This step is required; skipping it causes errors later.

[root@localhost ~] cat > /etc/sysctl.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_nonlocal_bind = 1

> net.ipv4.ip_forward = 1

> vm.swappiness=0

> EOF

[root@localhost ~] sysctl -p # apply the parameters to the running kernel
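The two net.bridge.* keys only exist once the `br_netfilter` kernel module is loaded; without it, `sysctl -p` usually reports that the key cannot be found. The sketch below writes the same settings to drop-in files instead of overwriting /etc/sysctl.conf. The file name `k8s.conf` and the helper `write_k8s_kernel_config` are my own assumptions:

```shell
# Write module and sysctl drop-in files below a root directory (default: /).
# Passing a scratch directory lets you inspect the result first.
write_k8s_kernel_config() {
  _root="${1:-}"
  mkdir -p "$_root/etc/modules-load.d" "$_root/etc/sysctl.d"
  # Load br_netfilter at boot so the net.bridge.* keys exist.
  echo "br_netfilter" > "$_root/etc/modules-load.d/k8s.conf"
  cat > "$_root/etc/sysctl.d/k8s.conf" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
}

# On a real node (as root):
#   write_k8s_kernel_config
#   modprobe br_netfilter   # load the module now
#   sysctl --system         # apply all sysctl drop-in files
```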

- Disable the firewall

[root@localhost ~] systemctl stop firewalld

[root@localhost ~] systemctl disable firewalld

- Disable SELinux

[root@localhost ~] sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent; takes effect after a reboot
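The `sed` edit only changes the boot-time setting. As a sketch, the function below applies a stricter variant of the same edit, anchored to the `SELINUX=` line so that comment lines containing the word "enforcing" stay untouched. `disable_selinux_config` is a hypothetical helper name:

```shell
# Set SELINUX=disabled in the given config file (default: /etc/selinux/config).
disable_selinux_config() {
  sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' "${1:-/etc/selinux/config}"
}

# On a real node (as root):
#   disable_selinux_config
#   setenforce 0   # switch to permissive mode right away, no reboot needed
#   getenforce     # print the current mode
```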

- Disable swap

vi /etc/fstab

# comment out the following entry

/dev/mapper/centos-swap swap
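Editing /etc/fstab only affects the next boot, so swap also needs to be turned off for the running system. A sketch that does the fstab edit non-interactively. `comment_out_swap` is a hypothetical helper, and the pattern assumes whitespace-separated fstab fields with a standalone `swap` field:

```shell
# Prefix every uncommented fstab line that has a standalone "swap" field with '#'.
comment_out_swap() {
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "${1:-/etc/fstab}"
}

# On a real node (as root):
#   swapoff -a         # turn swap off right now
#   comment_out_swap   # keep it off after a reboot
#   free -m            # the Swap line should now show 0
```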

- Synchronize time

[root@localhost ~] yum install ntpdate -y

[root@localhost ~] ntpdate time.windows.com

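- Install Docker (run on all nodes)

For the Kubernetes version used here (1.23), the default container runtime is Docker, through the built-in dockershim that was removed in 1.24. Make sure the Docker version chosen here is compatible with the k8s version installed later.

- Install a specific Docker version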

[root@localhost ~] wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@localhost ~] yum -y install docker-ce-20.10.7-3.el7

[root@localhost ~] systemctl enable docker && systemctl start docker

[root@localhost ~] docker --version

- Configure a Docker registry mirror

If daemon.json does not exist, create it.

[root@localhost ~] cat > /etc/docker/daemon.json << EOF

> {

> "exec-opts": ["native.cgroupdriver=systemd"],

> "registry-mirrors": ["https://kn0t2bca.mirror.aliyuncs.com"]

> }

> EOF
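Docker reads daemon.json only at startup, so the service has to be restarted after the file is written. The systemd cgroup driver matters because a kubelet set up by kubeadm 1.22 or later uses systemd by default, and a mismatch keeps kubelet from running. A sketch, where `uses_systemd_cgroup` is a hypothetical helper that simply greps the file:

```shell
# Succeed if the given daemon.json requests the systemd cgroup driver.
uses_systemd_cgroup() {
  grep -q 'native.cgroupdriver=systemd' "${1:-/etc/docker/daemon.json}"
}

# On a real node (as root):
#   uses_systemd_cgroup && systemctl daemon-reload && systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # should report systemd
```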

- Switch the k8s yum repository to a mirror in China

[root@localhost ~] cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

- Install kubeadm, kubelet, and kubectl (run on all nodes)

[root@localhost ~] yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

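[root@localhost ~] systemctl enable kubelet # start kubelet at boot

Note: at this point the kubelet service on the master cannot start, because its configuration file /var/lib/kubelet/config.yaml does not exist yet. kubeadm init writes this file in its [kubelet-start] phase and then starts kubelet itself, so there is no need to start it manually.

The kubelet on the worker nodes cannot start either; its log shows the same missing /var/lib/kubelet/config.yaml, which is written during the [kubelet-start] phase of kubeadm join.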

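- Deploy the K8s master (run on the master node)

- Initialize the master node

When typing the command, make sure nothing follows each trailing "\" (no spaces and no comments), otherwise the line continuation breaks.

[root@localhost ~] kubeadm init \
> --apiserver-advertise-address=192.168.1.41 \
> --image-repository registry.aliyuncs.com/google_containers \
> --kubernetes-version v1.23.6 \
> --service-cidr=10.96.0.0/12 \
> --pod-network-cidr=10.244.0.0/16

What each option does:

- --apiserver-advertise-address: the address the cluster advertises (the master machine's IP)
- --image-repository: the default registry k8s.gcr.io is not reachable from mainland China, so the Alibaba Cloud mirror is used instead
- --kubernetes-version: the K8s version, matching the packages installed above
- --service-cidr: the cluster-internal virtual network used by Services, the unified entry point for reaching Pods
- --pod-network-cidr: the Pod network, which must match the CNI network manifest deployed below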

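After initialization, the end of the output contains a command like the one below, which is meant to be run on the worker nodes. The default token is valid for 24 hours; once it expires it can no longer be used and a new token has to be created.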

[root@localhost ~] kubeadm join 192.168.1.41:6443 --token ih48s6.x1mjtokujdyt9ysu \

> --discovery-token-ca-cert-hash sha256:392750ed054b8288000d3969e8fdf47cc665c4bf8f8f025a255a457f5ec74814
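If the token has expired, a fresh join command can be printed on the master with `kubeadm token create --print-join-command`. The sketch below adds a small sanity check for pasted tokens. `is_bootstrap_token` is a hypothetical helper based on the kubeadm token format of six characters, a dot, and sixteen characters (lowercase letters and digits):

```shell
# Succeed if the argument looks like a kubeadm bootstrap token.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# On the master:
#   kubeadm token list                          # existing tokens and their expiry
#   kubeadm token create --print-join-command   # new token plus the full join command
```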

- Set up kubectl by creating the files it needs

[root@localhost ~] mkdir -p $HOME/.kube

[root@localhost ~] sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@localhost ~] sudo chown $(id -u):$(id -g) $HOME/.kube/config

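- Deploy the container network (CNI)

The k8s network plugin lets Pods on different hosts communicate. Download kube-flannel.yaml and make sure the Pod network it defines (the Network field in net-conf.json) matches the --pod-network-cidr passed to kubeadm init earlier. The file is reproduced here: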

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
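The Network value in net-conf.json has to equal the --pod-network-cidr passed to kubeadm init. A sketch that pulls the value out of the manifest so the two can be compared before applying it. `flannel_network` is a hypothetical helper and assumes the `"Network": "..."` line format used in the manifest:

```shell
# Print the Network CIDR found in a flannel manifest (default: kube-flannel.yaml).
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "${1:-kube-flannel.yaml}"
}

# Example check before applying the manifest:
#   [ "$(flannel_network kube-flannel.yaml)" = "10.244.0.0/16" ] && echo "CIDR matches"
```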

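Deploy flannel

[root@localhost ~] kubectl apply -f kube-flannel.yaml

Check node status

[root@localhost ~] kubectl get nodes

Check pod status (the flannel pods themselves run in the kube-flannel namespace defined in the manifest)

[root@localhost ~] kubectl get pods -n kube-system -w

[root@localhost ~] kubectl get pods -n kube-flannel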

- Deploy the K8s worker nodes (run on the worker nodes)

On each worker node, run the join command that the master printed during initialization:

[root@localhost ~] kubeadm join 192.168.1.41:6443 --token ih48s6.x1mjtokujdyt9ysu \

--discovery-token-ca-cert-hash sha256:392750ed054b8288000d3969e8fdf47cc665c4bf8f8f025a255a457f5ec74814
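After the join, the new nodes appear on the master and become Ready once the network plugin is running on them. A sketch that counts nodes that are not yet ready. `count_not_ready` is a hypothetical helper that reads the output of `kubectl get nodes --no-headers` on stdin:

```shell
# Column 2 of "kubectl get nodes --no-headers" is STATUS;
# count the rows whose status is not exactly "Ready".
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# On the master:
#   kubectl get nodes --no-headers | count_not_ready
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```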

- 测试K8s

在 Kubernetes 集群中创建一个 pod, 验证是否正常运行,在master节点执行。

[root@localhost ~] kubectl create deployment nginx --image=nginx

[root@localhost ~] kubectl expose deployment nginx --port=80 --type=NodePort

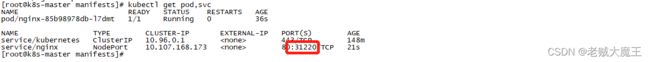

[root@localhost ~] kubectl get pod,svc

执行kubectl get pod,svc后如下图中有端口号

浏览器访问地址: http://NodeIP:Port,例如http://192.168.1.42:31220,出现Nginx首页即安装搭建成功。
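The NodePort can also be read from the command line rather than from the table output. A sketch, assuming the nginx service created in this section; `node_url` is a hypothetical helper that only builds the URL:

```shell
# Build the URL for a service exposed on a node IP and NodePort.
node_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# On the master:
#   port="$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')"
#   curl -sI "$(node_url 192.168.1.42 "$port")" | head -n 1
```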

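Summary

Various problems can come up during installation:

1. Docker and k8s versions that do not match. The first time, I installed the latest Docker, which was incompatible. Removing Docker and reinstalling the specified version fixed it.

2. Running kubeadm init again after a failure complains that various files already exist. Deleting those files (kubeadm reset does this cleanup) and initializing again fixed it.

3. Commands such as kubectl get nodes only work on the master. On a worker node they fail with: Unable to connect to the server: dial tcp: lookup localhost on 8.8.8.8:53: no such host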
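The error in the last item appears because a worker node has no kubeconfig, so kubectl does not know where the API server is. A sketch of a quick check, with `has_kubeconfig` as a hypothetical helper. It treats `$KUBECONFIG` as a single path, although kubectl also accepts a colon-separated list:

```shell
# kubectl uses $KUBECONFIG if set, otherwise $HOME/.kube/config.
has_kubeconfig() {
  [ -f "${KUBECONFIG:-$HOME/.kube/config}" ]
}

# On a worker node:
#   has_kubeconfig || echo "no kubeconfig; kubectl cannot reach the cluster"
# One possible fix, run from the master (this gives the node full admin credentials):
#   scp /etc/kubernetes/admin.conf root@k8s-node1:/root/.kube/config
```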