Day58 Hive中的窗口函数

后台启动Hive的JDBC连接

0表示标准输入,1表示标准输出,2表示标准错误输出,nohup表示挂起,&表示后台启动

nohup hive --service hiveserver2 > /usr/local/soft/hive-3.1.2/log/hiveserver2.log 2>&1 &关闭后台启动的jdbc

用jps查看RunJob,使用kill -9 关闭RunJob对应的进程号

Hive中的wordcount实例

使用后台启动hive的jdbc连接,进入到jdbc中创建一个新的数据库learn3,向其中创建新的表wordcount

-- 创建wordcount表:

CREATE TABLE learn3.wordcount(

word STRING COMMENT "单词"

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE;

-- 向表中添加数据:

insert into table learn3.wordcount (word) values ("hello,word"),("hello,java"),("hive,hello");

对wordcount中的数据进行统计,统计每个单词以及单词出现的次数:

先切分出每一个单词:

select

split(word,",")[0] as col1,-- 使用下标去除切分后的每个元素

split(word,",")[1] as col2

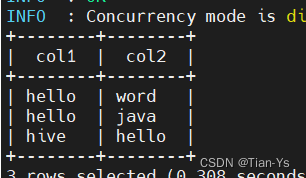

from learn3.wordcount结果:这里使用MobaXterm工具进行操作,使用其他工具操作步骤不变

将切分出的结果作为一个新的表T,分别对该表中的col1列中的单词和col2中的单词进行统计:

-- 统计col1列中的单词个数

select T.col1 as col,

count(*) as num

from(

select

split(word,",")[0] as col1,

split(word,",")[1] as col2

from learn3.wordcount

)T GROUP BY col1;

-- 统计col2列中的单词个数:

SELECT T.col2 as col,

count(*) as num

from (

select

split(word,",")[0] as col1,

split(word,",")[1] as col2

from learn3.wordcount

)T GROUP BY col2;

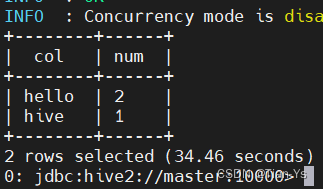

结果:左边为col1列中的单词数,右边为col2列中的单词数

将两个结果作为两个表,进行表连接,获取总的单词及其个数:

select T1.col,

sum(num) as sum_num

from (

-- col1列的单词个数

select T.col1 as col,

count(*) as num

from(

select

split(word,",")[0] as col1,

split(word,",")[1] as col2

from learn3.wordcount

)T GROUP BY col1

UNION ALL-- 表连接

-- col2列的单词个数

SELECT T.col2 as col,

count(*) as num

from (

select

split(word,",")[0] as col1,

split(word,",")[1] as col2

from learn3.wordcount

)T GROUP BY col2

-- 将连接后的表作为新表T1,按col进行分组

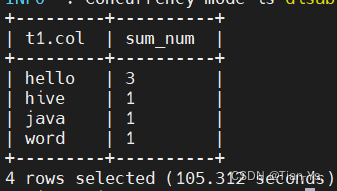

)T1 GROUP BY T1.col;结果:

Hive窗口函数

with as 用法

将查询过程中反复使用的表进行一个缓存,让其存放在内存中,使得可以反复使用该表

格式:

with table1 as(

select 查询语句1

)

,table2 as(

select 查询语句2

)

[INSERT INTO TABLE] SELECT FROM

使用with as将上述sql查询操作简化:

WITH split_res AS(-- 切分的表

SELECT

split(word,",")[0] as col1,

split(word,",")[1] as col2

FROM learn3.wordcount

)

,col1_count AS(--col1列的表

SELECT col1 as col,

count(*) as num

FROM split_res

GROUP BY col1

)

,col2_count AS(col2列的表

SELECT col2 as col,

count(*) as num

FROM split_res

GROUP BY col2

)

SELECT T.col,--最终查询

sum(T.num) AS sum_num

FROM (

select * FROM col1_count

UNION ALL

select * FROM col2_count

)T GROUP BY T.col;结果:与上述结果相同

集合函数

COLLECT_LIST(column2):需要与GROUP BY column1配合使用,将column1中相同组column2数据放到一个集合中

COLLECT_SET(column2):与COLLECT_LIST相同,但COLLECT_SET会对集合中的数据进行去重操作

需求:创建一个表wordcount2,向表中添加数据,将word列中所有的相同单词对应的num放到一个数组中

-- 建表

CREATE TABLE learn3.wordcount2(

word STRING COMMENT "单词",

num int COMMENT "数量"

)

STORED AS TEXTFILE;

-- 插入数据,该行执行两次

INSERT INTO TABLE learn3.wordcount2 (word,num) VALUES ("hello",1),("hello",2),("hive",3);

在插入两次数据后,查看当前表中的内容:

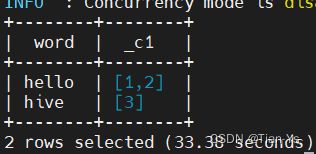

使用两种集合函数分别查询:

-- 使用COLLECT_LIST()函数

select word,

COLLECT_LIST(num)

from learn3.wordcount2

GROUP BY word;

--使用COLLECT_SET()函数

select word,

COLLECT_SET(num)

from learn3.wordcount2

GROUP BY word;结果:左侧为COLLECT_LIST()结果,右侧为COLLECT_SET()结果

行列互换

需求:将学生表中按照文理科以及性别进行分组,将相同学科和相同性别的学生放入同一数组中

-- 创建表

CREATE EXTERNAL TABLE IF NOT EXISTS learn3.student20(

id STRING COMMENT "学生ID",

name STRING COMMENT "学生姓名",

age int COMMENT "年龄",

gender STRING COMMENT "性别",

subject STRING COMMENT "学科"

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ","

STORED AS TEXTFILE;

-- 导入数据

load data local inpath "/usr/local/soft/hive-3.1.2/data/student_20.txt" INTO TABLE learn3.student20;

-- 操作:

WITH stu_split AS(

select

CONCAT(subject,"|",gender) as subject_gender,

CONCAT_WS("|",id,name) as id_name

from learn3.student20

)

select subject_gender,

COLLECT_LIST(id_name) as stu

from stu_split

GROUP BY subject_gender;

使用COLLECT_LIST()函数或者COLLECT_SET()和GROUP BY进行搭配实现行转列,将GROUP BY分组的数据进行存放在一个集合中,将原先多行数据转为一行数据

结果:

+-----------------+----------------------------------------------------+

| subject_gender | stu |

+-----------------+----------------------------------------------------+

| 文科|女 | ["1500100001|施笑槐","1500100007|尚孤风","1500100016|潘访烟","1500100018|骆怜雪"] |

| 文科|男 | ["1500100002|吕金鹏","1500100013|逯君昊"] |

| 理科|女 | ["1500100003|单乐蕊","1500100005|宣谷芹","1500100008|符半双","1500100012|梁易槐","1500100015|宦怀绿","1500100017|高芷天"] |

| 理科|男 | ["1500100004|葛德曜","1500100006|边昂雄","1500100009|沈德昌","1500100010|羿彦昌","1500100011|宰运华","1500100014|羿旭炎","1500100019|娄曦之","1500100020|杭振凯"] |

+-----------------+----------------------------------------------------+

改进:可以使用concat_ws将数组符号以及引号去除,更改为逗号进行拼接,只需在使用list函数时做如下改动:

CONCAT_WS(",",COLLECT_LIST(id_name)) as stu结果:

+-----------------+----------------------------------------------------+

| subject_gender | stu |

+-----------------+----------------------------------------------------+

| 文科|女 | 1500100001|施笑槐,1500100007|尚孤风,1500100016|潘访烟,1500100018|骆怜雪 |

| 文科|男 | 1500100002|吕金鹏,1500100013|逯君昊 |

| 理科|女 | 1500100003|单乐蕊,1500100005|宣谷芹,1500100008|符半双,1500100012|梁易槐,1500100015|宦怀绿,1500100017|高芷天 |

| 理科|男 | 1500100004|葛德曜,1500100006|边昂雄,1500100009|沈德昌,1500100010|羿彦昌,1500100011|宰运华,1500100014|羿旭炎,1500100019|娄曦之,1500100020|杭振凯 |

+-----------------+----------------------------------------------------+

将一行数据转换为多行数据

EXPLODE()函数:将集合中的数据转换为多行

--插入一条数据

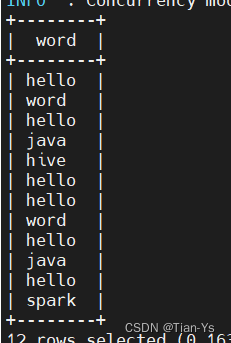

INSERT INTO TABLE learn3.wordcount (word) VALUES ("hello,word,hello,java,hello,spark");

--使用explode()函数:

select

explode(split(word,",")) as word

from learn3.wordcount;

结果:

LATERAL VIEW

创建表movie:

CREATE TABLE learn3.movie(

movie_name STRING COMMENT "电影名",

type STRING COMMENT "电影类型"

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ","

STORED AS TEXTFILE;

load data local inpath "/usr/local/soft/hive-3.1.2/data/movie.txt" into table learn3.movie;查看其中的内容:

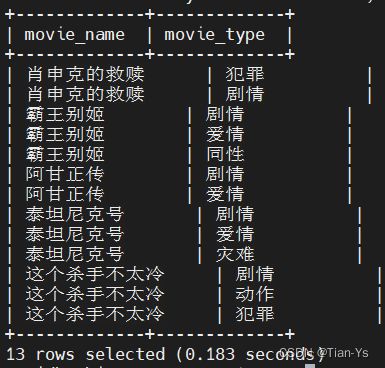

将type列中的电影类型进行分隔,并且与电影名进行对应

按照理论应该使用explode函数进行处理:

select

movie_name,

EXPLODE(split(type,"/")) as type

from learn3.movie;

但在实际操作时,这样运行后会报错:

Error: Error while compiling statement: FAILED: SemanticException [Error 10081]: UDTF's are not supported outside the SELECT clause, nor nested in expressions (state=42000,code=10081)

原因:explode在处理单列数据时,可以成功处理将一行数据转为多行,但当数据列不止一列时,explode函数在转换目标列之后不知道与另外列中的数据以何种方式进行对应

解决办法:使用hive高级函数LATERAL VIEW进行行转列

select

movie_name,movie_type

from learn3.movie LATERAL VIEW EXPLODE(split(type,"/")) view as movie_type;结果:

解析:

1、通过split方法切分数据并通过explode方法进行行转列

2、使用LAETRAL VIEW方法将EXPLODE转换后的结果包装成一个名为view的侧写表,列名为movie_type

3、通过全连接将侧写表的数据与原表进行全连接

排序函数

排序函数有多种:

row_number:无并列排名

用法:

select xxxx, row_number() over(partition by 分组字段 order by 排序字段 desc) as rn

from tb group by xxxx

dense_rank:有并列排名,并且依次递增

用法:

select xxxx, dense_rank() over(partition by 分组字段 order by 排序字段 desc) as rn

from tb group by xxxx

rank:有并列排名,不依次递增

用法:

select xxxx, rank() over(partition by 分区字段 order by 排序字段 desc) as rn

from tb group by xxxx

percent_rank:(rank的结果-1)/(分区内数据的个数-1),按百分比排序,以小数表示

用法:

select xxxx, percent_rank() over(partition by 分组字段 order by 排序字段 desc) as rn

from tb group by xxxx

创建表student和表score:

CREATE EXTERNAL TABLE IF NOT EXISTS learn3.student1(

id STRING COMMENT "学生ID",

name STRING COMMENT "学生姓名",

age int COMMENT "年龄",

gender STRING COMMENT "性别",

clazz STRING COMMENT "班级"

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ",";

--加载数据

load data local inpath "/usr/local/soft/hive-3.1.2/data/students.txt" INTO TABLE learn3.student1;

CREATE EXTERNAL TABLE IF NOT EXISTS learn3.score1(

id STRING COMMENT "学生ID",

subject_id STRING COMMENT "科目ID",

score int COMMENT "成绩"

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ","

--加载数据

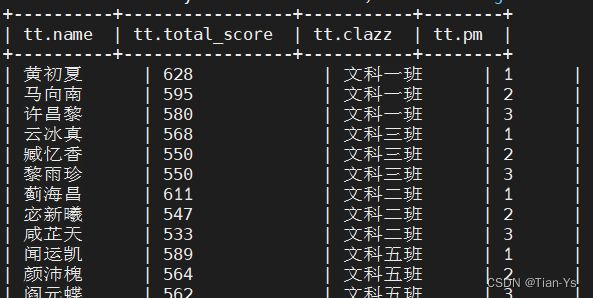

load data local inpath "/usr/local/soft/hive-3.1.2/data/score.txt" INTO TABLE learn3.score1;需求:统计各班级学生总成绩前三名

分析:先按照学生id计算出总成绩,再将计算结果作为一个表与学生表连接起来,最后使用row_number()方法进行排序

-- 算出学生总成绩

WITH stu_score AS(

select

id,sum(score) as total_score

from learn3.score1

GROUP BY id

)

--表的连接

,student_score AS(

select

T1.name

,T2.total_score

,T1.clazz

from learn3.student1 T1

JOIN stu_score T2 ON T1.id=T2.id

)

select

TT.*

from(

select

T.name,

T.total_score,

T.clazz,

ROW_NUMBER() OVER(PARTITION BY T.clazz ORDER BY total_score desc) as pm

from student_score T

)TT

WHERE TT.pm<=3;结果:

其他排序函数的使用类似,但是结果不同

需求:取每个班级总分最大的同学

创建一个表用于存放刚刚计算出的各同学的总分

CREATE TABLE learn3.student_score(

name STRING COMMENT "",

total_score int COMMENT "",

clazz STRING COMMENT ""

);

WITH score_sum AS (

SELECT

id

,sum(score) as total_score

FROM learn3.score1

GROUP BY id

)

INSERT INTO TABLE learn3.student_score

SELECT

T1.name

,T2.total_score

,T1.clazz

FROM learn3.student1 T1

JOIN score_sum T2 ON T1.id = T2.id通过max方法取出班级分区中的学生成绩的最大值

max用法:

max(total_score) OVER(PARTITION BY T1.clazz ORDER BY T1.total_score DESC)

基于每个partition分区内数据取最大值max(T1.total_score) OVER()

基于整个数据集取最大值

相对应,min,avg,count,sum用法同max一样

select

TT.*

from(

-- 子查询按班级分区求出每个班最大成绩

select

T.*,

max(total_score) OVER(PARTITION BY T.clazz ORDER BY total_score desc) as max_score

from student_score T

)TT

WHERE TT.total_score=TT.max_score;结果:

窗口帧:用于从分区中选择指定的多条记录,供窗口函数处理

Hive 提供了两种定义窗口帧的形式:ROWS 和 RANGE。两种类型都需要配置上界和下界。

例如

ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW

表示选择分区起始记录到当前记录的所有行

ROWS BETWEEN 1 PRECEDING AND CURRENT ROW

表示选择分区前一条记录到当前记录的所有行

ROWS BETWEEN 1 PRECEDING AND 1 FOLLOWING

表示选择分区前一条记录和当前记录的后一条记录

SUM(close) RANGE BETWEEN 100 PRECEDING AND 200 FOLLOWING 则通过 字段差值 来进行选择。如当前行的 close 字段值是 200,那么这个窗口帧的定义就会选择分区中 close 字段值落在 100 至 400 区间的记录。

以下是所有可能的窗口帧定义组合。如果没有定义窗口帧,则默认为 RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW。

只能运用在max、min、avg、count、sum、FIRST_VALUE、LAST_VALUE这几个窗口函数上

LAG(col,n):往前第n行数据

LEAD(col,n):往后第n行数据

FIRST_VALUE:取分组内排序后,截止到当前行,第一个值

LAST_VALUE:取分组内排序后,截止到当前行,最后一个值,对于并列的排名,取最后一个

具体关于窗口函数的其他更多内容可查看博客:

Hive窗口函数_过河卒子Rover的博客-CSDN博客

总结:

OVER(): 会为每条数据都开启一个窗口,默认窗口大小就是当前数据集的大小

OVER(PARTITION BY) 会按照指定的字段进行分区,在获取一条数据时,窗口大小为整个分区的大小,之后根据分区中的数据进行计算

OVER(PARTITION BY ... ORDER BY ...) 根据给定的分区,在获取一条数据时,窗口大小为整个分区的大小,并且对分区中的数据进行排序

OVER中的取数据格式:

(ROWS | RANGE) BETWEEN (UNBOUNDED | [num]) PRECEDING AND ([num] PRECEDING | CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN CURRENT ROW AND (CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN [num] FOLLOWING AND (UNBOUNDED | [num]) FOLLOWINGOVER():指定分析函数工作的数据窗口大小,这个数据窗口大小可能会随着行的改变而变

CURRENT ROW:当前行

n PRECEDING:往前n行数据

n FOLLOWING:往后n行数据

UNBOUNDED :起点

UNBOUNDED PRECEDING 表示从前面的起点,

UNBOUNDED FOLLOWING 表示到后面的终点

LAG(col,n,default_val):往前第n行数据

LEAD(col,n, default_val):往后第n行数据

NTILE(n):把有序窗口的行分发到指定数据的组中,各个组有编号,编号从1开始,对于每一行,

NTILE返回此行所属的组的编号。