Nacos 2.0.1 config center: source code analysis of its gRPC usage

On the advice of a more experienced engineer, I decided to read the Nacos 2.x source directly and look at the latest implementation.

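1. Architecture of Nacos 2.x

Compared with the 1.x architecture, the main change is a long-connection implementation based on gRPC. (Link: gRPC official website)

gRPC is a language-neutral, platform-neutral RPC framework open-sourced by Google, with support for many programming languages. It is built on HTTP/2 and therefore gets long-lived connections, server push, header compression, and multiplexing. (Link: introduction to HTTP/2 features)

As the architecture diagram shows, 2.x mainly adds a gRPC communication layer while staying compatible with 1.x. gRPC was adopted mainly to solve the problems of the 1.x design: short-lived HTTP connections, frequent heartbeat packets that waste resources, and UDP notifications that are unreliable.

For details, see the article written by a Nacos PMC member at Alibaba: "支持 gRPC 长链接,深度解读 Nacos 2.0 架构设计及新模型" (gRPC long connections: an in-depth look at the Nacos 2.0 architecture and new model).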

2. Source analysis: how the Nacos 2.0.1 client config center uses gRPC

Add the SDK dependency

    <dependency>
        <groupId>com.alibaba.nacos</groupId>
        <artifactId>nacos-client</artifactId>
        <version>2.0.1</version>
    </dependency>

Create the ConfigService

    System.setProperty("nacos.logging.default.config.enabled", "false");
    String serverAddr = NacosServerProperties.serverAddr;
    String dataId = "austin-provider-server";
    String group = "DEFAULT_GROUP";
    configService = NacosFactory.createConfigService(serverAddr);

Now look at the core code that creates the config service:

    public NacosConfigService(Properties properties) throws NacosException {
        ValidatorUtils.checkInitParam(properties);

        initNamespace(properties);
        this.configFilterChainManager = new ConfigFilterChainManager(properties);
        ServerListManager serverListManager = new ServerListManager(properties);
        serverListManager.start();

        this.worker = new ClientWorker(this.configFilterChainManager, serverListManager, properties);
        // will be deleted in 2.0 later versions
        agent = new ServerHttpAgent(serverListManager);
    }

The core logic lives in ClientWorker.

    public void executeConfigListen() {
        Map<String, List<CacheData>> listenCachesMap = new HashMap<String, List<CacheData>>(16);
        Map<String, List<CacheData>> removeListenCachesMap = new HashMap<String, List<CacheData>>(16);
        long now = System.currentTimeMillis();
        boolean needAllSync = now - lastAllSyncTime >= ALL_SYNC_INTERNAL;
        for (CacheData cache : cacheMap.get().values()) {
            synchronized (cache) {
                //check local listeners consistent.

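The core logic is in the executeConfigListen method. It periodically walks the cached config items and checks whether the local configuration matches the server's; if they differ, it syncs the configuration.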

    for (Map.Entry<String, List<CacheData>> entry : listenCachesMap.entrySet()) {
        String taskId = entry.getKey();
        List<CacheData> listenCaches = entry.getValue();

        ConfigBatchListenRequest configChangeListenRequest = buildConfigRequest(listenCaches);
        configChangeListenRequest.setListen(true);
        try {
            // gRPC call
            RpcClient rpcClient = ensureRpcClient(taskId);
            ConfigChangeBatchListenResponse configChangeBatchListenResponse = (ConfigChangeBatchListenResponse) requestProxy(
                    rpcClient, configChangeListenRequest);
            if (configChangeBatchListenResponse != null && configChangeBatchListenResponse.isSuccess()) {

                Set<String> changeKeys = new HashSet<String>();
                //handle changed keys,notify listener
                if (!CollectionUtils.isEmpty(configChangeBatchListenResponse.getChangedConfigs())) {
                    hasChangedKeys = true;
                    for (ConfigChangeBatchListenResponse.ConfigContext changeConfig : configChangeBatchListenResponse
                            .getChangedConfigs()) {
                        String changeKey = GroupKey
                                .getKeyTenant(changeConfig.getDataId(), changeConfig.getGroup(),
                                        changeConfig.getTenant());
                        changeKeys.add(changeKey);
                        boolean isInitializing = cacheMap.get().get(changeKey).isInitializing();
                        refreshContentAndCheck(changeKey, !isInitializing);
                    }
                }

                //handler content configs
                for (CacheData cacheData : listenCaches) {
                    String groupKey = GroupKey
                            .getKeyTenant(cacheData.dataId, cacheData.group, cacheData.getTenant());
                    if (!changeKeys.contains(groupKey)) {
                        //sync:cache data md5 = server md5 && cache data md5 = all listeners md5.
                        synchronized (cacheData) {
                            if (!cacheData.getListeners().isEmpty()) {
                                cacheData.setSyncWithServer(true);
                                continue;
                            }
                        }
                    }

                    cacheData.setInitializing(false);
                }
            }
        } catch (Exception e) {
            LOGGER.error("Async listen config change error ", e);
            try {
                Thread.sleep(50L);
            } catch (InterruptedException interruptedException) {
                //ignore
            }
        }
    }
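The idea behind the listen loop (compare the locally cached md5 with the server's, then refresh whatever differs) can be sketched without any Nacos classes. The following is only a minimal illustration under assumptions: ListenSketch, LocalEntry, changedKeys, and syncOnce are hypothetical names, the "dataId+group" key format is made up for the example, and a plain map stands in for the server that the real client reaches over gRPC.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for the client's listen loop; not the real ClientWorker. */
class ListenSketch {

    /** Local copy of one config item (a simplified stand-in for CacheData). */
    static final class LocalEntry {
        final String groupKey;
        String content;
        String md5;

        LocalEntry(String groupKey, String content) {
            this.groupKey = groupKey;
            this.content = content;
            this.md5 = md5Hex(content);
        }
    }

    /** Hex-encoded MD5 of the content, used as a cheap change fingerprint. */
    static String md5Hex(String content) {
        try {
            MessageDigest digest = MessageDigest.getInstance("MD5");
            byte[] hash = digest.digest(content.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder();
            for (byte b : hash) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 digest is not available", e);
        }
    }

    /** Server role: report the keys whose server-side md5 differs from the client's. */
    static List<String> changedKeys(Map<String, LocalEntry> local, Map<String, String> serverContent) {
        List<String> changed = new ArrayList<>();
        for (LocalEntry entry : local.values()) {
            String remote = serverContent.get(entry.groupKey);
            if (remote != null && !md5Hex(remote).equals(entry.md5)) {
                changed.add(entry.groupKey);
            }
        }
        return changed;
    }

    /** Client role: ask which keys changed, then refresh the local copy of each. */
    static List<String> syncOnce(Map<String, LocalEntry> local, Map<String, String> serverContent) {
        List<String> changed = changedKeys(local, serverContent);
        for (String key : changed) {
            LocalEntry entry = local.get(key);
            entry.content = serverContent.get(key);
            entry.md5 = md5Hex(entry.content);
        }
        return changed;
    }

    public static void main(String[] args) {
        String key = "app.yaml+DEFAULT_GROUP"; // made-up key format for the example
        Map<String, LocalEntry> local = new LinkedHashMap<>();
        local.put(key, new LocalEntry(key, "port=8080"));
        Map<String, String> server = new LinkedHashMap<>();
        server.put(key, "port=9090");

        System.out.println("first pass changed:  " + syncOnce(local, server));
        System.out.println("second pass changed: " + syncOnce(local, server));
    }
}
```

The first pass reports the key as changed and refreshes it; the second pass finds nothing to do, which corresponds to the branch in the real code where a cache entry is marked as in sync with the server.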

Next, the source for fetching a config:

public ConfigResponse queryConfig(String dataId, String group, String tenant, long readTimeouts, boolean notify)

throws NacosException {

ConfigQueryRequest request = ConfigQueryRequest.build(dataId, group, tenant);

request.putHeader("notify", String.valueOf(notify));

ConfigQueryResponse response = (ConfigQueryResponse) requestProxy(getOneRunningClient(), request,

readTimeouts);

ConfigResponse configResponse = new ConfigResponse();

if (response.isSuccess()) {

LocalConfigInfoProcessor.saveSnapshot(this.getName(), dataId, group, tenant, response.getContent());

configResponse.setContent(response.getContent());

String configType;

if (StringUtils.isNotBlank(response.getContentType())) {

configType = response.getContentType();

} else {

configType = ConfigType.TEXT.getType();

}

configResponse.setConfigType(configType);

String encryptedDataKey = response.getEncryptedDataKey();

LocalEncryptedDataKeyProcessor

.saveEncryptDataKeySnapshot(agent.getName(), dataId, group, tenant, encryptedDataKey);

configResponse.setEncryptedDataKey(encryptedDataKey);

return configResponse;

} else if (response.getErrorCode() == ConfigQueryResponse.CONFIG_NOT_FOUND) {

LocalConfigInfoProcessor.saveSnapshot(this.getName(), dataId, group, tenant, null);

LocalEncryptedDataKeyProcessor.saveEncryptDataKeySnapshot(agent.getName(), dataId, group, tenant, null);

return configResponse;

} else if (response.getErrorCode() == ConfigQueryResponse.CONFIG_QUERY_CONFLICT) {

LOGGER.error(

"[{}] [sub-server-error] get server config being modified concurrently, dataId={}, group={}, "

+ "tenant={}", this.getName(), dataId, group, tenant);

throw new NacosException(NacosException.CONFLICT,

"data being modified, dataId=" + dataId + ",group=" + group + ",tenant=" + tenant);

} else {

LOGGER.error("[{}] [sub-server-error] dataId={}, group={}, tenant={}, code={}", this.getName(), dataId,

group, tenant, response);

throw new NacosException(response.getErrorCode(),

"http error, code=" + response.getErrorCode() + ",dataId=" + dataId + ",group=" + group

+ ",tenant=" + tenant);

}

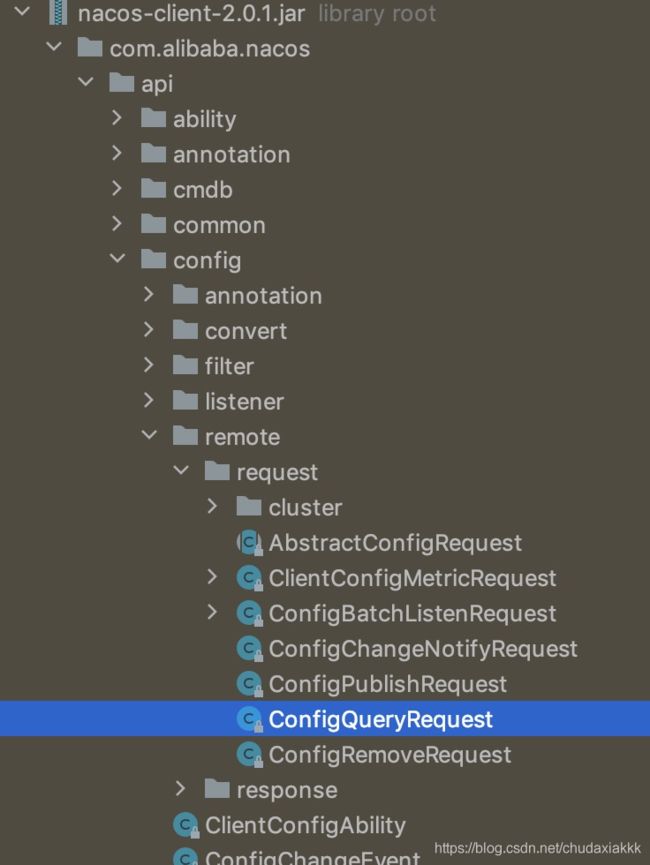

}nacos2.x使用策略模式,创建多种请求类型。

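ConfigQueryRequest is used to query a config. Pay attention to the request's class name: on the server side, the matching handler is looked up by this class name.

3. Source analysis: how the Nacos 2.0.1 server config center uses gRPC

3.1 The gRPC proto file

Nacos takes an all-in-one approach to gRPC: every request is abstracted into a single generic service call. The proto file is nacos_grpc_service.proto in the nacos-api module.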

    /*
     * Copyright 1999-2020 Alibaba Group Holding Ltd.
     *
     * Licensed under the Apache License, Version 2.0 (the "License");
     * you may not use this file except in compliance with the License.
     * You may obtain a copy of the License at
     *
     *      http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    syntax = "proto3";

    import "google/protobuf/any.proto";
    import "google/protobuf/timestamp.proto";

    option java_multiple_files = true;
    option java_package = "com.alibaba.nacos.api.grpc.auto";

    message Metadata {
      string type = 3;
      string clientIp = 8;
      map<string, string> headers = 7;
    }

    message Payload {
      Metadata metadata = 2;
      google.protobuf.Any body = 3;
    }

    service RequestStream {
      // build a streamRequest
      rpc requestStream (Payload) returns (stream Payload) {
      }
    }

    service Request {
      // Sends a commonRequest
      rpc request (Payload) returns (Payload) {
      }
    }

    service BiRequestStream {
      // Sends a commonRequest
      rpc requestBiStream (stream Payload) returns (stream Payload) {
      }
    }

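Note that Payload serves as both the input and the output type. Its body field is of type Any, so it can carry the parameters of any request type.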
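To make the envelope idea concrete, here is a small sketch of packing a typed request into a generic payload and reading it back by its type name. Everything in it is an assumption made for illustration: EnvelopeSketch, Envelope, and QueryRequest are hypothetical classes, and the newline-separated body is a toy encoding rather than the serialization Nacos actually puts into the Any field.

```java
import java.nio.charset.StandardCharsets;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical illustration of a "one envelope for every request" design. */
class EnvelopeSketch {

    /** Mirrors the shape of Payload: a type name, headers, and an opaque body. */
    static final class Envelope {
        final String type;
        final Map<String, String> headers;
        final byte[] body;

        Envelope(String type, Map<String, String> headers, byte[] body) {
            this.type = type;
            this.headers = Collections.unmodifiableMap(new HashMap<>(headers));
            this.body = body.clone();
        }
    }

    /** Made-up request type, loosely modelled on ConfigQueryRequest. */
    static final class QueryRequest {
        final String dataId;
        final String group;

        QueryRequest(String dataId, String group) {
            this.dataId = dataId;
            this.group = group;
        }
    }

    /** Sender side: the class name travels in the metadata, the fields in the body. */
    static Envelope pack(QueryRequest request, Map<String, String> headers) {
        String encoded = request.dataId + "\n" + request.group; // toy encoding
        return new Envelope(QueryRequest.class.getSimpleName(), headers,
                encoded.getBytes(StandardCharsets.UTF_8));
    }

    /** Receiver side: check the type name first, then decode the body. */
    static QueryRequest unpack(Envelope envelope) {
        if (!QueryRequest.class.getSimpleName().equals(envelope.type)) {
            throw new IllegalArgumentException("unexpected request type: " + envelope.type);
        }
        String[] parts = new String(envelope.body, StandardCharsets.UTF_8).split("\n", 2);
        return new QueryRequest(parts[0], parts[1]);
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        headers.put("notify", "false");
        Envelope envelope = pack(new QueryRequest("austin-provider-server", "DEFAULT_GROUP"), headers);
        QueryRequest decoded = unpack(envelope);
        System.out.println(envelope.type + " -> " + decoded.dataId + "@" + decoded.group);
    }
}
```

The trade-off of this design is that the wire contract stays tiny (one message, a few RPC methods) while type safety moves from the proto schema into the application code that maps type names to classes.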

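Three request services are defined: Request (unary), RequestStream (server streaming), and BiRequestStream (bidirectional streaming). Why they are split this way is still to be confirmed.

The source code is generated from the proto file with the protobuf compiler plugin.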

3.2 gRPC server startup: ports and listeners

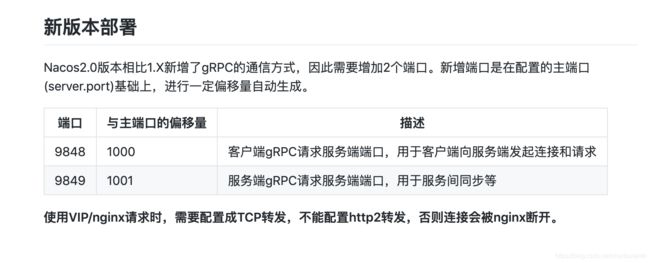

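In the Nacos architecture, gRPC serves two purposes: client-to-server (CS) communication and server-to-server (SS) communication for syncing data between servers. Two port listeners are therefore started. (Link: Nacos 2.x compatibility documentation)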
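As a quick illustration of how two gRPC ports can be derived from one configured port, here is a minimal sketch. The offsets are an assumption taken from the Nacos 2.x compatibility documentation as I understand it (main port + 1000 for SDK clients, main port + 1001 for server-to-server traffic); PortSketch and grpcPort are hypothetical names, not Nacos code.

```java
/** Hypothetical helper showing offset-based gRPC port derivation. */
class PortSketch {

    // Assumed offsets, based on the Nacos 2.x compatibility documentation.
    static final int SDK_PORT_OFFSET = 1000;
    static final int CLUSTER_PORT_OFFSET = 1001;

    /** Returns mainPort + offset, rejecting results outside the valid TCP port range. */
    static int grpcPort(int mainPort, int offset) {
        int port = mainPort + offset;
        if (mainPort <= 0 || port > 65535) {
            throw new IllegalArgumentException("derived port out of range: " + port);
        }
        return port;
    }

    public static void main(String[] args) {
        int mainPort = 8848; // the usual default Nacos server port
        System.out.println("SDK gRPC port:     " + grpcPort(mainPort, SDK_PORT_OFFSET));
        System.out.println("cluster gRPC port: " + grpcPort(mainPort, CLUSTER_PORT_OFFSET));
    }
}
```

One practical consequence of this scheme is that changing the main port silently moves both gRPC ports, so firewalls and load balancers in front of the server have to allow all three.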

The implementation is in the nacos-core module.

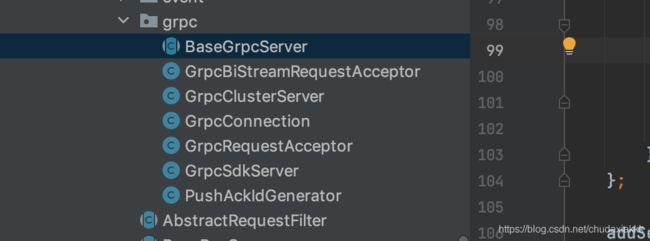

Look at BaseGrpcServer, GrpcClusterServer, and GrpcSdkServer. Together they define the startup method and the port offset of each server.

    @Override
    public void startServer() throws Exception {
        final MutableHandlerRegistry handlerRegistry = new MutableHandlerRegistry();

        // server interceptor to set connection id.
        ServerInterceptor serverInterceptor = new ServerInterceptor() {
            @Override
            public <T, S> ServerCall.Listener<T> interceptCall(ServerCall<T, S> call, Metadata headers,
                    ServerCallHandler<T, S> next) {
                Context ctx = Context.current()
                        .withValue(CONTEXT_KEY_CONN_ID, call.getAttributes().get(TRANS_KEY_CONN_ID))
                        .withValue(CONTEXT_KEY_CONN_REMOTE_IP, call.getAttributes().get(TRANS_KEY_REMOTE_IP))
                        .withValue(CONTEXT_KEY_CONN_REMOTE_PORT, call.getAttributes().get(TRANS_KEY_REMOTE_PORT))
                        .withValue(CONTEXT_KEY_CONN_LOCAL_PORT, call.getAttributes().get(TRANS_KEY_LOCAL_PORT));
                if (REQUEST_BI_STREAM_SERVICE_NAME.equals(call.getMethodDescriptor().getServiceName())) {
                    Channel internalChannel = getInternalChannel(call);
                    ctx = ctx.withValue(CONTEXT_KEY_CHANNEL, internalChannel);
                }
                return Contexts.interceptCall(ctx, call, headers, next);
            }
        };

        addServices(handlerRegistry, serverInterceptor);

        // Core step: build the gRPC server
        server = ServerBuilder.forPort(getServicePort()).executor(getRpcExecutor())
                .maxInboundMessageSize(getInboundMessageSize()).fallbackHandlerRegistry(handlerRegistry)
                .compressorRegistry(CompressorRegistry.getDefaultInstance())
                .decompressorRegistry(DecompressorRegistry.getDefaultInstance())
                .addTransportFilter(new ServerTransportFilter() {
                    @Override
                    public Attributes transportReady(Attributes transportAttrs) {
                        InetSocketAddress remoteAddress = (InetSocketAddress) transportAttrs
                                .get(Grpc.TRANSPORT_ATTR_REMOTE_ADDR);
                        InetSocketAddress localAddress = (InetSocketAddress) transportAttrs
                                .get(Grpc.TRANSPORT_ATTR_LOCAL_ADDR);
                        int remotePort = remoteAddress.getPort();
                        int localPort = localAddress.getPort();
                        String remoteIp = remoteAddress.getAddress().getHostAddress();
                        Attributes attrWrapper = transportAttrs.toBuilder()
                                .set(TRANS_KEY_CONN_ID, System.currentTimeMillis() + "_" + remoteIp + "_" + remotePort)
                                .set(TRANS_KEY_REMOTE_IP, remoteIp).set(TRANS_KEY_REMOTE_PORT, remotePort)
                                .set(TRANS_KEY_LOCAL_PORT, localPort).build();
                        String connectionId = attrWrapper.get(TRANS_KEY_CONN_ID);
                        Loggers.REMOTE_DIGEST.info("Connection transportReady,connectionId = {} ", connectionId);
                        return attrWrapper;
                    }

                    @Override
                    public void transportTerminated(Attributes transportAttrs) {
                        String connectionId = null;
                        try {
                            connectionId = transportAttrs.get(TRANS_KEY_CONN_ID);
                        } catch (Exception e) {
                            // Ignore
                        }
                        if (StringUtils.isNotBlank(connectionId)) {
                            Loggers.REMOTE_DIGEST
                                    .info("Connection transportTerminated,connectionId = {} ", connectionId);
                            connectionManager.unregister(connectionId);
                        }
                    }
                }).build();

        server.start();
    }

3.3 Request dispatch: source analysis

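gRPC is an RPC (remote procedure call) framework, and the proto file defines the service interfaces. Once the client-server interface is defined, the server has to implement it.

For each service, gRPC generates an abstract base class; the server extends that class and implements its methods.

Two services are implemented: RequestGrpc and BiRequestStreamGrpc.

The implementing class is GrpcRequestAcceptor.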

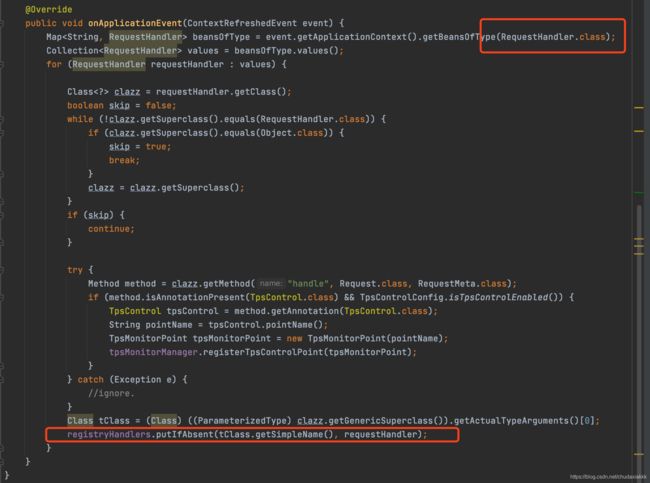

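Next, look at requestHandlerRegistry, the object that holds the handler registry, and see how it collects the handlers.

It relies on Spring's bean lookup to find every implementation of RequestHandler and maps each request class name to its handler.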
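The lookup-by-class-name mechanism can be sketched in plain Java. This is only an illustration under assumptions: RegistrySketch, Handler, and the request classes are hypothetical, a hard-coded list stands in for the beans Spring would discover, and the request type is derived from each handler's generic superclass, which is one common way to build such a registry.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical registry that routes requests to handlers by request class name. */
class RegistrySketch {

    /** Stand-in for RequestHandler: one handler per request type T. */
    abstract static class Handler<T> {
        abstract String handle(T request);
    }

    static final class QueryRequest {
        final String dataId;
        QueryRequest(String dataId) { this.dataId = dataId; }
    }

    static final class PublishRequest {
        final String dataId;
        PublishRequest(String dataId) { this.dataId = dataId; }
    }

    static final class QueryHandler extends Handler<QueryRequest> {
        @Override
        String handle(QueryRequest request) { return "query:" + request.dataId; }
    }

    static final class PublishHandler extends Handler<PublishRequest> {
        @Override
        String handle(PublishRequest request) { return "publish:" + request.dataId; }
    }

    /** Reads T from "extends Handler<T>" and returns its simple class name. */
    static String requestTypeOf(Handler<?> handler) {
        Type superType = handler.getClass().getGenericSuperclass();
        Type argument = ((ParameterizedType) superType).getActualTypeArguments()[0];
        return ((Class<?>) argument).getSimpleName();
    }

    /** Builds the name-to-handler map; the list stands in for Spring's bean lookup. */
    static Map<String, Handler<?>> buildRegistry(List<Handler<?>> handlers) {
        Map<String, Handler<?>> registry = new HashMap<>();
        for (Handler<?> handler : handlers) {
            registry.put(requestTypeOf(handler), handler);
        }
        return registry;
    }

    /** Finds the handler registered under the request's class name and invokes it. */
    @SuppressWarnings("unchecked")
    static String dispatch(Map<String, Handler<?>> registry, Object request) {
        String type = request.getClass().getSimpleName();
        Handler<Object> handler = (Handler<Object>) registry.get(type);
        if (handler == null) {
            throw new IllegalArgumentException("no handler registered for " + type);
        }
        return handler.handle(request);
    }

    public static void main(String[] args) {
        Map<String, Handler<?>> registry = buildRegistry(
                Arrays.<Handler<?>>asList(new QueryHandler(), new PublishHandler()));
        System.out.println(dispatch(registry, new QueryRequest("austin-provider-server")));
        System.out.println(dispatch(registry, new PublishRequest("austin-provider-server")));
    }
}
```

Adding a new request type in this style means writing one request class and one handler class; the dispatch code itself does not change, which is what makes a single generic gRPC service workable.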

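Now look at the config center's implementation classes.

That's it for this analysis.

Follow-up articles will cover:

- gRPC calls between config management servers;

- gRPC calls for service discovery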