Distributed Tracing with SkyWalking: From Beginner to CRUD

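Table of Contents

- SkyWalking Introduction
  - SkyWalking vs. Spring Cloud Sleuth + Zipkin
  - SkyWalking Architecture
- Integrating SkyWalking into Spring Cloud
  - Downloading SkyWalking
    - Configuration
    - Startup
  - Client Integration
  - SkyWalking Data Persistence (ES)
  - Defining the Log Configuration
  - Verification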

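SkyWalking Introduction

- SkyWalking is open-source software that originated in China. It was open-sourced by Wu Sheng, a Chinese open-source enthusiast, who submitted it to the Apache Incubator;

- SkyWalking is growing quickly and has an active community. It also has complete Chinese documentation, so Chinese-speaking users face no language barrier;

- SkyWalking supports multiple languages and is very easy to add to a project, with no code intrusion;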

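SkyWalking vs. Spring Cloud Sleuth + Zipkin

- SkyWalking uses bytecode enhancement, so it requires no changes to application code. Sleuth + Zipkin is considerably more code-intrusive;

- SkyWalking is more feature-rich, offering reports and statistics along with a more user-friendly UI;

- For a new architecture, SkyWalking is the recommended first choice;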

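SkyWalking Architecture

- Agent (top): collects data from the application and sends it to the OAP server in the middle;

- OAP (middle): receives the tracing and metric data sent by the agent and analyzes it (Analysis Core). It then writes the results to external storage (Storage) and finally serves queries (Query);

- UI (left): provides the web console for viewing traces, various metrics, performance and so on;

- Storage (right): stores the data and supports multiple storage types;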

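Integrating SkyWalking into Spring Cloud

Downloading SkyWalking

Download URL: https://archive.apache.org/dist/skywalking/. Download the version you need (this article uses 8.7.0). The directory layout looks roughly like this: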

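Configuration

Edit the configuration file. Here SkyWalking is used together with Nacos and ES. The file to edit is application.yml in the config directory, which is the OAP server's configuration file.

Connecting to Nacos: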

```yaml
cluster:
  # Changed to nacos here
  selector: ${SW_CLUSTER:nacos}
  standalone:
  zookeeper:
    nameSpace: ${SW_NAMESPACE:""}
    hostPort: ${SW_CLUSTER_ZK_HOST_PORT:localhost:2181}
    # Retry Policy
    baseSleepTimeMs: ${SW_CLUSTER_ZK_SLEEP_TIME:1000}
    maxRetries: ${SW_CLUSTER_ZK_MAX_RETRIES:3}
    # Enable ACL
    enableACL: ${SW_ZK_ENABLE_ACL:false}
    schema: ${SW_ZK_SCHEMA:digest}
    expression: ${SW_ZK_EXPRESSION:skywalking:skywalking}
  kubernetes:
    namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}
    labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}
    uidEnvName: ${SW_CLUSTER_K8S_UID:SKYWALKING_COLLECTOR_UID}
  consul:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    hostPort: ${SW_CLUSTER_CONSUL_HOST_PORT:localhost:8500}
    aclToken: ${SW_CLUSTER_CONSUL_ACLTOKEN:""}
  etcd:
    endpoints: ${SW_CLUSTER_ETCD_ENDPOINTS:localhost:2379}
    namespace: ${SW_CLUSTER_ETCD_NAMESPACE:/skywalking}
    serviceName: ${SW_SCLUSTER_ETCD_ERVICE_NAME:"SkyWalking_OAP_Cluster"}
    authentication: ${SW_CLUSTER_ETCD_AUTHENTICATION:false}
    user: ${SW_SCLUSTER_ETCD_USER:}
    password: ${SW_SCLUSTER_ETCD_PASSWORD:}
  nacos:
    # Service name shown in Nacos; the default is fine
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    # Address of the Nacos registry
    hostPort: ${SW_CLUSTER_NACOS_HOST_PORT:127.0.0.1:8848}
    # If you use a Nacos namespace, fill in its namespace ID
    namespace: ${SW_CLUSTER_NACOS_NAMESPACE:"729f3090-638e-4b45-bfde-b91312ffe3d0"}
    # Nacos auth username
    username: ${SW_CLUSTER_NACOS_USERNAME:"nacos"}
    password: ${SW_CLUSTER_NACOS_PASSWORD:"nacos"}
    # Nacos auth accessKey
    accessKey: ${SW_CLUSTER_NACOS_ACCESSKEY:""}
    secretKey: ${SW_CLUSTER_NACOS_SECRETKEY:""}
```
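Every value in this file uses the `${VARIABLE:default}` placeholder form. That means a setting such as the Nacos address can be overridden with an environment variable instead of editing the file. The sketch below illustrates how such a placeholder resolves. It is a simplified, hypothetical helper (`PlaceholderResolver` is not part of SkyWalking). It assumes the variable is looked up in a plain map such as the process environment, and that the default value does not itself contain `}`.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper illustrating ${NAME:default} resolution; not SkyWalking's own code.
final class PlaceholderResolver {
    // NAME runs up to the first ':'; the default runs to the next '}' and may be empty.
    // Defaults that themselves contain '}' (e.g. the JSON analyzer settings) are not supported.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^:}]+):([^}]*)\\}");

    // Replaces each ${NAME:default} with variables.get(NAME) if present, otherwise with default.
    static String resolve(String value, Map<String, String> variables) {
        Matcher m = PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String replacement = variables.getOrDefault(m.group(1), m.group(2));
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // If no SW_CLUSTER environment variable is set, this prints the default: nacos
        System.out.println(resolve("${SW_CLUSTER:nacos}", System.getenv()));
    }
}
```

Under this model, the OAP server would pick up a different Nacos address from `SW_CLUSTER_NACOS_HOST_PORT` if that variable were set, and would fall back to `127.0.0.1:8848` otherwise.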

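Startup

In the bin directory, oapService.bat starts the server (OAP) and webappService.bat starts the web UI (client). The .sh scripts are the equivalents for Linux, and startup.bat starts both at once;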

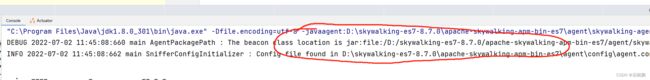

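Here we run startup.bat to start everything. A successful startup looks like this:

Notes:

- The path of the directory you run the startup command from must not contain Chinese characters or spaces. Otherwise the window closes immediately after opening;

- A port conflict also makes the window close immediately. The web UI port is set in webapp/webapp.yml and defaults to 8080;

You can now open localhost:8080. Because nothing has been configured yet, it is just an empty page:

(Don't worry about whether the page shows anything at this point. All that matters is that startup.bat does not crash after launching.)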
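A port conflict makes the scripts close immediately, so it can help to check the relevant ports before running startup.bat. Below is a minimal Java sketch that probes the ports by trying to bind them. It assumes the ports mentioned in this article: 8080 for the web UI and 11800 for the OAP gRPC port. It also checks 12800, which is assumed here to be the OAP's default HTTP port. `PortCheck` is a hypothetical helper name.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;

// Hypothetical helper: reports whether a local TCP port can still be bound.
final class PortCheck {
    // Returns true if binding the port succeeds, i.e. no other process is listening on it.
    static boolean isFree(int port) {
        try (ServerSocket socket = new ServerSocket()) {
            socket.setReuseAddress(false);
            socket.bind(new InetSocketAddress(port));
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // 8080: web UI (webapp/webapp.yml); 11800: OAP gRPC; 12800: assumed OAP HTTP port
        for (int port : new int[] {8080, 11800, 12800}) {
            System.out.println(port + (isFree(port) ? " is free" : " is already in use"));
        }
    }
}
```

Run this before startup.bat. If any of these ports is reported as in use, stop the conflicting process or change the corresponding port in the configuration.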

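Client Integration

- Client integration requires no code changes. You only need to pass the following options to the JVM when starting the application. The `//` lines are explanations only; remove them before pasting the options into your IDE's VM options field or a command line: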

```text
-Dfile.encoding=utf-8
// Path to the agent jar
-javaagent:D:\skywalking-es7-8.7.0\apache-skywalking-apm-bin-es7\agent\skywalking-agent.jar
// Custom service name
-Dskywalking.agent.service_name=blog-article
// OAP gRPC address; 11800 is the default port unless you changed the OAP configuration
-Dskywalking.collector.backend_service=127.0.0.1:11800
-Xms400m
-Xmx400m
```
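If you start the service from a script rather than an IDE, the same options can be assembled in code. The sketch below assumes a packaged Spring Boot jar named `blog-article.jar`, which is a hypothetical name, and uses the hypothetical helper class `AgentLauncher`. It shows the one ordering rule that matters: every JVM option, including `-javaagent`, must come before `-jar`. Anything after the jar path is passed to the application rather than to the JVM.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical launcher that builds a "java ... -jar app.jar" command with the SkyWalking agent.
final class AgentLauncher {
    // JVM options must precede -jar; arguments after the jar path go to the app's main method.
    static List<String> buildCommand(String agentJar, String serviceName,
                                     String backendService, String appJar) {
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        cmd.add("-Dfile.encoding=utf-8");
        cmd.add("-javaagent:" + agentJar);
        cmd.add("-Dskywalking.agent.service_name=" + serviceName);
        cmd.add("-Dskywalking.collector.backend_service=" + backendService);
        cmd.add("-Xms400m");
        cmd.add("-Xmx400m");
        cmd.add("-jar");
        cmd.add(appJar);
        return cmd;
    }

    public static void main(String[] args) {
        List<String> cmd = buildCommand(
                "D:\\skywalking-es7-8.7.0\\apache-skywalking-apm-bin-es7\\agent\\skywalking-agent.jar",
                "blog-article",
                "127.0.0.1:11800",
                "blog-article.jar"); // hypothetical application jar name
        System.out.println(String.join(" ", cmd));
        // To actually start the application, one could run:
        // new ProcessBuilder(cmd).inheritIO().start().waitFor();
    }
}
```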

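SkyWalking Data Persistence (ES)

- SkyWalking can persist its data to storage backends such as H2 and ES; ES is used as the example here. First set up ES. The setup is complete when visiting localhost:9200 returns ES information like the following. The steps for installing ES are not covered in this article;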

```json
{
  "name": "28f784356f66",
  "cluster_name": "docker-cluster",
  "cluster_uuid": "pD1a-jZYQ1iiHlQ7KezQHA",
  "version": {
    "number": "7.8.1",
    "build_flavor": "oss",
    "build_type": "docker",
    "build_hash": "b5ca9c58fb664ca8bf9e4057fc229b3396bf3a89",
    "build_date": "2020-07-21T16:40:44.668009Z",
    "build_snapshot": false,
    "lucene_version": "8.5.1",
    "minimum_wire_compatibility_version": "6.8.0",
    "minimum_index_compatibility_version": "6.0.0-beta1"
  },
  "tagline": "You Know, for Search"
}
```
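The es7 build of SkyWalking targets ES 7.x, so the `version.number` field in this response is worth checking before configuring storage. Below is a rough sketch using only the JDK (Java 11+ `java.net.http.HttpClient`). The regex-based parsing is a simplification standing in for a real JSON parser, and `EsVersionCheck` is a hypothetical name.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical check of the ES major version reported by the root endpoint.
final class EsVersionCheck {
    // Naive match on "number": "x.y.z"; a production check would use a JSON library.
    private static final Pattern VERSION =
            Pattern.compile("\"number\"\\s*:\\s*\"(\\d+)\\.(\\d+)\\.(\\d+)");

    static int majorVersion(String rootResponseJson) {
        Matcher m = VERSION.matcher(rootResponseJson);
        if (!m.find()) {
            throw new IllegalArgumentException("no version.number field found");
        }
        return Integer.parseInt(m.group(1));
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:9200")).GET().build();
        String body = client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        int major = majorVersion(body);
        System.out.println("ES major version: " + major
                + (major >= 7 ? " (7.x or later)" : " (older than 7.x)"));
    }
}
```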

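- This article uses the es7 distribution of SkyWalking 8.7.0, so ES 7.0 or later is recommended. The ES version used here is 7.8.1;

- Edit config/application.yml. Set `selector` under the `storage` node to `elasticsearch7`, and configure the ES address under the `elasticsearch7` node;

- Note that the configuration file contains both an `elasticsearch` node and an `elasticsearch7` node; do not confuse them;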

```yaml
storage:
  selector: elasticsearch7
  elasticsearch7:
    nameSpace: ${SW_NAMESPACE:"docker-cluster"}
    # ES address
    clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:127.0.0.1:9200}
    protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
    connectTimeout: ${SW_STORAGE_ES_CONNECT_TIMEOUT:500}
    socketTimeout: ${SW_STORAGE_ES_SOCKET_TIMEOUT:30000}
    trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}
    trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}
    dayStep: ${SW_STORAGE_DAY_STEP:1} # Represents the number of days in one minute/hour/day index.
    indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes
    indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes
    # Super datasets, such as trace segments, are defined in the code. The following 3 settings improve ES performance when storing very large datasets in ES.
    superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represents the number of days in the super-size dataset record index; when the value is less than 0, it is the same as dayStep
    superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # Provides more shards for the super dataset: shards number = indexShardsNumber * superDatasetIndexShardsFactor. This factor also affects Zipkin and Jaeger traces.
    superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represents the replicas number in the super-size dataset record index; the default value is 0.
    indexTemplateOrder: ${SW_STORAGE_ES_INDEX_TEMPLATE_ORDER:0} # The order of the index template
    user: ${SW_ES_USER:""}
    password: ${SW_ES_PASSWORD:""}
    secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in properties format containing the username and password, managed by a 3rd-party tool.
    bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests
    # Flush the bulk every flushInterval seconds (15 by default), whatever the number of requests.
    # INT(flushInterval * 2/3) is used as the index refresh period.
    flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}
    concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # The number of concurrent requests
    resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
    metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
    segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
    profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
    oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # The OAP analyzer.
    oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # The OAP log analyzer. It can be customized through the ES analyzer configuration to support more log languages, such as Chinese or Japanese logs.
    advanced: ${SW_STORAGE_ES_ADVANCED:""}
```
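Several of these settings are derived from one another, as the inline comments describe. The arithmetic for the default values is sketched below. The formulas are taken from those comments, and `EsStorageSettings` is a hypothetical helper, not SkyWalking code.

```java
// Hypothetical helper reproducing the formulas stated in the storage.elasticsearch7 comments.
final class EsStorageSettings {
    // shards number = indexShardsNumber * superDatasetIndexShardsFactor
    static int superDatasetShards(int indexShardsNumber, int superDatasetIndexShardsFactor) {
        return indexShardsNumber * superDatasetIndexShardsFactor;
    }

    // A superDatasetDayStep below 0 falls back to dayStep.
    static int effectiveSuperDatasetDayStep(int superDatasetDayStep, int dayStep) {
        return superDatasetDayStep < 0 ? dayStep : superDatasetDayStep;
    }

    // INT(flushInterval * 2/3), computed with integer arithmetic.
    static int indexRefreshSeconds(int flushInterval) {
        return flushInterval * 2 / 3;
    }

    public static void main(String[] args) {
        // Defaults from the file: indexShardsNumber 1, factor 5, superDatasetDayStep -1, dayStep 1, flushInterval 15
        System.out.println("super dataset shards: " + superDatasetShards(1, 5));
        System.out.println("super dataset dayStep: " + effectiveSuperDatasetDayStep(-1, 1));
        System.out.println("index refresh period (s): " + indexRefreshSeconds(15));
    }
}
```

With the defaults shown, this gives 5 shards for super datasets such as trace segments, a super-dataset day step of 1, and an index refresh period of 10 seconds.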

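- After modifying the configuration file and restarting SkyWalking, you will see data in the web console. After refreshing the application, its logs also appear in the log view;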

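Defining the Log Configuration

logback-spring.xml. The SkyWalking layout and appender classes it references come from the `org.apache.skywalking:apm-toolkit-logback-1.x` dependency, which must be on the application's classpath: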

```xml
<configuration scan="true" scanPeriod="5 seconds">
    <property name="logPath" value="logs"/>

    <!-- Console output; %tid is replaced with the SkyWalking trace ID -->
    <appender name="console_log" class="ch.qos.logback.core.ConsoleAppender">
        <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
            <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.TraceIdPatternLogbackLayout">
                <Pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%tid] [%thread] %-5level %logger{36} -%msg%n</Pattern>
            </layout>
        </encoder>
    </appender>

    <!-- Asynchronous wrapper around console_log; with neverBlock=true, events are dropped instead of blocking when the queue is full -->
    <appender name="ASYNC" class="ch.qos.logback.classic.AsyncAppender">
        <discardingThreshold>0</discardingThreshold>
        <queueSize>1024</queueSize>
        <neverBlock>true</neverBlock>
        <appender-ref ref="console_log"/>
    </appender>

    <!-- Reports logs to the SkyWalking OAP over gRPC (through the SkyWalking agent) -->
    <appender name="grpc-log" class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.log.GRPCLogClientAppender">
        <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
            <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.mdc.TraceIdMDCPatternLogbackLayout">
                <Pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{tid}] [%thread] %-5level %logger{36} -%msg%n</Pattern>
            </layout>
        </encoder>
    </appender>

    <!-- Everything except ERROR goes to blog-gateway.log, rolled daily -->
    <appender name="fileDEBUGLog" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>DENY</onMatch>
            <onMismatch>ACCEPT</onMismatch>
        </filter>
        <File>${logPath}/blog-gateway.log</File>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <FileNamePattern>${logPath}/blog-gateway_%d{yyyy-MM-dd}.log</FileNamePattern>
            <maxHistory>90</maxHistory>
            <totalSizeCap>1GB</totalSizeCap>
        </rollingPolicy>
        <encoder>
            <charset>UTF-8</charset>
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{tid}] [%thread] %-5level %logger{36} -%msg%n</pattern>
        </encoder>
    </appender>

    <!-- ERROR entries go to blog-gateway_error.log, rolled daily -->
    <appender name="fileErrorLog" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>ERROR</level>
        </filter>
        <File>${logPath}/blog-gateway_error.log</File>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <FileNamePattern>${logPath}/blog-gateway_error_%d{yyyy-MM-dd}.log</FileNamePattern>
            <maxHistory>90</maxHistory>
            <totalSizeCap>1GB</totalSizeCap>
        </rollingPolicy>
        <encoder>
            <charset>UTF-8</charset>
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{tid}] [%thread] %-5level %logger{36} -%msg%n</pattern>
        </encoder>
    </appender>

    <root level="INFO">
        <appender-ref ref="ASYNC"/>
        <appender-ref ref="grpc-log"/>
        <appender-ref ref="fileDEBUGLog"/>
        <appender-ref ref="fileErrorLog"/>
    </root>
</configuration>
```