import numpy as np

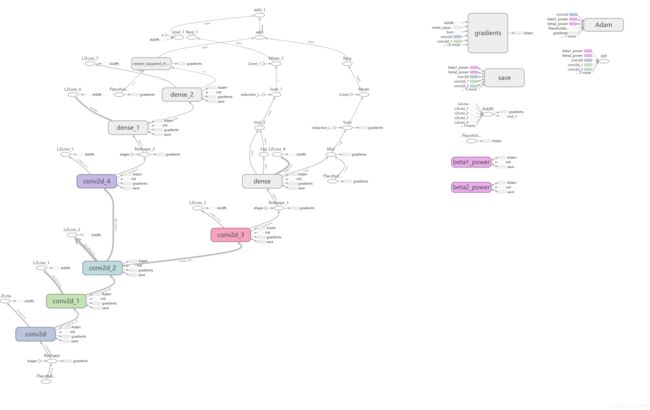

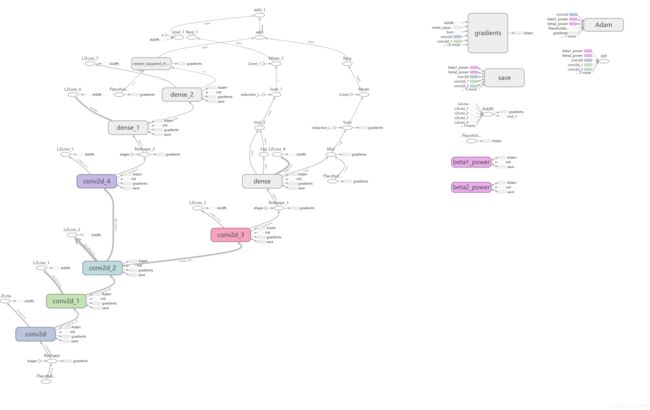

import tensorflow as tf

from game import Board, Game

from policy_value_net_tensorflow import PolicyValueNet # Tensorflow

n = 5

width, height = 8, 8

model_file = 'current_policy.model'

board = Board(width=width, height=height, n_in_row=n)

game = Game(board)

board.init_board(0)

input_states = tf.placeholder(

tf.float32, shape=[None, 4, height, width])

input_states_reshaped = tf.reshape(

input_states, [-1, height, width, 4])

# 2. Common Networks Layers

conv1 = tf.layers.conv2d(inputs=input_states_reshaped,

filters=32, kernel_size=[3, 3],

padding="same", activation=tf.nn.relu)

conv2 = tf.layers.conv2d(inputs=conv1, filters=64,

kernel_size=[3, 3], padding="same",

activation=tf.nn.relu)

conv3 = tf.layers.conv2d(inputs=conv2, filters=128,

kernel_size=[3, 3], padding="same",

activation=tf.nn.relu)

# 3-1 Action Networks

action_conv = tf.layers.conv2d(inputs=conv3, filters=4,

kernel_size=[1, 1], padding="same",

activation=tf.nn.relu)

# Flatten the tensor

action_conv_flat = tf.reshape(

action_conv, [-1, 4 * height * width])

# 3-2 Full connected layer, the output is the log probability of moves

# on each slot on the board

action_fc = tf.layers.dense(inputs=action_conv_flat,

units=height * width,

activation=tf.nn.log_softmax)

# 4 Evaluation Networks

evaluation_conv = tf.layers.conv2d(inputs=conv3, filters=2,

kernel_size=[1, 1],

padding="same",

activation=tf.nn.relu)

evaluation_conv_flat = tf.reshape(

evaluation_conv, [-1, 2 * height * width])

evaluation_fc1 = tf.layers.dense(inputs=evaluation_conv_flat,

units=64, activation=tf.nn.relu)

# output the score of evaluation on current state

evaluation_fc2 = tf.layers.dense(inputs=evaluation_fc1,

units=1, activation=tf.nn.tanh)

# Define the Loss function

# 1. Label: the array containing if the game wins or not for each state

labels = tf.placeholder(tf.float32, shape=[None, 1])

# 2. Predictions: the array containing the evaluation score of each state

# which is evaluation_fc2

# 3-1. Value Loss function

value_loss = tf.losses.mean_squared_error(labels,

evaluation_fc2)

# 3-2. Policy Loss function

mcts_probs = tf.placeholder(

tf.float32, shape=[None, height * width])

policy_loss = tf.negative(tf.reduce_mean(

tf.reduce_sum(tf.multiply(mcts_probs, action_fc), 1)))

# 3-3. L2 penalty (regularization)

l2_penalty_beta = 1e-4

vars = tf.trainable_variables()

l2_penalty = l2_penalty_beta * tf.add_n(

[tf.nn.l2_loss(v) for v in vars if 'bias' not in v.name.lower()])

# 3-4 Add up to be the Loss function

loss = value_loss + policy_loss + l2_penalty

# Define the optimizer we use for training

learning_rate = tf.placeholder(tf.float32)

optimizer = tf.train.AdamOptimizer(

learning_rate=learning_rate).minimize(loss)

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

# Make a session

session = tf.Session(config=config)

# calc policy entropy, for monitoring only

entropy = tf.negative(tf.reduce_mean(

tf.reduce_sum(tf.exp(action_fc) * action_fc, 1)))

# Initialize variables

init = tf.global_variables_initializer()

session.run(init)

# For saving and restoring

saver = tf.train.Saver()

# log_act_probs, value = session.run(

# [action_fc, evaluation_fc2],

# feed_dict={input_states: np.ascontiguousarray(board.current_state().reshape(-1, 4, 8,8))}

# )

# print(log_act_probs)

saver.restore(session, model_file)

state_batch=np.ascontiguousarray(board.current_state().reshape(-1, 4, width, height ))

log_act_probs, value = session.run(

[action_fc, evaluation_fc2],

feed_dict={input_states: state_batch}

)

act_probs = np.exp(log_act_probs)

print(act_probs)

winner_batch = np.reshape([1], (-1, 1))

loss, entropy, _ = session.run(

[loss, entropy, optimizer],

feed_dict={input_states: state_batch,

mcts_probs: act_probs,

labels: winner_batch,

learning_rate: 2e-3})

print(loss,entropy)

# saver.save(session, model_path_my)

log_act_probs, value = session.run(

[action_fc, evaluation_fc2],

feed_dict={input_states: state_batch}

)

act_probs = np.exp(log_act_probs)

print(act_probs)

# tf.summary.FileWriter("./logs", session.graph)