A Rigorous & Readable Review on RNNs

This post introduces a new Critical Review of Recurrent Neural Networks for Sequence Learning.

Twelve nights back, while up late preparing pretty pictures for a review of Recurrent Neural Networks for Sequence Learning, I figured I should share my Google art with the world. After all, RNNs are poorly understood by most people outside the subfield, even within the machine learning community.

Since I hit "post" around 2 AM, we've had great feedback, and the article quickly became one of this blog's most popular pieces. However, once the article hit Reddit, the response was far from universal praise. Some readers were upset that the portion devoted to the LSTM itself was too short. Others noted that it had the misfortune of appearing on the same day as Andrej Karpathy's terrific and more thorough tutorial.

One courteous Redditor even graciously noted a typo in the title that somehow made it through my bleary-eyed typing: the word Demistifying should have been Demystifying. It seems this Redditor has never lived in the Bay Area or San Diego, where mist and not myst is the true obstacle to perceiving the world clearly.

Jokes aside, since the community appears to demand a more thorough review of Recurrent Nets, I've posted my full 33-page review on my personal site and on the arXiv.

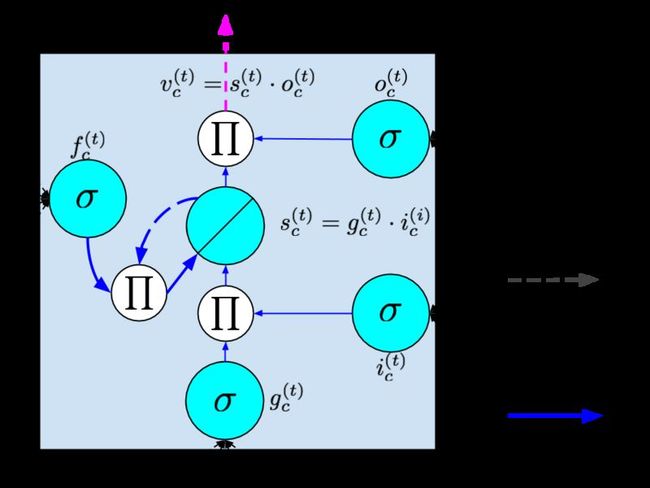

The review covers high-level arguments, exact update equations, backpropagation, LSTMs, and bidirectional RNNs (BRNNs), as well as the history dating back to Hopfield nets, Elman nets, and Jordan nets. It explores evaluation methodology for sequence learning tasks and surveys the slew of amazing recent papers on novel applications by leading researchers in the field, including Jürgen Schmidhuber, Yoshua Bengio, Geoff Hinton, Ilya Sutskever, Alex Graves, Andrej Karpathy, Trevor Darrell, Quoc Le, Oriol Vinyals, Wojciech Zaremba, and many more. It also offers a high-level introduction to the growing body of work characterizing the optimization problems involved in training these networks.

Why I've written this review

I started off trying to understand the deep literature on LSTMs. This work can be difficult to access at first. Different papers use slightly different calculations, and notation varies tremendously from paper to paper. This variability is compounded by the fact that so many papers seem to require familiarity with the rest of the literature. The best reviews I found were Alex Graves' excellent book on sequence labeling and Felix Gers' thorough thesis. However, the former is very specific in scope and the latter is over a decade old.

My goal in composing this document is to provide a critical but gentle overview of the following important elements: motivation, historical perspective, modern models, the optimization problem, training algorithms, and important applications.

For the moment, this is an evolving document, and the highest priority is clarity. To that end, any feedback about omitted details, glaring typos, or papers that readers might find valuable is welcome.