n9e hands-on, plus testing and verification of some high-availability clusters

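1. Docker environment and docker-compose

Reference 1

Reference 2

Reference 3

Download the Docker static binaries (offline installation on an internal network)

Download URL of the Docker package for Linux: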

wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.9.tgz
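The download happens on a machine with internet access and the install happens on the offline host, so it is easy to mix up versions. The install transcript further down extracts docker-19.03.9.tgz, although 20.10.9 is downloaded here. The sketch below builds the URL from the version. It assumes the `static/stable/<arch>/docker-<version>.tgz` layout of the wget command above, and `docker_static_url` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the static-binary download URL used above.
# $1 = Docker version (e.g. 20.10.9), $2 = architecture (defaults to `uname -m`).
docker_static_url() {
  local version="$1"
  local arch="${2:-$(uname -m)}"
  printf 'https://download.docker.com/linux/static/stable/%s/docker-%s.tgz\n' \
    "$arch" "$version"
}

# Usage (on a machine with internet access):
#   wget "$(docker_static_url 20.10.9)"
#   tar zxvf docker-20.10.9.tgz   # later, extract the same version you downloaded
```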

Create the docker group

groupadd docker

Create the user dock and add it to the docker group

useradd -m -d /data/dock dock

gpasswd -a dock docker

Edit the unit files managed by systemd

/usr/lib/systemd/system/docker.service

[root@c78-mini-template system]# cat docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# --graph is deprecated; --data-root sets the same data directory

ExecStart=/usr/bin/dockerd --data-root /data/dockerdata

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

[root@c78-mini-template system]# cat docker.socket

[Unit]

Description=Docker Socket for the API

[Socket]

# If /var/run is not implemented as a symlink to /run, you may need to

# specify ListenStream=/var/run/docker.sock instead.

ListenStream=/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target
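You can also move the data directory by setting it in `/etc/docker/daemon.json` instead of changing `ExecStart`. The sketch below assumes `/data/dockerdata`, as in the unit above, and `write_daemon_json` is a hypothetical helper name. Do not set the option both on the command line and in `daemon.json`: dockerd refuses to start when a directive is given both as a flag and in the configuration file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that moves Docker's data directory.
# $1 = target file (normally /etc/docker/daemon.json), $2 = data directory.
write_daemon_json() {
  local target="$1" data_root="$2"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "data-root": "${data_root}"
}
EOF
}

# Usage (as root), then restart the daemon:
#   write_daemon_json /etc/docker/daemon.json /data/dockerdata
#   systemctl restart docker
#   docker info --format '{{.DockerRootDir}}'   # should print /data/dockerdata
```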

Version that passed testing:

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

# restart the docker process if it exits prematurely

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

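Install Docker (the transcript below extracts docker-19.03.9.tgz; use the file name of the version you actually downloaded)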

[root@c78-mini-template dock]# tar zxvf docker-19.03.9.tgz

[root@c78-mini-template dock]# cp docker/* /usr/bin

Start the service and enable it at boot

[root@c78-mini-template dock]# systemctl start docker

[root@c78-mini-template dock]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /etc/systemd/system/docker.service.

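Disable SELinux (the config file change takes effect after a reboot; setenforce 0 switches to permissive mode immediately)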

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config
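The `sed` above rewrites any line that contains both `SELINUX` and `enforcing`. A slightly stricter approach anchors on the `SELINUX=` key and then reads the value back. The function names below are hypothetical, and the file path is a parameter so you can try the functions on a copy first:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for /etc/selinux/config.
# Set SELINUX=<mode> on the uncommented key line only (SELINUXTYPE= is untouched).
set_selinux_mode() {
  local file="$1" mode="$2"
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Print the configured mode (what will apply after the next reboot).
selinux_config_mode() {
  sed -n 's/^SELINUX=//p' "$1"
}

# Usage (as root):
#   set_selinux_mode /etc/selinux/config disabled
#   selinux_config_mode /etc/selinux/config   # -> disabled
#   setenforce 0                              # permissive until the reboot
```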

Run an image as the dock user

[root@c78-mini-template dock]# su - dock # a non-root user works too, since dock is in the docker group

Last login: Wed Jul 15 00:13:34 CST 2020 on pts/0

[dock@c78-mini-template ~]$ docker run hello-world

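Importing images on the internal network (offline image installation)

Import/export commands

The commands involved are export, import, save and load.

save command

docker save [options] images [images…]

Example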

docker save -o nginx.tar nginx:latest

or

docker save > nginx.tar nginx:latest

Here -o and > write the output to a file: nginx.tar is the target file and nginx:latest is the source image (name:tag).

load command

docker load [options]

Example

docker load -i nginx.tar

or

docker load < nginx.tar

Here -i and < read the input from a file. The image is imported together with its metadata, including its tags.

export command

docker export [options] container

Example

docker export -o nginx-test.tar nginx-test

Here -o writes the output to a file: nginx-test.tar is the target file and nginx-test is the source container (name).

import command

docker import [options] file|URL|- [REPOSITORY[:TAG]]

Example

docker import nginx-test.tar nginx:imp

or

cat nginx-test.tar | docker import - nginx:imp

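Differences

The tar file produced by export is slightly smaller than the one produced by save.

export exports a tar file from a container, whereas save exports it from an image.

Because of the second point, a file created with export loses the image history (the individual layers; see the Dockerfile documentation if this is unfamiliar) when it is imported back with import, so you cannot roll back to an earlier layer. save works from the image, so every layer is preserved on load. In the figure below, nginx:latest was exported with save and imported with load, while nginx:imp was exported with export and imported with import.

Choose the command according to the scenario:

To back up images only, use save and load.

To back up a container whose contents changed after it was started, use export and import.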

Installing Docker Compose

Download the binary and install it directly

curl -L https://get.daocloud.io/docker/compose/releases/download/v2.4.1/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

Make the binary executable:

$ sudo chmod +x /usr/local/bin/docker-compose

Create a symlink:

$ sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

Check that the installation works:

$ docker-compose version

docker-compose version 2.4.1, build 4667896b
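The `uname -s` in the curl command above expands to `Linux`. The Compose v2 release assets on GitHub use a lower-case OS name, for example `docker-compose-linux-x86_64`, and the daocloud mirror may behave differently. The sketch below lower-cases the OS name. The helper name and the choice of the GitHub URL are assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: Compose v2 download URL with a lower-case OS name.
# $1 = release tag (e.g. v2.4.1); $2/$3 default to `uname -s` / `uname -m`.
compose_url() {
  local tag="$1"
  local os="${2:-$(uname -s)}" arch="${3:-$(uname -m)}"
  os=$(printf '%s' "$os" | tr '[:upper:]' '[:lower:]')
  printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
    "$tag" "$os" "$arch"
}

# Usage: -f makes curl fail on HTTP errors instead of saving an error page as the binary.
#   curl -fL "$(compose_url v2.4.1)" -o /usr/local/bin/docker-compose
```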

2. DNS service, to make high-availability failover tests easier

Configured with docker-compose.yml

[userapp@lqz-test DNS]$ pwd

/data/docker/DNS

[userapp@lqz-test DNS]$ ls -l

total 4

drwxr-xr-x 3 root root 26 Sep 17 11:16 dns

-rw-rw-r-- 1 userapp userapp 501 Sep 17 11:16 docker-compose.yml

[userapp@lqz-test DNS]$

[userapp@lqz-test DNS]$ cat docker-compose.yml

version: '3.7'
networks:
  net_dns:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.10.0.1/16
services:
  dns-server:
    container_name: dns-server
    image: 'jpillora/dnsmasq'
    restart: always
    networks:
      net_dns:
        ipv4_address: 172.10.0.100
    environment:
      - TZ=Asia/Shanghai
      - HTTP_USER=foo
      - HTTP_PASS=bar
    ports:
      - 53:53/udp
      - 5380:8080
    volumes:
      - ./dns/dnsmasq.conf:/etc/dnsmasq.conf:rw

Add the container's configuration file (mounted as /etc/dnsmasq.conf)

[userapp@lqz-test DNS]$ cat dns/dnsmasq.conf

#dnsmasq config, for a complete example, see:

# http://oss.segetech.com/intra/srv/dnsmasq.conf

#log all dns queries

log-queries

#dont use hosts nameservers

no-resolv

#use cloudflare as default nameservers, prefer 1^4

server=1.0.0.1

server=1.1.1.1

strict-order

#serve all .company queries using a specific nameserver

server=/company/10.0.0.1

#explicitly define host-ip mappings

address=/myhost.company/10.0.0.2

address=/master.redis/172.19.0.10
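For failover tests it helps to repoint a name such as `master.redis` at whichever node is currently the master. The sketch below rewrites the matching `address=` line in the mounted `./dns/dnsmasq.conf`, or appends one if none exists. The function name is hypothetical. dnsmasq only rereads this file when it restarts, for example with `docker restart dns-server`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point <name> at <ip> in a dnsmasq config file.
# An existing "address=/<name>/..." line is replaced; otherwise one is appended.
set_dns_record() {
  local file="$1" name="$2" ip="$3" tmp
  tmp=$(mktemp)
  # Keep every line except the old record for this exact name, then add the new one.
  grep -vF "address=/${name}/" "$file" > "$tmp" || true
  printf 'address=/%s/%s\n' "$name" "$ip" >> "$tmp"
  # Write back in place (same inode), which keeps a single-file bind mount valid.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage, after another node has become the master:
#   set_dns_record ./dns/dnsmasq.conf master.redis <new-master-ip>
#   docker restart dns-server
```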

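Start the container

[userapp@lqz-test DNS]$ docker-compose up -d

Point the host's DNS resolution at the container

Add nameserver 127.0.0.1 as the first entry, so it is queried before the others: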

[userapp@lqz-test DNS]$ cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 127.0.0.1

nameserver 192.168.0.1

nameserver 2409:8008:2000::1

nameserver 2409:8008:2000:100::1

Once the DNS container is up, log in to http://192.168.0.130:5380/

Default user/password: foo/bar

Add a resolution entry for testing

Test

[userapp@lqz-test redis_sentinel]$ ping master.redis

PING master.redis (172.19.0.10) 56(84) bytes of data.

64 bytes from 172.19.0.10 (172.19.0.10): icmp_seq=1 ttl=64 time=0.062 ms

64 bytes from 172.19.0.10 (172.19.0.10): icmp_seq=2 ttl=64 time=0.033 ms

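Background on the Docker resources used

Reference 1

Reference 2

Reference 3 - dnsmasq

*** Test network planning ***

For these tests I did not plan a cluster-wide network: each component is deployed in its own subnet. In practice this can be arranged flexibly, and components that depend heavily on each other can simply share one subnet. My knowledge of Kubernetes is still limited, so I can't answer questions about it.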
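Each component gets its own bridge subnet: 172.10.0.1/16 for DNS, and 172.19.0.1/16, 172.20.0.1/16 and 172.21.0.1/16 for the Redis setups below. It is worth checking that these ranges do not overlap before running `docker-compose up`. The sketch below does this for IPv4 CIDRs in plain shell arithmetic. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: detect overlapping IPv4 CIDR ranges.
ip_to_int() {
  local IFS=.
  set -- $1                      # split a.b.c.d into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print "<network> <broadcast>" as integers; host bits in the input are ignored,
# so 172.19.0.1/16 is treated as 172.19.0.0/16.
cidr_range() {
  local ip="${1%/*}" bits="${1#*/}" n mask
  n=$(ip_to_int "$ip")
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo $(( n & mask )) $(( (n & mask) | (~mask & 0xFFFFFFFF) ))
}

# Exit status 0 if the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = first range, $3 $4 = second
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Usage:
#   cidrs_overlap 172.19.0.1/16 172.20.0.1/16 || echo "no overlap"
```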

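3. Install Redis, the component to be monitored in the tests

Redis is deployed with containers here.

For details on the docker-compose network configuration, see

Reference 1

Reference 2

For an explanation of the Redis cluster architectures built here, see

Redis high-availability clusters

Redis commands

Reference

Official manual

3.1 Redis: one master, two replicas

Create the persistence directories yourself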

[userapp@lqz-test redis_master_slave]$ pwd

/data/docker/redis/redis_master_slave

[userapp@lqz-test redis_master_slave]$ ls -l

total 4

-rw-rw-r-- 1 userapp userapp 1331 Sep 16 22:05 docker-compose.yaml

drwxrwxr-x 3 userapp userapp 36 Sep 16 22:05 master

drwxrwxr-x 3 userapp userapp 36 Sep 16 22:03 slave11

drwxrwxr-x 3 userapp userapp 36 Sep 16 22:03 slave12

[userapp@lqz-test redis_master_slave]$

Create the configuration files with the following contents

[userapp@lqz-test redis_master_slave]$ cat master/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

[userapp@lqz-test redis_master_slave]$

[userapp@lqz-test redis_master_slave]$ cat slave11/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

slaveof 172.19.0.10 6379

masterauth 12345678

[userapp@lqz-test redis_master_slave]$

[userapp@lqz-test redis_master_slave]$ cat slave12/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

slaveof 172.19.0.10 6379

masterauth 12345678

[userapp@lqz-test redis_master_slave]$
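The three `redis.conf` files differ only in the two extra lines on the replicas, so you can generate them from one base instead of editing them by hand. The function name below is hypothetical, and the password and master IP are the test values used above. The comment sits on its own line because redis.conf does not treat `#` in the middle of a directive line as a comment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write master/ and slaveNN/ redis.conf files for the
# one-master-two-replica layout above. $1 = base dir, $2 = master IP, $3 = password.
gen_redis_confs() {
  local base="$1" master_ip="$2" pass="$3" dir
  for dir in master slave11 slave12; do
    mkdir -p "$base/$dir/data"
    cat > "$base/$dir/redis.conf" <<EOF
bind 0.0.0.0
protected-mode no
port 6379
timeout 0
# RDB snapshot if >=1 write in 900 s, >=10 in 300 s, >=10000 in 60 s
save 900 1
save 300 10
save 60 10000
rdbcompression yes
dbfilename dump.rdb
dir /data
appendonly yes
appendfsync everysec
requirepass ${pass}
EOF
    # Only the replicas point at the master.
    if [ "$dir" != master ]; then
      printf 'slaveof %s 6379\nmasterauth %s\n' "$master_ip" "$pass" >> "$base/$dir/redis.conf"
    fi
  done
}

# Usage, from /data/docker/redis/redis_master_slave:
#   gen_redis_confs . 172.19.0.10 12345678
```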

Create the containers with docker-compose

[userapp@lqz-test redis_master_slave]$ docker-compose up -d

[+] Running 3/3

⠿ Container redis_slave1 Started 0.4s

⠿ Container redis_slave2 Started 0.3s

⠿ Container redis_master Started 0.5s

[userapp@lqz-test redis_master_slave]$

The contents of docker-compose.yaml:

version: "3.7"
networks:
  net_redis_master_slave:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.19.0.1/16
services:
  redis-master:
    image: redis:latest
    container_name: redis_master
    restart: always
    ports:
      - 16379:6379
    networks:
      net_redis_master_slave:
        ipv4_address: 172.19.0.10
    volumes:
      - ./master/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./master/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
  redis-slave11:
    image: redis:latest
    container_name: redis_slave1
    restart: always
    ports:
      - 16380:6379
    networks:
      net_redis_master_slave:
        ipv4_address: 172.19.0.11
    volumes:
      - ./slave11/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./slave11/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
  redis-slave12:
    image: redis:latest
    container_name: redis_slave2
    restart: always
    ports:
      - 16381:6379
    networks:
      net_redis_master_slave:
        ipv4_address: 172.19.0.12
    volumes:
      - ./slave12/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./slave12/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"

The log output below shows that replication was set up successfully

[userapp@lqz-test redis_master_slave]$ docker logs redis_master | tail

1:M 16 Sep 2022 14:09:44.798 * Background saving terminated with success

1:M 16 Sep 2022 14:09:44.798 * Synchronization with replica 172.19.0.12:6379 succeeded

1:M 16 Sep 2022 14:09:44.881 * Replica 172.19.0.11:6379 asks for synchronization

1:M 16 Sep 2022 14:09:44.881 * Full resync requested by replica 172.19.0.11:6379

1:M 16 Sep 2022 14:09:44.881 * Starting BGSAVE for SYNC with target: disk

1:M 16 Sep 2022 14:09:44.883 * Background saving started by pid 13

13:C 16 Sep 2022 14:09:44.886 * DB saved on disk

13:C 16 Sep 2022 14:09:44.888 * RDB: 2 MB of memory used by copy-on-write

1:M 16 Sep 2022 14:09:44.899 * Background saving terminated with success

1:M 16 Sep 2022 14:09:44.899 * Synchronization with replica 172.19.0.11:6379 succeeded

[userapp@lqz-test redis_master_slave]$

[userapp@lqz-test redis_master_slave]$ docker logs redis_slave1 | tail

1:S 16 Sep 2022 14:09:44.900 * MASTER <-> REPLICA sync: Finished with success

1:S 16 Sep 2022 14:09:44.900 * Background append only file rewriting started by pid 12

1:S 16 Sep 2022 14:09:44.936 * AOF rewrite child asks to stop sending diffs.

12:C 16 Sep 2022 14:09:44.936 * Parent agreed to stop sending diffs. Finalizing AOF...

12:C 16 Sep 2022 14:09:44.936 * Concatenating 0.00 MB of AOF diff received from parent.

12:C 16 Sep 2022 14:09:44.936 * SYNC append only file rewrite performed

12:C 16 Sep 2022 14:09:44.936 * AOF rewrite: 4 MB of memory used by copy-on-write

1:S 16 Sep 2022 14:09:44.980 * Background AOF rewrite terminated with success

1:S 16 Sep 2022 14:09:44.980 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

1:S 16 Sep 2022 14:09:44.980 * Background AOF rewrite finished successfully

[userapp@lqz-test redis_master_slave]$

[userapp@lqz-test redis_master_slave]$ docker logs redis_slave2 | tail

1:S 16 Sep 2022 14:09:44.799 * MASTER <-> REPLICA sync: Finished with success

1:S 16 Sep 2022 14:09:44.800 * Background append only file rewriting started by pid 12

1:S 16 Sep 2022 14:09:44.838 * AOF rewrite child asks to stop sending diffs.

12:C 16 Sep 2022 14:09:44.838 * Parent agreed to stop sending diffs. Finalizing AOF...

12:C 16 Sep 2022 14:09:44.838 * Concatenating 0.00 MB of AOF diff received from parent.

12:C 16 Sep 2022 14:09:44.838 * SYNC append only file rewrite performed

12:C 16 Sep 2022 14:09:44.840 * AOF rewrite: 6 MB of memory used by copy-on-write

1:S 16 Sep 2022 14:09:44.927 * Background AOF rewrite terminated with success

1:S 16 Sep 2022 14:09:44.928 * Residual parent diff successfully flushed to the rewritten AOF (0.00 MB)

1:S 16 Sep 2022 14:09:44.928 * Background AOF rewrite finished successfully

[userapp@lqz-test redis_master_slave]$
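Instead of reading the log tails by eye, you can check the master log for one `Synchronization with replica ... succeeded` line per replica. The function name below is hypothetical, and the message text is taken from the log above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a Redis master log on stdin and print the number of
# distinct replicas that completed a full synchronization.
count_synced_replicas() {
  grep -o 'Synchronization with replica [0-9.:]* succeeded' \
    | awk '{print $4}' | sort -u | wc -l | tr -d ' '
}

# Usage:
#   docker logs redis_master 2>&1 | count_synced_replicas   # 2 replicas in this setup
```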

For a step-by-step walkthrough, see

Reference

Quick check of the one-master-two-replica setup

Write/read on the master

[userapp@lqz-test redis_master_slave]$ docker exec -it redis_master /bin/sh

# redis-cli -c

127.0.0.1:6379> auth 12345678

OK

127.0.0.1:6379> set name Stephen

OK

127.0.0.1:6379> get name

"Stephen"

127.0.0.1:6379>

Read on a replica

[userapp@lqz-test docker]$ docker exec -it redis_slave1 /bin/sh

# redis-cli -c

127.0.0.1:6379> auth 12345678

OK

127.0.0.1:6379> get name

"Stephen"

127.0.0.1:6379>

The configuration works.

3.2 Redis Sentinel mode

Create the persistence directories yourself

[userapp@lqz-test redis_sentinel]$ pwd

/data/docker/redis/redis_sentinel

[userapp@lqz-test redis_sentinel]$ ls -l

total 4

-rw-rw-r-- 1 userapp userapp 2330 Sep 17 00:12 docker-compose.yml

drwxrwxr-x 3 userapp userapp 36 Sep 16 23:48 master

drwxrwxr-x 2 userapp userapp 93 Sep 17 00:11 sentinel_cfg

drwxrwxr-x 3 userapp userapp 36 Sep 16 23:48 slave11

drwxrwxr-x 3 userapp userapp 36 Sep 16 23:48 slave12

[userapp@lqz-test redis_sentinel]$

Create the Redis configuration files with the following contents

[userapp@lqz-test redis_sentinel]$ cat master/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

# Without masterauth, this node cannot rejoin as a replica after it goes down and restarts

masterauth 12345678

[userapp@lqz-test redis_sentinel]$

[userapp@lqz-test redis_sentinel]$ cat slave11/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

slaveof 172.20.0.10 6379

masterauth 12345678

[userapp@lqz-test redis_sentinel]$

[userapp@lqz-test redis_sentinel]$ cat slave12/redis.conf

bind 0.0.0.0

protected-mode no

port 6379

timeout 0

# Run BGSAVE (RDB persistence) if there was at least 1 write within 900 s

save 900 1

save 300 10

save 60 10000

rdbcompression yes

dbfilename dump.rdb

dir /data

appendonly yes

appendfsync everysec

requirepass 12345678

slaveof 172.20.0.10 6379

masterauth 12345678

Sentinel configuration files

[userapp@lqz-test redis_sentinel]$ cat sentinel1/sentinel1.conf

port 26379

dir /tmp

sentinel monitor my_redis_sentine 172.20.0.10 6379 2

sentinel auth-pass my_redis_sentine 12345678

sentinel down-after-milliseconds my_redis_sentine 30000

sentinel parallel-syncs my_redis_sentine 1

sentinel failover-timeout my_redis_sentine 180000

sentinel deny-scripts-reconfig yes

[userapp@lqz-test redis_sentinel]$

[userapp@lqz-test redis_sentinel]$ cat sentinel2/sentinel2.conf

port 26379

dir /tmp

sentinel monitor my_redis_sentine 172.20.0.10 6379 2

sentinel auth-pass my_redis_sentine 12345678

sentinel down-after-milliseconds my_redis_sentine 30000

sentinel parallel-syncs my_redis_sentine 1

sentinel failover-timeout my_redis_sentine 180000

sentinel deny-scripts-reconfig yes

[userapp@lqz-test redis_sentinel]$

[userapp@lqz-test redis_sentinel]$ cat sentinel3/sentinel3.conf

port 26379

dir /tmp

sentinel monitor my_redis_sentine 172.20.0.10 6379 2

sentinel auth-pass my_redis_sentine 12345678

sentinel down-after-milliseconds my_redis_sentine 30000

sentinel parallel-syncs my_redis_sentine 1

sentinel failover-timeout my_redis_sentine 180000

sentinel deny-scripts-reconfig yes

You can write one file first and then copy it to the other directories:

[userapp@lqz-test sentinel_cfg]$ cat sentinel.conf

port 26379

dir /tmp

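# Custom master name: 172.20.0.10 is the IP of redis-master, 6379 its port, and 2 the quorum (with 3 Sentinels, 2 is appropriate)

sentinel monitor my_redis_sentine 172.20.0.10 6379 2

sentinel auth-pass my_redis_sentine 12345678

# If the master does not reply to this Sentinel within this many milliseconds, the Sentinel considers it subjectively down; the default is 30 seconds

sentinel down-after-milliseconds my_redis_sentine 30000

# Maximum number of replicas that may resynchronize with the new master at the same time during a failover.

# The smaller the number, the longer the failover takes to complete;

# the larger the number, the more replicas are unavailable while they resynchronize.

# Setting it to 1 ensures that only one replica at a time is unable to serve requests.

sentinel parallel-syncs my_redis_sentine 1

# failover-timeout is used in the following ways:

#1. The wait before the same Sentinel retries a failover of the same master (twice this value).

#2. The time allowed from when a replica starts replicating from a wrong master until it is corrected to replicate from the right one.

#3. The time needed to cancel a failover that is in progress.

#4. The maximum time, during a failover, to reconfigure all replicas to point to the new master. After this timeout the replicas are still reconfigured to point to the master, but no longer following the parallel-syncs rule.

# Default: three minutes

sentinel failover-timeout my_redis_sentine 180000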

sentinel failover-timeout my_redis_sentine 180000

sentinel deny-scripts-reconfig yes

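Notes:

① In the sentinel monitor line, my_redis_sentine is a custom name for the monitored master. Clients in other languages that connect through Sentinel must use this name.

② 172.20.0.10 is my container's IP; replace it with your own. Do not use 127.0.0.1: Sentinel hands this address to clients, which would then connect to their own local host. Use the real IP.

③ The value is 2 because I have 3 Sentinels, and 2 votes are more than 50%. With more Sentinels, choose any value above 50%.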
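The quorum only decides when the master is considered objectively down (`+odown`). To actually run the failover, the Sentinel elected as leader also needs votes from a majority of all Sentinels, and never fewer than the quorum. The sketch below shows that arithmetic. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: votes a Sentinel leader needs before it may start a failover.
# $1 = total number of Sentinels, $2 = configured quorum.
# The leader needs a majority of all Sentinels, and never fewer than the quorum.
failover_votes_needed() {
  local sentinels="$1" quorum="$2" majority
  majority=$(( sentinels / 2 + 1 ))
  echo $(( quorum > majority ? quorum : majority ))
}

# Usage: with the 3 Sentinels and quorum 2 used here, 2 votes are needed,
# so the setup survives the loss of one Sentinel but not two.
#   failover_votes_needed 3 2
```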

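Make three copies. Run these from /data/docker/redis/redis_sentinel, so the files end up in the sentinelN directories mounted by docker-compose.yml:

mkdir -p sentinel1 sentinel2 sentinel3

cp sentinel_cfg/sentinel.conf sentinel1/sentinel1.conf

cp sentinel_cfg/sentinel.conf sentinel2/sentinel2.conf

cp sentinel_cfg/sentinel.conf sentinel3/sentinel3.conf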
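Sentinel rewrites its config file at runtime, adding `sentinel myid` and the replicas and Sentinels it discovers. Each instance therefore needs its own copy, and the copies must not share a `myid` (see the common problems below). The sketch below creates the `sentinelN/` directories mounted by the compose file and drops any `sentinel myid` line from the template. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a Sentinel template into sentinel1..N/sentinelK.conf,
# removing any "sentinel myid" line so every instance generates its own ID.
# $1 = template file, $2 = number of Sentinels, $3 = target base directory.
copy_sentinel_confs() {
  local template="$1" count="$2" base="$3" i
  for i in $(seq 1 "$count"); do
    mkdir -p "$base/sentinel$i"
    grep -v '^sentinel myid ' "$template" > "$base/sentinel$i/sentinel$i.conf"
  done
}

# Usage, from /data/docker/redis/redis_sentinel:
#   copy_sentinel_confs sentinel_cfg/sentinel.conf 3 .
```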

Create the containers with docker-compose

[userapp@lqz-test redis_sentinel]$ docker-compose up

[+] Running 7/7

⠿ Network redis_sentinel_net_redis_sentinel Created 0.0s

⠿ Container redis_s_slave1 Created 0.0s

⠿ Container redis_s_slave2 Created 0.0s

⠿ Container redis-sentinel-1 Created 0.0s

⠿ Container redis-sentinel-2 Created 0.0s

⠿ Container redis-sentinel-3 Created 0.1s

⠿ Container redis_s_master Created 0.0s

Attaching to redis-sentinel-1, redis-sentinel-2, redis-sentinel-3, redis_s_master, redis_s_slave1, redis_s_slave2

redis-sentinel-3 | 1:X 16 Sep 2022 16:29:32.833 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis-sentinel-3 | 1:X 16 Sep 2022 16:29:32.833 # Redis version=6.2.6, bits=64, commit=00000000, modified=0, pid=1, just started

.....

If the logs show no errors, everything started normally.

docker-compose.yml contents:

version: "3.7"
networks:
  net_redis_sentinel:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.20.0.1/16
services:
  redis-master:
    image: redis:latest
    container_name: redis_s_master
    restart: always
    ports:
      - 36379:6379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.10
    volumes:
      - ./master/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./master/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
  redis-slave11:
    image: redis:latest
    container_name: redis_s_slave1
    restart: always
    ports:
      - 36380:6379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.11
    volumes:
      - ./slave11/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./slave11/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
  redis-slave12:
    image: redis:latest
    container_name: redis_s_slave2
    restart: always
    ports:
      - 36381:6379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.12
    volumes:
      - ./slave12/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./slave12/data:/data:rw
    command: /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
  sentinel1:
    image: redis
    container_name: redis-sentinel-1
    restart: always
    ports:
      - 26379:26379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.20
    volumes:
      - ./sentinel1:/home/Software/Docker/sentinel:rw
    command: redis-sentinel /home/Software/Docker/sentinel/sentinel1.conf
  sentinel2:
    image: redis
    container_name: redis-sentinel-2
    restart: always
    ports:
      - 26380:26379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.21
    volumes:
      - ./sentinel2:/home/Software/Docker/sentinel:rw
    command: redis-sentinel /home/Software/Docker/sentinel/sentinel2.conf
  sentinel3:
    image: redis
    container_name: redis-sentinel-3
    restart: always
    ports:
      - 26381:26379
    networks:
      net_redis_sentinel:
        ipv4_address: 172.20.0.22
    volumes:
      - ./sentinel3:/home/Software/Docker/sentinel:rw
    command: redis-sentinel /home/Software/Docker/sentinel/sentinel3.conf

Check that the containers started successfully

[userapp@lqz-test redis_sentinel]$ docker ps

812cb738285e redis "docker-entrypoint.s…" 7 minutes ago Up 6 minutes 6379/tcp, 0.0.0.0:26381->26379/tcp, :::26381->26379/tcp redis-sentinel-3

e1dd2bbf113b redis "docker-entrypoint.s…" 7 minutes ago Up 6 minutes 6379/tcp, 0.0.0.0:26380->26379/tcp, :::26380->26379/tcp redis-sentinel-2

d7441d9346e4 redis "docker-entrypoint.s…" 7 minutes ago Up 6 minutes 6379/tcp, 0.0.0.0:26379->26379/tcp, :::26379->26379/tcp redis-sentinel-1

6459eb3e9b43 redis:latest "docker-entrypoint.s…" 13 minutes ago Up 6 minutes 0.0.0.0:36381->6379/tcp, :::36381->6379/tcp redis_s_slave2

01e82f4d7a7f redis:latest "docker-entrypoint.s…" 13 minutes ago Up 6 minutes 0.0.0.0:36379->6379/tcp, :::36379->6379/tcp redis_s_master

2fecec22f57f redis:latest "docker-entrypoint.s…" 13 minutes ago Up 6 minutes 0.0.0.0:36380->6379/tcp, :::36380->6379/tcp redis_s_slave1

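Quick check of Sentinel mode

Check these fields:

num-other-sentinels is 2, so this Sentinel has already detected the two other Sentinels for this master. If you check the logs, you will see the +sentinel events that were generated.

flags is master. If the master were down, the s_down or o_down flag would also appear here.

num-slaves is now 2, so Sentinel has detected the two replicas attached to the master.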

[userapp@lqz-test ~]$ docker exec -it redis-sentinel-1 /bin/sh

# redis-cli -p 26379

127.0.0.1:26379> sentinel master my_redis_sentine

1) "name"

2) "my_redis_sentine"

3) "ip"

4) "172.20.0.10"

5) "port"

6) "6379"

7) "runid"

8) "ef3ab5ee1e613edc84ee002a9647bb62e57788df"

9) "flags"

10) "master"

11) "link-pending-commands"

12) "0"

13) "link-refcount"

14) "1"

15) "last-ping-sent"

16) "0"

17) "last-ok-ping-reply"

18) "135"

19) "last-ping-reply"

20) "135"

21) "down-after-milliseconds"

22) "30000"

23) "info-refresh"

24) "6815"

25) "role-reported"

26) "master"

27) "role-reported-time"

28) "499693"

29) "config-epoch"

30) "0"

31) "num-slaves"

32) "2"

33) "num-other-sentinels"

34) "2"

35) "quorum"

36) "2"

37) "failover-timeout"

38) "180000"

39) "parallel-syncs"

40) "1"

127.0.0.1:26379>
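When `redis-cli` is not attached to a terminal, it prints the reply of `sentinel master` as plain alternating field and value lines, without the `1)` numbering. That makes the checks above scriptable. The function name below is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read field/value lines (as printed by a non-TTY redis-cli
# for "sentinel master <name>") on stdin and print the value of field $1.
sentinel_field() {
  awk -v want="$1" '
    { sub(/\r$/, "") }                      # tolerate CRLF line endings
    NR % 2 == 1 { key = $0; next }          # odd lines are field names
    key == want { print; exit }             # even lines are values
  '
}

# Usage:
#   docker exec redis-sentinel-1 redis-cli -p 26379 sentinel master my_redis_sentine \
#     | sentinel_field num-other-sentinels     # 2 in the output above
```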

Failure simulation

Take the master down

[userapp@lqz-test redis_sentinel]$ docker stop redis_s_master

redis_s_master

[userapp@lqz-test redis_sentinel]$

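The Sentinel logs show the failover: +sdown and +odown for the master, +switch-master to 172.20.0.12, and finally +sdown slave 172.20.0.10:6379 for the stopped former master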

[userapp@lqz-test ~]$ docker logs redis-sentinel-1 |tail

1:X 17 Sep 2022 00:50:07.710 # +new-epoch 1

1:X 17 Sep 2022 00:50:07.710 # +vote-for-leader a3438b63c6cdda1d2a0df780a49bfeb9f87301b1 1

1:X 17 Sep 2022 00:50:07.735 # +sdown master my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:07.788 # +odown master my_redis_sentine 172.20.0.10 6379 #quorum 3/2

1:X 17 Sep 2022 00:50:07.788 # Next failover delay: I will not start a failover before Sat Sep 17 00:56:08 2022

1:X 17 Sep 2022 00:50:08.211 # +config-update-from sentinel a3438b63c6cdda1d2a0df780a49bfeb9f87301b1 172.20.0.22 26379 @ my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:08.211 # +switch-master my_redis_sentine 172.20.0.10 6379 172.20.0.12 6379

1:X 17 Sep 2022 00:50:08.211 * +slave slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:08.211 * +slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:38.215 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

[userapp@lqz-test ~]$

[userapp@lqz-test ~]$ docker logs redis-sentinel-2 |tail

1:X 17 Sep 2022 00:37:48.287 # Sentinel ID is 7f99d0ac48d230393e9c99169b9e4bee16ff7aee

1:X 17 Sep 2022 00:37:48.287 # +monitor master my_redis_sentine 172.20.0.10 6379 quorum 2

1:X 17 Sep 2022 00:50:07.612 # +sdown master my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:07.709 # +new-epoch 1

1:X 17 Sep 2022 00:50:07.710 # +vote-for-leader a3438b63c6cdda1d2a0df780a49bfeb9f87301b1 1

1:X 17 Sep 2022 00:50:08.211 # +config-update-from sentinel a3438b63c6cdda1d2a0df780a49bfeb9f87301b1 172.20.0.22 26379 @ my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:08.211 # +switch-master my_redis_sentine 172.20.0.10 6379 172.20.0.12 6379

1:X 17 Sep 2022 00:50:08.211 * +slave slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:08.211 * +slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:38.216 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

[userapp@lqz-test ~]$

[userapp@lqz-test ~]$ docker logs redis-sentinel-3 |tail

1:X 17 Sep 2022 00:50:08.159 # +failover-state-reconf-slaves master my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:08.211 * +slave-reconf-sent slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:08.818 # -odown master my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:09.188 * +slave-reconf-inprog slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:09.188 * +slave-reconf-done slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:09.265 # +failover-end master my_redis_sentine 172.20.0.10 6379

1:X 17 Sep 2022 00:50:09.265 # +switch-master my_redis_sentine 172.20.0.10 6379 172.20.0.12 6379

1:X 17 Sep 2022 00:50:09.265 * +slave slave 172.20.0.11:6379 172.20.0.11 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:09.265 * +slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:39.297 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379
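The `+switch-master` event carries the master name, the old address and the new address, so you can read the current master from any Sentinel's log. A live Sentinel can also be asked directly with `SENTINEL get-master-addr-by-name my_redis_sentine`. The function name below is hypothetical, and the field layout is the one visible in the logs above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read Sentinel log lines on stdin and print "<ip> <port>"
# of the newest master announced by +switch-master for master name $1.
# Line layout: ... +switch-master <name> <old-ip> <old-port> <new-ip> <new-port>
last_switched_master() {
  awk -v name="$1" '
    {
      for (i = 1; i <= NF; i++)
        if ($i == "+switch-master" && $(i + 1) == name) latest = $(i + 4) " " $(i + 5)
    }
    END { if (latest != "") print latest }
  '
}

# Usage:
#   docker logs redis-sentinel-1 2>&1 | last_switched_master my_redis_sentine
```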

Log in to another Redis node: the master has switched to 172.20.0.12

[userapp@lqz-test ~]$ docker exec -it redis_s_slave1 /bin/sh

# redis-cli -a 12345678

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6379> info replication

# Replication

role:slave

master_host:172.20.0.12

master_port:6379

master_link_status:up

master_last_io_seconds_ago:0

master_sync_in_progress:0

slave_read_repl_offset:222744

slave_repl_offset:222744

slave_priority:100

slave_read_only:1

replica_announced:1

connected_slaves:0

master_failover_state:no-failover

master_replid:2b97547fe34601aaa7d36eddba769168e7051c2d

master_replid2:e28b5c49205504dbb5e138c966629fde43a9432c

master_repl_offset:222744

second_repl_offset:151949

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:222744

127.0.0.1:6379>
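`INFO replication` returns `key:value` lines terminated by CRLF. The sketch below extracts single fields, for example to assert `role` and `master_link_status` after a failover. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "INFO replication" output on stdin and print the
# value of field $1 (CR characters from Redis' CRLF line endings are removed).
info_field() {
  tr -d '\r' | awk -F: -v want="$1" '$1 == want { print substr($0, length(want) + 2); exit }'
}

# Usage (a password on the command line is only acceptable in a test setup):
#   docker exec redis_s_slave1 redis-cli -a 12345678 --no-auth-warning info replication \
#     | info_field master_link_status      # "up" when replication is healthy
```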

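Write some data on the new master (172.20.0.12, the redis_s_slave2 container); redis_s_slave1 is a read-only replica and would reject writes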

127.0.0.1:6379> set test1 123

OK

127.0.0.1:6379> set test2 223

OK

Start the former master again

docker start redis_s_master

The logs show that the Sentinels detect it and turn it into a replica:

+convert-to-slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

[userapp@lqz-test master]$ docker logs redis-sentinel-1 |tail

1:X 17 Sep 2022 01:09:12.020 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:27.192 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:27.238 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:37.244 * +convert-to-slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:23:00.406 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:23:10.479 * +convert-to-slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:31:28.408 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.659 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.712 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:11.700 * +convert-to-slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

[userapp@lqz-test master]$

[userapp@lqz-test master]$ docker logs redis-sentinel-2 |tail

1:X 17 Sep 2022 00:50:38.216 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:56:46.272 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:56:56.265 * +convert-to-slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:11.966 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:28.233 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:28.299 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:23:00.430 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:31:28.338 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.658 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.707 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

[userapp@lqz-test master]$

[userapp@lqz-test master]$ docker logs redis-sentinel-3 |tail

1:X 17 Sep 2022 00:50:09.265 * +slave slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:50:39.297 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 00:56:46.298 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:11.965 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:28.204 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:09:28.251 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:23:00.438 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:31:28.356 # +sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.707 * +reboot slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

1:X 17 Sep 2022 01:32:01.760 # -sdown slave 172.20.0.10:6379 172.20.0.10 6379 @ my_redis_sentine 172.20.0.12 6379

Log in to the container, check the replication info, and read the data written while it was down

[root@lqz-test ~]# docker exec -it redis_s_master /bin/sh

# redis-cli -a 12345678

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6379> info replication

# Replication

role:slave

master_host:172.20.0.12

master_port:6379

master_link_status:up

master_last_io_seconds_ago:0

...

127.0.0.1:6379> get test1

"123"

127.0.0.1:6379> get test2

"223"

127.0.0.1:6379>

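Common problems

Common problems when setting up Sentinel mode

If sentinel.conf is mapped into the container as a single file, Sentinel may not have permission to write to it. The symptom is: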

WARNING: Sentinel was not able to save the new configuration on disk!!!: Device or resource busy.

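Solution: mount a directory instead of a single file.

Everything appears to be running, but after the master is stopped the Sentinels do not elect a new master. A likely cause is that the Sentinels ended up with the same myid after sentinel.conf was rewritten.

Solution: stop the Sentinels, delete the myid line, and start them again; a new myid is generated automatically.

The replica has no permission to open its temporary file. During synchronization the log fills with lines such as Opening the temp file needed for MASTER <-> REPLICA synchronization: Permission denied.

Solution: start it with sudo -u root ./redis-server [config file]

Former master does not sync after it recovers

Unlike with keepalived, a recovered master does not take the master role back; it becomes a replica. Use info in Redis to check the current replication state. If the link to the master is down, you need to investigate.

Scenario

The original master was down for a while and then recovered. It is now in the slave role, and its connection to the new master may fail: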

127.0.0.1:6379> info replication

# Replication

role:slave

master_host:172.20.0.12

master_port:6379

master_link_status:down

master_last_io_seconds_ago:-1

...

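A likely cause: while it was the master, the node had no settings for connecting to a master, such as the masterauth password. Once it is demoted to a replica it cannot authenticate to the new master, so it cannot sync, and INFO replication shows master_link_status:down. Setting masterauth in redis.conf to the master's password fixes it.

Other common reasons a master cannot sync data to its replicas, and what to check:

Network connectivity: the nodes must be able to ping each other over the internal network.

Firewall: disable it or open the required ports (on VMs, disabling the firewall permanently is simplest; on cloud servers, make sure the internal network allows the traffic).

Passwords: use the same password everywhere and make sure no node is missing it.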
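The password checklist above can be automated. The Python sketch below assumes every node is meant to share one password (as recommended above): it parses each node's redis.conf and flags nodes that lack requirepass or masterauth, or that use different values. The function names and the simplified `directive value` parsing are my own assumptions, not a Redis tool.

```python
def parse_conf(text):
    """Parse 'directive value' lines of a redis.conf body; later lines win."""
    conf = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        value = parts[1].strip().strip('"') if len(parts) > 1 else ""
        conf[parts[0].lower()] = value
    return conf


def auth_problems(configs):
    """configs maps node name -> redis.conf text; returns a list of problems."""
    problems = []
    passwords = set()
    for node, text in configs.items():
        conf = parse_conf(text)
        requirepass = conf.get("requirepass")
        masterauth = conf.get("masterauth")
        if requirepass is None:
            problems.append(f"{node}: requirepass is not set")
        if masterauth is None:
            # Without masterauth a demoted master cannot sync from the new master
            problems.append(f"{node}: masterauth is not set")
        passwords.update(p for p in (requirepass, masterauth) if p is not None)
    if len(passwords) > 1:
        problems.append(f"passwords differ between nodes: {sorted(passwords)}")
    return problems
```

Usage idea: read each node's redis.conf into a dict keyed by node name and print `auth_problems(...)`; an empty list means every node has both directives set to the same password.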

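A good step-by-step guide:

Reference

A good explanation of the architecture:

Reference

Other experience:

Reference

3.3 redis - cluster mode

Create the persistence directories and initialize the Redis configuration

Create the configuration template redis-cluster.tmpl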

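# Redis port

port 6379

# Redis access password

requirepass 12345678

# Password used to authenticate to the master node

masterauth 12345678

# Disable protected mode

protected-mode no

# Enable cluster mode

cluster-enabled yes

# Cluster node state file (maintained by Redis itself)

cluster-config-file nodes.conf

# Node timeout in milliseconds

cluster-node-timeout 5000

# IP announced to the cluster (init.sh appends the node number, giving 172.21.0.10 to 172.21.0.18); in host network mode use the host's IP

cluster-announce-ip 172.21.0.1${NODE}

# Ports announced to the cluster (client port and cluster bus port)

cluster-announce-port 6379

cluster-announce-bus-port 16379

# Enable AOF (append only file) persistence

appendonly yes

# fsync the AOF once per second

appendfsync everysec

# Whether to skip fsync of the AOF while a rewrite or RDB save is running (no = keep fsyncing, safer)

no-appendfsync-on-rewrite no

# Rewrite the AOF again once it has grown 100% beyond its size after the last rewrite

auto-aof-rewrite-percentage 100

# Minimum AOF size before a rewrite is triggered (default 64mb)

auto-aof-rewrite-min-size 64mb

# Logging

# debug: very verbose, suitable for development/testing

# verbose: lots of rarely useful information, but less noisy than debug

# notice: moderately verbose, suitable for production

# warning: only very important/critical messages

loglevel notice

# Log file path

logfile "/data/redis.log"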

Script that generates the per-node configuration in bulk (envsubst is provided by the gettext package)

[userapp@lqz-test redis_cluster]$ cat init.sh

for nodeid in $(seq 0 8); do \

mkdir -p ./node-${nodeid}/conf \

&& NODE=${nodeid} envsubst < ./redis-cluster.tmpl > ./node-${nodeid}/conf/redis.conf \

&& mkdir -p ./node-${nodeid}/data; \

done

[userapp@lqz-test redis_cluster]$ sh ./init.sh
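If envsubst is not available on the host, the same per-node generation can be sketched in Python, since `string.Template` understands the same `${NODE}` placeholder. This is a minimal equivalent of init.sh under that assumption; `render_nodes` is a hypothetical helper. One difference: `safe_substitute` leaves unknown `$VARS` untouched, whereas envsubst replaces unset variables with an empty string.

```python
from pathlib import Path
from string import Template


def render_nodes(template_text, base_dir=".", count=9):
    """Create node-N/conf/redis.conf and node-N/data for N in 0..count-1."""
    template = Template(template_text)
    written = []
    for node in range(count):
        node_dir = Path(base_dir) / f"node-{node}"
        (node_dir / "conf").mkdir(parents=True, exist_ok=True)
        (node_dir / "data").mkdir(parents=True, exist_ok=True)
        conf_path = node_dir / "conf" / "redis.conf"
        # ${NODE} -> 0..8, so cluster-announce-ip becomes 172.21.0.10 .. 172.21.0.18
        conf_path.write_text(template.safe_substitute(NODE=node))
        written.append(conf_path)
    return written
```

Run from the redis_cluster directory as `render_nodes(Path("redis-cluster.tmpl").read_text())`.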

Create the containers with docker-compose.yml

version: "3.7"

networks:
  net_redis_cluster:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.21.0.0/16

services:
  redis_c_0:
    image: redis:latest
    container_name: redis_c_0
    restart: always
    ports:
      - 46380:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.10
    volumes:
      - ./node-0/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-0/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_1:
    image: redis:latest
    container_name: redis_c_1
    restart: always
    ports:
      - 46381:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.11
    volumes:
      - ./node-1/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-1/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_2:
    image: redis:latest
    container_name: redis_c_2
    restart: always
    ports:
      - 46382:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.12
    volumes:
      - ./node-2/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-2/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_3:
    image: redis:latest
    container_name: redis_c_3
    restart: always
    ports:
      - 46383:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.13
    volumes:
      - ./node-3/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-3/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_4:
    image: redis:latest
    container_name: redis_c_4
    restart: always
    ports:
      - 46384:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.14
    volumes:
      - ./node-4/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-4/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_5:
    image: redis:latest
    container_name: redis_c_5
    restart: always
    ports:
      - 46385:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.15
    volumes:
      - ./node-5/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-5/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_6:
    image: redis:latest
    container_name: redis_c_6
    restart: always
    ports:
      - 46386:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.16
    volumes:
      - ./node-6/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-6/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_7:
    image: redis:latest
    container_name: redis_c_7
    restart: always
    ports:
      - 46387:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.17
    volumes:
      - ./node-7/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-7/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

  redis_c_8:
    image: redis:latest
    container_name: redis_c_8
    restart: always
    ports:
      - 46388:6379
    networks:
      net_redis_cluster:
        ipv4_address: 172.21.0.18
    volumes:
      - ./node-8/conf/redis.conf:/usr/local/etc/redis/redis.conf:rw
      - ./node-8/data:/data:rw
    command:
      /bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"
    environment:
      # Set the time zone to Shanghai; otherwise timestamps are off
      - TZ=Asia/Shanghai
    logging:
      options:
        max-size: '100m'
        max-file: '10'

Start and verify

[userapp@lqz-test redis_cluster]$ docker-compose up -d

[+] Running 9/9

⠿ Container redis_c_7 Started 1.3s

⠿ Container redis_c_3 Started 1.3s

⠿ Container redis_c_0 Started 1.3s

⠿ Container redis_c_8 Started 1.3s

⠿ Container redis_c_6 Started 0.7s

⠿ Container redis_c_4 Started 1.1s

⠿ Container redis_c_1 Started 1.3s

⠿ Container redis_c_5 Started 1.1s

⠿ Container redis_c_2 Started

[userapp@lqz-test redis_cluster]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

92edf1119fc0 redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46382->6379/tcp, :::46382->6379/tcp redis_c_2

1f78cc5fac42 redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46385->6379/tcp, :::46385->6379/tcp redis_c_5

7da28f97ce0c redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46381->6379/tcp, :::46381->6379/tcp redis_c_1

b4514bafdb69 redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46380->6379/tcp, :::46380->6379/tcp redis_c_0

adc4ced85fb0 redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46386->6379/tcp, :::46386->6379/tcp redis_c_6

da5466f2a5bf redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46384->6379/tcp, :::46384->6379/tcp redis_c_4

cdc2fd661cfe redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46383->6379/tcp, :::46383->6379/tcp redis_c_3

15ccd1a72089 redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46388->6379/tcp, :::46388->6379/tcp redis_c_8

e7142228187d redis:latest "docker-entrypoint.s…" 3 minutes ago Up 3 minutes 0.0.0.0:46387->6379/tcp, :::46387->6379/tcp redis_c_7

Create the cluster

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -p 6379 -a 12345678 --cluster create 172.21.0.10:6379 172.21.0.11:6379 172.21.0.12:6379 172.21.0.13:6379 172.21.0.14:6379 172.21.0.15:6379 172.21.0.16:6379 172.21.0.17:6379 172.21.0.18:6379 --cluster-replicas 2

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 9 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.21.0.14:6379 to 172.21.0.10:6379

Adding replica 172.21.0.15:6379 to 172.21.0.10:6379

Adding replica 172.21.0.16:6379 to 172.21.0.11:6379

Adding replica 172.21.0.17:6379 to 172.21.0.11:6379

Adding replica 172.21.0.18:6379 to 172.21.0.12:6379

Adding replica 172.21.0.13:6379 to 172.21.0.12:6379

M: 5397e34275be2bd6ef4012ff6942530e01c00143 172.21.0.10:6379

slots:[0-5460] (5461 slots) master

M: f9f9216b6a5854b10b13b8618113d81cae64cadd 172.21.0.11:6379

slots:[5461-10922] (5462 slots) master

M: 4f874f47d9d34f8e6cc0162acea79338fe01f18c 172.21.0.12:6379

slots:[10923-16383] (5461 slots) master

S: f371c99666e7383077f8d2f185ab2bea09cb37a5 172.21.0.13:6379

replicates 4f874f47d9d34f8e6cc0162acea79338fe01f18c

S: 9486b90783f04c7b4cf4b9bca78b058749cbfb46 172.21.0.14:6379

replicates 5397e34275be2bd6ef4012ff6942530e01c00143

S: 1391dbad6b57a01e6eb461eace3ac2a250a64fe9 172.21.0.15:6379

replicates 5397e34275be2bd6ef4012ff6942530e01c00143

S: 69f58d2ebc5105ca58e65dffc57a3c4343b6c4ca 172.21.0.16:6379

replicates f9f9216b6a5854b10b13b8618113d81cae64cadd

S: edfc3354ed18988f5d8c937c32a1bcaf383eca72 172.21.0.17:6379

replicates f9f9216b6a5854b10b13b8618113d81cae64cadd

S: 1bad14c41ea10f93da18462880d8896cbe57eb1b 172.21.0.18:6379

replicates 4f874f47d9d34f8e6cc0162acea79338fe01f18c

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 172.21.0.10:6379)

M: 5397e34275be2bd6ef4012ff6942530e01c00143 172.21.0.10:6379

slots:[0-5460] (5461 slots) master

2 additional replica(s)

S: 9486b90783f04c7b4cf4b9bca78b058749cbfb46 172.21.0.14:6379

slots: (0 slots) slave

replicates 5397e34275be2bd6ef4012ff6942530e01c00143

S: 1bad14c41ea10f93da18462880d8896cbe57eb1b 172.21.0.18:6379

slots: (0 slots) slave

replicates 4f874f47d9d34f8e6cc0162acea79338fe01f18c

S: f371c99666e7383077f8d2f185ab2bea09cb37a5 172.21.0.13:6379

slots: (0 slots) slave

replicates 4f874f47d9d34f8e6cc0162acea79338fe01f18c

S: edfc3354ed18988f5d8c937c32a1bcaf383eca72 172.21.0.17:6379

slots: (0 slots) slave

replicates f9f9216b6a5854b10b13b8618113d81cae64cadd

S: 1391dbad6b57a01e6eb461eace3ac2a250a64fe9 172.21.0.15:6379

slots: (0 slots) slave

replicates 5397e34275be2bd6ef4012ff6942530e01c00143

S: 69f58d2ebc5105ca58e65dffc57a3c4343b6c4ca 172.21.0.16:6379

slots: (0 slots) slave

replicates f9f9216b6a5854b10b13b8618113d81cae64cadd

M: f9f9216b6a5854b10b13b8618113d81cae64cadd 172.21.0.11:6379

slots:[5461-10922] (5462 slots) master

2 additional replica(s)

M: 4f874f47d9d34f8e6cc0162acea79338fe01f18c 172.21.0.12:6379

slots:[10923-16383] (5461 slots) master

2 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[userapp@lqz-test redis_cluster]$

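-p: port to connect to (default 6379)

-a: connection password; omit it if no password is configured

--cluster-replicas: number of replicas per master; redis-cli assigns them automatically

--cluster create: the IP:port of every node. With host networking you could simply use 127.0.0.1:<port> (e.g. 127.0.0.1:7000);

with a bridge network, as used here, use each container's IP with port 6379 (172.21.0.10:6379 to 172.21.0.18:6379)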
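How redis-cli arrives at the layout above (9 nodes with --cluster-replicas 2 gives 3 masters owning slots 0-5460, 5461-10922 and 10923-16383) can be reproduced with a short Python sketch. As far as I can tell it mirrors the even-split loop in redis-cli's --cluster create, but treat it as an approximation: redis-cli uses C float arithmetic and lround(), emulated here with round-half-up. `masters_for` and `allocate_slots` are hypothetical names.

```python
import math

CLUSTER_SLOTS = 16384


def masters_for(nodes, replicas):
    """Each master needs `replicas` replicas, so masters = nodes // (replicas + 1)."""
    masters = nodes // (replicas + 1)
    if masters < 3:
        raise ValueError("Redis Cluster needs at least 3 masters")
    return masters


def allocate_slots(masters):
    """Split slots 0..16383 into one contiguous range per master."""
    per_node = CLUSTER_SLOTS / masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(masters):
        # Emulate C lround() (half away from zero); Python's round() is banker's rounding
        last = math.floor(cursor + per_node - 1 + 0.5)
        if last > CLUSTER_SLOTS or i == masters - 1:
            last = CLUSTER_SLOTS - 1  # the last master takes whatever is left
        if last < first:
            last = first
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges
```

`allocate_slots(masters_for(9, 2))` gives the same three ranges as the redis-cli output, including the 5461/5462/5461 slot counts.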

Running tests

Cluster state

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_2 redis-cli -c -a 12345678 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

5397e34275be2bd6ef4012ff6942530e01c00143 172.21.0.10:6379@16379 master - 0 1663391642064 1 connected 0-5460

f9f9216b6a5854b10b13b8618113d81cae64cadd 172.21.0.11:6379@16379 master - 0 1663391640000 2 connected 5461-10922

4f874f47d9d34f8e6cc0162acea79338fe01f18c 172.21.0.12:6379@16379 myself,master - 0 1663391640000 3 connected 10923-16383

1391dbad6b57a01e6eb461eace3ac2a250a64fe9 172.21.0.15:6379@16379 slave 5397e34275be2bd6ef4012ff6942530e01c00143 0 1663391641560 1 connected

f371c99666e7383077f8d2f185ab2bea09cb37a5 172.21.0.13:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663391641157 3 connected

69f58d2ebc5105ca58e65dffc57a3c4343b6c4ca 172.21.0.16:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663391641000 2 connected

1bad14c41ea10f93da18462880d8896cbe57eb1b 172.21.0.18:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663391641661 3 connected

9486b90783f04c7b4cf4b9bca78b058749cbfb46 172.21.0.14:6379@16379 slave 5397e34275be2bd6ef4012ff6942530e01c00143 0 1663391640148 1 connected

edfc3354ed18988f5d8c937c32a1bcaf383eca72 172.21.0.17:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663391642165 2 connected

Write through a single node (without -c)

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -a 12345678 set test111 123

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

OK

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_2 redis-cli -a 12345678 set test222 123

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

OK

Read in cluster mode (-c)

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_2 redis-cli -c -a 12345678 get test111

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

"123"

[userapp@lqz-test redis_cluster]$

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_2 redis-cli -c -a 12345678 get test222

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

"123"

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_4 redis-cli -c -a 12345678 get test222

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

"123"

[userapp@lqz-test redis_cluster]$

Failure simulation

Write through a single node before the failure

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -a 12345678 set err111 aaa

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

OK

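Stop redis_c_0; writes through that node then fail because the container is no longer running (docker exec itself is rejected)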

docker stop redis_c_0

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -a 12345678 set err222 bbb

Error response from daemon: Container c20b990e8f28bf20d2df26568c4dd3a9d4e0a4a30d2424b147f17e1a26245344 is not running

[userapp@lqz-test redis_cluster]$

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -c -a 12345678 set err222 bbb

Error response from daemon: Container c20b990e8f28bf20d2df26568c4dd3a9d4e0a4a30d2424b147f17e1a26245344 is not running

After the failure, reads through another node in cluster mode still work

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_1 redis-cli -c -a 12345678 get err111

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

"aaa"

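Check the node list

The failed master is 172.21.0.10 (flagged master,fail)

Its replica 172.21.0.14 has been promoted and now serves slots 0-5460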

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_2 redis-cli -c -a 12345678 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

5397e34275be2bd6ef4012ff6942530e01c00143 172.21.0.10:6379@16379 master,fail - 1663391890299 1663391888000 1 connected

f9f9216b6a5854b10b13b8618113d81cae64cadd 172.21.0.11:6379@16379 master - 0 1663392043107 2 connected 5461-10922

4f874f47d9d34f8e6cc0162acea79338fe01f18c 172.21.0.12:6379@16379 myself,master - 0 1663392042000 3 connected 10923-16383

1391dbad6b57a01e6eb461eace3ac2a250a64fe9 172.21.0.15:6379@16379 slave 9486b90783f04c7b4cf4b9bca78b058749cbfb46 0 1663392043610 10 connected

f371c99666e7383077f8d2f185ab2bea09cb37a5 172.21.0.13:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663392043107 3 connected

69f58d2ebc5105ca58e65dffc57a3c4343b6c4ca 172.21.0.16:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663392043107 2 connected

1bad14c41ea10f93da18462880d8896cbe57eb1b 172.21.0.18:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663392043000 3 connected

9486b90783f04c7b4cf4b9bca78b058749cbfb46 172.21.0.14:6379@16379 master - 0 1663392042000 10 connected 0-5460

edfc3354ed18988f5d8c937c32a1bcaf383eca72 172.21.0.17:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663392042000 2 connected

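During the failure, redis_c_4 (172.21.0.14, the new master) accepts writes in cluster mode (-c). Without -c, a key whose slot belongs to another master is rejected with a MOVED redirect: err333 maps to slot 8194, which is served by 172.21.0.11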

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_4 redis-cli -c -a 12345678 set err222 bbb

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

OK

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_4 redis-cli -a 12345678 set err333 bbb

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

(error) MOVED 8194 172.21.0.11:6379

[userapp@lqz-test redis_cluster]$
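The MOVED 8194 reply above comes from key-to-slot hashing: Redis Cluster maps a key to CRC16(key) mod 16384 (the XMODEM variant of CRC16), and if the key contains a non-empty {hash tag}, only the tag is hashed. Below is a minimal Python sketch of that rule. The slot-owner table is copied from the cluster nodes output taken during the failure and is only valid for this test cluster; `owner` is a hypothetical helper.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot of a key, honouring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag counts
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Slot owners while 172.21.0.10 was down (from `cluster nodes` above)
OWNERS = [
    ((0, 5460), "172.21.0.14"),
    ((5461, 10922), "172.21.0.11"),
    ((10923, 16383), "172.21.0.12"),
]


def owner(key: str) -> str:
    slot = key_slot(key)
    for (low, high), node in OWNERS:
        if low <= slot <= high:
            return node
    raise ValueError(f"slot {slot} is not covered")
```

`key_slot("err333")` returns 8194 and `owner("err333")` returns 172.21.0.11, matching the MOVED reply. redis-cli without -c reports that redirect as an error; with -c it follows it transparently.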

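Once the failed node is running again, the key written during the outage can be read through it, and cluster nodes shows that 172.21.0.10 has rejoined as a replica of 172.21.0.14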

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -c -a 12345678 get err222

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

"bbb"

[userapp@lqz-test redis_cluster]$

[userapp@lqz-test redis_cluster]$ docker exec -it redis_c_0 redis-cli -c -a 12345678 cluster nodes

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

4f874f47d9d34f8e6cc0162acea79338fe01f18c 172.21.0.12:6379@16379 master - 0 1663392312970 3 connected 10923-16383

1391dbad6b57a01e6eb461eace3ac2a250a64fe9 172.21.0.15:6379@16379 slave 9486b90783f04c7b4cf4b9bca78b058749cbfb46 0 1663392313575 10 connected

9486b90783f04c7b4cf4b9bca78b058749cbfb46 172.21.0.14:6379@16379 master - 0 1663392313000 10 connected 0-5460

f371c99666e7383077f8d2f185ab2bea09cb37a5 172.21.0.13:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663392313000 3 connected

edfc3354ed18988f5d8c937c32a1bcaf383eca72 172.21.0.17:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663392312566 2 connected

f9f9216b6a5854b10b13b8618113d81cae64cadd 172.21.0.11:6379@16379 master - 0 1663392313576 2 connected 5461-10922

69f58d2ebc5105ca58e65dffc57a3c4343b6c4ca 172.21.0.16:6379@16379 slave f9f9216b6a5854b10b13b8618113d81cae64cadd 0 1663392312000 2 connected

1bad14c41ea10f93da18462880d8896cbe57eb1b 172.21.0.18:6379@16379 slave 4f874f47d9d34f8e6cc0162acea79338fe01f18c 0 1663392312567 3 connected

5397e34275be2bd6ef4012ff6942530e01c00143 172.21.0.10:6379@16379 myself,slave 9486b90783f04c7b4cf4b9bca78b058749cbfb46 0 1663392313000 10 connected

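Summary: in cluster mode, access the cluster with redis-cli -c (cluster mode) and avoid single-node access as far as possible. Other scenarios are left for you to test.

Reference 1

Reference 2

Reference 3

Reference 4

Reference 5

Reference 6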

3.4 zk

Directory layout

[userapp@lqz-test zk]$ pwd

/data/docker/zk

[userapp@lqz-test zk]$ ls -l

total 4

-rw-rw-r-- 1 userapp userapp 1802 Sep 17 17:30 docker-compose.yml

drwxrwxr-x 4 userapp userapp 33 Sep 17 13:44 zk1

drwxrwxr-x 4 userapp userapp 33 Sep 17 13:44 zk2

drwxrwxr-x 4 userapp userapp 33 Sep 17 13:44 zk3

docker-compose.yml is shown below.

Start it directly with docker-compose up -d

version: "3.7"

networks:
  net_zk:
    driver: bridge
    ipam:
      config:
        - subnet: 172.22.0.0/16

# zk cluster configuration:
# each entry under services corresponds to the docker container of one zk node
services:
  zk1:
    # Docker image used by the container
    image: zookeeper
    hostname: zk1
    container_name: zk1
    # Port mappings between the container and the host
    ports:
      - 22181:2181
      - 28081:8080
    # Environment variables of the container
    environment:
      # id of this zk instance
      ZOO_MY_ID: 1
      # hosts and ports of every server in the zk cluster
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=zk3:2888:3888;2181
    # Mount container paths on the host so data is shared with (and persisted on) the host
    volumes:
      - ./zk1/data:/data
      - ./zk1/datalog:/datalog
      - ./zk1/config:/conf
    # Join the container to the isolated network net_zk
    networks:
      net_zk:
        ipv4_address: 172.22.0.11

  zk2:
    image: zookeeper
    hostname: zk2
    container_name: zk2
    ports:
      - 22182:2181
      - 28082:8080
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zk3:2888:3888;2181
    volumes:
      - ./zk2/data:/data
      - ./zk2/datalog:/datalog
      - ./zk2/config:/conf
    networks:
      net_zk:
        ipv4_address: 172.22.0.12

  zk3:
    image: zookeeper
    hostname: zk3
    container_name: zk3
    ports:
      - 22183:2181
      - 28083:8080
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
    volumes:
      - ./zk3/data:/data
      - ./zk3/datalog:/datalog
      - ./zk3/config:/conf
    networks:
      net_zk:
        ipv4_address: 172.22.0.13

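After the first start, add the following line to each node's zoo.cfg (zk1, zk2 and zk3; zk3 is shown below)

4lw.commands.whitelist=*

so that the four-letter-word commands can be used for probing, then restart the zk cluster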

[userapp@lqz-test zk]$ vi zk3/config/zoo.cfg

dataDir=/data

dataLogDir=/datalog

tickTime=2000

initLimit=5

syncLimit=2

autopurge.snapRetainCount=3

autopurge.purgeInterval=0

maxClientCnxns=60

standaloneEnabled=true

admin.enableServer=true

server.1=zk1:2888:3888;2181

server.2=zk2:2888:3888;2181

server.3=0.0.0.0:2888:3888;2181

4lw.commands.whitelist=*

docker restart zk1 zk2 zk3

[userapp@lqz-test zk]$ docker-compose up -d

[+] Running 3/3

⠿ Container zk1 Started 0.5s

⠿ Container zk2 Started 0.6s

⠿ Container zk3 Started

[userapp@lqz-test zk]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a34f062493af zookeeper "/docker-entrypoint.…" 4 minutes ago Up 4 minutes 2888/tcp, 3888/tcp, 0.0.0.0:22181->2181/tcp, :::22181->2181/tcp, 0.0.0.0:28081->8080/tcp, :::28081->8080/tcp zk1

910e4c61d36d zookeeper "/docker-entrypoint.…" 4 minutes ago Up 4 minutes 2888/tcp, 3888/tcp, 0.0.0.0:22182->2181/tcp, :::22182->2181/tcp, 0.0.0.0:28082->8080/tcp, :::28082->8080/tcp zk2

815191ea45aa zookeeper "/docker-entrypoint.…" 4 minutes ago Up 4 minutes 2888/tcp, 3888/tcp, 0.0.0.0:22183->2181/tcp, :::22183->2181/tcp, 0.0.0.0:28083->8080/tcp, :::28083->8080/tcp zk3
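Once 4lw.commands.whitelist is in place, the four-letter-word commands can be sent over a plain TCP connection. Below is a minimal Python probe; the function names are hypothetical, and the host ports 22181-22183 come from the compose file above. A healthy server is expected to answer ruok with imok and then close the connection, while mntr returns one tab-separated `key value` pair per line.

```python
import socket


def four_letter_word(host, port, cmd="ruok", timeout=3.0):
    """Send a ZooKeeper four-letter-word command and return the full reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", "replace")


def parse_mntr(reply):
    """mntr replies with one 'key<TAB>value' pair per line."""
    stats = {}
    for line in reply.splitlines():
        key, sep, value = line.partition("\t")
        if sep:
            stats[key] = value
    return stats
```

For example, `four_letter_word("127.0.0.1", 22181)` should return imok, and `parse_mntr(four_letter_word("127.0.0.1", 22181, "mntr")).get("zk_server_state")` should tell whether zk1 is the leader or a follower. If the whitelist is missing, the server replies with a "not in the whitelist" message instead.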

Failure simulation

Skipped

For more details, see:

Reference 1

Reference 2

3.5 kafka (depends on the ZK cluster from the previous step)

docker-compose.yml:

version: '3.7'

networks:
  net_kafka:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.23.0.0/16

services:
  kafka1:
    image: wurstmeister/kafka
    container_name: kafka1
    ports:
      - "9093:9092"
    environment:
      KAFKA_BROKER_ID: 0
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 2
      KAFKA_ZOOKEEPER_CONNECT: "zk1:2181,zk2:2181,zk3:2181"
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.23.0.11:9092
    volumes:
      - ./broker1/logs:/opt/kafka/logs
      #- ./broker1/docker.sock:/var/run/docker.sock
    restart: always
    networks:
      net_kafka:
        ipv4_address: 172.23.0.11

  kafka2:
    image: wurstmeister/kafka
    container_name: kafka2
    ports:
      - "9094:9092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 2
      KAFKA_ZOOKEEPER_CONNECT: "zk1:2181,zk2:2181,zk3:2181"
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.23.0.12:9092
    volumes:
      - ./broker2/logs:/opt/kafka/logs
      #- ./broker2/docker.sock:/var/run/docker.sock
    restart: always
    networks:
      net_kafka:
        ipv4_address: 172.23.0.12

  kafka3:
    image: wurstmeister/kafka
    container_name: kafka3
    ports:
      - "9095:9092"
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DEFAULT_REPLICATION_FACTOR: 2
      KAFKA_ZOOKEEPER_CONNECT: "zk1:2181,zk2:2181,zk3:2181"
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.23.0.13:9092
    volumes:
      - ./broker3/logs:/opt/kafka/logs
      #- ./broker3/docker.sock:/var/run/docker.sock
    restart: always
    networks:
      net_kafka:
        ipv4_address: 172.23.0.13

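Quick verification

Failure simulation

Issues

Communication between containers on different bridge networks

Find the name of the Kafka bridge network, then connect the zk containers to it so Kafka can reach the zk cluster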

[userapp@lqz-test kafka]$ docker network ls

NETWORK ID NAME DRIVER SCOPE

1e7baede63d4 bridge bridge local

eb8559cd5925 dns_net_dns bridge local

afd2117c52a4 docker_nightingale bridge local

6eb713cec437 host host local

03885753d7df kafka_net_kafka bridge local

27299c683eee none null local

603c66a3af95 redis_cluster_net_redis_cluster bridge local

6811c1bfe60e redis_master_slave_net_redis_master_slave bridge local

4076b2c9e805 redis_sentinel_net_redis_sentinel bridge local

56a1419c8f1b zk_net_zk bridge local

[root@lqz-test ~]# docker network connect kafka_net_kafka zk1

[root@lqz-test ~]# docker network connect kafka_net_kafka zk2

[root@lqz-test ~]# docker network connect kafka_net_kafka zk3

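It seems these network connections have to be added again every time the containers are restarted. Good enough for testing; I haven't found a good way to make them permanent.

Opening socket connection to server zk1/172.23.0.4:2182. Will not attempt to authenticate using SASL

If the Kafka logs keep showing this line, it is a network connectivity problem.

Check connectivity between the bridge networks, the IP forwarding / routing configuration, or other network issues.

Reference 1

Reference 2

Reference 3

Reference 4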

4. n9e

n9e official website

4.1 Install N9E with docker

$ git clone https://gitlink.org.cn/ccfos/nightingale.git

$ cd nightingale/docker

# With docker compose V2, run docker compose up -d instead (https://docs.docker.com/compose/#compose-v2-and-the-new-docker-compose-command)

$ docker-compose up -d

Creating network "docker_nightingale" with driver "bridge"

Creating mysql ... done

Creating redis ... done

Creating prometheus ... done

Creating ibex ... done

Creating agentd ... done

Creating nwebapi ... done

Creating nserver ... done

Creating telegraf ... done

# With docker compose V2, run docker compose ps instead (https://docs.docker.com/compose/#compose-v2-and-the-new-docker-compose-command)

$ docker-compose ps

Name Command State Ports

----------------------------------------------------------------------------------------------------------------------------

agentd /app/ibex agentd Up 10090/tcp, 20090/tcp

ibex /app/ibex server Up 0.0.0.0:10090->10090/tcp, 0.0.0.0:20090->20090/tcp

mysql docker-entrypoint.sh mysqld Up 0.0.0.0:3306->3306/tcp, 33060/tcp

nserver /app/n9e server Up 18000/tcp, 0.0.0.0:19000->19000/tcp

nwebapi /app/n9e webapi Up 0.0.0.0:18000->18000/tcp, 19000/tcp

prometheus /bin/prometheus --config.f ... Up 0.0.0.0:9090->9090/tcp

redis docker-entrypoint.sh redis ... Up 0.0.0.0:6379->6379/tcp

telegraf /entrypoint.sh telegraf Up 0.0.0.0:8092->8092/udp, 0.0.0.0:8094->8094/tcp, 0.0.0.0:8125->8125/udp

4.2 Log in to N9E

http://192.168.0.130:18000/metric/explorer

root

root.2020

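Finally on to the actual topic, monitoring; setting all this up took me a whole day…

4.3 Collecting component metrics into monitoring

Install Go first

Building the categraf collector requires a Go environment; install it with the Go version manager g

github

Reference docs

I'm on CentOS 7, so I downloaded the prebuilt binary; choose the one that matches your system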

[userapp@lqz-test pkg]$ wget https://github.com/voidint/g/releases/download/v1.4.0/g1.4.0.linux-amd64.tar.gz

[userapp@lqz-test pkg]$ tar xvzf g1.4.0.linux-amd64.tar.gz

[userapp@lqz-test pkg]$ ls -l

total 354700

-rwxr-xr-x 1 userapp userapp 10678272 Jun 8 21:29 g

mkdir -p /data/app/g/bin

mkdir -p /data/app/g/go

mv ./g /data/app/g/bin/g

ln -s /data/app/g/ ~/.g

Add the environment variables

export G_HOME=/data/app/g

export GOROOT=${G_HOME}/go

export PATH=${PATH}:${GOROOT}/bin:${G_HOME}/bin

Install Go

[userapp@lqz-test pkg]$ g ls-remote

[userapp@lqz-test pkg]$ g install 1.19.1

Downloading 100% |███████████████| (142/142 MB, 10.768 MB/s)

Computing checksum with SHA256

Checksums matched

Now using go1.19.1

[userapp@lqz-test versions]$ which go

/data/app/g/go/bin/go

[userapp@lqz-test versions]$ go version

go version go1.19.1 linux/amd64

- If downloads are slow, look for a mirror in China; the speed was fine for me.

Build and install the collector categraf

github

Download the source

[userapp@lqz-test git]$ git clone https://github.com/flashcatcloud/categraf.git

Cloning into 'categraf'...

remote: Enumerating objects: 5366, done.

remote: Counting objects: 100% (665/665), done.

remote: Compressing objects: 100% (371/371), done.

remote: Total 5366 (delta 330), reused 503 (delta 254), pack-reused 4701

Receiving objects: 100% (5366/5366), 13.76 MiB | 2.63 MiB/s, done.

Resolving deltas: 100% (2976/2976), done.

Build

[userapp@lqz-test git]$ cd categraf/

[userapp@lqz-test categraf]$ export GO111MODULE=on

[userapp@lqz-test categraf]$ export GOPROXY=https://goproxy.cn

[userapp@lqz-test categraf]$ go build

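Have a cup of tea; the build takes about 10 minutes. Afterwards only the categraf binary and the conf directory need to be packaged for deployment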

.....

go: downloading github.com/VividCortex/gohistogram v1.0.0

go: downloading github.com/emicklei/go-restful v2.9.5+incompatible

go: downloading github.com/munnerz/goautoneg v0.0.0-20191010083416-a7dc8b61c822

[userapp@lqz-test categraf]$ ls -l

total 133860

drwxrwxr-x 2 userapp userapp 184 Sep 17 23:17 agent

drwxrwxr-x 2 userapp userapp 153 Sep 17 23:17 api

-rwxrwxr-x 1 userapp userapp 136815681 Sep 17 23:21 categraf

drwxrwxr-x 47 userapp userapp 4096 Sep 17 23:17 conf

...

[userapp@lqz-test categraf]$ tar zcvf categraf.tar.gz categraf conf

Run a quick local test first; everything works:

[userapp@lqz-test categraf]$ ./categraf --test --inputs system:mem

2022/09/17 23:25:05 main.go:118: I! runner.binarydir: /data/git/categraf

2022/09/17 23:25:05 main.go:119: I! runner.hostname: lqz-test

2022/09/17 23:25:05 main.go:120: I! runner.fd_limits: (soft=4096, hard=4096)

2022/09/17 23:25:05 main.go:121: I! runner.vm_limits: (soft=unlimited, hard=unlimited)

2022/09/17 23:25:05 config.go:33: I! tracing disabled

2022/09/17 23:25:05 provider.go:63: I! use input provider: [local]

2022/09/17 23:25:05 agent.go:82: I! agent starting

2022/09/17 23:25:05 metrics_agent.go:93: I! input: local.mem started

2022/09/17 23:25:05 metrics_agent.go:93: I! input: local.system started

2022/09/17 23:25:05 prometheus_scrape.go:14: I! prometheus scraping disabled!

2022/09/17 23:25:05 agent.go:93: I! agent started

....

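As a non-root user some directories are not accessible; I'm ignoring that for now.

For now I only need the Redis, Kafka and zk collection plugins

Connect Redis

Edit the config file ./conf/input.redis/redis.toml

Redis in master/replica mode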

[[instances]]

address = "172.19.0.10:6379"

labels = { instance="redis_master_slave-master" }

password = "12345678"

[[instances]]

address = "172.19.0.11:6379"

labels = { instance="redis_master_slave-slave" }

password = "12345678"

Start

./categraf --inputs redis:net

Connect Kafka

/categraf/conf/input.kafka/kafka.toml

[[instances]]

kafka_uris = ["172.23.0.13:9092","172.23.0.12:9092","172.23.0.11:9092"]

labels = { cluster="kafka-cluster-01" }

Add kafka to the start command

./categraf --inputs redis:net:kafka:zookeeper

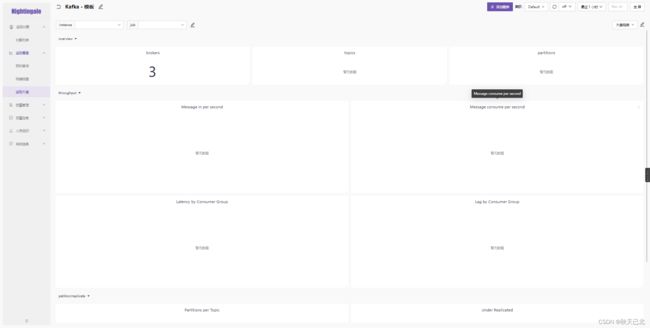

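The data is being collected, but I didn't find a particularly good dashboard; possibly because there are no topics or business traffic yet, the dashboards show little information

Connect zk

The four-letter-word command whitelist must be opened beforehand

./categraf/conf/input.zookeeper/zookeeper.toml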

[[instances]]

cluster_name = "zk-cluster"

labels = { instance="zk-cluster-1" }

addresses = "172.22.0.11:2181"

[[instances]]

cluster_name = "zk-cluster"

labels = { instance="zk-cluster-2" }

addresses = "172.22.0.12:2181"

[[instances]]

cluster_name = "zk-cluster"

labels = { instance="zk-cluster-3" }

addresses = "172.22.0.13:2181"

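Add zookeeper to the start command

./categraf --inputs redis:net:kafka:zookeeper

Some metrics are already being collected

but I haven't found a good dashboard template yet, so I'll probably have to build the dashboards myself

For Nightingale alerting and other topics, see the official video tutorials

Things to explore next:

whether Prometheus exporters offer a better collection approach

whether Grafana has good dashboard templates

Reference 1

Reference 2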

5. redis_exporter collector

Download and build redis_exporter

[userapp@lqz-test git]$ git clone https://github.com/oliver006/redis_exporter.git

Cloning into 'redis_exporter'...

remote: Enumerating objects: 3492, done.

remote: Counting objects: 100% (14/14), done.

remote: Compressing objects: 100% (12/12), done.

remote: Total 3492 (delta 2), reused 4 (delta 1), pack-reused 3478

Receiving objects: 100% (3492/3492), 7.53 MiB | 1.40 MiB/s, done.

Resolving deltas: 100% (1861/1861), done.

[userapp@lqz-test git]$

[userapp@lqz-test git]$ cd redis_exporter/

[userapp@lqz-test redis_exporter]$ go build .

go: downloading github.com/sirupsen/logrus v1.9.0

go: downloading github.com/prometheus/client_golang v1.13.0

go: downloading github.com/gomodule/redigo v1.8.9

go: downloading github.com/mna/redisc v1.3.2

go: downloading golang.org/x/sys v0.0.0-20220715151400-c0bba94af5f8

go: downloading github.com/prometheus/procfs v0.8.0

go: downloading google.golang.org/protobuf v1.28.1

[userapp@lqz-test redis_exporter]$

Start the exporter

[userapp@lqz-test redis_exporter]$ ./redis_exporter --debug --redis.addr "redis://172.19.0.10:6379" --redis.password "12345678"

Open the redis_exporter endpoint to check the metrics

192.168.0.130:9121/metrics
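To check the endpoint from a script instead of a browser, a small Python sketch can fetch /metrics and pick one metric out of the Prometheus text format. It assumes redis_exporter exposes a redis_up gauge (1 when it can reach Redis); the URL is the one used above, and the simple line regex is an assumption that does not handle a `}` inside label values.

```python
import re
from urllib.request import urlopen

# metric name, optional {labels}, value (a trailing timestamp, if any, is ignored)
SAMPLE_RE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?P<labels>\{[^}]*\})?\s+(?P<value>\S+)"
)


def metric_values(text, name):
    """Return [(labels, value)] for every sample of `name` in exposition text."""
    samples = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        m = SAMPLE_RE.match(line)
        if m and m["name"] == name:
            samples.append((m["labels"] or "", float(m["value"])))
    return samples


def fetch_metrics(url="http://192.168.0.130:9121/metrics", timeout=5):
    """Download the exporter's metrics page as text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

`metric_values(fetch_metrics(), "redis_up")` should then contain a single sample with value 1.0 while the exporter can reach 172.19.0.10:6379.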

Configure Prometheus to scrape it (add this job under scrape_configs in prometheus.yml; the Prometheus bundled with the Nightingale stack can be used directly)

- job_name: 'redis_exporter_targets'
  static_configs:
    - targets:
        - redis://172.19.0.10:6379
        - redis://172.19.0.11:6379
        - redis://172.19.0.12:6379
  metrics_path: /scrape
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: 192.168.0.130:9121
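The three relabel rules above implement the exporter's multi-target pattern: each Redis URL moves from `__address__` into the `target` URL parameter and the `instance` label, and `__address__` is pointed at redis_exporter. The simplified Python model below (plain copy/replace only, no regex handling, not Prometheus's actual implementation) shows the resulting labels and the URL Prometheus would request; the function names are hypothetical.

```python
from urllib.parse import urlencode

EXPORTER = "192.168.0.130:9121"  # redis_exporter address used in the job above


def relabel(labels, exporter=EXPORTER):
    """Apply the job's three relabel rules (simplified: plain copy/replace)."""
    out = dict(labels)
    out["__param_target"] = out["__address__"]  # rule 1: __address__ -> __param_target
    out["instance"] = out["__param_target"]     # rule 2: __param_target -> instance
    out["__address__"] = exporter               # rule 3: scrape the exporter instead
    return out


def scrape_url(labels, metrics_path="/scrape", scheme="http"):
    """Build the scrape URL: every __param_<name> label becomes ?<name>=..."""
    params = {k[len("__param_"):]: v for k, v in labels.items() if k.startswith("__param_")}
    return f"{scheme}://{labels['__address__']}{metrics_path}?{urlencode(params)}"
```

For the first target this yields instance="redis://172.19.0.10:6379" and the request `http://192.168.0.130:9121/scrape?target=redis%3A%2F%2F172.19.0.10%3A6379`. Keeping the Redis URL in `instance` is what lets the dashboards tell the three nodes apart even though every scrape goes to the same exporter.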

Check in Prometheus that the metrics are being collected

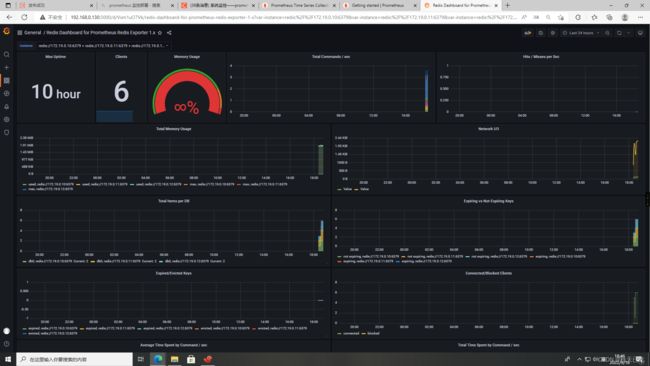

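Grafana dashboard

Install Grafana with docker for a quick test (fold it into a docker-compose.yml if you like)

docker run -d --name=grafana -p 3000:3000 grafana/grafana

Login user / password

admin / admin

Add Prometheus as a data source

Create a dashboard by importing dashboard template 763

That's it for now; kafka and zk can be done the same way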