Notes on nginx development

To understand nginx's staying power, first be clear about where it sits in the overall software stack and in the network layers.

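nginx serves as both a web server and a layer-7 proxy (mainly HTTP), and today the layer-7 proxy role is the more prominent one. Nowadays the "web server" part is usually a backend written in Go/Python/Java that only handles business logic, so nginx's main role is really that of a proxy.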

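In fact, HTTP's staying power determines nginx's. A large share of today's Internet traffic is HTTP: because it is stateless, simple and highly extensible, almost every kind of service chooses HTTP first (service-governance RPC such as gRPC/Thrift is left out here; that choice is a trade-off between efficiency and "cost"). So nginx is almost unavoidable.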

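Since it is a proxy, it can be regarded as a gateway sitting at the entry point of business traffic, and it naturally extends into the following roles: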

Security -- almost all layer-7 security lives here, including traffic scrubbing and the like; this is mostly security business logic.

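Load balancing -- used by almost every service (especially in cloud-native setups, where many backend services are now deployed in containers), with all sorts of variations. There is not much new here, though: wrr/hash/ip-hash/chash/least_conn are about all there is. What has been most active in load balancing lately is service discovery, which is also quite mature by now (dynamic upstreams).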
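For reference, nginx's default weighted round robin (the "wrr" above) is the so-called smooth variant, which interleaves heavy peers instead of sending them bursts of requests. Below is a minimal sketch of just the selection step; `wrr_peer_t` and `wrr_pick` are hypothetical names, and nginx's real peer structure also tracks effective weight, failures and more.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, reduced peer: nginx's real peer struct has many more fields. */
typedef struct {
    const char *name;
    int         weight;          /* configured weight */
    int         current_weight;  /* running score, starts at 0 */
} wrr_peer_t;

/* Smooth weighted round robin: on every pick each peer's score grows by
 * its weight, the highest score wins, and the winner is then pushed back
 * by the total weight. Over sum(weights) picks each peer is chosen
 * exactly `weight` times, spread out rather than in bursts. */
wrr_peer_t *wrr_pick(wrr_peer_t *peers, size_t n)
{
    wrr_peer_t *best = NULL;
    int         total = 0;

    for (size_t i = 0; i < n; i++) {
        peers[i].current_weight += peers[i].weight;
        total += peers[i].weight;

        /* strict ">" keeps the earlier peer on ties */
        if (best == NULL || peers[i].current_weight > best->current_weight) {
            best = &peers[i];
        }
    }

    if (best != NULL) {
        best->current_weight -= total;
    }

    return best;
}
```

With weights 5, 1, 1, seven consecutive picks come out as a, a, b, a, c, a, a, after which every current_weight is back to 0 and the cycle repeats.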

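SSL / HTTP/2 / QUIC gateway -- nginx is probably the workhorse for HTTPS traffic today, and it is easy to configure. The best place to implement such new protocols is the gateway: the services behind it get the benefits without any upgrade.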

(HTTP/2 optimization really needs to continue... and get familiar with QUIC along the way.)

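Then there is business logic that needs to live in the gateway, as in an API gateway: rate limiting and throttling, authentication, traffic tagging, IP blocking, and any other gateway-level logic you can think of (this is the entry gateway for API calls, mainly handling north-south traffic).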
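Rate limiting is a typical gateway job. nginx's limit_req module is based on a leaky bucket with an optional burst; the sketch below shows that idea for a single key. The names (`leaky_bucket_t`, `leaky_bucket_allow`) and the exact bookkeeping are assumptions of this sketch, not nginx's code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical single-key leaky bucket; excess is kept in thousandths of
 * a request so the drain math stays in integers. */
typedef struct {
    int64_t rate;      /* requests per second that drain from the bucket */
    int64_t burst;     /* requests allowed to pile up above the rate */
    int64_t excess;    /* current bucket level, in 1/1000 request */
    int64_t last_ms;   /* time of the last accepted request */
    bool    started;
} leaky_bucket_t;

/* Returns true if a request arriving at now_ms is let through. */
bool leaky_bucket_allow(leaky_bucket_t *lb, int64_t now_ms)
{
    int64_t excess;

    if (!lb->started) {            /* first request: empty bucket */
        lb->started = true;
        lb->excess = 0;
        lb->last_ms = now_ms;
        return true;
    }

    /* Drain rate * elapsed_ms / 1000 requests (= rate * elapsed_ms
     * thousandths), then add this request (1000 thousandths). */
    excess = lb->excess - lb->rate * (now_ms - lb->last_ms) + 1000;
    if (excess < 0) {
        excess = 0;
    }

    if (excess > lb->burst * 1000) {
        return false;              /* bucket overflows: reject the request */
    }

    lb->excess = excess;
    lb->last_ms = now_ms;
    return true;
}
```

With rate = 2 and burst = 1, two requests at t = 0 pass, a third is rejected, and after 500 ms one more request fits again.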

Monitoring/statistics/logging: there is no better place to collect these than the gateway.

All of the above is seen from the application layer (layer-7 services). Layer-4 and layer-7 logic is actually similar (both run end to end).

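The application layer is bound to exist for a long time; without it, the network would have no purpose. From this angle, the layer-7 gateway is a piece of infrastructure that will be around for a long time.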

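Connect the surrounding pieces: even while you dig deep into particular points, tie the knowledge around them into a logical web; otherwise everything stays fragmented and hard to use.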

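(To really master gateways you still have to dig into nginx itself, but reading nginx alone leaves you short on practice, and its code has a high barrier to entry. OpenResty lowers that barrier from 100 to 30: it lets you program this high-performance beast in a synchronous style, which makes nginx much friendlier. So go deep into LuaJIT and lua-nginx-module, as well as nginx itself; fully mastering nginx certainly requires all of these.) (If you treat lua-nginx-module as one or a few nginx C modules, you will not get lost.) https://github.com/openresty/lua-nginx-module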

Also, with the cloud-native wave, the choice of Kubernetes Ingress is becoming increasingly important (currently Envoy + Istio has the upper hand).

This discussion is interesting: https://github.com/envoyproxy/envoy/issues/12724

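It also mentions that Envoy extensions are hard to get started with because they have to be written in C++, and people there hope to simplify Envoy extensions with Lua (similar to OpenResty). [Reading the discussion, I noticed how polite these engineers are: they always phrase things as "could we", "if", "sorry to"...]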

Also, in the programmability space, keep an eye on Wasm:

Yes, actually there is an internally implementation(we name it EnvoyResty) following the OpenResty design, and we have run it on production for more than half year. Now we hope to contribute this feature to upstream.

FWIW, If we want to have more "extensibility points" to Envoy via Lua, I think we can leverage the proxy-wasm interface here: proxy-wasm/spec on GitHub (spec/abi-versions/vNEXT) to extend the current Lua support on extending Envoy. Hence it will be consistent with the other efforts for Envoy extensibility.

wasm is definitely the future, but from our experience, it's still a while from production availability. I think the two can go together. Many users want to migrate to envoy-based cloud native api gateways, but they have many Lua plugins based on OpenResty. At NetEase, we use EnvoyResty to migrate Lua plugins. At the same time we track the development of wasm filter, hopefully we can contribute to it someday.

api7/envoy-apisix on GitHub: table.lua (master branch)

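Also, in the overall network picture, the gateway is just one network element; you still need to go deeper into networking (layer 4 and below, the whole network, including VPCs, etc.).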

1 pool

Most nginx allocations are done in pools. Memory allocated in an nginx pool is freed automatically when the pool is destroyed. This provides good allocation performance and makes memory control easy.

A pool internally allocates objects in continuous blocks of memory. Once a block is full, a new one is allocated and added to the pool memory block list. When the requested allocation is too large to fit into a block, the request is forwarded to the system allocator and the returned pointer is stored in the pool for further deallocation.

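The second paragraph already outlines the pool's architecture: a list of blocks. When one block is used up, the next block is allocated; if a requested size is too large to fit into a block, the memory is requested directly from the system, and the pointer is recorded in the pool so it can be freed later.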

```c
void *
ngx_pnalloc(ngx_pool_t *pool, size_t size)
{
    u_char      *m;
    ngx_pool_t  *p;

    if (size <= pool->max) {   // pool->max: the largest "small" allocation (usable block space)
        p = pool->current;     // the first block worth searching

        do {
            m = p->d.last;

            if ((size_t) (p->d.end - m) >= size) {   // enough room left in this block
                p->d.last = m + size;
                return m;
            }

            p = p->d.next;   // not enough room: try the next block
                             // (current is not advanced here; that happens in ngx_palloc_block)

        } while (p);

        return ngx_palloc_block(pool, size);   // no block has room: add a new block
    }

    // too large for a block: allocate it directly from the system;
    // it is not stored inside a block
    return ngx_palloc_large(pool, size);
}
```

```c
// Add a new block to the pool
static void *
ngx_palloc_block(ngx_pool_t *pool, size_t size)
{
    u_char      *m;
    size_t       psize;
    ngx_pool_t  *p, *new, *current;

    // real size of a block, including the header overhead
    // (pool->max only covers the usable space)
    psize = (size_t) (pool->d.end - (u_char *) pool);

    m = ngx_memalign(NGX_POOL_ALIGNMENT, psize, pool->log);   // allocate a block
    if (m == NULL) {
        return NULL;
    }

    new = (ngx_pool_t *) m;   // the new block

    new->d.end = m + psize;
    new->d.next = NULL;
    new->d.failed = 0;

    m += sizeof(ngx_pool_data_t);   // skip the block header
    m = ngx_align_ptr(m, NGX_ALIGNMENT);
    new->d.last = m + size;

    current = pool->current;

    for (p = current; p->d.next; p = p->d.next) {
        if (p->d.failed++ > 4) {
            current = p->d.next;
        }
    }

    p->d.next = new;   // append the new block

    pool->current = current ? current : new;

    return m;
}
```

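So current points to the first block that is searched on each allocation; blocks before current are no longer considered (they are nearly full: each of them has already failed to satisfy a request more than 4 times).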
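To see the failure counter at work, here is a bookkeeping-only simulation (no real memory) that follows the older ngx_palloc_block logic above. `pool_model_t`, `model_init` and `model_alloc` are hypothetical names invented for this sketch.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_BLOCKS 16

/* Hypothetical model of a pool's block list: only free bytes,
 * failure counters and the current index are simulated. */
typedef struct {
    size_t   free[MAX_BLOCKS];    /* bytes left in each block */
    unsigned failed[MAX_BLOCKS];  /* like ngx_pool_data_t.failed */
    size_t   nblocks;
    size_t   current;             /* like pool->current: first block searched */
    size_t   block_size;
} pool_model_t;

void model_init(pool_model_t *m, size_t block_size)
{
    m->free[0] = block_size;
    m->failed[0] = 0;
    m->nblocks = 1;
    m->current = 0;
    m->block_size = block_size;
}

/* Returns the index of the block that served the request,
 * or (size_t) -1 if the model cannot add another block. */
size_t model_alloc(pool_model_t *m, size_t size)
{
    size_t i, cur;

    for (i = m->current; i < m->nblocks; i++) {   /* search from current on */
        if (m->free[i] >= size) {
            m->free[i] -= size;
            return i;
        }
    }

    if (m->nblocks == MAX_BLOCKS || size > m->block_size) {
        return (size_t) -1;
    }

    /* Every block from current up to, but not including, the last one
     * counts one more failure; a block whose counter was already above 4
     * makes current move past it. */
    cur = m->current;
    for (i = m->current; i + 1 < m->nblocks; i++) {
        if (m->failed[i]++ > 4) {
            cur = i + 1;
        }
    }

    i = m->nblocks++;
    m->free[i] = m->block_size - size;
    m->failed[i] = 0;
    m->current = cur;
    return i;
}
```

With 100-byte blocks and repeated 60-byte requests, every call needs a new block; current stays at block 0 for the first seven calls and then starts moving forward, after which even a small request is no longer served from block 0 although it still has 40 free bytes.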

```c
static void *
ngx_palloc_large(ngx_pool_t *pool, size_t size)
{
    void              *p;
    ngx_uint_t         n;
    ngx_pool_large_t  *large;

    p = ngx_alloc(size, pool->log);   // straight from the heap
    if (p == NULL) {
        return NULL;
    }

    n = 0;

    // large allocations are kept on a linked list; reuse an empty slot
    // (one whose memory was released by ngx_pfree) among the first few entries
    for (large = pool->large; large; large = large->next) {
        if (large->alloc == NULL) {
            large->alloc = p;
            return p;
        }

        if (n++ > 3) {
            break;
        }
    }

    // no infinite recursion here: sizeof(ngx_pool_large_t) always fits in a block
    large = ngx_palloc(pool, sizeof(ngx_pool_large_t));
    if (large == NULL) {
        ngx_free(p);
        return NULL;
    }

    large->alloc = p;
    large->next = pool->large;
    pool->large = large;   // insert at the head

    return p;
}
```

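So large allocations are not optimized at all: the memory is obtained from the system and simply recorded on the large list. Nothing extra is reserved; the only reuse is that a few list nodes whose memory was already freed can take the new pointer.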

ngx_destroy_pool, in turn, shows nicely how the pool is managed:

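```c
void
ngx_destroy_pool(ngx_pool_t *pool)
{
    ngx_pool_t          *p, *n;
    ngx_pool_large_t    *l;
    ngx_pool_cleanup_t  *c;

    // Covered later: before the pool is released, call the cleanup hooks
    // registered on it; freeing memory may require some final work
    for (c = pool->cleanup; c; c = c->next) {
        if (c->handler) {
            c->handler(c->data);
        }
    }

    // free the large allocations: straightforward
    for (l = pool->large; l; l = l->next) {
        if (l->alloc) {
            ngx_free(l->alloc);
        }
    }

    // free every block: just as direct
    for (p = pool, n = pool->d.next; /* void */; p = n, n = n->d.next) {
        ngx_free(p);   // free the block

        if (n == NULL) {
            break;
        }
    }
}
```

Note also that the ngx_pool_large_t structures themselves are allocated inside the blocks, so they are naturally released together with the blocks.

API: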

ngx_palloc(pool, size) -- allocate aligned memory from the specified pool.

ngx_pcalloc(pool, size) -- allocate aligned memory from the specified pool and fill it with zeros.

ngx_pnalloc(pool, size) -- allocate unaligned memory from the specified pool, mostly used for allocating strings.

ngx_pfree(pool, p) -- free memory that was previously allocated in the specified pool. Only allocations that resulted from requests forwarded to the system allocator (i.e. large allocations) can be freed. So ngx_pfree can be called at any time; it just may not actually release anything (it returns NGX_DECLINED in that case).
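To tie the pieces together, here is a toy pool mimicking the behaviour described above: small requests are carved out of fixed-size blocks, large ones go to malloc and are tracked on a list whose nodes live in the pool, pfree only works for large allocations, and destroy releases everything. All names (`toy_pool_t`, `toy_palloc`, ...) are hypothetical, and there is no current pointer, failure counter or cleanup list as in the real ngx_pool_t.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, simplified pool in the spirit of ngx_pool_t. */
typedef struct toy_large_s toy_large_t;
struct toy_large_s {
    void        *alloc;          /* NULL once released by toy_pfree */
    toy_large_t *next;
};

typedef struct toy_block_s toy_block_t;
struct toy_block_s {
    unsigned char *last;         /* first free byte */
    unsigned char *end;          /* one past the end of the block */
    toy_block_t   *next;
};

typedef struct {
    toy_block_t *first;
    toy_large_t *large;
    size_t       block_size;     /* usable bytes per block */
} toy_pool_t;

static toy_block_t *toy_new_block(size_t size)
{
    toy_block_t *b = malloc(sizeof(toy_block_t) + size);

    if (b != NULL) {
        b->last = (unsigned char *) (b + 1);   /* data follows the header */
        b->end = b->last + size;
        b->next = NULL;
    }
    return b;
}

int toy_pool_init(toy_pool_t *pool, size_t block_size)
{
    pool->block_size = block_size;   /* should be >= sizeof(toy_large_t) */
    pool->large = NULL;
    pool->first = toy_new_block(block_size);
    return pool->first != NULL ? 0 : -1;
}

void *toy_palloc(toy_pool_t *pool, size_t size)
{
    toy_block_t *b;
    toy_large_t *l;
    void        *m;

    size = (size + sizeof(void *) - 1) & ~(sizeof(void *) - 1);  /* keep alignment */

    if (size > pool->block_size) {
        /* Large: straight from malloc; the list node lives in the pool. */
        if ((m = malloc(size)) == NULL) {
            return NULL;
        }
        if ((l = toy_palloc(pool, sizeof(toy_large_t))) == NULL) {
            free(m);
            return NULL;
        }
        l->alloc = m;
        l->next = pool->large;       /* insert at the head */
        pool->large = l;
        return m;
    }

    /* Small: first block with enough room; append a block if none has. */
    for (b = pool->first; (size_t) (b->end - b->last) < size; b = b->next) {
        if (b->next == NULL && (b->next = toy_new_block(pool->block_size)) == NULL) {
            return NULL;
        }
    }
    m = b->last;
    b->last += size;
    return m;
}

/* Like ngx_pfree: only large allocations can be released early.
 * Returns 0 if p was freed, -1 otherwise (nginx: NGX_DECLINED). */
int toy_pfree(toy_pool_t *pool, void *p)
{
    for (toy_large_t *l = pool->large; l != NULL; l = l->next) {
        if (l->alloc == p) {
            free(p);
            l->alloc = NULL;
            return 0;
        }
    }
    return -1;
}

void toy_destroy_pool(toy_pool_t *pool)
{
    toy_block_t *next;

    for (toy_large_t *l = pool->large; l != NULL; l = l->next) {
        free(l->alloc);              /* free(NULL) is a no-op */
    }
    for (toy_block_t *b = pool->first; b != NULL; b = next) {
        next = b->next;              /* large nodes are freed with their block */
        free(b);
    }
}
```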

===

Chain links (ngx_chain_t) are actively used in nginx, so the nginx pool implementation provides a way to reuse them. The chain field of ngx_pool_t keeps a list of previously allocated links ready for reuse. For efficient allocation of a chain link in a pool, use the ngx_alloc_chain_link(pool) function. This function looks up a free chain link in the pool list and allocates a new chain link if the pool list is empty. To free a link, call the ngx_free_chain(pool, cl) function.

Why do chain links get this reuse treatment when other structures don't? Because this structure is used so frequently that reusing it pays off in efficiency.

Accordingly, if you search nginx for chain-link allocations, almost all of them are obtained with ngx_alloc_chain_link and released with ngx_free_chain:

```
$ grep -rn 'cl = ngx' src/ | wc -l
52
```

```c
ngx_chain_t *
ngx_alloc_chain_link(ngx_pool_t *pool)
{
    ngx_chain_t  *cl;

    cl = pool->chain;

    if (cl) {                  // reuse a previously freed link
        pool->chain = cl->next;
        return cl;
    }

    cl = ngx_palloc(pool, sizeof(ngx_chain_t));
    if (cl == NULL) {
        return NULL;
    }

    return cl;
}

// Insert the link at the head of the pool's free list
#define ngx_free_chain(pool, cl)                                             \
    cl->next = pool->chain;                                                  \
    pool->chain = cl
```

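One thing to note: a chain link does not record which pool it belongs to, so the caller is responsible for returning it to the pool it was allocated from.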
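The reuse scheme is just a LIFO free list threaded through the `next` field. A minimal sketch, assuming malloc in place of ngx_palloc; `toy_chain_t`, `toy_link_pool_t` and the functions below are hypothetical names:

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-ins for ngx_chain_t and the chain part of ngx_pool_t. */
typedef struct toy_chain_s toy_chain_t;
struct toy_chain_s {
    void        *buf;
    toy_chain_t *next;
};

typedef struct {
    toy_chain_t *chain;        /* free links ready for reuse */
    size_t       fresh_allocs; /* links allocated from scratch */
} toy_link_pool_t;

toy_chain_t *toy_alloc_chain_link(toy_link_pool_t *pool)
{
    toy_chain_t *cl = pool->chain;

    if (cl != NULL) {                   /* reuse: pop from the head */
        pool->chain = cl->next;
        return cl;
    }
    cl = malloc(sizeof(toy_chain_t));   /* nginx: ngx_palloc(pool, ...) */
    if (cl != NULL) {
        pool->fresh_allocs++;
    }
    return cl;
}

/* Same shape as the ngx_free_chain macro: push onto the head. */
void toy_free_chain(toy_link_pool_t *pool, toy_chain_t *cl)
{
    cl->next = pool->chain;
    pool->chain = cl;
}

/* Only needed because this sketch uses malloc instead of a pool. */
void toy_link_pool_release(toy_link_pool_t *pool)
{
    while (pool->chain != NULL) {
        toy_chain_t *next = pool->chain->next;
        free(pool->chain);
        pool->chain = next;
    }
}
```

The most recently freed link is handed out first, and no new memory is allocated as long as the free list is not empty.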

Cleanup handlers can be registered in a pool. A cleanup handler is a callback with an argument which is called when the pool is destroyed. (Why is this mechanism needed?) => A pool is usually tied to a specific nginx object (like an HTTP request) and is destroyed when the object reaches the end of its lifetime. Registering a pool cleanup is a convenient way to release resources, close file descriptors or make final adjustments to the shared data associated with the main object. (A comprehensive summary.)
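ngx_pool_cleanup_add inserts each new entry at the head of the pool's cleanup list, and ngx_destroy_pool walks that list from the head, so handlers run newest first. A small model of that ordering, assuming malloc instead of pool memory; `toy_cpool_t`, `toy_cleanup_add`, `toy_run_cleanups` and `demo_handler` are hypothetical:

```c
#include <assert.h>
#include <stdlib.h>

typedef void (*toy_cleanup_pt)(void *data);

typedef struct toy_cleanup_s toy_cleanup_t;
struct toy_cleanup_s {
    toy_cleanup_pt  handler;
    void           *data;
    toy_cleanup_t  *next;
};

typedef struct {
    toy_cleanup_t *cleanup;      /* like ngx_pool_t.cleanup */
} toy_cpool_t;

/* Like ngx_pool_cleanup_add(pool, 0): the new entry goes to the head,
 * and the caller fills in handler and data. */
toy_cleanup_t *toy_cleanup_add(toy_cpool_t *pool)
{
    toy_cleanup_t *c = malloc(sizeof(toy_cleanup_t));   /* nginx: from the pool */

    if (c == NULL) {
        return NULL;
    }
    c->handler = NULL;
    c->data = NULL;
    c->next = pool->cleanup;
    pool->cleanup = c;
    return c;
}

/* The first step of ngx_destroy_pool: run handlers from the head,
 * i.e. the most recently registered one first. */
void toy_run_cleanups(toy_cpool_t *pool)
{
    toy_cleanup_t *c, *next;

    for (c = pool->cleanup; c != NULL; c = next) {
        next = c->next;
        if (c->handler) {
            c->handler(c->data);
        }
        free(c);
    }
    pool->cleanup = NULL;
}

/* Example handler: records the order in which it ran. */
typedef struct {
    int *counter;
    int  order;
} demo_ctx_t;

void demo_handler(void *data)
{
    demo_ctx_t *d = data;

    d->order = ++*d->counter;
}
```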

To register a pool cleanup, call ngx_pool_cleanup_add(pool, size), which returns an ngx_pool_cleanup_t pointer to be filled in by the caller. Use the size argument to allocate context for the cleanup handler.

```c
ngx_pool_cleanup_t  *cln;

cln = ngx_pool_cleanup_add(pool, 0);
if (cln == NULL) { /* error */ }

cln->handler = ngx_my_cleanup;
cln->data = "foo";

...

static void
ngx_my_cleanup(void *data)
{
    u_char  *msg = data;

    ngx_do_smth(msg);
}
```

2 shared memory

Shared memory is used by nginx to share common data between processes. The ngx_shared_memory_add(cf, name, size, tag) function adds a new shared memory entry ngx_shm_zone_t to a cycle. The function receives the name and size of the zone. Each shared zone must have a unique name. If a shared zone entry with the provided name and tag already exists, the existing zone entry is reused. The function fails with an error if an existing entry with the same name has a different tag. Usually, the address of the module struct is passed as tag, making it possible to reuse shared zones by name within one nginx module.
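The name/tag rules can be modelled with a tiny registry. This is only a sketch of the lookup logic: `zone_registry_t` and `shared_zone_add` are hypothetical, nothing is actually mapped, and the size check reflects nginx's rejection of a conflicting non-zero size for an existing zone.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_ZONES 8

/* Hypothetical zone entry: no memory is mapped in this sketch. */
typedef struct {
    const char *name;
    size_t      size;
    void       *tag;     /* usually the address of the module struct */
} zone_t;

typedef struct {
    zone_t zones[MAX_ZONES];
    size_t n;
} zone_registry_t;

/* Same name + same tag -> reuse the entry; same name + different tag
 * (or a conflicting non-zero size) -> error (NULL). */
zone_t *shared_zone_add(zone_registry_t *reg, const char *name, size_t size, void *tag)
{
    for (size_t i = 0; i < reg->n; i++) {
        zone_t *z = &reg->zones[i];

        if (strcmp(z->name, name) != 0) {
            continue;
        }
        if (z->tag != tag || (size != 0 && size != z->size)) {
            return NULL;
        }
        return z;
    }

    if (reg->n == MAX_ZONES) {
        return NULL;
    }

    zone_t *z = &reg->zones[reg->n++];
    z->name = name;
    z->size = size;
    z->tag = tag;
    return z;
}
```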

For allocating in shared memory, nginx provides the slab pool (ngx_slab_pool_t) mechanism.

A slab pool for allocating memory is automatically created in each nginx shared zone. The pool is located at the beginning of the shared zone and can be accessed by the expression (ngx_slab_pool_t *) shm_zone->shm.addr. (It is laid out somewhat like a regular pool.)

To protect data in shared memory from concurrent access, use the mutex available in the mutex field of ngx_slab_pool_t. A mutex is most commonly used by the slab pool while allocating and freeing memory, but it can be used to protect any other user data structures allocated in the shared zone. (The slab pool comes with its own lock.)

3 logging

Normally, loggers are created by existing nginx code from error_log directives and are available at nearly every stage of processing in the cycle, configuration, client connection and other objects.

A log message is formatted in a buffer of size NGX_MAX_ERROR_STR(currently 2048 bytes) on stack. The message is prepended with the severity level, process ID(pid), connection ID(stored in log->connection), and the system error text. For non-debug messages log->handler is called as well to prepend more specific information to the log message. HTTP module sets ngx_http_log_error() function as log handler to log client and server address, current action(stored in log->action) ,client request line, server name etc.

```c
void
ngx_http_init_connection(ngx_connection_t *c)
{
    ...

    c->log->connection = c->number;   // connection id
    c->log->handler = ngx_http_log_error;
    c->log->action = "reading client request line";

    ...
}
```

```c
static u_char *
ngx_http_log_error(ngx_log_t *log, u_char *buf, size_t len)
{
    u_char              *p;
    ngx_http_request_t  *r;
    ngx_http_log_ctx_t  *ctx;

    // append the current action
    if (log->action) {
        p = ngx_snprintf(buf, len, " while %s", log->action);
        len -= p - buf;
        buf = p;
    }

    ctx = log->data;

    // append the client address
    p = ngx_snprintf(buf, len, ", client: %V", &ctx->connection->addr_text);
    len -= p - buf;

    // with a request, the request's own log handler adds more details;
    // otherwise append the server (listening) address
    r = ctx->request;

    if (r) {
        return r->log_handler(r, ctx->current_request, p, len);

    } else {
        p = ngx_snprintf(p, len, ", server: %V",
                         &ctx->connection->listening->addr_text);
    }

    return p;
}
```

Note the distinction: in the HTTP case, logging is not something that only happens when a request finishes. Error-log messages can be written at any time through ngx_log_error() and the related low-level API. The access log written in the HTTP log phase is a separate mechanism: ngx_http_log_module formats and writes those lines itself.
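The flow "format the message in a fixed stack buffer, then let log->handler append context" can be sketched in plain C. This is a simplified model, not nginx's ngx_log_error_core: `toy_log_t`, `toy_log_error` and `toy_http_log_handler` are hypothetical, and the timestamp and pid prefix are omitted to keep the output deterministic.

```c
#include <assert.h>
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

#define TOY_MAX_ERROR_STR 2048   /* same size as NGX_MAX_ERROR_STR */

typedef struct toy_log_s toy_log_t;
typedef char *(*toy_log_handler_pt)(toy_log_t *log, char *buf, size_t len);

struct toy_log_s {
    unsigned long       connection;  /* connection number */
    const char         *action;      /* e.g. "reading client request line" */
    const char         *client;      /* stands in for log->data */
    toy_log_handler_pt  handler;
};

/* Append formatted text at p without passing last; returns the new end. */
char *toy_vappend(char *p, char *last, const char *fmt, va_list args)
{
    if (p >= last) {
        return p;
    }
    int n = vsnprintf(p, (size_t) (last - p), fmt, args);
    if (n < 0) {
        return p;
    }
    return ((size_t) n < (size_t) (last - p)) ? p + n : last - 1;  /* truncated */
}

char *toy_append(char *p, char *last, const char *fmt, ...)
{
    va_list args;

    va_start(args, fmt);
    p = toy_vappend(p, last, fmt, args);
    va_end(args);
    return p;
}

/* Like ngx_http_log_error: append the action and the client address. */
char *toy_http_log_handler(toy_log_t *log, char *buf, size_t len)
{
    char *p = buf;

    if (log->action) {
        p = toy_append(p, buf + len, " while %s", log->action);
    }
    return toy_append(p, buf + len, ", client: %s", log->client);
}

void toy_log_error(toy_log_t *log, const char *level, char *out, size_t outlen,
                   const char *fmt, ...)
{
    char     errstr[TOY_MAX_ERROR_STR];   /* on the stack, as in nginx */
    char    *p, *last = errstr + sizeof(errstr);
    va_list  args;

    p = toy_append(errstr, last, "[%s] *%lu ", level, log->connection);
    va_start(args, fmt);
    p = toy_vappend(p, last, fmt, args);
    va_end(args);

    if (log->handler && strcmp(level, "debug") != 0) {   /* non-debug only */
        p = log->handler(log, p, (size_t) (last - p));
    }

    snprintf(out, outlen, "%s", errstr);
}
```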

4 cycle

A cycle object stores the nginx runtime context created from a specific configuration. The current cycle is referenced by the ngx_cycle global variable and inherited by nginx workers as they start. Each time the nginx configuration is reloaded, a new cycle is created from the new nginx configuration; the old cycle is usually deleted after the new one is successfully created.

A cycle is created by the ngx_init_cycle() function, which takes the previous cycle as its argument. (Why is this needed? ==> So that the new cycle inherits as many resources as possible from the previous one.)
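Listening sockets are one of the resources carried over on reload: ngx_init_cycle compares the new listeners with the old cycle's and reuses the already-open descriptor when the address matches. The sketch below models only that matching step with string addresses; `toy_cycle_t`, `toy_listening_t` and `toy_inherit_listening` are hypothetical, and real nginx compares sockaddrs and inherits other resources (shared memory zones, open files) as well.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_LISTEN 8

/* Hypothetical, heavily reduced cycle: only its listening sockets. */
typedef struct {
    const char *addr;       /* e.g. "0.0.0.0:80" */
    int         fd;         /* -1 until opened */
    int         inherited;  /* 1 if the fd came from the old cycle */
} toy_listening_t;

typedef struct {
    toy_listening_t listening[MAX_LISTEN];
    size_t          nlistening;
} toy_cycle_t;

/* Reuse the fd of any listener the old cycle had for the same address;
 * the others are left at -1 and would have to be opened fresh.
 * Returns the number of inherited sockets. */
size_t toy_inherit_listening(toy_cycle_t *new_cycle, const toy_cycle_t *old_cycle)
{
    size_t reused = 0;

    for (size_t i = 0; i < new_cycle->nlistening; i++) {
        toy_listening_t *ls = &new_cycle->listening[i];

        ls->fd = -1;
        ls->inherited = 0;

        if (old_cycle == NULL) {
            continue;   /* first start: nothing to inherit */
        }

        for (size_t j = 0; j < old_cycle->nlistening; j++) {
            if (strcmp(ls->addr, old_cycle->listening[j].addr) == 0) {
                ls->fd = old_cycle->listening[j].fd;
                ls->inherited = 1;
                reused++;
                break;
            }
        }
    }

    return reused;
}
```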

5 buffer

For input/output operations, nginx provides the buffer type ngx_buf_t. Normally, it's used to hold data to be written to a destination or read from a source. Memory for the buffer is allocated separately and is not related to the buffer structure.

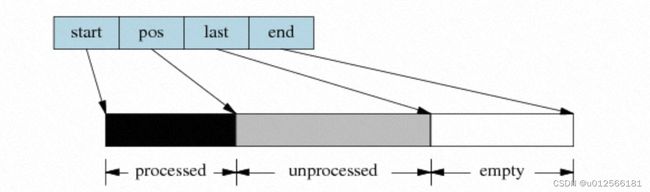

* start, end -- The boundaries of the memory block allocated for the buffer.

* pos, last -- The boundaries of the memory buffer; normally a subrange of start .. end.

* tag -- Unique value used to distinguish buffers, created by different nginx modules, usually for the purpose of buffer reuse.

* temporary -- Flag indicating that the buffer references writable memory (this buffer can be written to).

* memory -- Flag indicating that the buffer references read-only memory (in contrast to the above: this buffer can only be read).

* flush -- Flag indicating that all data prior to the buffer need to be flushed (somewhat like the PSH flag in TCP).

* recycled -- Flag indicating that the buffer can be reused and needs to be consumed as soon as possible.

* last_buf -- Flag indicating that the buffer is the last in output.

* last_in_chain -- Flag indicating that there are no more data buffers in a request or subrequest.

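Note the difference between last_buf and last_in_chain: last_in_chain marks the last buffer of the current request's or subrequest's data, while last_buf marks the last buffer of the entire output. So a last_in_chain buffer is not necessarily last_buf, but a last_buf buffer is always the last in its chain (the output may be split across several chains, e.g. when subrequests are involved).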

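For input and output operations, buffers are linked in chains (in I/O, a buffer is always used together with a chain). Why? A standalone buf has no recycling or management mechanism of its own, while chained bufs are easy to manage: as mentioned above, chain links are usually allocated from a pool and reused.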
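To make pos/last and chains concrete, here is a sketch of how a writer might account for partially sent output: the unsent data is the sum of last - pos over the chain, and after a partial send pos is advanced link by link (nginx does similar bookkeeping in ngx_chain_update_sent). `toy_buf_t`, `toy_chain_t` and the helpers are hypothetical reductions, not nginx's API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical subset of ngx_buf_t / ngx_chain_t fields. */
typedef struct {
    unsigned char *start, *end;   /* whole memory area */
    unsigned char *pos, *last;    /* data still to be processed */
    unsigned       last_buf:1;    /* last buffer of the whole output */
} toy_buf_t;

typedef struct toy_chain_s toy_chain_t;
struct toy_chain_s {
    toy_buf_t   *buf;
    toy_chain_t *next;
};

void toy_buf_init(toy_buf_t *b, unsigned char *mem, size_t cap, size_t len)
{
    b->start = mem;
    b->end = mem + cap;
    b->pos = mem;
    b->last = mem + len;
    b->last_buf = 0;
}

/* Bytes not yet sent: sum of (last - pos) over the chain. */
size_t toy_chain_size(const toy_chain_t *cl)
{
    size_t n = 0;

    for (; cl != NULL; cl = cl->next) {
        n += (size_t) (cl->buf->last - cl->buf->pos);
    }
    return n;
}

/* Account for `sent` bytes: advance pos through the chain and return the
 * first link that still has data, or NULL if everything was consumed. */
toy_chain_t *toy_chain_consume(toy_chain_t *cl, size_t sent)
{
    for (; cl != NULL; cl = cl->next) {
        size_t avail = (size_t) (cl->buf->last - cl->buf->pos);

        if (sent < avail) {
            cl->buf->pos += sent;
            return cl;
        }
        cl->buf->pos = cl->buf->last;
        sent -= avail;
    }
    return NULL;
}
```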

Bufs are used very flexibly, so they are best understood in concrete scenarios (you will see bufs wherever there is input or output).

6 Networking

Connection

The connection type (ngx_connection_t) is a wrapper around a socket descriptor.

* fd -- Socket descriptor.

* data -- Arbitrary connection context. Normally, it is a pointer to a higher-level object built on top of the connection, such as an HTTP request or a Stream session.

* read, write -- Read and write events for the connection.

* recv, send, recv_chain, send_chain -- I/O operations for the connection.

* ssl -- SSL context for the connection.

* reusable -- Flag indicating the connection is in a state that makes it eligible for reuse (i.e. this connection may be reclaimed).

* close -- Flag indicating that the connection is being reused and needs to be closed (it is being reclaimed and must be closed). This flag is usually checked in the connection's read handler, which does the cleanup before the connection is actually drained and recycled.

All connection structures are precreated when a worker starts and stored in the connections field of the cycle object.

Connection reclaiming and reuse:

Because the number of connections per worker is limited, nginx provides a way to grab connections that are currently in use (i.e. connections that are open but idle, such as keepalive connections, can be grabbed and reused).

ngx_reusable_connection(c, 1) sets the reuse flag in the connection structure and inserts the connection into the reusable_connections_queue of the cycle. Whenever ngx_get_connection() finds out there are no available connections in the cycle's free_connections list, it calls ngx_drain_connections() to release a specific number of reusable connections. (In other words, reusable connections are still open and sit on reusable_connections_queue; calling drain releases them and puts them back on the free list so they can be used, i.e. they get "squeezed out".) For each such connection, the close flag is set and its read handler is called, which is supposed to free the connection by calling ngx_close_connection(c) and make it available for reuse. (Before being closed, a drained connection still gets its read handler called, so that it can clean up and handle anything pending.)

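Why reuse connections at all? Connections are limited, so connections that are not currently carrying data (e.g. idle keepalive connections, or freshly established ones that have not received any data yet) can be squeezed out and put to real work.

```c
ngx_connection_t *
ngx_get_connection(ngx_socket_t s, ngx_log_t *log)
{
    ...

    c = ngx_cycle->free_connections;

    if (c == NULL) {
        // Note this: if the free list is empty, is there nothing to be done?
        // nginx tries to squeeze out a batch (32) of connections that are in
        // keepalive or established but without data. Since keepalive
        // connections carry no data right now, reclaiming them costs the least.
        ngx_drain_connections();
        c = ngx_cycle->free_connections;
    }

    if (c == NULL) {
        ngx_log_error(NGX_LOG_ALERT, log, 0,
                      "%ui worker_connections are not enough",
                      ngx_cycle->connection_n);
        return NULL;
    }

    ...

    ngx_cycle->free_connections = c->data;   // unlink: always take from the head
    ngx_cycle->free_connections_n--;

    ...

    return c;
}

void
ngx_free_connection(ngx_connection_t *c)
{
    c->data = ngx_cycle->free_connections;   // always put back at the head
    ngx_cycle->free_connections = c;

    ...
}
```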
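The free list itself needs no extra memory: while a connection is free, its `data` field points to the next free connection, and the preallocated array is threaded into that list when the worker starts. A minimal sketch of that structure; `toy_connection_t`, `toy_cycle_t` and the functions are hypothetical, with a tiny array standing in for worker_connections:

```c
#include <assert.h>
#include <stddef.h>

#define TOY_CONNECTIONS 4   /* tiny stand-in for worker_connections */

/* Hypothetical reduced ngx_connection_t: while free, `data` points
 * to the next free connection. */
typedef struct {
    void *data;
    int   fd;
} toy_connection_t;

typedef struct {
    toy_connection_t  connections[TOY_CONNECTIONS];  /* preallocated */
    toy_connection_t *free_connections;
    size_t            free_connections_n;
} toy_cycle_t;

void toy_init_connections(toy_cycle_t *cycle)
{
    toy_connection_t *next = NULL;

    /* Walk backwards so that connections[0] ends up at the head. */
    for (size_t i = TOY_CONNECTIONS; i-- > 0; ) {
        cycle->connections[i].data = next;
        cycle->connections[i].fd = -1;
        next = &cycle->connections[i];
    }
    cycle->free_connections = next;
    cycle->free_connections_n = TOY_CONNECTIONS;
}

toy_connection_t *toy_get_connection(toy_cycle_t *cycle, int fd)
{
    toy_connection_t *c = cycle->free_connections;

    if (c == NULL) {
        return NULL;   /* nginx would try ngx_drain_connections() first */
    }
    cycle->free_connections = c->data;   /* pop from the head */
    cycle->free_connections_n--;
    c->data = NULL;
    c->fd = fd;
    return c;
}

void toy_free_connection(toy_cycle_t *cycle, toy_connection_t *c)
{
    c->data = cycle->free_connections;   /* push back onto the head */
    cycle->free_connections = c;
    cycle->free_connections_n++;
}
```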

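How does the drain mechanism work? The ngx_cycle->reusable_connections_queue list holds these idle (e.g. keepalive) connections, and they can be squeezed out from there:

```c
static void
ngx_drain_connections(void)
{
    ngx_int_t          i;
    ngx_queue_t       *q;
    ngx_connection_t  *c;

    for (i = 0; i < 32; i++) {
        if (ngx_queue_empty(&ngx_cycle->reusable_connections_queue)) {
            break;   // nothing left to reclaim
        }

        // Start from the tail: connections are inserted at the head, so the
        // tail holds the connection that has been idle the longest
        q = ngx_queue_last(&ngx_cycle->reusable_connections_queue);
        c = ngx_queue_data(q, ngx_connection_t, queue);

        // The key step: set close = 1 and call c->read->handler.
        // In the HTTP framework this is ngx_http_keepalive_handler for
        // keepalive connections, or ngx_http_wait_request_handler for fresh
        // ones (layer-4 modules are not discussed here)
        c->close = 1;
        c->read->handler(c->read);
    }
}
```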

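Polymorphism through the connection's function pointers: layer-7 and layer-4 code can install different handlers, and they can be static.

```c
static void
ngx_http_keepalive_handler(ngx_event_t *rev)
{
    ngx_connection_t  *c;

    c = rev->data;

    if (rev->timedout || c->close) {   // when called from drain, close is set, so c gets closed
        ngx_http_close_connection(c);
        return;
    }

    ...
}

// Tear down an HTTP keepalive connection
void
ngx_http_close_connection(ngx_connection_t *c)
{
    ngx_pool_t  *pool;

    c->destroyed = 1;

    pool = c->pool;

    ngx_close_connection(c);

    ngx_destroy_pool(pool);
}
```

This is where c is reclaimed. Two things must happen: 1) remove it from ngx_cycle->reusable_connections_queue, since it is no longer an idle keepalive connection; 2) call ngx_free_connection to return it to ngx_cycle->free_connections.

```c
void
ngx_close_connection(ngx_connection_t *c)
{
    // Before freeing c: remove its events from the timer tree, the posted
    // queues and epoll, so that stale events are never triggered
    if (c->read->timer_set) {
        ngx_del_timer(c->read);
    }

    if (ngx_del_conn) {
        ngx_del_conn(c, NGX_CLOSE_EVENT);
    }

    if (c->read->posted) {
        ngx_delete_posted_event(c->read);
    }

    if (c->write->posted) {
        ngx_delete_posted_event(c->write);
    }

    c->read->closed = 1;
    c->write->closed = 1;

    // remove c from ngx_cycle->reusable_connections_queue (so it cannot be reclaimed again)
    ngx_reusable_connection(c, 0);

    // return it to ngx_cycle->free_connections
    ngx_free_connection(c);

    // (the real function also deletes the write timer and finally closes the socket)
}
```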

Looking back at how c gets onto ngx_cycle->reusable_connections_queue: it is added every time the connection goes into keepalive:

```c
static void
ngx_http_finalize_connection(ngx_http_request_t *r)
{
    ...

    if (r->keepalive && clcf->keepalive_timeout > 0) {
        ngx_http_set_keepalive(r);
        return;
    }

    ...
}

static void
ngx_http_set_keepalive(ngx_http_request_t *r)
{
    ...

    c = r->connection;
    rev = c->read;

    rev->handler = ngx_http_keepalive_handler;

    ...

    c->idle = 1;
    ngx_reusable_connection(c, 1);   // add to ngx_cycle->reusable_connections_queue

    ngx_add_timer(rev, clcf->keepalive_timeout);

    ...
}
```

```c
void
ngx_reusable_connection(ngx_connection_t *c, ngx_uint_t reusable)
{
    // The 0 and 1 cases are handled together here, which is not very intuitive;
    // it would be clearer as two separate functions:
    //
    // ngx_reusable_connection(c, 1) ==> "make c reclaimable" (drain may squeeze it out);
    //   typically when the connection has no data and goes into keepalive
    //
    // ngx_reusable_connection(c, 0) ==> "make c non-reclaimable" (still needed);
    //   typically when data suddenly arrives on a keepalive connection and must
    //   be processed, or when c has already been taken off the reusable queue

    // Whatever happens to c next, first undo its current state
    if (c->reusable) {
        ngx_queue_remove(&c->queue);
        ngx_cycle->reusable_connections_n--;
    }

    c->reusable = reusable;

    if (reusable) {
        ngx_queue_insert_head(
            (ngx_queue_t *) &ngx_cycle->reusable_connections_queue, &c->queue);
        ngx_cycle->reusable_connections_n++;
    }
}
```
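The whole reusable/drain dance can be modelled in a few dozen lines: a sentinel-based doubly linked queue (like ngx_queue_t), the same 0/1 logic as ngx_reusable_connection, and a drain loop that takes connections from the tail, sets close and calls the read handler. All names here are hypothetical, and the cast from queue node to connection works only because `queue` is the first member (nginx uses ngx_queue_data/offsetof instead).

```c
#include <assert.h>
#include <stddef.h>

/* Minimal circular doubly linked list with a sentinel, like ngx_queue_t. */
typedef struct toy_queue_s toy_queue_t;
struct toy_queue_s {
    toy_queue_t *prev, *next;
};

#define toy_queue_init(q)   ((q)->prev = (q), (q)->next = (q))
#define toy_queue_empty(h)  ((h) == (h)->prev)

void toy_queue_insert_head(toy_queue_t *h, toy_queue_t *x)
{
    x->next = h->next;
    x->next->prev = x;
    x->prev = h;
    h->next = x;
}

void toy_queue_remove(toy_queue_t *x)
{
    x->next->prev = x->prev;
    x->prev->next = x->next;
}

typedef struct toy_cycle_s toy_cycle_t;
typedef struct toy_conn_s toy_conn_t;

struct toy_conn_s {
    toy_queue_t  queue;          /* first member, so the cast below is valid */
    unsigned     reusable:1;
    unsigned     close:1;
    void       (*read_handler)(toy_cycle_t *cycle, toy_conn_t *c);
    int          id;
};

struct toy_cycle_s {
    toy_queue_t  reusable_queue;
    size_t       reusable_n;
};

/* Same 0/1 logic as ngx_reusable_connection(c, reusable). */
void toy_reusable_connection(toy_cycle_t *cycle, toy_conn_t *c, unsigned reusable)
{
    if (c->reusable) {
        toy_queue_remove(&c->queue);
        cycle->reusable_n--;
    }
    c->reusable = reusable;
    if (reusable) {
        toy_queue_insert_head(&cycle->reusable_queue, &c->queue);
        cycle->reusable_n++;
    }
}

/* Drain up to n connections, oldest (tail) first. */
size_t toy_drain_connections(toy_cycle_t *cycle, size_t n)
{
    size_t drained = 0;

    while (drained < n && !toy_queue_empty(&cycle->reusable_queue)) {
        toy_conn_t *c = (toy_conn_t *) cycle->reusable_queue.prev;

        c->close = 1;
        c->read_handler(cycle, c);   /* expected to close c */
        drained++;
    }
    return drained;
}

/* Toy keepalive handler: closing takes c off the reusable queue, as
 * ngx_close_connection does; a real one would also free c and its fd. */
void toy_keepalive_handler(toy_cycle_t *cycle, toy_conn_t *c)
{
    if (c->close) {
        toy_reusable_connection(cycle, c, 0);
    }
}
```

In the test below, three idle connections are marked reusable, data "arrives" on the middle one, and a drain of one connection picks the oldest remaining idle connection.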

At this point, a connection has gone through its whole life cycle: free list -> in use -> keepalive -> squeezed out by drain -> free list.

HTTP client connections are an example of reusable connections in nginx; they are marked as reusable until the first request byte is received from the client. (That is, a freshly established connection is marked reusable as long as no client data has arrived:)

```c
// Called to initialize c after the TCP connection is established
void
ngx_http_init_connection(ngx_connection_t *c)
{
    ...

    ngx_add_timer(rev, c->listening->post_accept_timeout);
    ngx_reusable_connection(c, 1);   // marked reusable here

    ngx_handle_read_event(rev, 0);   // wait until the socket is readable
}
```

Once data arrives, the connection must be taken off the queue:

```c
static void
ngx_http_wait_request_handler(ngx_event_t *rev)
{
    ...

    n = c->recv(c, b->last, size);

    if (n == NGX_AGAIN) {

        if (!rev->timer_set) {
            ...
        }

        ngx_handle_read_event(rev, 0);   // wait in epoll again

        /*
         * We are trying not to hold c->buffer's memory for an idle connection.
         * ngx_pfree is normally called to return a large block to the system,
         * so it only returns NGX_OK if b->start really was a large allocation.
         */

        if (ngx_pfree(c->pool, b->start) == NGX_OK) {
            b->start = NULL;
        }

        return;
    }

    // n > 0
    ngx_reusable_connection(c, 0);   // data has arrived: c must not be squeezed out, take it off the queue

    ...
}
```

Events

event

Debugging memory issues

To debug memory issues such as buffer overruns or use-after-free errors, you can use the AddressSanitizer (ASan) supported by some modern compilers. To enable ASan with gcc and clang, use the -fsanitize=address compiler and linker option. When building nginx, this can be done by adding the option to the --with-cc-opt and --with-ld-opt parameters of the configure script.

Since most allocations in nginx are made from the nginx internal pool, enabling ASan may not always be enough to debug memory issues. The internal pool allocates a big chunk of memory from the system and cuts smaller allocations from it. However, this mechanism can be disabled by setting the NGX_DEBUG_PALLOC macro to 1. In this case, allocations are passed directly to the system allocator, giving it full control over the buffer boundaries.

```c
void *
ngx_palloc(ngx_pool_t *pool, size_t size)
{
#if !(NGX_DEBUG_PALLOC)
    if (size <= pool->max) {
        return ngx_palloc_small(pool, size, 1);
    }
#endif

    return ngx_palloc_large(pool, size);
}
```

If NGX_DEBUG_PALLOC is set, every allocation goes through the large-allocation path (i.e. straight to the system allocator).

Common pitfalls

Writing C modules

In most cases your task can be accomplished by creating a proper configuration. (Configuration alone can often get the job done...)

Lua made nginx programmable, which really extended nginx's life by a long way.

Global Variables

Avoid using global variables in your modules. Most likely this is an error to have a global variable. Any global data should be tied to a configuration cycle and be allocated from the corresponding memory pool. This allows nginx to perform graceful configuration reloads. An attempt to use global variables will likely break this feature. (In other words, avoid keeping module state in global variables; tie such data to the cycle and allocate it from cycle->pool, so that it stays consistent across reloads.)

Manual Memory Management

Instead of dealing with the malloc/free approach, which is error prone, learn how to use nginx pools. A pool is created and tied to an object: configuration, cycle, connection, or HTTP request. When the object is destroyed, the associated pool is destroyed too. So when working with an object, it is possible to allocate the amount needed from the corresponding pool and not care about freeing memory, even in case of errors. (That is, whenever you need memory, allocate it from a pool; the prerequisite is knowing which object's lifetime your data follows, e.g. the cycle, connection or request mentioned above.)

Threads

Don't try to use threads in nginx.

1. Most nginx functions are not thread-safe. It is expected that a thread will be executing only system calls and thread-safe library functions.

If you are tempted to use threads, use timers instead wherever possible: if you want to run some code that is not related to client request processing, the proper way is to schedule a timer in the init_process module handler and perform the required actions in the timer handler.

So why does nginx implement threads at all? nginx makes use of threads to boost I/O-related operations, but this is a special case with a lot of limitations (a restricted mechanism added only to improve I/O performance).

HTTP Requests to External Services

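If you want nginx to send a request to a third-party service, hand-writing the I/O is fairly tricky, and using a blocking library such as libcurl would be plainly wrong. nginx can actually do this by itself: the answer is subrequests. (Of course, once Lua came along this became much easier.)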

There are two basic usage scenarios when an external request is needed:

* in the context of processing a client request

* in the context of a worker process (e.g. a timer)

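In the first case, the best approach is to use the subrequest API. It is quite direct: issue a subrequest to a location, and configure that location (e.g. with proxy_pass) so that nginx does the work. (This pattern is used a lot; for example, in an asynchronous rate-limiting module, Lua uses cosocket to send a request to a location, and that location proxies it to the remote rate-limiting service.) If you implement this in C, a good example to follow is the auth_request module (ngx_http_auth_request_module), which is itself an authentication module: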

```nginx
location /private/ {
    auth_request /auth;
    ...
}

location = /auth {
    proxy_pass ...
    proxy_pass_request_body off;
    proxy_set_header Content-Length "";
    proxy_set_header X-Original-URI $request_uri;
}
```

Looking at the auth_request module code, the key part is:

```c
static ngx_int_t
ngx_http_auth_request_handler(ngx_http_request_t *r)   // a handler inserted in the access phase
{
    ...

    if (ctx != NULL) {
        // act on the subrequest's response status to decide whether
        // the main request may pass

        if (ctx->status == NGX_HTTP_FORBIDDEN) {
            return ctx->status;
        }

        if (ctx->status >= NGX_HTTP_OK
            && ctx->status < NGX_HTTP_SPECIAL_RESPONSE)
        {
            return NGX_OK;
        }

        ...
    }

    ...

    ps->handler = ngx_http_auth_request_done;
    ps->data = ctx;

    if (ngx_http_subrequest(r, &arcf->uri, NULL, &sr, ps,   // issue the subrequest
                            NGX_HTTP_SUBREQUEST_WAITED)
        != NGX_OK)
    {
        return NGX_ERROR;
    }

    ...
}
```

For the second case, it is possible to use the basic HTTP client functionality available in nginx. For example, the OCSP module implements a simple HTTP client. (Honestly, Lua has solved a lot of these problems.) [This one is too complex.]