Installing a Ceph (17.2.6 Quincy) cluster on CentOS Stream 8 with cephadm

I. Environment

1. Machine list

| Name | IP | Role | Notes |
| --- | --- | --- | --- |
| cephnode120 | 10.0.49.120 | _admin | Two NICs; two disks: sda (60G), sdb (160G) |
| cephnode121 | 10.0.49.121 | _storage | Two NICs; two disks: sda (60G), sdb (160G) |
| cephnode122 | 10.0.49.122 | _storage | Two NICs; two disks: sda (60G), sdb (160G) |

2. Software environment

[root@cephnode120 ~]# cat /etc/redhat-release

CentOS Stream release 8

[root@cephnode120 ~]# python3 --version

Python 3.6.8

[root@cephnode120 ~]# chronyd --version

chronyd (chrony) version 4.2

[root@cephnode120 ~]# docker version

Emulate Docker CLI using podman. Create /etc/containers/nodocker to quiet msg.

Client: Podman Engine

Version: 4.3.1

API Version: 4.3.1

Go Version: go1.19.4

Built: Wed Feb 1 06:06:15 2023

OS/Arch: linux/amd64

3. Software preparation

CentOS 8 is no longer maintained, so the repo sources need to be switched to the Aliyun mirror.

# Back up the old configuration file

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

# Download the new CentOS-Base.repo into /etc/yum.repos.d/

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-8.repo

# Remove the Alibaba-internal mirror entries (needed on machines outside Alibaba Cloud)

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

# Rebuild the cache

yum clean all && yum makecache
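To see what the sed filter removes before touching the real repo file, it can be tried on a throwaway copy. The sketch below wraps the same two sed expressions in a function; the function name `strip_internal_mirrors` and the sample file contents are made up for illustration, and `sed -i` is assumed to be GNU sed as shipped on CentOS.

```shell
# Sketch: apply the same sed filter to any repo file passed as an argument,
# so it can be tested on a copy first.
strip_internal_mirrors() {
    # Delete every line that mentions the Alibaba-internal mirror hosts.
    sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' "$1"
}

# Demo on a scratch file with made-up contents (not the real Centos-8.repo).
demo_repo=$(mktemp)
cat > "$demo_repo" <<'EOF'
[base]
baseurl=https://mirrors.aliyun.com/centos/$releasever/BaseOS/$basearch/os/
        http://mirrors.aliyuncs.com/centos/$releasever/BaseOS/$basearch/os/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/BaseOS/$basearch/os/
EOF
strip_internal_mirrors "$demo_repo"
cat "$demo_repo"   # only the [base] line and the mirrors.aliyun.com URL remain
```

The public `mirrors.aliyun.com` line survives because neither pattern matches it; only the `aliyuncs.com` hosts are dropped.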

# Verify

[root@cephnode120 ~]# yum repolist

Repository extras is listed more than once in the configuration

repo id repo name

AppStream CentOS-8-stream - AppStream - mirrors.aliyun.com

Ceph Ceph x86_64

Ceph-noarch Ceph noarch

Ceph-source Ceph SRPMS

appstream CentOS Stream 8 - AppStream

base CentOS-8-stream - Base - mirrors.aliyun.com

baseos CentOS Stream 8 - BaseOS

centos-ceph-quincy CentOS-8-stream - Ceph Quincy

epel Extra Packages for Enterprise Linux 8 - x86_64

epel-modular Extra Packages for Enterprise Linux Modular 8 - x86_64

extras CentOS-8-stream - Extras - mirrors.aliyun.com

extras-common CentOS Stream 8 - Extras common packages

4. Configure hosts resolution

[root@cephnode121 ~]# vi /etc/hosts

# Add the following entries

10.0.49.120 cephnode120

10.0.49.121 cephnode121

10.0.49.122 cephnode122

Save and quit with :wq. Repeat this on every node.
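Editing the file by hand on three machines is easy to get wrong, so the same entries could also be appended by a small script that is safe to run more than once. This is a minimal sketch: the helper name `add_host_entry` is hypothetical, and the demo writes to a scratch file rather than `/etc/hosts`.

```shell
# Append "IP NAME" to a hosts file only when NAME is not already listed.
add_host_entry() {
    hosts_file=$1; ip=$2; name=$3
    # -w matches whole words, so "cephnode12" does not match "cephnode120".
    if ! grep -qw -- "$name" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Demo against a scratch file; each entry is added twice to show the
# second call is a no-op.
scratch=$(mktemp)
for n in 120 121 122; do
    add_host_entry "$scratch" "10.0.49.$n" "cephnode$n"
    add_host_entry "$scratch" "10.0.49.$n" "cephnode$n"
done
cat "$scratch"
```

For real use the first argument would be `/etc/hosts`, run as root on each node.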

5. Set up passwordless login

# Copy the cephnode120 node's key to every Ceph node

[root@cephnode120 ~]# ssh-keygen

ssh-copy-id root@cephnode120

ssh-copy-id root@cephnode121

ssh-copy-id root@cephnode122

# Check that each Ceph node can be logged into without a password

[root@cephnode120 ~]# ssh cephnode121

Activate the web console with: systemctl enable --now cockpit.socket

Last login: Sat May 27 15:46:26 2023 from 10.0.49.120

[root@cephnode121 ~]#
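The manual login test above can be repeated for all nodes without opening interactive sessions. The sketch below assumes the keys were copied for `root` as shown; the function name `check_passwordless` is hypothetical. `BatchMode=yes` is a standard OpenSSH option that makes `ssh` fail instead of prompting for a password.

```shell
# Report, per node, whether key-based SSH login works without prompting.
# Returns non-zero if any node fails.
check_passwordless() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes: never ask for a password, just fail.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

On cephnode120 it would be called as `check_passwordless cephnode120 cephnode121 cephnode122`.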

System requirements for a manual cephadm installation:

- Python 3
- Systemd
- Podman or Docker for running containers
- Time synchronization (such as chrony or NTP)
- LVM2 for provisioning storage devices
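The requirements above can be checked on each node before installing anything. This is a rough sketch: the mapping from requirement to command name (`chronyd` for time sync, `lvm` for LVM2) is an assumption that fits the environment shown earlier, and `missing_tools` is a hypothetical helper name.

```shell
# Print every command from the argument list that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Assumed mapping: python3, systemd (systemctl), chrony (chronyd), LVM2 (lvm).
missing_tools python3 systemctl chronyd lvm

# Either container engine satisfies the requirement.
command -v podman >/dev/null 2>&1 || command -v docker >/dev/null 2>&1 \
    || echo "podman or docker"
```

No output means every checked tool was found; each printed name is something to install first.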

II. Installation

1. Install cephadm

dnf search release-ceph

dnf install --assumeyes centos-release-ceph-quincy

dnf install --assumeyes cephadm

2. Bootstrap a new cluster

cephadm bootstrap --mon-ip 10.0.49.120

3. Enable the Ceph CLI

cephadm add-repo --release quincy

cephadm install ceph-common

# Verify

[root@cephnode120 ~]# ceph -s

cluster:

id: 9621bcc6-fc83-11ed-9be2-0050568b3a3f

health: HEALTH_OK

services:

mon: 3 daemons, quorum cephnode120,cephnode121,cephnode122 (age 38h)

mgr: cephnode120.rvmgrv(active, since 39h), standbys: cephnode121.zlzqmb

mds: 1/1 daemons up, 2 standby

osd: 3 osds: 3 up (since 24h), 3 in (since 24h)

rgw: 4 daemons active (2 hosts, 1 zones)

data:

volumes: 1/1 healthy

pools: 8 pools, 209 pgs

objects: 254 objects, 460 KiB

usage: 627 MiB used, 479 GiB / 480 GiB avail

pgs: 209 active+clean

io:

client: 255 B/s rd, 0 op/s rd, 0 op/s wr

4. Add hosts

Add the other hosts to the initialized cluster.

ceph orch host add cephnode121 10.0.49.121 --labels _storage

ceph orch host add cephnode122 10.0.49.122 --labels _storage
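Before the orchestrator can manage a host, the cluster's own public SSH key (which bootstrap writes to `/etc/ceph/ceph.pub`) generally has to be installed on that host, and the host has to be registered with `ceph orch host add`. The sketch below strings both steps together for the two storage nodes. The `run` wrapper, the `DRY_RUN` variable and the function name `add_storage_host` are hypothetical conveniences, and the script defaults to printing the commands rather than executing them.

```shell
# DRY_RUN=1 prints each command instead of running it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Install the cluster's public key on a host, then register the host
# with the _storage label used in this article.
add_storage_host() {
    name=$1; addr=$2
    run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$name" &&
    run ceph orch host add "$name" "$addr" --labels _storage
}

DRY_RUN=${DRY_RUN:-1}   # default to a dry run; export DRY_RUN=0 to execute
add_storage_host cephnode121 10.0.49.121
add_storage_host cephnode122 10.0.49.122
```

Reviewing the dry-run output first makes it easy to catch a mistyped host name before it reaches the cluster.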

# Verify

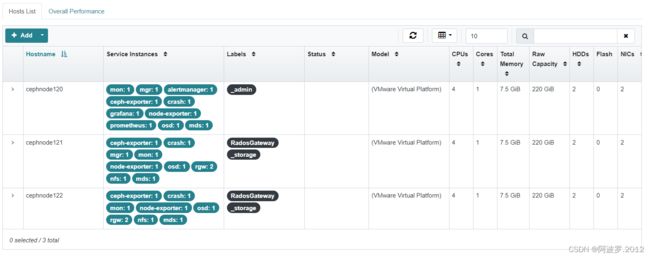

[root@cephnode120 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

cephnode120 10.0.49.120 _admin

cephnode121 10.0.49.121 _storage RadosGateway

cephnode122 10.0.49.122 _storage RadosGateway

3 hosts in cluster

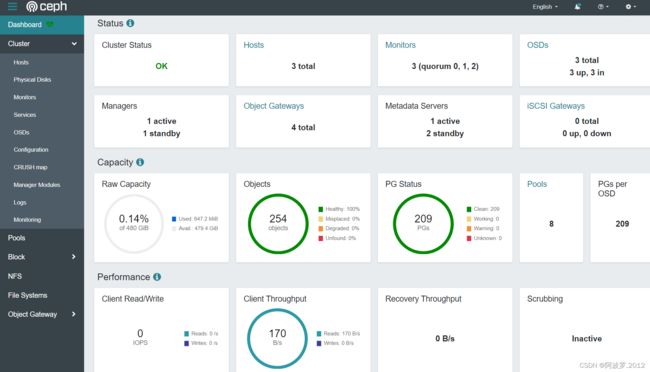

At this point the dashboard also shows that the basic cluster has formed.

III. Configuration

1. Set up OSDs

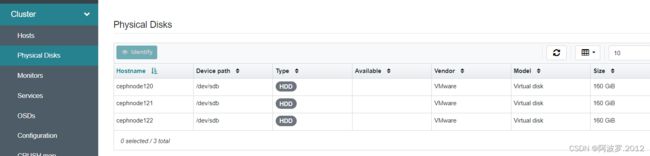

List the storage devices available in the cluster

[root@cephnode120 ~]# ceph orch device ls

HOST PATH TYPE DEVICE ID SIZE AVAILABLE REFRESHED REJECT REASONS

cephnode120 /dev/sdb hdd 171G No 21m ago Insufficient space (<10 extents) on vgs, LVM detected, locked

cephnode121 /dev/sdb hdd 171G No 21m ago Insufficient space (<10 extents) on vgs, LVM detected, locked

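cephnode122 /dev/sdb hdd 171G No 21m ago Insufficient space (<10 extents) on vgs, LVM detected, locked

The devices can also be viewed in the dashboard.

Turn every available device into an OSD

ceph orch apply osd --all-available-devices

A specific device on a specific host can also be added individually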

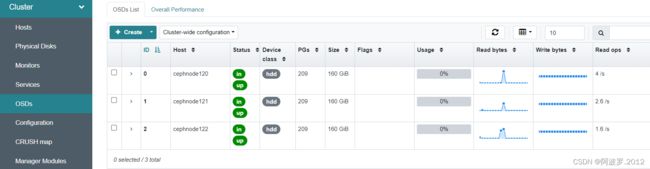

ceph orch daemon add osd cephnode121:/dev/sdc

Wait a few minutes, then verify

[root@cephnode120 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.46857 root default

-7 0.15619 host cephnode120

0 hdd 0.15619 osd.0 up 1.00000 1.00000

-5 0.15619 host cephnode121

1 hdd 0.15619 osd.1 up 1.00000 1.00000

-3 0.15619 host cephnode122

2 hdd 0.15619 osd.2 up 1.00000 1.00000

The OSDs can also be viewed in the dashboard.
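Instead of reading the tree by eye after "a few minutes", the number of OSDs that are up can be counted from the same output. This is a sketch only: `count_up_osds` is a hypothetical helper, and it assumes the column layout shown above, where every OSD line has a device class in the second column.

```shell
# Count OSDs reported as "up" in `ceph osd tree` output read from stdin.
# Assumes the layout: ID CLASS WEIGHT NAME STATUS ...
count_up_osds() {
    awk '$4 ~ /^osd\.[0-9]+$/ && $5 == "up" { n++ } END { print n + 0 }'
}

# Demo with the output captured in this article.
count_up_osds <<'EOF'
ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF
-1 0.46857 root default
-7 0.15619 host cephnode120
0 hdd 0.15619 osd.0 up 1.00000 1.00000
-5 0.15619 host cephnode121
1 hdd 0.15619 osd.1 up 1.00000 1.00000
-3 0.15619 host cephnode122
2 hdd 0.15619 osd.2 up 1.00000 1.00000
EOF
# prints 3
```

On a live cluster the call would be `ceph osd tree | count_up_osds`, repeated until it reaches the expected number.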

2. Set up RGW

ceph orch host label add cephnode121 RadosGateway

ceph orch host label add cephnode122 RadosGateway

ceph orch apply rgw foo '--placement=label:RadosGateway count-per-host:2' --port=8000

RGW can also be deployed without pinning it to specific hosts

ceph orch apply rgw foo

Wait a few minutes, then verify
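One way to verify is to probe the gateway endpoints directly. With `count-per-host:2` and `--port=8000`, cephadm is expected to place the two daemons on each host on consecutive ports (8000 and 8001); the sketch below lists the resulting URLs under that assumption. `rgw_endpoints` is a hypothetical helper name.

```shell
# Print the HTTP endpoints expected for COUNT daemons per host,
# starting at BASE_PORT and using consecutive ports.
rgw_endpoints() {
    base_port=$1; count=$2; shift 2
    for host in "$@"; do
        i=0
        while [ "$i" -lt "$count" ]; do
            echo "http://$host:$((base_port + i))"
            i=$((i + 1))
        done
    done
}

rgw_endpoints 8000 2 cephnode121 cephnode122
```

Each printed URL can then be passed to `curl -s` from any node; a running gateway answers anonymous requests with a short XML document. `ceph orch ps` on the admin node lists the daemons themselves.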

3. Set up CephFS

[root@cephnode120 ~]# ceph fs volume create cephFS-a --placement="cephnode120,cephnode121,cephnode122"

# Verify

[root@cephnode120 ~]# ceph fs volume ls

[

{

"name": "cephFS-a"

}

]
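For scripted checks, the JSON above can be tested for a given volume name. This is a deliberately simple sketch that matches on the text rather than parsing JSON; `has_volume` is a hypothetical helper, and it assumes the volume name contains no regular-expression metacharacters.

```shell
# Succeed if the JSON from `ceph fs volume ls` (read from stdin)
# lists a volume with exactly the given name.
has_volume() {
    grep -q "\"name\": *\"$1\""
}

# Demo with the output captured in this article.
if printf '[\n{\n"name": "cephFS-a"\n}\n]\n' | has_volume cephFS-a; then
    echo "cephFS-a exists"
fi
```

On a live cluster this would be `ceph fs volume ls | has_volume cephFS-a`, optionally followed by `ceph fs status cephFS-a` to see the MDS daemons.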

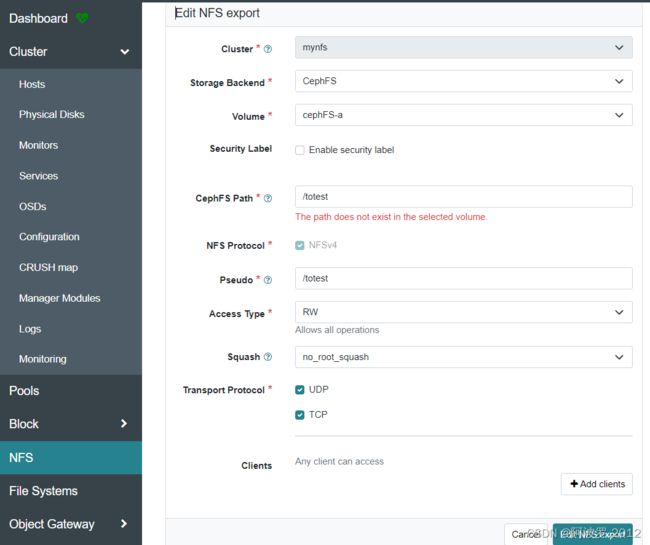

4. Set up NFS

Ceph 17 supports only NFSv4 for NFS. It can be configured from the dashboard.
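The same setup can probably be done from the command line as well. The sketch below only prints the commands for review and does not run them. The cluster id `mynfs` and pseudo path `/cephfs` are made-up values, `nfs_plan` is a hypothetical helper, and the flag-style `ceph nfs export create cephfs` syntax is what the Quincy documentation describes, so it should be checked against `ceph nfs export create cephfs -h` on the installed version.

```shell
# Print (not run) the commands for an NFS cluster exporting a CephFS volume.
# Arguments: cluster id, CephFS volume name, pseudo path, then placement hosts.
nfs_plan() {
    cluster=$1; fsname=$2; pseudo=$3; shift 3
    # Join the remaining host names with commas for the placement argument.
    placement=$(printf '%s' "$*" | tr ' ' ',')
    printf 'ceph nfs cluster create %s "%s"\n' "$cluster" "$placement"
    printf 'ceph nfs export create cephfs --cluster-id %s --pseudo-path %s --fsname %s\n' \
        "$cluster" "$pseudo" "$fsname"
}

nfs_plan mynfs cephFS-a /cephfs cephnode121 cephnode122
```

Printing a plan first keeps the example side-effect free; the two lines can be pasted into a shell on the admin node once they look right.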

The Ceph dashboard also provides an overview page that shows the overall state of the cluster in real time.
