Compiling and Installing Hudi

Table of Contents

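- Preparing the build environment

- Compiling Hudi

  - Uploading the source package

  - Modifying the pom file

  - Modifying the source for Hadoop 3 compatibility

  - Manually installing the Kafka dependencies

  - Resolving dependency conflicts in the Spark module

  - Running the build command

  - Build succeeded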

Preparing the build environment

| Component | Version |
|---|---|
| Hadoop | 3.1.3 |
| Hive | 3.1.2 |
| Flink | 1.13.6 (Scala 2.12) |
| Spark | 3.2.2 (Scala 2.12) |

1) Install Maven

(1) Upload apache-maven-3.6.1-bin.tar.gz to /opt/software, then extract it to /opt/module and rename the directory

tar -zxvf /opt/software/apache-maven-3.6.1-bin.tar.gz -C /opt/module/

mv /opt/module/apache-maven-3.6.1 /opt/module/maven-3.6.1

(2) Add the environment variables to /etc/profile

sudo vim /etc/profile

Append the following lines:

#MAVEN_HOME

export MAVEN_HOME=/opt/module/maven-3.6.1

export PATH=$PATH:$MAVEN_HOME/bin

(3) Verify the installation

source /etc/profile

mvn -v
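Optionally, the check in step (3) can be scripted. The sketch below is a minimal Python example, assuming `mvn -v` prints a banner line of the form `Apache Maven <version> (...)`, as Maven 3.6.1 does. `parse_maven_version` and `installed_maven_version` are hypothetical helper names, not part of Maven.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_maven_version(mvn_v_output: str) -> str:
    """Return the version from `mvn -v` output, e.g. '3.6.1'."""
    # Assumption: the banner contains "Apache Maven <version>"
    match = re.search(r"Apache Maven (\d+(?:\.\d+)*)", mvn_v_output)
    if match is None:
        raise ValueError("no 'Apache Maven <version>' line found")
    return match.group(1)


def installed_maven_version() -> Optional[str]:
    """Run `mvn -v` if mvn is on PATH; return None when it is not."""
    mvn = shutil.which("mvn")
    if mvn is None:
        return None  # PATH does not include $MAVEN_HOME/bin yet
    result = subprocess.run([mvn, "-v"], capture_output=True, text=True, check=True)
    return parse_maven_version(result.stdout)
```

After `source /etc/profile`, `installed_maven_version()` would be expected to return `'3.6.1'`. A `None` result suggests `$MAVEN_HOME/bin` is not on `PATH` yet.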

2) Switch to the Aliyun mirror

(1) Edit settings.xml and add the Aliyun repository as a mirror inside the <mirrors> element

vim /opt/module/maven-3.6.1/conf/settings.xml

<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>central</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>
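If you prefer not to edit settings.xml by hand, the mirror can be added with Python's standard `xml.etree.ElementTree`. This is a sketch under stated assumptions: the file is well-formed and uses Maven's `http://maven.apache.org/SETTINGS/1.0.0` namespace (the code detects the namespace from the root tag), and `add_mirror` is a hypothetical helper. ElementTree drops XML comments when parsing, so the stock settings.xml would lose its comments. Keep a backup.

```python
import xml.etree.ElementTree as ET


def _ns(root: ET.Element) -> str:
    """Return '{uri}' for a namespaced root tag, or '' if there is none."""
    return root.tag[: root.tag.index("}") + 1] if root.tag.startswith("{") else ""


def add_mirror(settings_xml: str, mirror_id: str, mirror_of: str, name: str, url: str) -> str:
    """Append a <mirror> to <mirrors> unless one with the same id already exists."""
    root = ET.fromstring(settings_xml)
    ns = _ns(root)
    if ns:
        # Serialize the default namespace without a prefix (xmlns="...")
        ET.register_namespace("", ns[1:-1])
    mirrors = root.find(f"{ns}mirrors")
    if mirrors is None:
        mirrors = ET.SubElement(root, f"{ns}mirrors")
    already = any(m.findtext(f"{ns}id") == mirror_id for m in mirrors.findall(f"{ns}mirror"))
    if not already:
        mirror = ET.SubElement(mirrors, f"{ns}mirror")
        for tag, text in (("id", mirror_id), ("mirrorOf", mirror_of), ("name", name), ("url", url)):
            ET.SubElement(mirror, f"{ns}{tag}").text = text
    return ET.tostring(root, encoding="unicode")
```

For example, read /opt/module/maven-3.6.1/conf/settings.xml, pass its contents together with the values from the XML above, and write the result back. Calling it again with the same id leaves the content unchanged, so the step is safe to rerun.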

Compiling Hudi

Uploading the source package

Upload hudi-0.12.0.src.tgz to /opt/software and extract it

tar -zxvf /opt/software/hudi-0.12.0.src.tgz -C /opt/software

The source can also be downloaded from GitHub: https://github.com/apache/hudi

Modifying the pom file

vim /opt/software/hudi-0.12.0/pom.xml

1) Add a repository inside the <repositories> element to speed up dependency downloads

<repository>
  <id>nexus-aliyun</id>
  <name>nexus-aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
  <releases>
    <enabled>true</enabled>
  </releases>
  <snapshots>
    <enabled>false</enabled>
  </snapshots>
</repository>

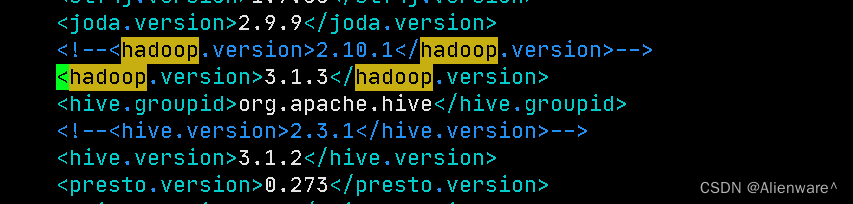
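The same edit can be sketched in Python for Hudi's root pom.xml. Assumptions: the pom uses the standard `http://maven.apache.org/POM/4.0.0` namespace, and Maven consults repositories in declaration order, which is why the new entry is inserted first. `add_repository` is a hypothetical helper. ElementTree drops comments and reformats the file, so keep a copy of the original.

```python
import xml.etree.ElementTree as ET

POM_NS = "http://maven.apache.org/POM/4.0.0"
ET.register_namespace("", POM_NS)  # keep <project xmlns="..."> unprefixed on output


def q(tag: str) -> str:
    """Qualify a tag name with the POM namespace."""
    return f"{{{POM_NS}}}{tag}"


def add_repository(pom_xml: str, repo_id: str, name: str, url: str) -> str:
    """Insert a release-only <repository> at the front of <repositories>, once."""
    root = ET.fromstring(pom_xml)
    repos = root.find(q("repositories"))
    if repos is None:
        repos = ET.SubElement(root, q("repositories"))
    if any(r.findtext(q("id")) == repo_id for r in repos.findall(q("repository"))):
        return ET.tostring(root, encoding="unicode")  # already present
    repo = ET.Element(q("repository"))
    ET.SubElement(repo, q("id")).text = repo_id
    ET.SubElement(repo, q("name")).text = name
    ET.SubElement(repo, q("url")).text = url
    ET.SubElement(ET.SubElement(repo, q("releases")), q("enabled")).text = "true"
    ET.SubElement(ET.SubElement(repo, q("snapshots")), q("enabled")).text = "false"
    # Assumption: declaration order is lookup order, so put the mirror first
    repos.insert(0, repo)
    return ET.tostring(root, encoding="unicode")
```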

2) Change the versions of the dependent components (in the <properties> section)

<hadoop.version>3.1.3</hadoop.version>

<hive.version>3.1.2</hive.version>
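Here is a minimal Python sketch of the version change. It assumes both properties already exist in the top-level `<properties>` section of the root pom. The helper raises `KeyError` rather than silently adding a misspelled property. `set_pom_properties` is a hypothetical name, and as with any ElementTree rewrite, comments in the pom are not preserved.

```python
import xml.etree.ElementTree as ET
from typing import Dict

POM_NS = "http://maven.apache.org/POM/4.0.0"
ET.register_namespace("", POM_NS)


def set_pom_properties(pom_xml: str, updates: Dict[str, str]) -> str:
    """Overwrite existing top-level <properties> entries; refuse unknown keys."""
    root = ET.fromstring(pom_xml)
    props = root.find(f"{{{POM_NS}}}properties")
    if props is None:
        raise ValueError("pom has no top-level <properties> section")
    # Index children by local name, e.g. "hadoop.version"
    by_name = {child.tag.split("}", 1)[-1]: child for child in props}
    for key, value in updates.items():
        if key not in by_name:
            raise KeyError(f"property {key!r} not found")  # avoid adding a typo
        by_name[key].text = value
    return ET.tostring(root, encoding="unicode")
```

With the values above, the call would be `set_pom_properties(text, {"hadoop.version": "3.1.3", "hive.version": "3.1.2"})`.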

Modifying the source for Hadoop 3 compatibility

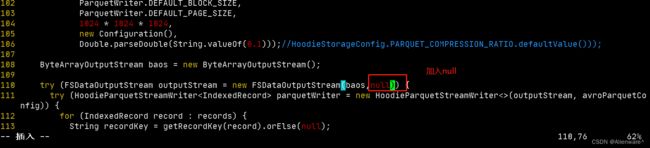

Hudi depends on Hadoop 2 by default. To make it compatible with Hadoop 3, you need to change the version and also modify the following code:

vim /opt/software/hudi-0.12.0/hudi-common/src/main/java/org/apache/hudi/common/table/log/block/HoodieParquetDataBlock.java
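The exact edit is only shown in the screenshot. A commonly cited fix for this file passes a second `null` argument to the `FSDataOutputStream` constructor, because, as far as we know, Hadoop 3 no longer provides the one-argument constructor that Hadoop 2 had. The Python sketch below applies that change as a text substitution. Treat the pattern as an assumption and compare it with the screenshot before running the hypothetical `patch_file` helper on the real source.

```python
import re
from pathlib import Path
from typing import Tuple

# Assumption: the screenshot's change passes a null FileSystem.Statistics as a
# second constructor argument, since Hadoop 3 dropped the one-argument form.
OLD_CALL = re.compile(r"new FSDataOutputStream\(\s*baos\s*\)")
NEW_CALL = "new FSDataOutputStream(baos, null)"


def patch_source(text: str) -> Tuple[str, int]:
    """Return the patched text and the number of replacements made."""
    return OLD_CALL.subn(NEW_CALL, text)


def patch_file(path: Path) -> int:
    """Patch the file in place; a second run is a no-op and returns 0."""
    text = path.read_text(encoding="utf-8")
    patched, count = patch_source(text)
    if count:
        path.write_text(patched, encoding="utf-8")
    return count
```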

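Manually installing the Kafka dependencies

Download the jars

Download from: http://packages.confluent.io/archive/5.3/confluent-5.3.4-2.12.zip

After extracting the archive, locate the following jars and upload them to the server hadoop102: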

- common-config-5.3.4.jar

- common-utils-5.3.4.jar

- kafka-avro-serializer-5.3.4.jar

- kafka-schema-registry-client-5.3.4.jar

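Install them into the local Maven repository. Run these commands in the directory that holds the jars, because -Dfile uses a relative path: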

mvn install:install-file -DgroupId=io.confluent -DartifactId=common-config -Dversion=5.3.4 -Dpackaging=jar -Dfile=./common-config-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=common-utils -Dversion=5.3.4 -Dpackaging=jar -Dfile=./common-utils-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-avro-serializer -Dversion=5.3.4 -Dpackaging=jar -Dfile=./kafka-avro-serializer-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=5.3.4 -Dpackaging=jar -Dfile=./kafka-schema-registry-client-5.3.4.jar
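The four commands above differ only in the artifactId, so they can also be generated. Below is a small Python sketch. The helper names are hypothetical, and the output reproduces the commands above exactly.

```python
import shlex
from typing import List

GROUP_ID = "io.confluent"
VERSION = "5.3.4"
ARTIFACT_IDS = [
    "common-config",
    "common-utils",
    "kafka-avro-serializer",
    "kafka-schema-registry-client",
]


def install_file_command(artifact_id: str, version: str = VERSION, group_id: str = GROUP_ID) -> List[str]:
    """Build the argv for `mvn install:install-file` for one jar in the current directory."""
    return [
        "mvn", "install:install-file",
        f"-DgroupId={group_id}",
        f"-DartifactId={artifact_id}",
        f"-Dversion={version}",
        "-Dpackaging=jar",
        f"-Dfile=./{artifact_id}-{version}.jar",
    ]


def all_commands() -> List[str]:
    """Shell-ready command lines, one per jar."""
    return [shlex.join(install_file_command(a)) for a in ARTIFACT_IDS]
```

Each argv from `install_file_command` could also be passed to `subprocess.run(..., check=True)` from the directory that holds the jars.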

Resolving dependency conflicts in the Spark module

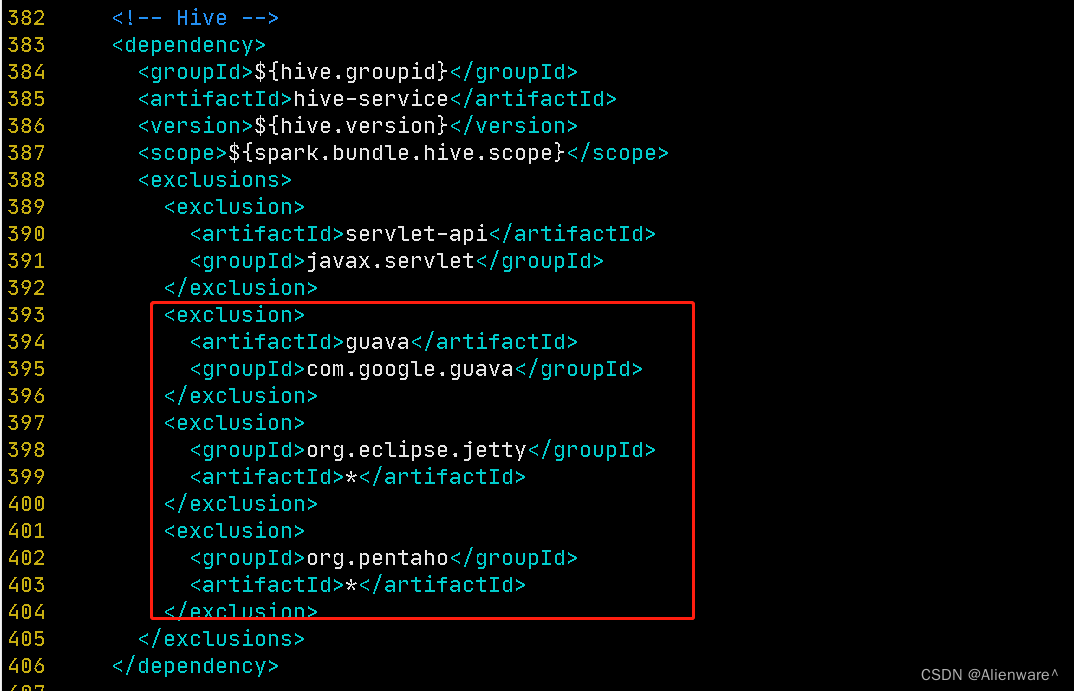

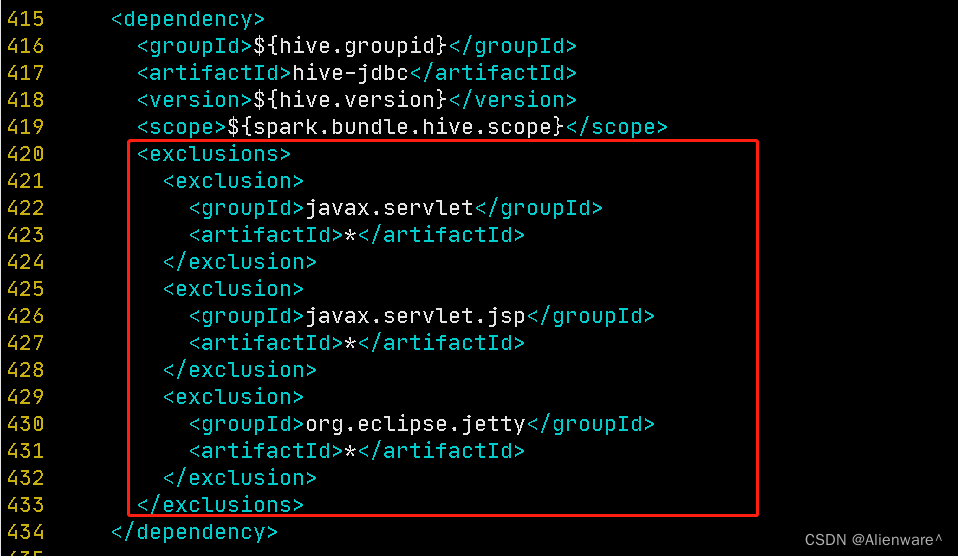

Changing the Hive version to 3.1.2 creates a dependency conflict: Hive 3.1.2 bundles Jetty 9.3.x, while Hudi itself uses Jetty 9.4.x.

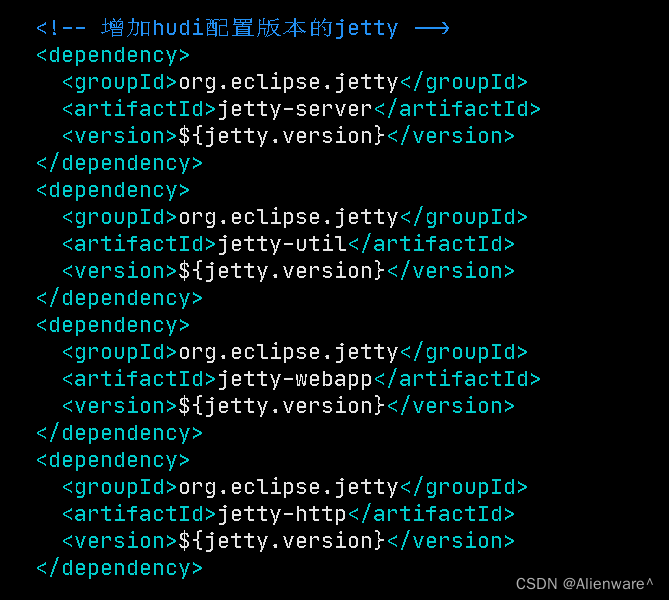
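To confirm the conflict before editing the poms, one option is to inspect the output of `mvn dependency:tree -Dincludes=org.eclipse.jetty` for the bundle module. The Python sketch below parses such output. It assumes the usual `groupId:artifactId:type[:classifier]:version:scope` coordinate format, and the function names are hypothetical. More than one Jetty major.minor line (for example 9.3 next to 9.4) indicates the mix described above.

```python
import re
from typing import Dict, Set

# groupId:artifactId:type[:classifier]:version:scope, as printed by dependency:tree
COORD = re.compile(
    r"([\w.\-]+):([\w.\-]+):([\w.\-]+):(?:([\w.\-]+):)?([\w.\-]+):(compile|provided|runtime|test|system)"
)


def jetty_versions(tree_output: str) -> Dict[str, str]:
    """Map each org.eclipse.jetty artifactId in the tree to the version shown."""
    found: Dict[str, str] = {}
    for line in tree_output.splitlines():
        m = COORD.search(line)
        if m and m.group(1) == "org.eclipse.jetty":
            found[m.group(2)] = m.group(5)
    return found


def jetty_release_lines(versions: Dict[str, str]) -> Set[str]:
    """Collapse versions to major.minor; more than one entry means mixed Jetty lines."""
    return {".".join(v.split(".")[:2]) for v in versions.values()}
```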

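1) Modify the pom of hudi-spark-bundle: exclude the older Jetty and add the Jetty version that Hudi specifies:

vim /opt/software/hudi-0.12.0/packaging/hudi-spark-bundle/pom.xml

Starting around line 382, make the following changes (see the parts in red boxes in the screenshots further below):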

<exclusion>
  <artifactId>guava</artifactId>
  <groupId>com.google.guava</groupId>
</exclusion>
<exclusion>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>*</artifactId>
</exclusion>
<exclusion>
  <groupId>org.pentaho</groupId>
  <artifactId>*</artifactId>
</exclusion>

<exclusions>
  <exclusion>
    <groupId>javax.servlet</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>javax.servlet.jsp</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>javax.servlet</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.datanucleus</groupId>
    <artifactId>datanucleus-core</artifactId>
  </exclusion>
  <exclusion>
    <groupId>javax.servlet.jsp</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <artifactId>guava</artifactId>
    <groupId>com.google.guava</groupId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>org.eclipse.jetty.orbit</groupId>
    <artifactId>javax.servlet</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>

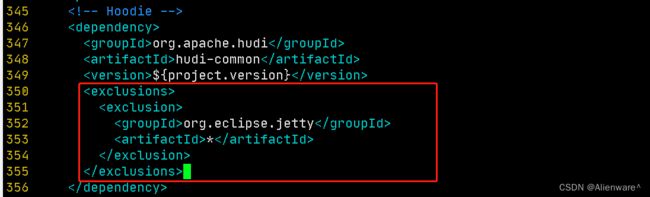

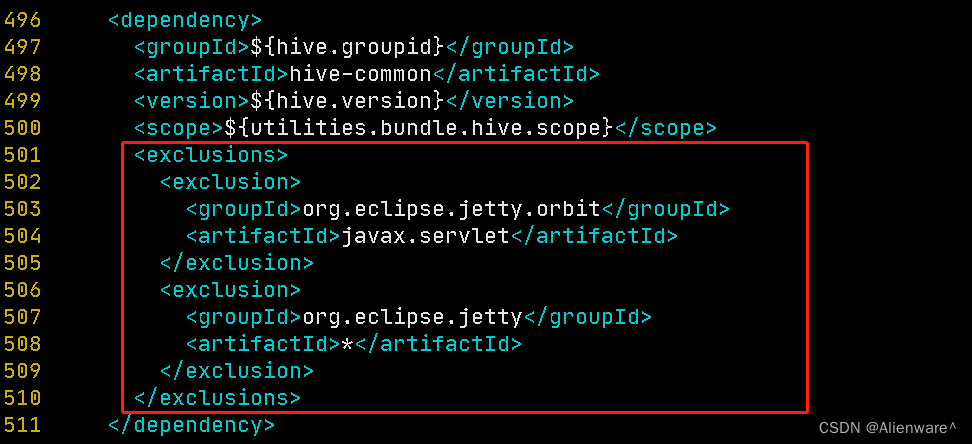

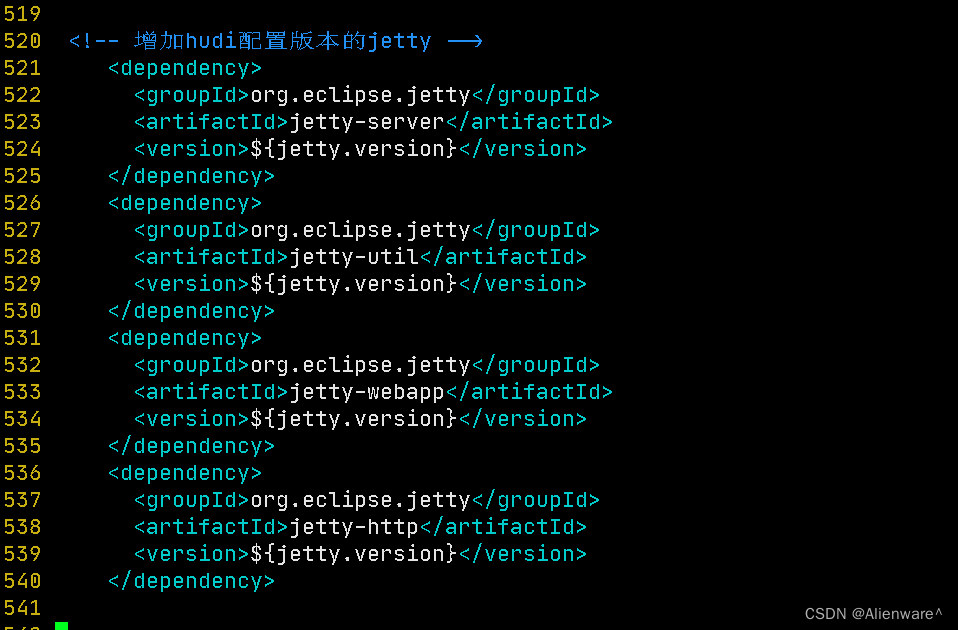
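The four `<dependency>` entries above follow one pattern, so the addition can also be sketched in Python. Assumptions: the bundle pom uses the standard POM namespace, the entries belong in the top-level `<dependencies>` section, and `jetty.version` is defined in Hudi's parent pom. `add_jetty_dependencies` is a hypothetical helper that skips artifacts already declared.

```python
import xml.etree.ElementTree as ET

POM_NS = "http://maven.apache.org/POM/4.0.0"
ET.register_namespace("", POM_NS)
JETTY_ARTIFACTS = ["jetty-server", "jetty-util", "jetty-webapp", "jetty-http"]


def q(tag: str) -> str:
    """Qualify a tag name with the POM namespace."""
    return f"{{{POM_NS}}}{tag}"


def add_jetty_dependencies(pom_xml: str) -> str:
    """Add org.eclipse.jetty deps pinned to ${jetty.version}, skipping existing ones."""
    root = ET.fromstring(pom_xml)
    deps = root.find(q("dependencies"))  # top-level only, not dependencyManagement
    if deps is None:
        deps = ET.SubElement(root, q("dependencies"))
    present = {
        (d.findtext(q("groupId")), d.findtext(q("artifactId")))
        for d in deps.findall(q("dependency"))
    }
    for artifact in JETTY_ARTIFACTS:
        if ("org.eclipse.jetty", artifact) in present:
            continue
        dep = ET.SubElement(deps, q("dependency"))
        ET.SubElement(dep, q("groupId")).text = "org.eclipse.jetty"
        ET.SubElement(dep, q("artifactId")).text = artifact
        ET.SubElement(dep, q("version")).text = "${jetty.version}"
    return ET.tostring(root, encoding="unicode")
```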

2) Modify the pom of hudi-utilities-bundle: exclude the older Jetty and add the Jetty version that Hudi specifies:

vim /opt/software/hudi-0.12.0/packaging/hudi-utilities-bundle/pom.xml

Around line 345:

<exclusions>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

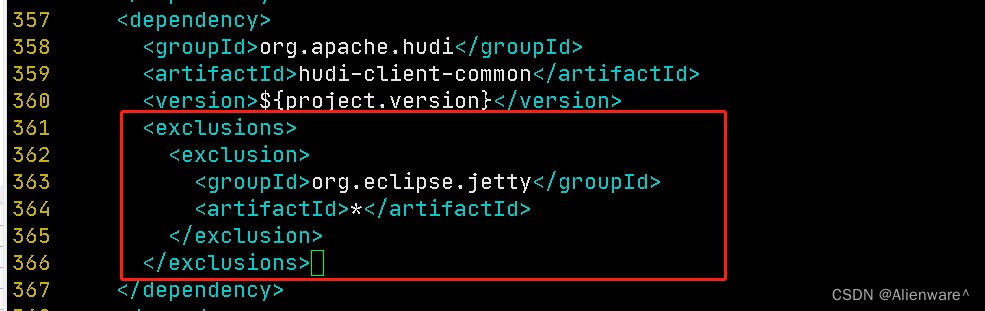

Around line 405:

<exclusions>
  <exclusion>
    <artifactId>servlet-api</artifactId>
    <groupId>javax.servlet</groupId>
  </exclusion>
  <exclusion>
    <artifactId>guava</artifactId>
    <groupId>com.google.guava</groupId>
  </exclusion>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.pentaho</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>javax.servlet</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>javax.servlet.jsp</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>javax.servlet</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.datanucleus</groupId>
    <artifactId>datanucleus-core</artifactId>
  </exclusion>
  <exclusion>
    <groupId>javax.servlet.jsp</groupId>
    <artifactId>*</artifactId>
  </exclusion>
  <exclusion>
    <artifactId>guava</artifactId>
    <groupId>com.google.guava</groupId>
  </exclusion>
</exclusions>

<exclusions>
  <exclusion>
    <groupId>org.eclipse.jetty.orbit</groupId>
    <artifactId>javax.servlet</artifactId>
  </exclusion>
  <exclusion>
    <groupId>org.eclipse.jetty</groupId>
    <artifactId>*</artifactId>
  </exclusion>
</exclusions>

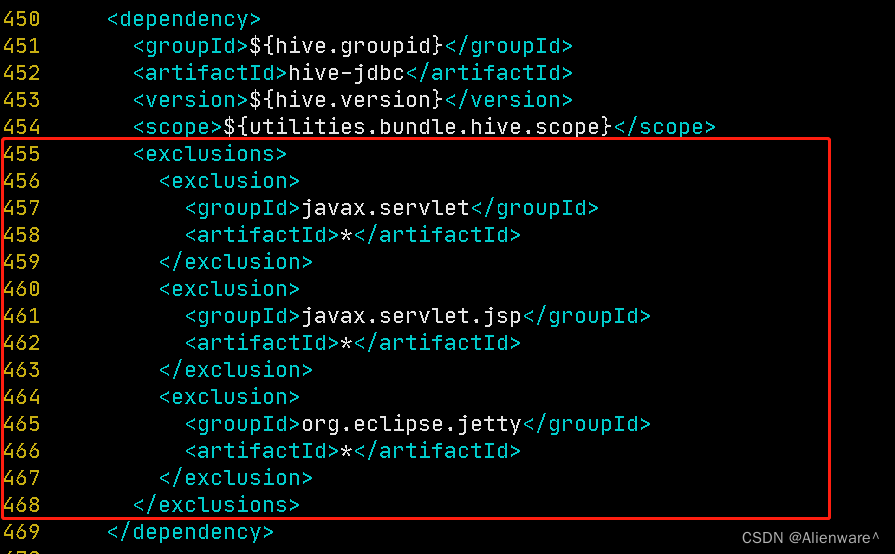

<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>

Without this change, using the DeltaStreamer tool to insert data into a Hudi table will also fail with Jetty errors.

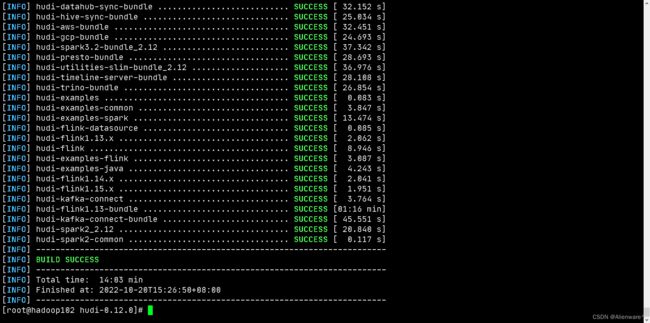
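After editing both bundle poms, a quick lint can catch a missed spot. This Python sketch uses hypothetical helpers and assumes the standard POM namespace. It reports two things: Jetty dependencies that do not use `${jetty.version}`, and dependencies from the given groupIds that lack an `org.eclipse.jetty:*` exclusion. If the pom refers to Hive through a property (for instance `${hive.groupid}`), pass that literal string as the groupId. The lint walks every `<dependency>` in the file, including any inside profiles.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

POM_NS = "http://maven.apache.org/POM/4.0.0"


def q(tag: str) -> str:
    """Qualify a tag name with the POM namespace."""
    return f"{{{POM_NS}}}{tag}"


def unpinned_jetty(pom_xml: str) -> List[str]:
    """artifactIds of org.eclipse.jetty dependencies not using ${jetty.version}."""
    root = ET.fromstring(pom_xml)
    return [
        d.findtext(q("artifactId"))
        for d in root.iter(q("dependency"))
        if d.findtext(q("groupId")) == "org.eclipse.jetty"
        and d.findtext(q("version")) != "${jetty.version}"
    ]


def missing_jetty_exclusion(pom_xml: str, group_ids: Iterable[str]) -> List[str]:
    """artifactIds from the given groupIds that do not exclude org.eclipse.jetty:*."""
    wanted = set(group_ids)
    root = ET.fromstring(pom_xml)
    missing = []
    for d in root.iter(q("dependency")):
        if d.findtext(q("groupId")) not in wanted:
            continue
        excluded = {
            (e.findtext(q("groupId")), e.findtext(q("artifactId")))
            for e in d.iterfind(f"{q('exclusions')}/{q('exclusion')}")
        }
        if ("org.eclipse.jetty", "*") not in excluded:
            missing.append(d.findtext(q("artifactId")))
    return missing
```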

Running the build command

mvn clean package -DskipTests -Dspark3.2 -Dflink1.13 -Dscala-2.12 -Dhadoop.version=3.1.3 -Pflink-bundle-shade-hive3

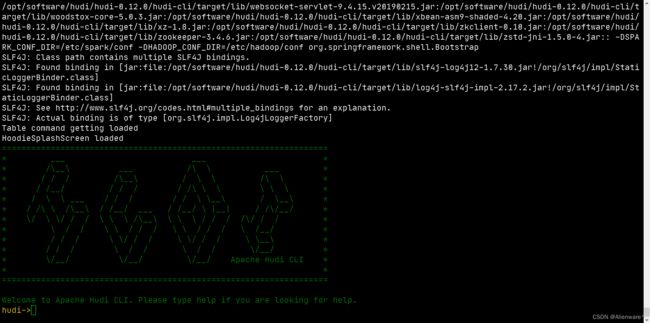
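The build flags can be assembled from the versions in the environment table, which may help when rebuilding for a different combination. The Python sketch below assumes that the `-Dspark3.2`, `-Dflink1.13` and `-Dscala-2.12` properties activate the corresponding Hudi build profiles, and that matching profiles exist for any other versions you pass. `hudi_build_command` is a hypothetical helper.

```python
import shlex
from typing import List, Sequence


def hudi_build_command(
    spark: str = "3.2",
    flink: str = "1.13",
    scala: str = "2.12",
    hadoop: str = "3.1.3",
    profiles: Sequence[str] = ("flink-bundle-shade-hive3",),
) -> List[str]:
    """Assemble the argv of the build command from its version parameters."""
    cmd = [
        "mvn", "clean", "package",
        "-DskipTests",               # skip running the unit tests
        f"-Dspark{spark}",           # assumed to activate Hudi's Spark profile
        f"-Dflink{flink}",           # assumed to activate Hudi's Flink profile
        f"-Dscala-{scala}",          # assumed to activate the Scala profile
        f"-Dhadoop.version={hadoop}",
    ]
    cmd += [f"-P{p}" for p in profiles]  # explicitly named profiles
    return cmd
```

With the defaults, `shlex.join(hudi_build_command())` yields the command above. The list can be run with `subprocess.run(cmd, cwd="/opt/software/hudi-0.12.0", check=True)`.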

Build succeeded

After the build finishes, launching hudi-cli successfully confirms that it worked:

For example, the Flink and Hudi packages:
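To list the jars a successful build produced, a small Python sketch can scan the `packaging/*/target` directories. It assumes the bundles are built under `packaging/` in the source tree, as the pom paths above suggest. It also assumes maven-shade-plugin leaves the unshaded jar as `original-*.jar`, which is filtered out. The helper names are hypothetical.

```python
from pathlib import Path
from typing import List

SKIP_SUFFIXES = ("-sources.jar", "-javadoc.jar", "-tests.jar")


def is_bundle_jar(name: str) -> bool:
    """Keep shaded bundle jars; drop sources/javadoc/test jars and shade's original-* copies."""
    return (
        name.endswith(".jar")
        and not name.endswith(SKIP_SUFFIXES)
        and not name.startswith("original-")
    )


def find_bundle_jars(hudi_root: Path) -> List[Path]:
    """List candidate bundle jars under packaging/*/target of a built source tree."""
    return sorted(p for p in hudi_root.glob("packaging/*/target/*.jar") if is_bundle_jar(p.name))
```

For example, `find_bundle_jars(Path("/opt/software/hudi-0.12.0"))` would list the Flink, Spark and utilities bundles, provided the build finished.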