Quickly Setting Up a Test Ceph Cluster

I. Introduction to cephadm

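Cephadm is a tool maintained by the Ceph community for deploying and managing Ceph services in a Ceph cluster. It is container-based: each component of the cluster is deployed as a container.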

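With cephadm, administrators can automate deployment, upgrades, and maintenance across the whole cluster through a simple command-line interface. It offers a simple, flexible way to manage a Ceph cluster without needing deep knowledge of the cluster's internals.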

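Cephadm also provides everyday operations such as adding new hosts, removing existing hosts, and changing configuration. It can also detect common problems, such as failed daemons or hosts that become unreachable, and report them as cluster health warnings.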

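Starting with Ceph 5 (Red Hat Ceph Storage 5; upstream, cephadm first shipped with Octopus, v15), cephadm replaces the earlier ceph-ansible as the tool that manages the whole cluster lifecycle: deployment, management, and monitoring.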

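The cephadm bootstrap process creates a small storage cluster on a single node (the bootstrap node), consisting of one Ceph Monitor and one Ceph Manager plus any required dependencies.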

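cephadm can log in to a container registry to pull Ceph images and then uses those images to deploy daemons on each Ceph node. The Ceph container images are required for deployment, because every deployed Ceph container is based on them.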

cephadm uses SSH to communicate with the cluster nodes. Over SSH it can add hosts to the cluster, add storage, and monitor those hosts.

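The packages a node needs to bring the cluster up are cephadm, podman or docker, python3, and chrony (bootstrap also checks for lvm2). In short, cephadm is a powerful, practical tool that greatly simplifies deploying and managing a Ceph cluster and gives administrators a more efficient and reliable way to run it.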

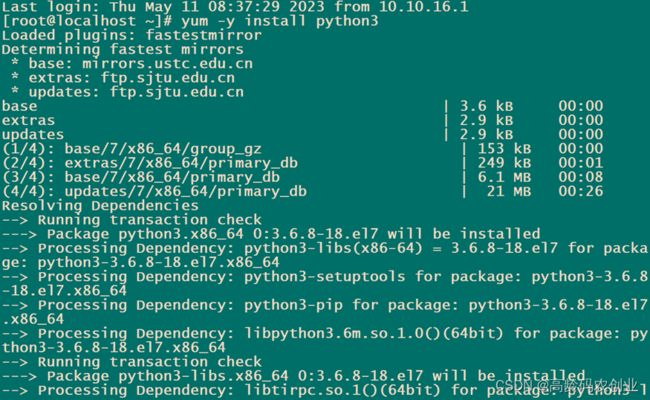

1. python3

yum -y install python3

2. Docker to run the containers

Installation steps and Docker learning materials are available at http://xingxingxiaowo.xyz:4000/

# Note: this is my own self-hosted, non-commercial site

# Install docker-ce from the Alibaba Cloud mirror

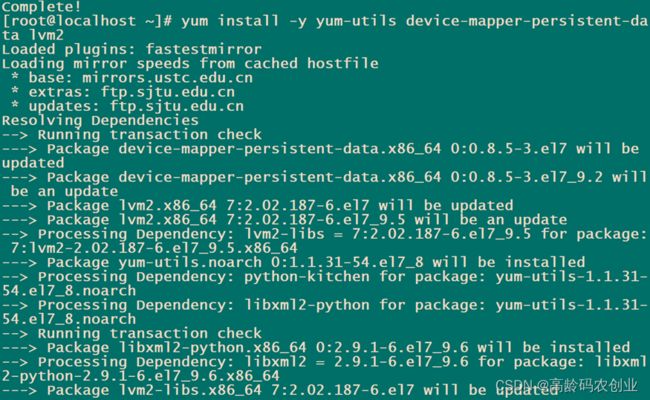

yum install -y yum-utils device-mapper-persistent-data lvm2

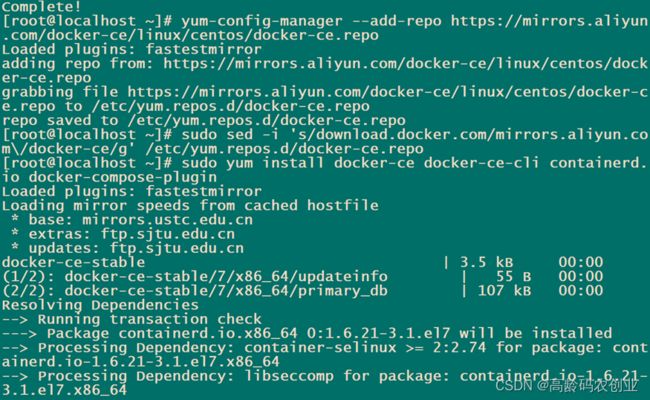

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo sed -i 's/download.docker.com/mirrors.aliyun.com\/docker-ce/g' /etc/yum.repos.d/docker-ce.repo

sudo yum install docker-ce docker-ce-cli containerd.io docker-compose-plugin

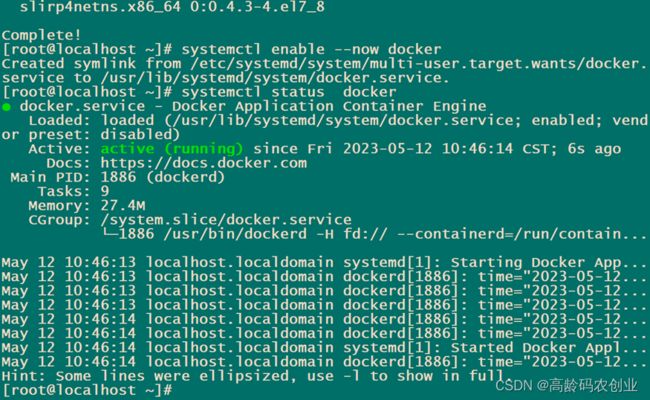

systemctl enable --now docker

systemctl status docker   # check the service status

# Configure a registry mirror to speed up image pulls

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://bp1bh1ga.mirror.aliyuncs.com"]
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
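A typo in daemon.json, for example a trailing comma, stops dockerd from starting after the restart. As a minimal sketch, this step can be wrapped in a shell function. The name `write_registry_mirror` is hypothetical. It backs up an existing daemon.json before overwriting it and, when python3 is installed, checks the result with `python3 -m json.tool`.

```shell
# write_registry_mirror FILE MIRROR_URL  (hypothetical helper, not part of Docker)
# Writes a minimal Docker daemon.json that sets a single registry mirror.
write_registry_mirror() {
    mkdir -p "$(dirname "$1")" || return 1
    # This overwrites the whole file, so keep a copy of any existing settings.
    if [ -f "$1" ]; then
        cp "$1" "$1.bak" || return 1
    fi
    cat > "$1" <<EOF
{
  "registry-mirrors": ["$2"]
}
EOF
    # Validate the JSON when python3 is available (installed in step 1).
    if command -v python3 >/dev/null 2>&1; then
        python3 -m json.tool "$1" >/dev/null || return 1
    fi
}
```

Example: `write_registry_mirror /etc/docker/daemon.json https://bp1bh1ga.mirror.aliyuncs.com && systemctl daemon-reload && systemctl restart docker`.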

3. Time synchronization (e.g. chrony or NTP)

# yum install chrony -y

vi /etc/chrony.conf

# cat /etc/chrony.conf |grep -v ^# |grep -v ^$

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp3.aliyun.com iburst

#allow 192.168.0.0/16

allow all

local stratum 10

systemctl enable --now chronyd

systemctl status chronyd
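You can script the server-side edits above so that every rebuild of the test cluster gets the same file. This sketch assumes the stock CentOS 7 chrony.conf with `server N.centos.pool.ntp.org` lines. The name `configure_chrony_server` is hypothetical. It takes the `allow` value as a parameter: the text uses `allow all`, while a CIDR such as 192.168.116.0/24 limits NTP clients to the cluster network. `local stratum 10` lets node1 keep serving time to the other nodes even if it loses contact with the upstream servers.

```shell
# configure_chrony_server CONF_FILE ALLOW  (hypothetical helper)
# Comments out the default CentOS pool servers, adds the Alibaba Cloud NTP
# servers, and allows NTP clients from ALLOW (a CIDR, or "all").
configure_chrony_server() {
    # Back up only once, so re-running keeps the pristine copy.
    [ -f "$1.bak" ] || cp "$1" "$1.bak" || return 1
    # "#&" writes the whole match back with a leading "#".
    sed -i 's/^server [0-9]\.centos\.pool\.ntp\.org/#&/' "$1" || return 1
    # Append the new settings only once, so re-running the function is harmless.
    if ! grep -q '^server ntp1\.aliyun\.com' "$1"; then
        cat >> "$1" <<EOF
server ntp1.aliyun.com iburst
server ntp2.aliyun.com iburst
server ntp3.aliyun.com iburst
allow $2
local stratum 10
EOF
    fi
}
```

Example on node1: `configure_chrony_server /etc/chrony.conf 192.168.116.0/24 && systemctl restart chronyd`.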

Set the time zone to Asia/Shanghai

# timedatectl set-timezone Asia/Shanghai

# chronyc sources

# chronyc tracking   # synchronization status

Show the time sources currently in use, with an explanation of each column:

# chronyc sources -v

Client side (node2 and node3):

cat /etc/chrony.conf

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server node1 iburst

systemctl enable --now chronyd

systemctl status chronyd
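In `chronyc sources`, the source currently in use is marked `^*`: `^` means an NTP server, and `*` means it is the selected source. Here is a small hypothetical helper that turns this into a yes/no check. It is useful before bootstrapping, because cephadm verifies time synchronization.

```shell
# chrony_synced_source  (hypothetical helper)
# Prints the source chronyd is currently synchronised to and returns 0,
# or returns 1 when no source has been selected yet.
chrony_synced_source() {
    # The first column is mode + state; a "*" in the second position
    # marks the selected source, and column 2 is its name.
    chronyc sources 2>/dev/null | awk '
        substr($1, 2, 1) == "*" { print $2; found = 1 }
        END { exit !found }'
}
```

On node2 or node3, `chrony_synced_source` should print `node1` once synchronization has settled. A non-zero exit status means no source has been selected yet.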

II. Preparation before deploying the Ceph cluster

2.1 Node preparation

| Host | OS | IP | Roles | Disks |
|---|---|---|---|---|
| node1 | CentOS 7 | 192.168.116.10 | mon, mgr, chrony server, admin node | /dev/vdb, /dev/vdc, /dev/vdd |
| node2 | CentOS 7 | 192.168.116.20 | mon, mgr | /dev/vdb, /dev/vdc, /dev/vdd |
| node3 | CentOS 7 | 192.168.116.30 | mon, mgr | /dev/vdb, /dev/vdc, /dev/vdd |

2.2 Edit /etc/hosts on every node

192.168.116.10 node1

192.168.116.20 node2

192.168.116.30 node3
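Appending these lines with `echo >>` on every run would duplicate them. Below is a sketch of an idempotent version. `CLUSTER_NODES`, `add_host_entry` and `add_cluster_hosts` are hypothetical names, and the node list is copied from the table in 2.1.

```shell
# Hypothetical node list, copied from the table in 2.1, as "IP:hostname" pairs.
CLUSTER_NODES="192.168.116.10:node1 192.168.116.20:node2 192.168.116.30:node3"

# add_host_entry HOSTS_FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" unless a non-comment line already maps NAME.
add_host_entry() {
    if awk -v n="$3" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 }
                      END { exit !f }' "$1"; then
        return 0
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}

# add_cluster_hosts HOSTS_FILE: adds every node in CLUSTER_NODES.
add_cluster_hosts() {
    for pair in $CLUSTER_NODES; do
        # ${pair%%:*} is the IP, ${pair#*:} is the hostname.
        add_host_entry "$1" "${pair%%:*}" "${pair#*:}" || return 1
    done
}
```

Run `add_cluster_hosts /etc/hosts` on each node. Running it again leaves the file unchanged.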

Set the hostname (run the matching command on each node):

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

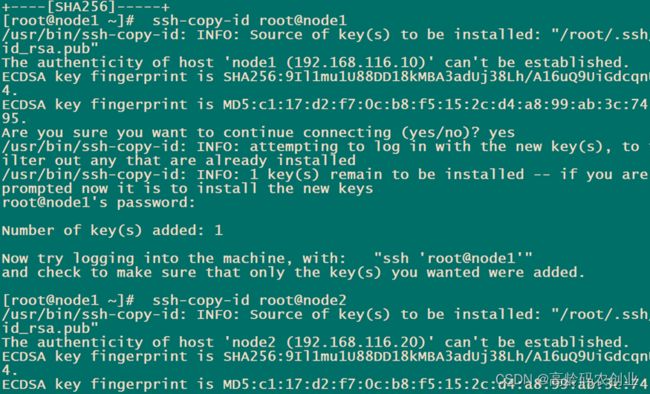

2.3 Set up passwordless SSH login from node1

[root@node1 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:49VjCBjpqw380VbX61UyvfIg/CBGz7CvD/emhrWgta0 root@node1


[root@node1 ~]# ssh-copy-id root@node2

[root@node1 ~]# ssh-copy-id root@node3

[root@node1 ~]# ssh-copy-id root@node1
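Copying the key to each node by hand gets error-prone as hosts are added. Here is a minimal sketch, assuming root SSH and the hostnames from 2.2. The hypothetical helper `distribute_ssh_key` runs `ssh-copy-id` for each host, then confirms key-based login with `ssh -o BatchMode=yes`, which fails instead of prompting for a password.

```shell
# distribute_ssh_key PUBKEY_FILE HOST...  (hypothetical helper)
distribute_ssh_key() {
    key=$1
    shift
    for h in "$@"; do
        ssh-copy-id -i "$key" "root@$h" || return 1
        # BatchMode=yes makes ssh fail rather than prompt if key login does not work.
        if ! ssh -o BatchMode=yes "root@$h" true; then
            echo "key-based login to $h failed" >&2
            return 1
        fi
    done
}
```

Example: `distribute_ssh_key /root/.ssh/id_rsa.pub node1 node2 node3`.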

III. Install cephadm on node1

1. Install the EPEL repository

[root@node1 ~]# yum -y install epel-release

2. Install the Ceph repository

[root@node1 ~]# yum search release-ceph

Last metadata expiration check: 0:57:14 ago on Tue 14 Feb 2023 14:22:00.

================= Name Matched: release-ceph ============================================

centos-release-ceph-nautilus.noarch : Ceph Nautilus packages from the CentOS Storage SIG repository

centos-release-ceph-octopus.noarch : Ceph Octopus packages from the CentOS Storage SIG repository

centos-release-ceph-pacific.noarch : Ceph Pacific packages from the CentOS Storage SIG repository

centos-release-ceph-quincy.noarch : Ceph Quincy packages from the CentOS Storage SIG repository

#[root@node1 ~]# yum -y install centos-release-ceph-pacific

#3. Install cephadm

#[root@node1 ~]# yum -y install cephadm    (this needs a paid Red Hat subscription, so download cephadm from the web instead)

#4. Install ceph-common

#[root@node1 ~]# yum -y install ceph-common

[root@node1 ~]# curl --silent --remote-name --location https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm

# If the download fails, retry a few times

[root@node1 ~]# ls

anaconda-ks.cfg cephadm

[root@node1 ~]# chmod +x cephadm
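Rather than retrying by hand, you can retry the download in a loop. This sketch uses a hypothetical `fetch_with_retry` helper. It adds `--fail` so that an HTTP error page is not saved as the script. curl's own `--retry N --retry-delay S` options are an alternative, but they only retry errors that curl considers transient.

```shell
# fetch_with_retry URL OUT_FILE [ATTEMPTS] [DELAY_SECONDS]  (hypothetical helper)
fetch_with_retry() {
    attempt=1
    # --fail turns HTTP errors (e.g. 404) into a non-zero exit status
    # instead of saving the error page as the "script".
    until curl --silent --fail --location --output "$2" "$1"; do
        if [ "$attempt" -ge "${3:-5}" ]; then
            echo "giving up on $1 after $attempt attempts" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "${4:-5}"
    done
    chmod +x "$2"
}
```

Example: `fetch_with_retry https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm ./cephadm`.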

# This command generates the Ceph yum repository file

[root@node1 ~]# ./cephadm add-repo --release octopus

Writing repo to /etc/yum.repos.d/ceph.repo...

Enabling EPEL...

# Back up the Ceph repo file and switch it to the Alibaba Cloud mirror

[root@node1 ~]# cp /etc/yum.repos.d/ceph.repo{,.back}

[root@node1 ~]# sed -i 's#download.ceph.com#mirrors.aliyun.com/ceph#' /etc/yum.repos.d/ceph.repo

[root@node1 ~]# yum list | grep ceph

# Install cephadm on this node (in effect, this puts the cephadm command on the PATH)

[root@node1 ~]# ./cephadm install

Installing packages ['cephadm']...

[root@node1 ~]# which cephadm

/usr/sbin/cephadm

IV. Install docker-ce and python3 on the other nodes

See Section I for the detailed steps.
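Bootstrap and `ceph orch host add` both check each host first (see the `Verifying podman|docker is present...` lines in 5.1). Below is a rough pre-check to run on every node before adding it. `check_cephadm_prereqs` is hypothetical. It only looks for the items listed in Section I and in the bootstrap output: a container runtime, python3, `lvcreate` from lvm2, and a running time service.

```shell
# check_cephadm_prereqs  (hypothetical helper)
# Prints one "missing: ..." line per absent prerequisite; non-zero status if any.
check_cephadm_prereqs() {
    missing=0
    # cephadm accepts either container runtime.
    if ! command -v podman >/dev/null 2>&1 && ! command -v docker >/dev/null 2>&1; then
        echo "missing: podman or docker"
        missing=1
    fi
    # python3 runs cephadm itself; lvcreate (lvm2) is needed to create OSDs.
    for cmd in python3 lvcreate; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    # cephadm accepts several time services; only chronyd and ntpd are checked here.
    if ! systemctl is-active --quiet chronyd && ! systemctl is-active --quiet ntpd; then
        echo "missing: a running chronyd or ntpd"
        missing=1
    fi
    return "$missing"
}
```

Run `check_cephadm_prereqs` as root on node2 and node3. No output and a zero exit status mean the basics are in place.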

V. Deploy the Ceph cluster

5.1 Bootstrap the Ceph cluster and install the dashboard (web management UI) at the same time

[root@node1 ~]# mkdir -p /etc/ceph

[root@node1 ~]# cephadm bootstrap --mon-ip 192.168.116.10 --allow-fqdn-hostname --initial-dashboard-user admin --initial-dashboard-password admin --dashboard-password-noupdate

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

podman|docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: cbda57b6-f075-11ed-8063-000c29cf191a

Verifying IP 192.168.116.10 port 3300 ...

Verifying IP 192.168.116.10 port 6789 ...

Mon IP 192.168.116.10 is in CIDR network 192.168.116.0/24

Pulling container image quay.io/ceph/ceph:v15...

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network...

Creating mgr...

Verifying port 9283 ...

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Wrote config to /etc/ceph/ceph.conf

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/10)...

mgr not available, waiting (2/10)...

mgr not available, waiting (3/10)...

mgr not available, waiting (4/10)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 5...

Mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to to /etc/ceph/ceph.pub

Adding key to root@localhost's authorized_keys...

Adding host node1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Enabling mgr prometheus module...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for Mgr epoch 13...

Mgr epoch 13 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

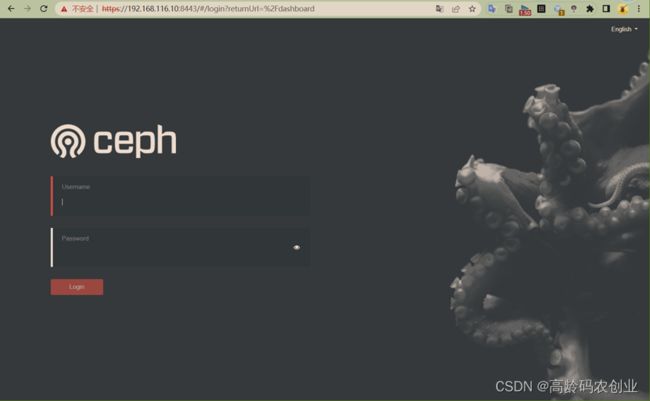

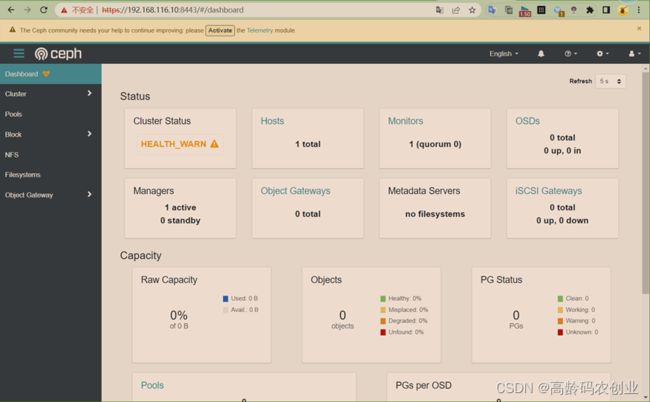

Ceph Dashboard is now available at:

URL: https://node1:8443/

User: admin

Password: admin

You can access the Ceph CLI with:

sudo /usr/sbin/cephadm shell --fsid cbda57b6-f075-11ed-8063-000c29cf191a -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/docs/master/mgr/telemetry/

Bootstrap complete.

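- bootstrap: bootstraps a new Ceph cluster. Here it creates the first cluster node (one mon and one mgr), which also serves as the admin node.

- --mon-ip: IP address of the first monitor, i.e. this bootstrap node.

- --allow-fqdn-hostname: allows a fully qualified domain name (FQDN) as the hostname instead of only a short name, for example ceph-node1.example.com rather than ceph-node1.

- --initial-dashboard-user: username of the Ceph Dashboard administrator; here, admin.

- --initial-dashboard-password: password of the Ceph Dashboard administrator; here, admin.

- --dashboard-password-noupdate: does not force the administrator to change the password at first login. Acceptable in a test environment, but not recommended in production.

Open https://192.168.116.10:8443/ in a browser. The dashboard uses a self-signed certificate, so expect a certificate warning.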

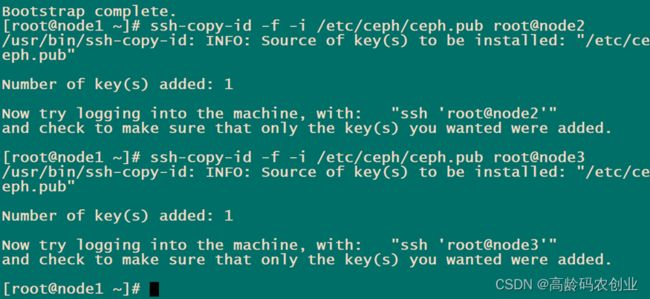

5.2 Copy the cluster's public SSH key to the nodes that will join the cluster

[root@node1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@node2

[root@node1 ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@node3

5.3 Add node2 and node3 (docker-ce and python3 must already be installed on each node)

[root@node1 ~]# cephadm install ceph-common

Installing packages ['ceph-common']...

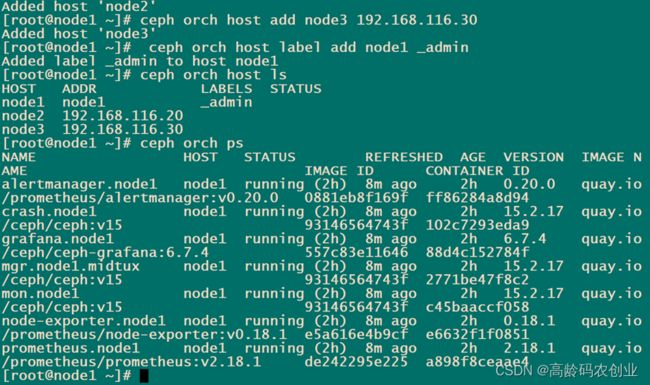

[root@node1 ~]# ceph orch host add node2 192.168.116.20

Added host 'node2'

[root@node1 ~]# ceph orch host add node3 192.168.116.30

Added host 'node3'
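Steps 5.2 and 5.3 are repeated once per host. Here is a sketch of a hypothetical helper, `join_host`, that combines them and then confirms the host appears in `ceph orch host ls`. It assumes it runs on node1, where /etc/ceph/ceph.pub and the admin keyring live.

```shell
# join_host NAME IP  (hypothetical helper, run on node1)
# Authorises the cluster key on NAME, registers it, then checks it is listed.
join_host() {
    # -f (forced mode) skips ssh-copy-id's key check, which would need the
    # private key; only the public half, ceph.pub, is on disk here.
    ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$1" || return 1
    ceph orch host add "$1" "$2" || return 1
    # The first column of `ceph orch host ls` is the host name.
    ceph orch host ls | awk -v n="$1" '$1 == n { f = 1 } END { exit !f }'
}
```

Example: `join_host node2 192.168.116.20 && join_host node3 192.168.116.30`.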

5.4 Add the _admin label to node1

[root@node1 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

node1 node1

node2 192.168.116.20

node3 192.168.116.30

[root@node1 ~]# ceph orch host label add node1 _admin

Added label _admin to host node1

[root@node1 ~]# ceph orch host ls

HOST ADDR LABELS STATUS

node1 node1 _admin

node2 192.168.116.20

node3 192.168.116.30

[root@node1 ~]# ceph orch ps

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.node1 node1 running (2h) 8m ago 2h 0.20.0 quay.io/prometheus/alertmanager:v0.20.0 0881eb8f169f ff86284a8d94

crash.node1 node1 running (2h) 8m ago 2h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 102c7293eda9

grafana.node1 node1 running (2h) 8m ago 2h 6.7.4 quay.io/ceph/ceph-grafana:6.7.4 557c83e11646 88d4c152784f

mgr.node1.midtux node1 running (2h) 8m ago 2h 15.2.17 quay.io/ceph/ceph:v15 93146564743f 2771be47f8c2

mon.node1 node1 running (2h) 8m ago 2h 15.2.17 quay.io/ceph/ceph:v15 93146564743f c45baaccf058

node-exporter.node1 node1 running (2h) 8m ago 2h 0.18.1 quay.io/prometheus/node-exporter:v0.18.1 e5a616e4b9cf e6632f1f0851

prometheus.node1 node1 running (2h) 8m ago 2h 2.18.1 quay.io/prometheus/prometheus:v2.18.1 de242295e225 a898f8ceaae4

5.5 Add monitors (mon)

[root@node1 ~]# ceph orch apply mon "node1,node2,node3"

Scheduled mon update...

5.6 Add managers (mgr)

[root@node1 ~]# ceph orch apply mgr --placement="node1,node2,node3"

Scheduled mgr update...
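`ceph orch apply` also accepts a service specification file through `-i`. Keeping the placement in a file makes it easy to review and reapply. The helper name `write_placement_spec` and the file paths are hypothetical. The YAML keys `service_type` and `placement.hosts` follow the cephadm service specification format.

```shell
# write_placement_spec SERVICE_TYPE OUT_FILE HOST...  (hypothetical helper)
# Writes a minimal cephadm service spec that pins SERVICE_TYPE to the given hosts.
write_placement_spec() {
    svc=$1
    out=$2
    shift 2
    {
        echo "service_type: $svc"
        echo "placement:"
        echo "  hosts:"
        for h in "$@"; do
            echo "    - $h"
        done
    } > "$out"
}
```

Example: `write_placement_spec mgr /root/mgr.yaml node1 node2 node3 && ceph orch apply -i /root/mgr.yaml`.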

5.7 Add OSDs

[root@node1 ~]# ceph orch daemon add osd node1:/dev/sdb

Created osd(s) 0 on host 'node1'

[root@node1 ~]# ceph orch daemon add osd node2:/dev/sdb

Created osd(s) 1 on host 'node2'

[root@node1 ~]# ceph orch daemon add osd node3:/dev/sdb

Created osd(s) 2 on host 'node3'

[root@node1 ~]# ceph orch device ls

Hostname Path Type Serial Size Health Ident Fault Available

node1 /dev/sdb hdd 107G Unknown N/A N/A No

node2 /dev/sdb hdd 107G Unknown N/A N/A No

node3 /dev/sdb hdd 107G Unknown N/A N/A No
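With three disks per node (see the table in 2.1), the same command has to be repeated nine times. Below is a sketch of a loop using the hypothetical helper `add_osds`. The device names are assumptions: the table lists /dev/vdb, /dev/vdc and /dev/vdd, while the transcript above uses /dev/sdb, so check `ceph orch device ls` first. The orchestrator's alternative, `ceph orch apply osd --all-available-devices`, consumes every unused, available device.

```shell
# add_osds HOST DEVICE...  (hypothetical helper, run on node1)
# Creates one OSD per device on HOST, stopping at the first failure.
add_osds() {
    host=$1
    shift
    for dev in "$@"; do
        echo "adding OSD on $host:$dev"
        ceph orch daemon add osd "$host:$dev" || return 1
    done
}
```

Example: `for n in node1 node2 node3; do add_osds "$n" /dev/vdb /dev/vdc /dev/vdd || break; done`.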

5.8 The basic Ceph cluster deployment is now complete!

[root@node1 ~]# ceph -s

cluster:

id: 3e635ab8-f08e-11ed-af2f-000c29cf191a

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node3,node2 (age 85s)

mgr: node1.fcxhll(active, since 7m), standbys: node2.fugwlk

osd: 3 osds: 3 up (since 19s), 3 in (since 19s)

task status:

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 297 GiB / 300 GiB avail

pgs: 1 active+clean
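Right after OSDs are added, the cluster may report HEALTH_WARN for a while before it settles. The hypothetical helper `wait_for_health_ok` polls `ceph health` until the output starts with HEALTH_OK or a timeout expires. This is handy in scripts that should not move on to later steps too early. Run it on node1, where the admin keyring is.

```shell
# wait_for_health_ok [TIMEOUT_SECONDS] [INTERVAL_SECONDS]  (hypothetical helper)
wait_for_health_ok() {
    limit=${1:-300}
    interval=${2:-10}
    waited=0
    while :; do
        # The first word of `ceph health` is HEALTH_OK, HEALTH_WARN or HEALTH_ERR.
        health=$(ceph health 2>/dev/null)
        case $health in
            HEALTH_OK*)
                echo "cluster is HEALTH_OK"
                return 0
                ;;
        esac
        if [ "$waited" -ge "$limit" ]; then
            echo "timed out after ${waited}s, last status: $health" >&2
            return 1
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
}
```

Example: `wait_for_health_ok 600 15 && ceph -s`.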

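5.9 Manage Ceph from a client node

# The Ceph config file (/etc/ceph/ceph.conf) and admin keyring (/etc/ceph/ceph.client.admin.keyring) were already copied to node4 in section 5.4

[root@node4 ~]# ceph -s

-bash: ceph: command not found   (ceph-common needs to be installed)

# Install the Ceph repository

[root@node4 ~]# cephadm install ceph-common

# Install ceph-common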

[root@node4 ~]# yum -y install ceph-common

[root@node4 ~]# ceph -s

cluster:

id: 0b565668-ace4-11ed-960c-5254000de7a0

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node2,node3 (age 7m)

mgr: node1.cxtokn(active, since 14m), standbys: node2.heebcb, node3.fsrlxu

osd: 9 osds: 9 up (since 59s), 9 in (since 81s)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 53 MiB used, 90 GiB / 90 GiB avail

pgs: 1 active+clean
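The steps above assume node4 already has the cluster config and admin keyring. Here is a sketch of copying them from node1 with the hypothetical helper `push_client_config`. It assumes node4 accepts root SSH from node1 (for example after `ssh-copy-id root@node4`) and uses the file paths written during bootstrap in 5.1. The admin keyring grants full control of the cluster, so the helper keeps it readable by root only.

```shell
# push_client_config HOST  (hypothetical helper, run on node1)
# Copies the cluster config and admin keyring to HOST:/etc/ceph/.
push_client_config() {
    ssh "root@$1" mkdir -p /etc/ceph || return 1
    scp /etc/ceph/ceph.conf /etc/ceph/ceph.client.admin.keyring "root@$1:/etc/ceph/" || return 1
    # The admin keyring grants full cluster access: keep it root-only.
    ssh "root@$1" chmod 600 /etc/ceph/ceph.client.admin.keyring
}
```

Example: `push_client_config node4`, then run `ceph -s` on node4.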