Quick installation guide for Kubernetes (k8s) v1.23.1 on CentOS 7


Advantage: the whole procedure takes 30 minutes or less, so it is fast and convenient.

I. Environment preparation

1. Environment information

| Node name | IP address |
|---|---|
| k8s-master01 | 192.168.227.131 |
| k8s-node01 | 192.168.227.132 |
| k8s-node02 | 192.168.227.133 |

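2. Hardware environment

| Item | Description |
|---|---|
| Host PC | Windows 10 |
| Hypervisor | VMware® Workstation 15 Pro 15.5.1 build-15018445 |
| Operating system | CentOS Linux 7 (Core) |
| Linux kernel | CentOS Linux (4.4.248-1.el7.elrepo.x86_64) 7 (Core) |
| CPU | At least 2 cores (this Kubernetes version requires at least 2 CPUs; otherwise `kubeadm init` reports an error) |
| Memory | 2 GB or more |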

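3. Installation steps

- Install docker-ce 20.10.17 (all machines)

- Set up the Kubernetes prerequisites (all machines)

- Install the Kubernetes v1.23.1 master (control-plane) node

- Install the Kubernetes v1.23.1 worker node node1

- Install the Kubernetes v1.23.1 worker node node2

- Install the network plugin (master)

Note: make sure the master and worker nodes can ping each other on the IP addresses they use to communicate.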

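II. Installation

1. Install docker-ce 20.10.17 (all machines)

Note: the command below installs the latest docker-ce release, which was v20.10.17 at the time of writing; run later, it installs whatever version is newest.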

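Every machine that will run Kubernetes needs Docker. The commands are:

- Install the packages Docker depends on

```
yum install -y yum-utils device-mapper-persistent-data lvm2
```

- Add the Aliyun docker-ce repository

```
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

- List every docker-ce version available in the configured repositories

```
yum list docker-ce --showduplicates | sort -r
```

- Install docker-ce

```
yum -y install docker-ce
```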

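- Configure the Docker daemon: systemd cgroup driver, log rotation, the overlay2 storage driver and a registry mirror. The `vydiw6v2` address is a personal Aliyun image accelerator; replace it with the accelerator address shown in your own Aliyun console. `mkdir -p` makes sure /etc/docker exists before the file is written.

```
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": ["https://vydiw6v2.mirror.aliyuncs.com"]
}
EOF
```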

- Start Docker and enable it at boot

```
systemctl enable docker && systemctl start docker
```
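Two optional checks for this step, sketched as hypothetical helper functions. First, `yum -y install docker-ce` installs the newest release; to get exactly 20.10.17, Docker's install documentation uses the `docker-ce-<version>` package-name form. Second, kubeadm v1.22 and later configure the kubelet with the `systemd` cgroup driver by default, and the kubelet refuses to start when Docker reports a different driver, so it is worth confirming that daemon.json took effect (restart Docker first if it was already running when you wrote the file):

```shell
# Hypothetical helper: install a specific docker-ce release instead of the latest one.
install_docker_pinned() {
  local version="${1:-20.10.17}"   # default matches this guide
  yum install -y "docker-ce-${version}" "docker-ce-cli-${version}" containerd.io
}

# Hypothetical helper: succeed only if Docker reports the systemd cgroup driver.
check_docker_cgroup_driver() {
  local driver
  driver="$(docker info --format '{{.CgroupDriver}}')" || return 1
  if [ "$driver" = "systemd" ]; then
    echo "OK: Docker uses the systemd cgroup driver"
  else
    echo "Docker uses '${driver}'; fix /etc/docker/daemon.json, then: systemctl restart docker" >&2
    return 1
  fi
}

# Usage (as root):
#   install_docker_pinned 20.10.17      # in place of: yum -y install docker-ce
#   check_docker_cgroup_driver
```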

2. Set up the Kubernetes prerequisites (all machines)

- Disable the firewall

```
systemctl disable firewalld
systemctl stop firewalld
```

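- Disable SELinux

Disable it temporarily (until the next reboot):

```
setenforce 0
```

- Disable it permanently by editing /etc/selinux/config. (/etc/sysconfig/selinux is only a symlink to this file on CentOS 7, and running `sed -i` on the symlink would replace the link with a separate regular file.)

```
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
```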

- Disable swap

```
swapoff -a
```

- To disable swap permanently, comment out the swap line in /etc/fstab:

```
sed -i 's/.*swap.*/#&/' /etc/fstab
```

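- Modify kernel parameters so that iptables can see bridged traffic (the command for this step is truncated in the source; only `cat <` survives)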
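Two sketches for this part of the setup, written as hypothetical helper functions whose optional path arguments exist only so they can be tried on scratch files. `comment_out_swap` is a narrower alternative to the swap `sed` above, which comments out any line that merely contains "swap" (and adds a second `#` to lines that are already comments); it only touches uncommented entries whose filesystem type, the third fstab field, is `swap`. `write_k8s_kernel_conf` stands in for the truncated bridged-traffic command: it follows the kubeadm installation docs of this era (load `br_netfilter`, set the two `bridge-nf-call` sysctls) and adds `net.ipv4.ip_forward = 1`, which kubeadm's preflight checks expect. The file name `k8s.conf` is an assumption; any `*.conf` name in those directories works.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-format file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  local tmp
  tmp="$(mktemp)" || return 1
  # Field 3 of an fstab entry is the filesystem type; comment lines are left alone.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Hypothetical helper: persist the kernel settings kubeadm expects.
write_k8s_kernel_conf() {
  local modules_dir="${1:-/etc/modules-load.d}"   # read at boot by systemd-modules-load
  local sysctl_dir="${2:-/etc/sysctl.d}"          # applied by `sysctl --system`
  echo br_netfilter > "${modules_dir}/k8s.conf"
  cat > "${sysctl_dir}/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage (as root):
#   swapoff -a && comment_out_swap && free -m        # the Swap row should read 0
#   modprobe br_netfilter && write_k8s_kernel_conf && sysctl --system
```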

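3. Install the Kubernetes v1.23.1 master (control-plane) node

1) Install kubeadm, kubelet and kubectl

Note: the official Kubernetes package repository is hosted by Google and cannot be reached from mainland China, so the Aliyun yum mirror is used here.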

2) Configure the Aliyun Kubernetes repository

```
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

3) Install kubeadm, kubectl and kubelet

```
yum install -y kubelet-1.23.1 kubeadm-1.23.1 kubectl-1.23.1 --disableexcludes=kubernetes
```

4) Start the kubelet service

```
systemctl enable kubelet && systemctl start kubelet
```

At this point `systemctl status kubelet` may show that the service failed to start. This does not block the next steps: running `kubeadm init` brings the kubelet up.

4. Initialize Kubernetes

The command below also pulls the Docker images Kubernetes needs. Because sites outside China cannot be reached, it uses the Aliyun mirror (registry.aliyuncs.com/google_containers).

Another very important point: --apiserver-advertise-address must be the IP through which the master and the nodes can ping each other. Mine is 192.168.227.131; replace it with your own before running the command.

- The command downloads the Docker images the control-plane node needs; you can list them afterwards with `docker images`.

- While downloading, it pauses for about two minutes at `[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'`; please be patient.

```
kubeadm init \
  --apiserver-advertise-address=192.168.227.131 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.23.1 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all
```
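Optionally, the two-minute wait at the `[preflight]` step can be moved out of `kubeadm init` by listing and pulling the images in advance with `kubeadm config images`, passing the same repository and version flags. A sketch; the helper name is hypothetical:

```shell
# Hypothetical helper: list, then pre-pull, the control-plane images used by kubeadm init.
prepull_control_plane_images() {
  local repo="${1:-registry.aliyuncs.com/google_containers}"
  local version="${2:-v1.23.1}"
  kubeadm config images list --image-repository "$repo" --kubernetes-version "$version" &&
    kubeadm config images pull --image-repository "$repo" --kubernetes-version "$version"
}

# Usage (as root, on the master, before kubeadm init): prepull_control_plane_images
```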

When it finishes, the output tells you to run the commands below; copy, paste and run them:

```
To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.227.131:6443 --token vz1k7d.lkwb70m9pszfcxft \
  --discovery-token-ca-cert-hash sha256:5dccf597dc0dc232a985905c05750c7e062dcc896839cac7f50817fc92f0868f
```

The last lines of that output are the command for joining worker nodes to the cluster. You will run it on the nodes shortly, so save it. If you lose it, generate a new one on the master with:

```
kubeadm token create --print-join-command
```
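The `--discovery-token-ca-cert-hash` value is the SHA-256 digest of the cluster CA's public key. If only the hash is needed, the kubeadm join documentation computes it with an openssl pipeline; the sketch below wraps that pipeline in a hypothetical function and assumes an RSA CA key, which is what kubeadm generates by default:

```shell
# Hypothetical helper: print the hash used by `kubeadm join --discovery-token-ca-cert-hash`.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "(stdin)= "
}

# Usage on the master: echo "sha256:$(ca_cert_hash)"
```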

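This completes the master node installation. You can check it with `kubectl get nodes`; the master is NotReady at this point, which you can ignore for now (it becomes Ready once the network plugin from step 6 is deployed).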

5. Install the Kubernetes v1.23.1 worker nodes

1) Install kubeadm, kubelet and kubectl

- Configure the Aliyun Kubernetes repository

```
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

- Install kubeadm, kubelet and kubectl

```
yum install -y kubelet-1.23.1 kubeadm-1.23.1 kubectl-1.23.1 --disableexcludes=kubernetes
```

- Start the kubelet service

```
systemctl enable kubelet && systemctl start kubelet
```

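2) Join the nodes to the cluster

Note: the join command is different for every cluster; use the one printed by your own `kubeadm init`:

```
# Join the cluster. If you don't have the join command, log in to the master
# and run `kubeadm token create --print-join-command` to get it.
kubeadm join 192.168.227.131:6443 --token vz1k7d.lkwb70m9pszfcxft \
  --discovery-token-ca-cert-hash sha256:5dccf597dc0dc232a985905c05750c7e062dcc896839cac7f50817fc92f0868f
```

Once a node has joined, `kubectl get nodes` on the master lists it.

Repeat the same steps on the other node.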

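6. Deploy a network plugin (master node)

After the steps above the machines have joined the cluster, but they are still in the NotReady state, as shown in the figure.

At this point a network plugin has to be installed from the master node.

Kubernetes supports many network plugins; two of the most popular and widely recommended are:

Flannel: a plugin based on the overlay network model. It is easy to deploy and, once running, usually needs no further attention.

Calico: unlike Flannel, Calico is a layer-3 data-center networking solution that routes packets between hosts with the BGP routing protocol and supports flexible network policies.

Pick either one for your environment. The steps are as follows: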

(1) Install the Calico network plugin (master node)

Download the Calico manifests and replace the network range in them with the pod-network-cidr value passed to kubeadm, which is 10.244.0.0/16 in the `kubeadm init` command above.

```
# Download the Calico manifests
wget https://projectcalico.docs.tigera.io/manifests/tigera-operator.yaml --no-check-certificate
wget https://projectcalico.docs.tigera.io/manifests/custom-resources.yaml --no-check-certificate

# Replace the pool IP in the Calico manifest with the kubeadm networking.podSubnet value 10.244.0.0
sed -i 's/192.168.0.0/10.244.0.0/g' custom-resources.yaml
```
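`sed 's/192.168.0.0/10.244.0.0/g'` treats each `.` as "any character" and rewrites every match anywhere in the file. Since the value to change is the `cidr:` field of the IP pool, a narrower substitution followed by a quick check could look like this (a sketch; the helper name and its arguments are hypothetical):

```shell
# Hypothetical helper: point the Calico IP pool at the kubeadm pod CIDR and show the result.
set_calico_pool_cidr() {
  local file="${1:-custom-resources.yaml}"
  local cidr="${2:-10.244.0.0/16}"
  # Only rewrite the value of a "cidr:" key and keep its indentation.
  sed -i "s#^\([[:space:]]*cidr:\)[[:space:]].*#\1 ${cidr}#" "$file"
  grep -n 'cidr:' "$file"   # print the edited line(s) with line numbers
}

# Usage on the master: set_calico_pool_cidr custom-resources.yaml 10.244.0.0/16
```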

Then set the network interface that Calico uses for node-to-node traffic:

```
vi custom-resources.yaml
```

```
# This section includes base Calico installation configuration.
# For more information, see: https://projectcalico.docs.tigera.io/v3.23/reference/installation/api#operator.tigera.io/v1.Installation
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    # Note: The ipPools section cannot be modified post-install.
    ipPools:
    - blockSize: 26
      cidr: 10.244.0.0/16
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
    nodeAddressAutodetectionV4:
      interface: ens32
```

Save the file.
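`interface: ens32` must name the NIC that carries each node's IP (192.168.227.x here), and NIC names can differ between machines; Calico's Installation API reference describes this field as a regular expression, so a pattern such as `ens.*` can cover several names. One way to find the name, sketched as a hypothetical helper that reads `ip -o -4 addr show` output (one line per address: index, interface, `inet`, address/prefix, ...):

```shell
# Hypothetical helper: print the interface that holds a given IPv4 address.
# Reads `ip -o -4 addr show` output on stdin so it can be tried on sample text.
iface_for_ip() {
  local ip="$1"
  awk -v ip="$ip" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Usage on each node: ip -o -4 addr show | iface_for_ip 192.168.227.131
```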

Deploy the Calico plugin:

```
kubectl create -f tigera-operator.yaml
kubectl create -f custom-resources.yaml
```

If the wget commands above fail with "Issued certificate has expired", run `yum install -y ca-certificates` to update the CA certificates. The error looks like this:

```
[root@k8s-master1 calico-install]# wget https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml
--2022-06-10 22:32:27--  https://docs.projectcalico.org/v3.10/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml
Resolving docs.projectcalico.org (docs.projectcalico.org)... 18.140.226.100, 3.0.239.142, 2406:da18:880:3801:52c7:4593:210d:6aae, ...
Connecting to docs.projectcalico.org (docs.projectcalico.org)|18.140.226.100|:443... connected.
ERROR: cannot verify docs.projectcalico.org's certificate, issued by '/C=US/O=Let's Encrypt/CN=R3':
  Issued certificate has expired.
To connect to docs.projectcalico.org insecurely, use '--no-check-certificate'.
```

Check whether the Pods have started:

```
[root@k8s-master1 k8s1.23]# kubectl get pod --all-namespaces
NAMESPACE NAME READY STATUS RESTARTS AGE
calico-apiserver calico-apiserver-564fd9ddf4-8tcqn 1/1 Running 1 (31m ago) 42m
calico-apiserver calico-apiserver-564fd9ddf4-zshpn 1/1 Running 0 42m
calico-system calico-kube-controllers-69cfd64db4-6b8v9 1/1 Running 1 (41m ago) 81m
calico-system calico-node-7c5v8 1/1 Running 1 (41m ago) 49m
calico-system calico-node-8r44s 1/1 Running 1 (41m ago) 81m
calico-system calico-node-mg5nt 1/1 Running 1 (41m ago) 81m
calico-system calico-typha-77f4db99cf-dj8zz 1/1 Running 1 (41m ago) 81m
calico-system calico-typha-77f4db99cf-kvjjm 1/1 Running 1 (41m ago) 81m
default nginx-85b98978db-r7z6d 1/1 Running 0 25m
kube-system coredns-6d8c4cb4d-6f2wr 1/1 Running 1 (41m ago) 95m
kube-system coredns-6d8c4cb4d-7bbfj 1/1 Running 1 (41m ago) 95m
kube-system etcd-k8s-master1 1/1 Running 1 (41m ago) 95m
kube-system kube-apiserver-k8s-master1 1/1 Running 0 31m
kube-system kube-controller-manager-k8s-master1 1/1 Running 2 (31m ago) 95m
kube-system kube-proxy-hsp5d 1/1 Running 1 (41m ago) 92m
kube-system kube-proxy-pksrp 1/1 Running 1 (41m ago) 91m
kube-system kube-proxy-tzvk5 1/1 Running 1 (41m ago) 95m
kube-system kube-scheduler-k8s-master1 1/1 Running 2 (31m ago) 95m
```

All Pods have started successfully, so the Calico network plugin is installed.
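Instead of re-running `kubectl get pod` until everything is up, `kubectl wait` can block until every node reports the Ready condition. A sketch with a hypothetical helper; the 300-second default timeout is an arbitrary choice:

```shell
# Hypothetical helper: wait until every node is Ready, then show nodes and any pod not Running.
wait_for_cluster_ready() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready node --all --timeout="$timeout" || return 1
  kubectl get nodes -o wide
  kubectl get pods --all-namespaces --field-selector='status.phase!=Running'
}

# Usage on the master: wait_for_cluster_ready 600s
```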

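(2) Install the Flannel network plugin (master node)

- Download the official Flannel manifest

The manifest can be downloaded with wget from https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml, but that address is not reachable from mainland China, so its contents are copied into the appendix at the end of this article: create a kube-flannel.yml file and paste them in. The manifest's images are hosted on quay.io, which may not be reachable either; if so, change the `image:` lines to a registry mirror you can reach.

- Install Flannel

```
kubectl apply -f kube-flannel.yml
```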
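The `Network` value in Flannel's `net-conf.json` should equal the `--pod-network-cidr` given to `kubeadm init` (10.244.0.0/16 here), because with `--kube-subnet-mgr` flanneld uses the per-node subnets that the controller manager carves out of that CIDR. A sketch that reads the value from the manifest before it is applied; the helper name is hypothetical, and the `app=flannel` label in the usage line is the one used by the appendix manifest:

```shell
# Hypothetical helper: print the "Network" value from a flannel manifest's net-conf.json.
flannel_network() {
  local manifest="${1:-kube-flannel.yml}"
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$manifest" | head -n 1
}

# Usage on the master:
#   [ "$(flannel_network)" = "10.244.0.0/16" ] && kubectl apply -f kube-flannel.yml
#   kubectl -n kube-system get pods -l app=flannel -o wide
```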

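7. Let worker nodes manage the cluster (make kubectl work on the worker nodes)

In a kubeadm-installed cluster, running kubectl on a worker node fails with the following error:

```
[root@node1 ~]# kubectl get nodes
The connection to the server localhost:8080 was refused - did you specify the right host or port?
```

Copying the master's admin kubeconfig, /etc/kubernetes/admin.conf, to $HOME/.kube/config on a worker node is enough to let that node manage the cluster with kubectl:

- Step 1: Create the .kube directory in the user's home directory on each worker node

```
[root@node1 ~]# mkdir /root/.kube
```

- Step 2: On the master node, run:

```
[root@master ~]# scp /etc/kubernetes/admin.conf k8s-node1:/root/.kube/config
[root@master ~]# scp /etc/kubernetes/admin.conf k8s-node2:/root/.kube/config
```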

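- Step 3: On a worker node, verify that kubectl now works. (This sample output was captured on an older v1.16 cluster, so the VERSION and ROLES columns look different on v1.23.1.)

```
[root@k8s-node1 ~]# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master1 Ready master 127m v1.16.0
k8s-node1 Ready <none> 120m v1.16.0
k8s-node2 Ready <none> 121m v1.16.0
```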

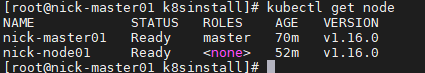
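With more worker nodes, steps 1 and 2 can be looped from the master. A sketch, assuming root SSH access from the master to each node and the host names used in this guide; the helper name is hypothetical. Keep in mind that admin.conf carries full cluster-admin credentials, so copy it only to machines you trust:

```shell
# Hypothetical helper: copy the admin kubeconfig to every worker node given as an argument.
push_admin_kubeconfig() {
  local node
  for node in "$@"; do
    ssh "root@${node}" 'mkdir -p /root/.kube' &&
      scp /etc/kubernetes/admin.conf "root@${node}:/root/.kube/config" ||
      { echo "failed: ${node}" >&2; return 1; }
  done
}

# Usage on the master: push_admin_kubeconfig k8s-node1 k8s-node2
```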

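8. Installation complete

The Kubernetes cluster is now set up and, as the figure above shows, all nodes are Ready. Because this method uses the Aliyun mirrors, the whole installation takes little time. Thanks for reading, and feel free to leave a comment.

9. Appendix

The contents of kube-flannel.yml are as follows: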

```
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22, so v1 is used here
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22, so v1 is used here
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
```

III. Certificate expiration

1. Check when the certificates expire

```
[root@k8s-master1 ~]# date
Fri Jun 10 15:12:53 CST 2022
[root@k8s-master1 ~]#
[root@k8s-master1 ~]# kubeadm alpha certs check-expiration
CERTIFICATE EXPIRES RESIDUAL TIME EXTERNALLY MANAGED
admin.conf May 29, 2022 17:43 UTC <invalid> no
apiserver May 29, 2022 17:43 UTC <invalid> no
apiserver-etcd-client May 29, 2022 17:43 UTC <invalid> no
apiserver-kubelet-client May 29, 2022 17:43 UTC <invalid> no
controller-manager.conf May 29, 2022 17:43 UTC <invalid> no
etcd-healthcheck-client May 29, 2022 17:43 UTC <invalid> no
etcd-peer May 29, 2022 17:43 UTC <invalid> no
etcd-server May 29, 2022 17:43 UTC <invalid> no
front-proxy-client May 29, 2022 17:43 UTC <invalid> no
scheduler.conf May 29, 2022 17:43 UTC <invalid> no
[root@k8s-master1 ~]#
```

The output shows that the current date is June 10, 2022, while the certificates expired on May 29, 2022.

The symptom is that kubectl commands can no longer connect to kube-apiserver:

```
# kube-apiserver on the master fails to start (only the grep process itself is listed)
[root@k8s-master1 ~]# ps -ef|grep kube-apiserver
root 2066 1520 0 15:15 pts/0 00:00:00 grep --color=auto kube-apiserver
# kubectl cannot connect to kube-apiserver
[root@k8s-master1 ~]# kubectl get node
The connection to the server 192.168.227.131:6443 was refused - did you specify the right host or port?
```
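The transcripts in this section were captured on an older cluster (the `stable-1.16` line further down gives it away). On kubeadm v1.20 and later the same commands are available without the `alpha` prefix, for example `kubeadm certs check-expiration` and `kubeadm certs renew all`. Independently of kubeadm, openssl can report how long a certificate file remains valid; a sketch with a hypothetical helper, assuming GNU `date -d` as shipped with CentOS:

```shell
# Hypothetical helper: print the whole days left before a PEM certificate expires.
# 0 or less means it expires within a day or has already expired.
cert_days_left() {
  local crt="$1" end
  end="$(openssl x509 -noout -enddate -in "$crt" | cut -d= -f2)"   # e.g. "Jun 10 15:09:31 2023 GMT"
  [ -n "$end" ] || return 1
  echo $(( ( $(date -d "$end" +%s) - $(date +%s) ) / 86400 ))
}

# Usage on the master:
#   for c in /etc/kubernetes/pki/*.crt; do echo "$c $(cert_days_left "$c")"; done
#   openssl x509 -checkend 2592000 -noout -in /etc/kubernetes/pki/apiserver.crt   # exits 1 if it expires within 30 days
```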

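2. Renew the certificates (one-command manual renewal)

The one-command manual renewal works as follows:

- a. All of the following steps are performed on the master node. First back up the existing certificates:

```
$ mkdir /etc/kubernetes.bak
$ cp -r /etc/kubernetes/pki/ /etc/kubernetes.bak
$ cp /etc/kubernetes/*.conf /etc/kubernetes.bak
$ cp -r /etc/kubernetes/manifests/ /etc/kubernetes/manifests.bak
```

Then back up the etcd data directory:

```
$ cp -r /var/lib/etcd /var/lib/etcd.bak
```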
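The backup steps can be bundled into one command that writes everything into a fresh timestamped directory, so a second run never overwrites the first backup. A sketch; the function name, the directory layout and the optional root-prefix argument (there only so it can be tried on a scratch directory) are assumptions. Copying /var/lib/etcd while etcd is writing may capture an inconsistent state, so stop etcd first if you need a reliable copy:

```shell
# Hypothetical helper: back up kubeadm certificates, kubeconfigs, manifests and etcd data.
backup_k8s_control_plane() {
  local root="${1:-}"   # empty on a real master; a scratch directory when testing
  local dest="${root}/etc/kubernetes.bak.$(date +%Y%m%d%H%M%S)"
  mkdir -p "$dest" || return 1
  cp -a "${root}/etc/kubernetes/pki" "${root}/etc/kubernetes/manifests" "$dest"/ &&
    cp -a "${root}"/etc/kubernetes/*.conf "$dest"/ &&
    cp -a "${root}/var/lib/etcd" "${dest}/etcd-data" || return 1
  echo "$dest"   # print where the backup went
}

# Usage on the master (as root): backup_k8s_control_plane
```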

Next, run the renewal command:

```
$ kubeadm alpha certs renew all
certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
certificate for serving the Kubernetes API renewed
certificate the apiserver uses to access etcd renewed
certificate for the API server to connect to kubelet renewed
certificate embedded in the kubeconfig file for the controller manager to use renewed
certificate for liveness probes to healthcheck etcd renewed
certificate for etcd nodes to communicate with each other renewed
certificate for serving etcd renewed
certificate for the front proxy client renewed
certificate embedded in the kubeconfig file for the scheduler manager to use renewed
```

This single command renews all the certificates. Checking them again shows that they now expire one year from today:

```
[root@k8s-master1 etc]# kubeadm alpha certs check-expiration
CERTIFICATE EXPIRES RESIDUAL TIME EXTERNALLY MANAGED
admin.conf Jun 10, 2023 15:09 UTC 364d no
apiserver Jun 10, 2023 15:09 UTC 364d no
apiserver-etcd-client Jun 10, 2023 15:09 UTC 364d no
apiserver-kubelet-client Jun 10, 2023 15:09 UTC 364d no
controller-manager.conf Jun 10, 2023 15:09 UTC 364d no
etcd-healthcheck-client Jun 10, 2023 15:09 UTC 364d no
etcd-peer Jun 10, 2023 15:09 UTC 364d no
etcd-server Jun 10, 2023 15:09 UTC 364d no
front-proxy-client Jun 10, 2023 15:09 UTC 364d no
scheduler.conf Jun 10, 2023 15:09 UTC 364d no
```

Then update the kubeconfig files. (As the output shows, kubeadm keeps the existing files here; the certificates embedded in admin.conf, controller-manager.conf and scheduler.conf were already renewed by `certs renew all` above.)

```
[root@k8s-master1 etc]# kubeadm init phase kubeconfig all
I0610 23:09:55.708740 10668 version.go:251] remote version is much newer: v1.24.1; falling back to: stable-1.16
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"
[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"
```

Overwrite the old admin kubeconfig with the newly generated one:

```
$ mv $HOME/.kube/config $HOME/.kube/config.old
$ cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
$ chown $(id -u):$(id -g) $HOME/.kube/config
```

Finally, restart the four containers kube-apiserver, kube-controller-manager, kube-scheduler and etcd (I simply rebooted the machine). To verify that the renewal worked, check the validity period of the certificate the apiserver is serving:

```
[root@k8s-master1 ~]# echo | openssl s_client -showcerts -connect 127.0.0.1:6443 -servername api 2>/dev/null | openssl x509 -noout -enddate
notAfter=Jun 10 15:09:31 2023 GMT
```

The certificate is now valid until one year from today, which confirms that the renewal succeeded.
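If rebooting the master is inconvenient, the four containers can be restarted one by one instead. A sketch, assuming the Docker runtime used in this guide, where the kubelet (through dockershim) names containers `k8s_<container>_<pod>_<namespace>_...`; the helper name is hypothetical:

```shell
# Hypothetical helper: restart the control-plane containers so they load the renewed certificates.
restart_control_plane() {
  local c ids
  for c in kube-apiserver kube-controller-manager kube-scheduler etcd; do
    # dockershim container names start with k8s_<container name>_
    ids="$(docker ps -q --filter "name=k8s_${c}_")"
    if [ -n "$ids" ]; then
      docker restart $ids   # unquoted on purpose: one argument per container ID
    else
      echo "no running container found for ${c}" >&2
    fi
  done
}

# Usage on the master (as root): restart_control_plane
```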

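3. Renew the certificates (using the Kubernetes certificates API)

Next, let's look at how to renew the Kubernetes certificates with a 10-year validity period.

- Besides the one-command manual renewal above, certificates can also be renewed manually through the Kubernetes certificates API. Renewing certificates again and again in a production environment carries risk, so we would like the issued certificates to be valid for a long time. This is not recommended from a security standpoint, but in some scenarios a sufficiently long validity period really is necessary. Many administrators modify the kubeadm source code to issue 10-year certificates and recompile it before creating their clusters. That achieves the goal but is not recommended, especially because the modified build has to be redone whenever you want to upgrade the cluster. Kubernetes actually offers an API that can issue certificates with a sufficiently long validity period.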

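- a. To sign certificates through the built-in API, first set the --experimental-cluster-signing-duration flag of kube-controller-manager to 10 years (87600h = 10 × 365 × 24 hours; from v1.19 on this flag is deprecated in favor of --cluster-signing-duration). Because this cluster was installed with kubeadm, simply edit the static Pod manifest:

```
$ vi /etc/kubernetes/manifests/kube-controller-manager.yaml
......
spec:
  containers:
  - command:
    - kube-controller-manager
    # Set the certificate validity to 10 years: add --experimental-cluster-signing-duration if it is missing,
    # and change the client-ca-file path from /etc/kubernetes/pki/etcd to /etc/kubernetes/pki
    - --experimental-cluster-signing-duration=87600h
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
......
```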

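kube-controller-manager restarts automatically once the file is saved. Next, use the command below to create certificate signing requests (CSRs) through the Kubernetes certificates API. If you have set up an external signer such as cert-manager, the CSRs are approved automatically; otherwise you must approve them manually with the `kubectl certificate` command. The following kubeadm command prints the name of each certificate to approve and then waits for the approval:

```
$ kubeadm alpha certs renew all --use-api &
```

The output is similar to:

```
[root@k8s-master1 ~]# kubeadm alpha certs renew all --use-api &
[1] 4581
[root@k8s-master1 ~]# [certs] Certificate request "kubeadm-cert-kubernetes-admin-lrnvx" created
```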

Then list the CSRs to find the one waiting for approval:

```
[root@k8s-master1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
csr-k8bxs 49m system:node:k8s-master1 Approved,Issued
csr-ngn4j 43m system:bootstrap:od64zn Approved,Issued
csr-zctvz 44m system:bootstrap:od64zn Approved,Issued
kubeadm-cert-kubernetes-admin-lrnvx 18s kubernetes-admin Pending
```

Approve it manually:

```
[root@k8s-master1 ~]# kubectl certificate approve kubeadm-cert-kubernetes-admin-lrnvx
certificatesigningrequest.certificates.k8s.io/kubeadm-cert-kubernetes-admin-lrnvx approved
```
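Because `kubeadm ... --use-api` creates one CSR at a time and waits for each approval, the "list, find Pending, approve" cycle can be scripted. A sketch with hypothetical helper names: the awk filter reads `kubectl get csr --no-headers` output and keeps Pending CSRs whose names start with `kubeadm-cert-` (the prefix seen above); CONDITION is the last column in both older and newer kubectl output. The 2-second polling interval in the usage lines is arbitrary:

```shell
# Hypothetical helper: print the names of Pending kubeadm CSRs from `kubectl get csr --no-headers`.
pending_kubeadm_csrs() {
  awk '$NF == "Pending" && $1 ~ /^kubeadm-cert-/ { print $1 }'
}

# Hypothetical helper: approve every kubeadm CSR that is Pending right now (one pass).
approve_pending_kubeadm_csrs() {
  local name
  for name in $(kubectl get csr --no-headers | pending_kubeadm_csrs); do
    kubectl certificate approve "$name"
  done
}

# Usage on the master (interactive bash):
#   kubeadm alpha certs renew all --use-api &
#   while kill -0 $! 2>/dev/null; do approve_pending_kubeadm_csrs; sleep 2; done
```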

Approve every CSR in the Pending state the same way until all of them are approved (run `kubectl get csr` repeatedly, find the Pending CSR and approve it, one at a time). In the end the CSR list looks like this:

```
[root@k8s-master1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
csr-k8bxs 55m system:node:k8s-master1 Approved,Issued
csr-ngn4j 50m system:bootstrap:od64zn Approved,Issued
csr-zctvz 50m system:bootstrap:od64zn Approved,Issued
kubeadm-cert-front-proxy-client-4b6dr 27s kubernetes-admin Approved,Issued
kubeadm-cert-k8s-master1-gqcnh 52s kubernetes-admin Approved,Issued
kubeadm-cert-k8s-master1-h72xj 40s kubernetes-admin Approved,Issued
kubeadm-cert-kube-apiserver-etcd-client-t5wc9 112s kubernetes-admin Approved,Issued
kubeadm-cert-kube-apiserver-kubelet-client-jm9rq 98s kubernetes-admin Approved,Issued
kubeadm-cert-kube-apiserver-xbxh9 6m2s kubernetes-admin Approved,Issued
kubeadm-cert-kube-etcd-healthcheck-client-ltzrc 65s kubernetes-admin Approved,Issued
kubeadm-cert-kubernetes-admin-lrnvx 6m46s kubernetes-admin Approved,Issued
kubeadm-cert-system:kube-controller-manager-lc778 85s kubernetes-admin Approved,Issued
kubeadm-cert-system:kube-scheduler-kqdzx 13s kubernetes-admin Approved,Issued
[1]+  Done                    kubeadm alpha certs renew all --use-api
```

The final message "[1]+ Done kubeadm alpha certs renew all --use-api" shows that the background renewal job has finished and the certificates have been renewed.

After all approvals, check the certificates' validity period:

```
[root@k8s-master1 ~]# kubeadm alpha certs check-expiration
CERTIFICATE EXPIRES RESIDUAL TIME EXTERNALLY MANAGED
admin.conf Jun 07, 2032 14:20 UTC 9y no
apiserver Jun 07, 2032 14:20 UTC 9y no
apiserver-etcd-client Jun 07, 2032 14:21 UTC 9y no
apiserver-kubelet-client Jun 07, 2032 14:20 UTC 9y no
controller-manager.conf Jun 07, 2032 14:20 UTC 9y no
etcd-healthcheck-client Jun 07, 2032 14:20 UTC 9y no
etcd-peer Jun 07, 2032 14:21 UTC 9y no
etcd-server Jun 07, 2032 14:20 UTC 9y no
front-proxy-client Jun 07, 2032 14:20 UTC 9y no
scheduler.conf Jun 07, 2032 14:20 UTC 9y no
```

The certificates signed by the CA are now valid for 10 years (RESIDUAL TIME shows 9y because slightly less than ten full years remain).

However, the control-plane components cannot be restarted yet. In a kubeadm-installed cluster, etcd uses /etc/kubernetes/pki/etcd/ca.crt by default to verify certificates, but the certificates approved above with `kubectl certificate approve` were signed with the default /etc/kubernetes/pki/ca.crt. We therefore have to replace the CA certificate that etcd trusts:

```
# First back up the static Pod manifests
$ cp -r /etc/kubernetes/manifests/ /etc/kubernetes/manifests.bak
$ vi /etc/kubernetes/manifests/etcd.yaml
......
spec:
  containers:
  - command:
    - etcd
    # Switch to the cluster CA file: the path changes from /etc/kubernetes/pki/etcd to /etc/kubernetes/pki
    - --peer-trusted-ca-file=/etc/kubernetes/pki/ca.crt
    - --trusted-ca-file=/etc/kubernetes/pki/ca.crt
......
    volumeMounts:
    - mountPath: /var/lib/etcd
      name: etcd-data
    - mountPath: /etc/kubernetes/pki  # change the certificate directory from /etc/kubernetes/pki/etcd to /etc/kubernetes/pki
      name: etcd-certs
  volumes:
  - hostPath:
      path: /etc/kubernetes/pki  # mount the whole pki directory into etcd (previously /etc/kubernetes/pki/etcd)
      type: DirectoryOrCreate
    name: etcd-certs
  - hostPath:
      path: /var/lib/etcd
      type: DirectoryOrCreate
    name: etcd-data
......
```

Because kube-apiserver connects to the etcd cluster, its etcd CA file must be changed as well:

```
vi /etc/kubernetes/manifests/kube-apiserver.yaml
......
spec:
  containers:
  - command:
    - kube-apiserver
    # Point the etcd CA file at the default ca.crt: the path changes from /etc/kubernetes/pki/etcd to /etc/kubernetes/pki
    - --etcd-cafile=/etc/kubernetes/pki/ca.crt
......
```

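Besides this, the requestheader-client-ca-file, which defaults to /etc/kubernetes/pki/front-proxy-ca.crt, must also be switched to the default CA. Otherwise the aggregation API breaks; for example, after installing metrics-server, `kubectl top` returns errors:

```
$ cp /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/front-proxy-ca.crt
$ cp /etc/kubernetes/pki/ca.key /etc/kubernetes/pki/front-proxy-ca.key
```

After these changes, restart the control-plane containers (rebooting the machine is the simplest way). The kubelet in this version has automatic certificate rotation enabled by default, so its certificates need no further attention. The certificates have now been renewed with a 10-year validity period. Always back up the certificate directory before you start, so you can roll back if something goes wrong.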
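To see why etcd's trusted CA had to change, or to check the result afterwards, `openssl verify` reports whether a certificate chains to a given CA file. A sketch with a hypothetical helper; on the master you would test, for example, /etc/kubernetes/pki/etcd/server.crt against both /etc/kubernetes/pki/ca.crt and /etc/kubernetes/pki/etcd/ca.crt:

```shell
# Hypothetical helper: succeed if the certificate in $2 is signed by the CA certificate in $1.
signed_by() {
  openssl verify -CAfile "$1" "$2" >/dev/null 2>&1
}

# Usage on the master:
#   for ca in /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/etcd/ca.crt; do
#     signed_by "$ca" /etc/kubernetes/pki/etcd/server.crt && echo "etcd server cert is signed by $ca"
#   done
```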