Building a Cross-Region k8s Cluster over the Public Internet on CentOS 7.x

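The core problem in building a cross-region cluster is that pods on different hosts cannot reach each other over the network. This tutorial is mainly about solving that.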

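References:

Alibaba Cloud: deploying a k8s cluster across regions on ECS cloud servers

Building a k8s cluster on multiple public-network servers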

Host information

| Name | Public IP | Hostname | Specs (CPU / RAM / disk) | OS | Components |
|---|---|---|---|---|---|
| k8s node 1 | 47.101.143.185 | lx-test1 | 2C 8G 50G | CentOS 7.9 | controlplane, etcd, worker |
| k8s node 2 | 120.79.186.239 | lx-test2 | 2C 8G 50G | CentOS 7.9 | worker |
| k8s node 3 | 8.134.129.47 | lx-test3 | 2C 8G 50G | CentOS 7.9 | worker |

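For Docker installation, see: Installing Docker and CUDA on CentOS 7.x

Note: if the hosts have no GPU, skip the GPU installation steps.

To cover real-world scenarios, this tutorial builds the cross-region cluster over the public network in two ways:

Option 1: RKE

Rancher Kubernetes Engine (RKE) is a CNCF-certified Kubernetes installer. For details, see the RKE Chinese documentation site.

Option 2: kubeadm

One of the official Kubernetes installation methods; it simplifies the k8s installation steps.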

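Security group rules:

Open the security group rules according to Rancher's guide on opening ports with firewalld and Rancher's port requirements.

Ports to open

| Protocol | Port | Description |
|---|---|---|
| TCP | 22 | Node provisioning over SSH by the node driver |
| TCP | 80 | Ingress (can stay closed if ingress is not used) |
| TCP | 443 | Ingress (can stay closed if ingress is not used) |
| TCP | 2376 | TLS port the node driver uses to talk to the Docker daemon |
| TCP | 2379 | etcd client requests |
| TCP | 2380 | etcd peer communication |
| TCP | 6443 | Kubernetes API server (used by rke and by kubeadm join) |
| UDP | 8472 | Canal/Flannel VXLAN overlay network |
| TCP | 8443 | Rancher webhook |
| TCP | 9099 | Canal/Flannel health checks |
| TCP | 9796 | Default port from which cluster monitoring scrapes node metrics (only needs to be reachable on the internal network) |
| TCP | 10250 | Metrics server communication with all nodes |
| TCP | 10254 | Ingress controller health checks |
| TCP/UDP | 30000-32767 | NodePort port range |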
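If the hosts also run firewalld, the same ports have to be opened there in addition to the cloud security group. The following is a minimal sketch that only prints the `firewall-cmd` commands for review; nothing is executed. The function name `print_firewalld_commands` is made up for this example. Two assumptions: port 8443 is treated as TCP, as in Rancher's port requirements, and 6443 (the Kubernetes API server port that the join command later in this tutorial connects to) is included.

```shell
#!/usr/bin/env bash
# Sketch: print firewall-cmd commands for the ports this tutorial relies on.
# Assumption: 8443 is TCP and 6443 (Kubernetes API server) is included.

TCP_PORTS="22 80 443 2376 2379 2380 6443 8443 9099 9796 10250 10254 30000-32767"
UDP_PORTS="8472 30000-32767"

print_firewalld_commands() {
  local port
  for port in $TCP_PORTS; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  for port in $UDP_PORTS; do
    echo "firewall-cmd --permanent --add-port=${port}/udp"
  done
  # Permanent rules only take effect after a reload.
  echo "firewall-cmd --reload"
}

print_firewalld_commands
```

After checking the output, it can be piped to `sudo sh` on each host.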

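Screenshot of the ports actually opened

etcd + control plane node + worker node

Both options require creating a virtual NIC bound to the public IP. On cloud servers the public IP is usually mapped through NAT and is not configured on any local interface, so components cannot bind to it until it is added.

Create the virtual NIC

Run this on every host in the cluster. Mind the NIC name, and set IPADDR to that host's public IP.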

# Write the virtual NIC configuration
cat > /etc/sysconfig/network-scripts/ifcfg-eth0:1 <<EOF
BOOTPROTO=static
DEVICE=eth0:1
IPADDR=<your-public-IP>
PREFIX=32
TYPE=Ethernet
USERCTL=no
ONBOOT=yes
EOF

# Restart the network service
systemctl restart network

# Check the IP addresses
ip addr
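Because this step is repeated on every host with a different IP, it can help to wrap it in a small function. The sketch below is one possible way to do that; `write_alias_ifcfg` and its optional third argument (a target directory, handy for a dry run) are names invented for this example, and the IP check only validates the dotted-quad shape, not the octet ranges.

```shell
#!/usr/bin/env bash
# Sketch: write_alias_ifcfg DEVICE PUBLIC_IP [TARGET_DIR]
# Writes the same ifcfg content as the manual heredoc in this tutorial.

write_alias_ifcfg() {
  local device="$1" public_ip="$2"
  local target_dir="${3:-/etc/sysconfig/network-scripts}"

  # Basic format check only: four dot-separated groups of 1-3 digits.
  if ! [[ "$public_ip" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
    echo "invalid IPv4 address: $public_ip" >&2
    return 1
  fi

  cat > "${target_dir}/ifcfg-${device}" <<EOF
BOOTPROTO=static
DEVICE=${device}
IPADDR=${public_ip}
PREFIX=32
TYPE=Ethernet
USERCTL=no
ONBOOT=yes
EOF
}
```

For example, on lx-test1 this would be `write_alias_ifcfg eth0:1 47.101.143.185` as root, followed by `systemctl restart network`.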

Option 1: Building with RKE

Add an rke installation user on every host in the cluster

[root@lx-test1 ~]# useradd rke

[root@lx-test1 ~]# passwd rke

Changing password for user rke.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

# Add rke to the docker group so that the rke user can run docker

[root@lx-test1 ~]# usermod -aG docker rke

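Set up passwordless SSH login for the rke user from lx-test1 to every cluster host (including lx-test1 itself). To register nodes by hostname, configure /etc/hosts for name resolution; this tutorial uses IP addresses.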

[rke@lx-test1 ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/rke/.ssh/id_rsa):

Created directory '/home/rke/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/rke/.ssh/id_rsa.

Your public key has been saved in /home/rke/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:OA2DFdjsx94U9TBXGsdircH0E7lqll9YpbQzPxqk13U rke@lx-test1

The key's randomart image is:

+---[RSA 2048]----+

| +o. .+o+=+|

| .oo . =**=|

| ..o. . ooB+|

| .=o . .*.E|

| ooSo o +*o|

| .. .. B.oo|

| + + o|

| . . |

| |

+----[SHA256]-----+

[rke@lx-test1 ~]$ ssh-copy-id 47.101.143.185

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/rke/.ssh/id_rsa.pub"

The authenticity of host '47.101.143.185 (47.101.143.185)' can't be established.

ECDSA key fingerprint is SHA256:MeuNmOoJ2s6OrZnU0Qt2dtoELsc09smv3SDYXkYlT+s.

ECDSA key fingerprint is MD5:d6:e8:ba:7b:8a:61:a4:82:3d:57:e0:93:eb:b2:03:93.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

rke@47.101.143.185's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '47.101.143.185'"

and check to make sure that only the key(s) you wanted were added.

# Copy the key to the other hosts

[rke@lx-test1 ~]$ ssh-copy-id 120.79.186.239

[rke@lx-test1 ~]$ ssh-copy-id 8.134.129.47

# Log in to verify that passwordless login works

[rke@lx-test1 ~]$ ssh 120.79.186.239

Last login: Fri Dec 23 13:40:55 2022 from 47.101.143.185

[rke@lx-test2 ~]$

Upload the RKE binary (download link). The version used here is v1.2.20. Make it executable and move it to /usr/local/bin.

[root@lx-test1 ~]# chmod +x rke_linux-amd64

[root@lx-test1 ~]# mv rke_linux-amd64 /usr/local/bin/rke

[root@lx-test1 ~]# rke -version

rke version v1.2.20

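Download the flannel network plugin

The flannel plugin configuration is the key to pod-to-pod communication.

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

vim kube-flannel.yml

Because --iface=<NIC name> is specified, the NIC name (the actual internal-network NIC) must be the same on every host in the cluster.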

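# Three places need to change

# 1. Under args, add:
args:
- --public-ip=$(PUBLIC_IP)   # add this argument; the value comes from status.podIP, i.e. the public IP
- --iface=eth0               # add this argument; binds the NIC (the actual internal-network NIC name)
#- --iface=ens192

# 2. Under env, add:
env:
- name: PUBLIC_IP            # add this environment variable
  valueFrom:
    fieldRef:
      fieldPath: status.podIP

# 3. RKE's default pod network is 10.42.0.0/16 (flannel's default is 10.244.0.0/16)
net-conf.json: |
  {
    "Network": "10.42.0.0/16",
    "Backend": {
      "Type": "vxlan"
    }
  }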
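Since these edits are made by hand in a long manifest, a quick sanity check before handing the file to RKE can catch a missed one. The sketch below greps for the added strings; `check_flannel_manifest` is a helper name invented for this example, and it only checks that the strings are present, not that they sit under the right YAML keys.

```shell
#!/usr/bin/env bash
# Sketch: verify that kube-flannel.yml contains the manual edits.
# grep -F matches fixed strings, so $(PUBLIC_IP) is taken literally;
# -e keeps patterns that start with dashes from being parsed as options.

check_flannel_manifest() {
  local file="$1" missing=0
  grep -qF -e '--public-ip=$(PUBLIC_IP)' "$file" || { echo "missing --public-ip argument" >&2; missing=1; }
  grep -qF -e '--iface=' "$file"                 || { echo "missing --iface argument" >&2; missing=1; }
  grep -qF -e 'name: PUBLIC_IP' "$file"          || { echo "missing PUBLIC_IP env entry" >&2; missing=1; }
  grep -qF -e 'fieldPath: status.podIP' "$file"  || { echo "missing status.podIP fieldRef" >&2; missing=1; }
  return "$missing"
}
```

Usage: `check_flannel_manifest kube-flannel.yml && echo "manifest looks patched"`.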

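Create the cluster configuration file

RKE uses a cluster configuration file (cluster.yml by default; rancher-cluster.yml in this tutorial) to describe the cluster: which nodes it contains and how Kubernetes is deployed on them. Many cluster options can be changed through this file.

Switch to the rke user

[root@lx-test1 ~]# su - rke

[rke@lx-test1 ~]$ vim rancher-cluster.yml

- network: set to none so that no built-in network plugin is installed

- addons_include: points to the customized flannel manifest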

nodes:
  - address: 47.101.143.185
    # internal_address: 172.21.72.41
    user: rke
    role: [controlplane, worker, etcd]
    # hostname_override: lx-test1
  - address: 120.79.186.239
    # internal_address: 172.26.194.119
    user: rke
    role: [worker]
  - address: 8.134.129.47
    # internal_address: 172.17.215.97
    user: rke
    role: [worker]

services:
  etcd:
    # Enable automatic backups
    ## Applies to RKE >= 0.2.x or Rancher >= v2.2.0
    backup_config:
      enabled: true       # true enables automatic etcd backups, false disables them
      interval_hours: 12  # snapshot interval in hours (RKE defaults to 12 when omitted)
      retention: 6        # number of etcd backups to keep
    # Raise the space quota to $((6*1024*1024*1024)) bytes; the default is 2G and the maximum is 8G
    extra_args:
      quota-backend-bytes: "6442450944"
      auto-compaction-retention: 240  # in hours

# Required when TLS is terminated externally and ingress-nginx v0.22 or later is used.
ingress:
  provider: nginx
  options:
    use-forwarded-headers: "true"

private_registries:
  - url: registry.cn-hangzhou.aliyuncs.com
    is_default: true  # all system images are pulled from this registry
    # user: Username      # replace with the real username
    # password: password  # replace with the real password

network:
  plugin: none

addons_include:
  - ./kube-flannel.yml
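The `quota-backend-bytes` value in the configuration is simply 6 GiB written out in bytes. A small sketch of the arithmetic, in case a different quota is wanted (`gib_to_bytes` is a helper name made up for this example):

```shell
#!/usr/bin/env bash
# Sketch: convert a size in GiB to bytes with shell arithmetic.

gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 6   # prints 6442450944
```

The printed number goes into `quota-backend-bytes` as a quoted string.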

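Because the RKE configuration disables the built-in network plugin and uses an external flannel manifest, kubernetes-cni must be installed on the hosts: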

sudo yum install kubernetes-cni -y

etcd tuning (run only on nodes that host etcd)

[rke@lx-test1 ~]$ sudo chmod +x /etc/rc.d/rc.local

[rke@lx-test1 ~]$ sudo vim /etc/rc.local # append the following (runs at boot)

########################################
# etcd tuning
# Raise the CPU priority
renice -n -20 -p $(pgrep etcd)
# Raise the disk I/O priority
ionice -c2 -n0 -p $(pgrep etcd)
########################################

Start the RKE cluster

rke up --config ./rancher-cluster.yml

Install kubectl

sudo yum install kubectl -y

Check the RKE cluster status

[rke@lx-test1 ~]$ mkdir ~/.kube

[rke@lx-test1 ~]$ cp kube_config_rancher-cluster.yml .kube/config

[rke@lx-test1 ~]$ kubectl get node

NAME STATUS ROLES AGE VERSION

120.79.186.239 Ready worker 107m v1.20.15

47.101.143.185 Ready controlplane,etcd,worker 107m v1.20.15

8.134.129.47 Ready worker 107m v1.20.15

Check the pod status across the cluster

kubectl get pod -A -owide

Deployment test

Use nginx to test network communication between pods on different hosts, with both ping and curl.

vim my-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:latest
        name: nginx
        ports:
        - containerPort: 80
        resources: {}
status: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  ports:
  - name: "80"
    port: 80
    targetPort: 80
    nodePort: 31040
    protocol: TCP
  selector:
    app: nginx
  type: NodePort

kubectl apply -f my-deploy.yaml

Use ping and curl to verify that the service is reachable

Test through the Service (svc) and the NodePort
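The NodePort part of this test can be scripted. The sketch below builds one URL per node from the public IPs in the host table and the `nodePort: 31040` in my-deploy.yaml, then reports the HTTP status curl gets back. The function names are invented for this example, and it assumes the security group allows the machine running it to reach port 31040.

```shell
#!/usr/bin/env bash
# Sketch: probe the nginx NodePort on every node's public IP.

NODE_IPS="47.101.143.185 120.79.186.239 8.134.129.47"
NODE_PORT=31040

# Print one URL per node.
nodeport_urls() {
  local ip
  for ip in $NODE_IPS; do
    echo "http://${ip}:${NODE_PORT}/"
  done
}

# Request each URL and print the HTTP status code, or "unreachable".
check_nodeports() {
  local url code
  for url in $(nodeport_urls); do
    # -s: silent, -o /dev/null: discard the body, -m 5: 5 second timeout,
    # -w '%{http_code}': print only the status code.
    if code="$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")"; then
      echo "$url -> HTTP $code"
    else
      echo "$url -> unreachable"
    fi
  done
}
```

Running `check_nodeports` should report a status for all three nodes if cross-host pod networking works, since a NodePort request can be forwarded to a pod on another host.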


Option 2: Building with kubeadm

Install kubeadm

This deployment uses v1.23.0.

If kubectl is already installed, you do not need to pin its version.

yum install kubeadm-1.23.0 kubelet-1.23.0 kubectl-1.23.0 -y

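Modify the kubelet startup arguments

# This file exists once kubeadm is installed

vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: this step is important. Without it, the node still registers with the cluster using its internal IP

# Append the argument --node-ip=<public-IP> to the end of the last ExecStart line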

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --node-ip=<public-IP>
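The comments in the drop-in itself say that `KUBELET_EXTRA_ARGS` should be sourced from /etc/sysconfig/kubelet. A possible alternative to editing the ExecStart line is therefore to set `--node-ip` in that file instead. This is a sketch of that alternative, not the method used in this tutorial; `set_kubelet_node_ip` is a name invented here, and the function overwrites the file, so any extra arguments already in it would be lost.

```shell
#!/usr/bin/env bash
# Sketch: set_kubelet_node_ip PUBLIC_IP [ENV_FILE]
# Writes KUBELET_EXTRA_ARGS with --node-ip to the kubelet environment file.
# Overwrites the file: existing KUBELET_EXTRA_ARGS content is replaced.

set_kubelet_node_ip() {
  local node_ip="$1"
  local env_file="${2:-/etc/sysconfig/kubelet}"
  echo "KUBELET_EXTRA_ARGS=--node-ip=${node_ip}" > "$env_file"
}
```

Whichever way the flag is set, run `systemctl daemon-reload` and `systemctl restart kubelet` afterwards so that systemd picks up the change.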

Initialize the cluster with kubeadm init

sudo kubeadm init \
--apiserver-advertise-address=47.101.143.185 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.23.0 \
--control-plane-endpoint=47.101.143.185 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16 \
--v=5

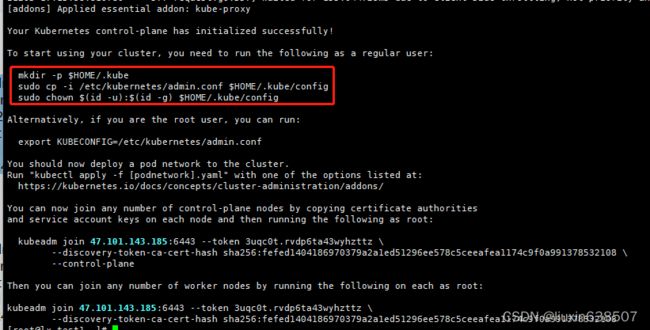

Run the following so that kubectl can manage the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Configure the kube-apiserver arguments:

If --advertise-address is already the public IP, it does not need to be changed.

# Two changes: add --bind-address and update --advertise-address

vim /etc/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 47.101.143.185:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=47.101.143.185   # change to the public IP
    - --bind-address=0.0.0.0               # add this argument
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

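Check the node status. Right after the manifest is saved, the kubelet restarts kube-apiserver, so the first attempt may be refused: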

[rke@lx-test1 ~]$ kubectl get node

The connection to the server 8.134.129.47:6443 was refused - did you specify the right host or port?

Wait a moment and run it again

[root@lx-test1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

lx-test1 NotReady control-plane,master 4m8s v1.23.0

Regenerate the token and print the command for joining the cluster

[root@lx-test1 ~]# kubeadm token create --print-join-command

kubeadm join 47.101.143.185:6443 --token kzopm0.5fwsue2zzpr0u0pq --discovery-token-ca-cert-hash sha256:38883c8698756b4822f1ecc8a7984c871dabd64405175b64fc0a7abc9e6ae99a

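Log in to the other cluster nodes and run the join command printed above, then check the nodes again from lx-test1. They stay NotReady until the network plugin is installed.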

[root@lx-test1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

lx-test1 NotReady control-plane,master 8m51s v1.23.0

lx-test2 NotReady <none> 100s v1.23.0

lx-test3 NotReady <none> 2s v1.23.0

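Download the flannel network plugin

The flannel plugin configuration is the key to pod-to-pod communication.

If the pod CIDR passed to kubeadm init differs from flannel's default (10.244.0.0/16), also change the Network parameter in net-conf.json.

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

vim kube-flannel.yml

Because --iface=<NIC name> is specified, the NIC name (the actual internal-network NIC) must be the same on every host in the cluster.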

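# Two places need to change

# 1. Under args, add:
args:
- --public-ip=$(PUBLIC_IP)   # add this argument; the value comes from status.podIP, i.e. the public IP
- --iface=eth0               # add this argument; binds the NIC (the actual internal-network NIC name)

# 2. Under env, add:
env:
- name: PUBLIC_IP            # add this environment variable
  valueFrom:
    fieldRef:
      fieldPath: status.podIP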
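Before applying the manifest, it is worth confirming that the `Network` value in its net-conf.json section matches the `--pod-network-cidr` given to kubeadm init (10.244.0.0/16 in this tutorial). The sketch below extracts the value with sed and compares it; `flannel_network` and `check_pod_cidr` are names invented for this example, and the sed pattern assumes the `"Network": "..."` pair sits on a single line, as it does in the snippet shown in this tutorial.

```shell
#!/usr/bin/env bash
# Sketch: compare flannel's "Network" setting with the kubeadm pod CIDR.

# Print the value of the first "Network" key found in the file.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_pod_cidr MANIFEST POD_CIDR: succeed only if the two values are equal.
check_pod_cidr() {
  local file="$1" pod_cidr="$2" network
  network="$(flannel_network "$file")"
  if [ "$network" = "$pod_cidr" ]; then
    echo "ok: flannel Network matches pod CIDR ${pod_cidr}"
  else
    echo "mismatch: flannel Network is '${network}', pod CIDR is '${pod_cidr}'" >&2
    return 1
  fi
}
```

Usage: `check_pod_cidr kube-flannel.yml 10.244.0.0/16`.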

[root@lx-test1 ~]# kubectl apply -f kube-flannel.yml

[root@lx-test1 ~]# kubectl apply -f /home/rke/my-deploy.yaml