Flink: Reading a Data Stream from Kafka and Writing It to MySQL

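Hands-on Example

In this post we build an end-to-end example: Flink reads a data stream from a standalone Kafka deployment, processes it, and writes it to MySQL through a custom sink function.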


Configuration

References

FLINK -1 WordCount

FLINK -2 Reading from Kafka

FLINK -3 Writing to MySQL

Kafka

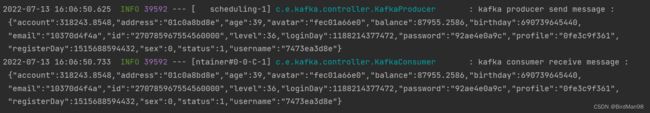

Deploy a Kafka service and continuously send data to the topic named kafka.

Reference

Integrating Kafka with SpringBoot
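The post does not show the format of the messages the producer sends. As a hedged illustration, the sketch below builds one JSON message whose keys match the fields of the User entity used on the Flink side. The class and method names are hypothetical, and the yyyy-MM-dd date format is an assumption that has to match whatever the project's JSONUtil helper accepts.

```java
import java.time.LocalDate;
import java.util.Locale;

public class SampleUserMessage {

    // Hypothetical helper: one JSON message whose keys mirror the User entity's fields.
    // Assumption: dates are sent as ISO strings (yyyy-MM-dd), which LocalDate.toString() produces.
    public static String sampleUserJson(int n, LocalDate today) {
        return String.format(Locale.ROOT,
                "{\"id\":\"%d\",\"username\":\"user%d\",\"password\":\"secret\","
                        + "\"address\":\"address %d\",\"email\":\"user%d@example.com\","
                        + "\"profile\":\"profile\",\"birthday\":\"%s\",\"registerDay\":\"%s\","
                        + "\"loginDay\":\"%s\",\"status\":1,\"account\":100.00,\"balance\":50.00,"
                        + "\"age\":%d,\"sex\":%d,\"avatar\":\"avatar.png\",\"level\":1}",
                n, n, n, n,
                today.minusYears(20),  // birthday
                today.minusDays(30),   // registerDay
                today,                 // loginDay
                20 + n % 10,           // age
                n % 2);                // sex
    }

    public static void main(String[] args) {
        // A producer would send one such string per message to the topic "kafka"
        for (int n = 1; n <= 3; n++) {
            System.out.println(sampleUserJson(n, LocalDate.now()));
        }
    }
}
```

Keeping the keys identical to the entity's property names (registerDay, not register_day) is what lets a bean-mapping JSON library fill the User object without extra annotations.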

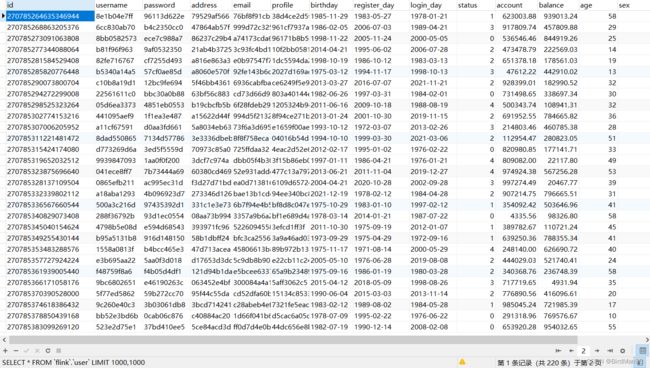

Result

Create the table

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for user
-- ----------------------------
DROP TABLE IF EXISTS `user`;
CREATE TABLE `user` (
  `id` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `username` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `password` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `address` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `email` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `profile` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `birthday` date NOT NULL,
  `register_day` date NOT NULL,
  `login_day` date NOT NULL,
  `status` int NOT NULL,
  `account` decimal(10, 2) NOT NULL,
  `balance` decimal(10, 2) NOT NULL,
  `age` int NOT NULL,
  `sex` int NOT NULL,
  `avatar` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_bin NOT NULL,
  `level` int NOT NULL,
  -- Primary key on id: the sink's REPLACE INTO only overwrites rows that collide on a key,
  -- without it every message for the same id would add a duplicate row
  PRIMARY KEY (`id`)
) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_bin ROW_FORMAT = Dynamic;

SET FOREIGN_KEY_CHECKS = 1;

Flink

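Approach: the data continuously arriving from Kafka consists of JSON strings representing User entities. Each JSON string has to be parsed into an object, which is then saved to MySQL through a custom sink. So we need a Kafka deserialization schema, a utility class that converts JSON into an entity, and a custom sink class. Let's look at them one by one.

User entity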

package org.example.flink.user;

import lombok.Data;

import java.math.BigDecimal;
import java.util.Date;

// Lombok's @Data generates getters, setters, equals/hashCode and toString
@Data
public class User {
    private String id;
    private String username;
    private String password;
    private String address;
    private String email;
    private String profile;
    private Date birthday;
    private Date registerDay;
    private Date loginDay;
    private Integer status;
    private BigDecimal account;
    private BigDecimal balance;
    private Integer age;
    private Integer sex;
    private String avatar;
    private Integer level;
}

UserInfoSchema

Used by FlinkKafkaConsumer to deserialize each fetched record into a User object.
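Before JSON parsing, the only thing the schema does is turn the record's value bytes into a string. A minimal stdlib sketch of that step, using a hypothetical decodeValue method: it decodes explicitly as UTF-8 and maps a null value (for example a Kafka tombstone record) to null. Flink's Kafka consumer treats a null result from the deserialization schema as "skip this record" rather than failing the job.

```java
import java.nio.charset.StandardCharsets;

public class RecordValueDecoding {

    // Hypothetical helper mirroring the first step of UserInfoSchema.deserialize:
    // null value -> null (record is skipped), otherwise decode the bytes as UTF-8
    public static String decodeValue(byte[] value) {
        if (value == null) {
            return null;
        }
        return new String(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Non-ASCII characters survive the round trip only if both sides agree on UTF-8
        String json = "{\"username\":\"\u5f20\u4e09\"}";
        byte[] raw = json.getBytes(StandardCharsets.UTF_8);
        System.out.println(json.equals(decodeValue(raw))); // true
        System.out.println(decodeValue(null));             // null
    }
}
```

Passing the Charset object directly (instead of a charset name) also avoids the checked UnsupportedEncodingException path.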

package org.example.flink.user;

import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.example.flink.util.JSONUtil;

import java.nio.charset.StandardCharsets;

public class UserInfoSchema implements KafkaDeserializationSchema<User> {

    // Parse one JSON message into a User via the project's JSONUtil helper
    private static User buildMsg(String jsonString) {
        return JSONUtil.toBean(jsonString, User.class);
    }

    @Override
    public boolean isEndOfStream(User user) {
        // The Kafka stream is unbounded, so it never ends
        return false;
    }

    @Override
    public User deserialize(ConsumerRecord<byte[], byte[]> consumerRecord) throws Exception {
        byte[] bytes = consumerRecord.value();
        if (bytes == null) {
            // Records without a value (e.g. tombstones): returning null makes the consumer skip them
            return null;
        }
        return buildMsg(new String(bytes, StandardCharsets.UTF_8));
    }

    @Override
    public TypeInformation<User> getProducedType() {
        return TypeInformation.of(User.class);
    }
}

Custom sink

It writes the data to MySQL using plain JDBC.
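The sink binds its parameters by position, so the column list in the SQL and the 16 setXxx calls must stay in the same order. One way to keep the statement text and its placeholder count in sync is to generate the SQL from a single column list. The sketch below (hypothetical class ReplaceSqlBuilder) reproduces exactly the statement string used in UserSink.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class ReplaceSqlBuilder {

    // Column order must match the parameter indexes 1..16 used in UserSink.invoke
    public static final List<String> COLUMNS = Arrays.asList(
            "id", "username", "password", "address", "email", "profile",
            "birthday", "register_day", "login_day", "status", "account",
            "balance", "age", "sex", "avatar", "`level`");

    // Builds "replace into <table> (c1,...,cN) values (?,...,?)" with one placeholder per column
    public static String buildReplaceSql(String table, List<String> columns) {
        String cols = String.join(",", columns);
        String placeholders = String.join(",", Collections.nCopies(columns.size(), "?"));
        return "replace into " + table + " (" + cols + ") values (" + placeholders + ")";
    }

    public static void main(String[] args) {
        System.out.println(buildReplaceSql("user", COLUMNS));
    }
}
```

The generated string could be passed to connection.prepareStatement in open(); adding a column then means touching one list instead of two hand-maintained strings.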

package org.example.flink.user;

import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;
import java.sql.Date;
import java.sql.DriverManager;
import java.sql.PreparedStatement;

public class UserSink extends RichSinkFunction<User> {

    // JDBC resources are not serializable: they are created in open() on each parallel instance
    private transient Connection connection;
    private transient PreparedStatement statement;

    @Override
    public void open(Configuration parameters) throws Exception {
        String url = "jdbc:mysql://localhost:3306/flink?autoReconnect=true&useUnicode=true&characterEncoding=utf8&serverTimezone=GMT%2B8&useSSL=false";
        connection = DriverManager.getConnection(url, "root", "root");
        // REPLACE INTO overwrites a row that collides on the primary key (id), otherwise it inserts
        statement = connection.prepareStatement("replace into user (id,username,password,address,email,profile,birthday,register_day,login_day,status,account,balance,age,sex,avatar,`level`) values (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)");
    }

    @Override
    public void invoke(User user, Context context) throws Exception {
        // Bind every field, then execute the statement immediately (one round trip per record)
        statement.setString(1, user.getId());
        statement.setString(2, user.getUsername());
        statement.setString(3, user.getPassword());
        statement.setString(4, user.getAddress());
        statement.setString(5, user.getEmail());
        statement.setString(6, user.getProfile());
        // java.util.Date -> java.sql.Date for the DATE columns
        statement.setDate(7, new Date(user.getBirthday().getTime()));
        statement.setDate(8, new Date(user.getRegisterDay().getTime()));
        statement.setDate(9, new Date(user.getLoginDay().getTime()));
        statement.setInt(10, user.getStatus());
        statement.setBigDecimal(11, user.getAccount());
        statement.setBigDecimal(12, user.getBalance());
        statement.setInt(13, user.getAge());
        statement.setInt(14, user.getSex());
        statement.setString(15, user.getAvatar());
        statement.setInt(16, user.getLevel());
        statement.execute();
    }

    @Override
    public void close() throws Exception {
        // close() can also run after a failed open(), so guard against null resources
        if (statement != null) {
            statement.close();
        }
        if (connection != null) {
            connection.close();
        }
    }
}
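The sink relies on MySQL's REPLACE INTO, which only overwrites an existing row when the new row collides with it on a PRIMARY KEY or UNIQUE index (MySQL deletes the old row, then inserts the new one); without such an index it behaves like a plain INSERT. The sketch below is only an in-memory model of those two cases, not JDBC code: a map keyed by id stands in for a table with a primary key, a list for a table without one. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ReplaceSemantics {

    // Table WITH a primary key on id: a conflicting row is deleted, then the new row is inserted
    public static Map<String, String> replaceWithPrimaryKey(List<String[]> rows) {
        Map<String, String> table = new LinkedHashMap<>();
        for (String[] row : rows) {
            table.remove(row[0]);       // delete the existing row with the same id, if any
            table.put(row[0], row[1]);  // insert the new row
        }
        return table;
    }

    // Table WITHOUT any key: nothing can conflict, so every REPLACE is a plain insert
    public static List<String[]> replaceWithoutKey(List<String[]> rows) {
        List<String[]> table = new ArrayList<>();
        for (String[] row : rows) {
            table.add(row);
        }
        return table;
    }

    public static void main(String[] args) {
        // Two messages for the same user id, e.g. after the user logs in again
        List<String[]> messages = Arrays.asList(
                new String[]{"1", "login_day=2024-01-01"},
                new String[]{"1", "login_day=2024-01-02"});
        System.out.println(replaceWithPrimaryKey(messages).size()); // 1
        System.out.println(replaceWithoutKey(messages).size());     // 2
    }
}
```

This is why the id column needs a PRIMARY KEY (or UNIQUE index) for the sink to behave as an upsert.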

UserRunner

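This class contains the main method. When run, it uses the classes declared above to read the data, process it, and write it out.

Note: the Flink job is a Maven project.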

package org.example.flink.user;

import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Properties;

public class UserRunner {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // Kafka configuration
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", "121.5.160.142:9092");
        properties.setProperty("group.id", "consumer-group");
        // FlinkKafkaConsumer replaces these two with ByteArrayDeserializer and passes the raw bytes
        // to UserInfoSchema, so they have no effect here
        properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // When the group has no committed offset, start from the newest messages
        properties.setProperty("auto.offset.reset", "latest");

        // Read the stream from topic "kafka", deserializing each record with UserInfoSchema
        DataStreamSource<User> stream = env.addSource(new FlinkKafkaConsumer<User>(
                "kafka",
                new UserInfoSchema(),
                properties
        )).setParallelism(1);

        // Print each record to stdout, prefixed with "kafka"
        stream.print("kafka").setParallelism(1);

        // Write to MySQL
        stream.addSink(new UserSink()).setParallelism(1);

        env.execute();
    }
}
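UserRunner hardcodes the broker address and group id. As a hedged variant, the hypothetical helper below builds the same consumer Properties from parameters, so they could come from program arguments, and leaves out key.deserializer and value.deserializer because FlinkKafkaConsumer overrides both anyway. The defaults mirror the values in UserRunner; reading them from args is an assumption, not part of the original job.

```java
import java.util.Properties;

public class KafkaConsumerConfig {

    // Hypothetical helper: the consumer settings UserRunner needs, minus the deserializer
    // entries that FlinkKafkaConsumer replaces with ByteArrayDeserializer
    public static Properties consumerProperties(String bootstrapServers, String groupId) {
        Properties properties = new Properties();
        properties.setProperty("bootstrap.servers", bootstrapServers);
        properties.setProperty("group.id", groupId);
        // Applies when the group has no valid committed offset yet
        properties.setProperty("auto.offset.reset", "latest");
        return properties;
    }

    public static void main(String[] args) {
        // Assumption: args[0] = bootstrap servers, args[1] = group id; fall back to UserRunner's values
        String servers = args.length > 0 ? args[0] : "121.5.160.142:9092";
        String group = args.length > 1 ? args[1] : "consumer-group";
        System.out.println(consumerProperties(servers, group));
    }
}
```

In UserRunner, the returned object would be passed as the third argument of new FlinkKafkaConsumer<User>("kafka", new UserInfoSchema(), properties).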

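Summary

Data is written into the MySQL database continuously, which completes an end-to-end stream processing pipeline. The same job could just as well write to any other storage system that Flink supports as a sink.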