KVM Installation and VNC Connection

KVM Installation

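1: Introduction

KVM has been built directly into the Linux kernel since version 2.6.20. It relies on the CPU's hardware virtualization extensions (Intel VT-x / AMD-V) to deliver high-performance virtualization. Because it is tightly integrated with the kernel, it does well on performance, security, compatibility and stability.

Every guest operating system running under KVM appears on the host as a single, ordinary process. This makes it easy to combine KVM with Linux security modules such as SELinux, and to manage and allocate hardware resources flexibly. The KVM architecture is shown below: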

Architecture diagram

1.1 Environment

This post uses the CentOS 7 1908 ISO image.

1.2 Check the system version

[root@KVM ~]# cat /etc/centos-release

CentOS Linux release 7.5.1804 (Core)

1.3 Check whether the CPU supports virtualization

[root@KVM ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

4

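If the result is 0, the CPU does not support hardware virtualization. A result of 1 or more means it does: the command counts one line per logical CPU that carries the flag.

If virtualization is not supported, check that Intel VT-x or AMD-V is enabled for the virtual machine, or in the BIOS on a physical machine.

Note: vmx is the Intel flag and svm is the AMD flag.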
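The check above can be wrapped in a small function that also reports which vendor flag is present. This is a minimal sketch. The function name `check_virt_support` is hypothetical. The cpuinfo path is a parameter, defaulting to /proc/cpuinfo, only so that the function can be tried against sample data.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report hardware virtualization support from a
# cpuinfo-style file (vmx = Intel VT-x, svm = AMD-V).
check_virt_support() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  local count
  if [ ! -r "$cpuinfo" ]; then
    echo "unreadable"
    return 2
  fi
  # Count lines carrying either flag as a whole word (one line per logical CPU).
  count=$(grep -c -w -E 'vmx|svm' "$cpuinfo")
  if [ "$count" -eq 0 ]; then
    echo "none"
    return 1
  fi
  if grep -q -w 'vmx' "$cpuinfo"; then
    echo "intel"
  else
    echo "amd"
  fi
}

# Usage on a real host:
#   check_virt_support      # prints intel, amd or none
```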

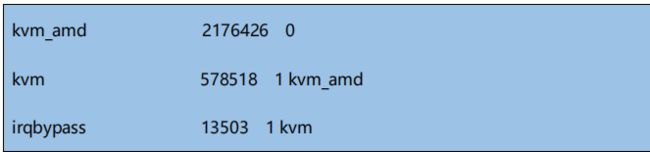

1.4 Check whether the KVM module is loaded

[root@KVM ~]# lsmod | grep kvm

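[root@KVM ~]# modprobe kvm_intel #if lsmod shows nothing, load the module; use kvm_amd on AMD CPUs (either one also loads kvm)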

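1.5 Disable the firewall and SELinux

Stop the firewall and keep it from starting at boot

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux temporarily (until the next reboot)

[root@KVM ~]# setenforce 0

[root@KVM ~]# getenforce #check the current mode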

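Use sed to edit /etc/selinux/config so that the change survives a reboot. Only the active SELINUX= line is changed; comment lines that mention "enforcing" are left alone.

[root@KVM ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@KVM ~]# grep '^SELINUX=' /etc/selinux/config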

SELINUX=disabled
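The same edit can be written as a reusable function. This is a minimal sketch, and the name `disable_selinux_config` is hypothetical. It rewrites only the active `SELINUX=` line. It is given the real file path because GNU `sed -i` replaces a symlink such as /etc/sysconfig/selinux with a regular file instead of editing the symlink's target.

```shell
#!/usr/bin/env bash
# Hypothetical helper: permanently disable SELinux in a config file.
# Only the active "SELINUX=" line is rewritten; comment lines that mention
# "enforcing" and the SELINUXTYPE= line are left untouched.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg" || return 1
  # Print the resulting setting so the caller can verify it.
  grep '^SELINUX=' "$cfg"
}

# Usage on a real host (as root), followed by a reboot:
#   disable_selinux_config /etc/selinux/config
```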

Reboot

reboot

Check the firewall status

systemctl status firewalld

1.6 Network configuration

Edit the existing NIC configuration file

/etc/sysconfig/network-scripts/ifcfg-ens33

Change it as follows

TYPE=Ethernet

BOOTPROTO=none #change this to none

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

DEVICE=ens33

ONBOOT=yes

BRIDGE=br0 #add this line; if there is a UUID= entry, removing it is recommended

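Copy the original file to create the bridge configuration ifcfg-br0

cp /etc/sysconfig/network-scripts/ifcfg-ens33 /etc/sysconfig/network-scripts/ifcfg-br0

vi /etc/sysconfig/network-scripts/ifcfg-br0

Change the following, and delete the BRIDGE=br0 line that was copied from ens33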

TYPE=Bridge #change TYPE to Bridge

BOOTPROTO=static #static or dhcp, depending on your environment

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=br0 #rename

DEVICE=br0 #rename

ONBOOT=yes

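IPADDR=192.168.128.10 #this becomes the host's IP address

NETMASK=255.255.255.0 #assumed /24, matching the netmask shown by ifconfig below

GATEWAY=192.168.128.2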
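The two files can also be generated in one step. This is a minimal sketch that assumes the names and addresses used above (ens33, br0, 192.168.128.10, gateway 192.168.128.2) and a /24 netmask. It writes only the essential keys and omits the IPv6 lines. The function name `write_bridge_ifcfg` is hypothetical. Pointing the function at a scratch directory first lets you review the result before copying it into /etc/sysconfig/network-scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal ifcfg pair that enslaves a NIC to a
# bridge and moves the host IP onto the bridge.
write_bridge_ifcfg() {
  local dir="${1:-/etc/sysconfig/network-scripts}"
  local nic="${2:-ens33}" bridge="${3:-br0}"
  local ip="${4:-192.168.128.10}" gw="${5:-192.168.128.2}"

  # Physical NIC: no IP address of its own, attached to the bridge.
  cat > "$dir/ifcfg-$nic" <<EOF
TYPE=Ethernet
BOOTPROTO=none
NAME=$nic
DEVICE=$nic
ONBOOT=yes
BRIDGE=$bridge
EOF

  # Bridge: carries the host's IP address (netmask assumed to be /24).
  cat > "$dir/ifcfg-$bridge" <<EOF
TYPE=Bridge
BOOTPROTO=static
NAME=$bridge
DEVICE=$bridge
ONBOOT=yes
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=$gw
EOF
}

# Usage: review in a scratch directory first
#   d=$(mktemp -d); write_bridge_ifcfg "$d"; cat "$d"/ifcfg-*
```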

Restart the network service

systemctl restart network

Check that the configuration took effect

ifconfig

br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.128.140 netmask 255.255.255.0 broadcast 192.168.128.255

inet6 fe80::3b0c:d931:679d:ce5a prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:f9:bb:db txqueuelen 1000 (Ethernet)

RX packets 85 bytes 9604 (9.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 77 bytes 13555 (13.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

ether 00:0c:29:f9:bb:db txqueuelen 1000 (Ethernet)

RX packets 85 bytes 10794 (10.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 77 bytes 13615 (13.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

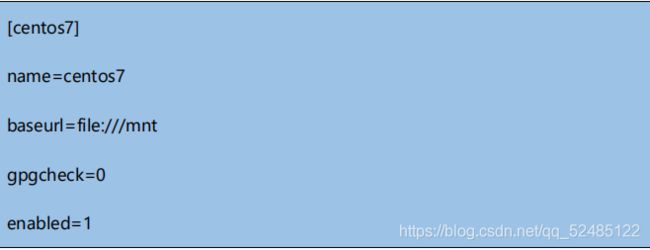

1.7 Configure a local YUM repository

[root@KVM ~]# rm -rf /etc/yum.repos.d/*

[root@KVM ~]# mount /dev/sr0 /mnt

[root@KVM ~]# vi /etc/yum.repos.d/centos7.repo

[root@KVM ~]# yum clean all && yum makecache
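The contents of centos7.repo are not shown above. The following is a minimal sketch of a repository file for a DVD mounted on /mnt. The section id `centos7`, the `name=` text and the helper name `write_local_repo` are assumptions. `gpgcheck=0` is used only for simplicity.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a yum repository file that points at a mounted DVD.
write_local_repo() {
  local repo="${1:-/etc/yum.repos.d/centos7.repo}"
  local mnt="${2:-/mnt}"
  cat > "$repo" <<EOF
[centos7]
name=CentOS 7 local DVD
baseurl=file://$mnt
enabled=1
gpgcheck=0
EOF
}

# Usage on a real host (as root), then: yum clean all && yum makecache
#   write_local_repo /etc/yum.repos.d/centos7.repo /mnt
```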

df -h #confirm that /dev/sr0 is mounted on /mnt

yum install vim -y #quick test of the repository

2: Install KVM

2.1 Install the KVM packages (configure the YUM repository before running this)

#With the YUM repository in place, start the installation

yum install -y qemu-kvm qemu-img virt-manager libvirt libvirt-python libvirt-client virt-install virt-viewer

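#qemu-kvm: the QEMU/KVM emulator (user-space part of KVM)

#libvirt: virtualization management daemon and library

#virt-manager: graphical virtual machine manager

#virt-install: command-line virtual machine installation tool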

2.2 Check the virtualization environment (start libvirtd and enable it at boot)

#Start libvirtd and enable it at boot

[root@kvm ~]# systemctl restart libvirtd

[root@kvm ~]# systemctl enable libvirtd

#Then list the virtual machines with virsh

[root@kvm ~]# virsh -c qemu:///system list

Id Name State

----------------------------------------------------

#Check that the KVM kernel modules are loaded

[root@kvm ~]# lsmod |grep kvm

kvm_intel 162153 0

kvm 5

#If no KVM module is listed, go back over the installation steps.
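To make this check scriptable, here is a minimal sketch that reads `lsmod` output on standard input and lists the KVM modules it finds. A working host should show `kvm` plus one vendor module, either `kvm_intel` or `kvm_amd`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list loaded KVM modules from `lsmod` output on stdin.
kvm_modules_loaded() {
  # Column 1 of lsmod is the module name; keep only the KVM ones and
  # join them on one line, separated by spaces.
  awk '$1 == "kvm" || $1 == "kvm_intel" || $1 == "kvm_amd" { print $1 }' |
    sort | paste -sd ' ' -
}

# Usage on a real host:
#   lsmod | kvm_modules_loaded
```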

#Then create a symlink (on CentOS 7 the qemu-kvm binary lives in /usr/libexec, which is not in PATH)

[root@kvm ~]# ln -s /usr/libexec/qemu-kvm /usr/bin/qemu-kvm

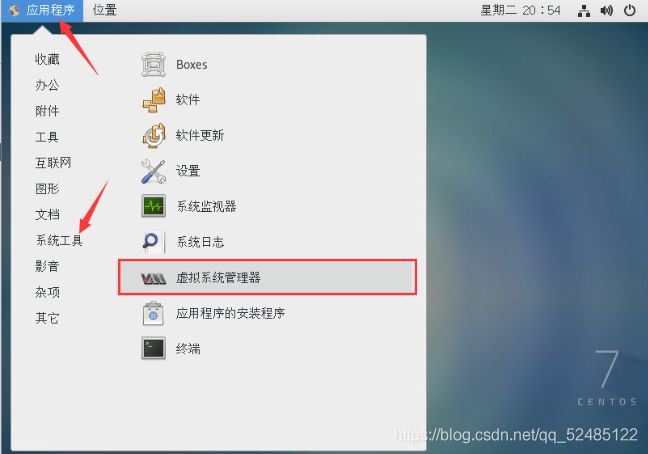

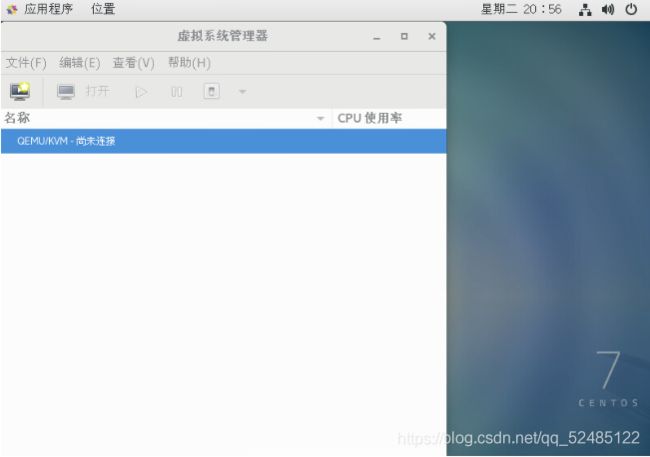

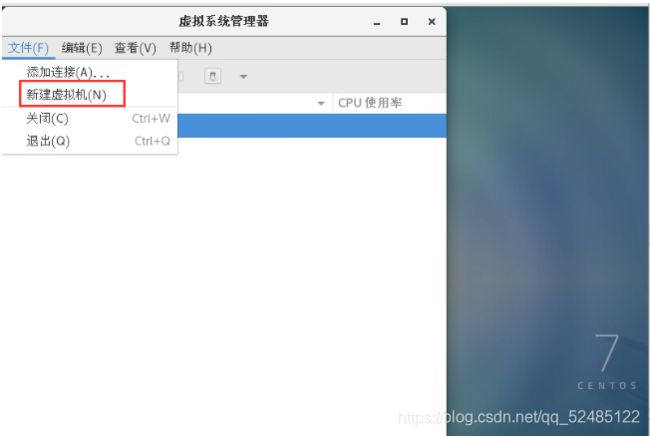

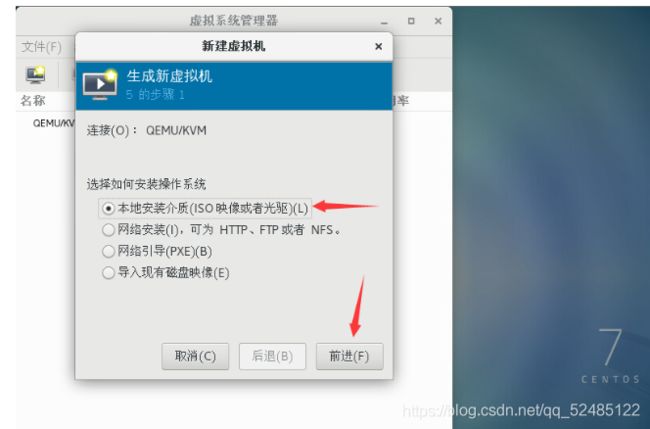

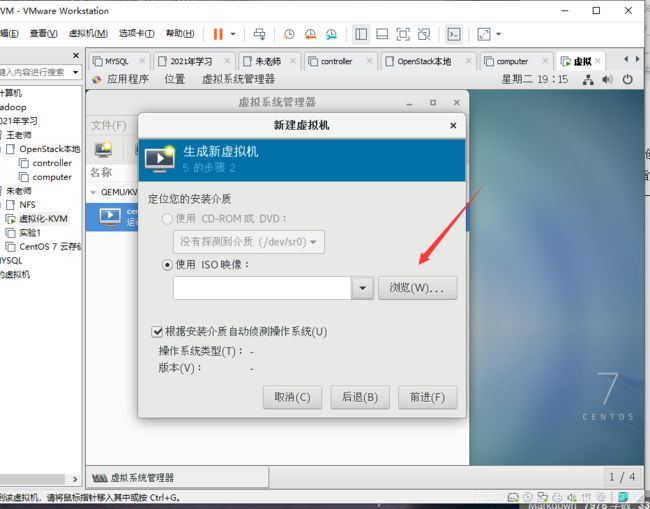

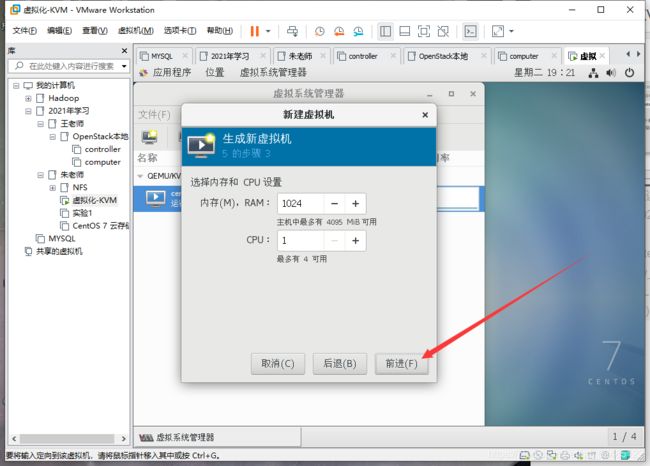

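3: KVM graphical management tools

3.1 virt-manager

Virtual Machine Manager (virt-manager) is a lightweight application suite that provides command-line and graphical (GUI) tools for managing virtual machines. Besides VM management, virt-manager includes an embedded VNC client viewer that gives each guest virtual machine a full graphical console.

virt-manager can therefore be used either from the command line or through the GUI.

If the Linux host has no desktop environment, install one with: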

yum -y groupinstall "GNOME Desktop"

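#After the desktop is installed, reboot the system

reboot

#Then switch to the graphical interface

init 5 #or run startx from a text console

#If the screen stays black after switching, also run

yum groupinstall "X Window System" -y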

四:创建 KVM 存储池

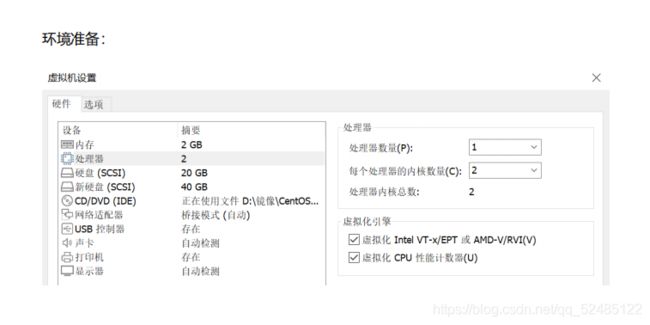

4.1 添加新的硬盘

4.2 按照提示添加硬盘,添加完成 (可添加多块)

[root@kvm opt]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sr0 11:0 1 4.5G 0 rom /run/media/root/CentOS 7 x86_64

[root@kvm opt]# ls /sys/class/scsi_host/

host0 host1 host2

[root@kvm opt]# echo '- - -' > /sys/class/scsi_host/host0/scan

[root@kvm opt]# echo '- - -' > /sys/class/scsi_host/host1/scan

[root@kvm opt]# echo '- - -' > /sys/class/scsi_host/host2/scan

[root@kvm opt]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 40G 0 disk

sr0 11:0 1 4.5G 0 rom /mnt

[root@kvm opt]#

After the SCSI hosts are rescanned, the newly added 40G disk (sdb) is visible to Linux without a reboot.
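Instead of typing one echo per host, the rescan can loop over every SCSI host. This is a minimal sketch, and the function name is hypothetical. The sysfs base directory is a parameter only so that the loop can be exercised against a scratch directory. The string `- - -` requests a scan of all channels, targets and LUNs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: trigger a rescan on every SCSI host under a sysfs base.
rescan_scsi_hosts() {
  local base="${1:-/sys/class/scsi_host}"
  local scan
  for scan in "$base"/host*/scan; do
    [ -e "$scan" ] || continue   # glob matched nothing
    echo '- - -' > "$scan"
  done
}

# Usage on a real host (as root), then check with lsblk:
#   rescan_scsi_hosts
```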

#Then create a filesystem on the disk

[root@localhost opt]# mkfs.xfs /dev/sdb

meta-data=/dev/sdb isize=512 agcount=4, agsize=2621440 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10485760, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

5: Mount

5.1 Temporary mount (the disk must already be formatted)

Once the disk is formatted, it can be mounted and used

[root@kvm opt]# mkdir /kvm

[root@kvm opt]# mount /dev/sdb /kvm/

[root@kvm opt]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs 1.9G 13M 1.9G 1% /run

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/mapper/centos-root 17G 8.6G 8.5G 51% /

/dev/sda1 1014M 209M 806M 21% /boot

tmpfs 378M 64K 378M 1% /run/user/0

/dev/sr0 4.5G 4.5G 0 100% /run/media/root/CentOS 7 x86_64

/dev/sdb 40G 33M 40G 1% /kvm

[root@localhost opt]#

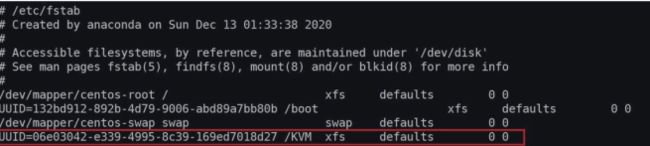

5.2 Permanent mount (the disk must already be formatted)

Check the disk UUID

[root@KVM ~]# blkid|grep sdb

/dev/sdb: UUID="06e03042-e339-4995-8c39-169ed7018d27" TYPE="xfs"

Mount the disk

[root@KVM ~]# mkdir /KVM

[root@KVM ~]# vi /etc/fstab #add an entry for the disk to /etc/fstab

[root@KVM ~]# mount -a

Check the mount

[root@KVM ~]# df -Th|grep KVM

/dev/sdb xfs 40G 33M 40G 1% /KVM
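The fstab line itself is not shown above. This minimal sketch builds it from one line of `blkid` output. It assumes mounting by UUID on /KVM with default options and `0 0` for dump and fsck pass, which is the usual choice for xfs. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn one line of `blkid` output into an /etc/fstab entry.
fstab_line_from_blkid() {
  local mountpoint="$1" line uuid fstype
  IFS= read -r line
  # The leading space keeps keys such as PARTUUID= or PTTYPE= from matching.
  uuid=$(printf '%s\n' "$line" | grep -o ' UUID="[^"]*"' | cut -d'"' -f2)
  fstype=$(printf '%s\n' "$line" | grep -o ' TYPE="[^"]*"' | cut -d'"' -f2)
  printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mountpoint" "$fstype"
}

# Usage on a real host (as root), then run `mount -a` as above:
#   blkid /dev/sdb | fstab_line_from_blkid /KVM >> /etc/fstab
```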

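6: Launch virt-manager

[root@KVM ~]# virt-manager

virt-manager and the Linux desktop are installed, and virt-manager starts normally.

You can also create a directory on the host, upload ISO images into it, and point the KVM virtual machines at those images.

Subdirectories inside it can also hold the KVM virtual machine data:

mkdir /kvm-data

cd /kvm-data

mkdir -p images ios

Upload the ISO images to /kvm-data/ios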
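The storage pools can also be created from the command line instead of through the virt-manager dialog shown next. This is a minimal sketch that assumes the /kvm-data directories created above. The pool names and the helper name `define_dir_pool` are hypothetical. Setting `VIRSH=echo` turns it into a dry run that only prints the `virsh` commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: register a directory as a persistent libvirt "dir"
# storage pool, start it, and mark it to start with libvirtd.
# Set VIRSH=echo for a dry run.
define_dir_pool() {
  local name="$1" path="$2" virsh="${VIRSH:-virsh}"
  $virsh pool-define-as "$name" dir --target "$path" &&
    $virsh pool-start "$name" &&
    $virsh pool-autostart "$name"
}

# Usage on a real host (as root):
#   define_dir_pool images /kvm-data/images
#   define_dir_pool ios    /kvm-data/ios
#   virsh pool-list --all
```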

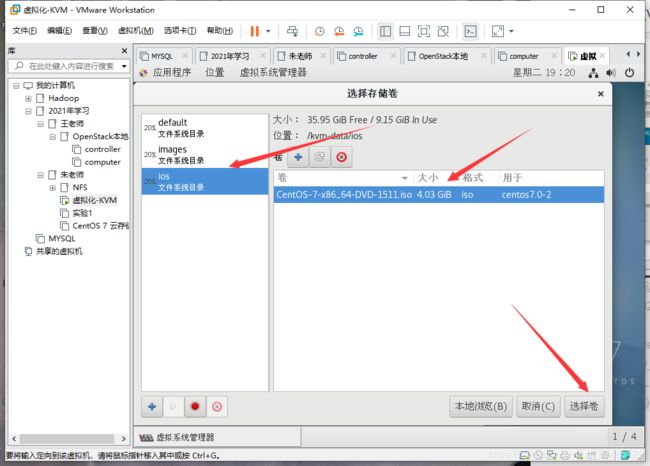

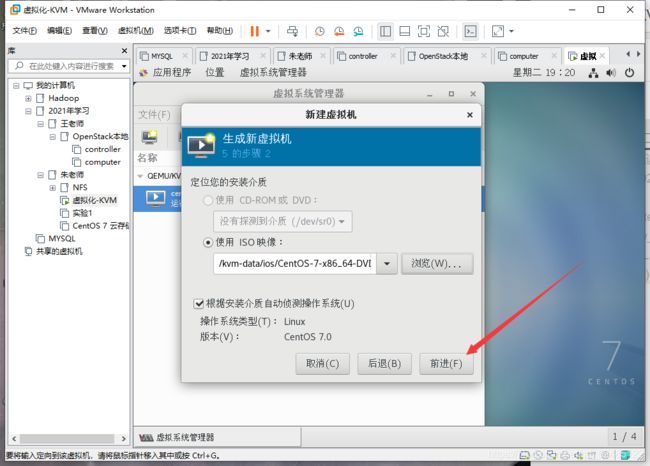

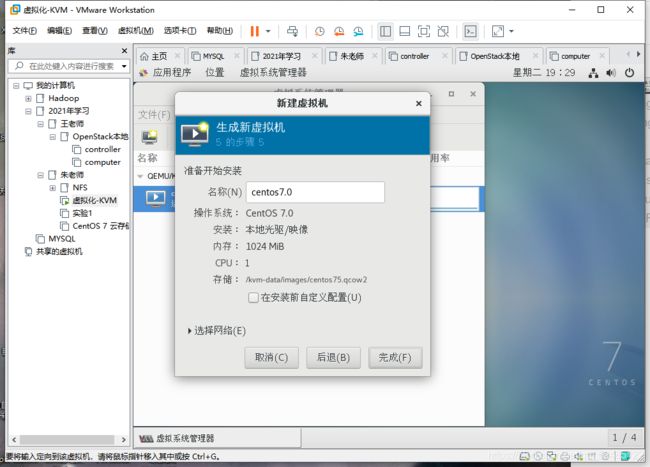

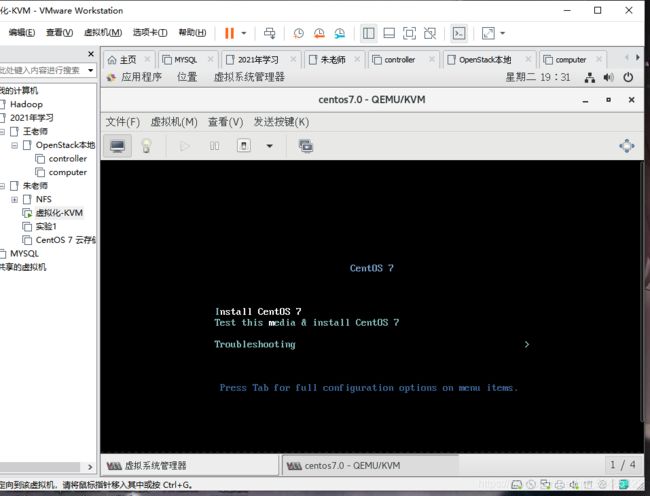

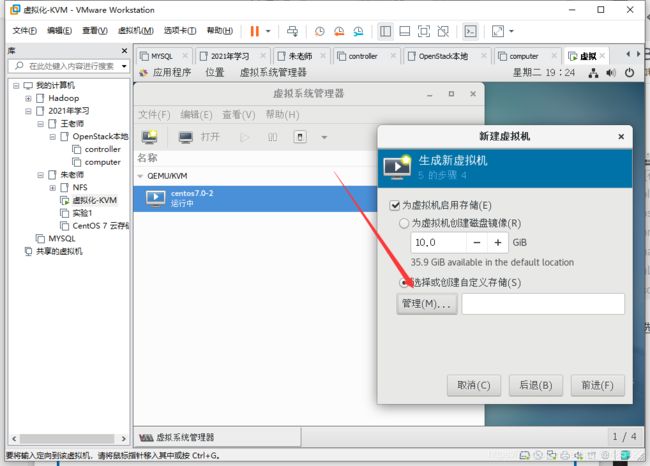

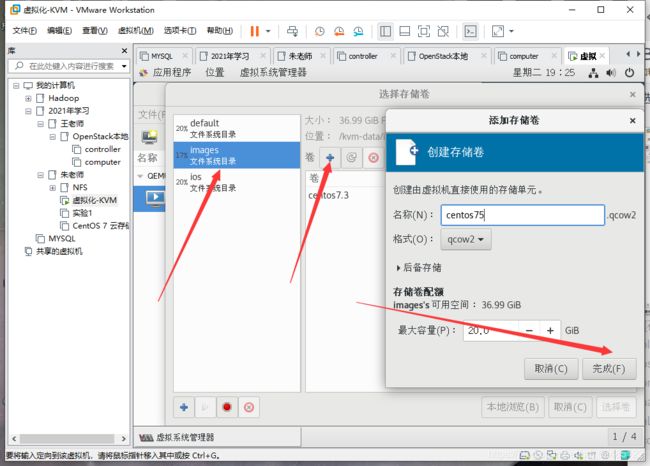

选择自定义存储,然后点击管理。

点击images目录 点击+好 创建存储卷 点击完成

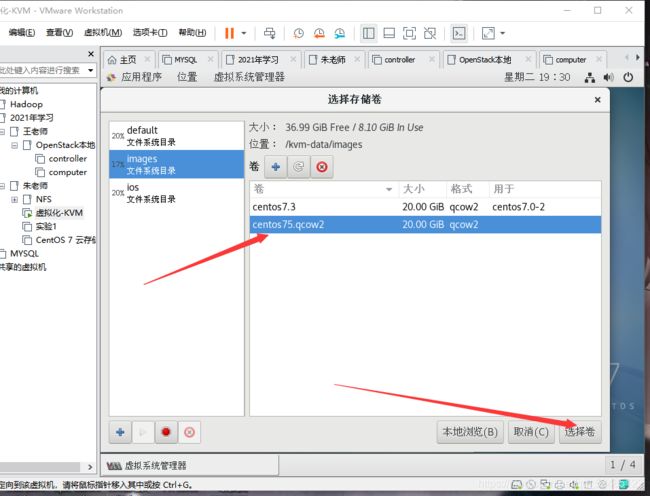

选择卷

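7: VNC remote desktop connection (VNC/SPICE)

7.1 Check whether the VNC packages are installed

rpm -qa tigervnc tigervnc-server

If they are installed, start the service and enable it at boot; otherwise install them first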

yum install tigervnc tigervnc-server

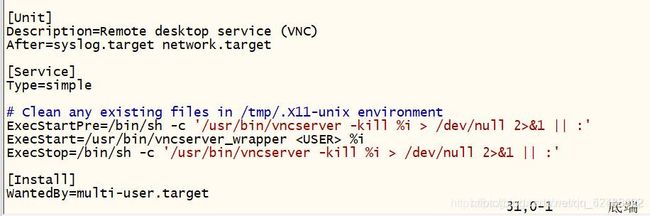

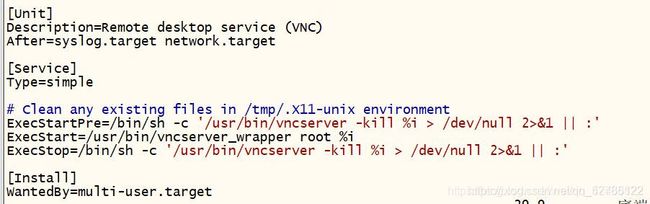

7.2 Copy the service template into the system unit directory under a new name

cp /usr/lib/systemd/system/vncserver@.service /etc/systemd/system/vncserver@:1.service

7.3 Edit the unit file

vim /etc/systemd/system/vncserver@:1.service #replace <USER> with root
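Step 7.3 can be done non-interactively with sed. This is a minimal sketch that assumes the stock CentOS 7 template, where `<USER>` appears in the `ExecStart=` line and in `PIDFile=/home/<USER>/...`. Root's home directory is /root, not /home/root, so that path is rewritten separately. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the <USER> placeholder in a copied
# vncserver@.service unit, fixing the home directory for root.
set_vnc_unit_user() {
  local unit="$1" user="${2:-root}" home
  if [ "$user" = "root" ]; then
    home=/root
  else
    home="/home/$user"
  fi
  # Rewrite the home path first, then any remaining <USER> occurrences.
  sed -i -e "s|/home/<USER>|$home|g" -e "s|<USER>|$user|g" "$unit"
}

# Usage on a real host, followed by step 7.4:
#   set_vnc_unit_user /etc/systemd/system/vncserver@:1.service root
```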

7.4 Reload the systemd configuration

systemctl daemon-reload

7.5 Set the VNC access password

vncserver

Password: #enter the password

Verify: #enter it again

Would you like to enter a view-only password (y/n)? n

A view-only password is not used

7.6 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

7.7 Disable SELinux

Edit /etc/selinux/config

vim /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled

7.8 Start the VNC service

#Enable it at boot

[root@localhost system]# systemctl enable vncserver@:1.service

Created symlink from /etc/systemd/system/multi-user.target.wants/vncserver@:1.service to /etc/systemd/system/vncserver@:1.service.

#Start the service

[root@localhost system]# systemctl start vncserver@:1.service

#Check the service status

[root@localhost system]# systemctl status vncserver@:1.service

● vncserver@:1.service - Remote desktop service (VNC)

Loaded: loaded (/etc/systemd/system/vncserver@:1.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2021-03-24 23:02:34 CST; 8s ago

Process: 9896 ExecStartPre=/bin/sh -c /usr/bin/vncserver -kill %i > /dev/null 2>&1 || : (code=exited, status=0/SUCCESS)

Main PID: 9920 (Xvnc)

CGroup: /system.slice/system-vncserver.slice/vncserver@:1.service

‣ 9920 /usr/bin/Xvnc :1 -auth /root/.Xauthority -desktop localhost.localdomain:1 (root) -fp catalogue:/etc/X11/fontpath.d -geometry 1024x768 -pn -rfbauth /root/.vnc/passwd -rfbport 5901 -rfbwait 30000

Mar 24 23:02:34 localhost.localdomain systemd[1]: Starting Remote desktop service (VNC)...

Mar 24 23:02:34 localhost.localdomain systemd[1]: Started Remote desktop service (VNC).

#Stop the service

[root@localhost system]# systemctl stop vncserver@:1.service

#Start the service

[root@localhost system]# systemctl start vncserver@:1.service

#Check which ports are listening

[root@localhost system]# netstat -lnt | grep ':590'

tcp 0 0 0.0.0.0:5901 0.0.0.0:* LISTEN

tcp6 0 0 :::5901 :::* LISTEN

[root@localhost system]#

7.9.1 Check the VNC service port

netstat -anp | grep ':590'

Display :1 listens on port 5901 by default (5900 plus the display number).
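The display-to-port rule is simple arithmetic, shown here as a minimal sketch with a hypothetical helper name: display :N listens on TCP 5900 + N. The same rule applies to the `-vnc :1` and `-vnc :2` options passed to qemu-kvm later in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: VNC display :N listens on TCP port 5900 + N.
vnc_port() {
  local display="${1#:}"   # accept "1" or ":1"
  echo $((5900 + display))
}

# Usage:
#   vnc_port :1    # the vncserver@:1 service above
#   vnc_port :2    # a qemu-kvm guest started with -vnc :2
```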

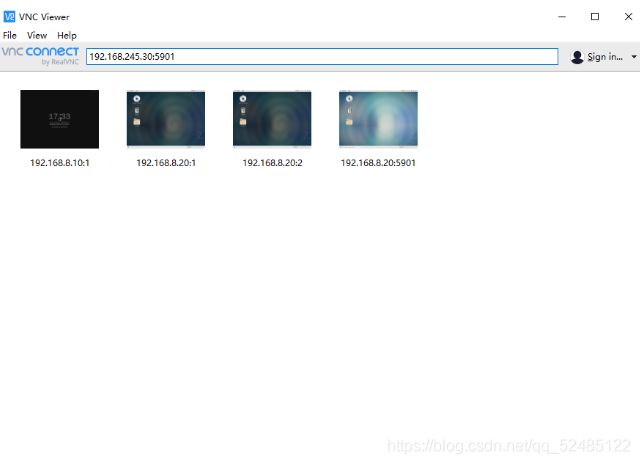

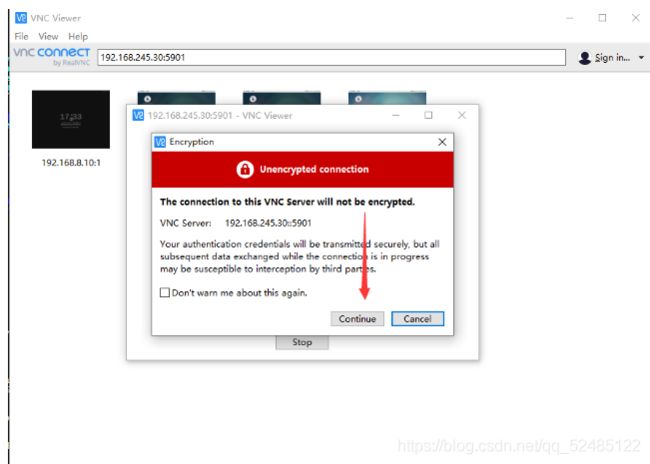

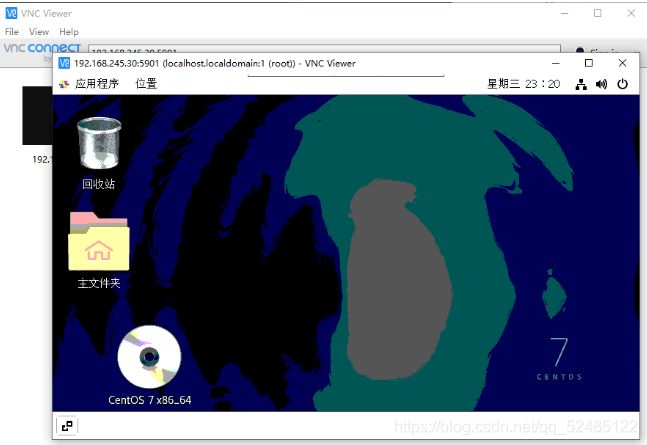

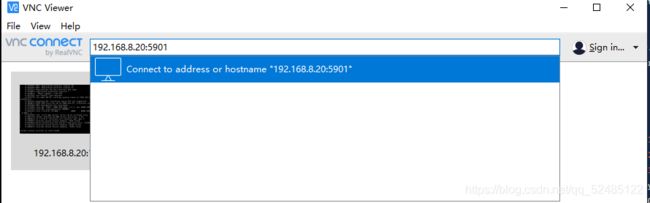

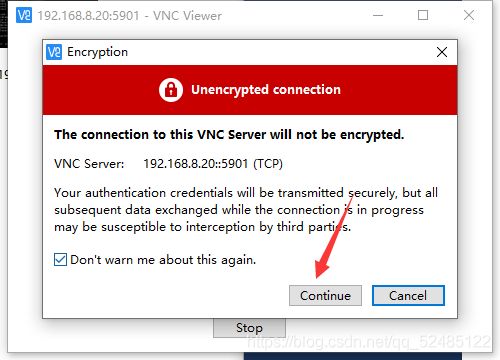

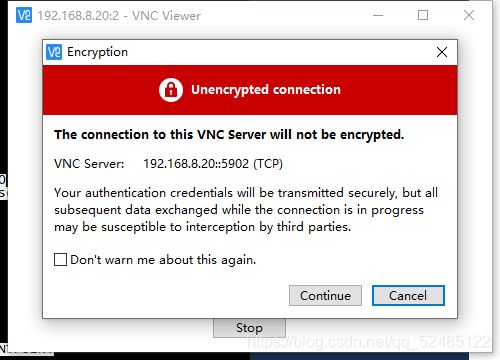

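7.9.2 Download and install VNC Viewer on Windows

After installation, connect to the VNC server by entering its IP address and port, for example <host IP>:5901.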

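8: KVM Networking

8.1 Four basic network models

8.1.1 Isolated mode

The virtual machines form a network only among themselves. They cannot communicate with the host or with any other network. It is as if they were all plugged into a switch with no uplink.

8.1.2 Routed mode

The virtual machines are effectively connected to a router. The router (the physical NIC) forwards their traffic but does not change the source address.

8.1.3 NAT mode

In routed mode the virtual machines can reach other hosts, but replies from those hosts cannot find their way back, because the outside network has no route to the VM subnet. NAT mode rewrites the source address to the router's (physical NIC's) address, so replies return to the host, which then passes them on to the right virtual machine. This mode is widely used in Docker environments.

8.1.4 Bridged mode

A virtual NIC (the bridge) is created on the host and becomes the host's network interface, while the physical NIC acts as a switch port. Virtual machines attached to the bridge sit on the same LAN as the host.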

8.2 NAT network

#Create the NAT script with vi

vi /etc/qemu-ifup-NAT

#Script contents

#!/bin/bash

BRIDGE=virbr0

NETWORK=192.168.122.0

NETMASK=255.255.255.0

GATEWAY=192.168.122.1

DHCPRANGE=192.168.122.2,192.168.122.254

TFTPROOT=

BOOTP=

function check_bridge()

{

if brctl show | grep "^$BRIDGE" &> /dev/null; then

return 1

else

return 0

fi

}

function create_bridge()

{

brctl addbr "$BRIDGE"

brctl stp "$BRIDGE" on

brctl setfd "$BRIDGE" 0

ifconfig "$BRIDGE" "$GATEWAY" netmask "$NETMASK" up

}

function enable_ip_forward()

{

echo 1 > /proc/sys/net/ipv4/ip_forward

}

function add_filter_rules()

{

iptables -t nat -A POSTROUTING -s "$NETWORK"/"$NETMASK" \
    ! -d "$NETWORK"/"$NETMASK" -j MASQUERADE

}

function start_dnsmasq()

{

# don't run dnsmasq repeatedly

ps -ef | grep "dnsmasq" | grep -v "grep" &> /dev/null

if [ $? -eq 0 ]; then

echo "Warning:dnsmasq is already running. No need to run it again."

return 1

fi

dnsmasq \
    --strict-order \
    --except-interface=lo \
    --interface=$BRIDGE \
    --listen-address=$GATEWAY \
    --bind-interfaces \
    --dhcp-range=$DHCPRANGE \
    --conf-file="" \
    --pid-file=/var/run/qemu-dnsmasq-$BRIDGE.pid \
    --dhcp-leasefile=/var/run/qemu-dnsmasq-$BRIDGE.leases \
    --dhcp-no-override \
    ${TFTPROOT:+"--enable-tftp"} \
    ${TFTPROOT:+"--tftp-root=$TFTPROOT"} \
    ${BOOTP:+"--dhcp-boot=$BOOTP"}

}

function setup_bridge_nat()

{

check_bridge "$BRIDGE"

if [ $? -eq 0 ]; then

create_bridge

fi

enable_ip_forward

add_filter_rules "$BRIDGE"

start_dnsmasq "$BRIDGE"

}

# need to check $1 arg before setup

if [ -n "$1" ]; then

setup_bridge_nat

ifconfig "$1" 0.0.0.0 up

brctl addif "$BRIDGE" "$1"

exit 0

else

echo "Error: no interface specified."

exit 1

fi

#Make the script executable

[root@localhost ~]# chmod +x /etc/qemu-ifup-NAT

[root@localhost ~]# ll /etc/qemu-ifup-NAT

-rwxr-xr-x 1 root root 2099 Apr 27 23:30 /etc/qemu-ifup-NAT

#Create a directory for the images

[root@localhost ~]# mkdir /os

[root@localhost ~]# cd /os/

#Download the image with wget

[root@localhost os]# wget http://ftp.yudancha.cn/cirros/cirros-0.3.4-x86_64-disk.img

--2021-04-27 23:37:34-- http://ftp.yudancha.cn/cirros/cirros-0.3.4-x86_64-disk.img

Resolving ftp.yudancha.cn (ftp.yudancha.cn)... 47.94.16.133

Connecting to ftp.yudancha.cn (ftp.yudancha.cn)|47.94.16.133|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 13287936 (13M) [application/octet-stream]

Saving to: 'cirros-0.3.4-x86_64-disk.img'

100%[=======================================================================>] 13,287,936 680KB/s in 18s

2021-04-27 23:37:53 (706 KB/s) - 'cirros-0.3.4-x86_64-disk.img' saved [13287936/13287936]

#Boot the image and attach it to the network created by the NAT script

[root@localhost os]# qemu-kvm -m 1024 -drive file=/os/cirros-0.3.4-x86_64-disk.img,if=virtio -net nic,model=virtio -net tap,script=/etc/qemu-ifup-NAT -nographic -vnc :1

#Part of the boot output

Starting acpid: OK

cirros-ds 'local' up at 0.70

no results found for mode=local. up 0.72. searched: nocloud configdrive ec2

Starting network...

udhcpc (v1.20.1) started

Sending discover...

Sending discover...

Sending select for 192.168.122.76...

Lease of 192.168.122.76 obtained, lease time 3600

cirros-ds 'net' up at 60.81

checking http://169.254.169.254/2009-04-04/instance-id

failed 1/20: up 60.82. request failed

failed 2/20: up 65.86. request failed

failed 3/20: up 70.87. request failed

failed 4/20: up 75.91. request failed

failed 5/20: up 80.95. request failed

failed 6/20: up 83.98. request failed

failed 7/20: up 89.02. request failed

failed 8/20: up 94.05. request failed

failed 9/20: up 97.08. request failed

failed 10/20: up 102.11. request failed

failed 11/20: up 105.14. request failed

failed 12/20: up 110.18. request failed

failed 13/20: up 115.22. request failed

failed 14/20: up 120.26. request failed

failed 15/20: up 123.26. request failed

failed 16/20: up 126.29. request failed

failed 17/20: up 131.34. request failed

failed 18/20: up 136.39. request failed

failed 19/20: up 141.43. request failed

failed 20/20: up 146.47. request failed

failed to read iid from metadata. tried 20

no results found for mode=net. up 151.52. searched: nocloud configdrive ec2

failed to get instance-id of datasource

Starting dropbear sshd: generating rsa key... generating dsa key... OK

=== system information ===

Platform: Red Hat KVM

Container: none

Arch: x86_64

CPU(s): 1 @ 3599.998 MHz

Cores/Sockets/Threads: 1/1/1

Virt-type:

RAM Size: 995MB

Disks:

NAME MAJ:MIN SIZE LABEL MOUNTPOINT

vda 253:0 41126400

vda1 253:1 32901120 cirros-rootfs /

sr0 11:0 1073741312

=== sshd host keys ===

-----BEGIN SSH HOST KEY KEYS-----

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgwCIjEP93pQeo36Lm61kjq1LAI0A2+NYovdWiu//55tLkLWWjdb4xMKTI8FrcHo9Vc8KxW+qqGgEs

ssh-dss AAAAB3NzaC1kc3MAAACBAJfyad6isulpjHcKhMXbLeUYRdKPOaJW4J0UaFV8RbvGSxHLz/iZIKqZWvLqLWdEHcePKVdGLeH318GxCg21s

-----END SSH HOST KEY KEYS-----

=== network info ===

if-info: lo,up,127.0.0.1,8,::1

if-info: eth0,up,192.168.122.76,24,fe80::5054:ff:fe12:3456

ip-route:default via 192.168.122.1 dev eth0

ip-route:192.168.122.0/24 dev eth0 src 192.168.122.76

=== datasource: None None ===

=== cirros: current=0.3.4 uptime=151.63 ===

____ ____ ____

/ __/ __ ____ ____ / __ \/ __/

/ /__ / // __// __// /_/ /\ \

\___//_//_/ /_/ \____/___/

http://cirros-cloud.net

login as 'cirros' user. default password: 'cubswin:)'. use 'sudo' for root.

cirros login:

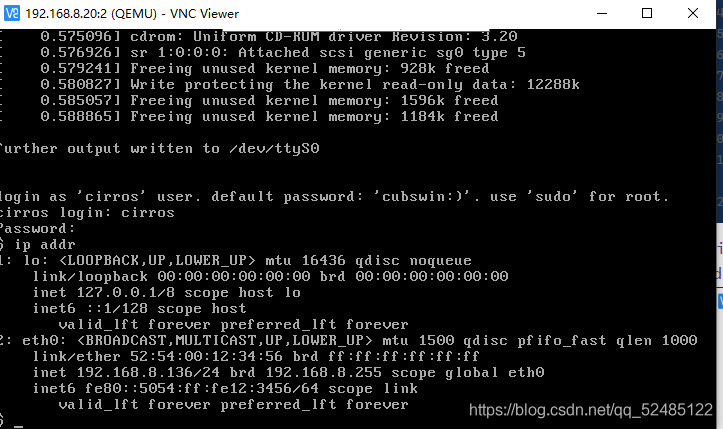

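The virtual machine boots successfully. Log in and check that its IP address (here 192.168.122.76) comes from the range assigned by the script.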
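The range check can be scripted. This is a minimal sketch, and both helper names are hypothetical. It converts dotted IPv4 addresses to integers and compares the guest's address with the `start,end` value of DHCPRANGE from /etc/qemu-ifup-NAT.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check whether an IPv4 address lies inside a
# dnsmasq-style "start,end" range such as DHCPRANGE in /etc/qemu-ifup-NAT.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_range() {
  local ip="$1" range="$2"
  local start="${range%%,*}" end="${range##*,}"
  local n s e
  n=$(ip_to_int "$ip")
  s=$(ip_to_int "$start")
  e=$(ip_to_int "$end")
  [ "$n" -ge "$s" ] && [ "$n" -le "$e" ]
}

# Usage with the address the cirros guest received:
#   ip_in_range 192.168.122.76 192.168.122.2,192.168.122.254 && echo "in range"
```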

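8.3 Bridged network

1: Add a new network adapter in the virtual machine's settings

2: Create a configuration file named after the new NIC

#The file name follows the NIC name

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens37

DEVICE=ens37 #change to match your NIC name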

BOOTPROTO=none

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BRIDGE=br0

3: Create the bridge configuration file, named after the bridge you want to create

[root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg-br0

DEVICE=br0 #change to match your bridge name

TYPE=Bridge

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

#Adjust the following to match the network of the new NIC

IPADDR=192.168.8.30

NETMASK=255.255.255.0

GATEWAY=192.168.8.2

DNS1=114.114.114.114

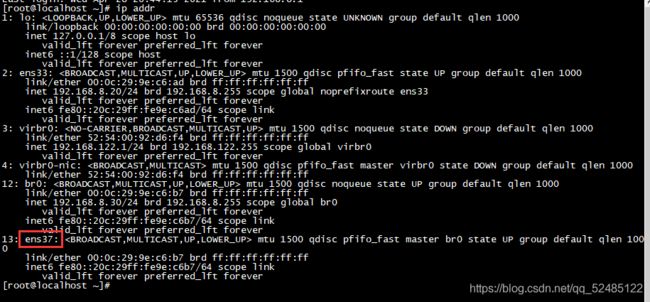

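4: With the configuration done, create the bridge, attach the NIC, restart the network service and check the result

#Create the br0 bridge

[root@localhost images]# brctl addbr br0

#Attach ens37 to the bridge

[root@localhost images]# brctl addif br0 ens37

#Restart the network service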

[root@localhost ~]# systemctl restart network

[root@localhost ~]# ip addr list

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:9e:c6:ad brd ff:ff:ff:ff:ff:ff

inet 192.168.8.20/24 brd 192.168.8.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9e:c6ad/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:92:d6:f4 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:92:d6:f4 brd ff:ff:ff:ff:ff:ff

12: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:9e:c6:b7 brd ff:ff:ff:ff:ff:ff

inet 192.168.8.30/24 brd 192.168.8.255 scope global br0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9e:c6b7/64 scope link

valid_lft forever preferred_lft forever

13: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UP group default qlen 1000

link/ether 00:0c:29:9e:c6:b7 brd ff:ff:ff:ff:ff:ff

inet6 fe80::20c:29ff:fe9e:c6b7/64 scope link

valid_lft forever preferred_lft forever

5: Create the ifup script

[root@localhost ~]# vi /etc/qemu-ifup

#!/bin/bash

switch=br0

if [ -n "$1" ];then

ifconfig $1 up

sleep 1

brctl addif $switch $1

exit 0

else

echo "Error: no interface specified."

exit 1

fi

#Make the script executable

[root@localhost ~]# chmod +x /etc/qemu-ifup

6: Start the virtual machine

[root@localhost ~]# qemu-kvm -m 512 -drive file=/os/cirros-0.3.4-x86_64-disk.img,if=virtio -net nic,model=virtio -net tap,script=/etc/qemu-ifup -nographic -vnc :2

#Part of the boot output

Initializing random number generator... done.

Starting acpid: OK

cirros-ds 'local' up at 0.69

no results found for mode=local. up 0.71. searched: nocloud configdrive ec2

Starting network...

udhcpc (v1.20.1) started

Sending discover...

Sending select for 192.168.8.136...

Lease of 192.168.8.136 obtained, lease time 1800

cirros-ds 'net' up at 1.73

checking http://169.254.169.254/2009-04-04/instance-id

failed 1/20: up 1.73. request failed

failed 2/20: up 3.77. request failed

failed 3/20: up 5.78. request failed

failed 4/20: up 7.78. request failed

failed 5/20: up 9.79. request failed

failed 6/20: up 11.79. request failed

failed 7/20: up 13.80. request failed

failed 8/20: up 15.81. request failed

failed 9/20: up 17.82. request failed

failed 10/20: up 19.83. request failed

failed 11/20: up 21.84. request failed

failed 12/20: up 23.84. request failed

failed 13/20: up 25.85. request failed

failed 14/20: up 27.86. request failed

failed 15/20: up 29.87. request failed

failed 16/20: up 31.89. request failed

failed 17/20: up 33.90. request failed

failed 18/20: up 35.90. request failed

failed 19/20: up 37.92. request failed

failed 20/20: up 39.93. request failed

failed to read iid from metadata. tried 20

no results found for mode=net. up 41.94. searched: nocloud configdrive ec2

failed to get instance-id of datasource

Starting dropbear sshd: OK

=== system information ===

Platform: Red Hat KVM

Container: none

Arch: x86_64

CPU(s): 1 @ 3599.998 MHz

Cores/Sockets/Threads: 1/1/1

Virt-type:

RAM Size: 491MB

Disks:

NAME MAJ:MIN SIZE LABEL MOUNTPOINT

vda 253:0 41126400

vda1 253:1 32901120 cirros-rootfs /

sr0 11:0 1073741312

=== sshd host keys ===

-----BEGIN SSH HOST KEY KEYS-----

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgwCIjEP93pQeo36Lm61kjq1LAI0A2+NYovdWiu//55ts

ssh-dss AAAAB3NzaC1kc3MAAACBAJfyad6isulpjHcKhMXbLeUYRdKPOaJW4J0UaFV8RbvGSxHLz/is

-----END SSH HOST KEY KEYS-----

=== network info ===

if-info: lo,up,127.0.0.1,8,::1

if-info: eth0,up,192.168.8.136,24,fe80::5054:ff:fe12:3456

ip-route:default via 192.168.8.2 dev eth0

ip-route:192.168.8.0/24 dev eth0 src 192.168.8.136

=== datasource: None None ===

=== cirros: current=0.3.4 uptime=41.99 ===

____ ____ ____

/ __/ __ ____ ____ / __ \/ __/

/ /__ / // __// __// /_/ /\ \

\___//_//_/ /_/ \____/___/

http://cirros-cloud.net

login as 'cirros' user. default password: 'cubswin:)'. use 'sudo' for root.

cirros login:

The connection works: the virtual machine's IP address (192.168.8.136) is in the host's subnet.

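9: KVM Snapshots

9.1 Check the disk image format and convert it

KVM virtual machines use the raw image format by default. raw gives the best performance and speed, but it lacks some newer features such as snapshots, zlib compression and AES encryption. To use snapshots, the disk must be in qcow2 format.

#1. Check the disk format

#Use the actual path of your own virtual machine disk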

[root@localhost ~]# qemu-img info /kvm/centos7.0.img

image: /kvm/centos7.0.img

file format: raw

virtual size: 10G (10737418240 bytes)

disk size: 10G
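The decision whether to convert can be scripted from the `file format:` line of `qemu-img info`. This is a minimal sketch with hypothetical helper names. It only prints the conversion command. It never runs that command, because the VM must be shut down first, as the next step shows.

```shell
#!/usr/bin/env bash
# Hypothetical helpers built on the "file format:" line of `qemu-img info`.
image_format() {
  # Read `qemu-img info` output on stdin and print the format (raw, qcow2, ...).
  sed -n 's/^file format: *//p'
}

convert_cmd_if_raw() {
  # Print the qemu-img command that converts IMG to a .qcow2 file next to it,
  # or nothing if the image is not raw. It only prints; it never converts.
  local img="$1" fmt
  fmt=$(image_format)
  if [ "$fmt" = "raw" ]; then
    echo "qemu-img convert -f raw -O qcow2 $img ${img%.*}.qcow2"
  fi
}

# Usage on a real host:
#   qemu-img info /kvm/centos7.0.img | convert_cmd_if_raw /kvm/centos7.0.img
```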

#2. Shut down the virtual machine before converting

[root@localhost ~]# yum -y install acpid

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

base | 3.6 kB 00:00:00

extras | 2.9 kB 00:00:00

updates | 2.9 kB 00:00:00

Package acpid-2.0.19-9.el7.x86_64 already installed and latest version

Nothing to do

[root@localhost ~]# virsh shutdown centos7.0

Domain centos7.0 is being shutdown

#3. Convert the disk format

[root@localhost ~]# qemu-img convert -f raw -O qcow2 /kvm/centos7.0.img /kvm/centos7.0.qcow2

[root@localhost ~]# ll /kvm/

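total 22548360

-rw------- 1 root root 21478375424 May 11 19:49 centos7.0.img

-rw-r--r-- 1 root root 1186660352 May 11 19:52 centos7.0.qcow2

#QCOW2 (QEMU copy-on-write version 2) supports multiple snapshots and uses less space. It does not depend on sparse-file support in the host filesystem and does not preallocate the full virtual size; the file grows as data is written. It also offers optional AES encryption and optional zlib compression.

#RAW means "unprocessed", so a RAW image is also called a raw or bare-device image. As these names suggest, the guest OS can use a RAW image directly as a block device: a KVM guest boots straight from a RAW image, just as the host boots from its hard disk.

#4. Edit the virtual machine configuration (set the driver type to qcow2 and point the source at the new file)

[root@localhost ~]# virsh edit centos7.0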

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/kvm/centos7.0.qcow2'/>

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>

</disk>

Domain centos7.0 XML configuration edited.
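The same change can be made without an interactive editor by editing a dumped copy of the XML and redefining the domain. This is a minimal sketch. It assumes a single file-backed disk whose `<driver>` line carries `type='raw'`. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch a file-backed disk in a dumped domain XML
# from a raw image to a qcow2 image.
switch_disk_to_qcow2() {
  local xml="$1" old_img="$2" new_img="$3"
  # Change the format only on <driver .../> lines, and repoint the matching source.
  sed -i \
    -e "/<driver /s/type='raw'/type='qcow2'/" \
    -e "s|<source file='$old_img'/>|<source file='$new_img'/>|" \
    "$xml"
}

# Usage on a real host (as root, with the VM shut off):
#   virsh dumpxml centos7.0 > /tmp/centos7.0.xml
#   switch_disk_to_qcow2 /tmp/centos7.0.xml /kvm/centos7.0.img /kvm/centos7.0.qcow2
#   virsh define /tmp/centos7.0.xml
```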

9.2 Manage virtual machine snapshots

Make sure the steps above have been completed

#1. Create a snapshot

[root@localhost ~]# virsh snapshot-create-as centos7.0 centos_snaphshot

Domain snapshot centos_snaphshot created

#2. List the snapshots

[root@localhost ~]# virsh snapshot-list centos7.0

Name Creation Time State

------------------------------------------------------------

centos_snaphshot 2021-05-11 20:01:24 +0800 shutoff

#3. Show the current (most recent) snapshot

[root@localhost ~]# virsh snapshot-current centos7.0

#4. Show snapshot details

[root@localhost ~]# virsh snapshot-info centos7.0 centos_snaphshot

Name: centos_snaphshot

Domain: centos7.0

Current: yes

State: shutoff

Location: internal

Parent: -

Children: 0

Descendants: 0

Metadata: yes

#5. Revert to a snapshot

#Make sure the virtual machine is shut off

[root@localhost ~]# virsh shutdown centos7.0

[root@localhost ~]# virsh domstate centos7.0

shut off

#List the snapshots and decide which point in time to revert to

[root@localhost ~]# virsh snapshot-list centos7.0

Name Creation Time State

------------------------------------------------------------

centos_snaphshot 2021-05-11 20:01:24 +0800 shutoff

#Revert to the snapshot

[root@localhost ~]# virsh snapshot-revert centos7.0 centos_snaphshot
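The steps above revert only after confirming that the VM is shut off, so that check can be folded into the revert itself. This is a minimal sketch with a hypothetical helper name. `LC_ALL=C` is set so that `virsh domstate` prints the English state `shut off` instead of the localized text shown above. Setting `VIRSH` to another command, such as a stub, lets the function be tried without libvirt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: revert DOM to SNAP only if the domain is shut off,
# mirroring the manual check above. Set VIRSH to a stub to test it.
revert_when_off() {
  local dom="$1" snap="$2" virsh="${VIRSH:-virsh}" state
  # LC_ALL=C makes virsh print the English state name.
  state=$(LC_ALL=C $virsh domstate "$dom") || return 1
  if [ "$state" != "shut off" ]; then
    echo "refusing to revert: $dom is '$state'" >&2
    return 1
  fi
  $virsh snapshot-revert "$dom" "$snap"
}

# Usage on a real host:
#   virsh shutdown centos7.0
#   revert_when_off centos7.0 centos_snaphshot
```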

#6. Delete a snapshot

#Check the snapshots stored in the image

[root@localhost ~]# qemu-img info /kvm/centos7.0.qcow2

image: /kvm/centos7.0.qcow2

file format: qcow2

virtual size: 20G (21478375424 bytes)

disk size: 1.1G

cluster_size: 65536

Snapshot list:

ID TAG VM SIZE DATE VM CLOCK

1 centos_snaphshot 0 2021-05-11 20:01:24 00:00:00.000

Format specific information:

compat: 1.1

lazy refcounts: false

#Delete the snapshot

[root@localhost ~]# virsh snapshot-delete centos7.0 centos_snaphshot

Domain snapshot centos_snaphshot deleted

[root@localhost ~]# qemu-img info /kvm/centos7.0.qcow2

image: /kvm/centos7.0.qcow2

file format: qcow2

virtual size: 20G (21478375424 bytes)

disk size: 1.1G

cluster_size: 65536

Format specific information:

compat: 1.1

lazy refcounts: false

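10: Virtual Machine Migration

Static (offline) migration means copying the virtual machine's disk image and configuration file to the target host while the VM is shut off.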
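The procedure in this section boils down to four actions: export the XML, copy the XML and the disk image, and define the domain on the target. The following minimal sketch wraps those actions in a hypothetical helper. It assumes root SSH access to the target, the same disk path on both hosts, an existing directory for the disk on the target, and a VM that is already shut off. `VIRSH`, `SCP` and `SSH` can be overridden (for example with `echo`) for a dry run. `XML_DIR` sets where the local copy of the XML is written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: static migration steps 10.3 to 10.6 in one place.
# VIRSH/SCP/SSH may be overridden for a dry run; XML_DIR defaults to /opt.
static_migrate() {
  local dom="$1" target="$2" disk="$3"
  local virsh="${VIRSH:-virsh}" scp="${SCP:-scp}" ssh="${SSH:-ssh}"
  local xml="${XML_DIR:-/opt}/$dom.xml"
  $virsh dumpxml "$dom" > "$xml" || return 1        # 10.3 export the config
  $scp "$xml" "root@$target:/opt/" || return 1       # 10.4 copy the config
  $scp "$disk" "root@$target:$disk" || return 1      # 10.4 copy the disk image
  $ssh "root@$target" virsh define "/opt/$dom.xml"   # 10.6 register the VM
}

# Usage (as root on the source host):
#   static_migrate centos7.0 192.168.8.21 /kvm/centos7.0.qcow2
```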

10.1 Make sure the virtual machine is shut off

[root@localhost ~]# virsh list --all

Id Name State

----------------------------------------------------

- centos7.0 shut off

10.2 Find the disk files to migrate

#List the disk files configured for this virtual machine

[root@localhost kvm]# virsh domblklist centos7.0

Target Source

------------------------------------------------

vda /kvm/centos7.0.qcow2

hda -

10.3 Export the virtual machine configuration

[root@localhost kvm]# virsh dumpxml centos7.0 > /opt/centos7.0.xml

[root@localhost kvm]# ll /opt/

total 8

-rw-r--r-- 1 root root 4422 May 11 20:33 centos7.0.xml

drwxr-xr-x. 2 root root 6 Oct 31 2018 rh

10.4 Copy the configuration file and disk image to the target host

[root@localhost kvm]# scp /opt/centos7.0.xml 192.168.8.21:/opt/

The authenticity of host '192.168.8.21 (192.168.8.21)' can't be established.

ECDSA key fingerprint is SHA256:fvtbHxBUlCA/5fL35C0s1TOiDErUEipSrj7FYr2rnZM.

ECDSA key fingerprint is MD5:ce:12:1f:35:aa:b6:89:28:fa:a9:90:50:b8:f9:b3:0c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.8.21' (ECDSA) to the list of known hosts.

root@192.168.8.21's password:

centos7.0.xml 100% 4422 5.6MB/s 00:00

[root@localhost kvm]# scp /kvm/centos7.0.qcow2 192.168.8.21:/kvm/

root@192.168.8.21's password:

centos7.0.qcow2

10.5 Check on the target host

[root@localhost opt]# ll /opt/

total 8

-rw-r--r-- 1 root root 4422 May 11 20:35 centos7.0.xml

drwxr-xr-x. 2 root root 6 Oct 31 2018 rh

[root@localhost opt]# ll /kvm/

total 1158916

-rw-r--r-- 1 root root 1186726400 May 11 20:36 centos7.0.qcow2

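10.6 Register the virtual machine on the target host

[root@localhost opt]# virsh define /opt/centos7.0.xml

Domain centos7.0 defined from /opt/centos7.0.xml

#Start the virtual machine

[root@localhost kvm]# virsh start centos7.0

Domain centos7.0 started

#Connect to the virtual machine console

[root@localhost ~]# virsh console centos7.0

Connected to domain centos7.0

Escape character is ^]

#Press Enter here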

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:69:60:00 brd ff:ff:ff:ff:ff:ff

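#Leave the virtual machine console with Ctrl+]

#Note: virsh console only works if the KVM guest has a serial console enabled. Here the guest is centos7.0, the VM created with KVM inside the CentOS VMware machine.

#To enable it, open the guest through the GUI and run

[root@localhost ~]# grubby --update-kernel=ALL --args="console=ttyS0,115200n8"

#Then reboot the guest

[root@localhost ~]# reboot

This completes the static migration of the virtual machine, and ends this chapter.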