数仓架构“瘦身”,Hologres 5000CU时免费试用

Hologres基于创新的HSAP架构,可以将您原先数仓架构中的OLAP系统(Greenplum、Presto、Impala、ClickHouse)、KV数据库/Serving系统(HBase、Redis)统一在一个大数据计算引擎中,并提供快速的离线实时一体化分析能力。

- Hologres 5000CU时,20GB存储免费试用,前往试用>>

产品核心优势:

1、简化数仓架构,减少数据搬运与多处维护成本

2、实时查询性能强,刷新TPC-H 30000GB世界纪录

3、融合湖仓查询,0 ETL导入离线MaxCompute数据

Hologres使用教程简介

基于MaxCompute中TPC-H数据集数据和GitHub公开事件数据,在阿里云实时数仓Hologres上创建Hologres的数据库、外部表、内部表、导入数据至内部表中以及使用Hologres分别查询内部表和外部表中数据的指引。Hologres在查询数据方面具有极速响应的优势。

准备环境和资源

开始教程前,请按以下步骤准备环境和资源:

- 已创建专有网络(VPC)和专有网络交换机,详情请参见创建专有网络和交换机。

- 访问阿里云免费试用。单击页面右上方的登录/注册按钮,并根据页面提示完成账号登录(已有阿里云账号)、账号注册(尚无阿里云账号)或实名认证(根据试用产品要求完成个人实名认证或企业实名认证)。

- 成功登录后,在产品类别下选择大数据计算 > 数据计算与分析,在实时数仓Hologres卡片上,单击立即试用。

- 在弹出的试用实时数仓Hologres产品的面板上完成参数信息配置。本试用教程以表格中的参数信息为例,未提及参数保持默认值。

| 参数 |

示例值 |

| 地域 |

华东1(杭州) |

| 实例类型 |

通用型 |

| 计算资源 |

8核32GB(计算节点数量:1) |

| 专有网络 |

选择步骤1中创建的VPC。 |

| 专有网络交换机 |

选择步骤1中创建的交换机。 |

| 实例名称 |

hologres_test |

| 资源组 |

默认资源组 |

- 勾选服务协议后,单击立即试用,并根据页面提示完成试用申请。

单击前往控制台,开启试用体验。

创建数据库

通过Hologres快速创建数据库,用于后续存放示例数据进行查询使用。

- 登录Hologres管理控制台,单击左侧实例列表。

- 在实例列表页面,单击对应实例名称。

- 在实例详情页左侧导航栏,单击数据库管理。

- 在DB授权页面,单击右上角新增数据库。

- 在新增数据库对话框,配置如下参数。

| 参数 |

说明 |

| 实例名 |

选择在哪个Hologres实例上创建数据库。默认展示当前已登录实例的名称,您也可以在下拉框中选择其他Hologres实例。 |

| 数据库名称 |

本示例数据库名称设置为 |

| 简单权限策略 |

您可以为创建的数据库选择一种权限策略。更多关于权限策略的说明,请参见:

|

- 单击确认。

创建表

数据库创建成功后,您需在数据库中创建对应的表。

- 登录数据库。

-

- 在DB授权页面的顶部菜单栏,单击元数据管理。

- 在元数据管理页面,双击左侧目录树中已创建成功的数据库名称,单击确认。

- 新建外部表。

-

- 在SQL编辑器页面,单击左上角的SQL图标。

- 新增使用TPC-H数据集数据的外部表,TPC-H数据引用自TPC,更多信息请参见TPC。

在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

示例SQL语句用来创建一个映射到MaxCompute公共空间MAXCOMPUTE_PUBLIC_DATA中odps_customer_10g、odps_lineitem_10g等表的外部表,用于后续查询。

DROP FOREIGN TABLE IF EXISTS odps_customer_10g;

DROP FOREIGN TABLE IF EXISTS odps_lineitem_10g;

DROP FOREIGN TABLE IF EXISTS odps_nation_10g;

DROP FOREIGN TABLE IF EXISTS odps_orders_10g;

DROP FOREIGN TABLE IF EXISTS odps_part_10g;

DROP FOREIGN TABLE IF EXISTS odps_partsupp_10g;

DROP FOREIGN TABLE IF EXISTS odps_region_10g;

DROP FOREIGN TABLE IF EXISTS odps_supplier_10g;

IMPORT FOREIGN SCHEMA "MAXCOMPUTE_PUBLIC_DATA#default" LIMIT to

(

odps_customer_10g,

odps_lineitem_10g,

odps_nation_10g,

odps_orders_10g,

odps_part_10g,

odps_partsupp_10g,

odps_region_10g,

odps_supplier_10g

)

FROM SERVER odps_server INTO public OPTIONS(if_table_exist 'error',if_unsupported_type 'error');-

- 新增使用GitHub公开事件数据的外部表,数据引用自GitHub,更多信息请参见基于GitHub公开事件数据集的离线实时一体化实践。

单击左上角的SQL图标,在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

示例SQL语句用来创建一个映射到MaxCompute公共空间MAXCOMPUTE_PUBLIC_DATA中github_eventsSchema下名为dwd_github_events_odps的外部表,用于后续查询。

- 新增使用GitHub公开事件数据的外部表,数据引用自GitHub,更多信息请参见基于GitHub公开事件数据集的离线实时一体化实践。

DROP FOREIGN TABLE IF EXISTS dwd_github_events_odps;

IMPORT FOREIGN SCHEMA "MAXCOMPUTE_PUBLIC_DATA#github_events" LIMIT to

(

dwd_github_events_odps

)

FROM SERVER odps_server INTO public OPTIONS(if_table_exist 'error',if_unsupported_type 'error');- 新建内部表。

-

- 在SQL编辑器页面,单击左上角角SQL图标。

- 新建使用TPC-H数据集数据的内部表。

在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入如下语句,单击运行。

示例SQL语句用来创建名称分别为LINEITEM、ORDERS、PARTSUPP、PART、CUSTOMER、SUPPLIER、NATION和REGION的表,用于后续存储数据。

DROP TABLE IF EXISTS LINEITEM;

BEGIN;

CREATE TABLE LINEITEM

(

L_ORDERKEY BIGINT NOT NULL,

L_PARTKEY INT NOT NULL,

L_SUPPKEY INT NOT NULL,

L_LINENUMBER INT NOT NULL,

L_QUANTITY DECIMAL(15,2) NOT NULL,

L_EXTENDEDPRICE DECIMAL(15,2) NOT NULL,

L_DISCOUNT DECIMAL(15,2) NOT NULL,

L_TAX DECIMAL(15,2) NOT NULL,

L_RETURNFLAG TEXT NOT NULL,

L_LINESTATUS TEXT NOT NULL,

L_SHIPDATE TIMESTAMPTZ NOT NULL,

L_COMMITDATE TIMESTAMPTZ NOT NULL,

L_RECEIPTDATE TIMESTAMPTZ NOT NULL,

L_SHIPINSTRUCT TEXT NOT NULL,

L_SHIPMODE TEXT NOT NULL,

L_COMMENT TEXT NOT NULL,

PRIMARY KEY (L_ORDERKEY,L_LINENUMBER)

);

CALL set_table_property('LINEITEM', 'clustering_key', 'L_SHIPDATE,L_ORDERKEY');

CALL set_table_property('LINEITEM', 'segment_key', 'L_SHIPDATE');

CALL set_table_property('LINEITEM', 'distribution_key', 'L_ORDERKEY');

CALL set_table_property('LINEITEM', 'bitmap_columns', 'L_ORDERKEY,L_PARTKEY,L_SUPPKEY,L_LINENUMBER,L_RETURNFLAG,L_LINESTATUS,L_SHIPINSTRUCT,L_SHIPMODE,L_COMMENT');

CALL set_table_property('LINEITEM', 'dictionary_encoding_columns', 'L_RETURNFLAG,L_LINESTATUS,L_SHIPINSTRUCT,L_SHIPMODE,L_COMMENT');

CALL set_table_property('LINEITEM', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS ORDERS;

BEGIN;

CREATE TABLE ORDERS

(

O_ORDERKEY BIGINT NOT NULL PRIMARY KEY,

O_CUSTKEY INT NOT NULL,

O_ORDERSTATUS TEXT NOT NULL,

O_TOTALPRICE DECIMAL(15,2) NOT NULL,

O_ORDERDATE timestamptz NOT NULL,

O_ORDERPRIORITY TEXT NOT NULL,

O_CLERK TEXT NOT NULL,

O_SHIPPRIORITY INT NOT NULL,

O_COMMENT TEXT NOT NULL

);

CALL set_table_property('ORDERS', 'segment_key', 'O_ORDERDATE');

CALL set_table_property('ORDERS', 'distribution_key', 'O_ORDERKEY');

CALL set_table_property('ORDERS', 'bitmap_columns', 'O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT');

CALL set_table_property('ORDERS', 'dictionary_encoding_columns', 'O_ORDERSTATUS,O_ORDERPRIORITY,O_CLERK,O_COMMENT');

CALL set_table_property('ORDERS', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS PARTSUPP;

BEGIN;

CREATE TABLE PARTSUPP

(

PS_PARTKEY INT NOT NULL,

PS_SUPPKEY INT NOT NULL,

PS_AVAILQTY INT NOT NULL,

PS_SUPPLYCOST DECIMAL(15,2) NOT NULL,

PS_COMMENT TEXT NOT NULL,

PRIMARY KEY(PS_PARTKEY,PS_SUPPKEY)

);

CALL set_table_property('PARTSUPP', 'distribution_key', 'PS_PARTKEY');

CALL set_table_property('PARTSUPP', 'colocate_with', 'LINEITEM');

CALL set_table_property('PARTSUPP', 'bitmap_columns', 'PS_PARTKEY,PS_SUPPKEY,PS_AVAILQTY,PS_COMMENT');

CALL set_table_property('PARTSUPP', 'dictionary_encoding_columns', 'PS_COMMENT');

CALL set_table_property('PARTSUPP', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS PART;

BEGIN;

CREATE TABLE PART

(

P_PARTKEY INT NOT NULL PRIMARY KEY,

P_NAME TEXT NOT NULL,

P_MFGR TEXT NOT NULL,

P_BRAND TEXT NOT NULL,

P_TYPE TEXT NOT NULL,

P_SIZE INT NOT NULL,

P_CONTAINER TEXT NOT NULL,

P_RETAILPRICE DECIMAL(15,2) NOT NULL,

P_COMMENT TEXT NOT NULL

);

CALL set_table_property('PART', 'distribution_key', 'P_PARTKEY');

CALL set_table_property('PART', 'bitmap_columns', 'P_PARTKEY,P_SIZE,P_NAME,P_MFGR,P_BRAND,P_TYPE,P_CONTAINER,P_COMMENT');

CALL set_table_property('PART', 'dictionary_encoding_columns', 'P_NAME,P_MFGR,P_BRAND,P_TYPE,P_CONTAINER,P_COMMENT');

CALL set_table_property('PART', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS CUSTOMER;

BEGIN;

CREATE TABLE CUSTOMER

(

C_CUSTKEY INT NOT NULL PRIMARY KEY,

C_NAME TEXT NOT NULL,

C_ADDRESS TEXT NOT NULL,

C_NATIONKEY INT NOT NULL,

C_PHONE TEXT NOT NULL,

C_ACCTBAL DECIMAL(15,2) NOT NULL,

C_MKTSEGMENT TEXT NOT NULL,

C_COMMENT TEXT NOT NULL

);

CALL set_table_property('CUSTOMER', 'distribution_key', 'C_CUSTKEY');

CALL set_table_property('CUSTOMER', 'bitmap_columns', 'C_CUSTKEY,C_NATIONKEY,C_NAME,C_ADDRESS,C_PHONE,C_MKTSEGMENT,C_COMMENT');

CALL set_table_property('CUSTOMER', 'dictionary_encoding_columns', 'C_NAME,C_ADDRESS,C_PHONE,C_MKTSEGMENT,C_COMMENT');

CALL set_table_property('CUSTOMER', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS SUPPLIER;

BEGIN;

CREATE TABLE SUPPLIER

(

S_SUPPKEY INT NOT NULL PRIMARY KEY,

S_NAME TEXT NOT NULL,

S_ADDRESS TEXT NOT NULL,

S_NATIONKEY INT NOT NULL,

S_PHONE TEXT NOT NULL,

S_ACCTBAL DECIMAL(15,2) NOT NULL,

S_COMMENT TEXT NOT NULL

);

CALL set_table_property('SUPPLIER', 'distribution_key', 'S_SUPPKEY');

CALL set_table_property('SUPPLIER', 'bitmap_columns', 'S_SUPPKEY,S_NAME,S_ADDRESS,S_NATIONKEY,S_PHONE,S_COMMENT');

CALL set_table_property('SUPPLIER', 'dictionary_encoding_columns', 'S_NAME,S_ADDRESS,S_PHONE,S_COMMENT');

CALL set_table_property('SUPPLIER', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS NATION;

BEGIN;

CREATE TABLE NATION(

N_NATIONKEY INT NOT NULL PRIMARY KEY,

N_NAME text NOT NULL,

N_REGIONKEY INT NOT NULL,

N_COMMENT text NOT NULL

);

CALL set_table_property('NATION', 'distribution_key', 'N_NATIONKEY');

CALL set_table_property('NATION', 'bitmap_columns', 'N_NATIONKEY,N_NAME,N_REGIONKEY,N_COMMENT');

CALL set_table_property('NATION', 'dictionary_encoding_columns', 'N_NAME,N_COMMENT');

CALL set_table_property('NATION', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS REGION;

BEGIN;

CREATE TABLE REGION

(

R_REGIONKEY INT NOT NULL PRIMARY KEY,

R_NAME TEXT NOT NULL,

R_COMMENT TEXT

);

CALL set_table_property('REGION', 'distribution_key', 'R_REGIONKEY');

CALL set_table_property('REGION', 'bitmap_columns', 'R_REGIONKEY,R_NAME,R_COMMENT');

CALL set_table_property('REGION', 'dictionary_encoding_columns', 'R_NAME,R_COMMENT');

CALL set_table_property('REGION', 'time_to_live_in_seconds', '31536000');

COMMIT;-

- 新增使用GitHub公开事件数据的内部表。

单击左上角的SQL图标,在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

示例SQL语句用来创建名称为gh_event_data的内部表,并设置distribution_key、event_time_column、clustering_key的表属性,用于后续数据导入和高性能查询。

- 新增使用GitHub公开事件数据的内部表。

DROP TABLE IF EXISTS gh_event_data;

BEGIN;

CREATE TABLE gh_event_data (

id bigint,

actor_id bigint,

actor_login text,

repo_id bigint,

repo_name text,

org_id bigint,

org_login text,

type text,

created_at timestamp with time zone NOT NULL,

action text,

iss_or_pr_id bigint,

number bigint,

comment_id bigint,

commit_id text,

member_id bigint,

rev_or_push_or_rel_id bigint,

ref text,

ref_type text,

state text,

author_association text,

language text,

merged boolean,

merged_at timestamp with time zone,

additions bigint,

deletions bigint,

changed_files bigint,

push_size bigint,

push_distinct_size bigint,

hr text,

month text,

year text,

ds text

);

CALL set_table_property('public.gh_event_data', 'distribution_key', 'id');

CALL set_table_property('public.gh_event_data', 'event_time_column', 'created_at');

CALL set_table_property('public.gh_event_data', 'clustering_key', 'created_at');

COMMIT;导入示例数据

内部表创建成功后,可以通过以下步骤将数据导入Hologres内部表中。外部表在Hologres中不存储数据,只进行字段映射。通过外部表您可以使用Hologres直接调用存储于MaxCompute公共空间MAXCOMPUTE_PUBLIC_DATA的数据。

- 在SQL编辑器页面,单击左上角的SQL图标。

- 导入TPC-H数据集数据。

在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

示例SQL语句将MaxCompute公共空间MAXCOMPUTE_PUBLIC_DATA中public.odps_customer_10g、public.odps_lineitem_10g等表中数据导入到对应名称的内部表中,用于后续查询。

INSERT INTO public.customer SELECT * FROM public.odps_customer_10g ;

INSERT INTO public.lineitem SELECT * FROM public.odps_lineitem_10g ;

INSERT INTO public.nation SELECT * FROM public.odps_nation_10g ;

INSERT INTO public.orders SELECT * FROM public.odps_orders_10g ;

INSERT INTO public.part SELECT * FROM public.odps_part_10g ;

INSERT INTO public.partsupp SELECT * FROM public.odps_partsupp_10g ;

INSERT INTO public.region SELECT * FROM public.odps_region_10g ;

INSERT INTO public.supplier SELECT * FROM public.odps_supplier_10g ;

vacuum nation;

vacuum region;

vacuum supplier;

vacuum customer;

vacuum part;

vacuum partsupp;

vacuum orders;

vacuum lineitem;

analyze nation;

analyze region;

analyze lineitem;

analyze orders;

analyze customer;

analyze part;

analyze partsupp;

analyze supplier;

analyze lineitem (l_orderkey,l_partkey,l_suppkey);

analyze orders (o_custkey);

analyze partsupp(ps_partkey,ps_suppkey);- 导入GitHub公开事件数据。

单击左上角的SQL图标,在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

示例SQL语句将MaxCompute公共空间MAXCOMPUTE_PUBLIC_DATA中的表dwd_github_events_odps中前一日的数据导入到内部表中,用于后续查询。由于本次活动中Hologres的资源有限,建议您导入并查询少于15天的数据。

INSERT INTO gh_event_data

SELECT

*

FROM

dwd_github_events_odps

WHERE

ds >= (CURRENT_DATE - interval '1 day')::text;

analyze gh_event_data;查询表中数据

- 在SQL编辑器页面,单击左上角的SQL图标。

- 基于TPC-H数据集数据查询。

在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。

下述SQL代码均为查询内部表数据使用,如需查询外部表,请将对应代码查询的表名更换为外部表名。

基于TPC-H演化的22条查询语句请参见查询表中数据。

select

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice * (1 - l_discount)) as sum_disc_price,

sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

lineitem

where

l_shipdate <= date '1998-12-01' - interval '120' day

group by

l_returnflag,

l_linestatus

order by

l_returnflag,

l_linestatus;- 基于GitHub公开事件数据查询。单击左上角的SQL图标,在新增的临时Query查询页面,选择已创建的实例名和数据库后,请您在SQL查询的编辑框输入示例代码,单击运行。本文给出一些简单的数据分析语句,您可以基于表中字段,自行设计其他分析语句并查询。下述SQL代码均为查询内部表数据使用,如需查询外部表,请将对应代码查询的表名更换为外部表名。

-

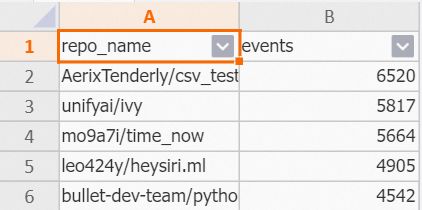

- 查询昨日最活跃项目。

SELECT

repo_name,

COUNT(*) AS events

FROM

gh_event_data

WHERE

created_at >= CURRENT_DATE - interval '1 day'

GROUP BY

repo_name

ORDER BY

events DESC

LIMIT 5;-

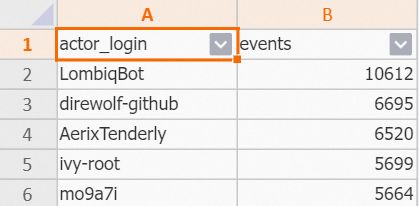

- 查询昨日最活跃开发者。

SELECT

actor_login,

COUNT(*) AS events

FROM

gh_event_data

WHERE

created_at >= CURRENT_DATE - interval '1 day'

AND actor_login NOT LIKE '%[bot]'

GROUP BY

actor_login

ORDER BY

events DESC

LIMIT 5;-

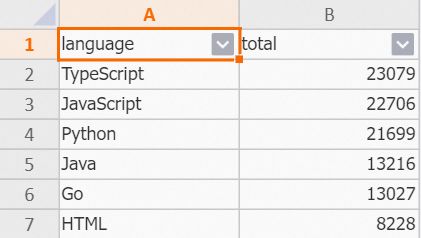

- 查询昨日编程语言排行。

SELECT

language,

count(*) total

FROM

gh_event_data

WHERE

created_at > CURRENT_DATE - interval '1 day'

AND language IS NOT NULL

GROUP BY

language

ORDER BY

total DESC

LIMIT 10;-

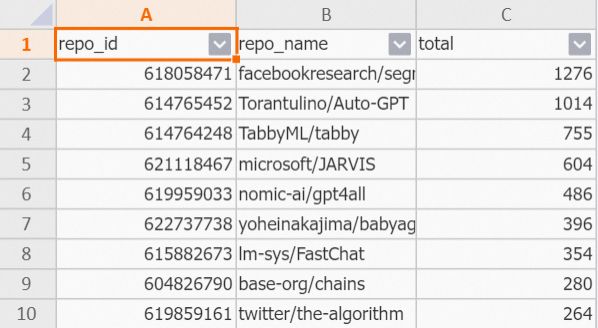

- 查询昨日项目新增星标数排行(不考虑取消星标的场景)。

SELECT

repo_id,

repo_name,

COUNT(actor_login) total

FROM

gh_event_data

WHERE

type = 'WatchEvent'

AND created_at > CURRENT_DATE - interval '1 day'

GROUP BY

repo_id,

repo_name

ORDER BY

total DESC

LIMIT 10;完成

完成以上操作后,您已经成功完成了Hologres数据查询操作。查询命令执行成功后,在临时Query查询页面下弹出结果页签,显示如下查询数据结果。

- Hologres 5000CU时,20GB存储免费试用,前往试用>>

- 了解Hologres:https://www.aliyun.com/product/bigdata/hologram

欢迎关注Hologres开发者社区