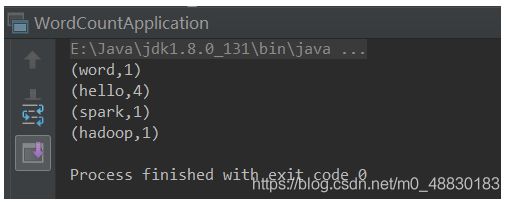

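- Computer Science Big Data Graduation Project: A Spark-Based Music Data Analysis Project (source code + thesis + deployment docs + full package + remote debugging + code walkthrough, etc.)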

程序猿八哥

data visualization, CS graduation project, spark, big data, course project, spark

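About the blogger: ✌️ Just a coder, focused on hands-on project development and walkthroughs for university students, and on writing and revising graduation theses. Quality creator in the full-stack field, Blog Star, quality author on Juejin/Huawei Cloud/Alibaba Cloud/InfoQ and other platforms, focused on Java, mini-programs, and hands-on graduation projects ✌️ Technical scope: design and development of mini-programs, SpringBoot, SSM, JSP, Vue, PHP, Java, Python, web crawlers, data visualization, big data, IoT, machine learning, etc. Main offerings: free feature design, proposal reports, task statements, full b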

- The Perfect Combo: SpringBoot + Lua + Redis = Unbeatable!

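Java Selected Interview Questions (WeChat mini-program): 5000+ interview and multiple-choice questions, real interview experiences, and résumé templates, covering Java basics, concurrency, the JVM, threads, the MQ series, Redis, the Spring series, Elasticsearch, Docker, K8s, Flink, Spark, architecture design, real questions from big tech companies, and more. Practice online anytime! Preface: There was once a magician who was skilled at combining two powerful tools, SpringBoot and Redis, into an astonishing combination. His magic weapon was Redis's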

- AI Daily-20250620: Huawei Cloud Launches the Pangu Model 5.5! Unitree Robotics' Series C Round Sets the Capital Markets Alight! Genspark AI Pod Released!

未来世界2099

AI daily, artificial intelligence, Huawei Cloud, technology, industry news

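1. Kunlun Tech open-sources Skywork-SWE-32B: a 32B model that sets a new SOTA for code repair, with performance approaching closed-source giants. 2. Tencent AI Lab open-sources SongGeneration, a large music-generation model that lets anyone create music! 3. Big news! Manus AI's Windows version opens up with no invitation code required, bringing a workplace productivity revolution! 4. Bilibili's 618 sponsored-deal efficiency jumps fivefold as Qwen3 (Tongyi Qianwen 3) powers a surge in AI creator matching. 5. Hailuo Video Agent launches: generate professional-grade videos with zero barrier to entry and turn ideas into reality in seconds! 6. 中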

- SPARKLE: An In-Depth Analysis of How Reinforcement Learning Improves Language Model Reasoning

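Abstract: Reinforcement learning (RL) has become the dominant paradigm for endowing language models with advanced reasoning capabilities. Although RL-based training methods (e.g., GRPO) have shown significant empirical gains, a nuanced understanding of where their benefits come from is still lacking. To fill this gap, we introduce a fine-grained analysis framework to dissect the impact of RL on reasoning. Our framework specifically examines the key elements thought to benefit from RL training: (1) plan following and execution, (2) problem decomposition, and (3) improved reasoning and know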

- 24. The park and unpark Methods

卷土重来…

java, concurrent programming, java

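1. `park` suspends the current thread; its state becomes `WAITING`. 2. `unpark` resumes a thread; its state becomes `RUNNABLE`. 3. Both are static methods of `LockSupport`. 4. The call order does not matter: if `unpark` is called first, a later `park` still lets the thread continue instead of blocking. `public class ParkDemo { public static void main(String[] args) { Thread t1 = new Thread(() ->`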
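The `ParkDemo` listing is cut off, so here is a separate, self-contained sketch of point 4 using only the JDK: the main thread calls `LockSupport.unpark(worker)` before the worker reaches `LockSupport.park()`, and the worker's `park()` then returns at once because the permit is already available. The class name, the volatile `permitGranted` flag, and the 5-second join timeout are illustrative assumptions, not the original author's code. The worker spins on the flag instead of waiting on a latch because blocking utilities such as `CountDownLatch.await()` are themselves built on `park()` and could consume the permit.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.LockSupport;

class ParkUnparkDemo {

    // Set by the main thread only after it has called unpark(worker).
    private static volatile boolean permitGranted;

    /** Returns true if the worker's park() returned even though unpark() was called first. */
    static boolean unparkBeforePark() {
        permitGranted = false;
        CountDownLatch started = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            started.countDown();          // tell main we are running; this never blocks
            while (!permitGranted) {
                Thread.onSpinWait();      // busy-wait: parking here could use up the permit
            }
            LockSupport.park();           // permit already available, so this returns at once
        });
        worker.setDaemon(true);           // never keep the JVM alive if park() did block
        worker.start();
        try {
            started.await();              // the main thread waits here, not the worker
            LockSupport.unpark(worker);   // step 1: grant the permit before park() is called
            permitGranted = true;         // step 2: only now let the worker reach park()
            worker.join(TimeUnit.SECONDS.toMillis(5));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("park() returned after an earlier unpark(): " + unparkBeforePark());
    }
}
```

The permit is a single bit rather than a counter: calling `unpark` twice before `park` still lets only one `park` call through.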

- "Five-Layer Protection" for Security Operations: Building an All-Round Security System

KKKlucifer

security operations

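In digital operations, challenges such as complex heterogeneous systems and stealthy attack techniques are becoming increasingly prominent. Built on a five-layer protection architecture of "full-domain onboarding, identity authentication, behavior monitoring, automated response, and audit tracing", Baowangda combines AI, zero trust, and other technologies into an end-to-end security operations system. The analysis below covers both the technical logic and the practical rollout. Layer 1: Full-domain asset onboarding, laying the security foundation. Challenge: cloud and network infrastructure includes heterogeneous components such as distributed computing (Hadoop/Spark) and stream processing (Storm/Flink), with many different communication protocols, which makes it hard for traditional solutions to bring everything under management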

- Sorting Out Issues with Hive Transactional (ACID) Tables

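Contents: Problem description · Cause analysis · What is a transactional table · Concept · Differences between transactional tables and ordinary managed tables · Related configuration · Suitable scenarios for transactional tables · Caveats · Design principles and implementation · File management format · Reference blogs. Problem description: At work I needed to read data from Hive with PySpark. The metastore was reachable and external tables could be read, but reading some managed (internal) tables failed with the error: AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveExcept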

- Cloud Native: Microservices, CI/CD, SaaS, PaaS, IaaS

青秋.

cloud native, docker, cloud native, microservices, kubernetes, serverless, service_mesh, ci/cd

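Previous posts: A Light Introduction to React and JSX (CSDN blog); Understand the Big Data Stream Processing Engine Flink in One Article [10,000-word deep dive, the most complete ever] (CSDN blog); Get Started with the Big Data Near-Streaming Engine Spark in One Article [10,000-word deep dive, latest on the web] (CSDN blog). Contents: 1. Cloud-native concepts and characteristics 2. Common cloud models 3. Architecture patterns for cloud services 3.1 IaaS (Infrastructure-as-a-Service) 3.2 PaaS (Platform-as-a-Servi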

- Spark Runtime Architecture

EmoGP

Spark, spark architecture, big data

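At its core, the Spark framework is a compute engine, and overall it uses a standard master-slave architecture. The figure shows the basic structure of Spark at execution time: the Driver is the master and schedules jobs and tasks across the whole cluster, while the Executors are the slaves that actually run the tasks. As the figure shows, Spark has two core components: Driver: the Spark driver node, which runs the main method of the Spark application and is respon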

- Spark Configuration Options

zhixingheyi_tian

big data, spark, SparkConf, spark, jvm, java

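```shell
/bin/spark-shell --master yarn --deploy-mode client
/bin/spark-shell --master yarn --deploy-mode cluster
```

Note: Spark shells support only client mode; `--deploy-mode cluster` is rejected for `spark-shell` and is meant for applications launched with `spark-submit`.

There are two deploy modes that can be used to launch Spark applications on YARN. In cluster mode, the Spark driver runs inside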

- Spark RDDs and Performance Tuning

Aurora_NeAr

spark, wpf, c#

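RDD Programming. RDD core architecture and characteristics. Partitions: data is split into multiple partitions; each partition is processed independently on a cluster node; the partition is the basic unit of parallel computation. Compute function: the same transformation function is applied to every partition, and execution is lazy. Dependencies: a narrow dependency maps each parent partition to at most one child partition (map, filter); a wide dependency maps one parent partition to multiple child partitions (groupByKey, and join when the inputs are not co-partitioned).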

- Apache Iceberg Data Lake Fundamentals

Aurora_NeAr

apache

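Introducing Apache Iceberg. The evolution and challenges of data lakes. Shortcomings of traditional data lakes (Hive table format): Partition lock-in: queries must explicitly specify the partition column (e.g. `WHERE dt='2025-07-01'`). No atomicity: concurrent writes cause overwritten or partially visible data. Inefficient metadata: LIST operations scan every partition directory (costly on cloud storage). Iceberg's goals: decouple the compute engine from the storage format (supporting Spark/Flink/Trino, etc.); provide ACID transactions, schema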

- Big Data Technology: Flink

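Chapter 1 Flink Overview: 1.1 What Is Flink; 1.2 Flink Features; 1.3 Flink vs. Spark Streaming

Table: Comparison of Flink and Spark Streaming

| Aspect | Flink | Spark Streaming |
|---|---|---|
| Computation model | Stream processing | Micro-batch processing |
| Time semantics | Event time, processing time | Processing time |
| Windows | Many, flexible | Few, inflexible (window length must be an integer multiple of the batch interval) |
| State | Yes | No |
| Streaming SQL | Yes | No |

1.4 Flink Use Cases; 1.5 Flink's Layered APIs. Chapter 2 Getting Started with Flink: 2.1 Creating a Project. When preparing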

- The Most Complete Introduction to Hadoop's Core Components

Cachel wood

big data development, hadoop, big data, distributed, spark, databases, computer networking

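Contents: I. Hadoop core components: 1. HDFS (Hadoop Distributed File System) 2. YARN (Yet Another Resource Negotiator) 3. MapReduce. II. Data storage and management: 1. HBase 2. Hive 3. HCatalog 4. Phoenix. III. Data processing and computation: 1. Spark 2. Flink 3. Tez 4. Storm 5. Presto 6. Impala. IV. Resource scheduling and cluster manage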

- A Learning Path for Big Data Analytics (Not Definitive, for Reference Only)

水云桐程序员

learning, big data, data analysis, learning methods

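Stage 1: Building the foundation (1-3 months). 1. Programming languages: Python: master basic syntax, data structures, control flow, functions, object-oriented programming, and common libraries (NumPy, Pandas). SQL: become fluent in SELECT statements (filtering, sorting, grouping, aggregation, joins) and DDL/DML basics, and understand relational database concepts (tables, primary keys, foreign keys, indexes); MySQL or PostgreSQL is a good starting point. Java/Scala: an advantage when you want to understand frameworks such as Hadoop/Spark in depth. Beginners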

- Frequently Asked Big Data Development Interview Questions: Spark vs. MapReduce Explained


- Processing User Behavior Data from Kafka with Spark and Writing It to Hive

月光一族吖

spark, kafka, hive

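Detailed steps for deploying Hadoop (Hadoop 3.4.1) and Hive (Hive 3.1.2) on CentOS. This guide targets a single-machine installation (pseudo-distributed mode); to build a real multi-node cluster, you will also need to adjust network access between nodes, passwordless SSH login, and configuration synchronization. Note: this guide assumes you have root or sudo privileges and that the system is connected to the Internet (to download the installation packages). The version numbers in the steps can be changed as needed. I. Environment preparation: Update system softw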

- The VariantType Type in Spark 4.0 and Its Internal Storage

鸿乃江边鸟

big data, SQL, spark, sparksql, big data

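Background: Based on Spark 4.0, this article summarizes Spark's VariantType, which stores formatted JSON data in as few bytes as possible. Analysis: this part mainly covers how a Variant is stored, starting from the VariantBuilder.buildJson method (which stores the corresponding JSON data as a VariantType): `public static Variant parseJson(JsonParser parser, boolean allowDuplic`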

- How Should You Study to Better Understand the Connections Between the AI Engineering Technology Major and Other IT Majors?

人工智能教学实践

python, programming practice, artificial intelligence, learning, artificial intelligence

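To understand in depth how the AI engineering technology major relates to other IT majors, you need to step outside a single-major learning framework and build a systematic picture along the path "theoretical foundation, connecting through practice, interdisciplinary integration". Below is a staged, actionable learning method: I. Build a theoretical framework of "cross-major connections". Draw a knowledge-connection map. How: use XMind or Notion to draw a mind map with AI at the center, radiating out to the core technical nodes of related majors. For example: AI (machine learning) ├─ Data support: big data technologies (Hadoop/Spark) + data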

- Spark from Beginner to Proficient (Part 2)

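This article introduces RDD programming in Spark, with hands-on exercises to strengthen your understanding and help you get started quickly. Outline: the material has 8 parts: creating RDDs; common Action operations; common Transformation operations; common operations on Pair RDDs; caching; shared variables; partitioning; hands-on programming. Creating RDDs: there are two ways to create an RDD: `textFile` loads data from the local or cluster file system, and `parallelize` turns a data structure in the Driver into a parallelized RDD. Example: `"""te`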

- An In-Depth Look at Kafka Ecosystem Integration: The Core Hub of a Modern Data Architecture

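Introduction: In today's data-driven era, Apache Kafka has become a core component of enterprise data architectures. This article explores integration options between Kafka and mainstream technology stacks, helping architects and developers build efficient, scalable, modern data processing platforms. Contents: An In-Depth Look at Kafka Ecosystem Integration: The Core Hub of a Modern Data Architecture; I. Deep integration of Kafka with stream processing engines; 1.1 Kafka + Apache Spark: unified batch and stream processing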

- Spark on Docker: A Guide to Setting Up a Containerized Big Data Development Environment

AI天才研究院

ChatGPT in practice, ChatGPT, AI large-model applications: getting started, practice and advanced topics, big data, spark, docker, ai

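Keywords: Spark, Docker, containerization, big data development, distributed computing, development environment setup, container orchestration. Abstract: This article systematically explains how to use Docker to deploy a containerized Spark development environment, covering the complete process from basic concepts to hands-on deployment. It first analyzes the core principles of the Spark distributed computing framework and of Docker container technology and the advantages of combining them, then demonstrates in detail how to set up a single-node development environment and a multi-node cluster environment, including Docker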

- SeaTunnel Community Monthly Report (May-June): New Features, a Bug Sweep, and Who Is the Merge Star?

SeaTunnel

bug, SeaTunnel, open source, data integration, big data

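In May and June, the SeaTunnel community saw a burst of updates: version 2.3.11 was officially released, adding connector capabilities such as Databend, Elasticsearch vectors, HTTP batch writes, and ClickHouse multi-table writes, which broadly improve the flexibility of data synchronization. In addition, nearly 100 fix and optimization PRs were merged, covering Spark engine parallelism fixes, improved Paimon precision compatibility, a better exactly-once default for Mongo-CDC, completed Oracle DDL type support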

- Spark from Beginner to Proficient (Part 3)

小新学习屋

data analysis, spark, big data, distributed

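This article introduces Spark's DataFrame and Spark SQL, with hands-on Spark SQL exercises to strengthen your understanding and help you get started quickly. Outline: the material has 7 parts: comparing RDDs with DataFrame and Spark SQL; creating DataFrames; saving a DataFrame to files; the DataFrame API; SQL interaction with DataFrames; hands-on Spark SQL; references. Comparing RDDs with DataFrame and Spark SQL: RDD vs. Data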

- Big Data Cluster Architecture: Hadoop Cluster, HBase Cluster, ZooKeeper, Kafka, Spark, Flink, Doris, dataeas (Part 2)

争取不加班!

hadoop, hbase, zookeeper, big data, operations

ZooKeeper single-node deployment:

```shell
wget -c https://dlcdn.apache.org/zookeeper/zookeeper-3.8.4/apache-zookeeper-3.8.4-bin.tar.gz  # download URL
tar xf apache-zookeeper-3.8.4-bin.tar.gz -C /data/ && mv /data/apache-zookeeper-3.8.4-bin/ /data/zoo
```

- Capabilities and Use Cases of the Three Big Data Processing Frameworks: Hadoop, Spark, and Flink

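I. Comparison of technical capabilities and use cases

| Product | Capabilities | Use cases |
|---|---|---|
| Hadoop | MapReduce-based batch processing framework; HDFS distributed storage; strong fault tolerance, suited to offline analysis; job scheduling via YARN | Offline log analysis; data warehouse storage; T+1 report analysis; massive data processing |
| Spark | In-memory computing, fast; supports batch and stream processing (Structured Streaming); supports SQL, ML, graph computation, etc.; supports multiple languages (Scala, Java, Python) | Near-real-time proc |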


- Spark Data Processing Exercises: Bonus Edition (Part 1)

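I. Single-choice questions (23 questions, 100 points). 1. (Single choice) In which file should Maven dependencies be added? A. pom.xml B. log4j.properties C. src/main/scala.resource D. src/test/scala.resource. Correct answer: A: pom.xml. Maven dependencies should be added to pom.xml, the core configuration file of a Maven project. Explanation: pom.xml (Project Object Mode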

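- Design and Implementation of a Chinese Animation Recommendation and Visualization Platform Based on Django + Spark + Big Data + Web Crawling (source code + thesis + deployment walkthrough, etc.)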

阿勇学长

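big data hands-on project cases, Java premium graduation project examples, Python data visualization project cases, big data, django, spark, Chinese animation recommendation and visualization platform, graduation project, Java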

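About the blogger: ✌ 500K+ followers across platforms, CSDN invited author, blog expert, CSDN Rising Star program mentor, quality creator in the Java field, Blog Star, quality author on Juejin/Huawei Cloud/Alibaba Cloud/InfoQ and other platforms, focused on Java and hands-on student graduation projects, open to exchanges with university teachers, lecturers, and senior peers ✌ Technical scope: SpringBoot, Vue, SSM, HLMT, JSP, PHP, Node.js, Python, web crawlers, data visualization, mini-programs, Android apps, big data, IoT, machine learning, etc.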

- Issues with Writing to Hive Tables from Spark

qq_42265026

spark, hive, big data

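1. When sending a POST request with HttpClient, a large response body triggered the error `socket closed`. This happens because the client itself closed the connection. The root cause was that the `closeableResponse` returned by `httpclient.execute` was passed back as the return value of method a and parsed in method b. Although method b never closes `closeableResponse`, once method a has returned `closeableResponse`, it goes on to call httppost.real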

- Traversing the DOM and Storing It (Storing Each Level's DOM Elements in an Array)

换个号韩国红果果

JavaScript, html

Arrays start at 0!!

```javascript
var a = [], i = 0;
for (var j = 0; j < 30; j++) {
    a[j] = []; // an array of arrays: level i is stored in a[i]
}
function walkDOM(n) {
    do {
        if (n.nodeType !== 3) // skip #text nodes
            a[i].push(n);
        //con
```
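The JavaScript above breaks off inside `walkDOM`, so here is a self-contained sketch of the same idea, written in Java for consistency with the other examples: walk a tree depth-first, skip text nodes (`nodeType` 3, the value the original filters out), and collect each depth's nodes in its own list. The `Node` class and `byLevel` method are hypothetical stand-ins for DOM nodes, not a DOM API. Growing the outer list on demand also removes the fixed 30-level limit of the original pre-allocated array.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class DomLevels {

    static final int TEXT_NODE = 3; // same nodeType value the original code filters out

    /** Hypothetical stand-in for a DOM node: a name, a nodeType and child nodes. */
    static final class Node {
        final String name;
        final int nodeType;
        final List<Node> children;

        Node(String name, int nodeType, Node... children) {
            this.name = name;
            this.nodeType = nodeType;
            this.children = Arrays.asList(children);
        }
    }

    /** Returns a list whose element i holds all non-text nodes at depth i (root = depth 0). */
    static List<List<Node>> byLevel(Node root) {
        List<List<Node>> levels = new ArrayList<>();
        walk(root, 0, levels);
        return levels;
    }

    private static void walk(Node n, int depth, List<List<Node>> levels) {
        if (n.nodeType == TEXT_NODE) {
            return; // text nodes have no children in the DOM, so nothing below is lost
        }
        while (levels.size() <= depth) {
            levels.add(new ArrayList<>()); // grow on demand instead of pre-allocating 30 levels
        }
        levels.get(depth).add(n);
        for (Node child : n.children) {
            walk(child, depth + 1, levels);
        }
    }

    public static void main(String[] args) {
        Node tree = new Node("html", 1,
                new Node("head", 1, new Node("title", 1, new Node("#text", TEXT_NODE))),
                new Node("body", 1, new Node("p", 1), new Node("#text", TEXT_NODE)));
        List<List<Node>> levels = byLevel(tree);
        for (int i = 0; i < levels.size(); i++) {
            StringBuilder line = new StringBuilder("level " + i + ":");
            for (Node n : levels.get(i)) {
                line.append(' ').append(n.name);
            }
            System.out.println(line);
        }
    }
}
```

Passing the depth as a parameter replaces the shared counter `i` of the original, so there is no global state to increment and decrement around each recursive call.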

- Android + jQuery Mobile Learning Series (9): Summary and Code Sharing

白糖_

JQuery Mobile


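After more than a month of learning by doing, I picked up the basics of web-based Android development. In practice, the project used few native Android APIs; most of the work was HTML/JavaScript/CSS.

In my opinion, WebView-based jQuery Mobile development has the following advantages:

1. It suits developers coming from Java Web very well: anyone who knows HTML development can get productive right away.

2. jquerym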

- Impala Reference Materials

dayutianfei

impala

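A collection of useful Impala resources

1. Getting-started materials

>> Chinese translation of the official documentation:

http://my.oschina.net/weiqingbin/blog?catalog=423691

2. Practical advanced topics

>> Code & architecture analysis:

Impala/Hive: Current State and Future Outlook: http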

- A New Look at the Initialization Order of Static and Non-Static Variables in Java

周凡杨

java, static, non-static, order

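Today a colleague and I argued about the initialization order of static and non-static variables (which comes first), so I wanted to write it up. Test code:

```java
import java.util.HashMap;
import java.util.Map;

public class T {
    public static T t = new T();
    private Map map = new HashMap();
    public T(){
        System.out.println("
```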
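The test class above stops before the constructor's output, so here is a separate minimal sketch, using only the JDK, of the behavior that kind of test probes: static initializers run once, in textual order, when the class is initialized, and every `new` runs instance field initializers before the constructor body. Because `instance` is created while the class is still being initialized, its constructor sees `counter` at its default value 0. The class and field names are illustrative, not the original author's.

```java
import java.util.HashMap;
import java.util.Map;

class InitOrder {

    // Static initializers run in textual order when the class is first initialized.
    static InitOrder instance = new InitOrder(); // runs the constructor before `counter` is assigned
    static int counter = 10;                     // not final, so it is not a compile-time constant

    // Instance field initializers run on every `new`, before the constructor body.
    private final Map<String, Integer> map = new HashMap<>();

    final int counterSeenByConstructor;
    final boolean mapReadyInConstructor;

    InitOrder() {
        mapReadyInConstructor = (map != null); // true: field initializers already ran
        counterSeenByConstructor = counter;    // 0 for `instance`, 10 for later objects
    }

    public static void main(String[] args) {
        System.out.println("static instance saw counter = " + instance.counterSeenByConstructor);
        System.out.println("new object saw counter = " + new InitOrder().counterSeenByConstructor);
        System.out.println("map initialized before constructor body: " + instance.mapReadyInConstructor);
    }
}
```

Running `main` prints 0, then 10, then true. Declaring the field as `static final int counter = 10` would change the first result, because a compile-time constant is initialized before the other static initializers and is never observed as 0.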

- Breaking Out of an iframe to Return to the Outer Page

g21121

iframe

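In web development you inevitably end up using iframes, but when a connection times out or the application redirects to a shared page, the timeout page gets displayed inside the iframe. In that case we need to break out of the iframe and go to the shared page.

First, redirect to an intermediate page that checks whether it is running inside an iframe, and call the following code while that page loads:

```html
<script type="text/javascript">
//<!--
function
```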

- Listening to JMS/MQ Queues with Multiple Threads in Java

510888780

java, multithreading

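Background: the message queue holds a very large number of messages to process, and the business logic in the listener's onMessage() method is fairly complex. To consume the queue faster, we can attack the problem from two sides, reading messages faster and processing them faster, and use multithreading for both. The message-processing business logic runs in a thread pool. To read messages faster, we can either 1. attach multiple listeners to one queue, or 2. use one listener that starts multiple listening threads.

For approach 2 above, using one listener that starts multiple thr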
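A minimal sketch of the thread-pool half of this design, assuming a plain `BlockingQueue<String>` stands in for the JMS/MQ queue so that it runs without a broker: the calling thread acts as the single listener and hands each message to a fixed-size `ExecutorService`. In a real JMS consumer the hand-off would happen inside `onMessage()`; `process` is a hypothetical placeholder for the business logic, and `END` is an assumed shutdown marker.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class PooledQueueListener {

    static final String END = "__END__"; // assumed poison pill that stops the listener

    /** Hypothetical business logic: here it just upper-cases the payload. */
    static String process(String message) {
        return message.toUpperCase();
    }

    /** Reads `queue` on the calling thread and processes each message on `workers` pool threads. */
    static List<String> consume(BlockingQueue<String> queue, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        Queue<String> results = new ConcurrentLinkedQueue<>();
        try {
            while (true) {
                String message = queue.take();                      // listener: wait for a message
                if (END.equals(message)) {
                    break;
                }
                pool.execute(() -> results.add(process(message)));  // workers: run the business logic
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();                     // stop listening if interrupted
        } finally {
            pool.shutdown();                                        // accept no new work, finish queued work
            try {
                pool.awaitTermination(30, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new ArrayList<>(results);
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        for (int i = 0; i < 5; i++) {
            queue.add("msg-" + i);
        }
        queue.add(END);
        System.out.println(consume(queue, 3)); // completion order, so it may vary between runs
    }
}
```

Because the listener only enqueues work, reading keeps pace with the queue while the pool size caps how many messages are processed at once; results come back in completion order, not queue order.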

- My First Spring MVC Example

布衣凌宇

spring mvc

Step 1: import the required libraries;

Step 2: configure the web.xml file

```xml
<?xml version="1.0" encoding="UTF-8"?>
<web-app version="2.5"
    xmlns="http://java.sun.com/xml/ns/javaee"
    xmlns:xsi=
```

- My Spring Study Notes 15: Container Extension Points, PropertyOverrideConfigurer

aijuans

Spring3

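PropertyOverrideConfigurer is similar to PropertyPlaceholderConfigurer, but unlike the latter, it allows bean properties to have default values or no values at all. In other words, if the properties file has no entry for a given bean property, the value defined in the context (the XML configuration file) is used; if the properties file does have an entry for it, the value from the properties file replaces the one in the cont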

- Validating XML Against an XSD

antlove

xml, schema, xsd, validation, SchemaFactory

1. XmlValidation.java

```java
package xml.validation;

import java.io.InputStream;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schem
```
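Since the `XmlValidation.java` listing is cut off, here is a self-contained sketch of the same approach using only the JDK's `javax.xml.validation` API (`SchemaFactory`, `Schema`, `Validator`). To keep it runnable it reads the schema and documents from in-memory strings; the sample `person` schema, the class name, and the `isValid` helper are illustrative assumptions, not the original code.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import javax.xml.validation.Validator;
import org.xml.sax.SAXException;

class XsdValidationDemo {

    // Sample schema (an assumption for the demo): <person> with a <name> and an integer <age>.
    static final String XSD =
            "<xs:schema xmlns:xs='http://www.w3.org/2001/XMLSchema'>"
          + "<xs:element name='person'><xs:complexType><xs:sequence>"
          + "<xs:element name='name' type='xs:string'/>"
          + "<xs:element name='age' type='xs:int'/>"
          + "</xs:sequence></xs:complexType></xs:element>"
          + "</xs:schema>";

    /** Returns true if `xml` conforms to `xsd`; prints the first violation otherwise. */
    static boolean isValid(String xsd, String xml) {
        try {
            SchemaFactory factory = SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
            Schema schema = factory.newSchema(new StreamSource(new StringReader(xsd)));
            Validator validator = schema.newValidator();
            validator.validate(new StreamSource(new StringReader(xml))); // throws on the first error
            return true;
        } catch (SAXException e) {
            // Also reached if the schema itself is malformed.
            System.out.println("invalid: " + e.getMessage());
            return false;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(isValid(XSD, "<person><name>Ann</name><age>30</age></person>"));
        System.out.println(isValid(XSD, "<person><name>Ann</name><age>thirty</age></person>"));
    }
}
```

A compiled `Schema` is thread-safe and can be reused, while each `Validator` should be used by one thread at a time; with a file on disk, pass `new StreamSource(file)` instead of a `StringReader`.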

- Text Streams and Character Sets

百合不是茶

using PrintWriter(), charset names, getting aliases

Input and output of text data:

Input: data streams and buffered streams

Output: using PrintWriter() to print formatted output as text

```java
package 文本流;

import java.io.FileNotFound
```
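A short sketch of the topics this post lists, using only `java.io`, `java.nio.file`, and `java.nio.charset`: formatted output through a `PrintWriter` with an explicit character set, buffered input decoded with the same charset, and resolving a charset alias to its canonical name. The temp file, the UTF-8 choice, and the helper names are assumptions for the demo; naming the charset explicitly avoids depending on the platform default encoding.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Locale;

class TextStreams {

    /** Writes two formatted lines in `charset`, reads the first one back, deletes the file. */
    static String roundTrip(Charset charset) {
        try {
            Path file = Files.createTempFile("text-streams", ".txt");
            try {
                // PrintWriter over a buffered writer that encodes with an explicit charset
                try (PrintWriter out = new PrintWriter(Files.newBufferedWriter(file, charset))) {
                    // the escaped characters spell the Chinese word for "text" (non-ASCII on purpose)
                    out.printf(Locale.ROOT, "%s scored %d%n", "\u6587\u672c", 95);
                    out.println("second line");
                }
                // buffered reader that decodes with the same charset
                try (BufferedReader in = Files.newBufferedReader(file, charset)) {
                    return in.readLine();
                }
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Resolves a charset name or alias (for example "UTF8") to its canonical name. */
    static String canonicalName(String nameOrAlias) {
        return Charset.forName(nameOrAlias).name();
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(StandardCharsets.UTF_8));
        System.out.println("canonical name of UTF8: " + canonicalName("UTF8"));
        System.out.println("aliases of UTF-8: " + Charset.forName("UTF-8").aliases());
        System.out.println("default charset: " + Charset.defaultCharset().name());
    }
}
```

Reading the file back with a different charset than the one used for writing is the usual source of garbled Chinese text, which is why both sides take the same `Charset` argument here.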

- iBATIS Fuzzy (LIKE) Queries: Configuring `sqlmap-mapping-**.xml`

bijian1013

ibatis

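Normally, when writing an iBATIS sqlmap-mapping-*.xml file, every parameter passed in is marked by wrapping it in a pair of `#` characters, as shown below:

```xml
<resultMap id="personInfo" class="com.bijian.study.dto.PersonDTO">
    <res
```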

- Common Java JVM Command-Line Tools: the jdb Command (The Java Debugger)

bijian1013

java, jvm, jdb

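jdb is used to debug core files and running Java processes in real time. It provides a rich set of commands to help you debug, and its functionality is very similar to the dbx debugger that ships with Sun Studio, but jdb is designed specifically for Java applications.

These days jdb is rarely used in everyday development, because modern IDEs wrap this functionality for us, for example when using ECLI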

- [Spring Framework 2] Common Spring Annotations: Component, Repository, Service, and Controller

bit1129

controller

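The first part on common Spring annotations, [Spring Framework 1] Common Spring Annotations: Autowired and Resource (http://bit1129.iteye.com/blog/2114084), introduced the Autowired and Resource annotations, which inject dependencies automatically by name or by type. This simplifies writing the dependency-injection part of the XML configuration, but the UserDao and UserService bea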

- CXF wsdl2java: Generated Code Fails at super() with a Constructor Mismatch

bitray

super

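Because I had not worked much with CXF for SOAP before, this confused me for a while, and I only solved it after looking things up. The cause is usually an incompatibility between the JAX-WS 2.2 specification and JDK 6 and later, so the code has to be generated for JAX-WS 2.1 instead. Only a small change is needed:

Our original command:

wsdl2java com.test.xxx -client http://.....

The modified co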

- A Troubleshooting Case: Garbled Chinese Text in the Body of Dynamic Pages

ronin47

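Some of the dynamic pages on our company website originally ran on an Apache + Resin architecture. Because of response-time concerns under high concurrency, we recently began gradually replacing Apache with Nginx. Soon afterwards, however, we found a problem: on any paginated page, the first page displayed correctly (because it had been rendered to static HTML), but after clicking "Next page", the sides of the page and the titles and keywords in the middle were fine, while the body text under each title did not display correctly. Since we had just changed the system, our first reaction was that the newly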

- java-54: Reorder an Array So That Odd Numbers Come Before Even Numbers

bylijinnan

java

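```java
import java.util.Arrays;
import java.util.Random;

import ljn.help.Helper;

public class OddBeforeEven {

    /**
     * Q 54: Reorder an array so that odd numbers come before even numbers.
     * Given an integer array, reorder its elements so that all odd numbers are in the first half of the array and all even numbers are in the second half
```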
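The listing above breaks off inside the Javadoc and depends on a `ljn.help.Helper` class that is not shown, so here is a self-contained sketch of the usual two-pointer solution: one index moves right past odd numbers, the other moves left past even numbers, and each out-of-place pair is swapped. It runs in O(n) time with O(1) extra space but does not preserve the relative order of the numbers; the class name is illustrative, and the original author's version may differ.

```java
import java.util.Arrays;

class OddEvenPartition {

    /** Reorders `a` in place so that every odd number precedes every even number. */
    static void reorder(int[] a) {
        int left = 0;
        int right = a.length - 1;
        while (left < right) {
            while (left < right && isOdd(a[left])) {
                left++;                 // already in the odd half
            }
            while (left < right && !isOdd(a[right])) {
                right--;                // already in the even half
            }
            if (left < right) {         // a[left] is even and a[right] is odd: swap them
                int tmp = a[left];
                a[left] = a[right];
                a[right] = tmp;
            }
        }
    }

    // n % 2 != 0 also covers negative odd numbers, whose remainder is -1.
    static boolean isOdd(int n) {
        return n % 2 != 0;
    }

    public static void main(String[] args) {
        int[] a = {1, 2, 3, 4, 5, 6, 7};
        reorder(a);
        System.out.println(Arrays.toString(a)); // [1, 7, 3, 5, 4, 6, 2]
    }
}
```

If the relative order of odd and even numbers must be kept, a stable variant (for example copying odds and then evens into a new array) trades the O(1) space for that guarantee.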

- Website Architecture Evolution from 100 PV to 100 Million PV

cfyme

website architecture

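A website is like a person: it grows from small to large. Raising a website is like raising a child; different stages call for different methods, but those methods share common principles. Drawing on my own 14 years of working on websites, this article records some lessons learned as architectures evolve. 1: Accumulating experience is indispensable

An architect is not made in a day.

In 1999, I built a personal homepage hosted on my school's virtual hosting and entered it in a homepage contest: a few DREAMWEAVER pages, a few TABLEs for layout, one DB connection, and a few lines of PHP embedded in HTM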

- [Space Age] What Is GIS in the Space Age?

comsci

Gis

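We all know that on a planet, geographic coordinates are relatively fixed, so once we obtain a set of GIS data we can store it on disk and use it for a long time... But note that this experience cannot be carried over into the space age.

The universe is a high-dimensional spacetime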

- The CREATE DATABASE Command Explained

czmmiao

database

Full command:

```sql
CREATE DATABASE mynewdb
    USER SYS IDENTIFIED BY sys_password
    USER SYSTEM IDENTIFIED BY system_password
    LOGFILE GROUP 1 ('/u01/logs/my/redo01a.log','/u02/logs/m
```

- A Few Unpleasant Truths You Have to Admit

datageek

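1. If you're ugly, you should read more.

2. You're unhappy because you can be as lazy as a pig, but you can't be lazy with a pig's clear conscience.

3. If you care too much about what others think, your life becomes a pair of underpants: whatever others fart out, you have to catch.

4. Your main problem is that you buy too many books and read too few, you read very little yet love to overthink, and on top of that you're a damn chatterbox.

5. Three outcomes of fighting a beast: (1) You win: you're more of a beast than the beast. (2) You lose: you're worse than a beast. (3) It's a draw: you're no different from a beast. Conclusion: choosing the right opponent matters.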

6

- 1 14:00 The "syntax error, unexpected T_PAAMAYIM_NEKUDOTAYIM" Error in PHP

dcj3sjt126com

PHP

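Original article: http://www.kafka0102.com/2010/08/281.html

I needed to run some PHP tests on my local machine late today, and the PHP script depended on a pile of libraries whose purpose I didn't really know. As soon as I ran it, it reported an error like this: "Parse error: syntax error, unexpected T_PAAMAYIM_NEKUDOTAYIM in /home/kafka/test/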

- xcode6 Auto layout and size classes

dcj3sjt126com

ios

Official guide

https://developer.apple.com/library/ios/documentation/UserExperience/Conceptual/AutolayoutPG/Introduction/Introduction.html

Using Auto Layout in iOS (Part 1)

http://www.cocoachina.com/ind

- Batch-Executing SQL Statements with PreparedStatement [Same SQL Statement, Different Values]

梦见x光

sql, transactions, batch execution

For example, I have a List that needs to be added to the database. How do I do that with a PreparedStatement?

```java
public void addCustomerByCommit(Connection conn, List<Customer> customerList)
{
    String sql = "insert into customer(id
```
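The method above breaks off after the SQL string, so here is a sketch of how such a batch insert is commonly written with the standard JDBC API: turn off auto-commit, bind each row's values to one `PreparedStatement`, queue it with `addBatch()`, send everything with `executeBatch()`, then commit, rolling back on failure. The `customer(id, name)` columns and the `Customer` class are assumptions, since the original column list is cut off; the method needs a `Connection` from your JDBC driver, so there is no `main`.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

class CustomerBatchInsert {

    /** Hypothetical row type; the original Customer class is not shown. */
    static final class Customer {
        final int id;
        final String name;

        Customer(int id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Assumed columns: the original SQL string is truncated after "customer(id".
    static final String SQL = "insert into customer(id, name) values (?, ?)";

    /** Inserts all customers in one transaction and returns the per-row update counts. */
    static int[] addCustomerByCommit(Connection conn, List<Customer> customerList) throws SQLException {
        boolean previousAutoCommit = conn.getAutoCommit();
        conn.setAutoCommit(false);                  // one transaction for the whole batch
        try (PreparedStatement ps = conn.prepareStatement(SQL)) {
            for (Customer c : customerList) {
                ps.setInt(1, c.id);                 // same SQL, different values
                ps.setString(2, c.name);
                ps.addBatch();                      // queue this row
            }
            int[] counts = ps.executeBatch();       // send all queued rows
            conn.commit();
            return counts;
        } catch (SQLException e) {
            conn.rollback();                        // undo a partially applied batch
            throw e;
        } finally {
            conn.setAutoCommit(previousAutoCommit); // leave the connection as we found it
        }
    }
}
```

For very large lists, calling `executeBatch()` every few thousand rows keeps the driver's buffered batch from growing without bound.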

- Must-Know for Programmers: Common Linux Commands, Part 10 [System-Related]

hanqunfeng

common Linux commands

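I. Linux keyboard shortcuts

Ctrl+C : terminate the current command

Ctrl+S : pause screen output

Ctrl+Q : resume screen output

Ctrl+U : delete all characters before the cursor on the current line

Ctrl+Z : suspend the currently running process

Ctrl+L : clear the terminal screen, equivalent to clear

II. Terminal commands

clear : clear the terminal screen

reset : reset the terminal; use it when the screen output is garbled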

time com

- NGINX

IXHONG

nginx

Compile and install pcre and nginx

conf/vhost/test.conf

```nginx
upstream admin {
    server 127.0.0.1:8080;
}

server {
    listen 80;
```

- Design Patterns: The Factory Pattern

kerryg

design patterns

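The factory method pattern comes in three variants:

1. Simple factory: a factory class creates instances of classes that implement the same interface.

2. Multiple factory methods: an improvement on the simple factory. In the simple factory, if the string passed in is wrong, the object cannot be created correctly; this variant instead provides a separate factory method for each object.

3. Static factory methods: make the methods of the multiple-factory-methods variant above static,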
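A compact sketch of the three variants listed above, assuming a hypothetical `Sender` interface with `MailSender` and `SmsSender` implementations (the original post's classes are not shown): `produce(String)` is the simple factory, `produceMail()` and `produceSms()` are the multiple factory methods, and `mail()` and `sms()` are their static counterparts. Rejecting an unknown string with an exception is a design choice made here; a simple factory could also return `null`.

```java
class SendFactory {

    // 1. Simple factory: one method picks the class from a string, so a typo yields no object.
    Sender produce(String type) {
        switch (type) {
            case "mail": return new MailSender();
            case "sms":  return new SmsSender();
            default:     throw new IllegalArgumentException("unknown sender type: " + type);
        }
    }

    // 2. Multiple factory methods: one method per product, so there is no string to mistype.
    Sender produceMail() { return new MailSender(); }
    Sender produceSms()  { return new SmsSender(); }

    // 3. Static factory methods: same as 2, but callable without creating a factory instance.
    static Sender mail() { return new MailSender(); }
    static Sender sms()  { return new SmsSender(); }

    public static void main(String[] args) {
        SendFactory factory = new SendFactory();
        System.out.println(factory.produce("sms").send("hi"));
        System.out.println(factory.produceMail().send("hi"));
        System.out.println(SendFactory.sms().send("hi"));
    }
}

/** Hypothetical product interface shared by all created objects. */
interface Sender {
    String send(String text);
}

class MailSender implements Sender {
    public String send(String text) { return "mail: " + text; }
}

class SmsSender implements Sender {
    public String send(String text) { return "sms: " + text; }
}
```

Variant 3 is usually the most convenient because callers need no factory object, at the cost of not being able to swap in a different factory implementation.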

- Spring InitializingBean/init-method和DisposableBean/destroy-method

mx_xiehd

java, spring, bean, xml

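1. InitializingBean/init-method

Implementing the org.springframework.beans.factory.InitializingBean interface lets a bean perform initialization work after the BeanFactory has set all of its required properties. InitializingBean specifies just one method, afterPropertiesSet().

Using the InitializingBean interface can usually be avoided (and is discouraged, because it is unnecessary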

- Fixing Garbled Formatting When Pasting into vim on CentOS

qindongliang1922

centos, vim

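Sometimes, when we copy and paste code into an XML file (or any other file) open in vim, the formatting inexplicably gets messed up, and we have to re-indent everything by hand, which is time-consuming and annoying. Is there a way to avoid this? Yes: just configure the indentation behavior, which is very simple. In your home directory, open `~/.vimrc` with vi, add `set pastetoggle=<F9>` to the file, save and quit, log back in,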

- Netty Problems Under Highly Concurrent Requests

tianzhihehe

netty

Multiple threads using the same channel concurrently:

```text
java.nio.BufferOverflowException: null
    at java.nio.HeapByteBuffer.put(HeapByteBuffer.java:183) ~[na:1.7.0_60-ea]
    at java.nio.ByteBuffer.put(ByteBuffer.java:832) ~[na:1.7.0_60-ea]
```

- AvatarNode: One Solution to the Hadoop NameNode Single Point of Failure

wyz2009107220

NameNode

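Our situation

The Hadoop NameNode is a single point of failure, which affects 24/7 operation of the distributed platform. Let me first describe our situation.

Our team manages a 1,200-node cluster (12 PB in total), currently running Hadoop 0.20, with transaction logs written to a shared NFS filer (note: a NetApp NFS filer).

The most common reason we had to interrupt service was patching Hadoop. DataNod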