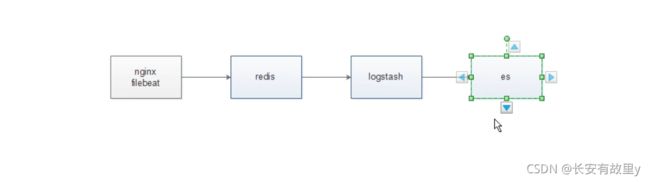

Log collection (nginx + filebeat + redis + logstash + elasticsearch + kibana)

Log collection with Redis as the intermediate store

- 1. Install Redis
- 2. Configure the Nginx log format as JSON
- 3. Filebeat configuration
- 4. Logstash configuration
- 5. Filebeat writes all logs into a single key

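Note: in this architecture Nginx only writes to its local log files. Filebeat pushes those lines into Redis, where they wait until Logstash consumes them. So even if the downstream log-collection components fail, the Nginx service keeps working normally.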
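The decoupling described in the note can be modeled in a few lines. This is a hypothetical, in-memory sketch, not the real components. The function names `nginx_write`, `filebeat_ship` and `logstash_drain` are made up, and a `deque` stands in for the Redis list. While the consumer is down, Nginx keeps logging and events pile up in the buffer. Once the consumer is back, it drains them in order.

```python
from collections import deque

# Hypothetical in-memory model of the pipeline; `deque` stands in for the
# Redis list (Filebeat appends to the tail, Logstash pops from the head).
queue = deque()


def nginx_write(logfile, line):
    # Nginx only appends to its local log file; it never talks to Redis.
    logfile.append(line)


def filebeat_ship(logfile, offset, queue):
    # Push every line written since `offset` onto the queue; return the new offset.
    for line in logfile[offset:]:
        queue.append(line)
    return len(logfile)


def logstash_drain(queue, store):
    # Pop events off the head of the queue into the downstream store.
    while queue:
        store.append(queue.popleft())


logfile, elasticsearch = [], []
offset = 0

# Logstash/Elasticsearch are down: requests are still logged and buffered.
for i in range(3):
    nginx_write(logfile, f"request {i}")
offset = filebeat_ship(logfile, offset, queue)
print(len(logfile), len(queue), len(elasticsearch))  # 3 3 0

# Downstream recovers and drains the backlog in order.
logstash_drain(queue, elasticsearch)
print(len(queue), elasticsearch)  # 0 ['request 0', 'request 1', 'request 2']
```

In the real setup the backlog is held in Redis memory. A long Logstash outage can therefore still fill Redis up, even though Nginx itself is unaffected.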

1. Install Redis

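On CentOS 7 the `redis` package is provided by the EPEL repository, so EPEL must be enabled for the install to succeed.

```bash
yum -y install redis
systemctl start redis
```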

Test:

```
[root@yw7 elk]# redis-cli
127.0.0.1:6379> set k1 v1
OK
127.0.0.1:6379> get k1
"v1"
127.0.0.1:6379>
```

2. Configure Nginx to log in JSON format

```bash
vim /etc/nginx/nginx.conf
```

In the `http {}` block, define a JSON log format and point the access log at it:

```nginx
log_format json '{ "time_local": "$time_local", '
                '"remote_addr": "$remote_addr", '
                '"referer": "$http_referer", '
                '"request": "$request", '
                '"status": $status, '
                '"bytes": $body_bytes_sent, '
                '"agent": "$http_user_agent", '
                '"x_forwarded": "$http_x_forwarded_for", '
                '"up_addr": "$upstream_addr",'
                '"up_host": "$upstream_http_host",'
                '"upstream_time": "$upstream_response_time",'
                '"request_time": "$request_time"'
                ' }';

access_log /var/log/nginx/access.log json;
```

After saving, check the syntax and reload Nginx with `nginx -t && systemctl reload nginx`.
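In this format, `status` and `bytes` are not quoted, so they come out as JSON numbers. `upstream_time` and `request_time` are quoted, so they come out as strings. This is why the Logstash filter in step 4 converts those two fields to `float`.

The Python sketch below parses a made-up line in this format to show the difference. The sample values are illustrative, not captured from a real server. The sketch also shows a caveat. By default Nginx escapes a `"` inside a variable as `\x22`, which is not valid JSON. Since Nginx 1.11.8, `log_format` accepts an `escape=json` parameter for exactly this case.

```python
import json

# A made-up access-log line shaped by the log_format above (illustrative values).
line = (
    '{ "time_local": "20/Feb/2019:10:00:00 +0800", '
    '"remote_addr": "192.168.80.1", '
    '"referer": "-", '
    '"request": "GET /test.html HTTP/1.0", '
    '"status": 200, '
    '"bytes": 612, '
    '"agent": "ApacheBench/2.3", '
    '"x_forwarded": "-", '
    '"up_addr": "-",'
    '"up_host": "-",'
    '"upstream_time": "-",'
    '"request_time": "0.000"'
    ' }'
)

event = json.loads(line)

# Unquoted in log_format -> JSON numbers.
print(type(event["status"]).__name__, type(event["bytes"]).__name__)  # int int
# Quoted in log_format -> strings, hence the later mutate/convert to float.
print(repr(event["request_time"]), repr(event["upstream_time"]))  # '0.000' '-'

# With default escaping, a double quote inside $http_user_agent is written as \x22,
# which JSON does not accept (hypothetical agent string).
bad_line = '{"agent": "curl \\x22test\\x22"}'
try:
    json.loads(bad_line)
    accepted = True
except json.JSONDecodeError:
    accepted = False
print(accepted)  # False
```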

3. Filebeat configuration

Reference (Filebeat Redis output):

https://www.elastic.co/guide/en/beats/filebeat/6.6/redis-output.html

```bash
vim /etc/filebeat/filebeat.yml
```

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  # each line is JSON: decode it and put the fields at the top level of the event
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

setup.template.settings:
  index.number_of_shards: 3

setup.kibana:
  host: "192.168.80.40:5601"

output.redis:
  hosts: ["localhost"]
  # route each event to a Redis list according to its tag
  keys:
    - key: "nginx_access"
      when.contains:
        tags: "access"
    - key: "nginx_error"
      when.contains:
        tags: "error"
```

Restart Filebeat after editing the file (`systemctl restart filebeat`).
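As I read the Filebeat Redis-output documentation linked above, the `keys` section is a list of rules tried in order. Each event goes to the first key whose condition matches.

The sketch below is a hypothetical Python model of that selection. `RULES`, `select_key` and `default_key` are made-up names. The sketch treats `when.contains` as a substring test over the `tags` list. It illustrates the routing idea and does not reproduce Filebeat's implementation.

```python
# Hypothetical model of the output.redis `keys` rules above: each rule pairs a
# Redis list name with the string its `when.contains` condition looks for in `tags`.
RULES = [
    ("nginx_access", "access"),
    ("nginx_error", "error"),
]


def select_key(event, rules=RULES, default_key=None):
    """Return the list an event would be pushed to (first matching rule wins)."""
    tags = event.get("tags", [])
    for key, needle in rules:
        # `contains` is a substring test; on a list field any element may match.
        if any(needle in tag for tag in tags):
            return key
    # No rule matched; `default_key` stands in for the plain `key` setting.
    return default_key


print(select_key({"tags": ["access"]}))  # nginx_access
print(select_key({"tags": ["error"]}))   # nginx_error
print(select_key({"tags": []}))          # None
```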

4. Logstash configuration

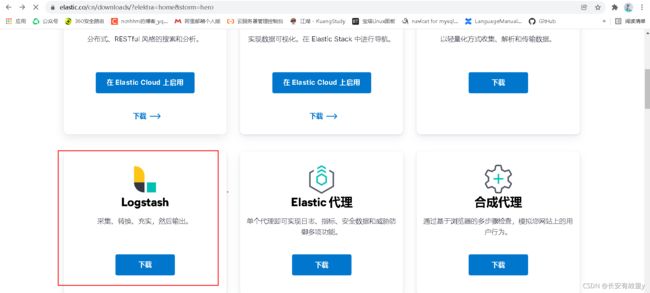

Download (the Logstash version must match your Elasticsearch version):

https://www.elastic.co/cn/downloads/?elektra=home&storm=hero

Install:

```bash
rpm -ivh logstash-6.6.0.rpm
```

Configuration file:

```bash
cat /etc/logstash/conf.d/redis.conf
```

```
input {
  # one redis input per list written by Filebeat
  redis {
    host => "127.0.0.1"
    port => "6379"
    db => "0"
    key => "nginx_access"
    data_type => "list"
  }
  redis {
    host => "127.0.0.1"
    port => "6379"
    db => "0"
    key => "nginx_error"
    data_type => "list"
  }
}

filter {
  mutate {
    # the timing fields arrive as strings; convert them so they can be aggregated
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}

output {
  # print every event to the console (useful while testing)
  stdout {}

  if "access" in [tags] {
    elasticsearch {
      hosts => "http://localhost:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM.dd}"
    }
  }

  if "error" in [tags] {
    elasticsearch {
      hosts => "http://localhost:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM.dd}"
    }
  }
}
```

Start Logstash with this configuration:

```bash
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf
```

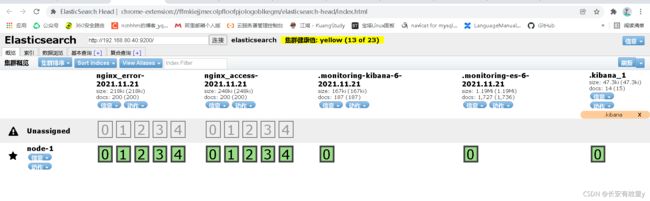

Generate some test traffic with ApacheBench (`ab`, from the `httpd-tools` package):

```bash
ab -c 10 -n 100 192.168.80.40/
ab -c 10 -n 100 192.168.80.40/test.html
```

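You will not find these events in Redis, because Logstash removes them from the lists as soon as it reads them. While Logstash is running, `redis-cli LLEN nginx_access` should therefore stay at or near 0.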
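The filter and output sections can be read as two small steps. First the timing fields are converted. Then the index name is picked from the tags and the event date.

The Python sketch below is a hypothetical model. `convert_floats` and `target_indices` are made-up names, and the event is illustrative. One detail is worth knowing: Logstash renders `%{+yyyy.MM.dd}` from `@timestamp` in UTC. On a server in UTC+8, an event logged shortly after midnight therefore lands in the previous day's index.

```python
from datetime import datetime, timedelta, timezone


def convert_floats(event, fields=("upstream_time", "request_time")):
    # Rough stand-in for mutate/convert => "float". Values that do not parse
    # (e.g. "-") are left untouched here; Logstash's own handling may differ.
    for name in fields:
        if name in event:
            try:
                event[name] = float(event[name])
            except (TypeError, ValueError):
                pass
    return event


def target_indices(event):
    # Mirrors the two `if "..." in [tags]` branches. %{+yyyy.MM.dd} is rendered
    # from @timestamp in UTC, so the date can differ from the local date.
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    tags = event.get("tags", [])
    indices = []
    if "access" in tags:
        indices.append(f"nginx_access-{day}")
    if "error" in tags:
        indices.append(f"nginx_error-{day}")
    return indices


# Illustrative event logged at 01:30 local time (UTC+8) on 20 Feb 2019.
event = {
    "@timestamp": datetime(2019, 2, 20, 1, 30, tzinfo=timezone(timedelta(hours=8))),
    "tags": ["access"],
    "request_time": "0.005",
    "upstream_time": "-",
}
convert_floats(event)
print(event["request_time"], event["upstream_time"])  # 0.005 -
print(target_indices(event))  # ['nginx_access-2019.02.19']
```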

5. Filebeat writes all logs into a single key

Filebeat configuration. The inputs stay the same; only `output.redis` changes, and it now uses a single `key`:

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

setup.template.settings:
  index.number_of_shards: 3

setup.kibana:
  host: "192.168.47.175:5601"

output.redis:
  hosts: ["localhost"]
  # every event goes to one list; the tags still tell access and error apart
  key: "filebeat"
```

Restart Filebeat:

```bash
systemctl restart filebeat
```

Logstash configuration (`/etc/logstash/conf.d/redis.conf`). It now reads from the single `filebeat` list and splits the events by tag:

```
input {
  redis {
    host => "127.0.0.1"
    port => "6379"
    db => "0"
    key => "filebeat"
    data_type => "list"
  }
}

filter {
  mutate {
    # the timing fields arrive as strings; convert them so they can be aggregated
    convert => {
      "upstream_time" => "float"
      "request_time" => "float"
    }
  }
}

output {
  if "access" in [tags] {
    elasticsearch {
      hosts => "http://localhost:9200"
      manage_template => false
      index => "nginx_access-%{+yyyy.MM.dd}"
    }
  }

  if "error" in [tags] {
    elasticsearch {
      hosts => "http://localhost:9200"
      manage_template => false
      index => "nginx_error-%{+yyyy.MM.dd}"
    }
  }
}
```

Start Logstash and generate test traffic again:

```bash
/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf
```

```bash
ab -c 10 -n 100 192.168.80.40/
ab -c 10 -n 100 192.168.80.40/test.html
```
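With a single key, Filebeat no longer separates the events. All of the separation now happens in the Logstash output conditionals.

The hypothetical sketch below shows one consequence of that design. A `deque` stands in for the `filebeat` list, `split_by_tags` is a made-up name, and the messages are illustrative. An event that carries neither tag matches no branch and is never indexed.

```python
from collections import deque

# Hypothetical model: one list (key "filebeat") now carries both kinds of event,
# and only the tags added by the Filebeat inputs tell them apart.
filebeat_list = deque([
    {"message": "access line 1", "tags": ["access"]},
    {"message": "error line 1", "tags": ["error"]},
    {"message": "access line 2", "tags": ["access"]},
    {"message": "untagged line"},  # e.g. from an input without tags
])


def split_by_tags(queue):
    # Mirrors the output conditionals: each event goes to every matching index,
    # and an event matching neither condition is not written anywhere.
    indexed = {"nginx_access": [], "nginx_error": []}
    dropped = []
    while queue:
        event = queue.popleft()
        tags = event.get("tags", [])
        matched = False
        if "access" in tags:
            indexed["nginx_access"].append(event["message"])
            matched = True
        if "error" in tags:
            indexed["nginx_error"].append(event["message"])
            matched = True
        if not matched:
            dropped.append(event["message"])
    return indexed, dropped


indexed, dropped = split_by_tags(filebeat_list)
print(indexed)  # {'nginx_access': ['access line 1', 'access line 2'], 'nginx_error': ['error line 1']}
print(dropped)  # ['untagged line']
```

The trade-off: a single list needs only one Logstash input. Separate lists, as in step 4, let you check the backlog of each log type on its own with `redis-cli LLEN <key>`.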