FFmpeg Memory Model Analysis

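Contents

- 1. Memory model diagram

- 2. Analysis

- 3. Tracing back to the source: source code analysis

- 3.1 When is the AVPacket queue created?

- 4. AVPacket and AVFrame related APIs

- 5. av_read_frame source code analysis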

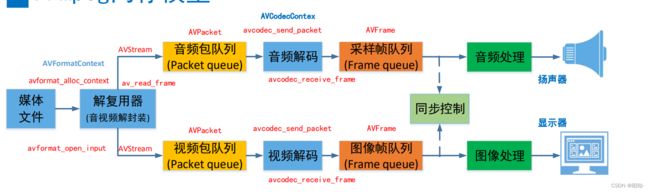

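1. Memory model diagram

2. Analysis

After demuxing, the media data is separated by stream and queued as AVPackets in an AVPacketList, and each call to av_read_frame hands back one AVPacket. Now suppose a new AVPacket needs to refer to the same data as an existing AVPacket: what should the memory model look like?

There are two possible models:

1. Both AVPackets point to the same data area.

2. The data area is copied first, and the copy is given to the new AVPacket.

Because the data area is treated as read-only, the first model is the sensible choice, since it avoids the copy. But it raises a new problem: if the first AVPacket frees the data, the data is gone and the second AVPacket is left pointing at invalid memory. How can this be solved?

Readers familiar with the design of C++ smart pointers (std::shared_ptr) will recognize how to handle this.

We introduce a counter that records how many AVPackets are using the data. Releasing a packet decrements the counter, and the data is actually freed only when the packet being released is the last user, that is, when the counter is 1 at the time of release. In FFmpeg this role is played by the reference-counted buffer behind AVPacket.buf (an AVBufferRef): av_packet_ref adds a reference and av_packet_unref drops one.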
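The counting scheme described above can be sketched in a few lines of plain C. This is a simplified model, not FFmpeg's implementation: the types SharedBuf and ToyPacket and the toy_packet_* functions are hypothetical names invented for this illustration.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical, simplified model of a reference-counted buffer.
 * These names are illustrative only; they are not FFmpeg's types. */
typedef struct SharedBuf {
    unsigned char *data;     /* the shared payload */
    size_t         size;
    int            refcount; /* number of packets pointing at this payload */
} SharedBuf;

typedef struct ToyPacket {
    SharedBuf *buf;          /* NULL means the packet is empty */
} ToyPacket;

/* Create a packet holding a fresh copy of `src`; the count starts at 1. */
static int toy_packet_new(ToyPacket *pkt, const void *src, size_t size)
{
    SharedBuf *b = malloc(sizeof(*b));
    if (!b)
        return -1;
    b->data = malloc(size ? size : 1);
    if (!b->data) {
        free(b);
        return -1;
    }
    memcpy(b->data, src, size);
    b->size     = size;
    b->refcount = 1;
    pkt->buf    = b;
    return 0;
}

/* Model 1 from the text: dst shares src's payload, no bytes are copied. */
static void toy_packet_ref(ToyPacket *dst, const ToyPacket *src)
{
    dst->buf = src->buf;
    dst->buf->refcount++;
}

/* Drop one reference. The payload is freed only when the last reference
 * goes away. Returns 1 if the payload was actually freed, 0 otherwise. */
static int toy_packet_unref(ToyPacket *pkt)
{
    int freed = 0;
    if (pkt->buf && --pkt->buf->refcount == 0) {
        free(pkt->buf->data);
        free(pkt->buf);
        freed = 1;
    }
    pkt->buf = NULL;
    return freed;
}
```

With two packets sharing one buffer, the first toy_packet_unref only lowers the count and the second one frees the memory, so neither packet can be left pointing at freed data.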

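3. Tracing back to the source: source code analysis

3.1 When is the AVPacket queue created?

Let's first look at the flow in the diagram above.

Read through avformat_open_input carefully: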

    int avformat_open_input(AVFormatContext **ps, const char *filename,
                            ff_const59 AVInputFormat *fmt, AVDictionary **options)
    {
        AVFormatContext *s = *ps;
        int i, ret = 0;
        AVDictionary *tmp = NULL;
        ID3v2ExtraMeta *id3v2_extra_meta = NULL;

        if (!s && !(s = avformat_alloc_context()))
            return AVERROR(ENOMEM);
        if (!s->av_class) {
            av_log(NULL, AV_LOG_ERROR, "Input context has not been properly allocated by avformat_alloc_context() and is not NULL either\n");
            return AVERROR(EINVAL);
        }
        if (fmt)
            s->iformat = fmt;

        if (options)
            av_dict_copy(&tmp, *options, 0);

        if (s->pb) // must be before any goto fail
            s->flags |= AVFMT_FLAG_CUSTOM_IO;

        if ((ret = av_opt_set_dict(s, &tmp)) < 0)
            goto fail;

        if (!(s->url = av_strdup(filename ? filename : ""))) {
            ret = AVERROR(ENOMEM);
            goto fail;
        }

    #if FF_API_FORMAT_FILENAME
    FF_DISABLE_DEPRECATION_WARNINGS
        av_strlcpy(s->filename, filename ? filename : "", sizeof(s->filename));
    FF_ENABLE_DEPRECATION_WARNINGS
    #endif
        if ((ret = init_input(s, filename, &tmp)) < 0)
            goto fail;
        s->probe_score = ret;

        if (!s->protocol_whitelist && s->pb && s->pb->protocol_whitelist) {
            s->protocol_whitelist = av_strdup(s->pb->protocol_whitelist);
            if (!s->protocol_whitelist) {
                ret = AVERROR(ENOMEM);
                goto fail;
            }
        }

        if (!s->protocol_blacklist && s->pb && s->pb->protocol_blacklist) {
            s->protocol_blacklist = av_strdup(s->pb->protocol_blacklist);
            if (!s->protocol_blacklist) {
                ret = AVERROR(ENOMEM);
                goto fail;
            }
        }

        if (s->format_whitelist && av_match_list(s->iformat->name, s->format_whitelist, ',') <= 0) {
            av_log(s, AV_LOG_ERROR, "Format not on whitelist \'%s\'\n", s->format_whitelist);
            ret = AVERROR(EINVAL);
            goto fail;
        }

        avio_skip(s->pb, s->skip_initial_bytes);

        /* Check filename in case an image number is expected. */
        if (s->iformat->flags & AVFMT_NEEDNUMBER) {
            if (!av_filename_number_test(filename)) {
                ret = AVERROR(EINVAL);
                goto fail;
            }
        }

        s->duration = s->start_time = AV_NOPTS_VALUE;

        /* Allocate private data. */
        if (s->iformat->priv_data_size > 0) {
            if (!(s->priv_data = av_mallocz(s->iformat->priv_data_size))) {
                ret = AVERROR(ENOMEM);
                goto fail;
            }
            if (s->iformat->priv_class) {
                *(const AVClass **) s->priv_data = s->iformat->priv_class;
                av_opt_set_defaults(s->priv_data);
                if ((ret = av_opt_set_dict(s->priv_data, &tmp)) < 0)
                    goto fail;
            }
        }

        /* e.g. AVFMT_NOFILE formats will not have a AVIOContext */
        if (s->pb)
            ff_id3v2_read_dict(s->pb, &s->internal->id3v2_meta, ID3v2_DEFAULT_MAGIC, &id3v2_extra_meta);

        if (!(s->flags&AVFMT_FLAG_PRIV_OPT) && s->iformat->read_header)
            if ((ret = s->iformat->read_header(s)) < 0)
                goto fail;

        if (!s->metadata) {
            s->metadata = s->internal->id3v2_meta;
            s->internal->id3v2_meta = NULL;
        } else if (s->internal->id3v2_meta) {
            int level = AV_LOG_WARNING;
            if (s->error_recognition & AV_EF_COMPLIANT)
                level = AV_LOG_ERROR;
            av_log(s, level, "Discarding ID3 tags because more suitable tags were found.\n");
            av_dict_free(&s->internal->id3v2_meta);
            if (s->error_recognition & AV_EF_EXPLODE) {
                ret = AVERROR_INVALIDDATA;
                goto close;
            }
        }

        if (id3v2_extra_meta) {
            if (!strcmp(s->iformat->name, "mp3") || !strcmp(s->iformat->name, "aac") ||
                !strcmp(s->iformat->name, "tta") || !strcmp(s->iformat->name, "wav")) {
                if ((ret = ff_id3v2_parse_apic(s, &id3v2_extra_meta)) < 0)
                    goto close;
                if ((ret = ff_id3v2_parse_chapters(s, &id3v2_extra_meta)) < 0)
                    goto close;
                if ((ret = ff_id3v2_parse_priv(s, &id3v2_extra_meta)) < 0)
                    goto close;
            } else
                av_log(s, AV_LOG_DEBUG, "demuxer does not support additional id3 data, skipping\n");
        }
        ff_id3v2_free_extra_meta(&id3v2_extra_meta);

        if ((ret = avformat_queue_attached_pictures(s)) < 0)
            goto close;

        if (!(s->flags&AVFMT_FLAG_PRIV_OPT) && s->pb && !s->internal->data_offset)
            s->internal->data_offset = avio_tell(s->pb);

        s->internal->raw_packet_buffer_remaining_size = RAW_PACKET_BUFFER_SIZE;

        update_stream_avctx(s);

        for (i = 0; i < s->nb_streams; i++)
            s->streams[i]->internal->orig_codec_id = s->streams[i]->codecpar->codec_id;

        if (options) {
            av_dict_free(options);
            *options = tmp;
        }
        *ps = s;
        return 0;

    close:
        if (s->iformat->read_close)
            s->iformat->read_close(s);
    fail:
        ff_id3v2_free_extra_meta(&id3v2_extra_meta);
        av_dict_free(&tmp);
        if (s->pb && !(s->flags & AVFMT_FLAG_CUSTOM_IO))
            avio_closep(&s->pb);
        avformat_free_context(s);
        *ps = NULL;
        return ret;
    }

The key part is:

    if ((ret = avformat_queue_attached_pictures(s)) < 0)
        goto close;

avformat_open_input calls avformat_queue_attached_pictures. So what does that function do?

    int avformat_queue_attached_pictures(AVFormatContext *s)
    {
        int i, ret;
        for (i = 0; i < s->nb_streams; i++)
            if (s->streams[i]->disposition & AV_DISPOSITION_ATTACHED_PIC &&
                s->streams[i]->discard < AVDISCARD_ALL) {
                if (s->streams[i]->attached_pic.size <= 0) {
                    av_log(s, AV_LOG_WARNING,
                        "Attached picture on stream %d has invalid size, "
                        "ignoring\n", i);
                    continue;
                }

                ret = ff_packet_list_put(&s->internal->raw_packet_buffer,
                                         &s->internal->raw_packet_buffer_end,
                                         &s->streams[i]->attached_pic,
                                         FF_PACKETLIST_FLAG_REF_PACKET);
                if (ret < 0)
                    return ret;
            }
        return 0;
    }

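From the code it is clear that this function walks every stream and, for each stream that carries an attached picture (AV_DISPOSITION_ATTACHED_PIC, for example cover art) and is not fully discarded, calls ff_packet_list_put to append a reference to that picture packet to the raw_packet_buffer list, which is an AVPacketList. Packets read during regular demuxing are queued through the same function, as the av_read_frame source in section 5 shows.

Let's take a closer look at ff_packet_list_put: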

    int ff_packet_list_put(AVPacketList **packet_buffer,
                           AVPacketList **plast_pktl,
                           AVPacket      *pkt, int flags)
    {
        AVPacketList *pktl = av_mallocz(sizeof(AVPacketList));
        int ret;

        if (!pktl)
            return AVERROR(ENOMEM);

        if (flags & FF_PACKETLIST_FLAG_REF_PACKET) {
            if ((ret = av_packet_ref(&pktl->pkt, pkt)) < 0) {
                av_free(pktl);
                return ret;
            }
        } else {
            // TODO: Adapt callers in this file so the line below can use
            //       av_packet_move_ref() to effectively move the reference
            //       to the list.
            pktl->pkt = *pkt;
        }

        if (*packet_buffer)
            (*plast_pktl)->next = pktl;
        else
            *packet_buffer = pktl;

        /* Add the packet in the buffered packet list. */
        *plast_pktl = pktl;
        return 0;
    }

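This function is simply a tail insertion into a linked list. Each call allocates a new AVPacketList node. With FF_PACKETLIST_FLAG_REF_PACKET the node takes its own reference to the packet through av_packet_ref; otherwise the AVPacket struct is copied into the node as-is. The AVPacketList struct itself just wraps an AVPacket: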

    typedef struct AVPacketList {
        AVPacket pkt;
        struct AVPacketList *next;
    } AVPacketList;
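To make the tail insertion concrete, here is a minimal standalone sketch of the same head/tail bookkeeping. ToyPkt, ToyPktList, toy_list_put and toy_list_free are hypothetical stand-ins written for this illustration; they are not FFmpeg types or functions, and the packet is reduced to a single field.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-ins for AVPacket / AVPacketList, for illustration only. */
typedef struct ToyPkt {
    int stream_index;
} ToyPkt;

typedef struct ToyPktList {
    ToyPkt pkt;                /* stored by value, like AVPacketList.pkt */
    struct ToyPktList *next;
} ToyPktList;

/* Append a copy of *pkt to the list in O(1) by tracking the tail pointer. */
static int toy_list_put(ToyPktList **head, ToyPktList **tail, const ToyPkt *pkt)
{
    ToyPktList *node = calloc(1, sizeof(*node));
    if (!node)
        return -1;
    node->pkt = *pkt;

    if (*head)
        (*tail)->next = node;  /* non-empty list: link after the current tail */
    else
        *head = node;          /* empty list: the node becomes the head */

    *tail = node;              /* either way, the new node is now the tail */
    return 0;
}

/* Free every node and reset both pointers. */
static void toy_list_free(ToyPktList **head, ToyPktList **tail)
{
    while (*head) {
        ToyPktList *next = (*head)->next;
        free(*head);
        *head = next;
    }
    *tail = NULL;
}
```

Keeping a separate tail pointer is what lets each append run in constant time; without it, every insertion would have to walk the list from the head.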

That answers the question posed in the heading of this section.

4. AVPacket and AVFrame related APIs

5. av_read_frame source code analysis

    int av_read_frame(AVFormatContext *s, AVPacket *pkt)
    {
        const int genpts = s->flags & AVFMT_FLAG_GENPTS;
        int eof = 0;
        int ret;
        AVStream *st;

        if (!genpts) {
            ret = s->internal->packet_buffer
                  ? ff_packet_list_get(&s->internal->packet_buffer,
                                       &s->internal->packet_buffer_end, pkt)
                  : read_frame_internal(s, pkt);
            if (ret < 0)
                return ret;
            goto return_packet;
        }

        for (;;) {
            AVPacketList *pktl = s->internal->packet_buffer;

            if (pktl) {
                AVPacket *next_pkt = &pktl->pkt;

                if (next_pkt->dts != AV_NOPTS_VALUE) {
                    int wrap_bits = s->streams[next_pkt->stream_index]->pts_wrap_bits;
                    // last dts seen for this stream. if any of packets following
                    // current one had no dts, we will set this to AV_NOPTS_VALUE.
                    int64_t last_dts = next_pkt->dts;
                    av_assert2(wrap_bits <= 64);
                    while (pktl && next_pkt->pts == AV_NOPTS_VALUE) {
                        if (pktl->pkt.stream_index == next_pkt->stream_index &&
                            av_compare_mod(next_pkt->dts, pktl->pkt.dts, 2ULL << (wrap_bits - 1)) < 0) {
                            if (av_compare_mod(pktl->pkt.pts, pktl->pkt.dts, 2ULL << (wrap_bits - 1))) {
                                // not B-frame
                                next_pkt->pts = pktl->pkt.dts;
                            }
                            if (last_dts != AV_NOPTS_VALUE) {
                                // Once last dts was set to AV_NOPTS_VALUE, we don't change it.
                                last_dts = pktl->pkt.dts;
                            }
                        }
                        pktl = pktl->next;
                    }
                    if (eof && next_pkt->pts == AV_NOPTS_VALUE && last_dts != AV_NOPTS_VALUE) {
                        // Fixing the last reference frame had none pts issue (For MXF etc).
                        // We only do this when
                        // 1. eof.
                        // 2. we are not able to resolve a pts value for current packet.
                        // 3. the packets for this stream at the end of the files had valid dts.
                        next_pkt->pts = last_dts + next_pkt->duration;
                    }
                    pktl = s->internal->packet_buffer;
                }

                /* read packet from packet buffer, if there is data */
                st = s->streams[next_pkt->stream_index];
                if (!(next_pkt->pts == AV_NOPTS_VALUE && st->discard < AVDISCARD_ALL &&
                      next_pkt->dts != AV_NOPTS_VALUE && !eof)) {
                    ret = ff_packet_list_get(&s->internal->packet_buffer,
                                             &s->internal->packet_buffer_end, pkt);
                    goto return_packet;
                }
            }

            ret = read_frame_internal(s, pkt);
            if (ret < 0) {
                if (pktl && ret != AVERROR(EAGAIN)) {
                    eof = 1;
                    continue;
                } else
                    return ret;
            }

            ret = ff_packet_list_put(&s->internal->packet_buffer,
                                     &s->internal->packet_buffer_end,
                                     pkt, FF_PACKETLIST_FLAG_REF_PACKET);
            av_packet_unref(pkt);
            if (ret < 0)
                return ret;
        }

    return_packet:

        st = s->streams[pkt->stream_index];
        if ((s->iformat->flags & AVFMT_GENERIC_INDEX) && pkt->flags & AV_PKT_FLAG_KEY) {
            ff_reduce_index(s, st->index);
            av_add_index_entry(st, pkt->pos, pkt->dts, 0, 0, AVINDEX_KEYFRAME);
        }

        if (is_relative(pkt->dts))
            pkt->dts -= RELATIVE_TS_BASE;
        if (is_relative(pkt->pts))
            pkt->pts -= RELATIVE_TS_BASE;

        return ret;
    }

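With the background above, it is easy to see that in the common path (without AVFMT_FLAG_GENPTS) av_read_frame calls ff_packet_list_get to take an AVPacket from packet_buffer when that buffer is non-empty, and falls back to read_frame_internal otherwise. Note, however, that ff_packet_list_get does more than simply hand the AVPacket to av_read_frame: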

    int ff_packet_list_get(AVPacketList **pkt_buffer,
                           AVPacketList **pkt_buffer_end,
                           AVPacket      *pkt)
    {
        AVPacketList *pktl;
        av_assert0(*pkt_buffer);
        pktl        = *pkt_buffer;
        *pkt        = pktl->pkt;
        *pkt_buffer = pktl->next;
        if (!pktl->next)
            *pkt_buffer_end = NULL;
        av_freep(&pktl);
        return 0;
    }

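From the source, ff_packet_list_get first uses *pkt = pktl->pkt; to copy the AVPacket struct from the head node into pkt. This is a shallow copy: the fields, including the pointer to the data, are copied, but the payload itself is not duplicated, so the reference held by the node is handed over to the caller. The function then frees pktl, the head node of the AVPacketList. The list therefore loses one AVPacket per call, and the benefit is that nodes are released as packets are consumed instead of piling up, which keeps memory usage down. The payload itself is released later, once the caller calls av_packet_unref on the packet and no other reference remains.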
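The hand-over can be illustrated with a small standalone model. The names ToyPkt, ToyNode, toy_node_new and toy_list_get are hypothetical and exist only for this sketch, and a plain heap pointer stands in for the reference-counted buffer, so this shows the ownership transfer but not the counting.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical simplified types; they are not FFmpeg's. */
typedef struct ToyPkt {
    char *data;              /* heap payload, owned by whoever holds the packet */
} ToyPkt;

typedef struct ToyNode {
    ToyPkt pkt;
    struct ToyNode *next;
} ToyNode;

/* Helper: allocate a node with a private copy of `text`, linked to `next`. */
static ToyNode *toy_node_new(const char *text, ToyNode *next)
{
    ToyNode *n = malloc(sizeof(*n));
    if (!n)
        return NULL;
    n->pkt.data = malloc(strlen(text) + 1);
    if (!n->pkt.data) {
        free(n);
        return NULL;
    }
    strcpy(n->pkt.data, text);
    n->next = next;
    return n;
}

/* Pop the head: shallow-copy the packet struct to the caller, then free
 * only the node. The payload pointer now belongs to the caller. */
static void toy_list_get(ToyNode **head, ToyNode **tail, ToyPkt *out)
{
    ToyNode *n = *head;
    assert(n);
    *out  = n->pkt;          /* copies the pointer, not the bytes */
    *head = n->next;
    if (!n->next)
        *tail = NULL;        /* the list became empty */
    free(n);                 /* node is gone; out->data is still valid */
}
```

After toy_list_get returns, the caller must eventually free out->data itself, which mirrors the way a packet returned by av_read_frame has to be released with av_packet_unref.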

I will cover the AVFrame APIs in detail when I walk through the encoding and decoding API flow. Stay tuned!