Installing k8s on Ubuntu 20.04

I. Environment

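The cluster is deployed on three hosts: one master and two worker nodes. Because resources are limited, each host has a modest configuration of 2 CPU cores, 2 GB RAM and a 40 GB disk.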

| Hostname | IP address |
| --- | --- |
| k8s-master | 192.168.186.111 |
| k8s-node1 | 192.168.186.112 |
| k8s-node2 | 192.168.186.113 |

II. System initialization

1. Configure hosts

On every host, add the IP-to-hostname mapping of all three hosts to /etc/hosts.

# Run the following three commands

echo "192.168.186.111 k8s-master" >> /etc/hosts

echo "192.168.186.112 k8s-node1" >> /etc/hosts

echo "192.168.186.113 k8s-node2" >> /etc/hosts
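Running the three echo commands a second time appends duplicate lines. A minimal idempotent sketch, assuming bash and GNU grep; add_host_entry and the HOSTS_FILE override are hypothetical helpers introduced here, not part of the original steps:

```shell
# Append "IP HOSTNAME" to a hosts file only if that exact line is not already
# present, so rerunning this step does not create duplicates.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try it out.
add_host_entry() {
  local ip=$1 name=$2 file=${HOSTS_FILE:-/etc/hosts}
  # -x: whole-line match, -F: literal text (the dots are not regex wildcards)
  if ! grep -qxF "$ip $name" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Run it as root, for example add_host_entry 192.168.186.111 k8s-master, and repeat for k8s-node1 and k8s-node2.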

Verify the result:

root@k8s-node2:~# cat /etc/hosts

127.0.0.1 localhost

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.186.111 k8s-master

192.168.186.112 k8s-node1

192.168.186.113 k8s-node2

2. Disable the firewall

sudo systemctl status ufw.service

sudo systemctl stop ufw.service

sudo systemctl disable ufw.service

3. Disable swap

systemctl stop swap.target

systemctl disable swap.target

systemctl status swap.target

systemctl stop swap.img.swap

systemctl status swap.img.swap

vim /etc/fstab

# Comment out the line that starts with /swap.img, and change the mount options of the remaining swap entry to sw,noauto, as shown below:

cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

#

/dev/disk/by-uuid/fb8812a4-bbb4-4ab4-a0a6-5dcdc103c2fc none swap sw,noauto 0 0

# / was on /dev/sda4 during curtin installation

/dev/disk/by-uuid/f797ac9a-ece0-4fae-af84-cc3ec41d0419 / ext4 defaults 0 1

# /boot was on /dev/sda2 during curtin installation

/dev/disk/by-uuid/3e71e4e2-538e-4340-9239-eef1675baa0d /boot ext4 defaults 0 1

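#/swap.img none swap sw 0 0

When done, reboot the system and run free -m to check that swap is disabled; it has been disabled successfully when the Swap row shows 0 in every column.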
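The fstab edit and the check can also be scripted. The sketch below assumes GNU sed and takes a slightly different route from the manual edit above: it comments out every active swap line instead of switching one entry to sw,noauto. comment_out_swap_entries and swap_is_off are hypothetical helper names.

```shell
# Comment out every uncommented fstab line whose type field is "swap".
# Assumes GNU sed (-i.bak keeps a backup copy as FILE.bak).
comment_out_swap_entries() {
  local file=${1:-/etc/fstab}
  sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Succeed when no swap device is active: /proc/swaps then holds only
# its header line.
swap_is_off() {
  awk 'END { exit (NR > 1) }' "${1:-/proc/swaps}"
}
```

As root, swapoff -a turns swap off immediately, comment_out_swap_entries /etc/fstab keeps it off after a reboot, and swap_is_off should then succeed.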

4. Install IPVS tools and load kernel modules

sudo apt -y install ipvsadm ipset sysstat conntrack

# Create a working directory

mkdir ~/k8s-init/

# Write the module-loading script

tee ~/k8s-init/ipvs.modules <<'EOF'

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_lc

modprobe -- ip_vs_lblc

modprobe -- ip_vs_lblcr

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- ip_vs_dh

modprobe -- ip_vs_fo

modprobe -- ip_vs_nq

modprobe -- ip_vs_sed

modprobe -- ip_vs_ftp


modprobe -- ip_tables

modprobe -- ip_set

modprobe -- ipt_set

modprobe -- ipt_rpfilter

modprobe -- ipt_REJECT

modprobe -- ipip

modprobe -- xt_set

modprobe -- br_netfilter

modprobe -- nf_conntrack

EOF

# Load the modules now (temporary) and on every login via /etc/profile.d (persistent); run with administrator privileges

chmod 755 ~/k8s-init/ipvs.modules && sudo bash ~/k8s-init/ipvs.modules

sudo cp ~/k8s-init/ipvs.modules /etc/profile.d/ipvs.modules.sh

lsmod | grep -e ip_vs -e nf_conntrack

#ip_vs_ftp 16384 0

#nf_nat 45056 1 ip_vs_ftp

#ip_vs_sed 16384 0

#ip_vs_nq 16384 0

#ip_vs_fo 16384 0

#ip_vs_dh 16384 0

#ip_vs_sh 16384 0

#ip_vs_wrr 16384 0

#ip_vs_rr 16384 0

#ip_vs_lblcr 16384 0

#ip_vs_lblc 16384 0

#ip_vs_lc 16384 0

#ip_vs 155648 22 ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

#nf_conntrack 139264 2 nf_nat,ip_vs

#nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

#nf_defrag_ipv4 16384 1 nf_conntrack

#libcrc32c 16384 5 nf_conntrack,nf_nat,btrfs,raid456,ip_vs
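Scripts in /etc/profile.d only run when a user starts a login shell, so the modules above are not guaranteed to be loaded at boot. One alternative, sketched here on the assumption that systemd-modules-load is in use (it is on Ubuntu 20.04), is to list the module names in a file under /etc/modules-load.d/. modules_load_conf is a hypothetical helper that derives that list from the existing script and drops any duplicate entries:

```shell
# Turn the "modprobe -- NAME" lines of a script into a modules-load.d style
# list (one module per line), removing duplicates but keeping the order.
modules_load_conf() {
  local src=$1 dst=$2
  sed -nE 's/^[[:space:]]*modprobe -- ([[:alnum:]_]+).*/\1/p' "$src" \
    | awk '!seen[$0]++' > "$dst"
}
```

For example: modules_load_conf ~/k8s-init/ipvs.modules /tmp/ipvs.conf && sudo cp /tmp/ipvs.conf /etc/modules-load.d/ipvs.conf. The file name ipvs.conf is arbitrary; any *.conf file in that directory is read at boot.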

5. Tune kernel parameters

# 1. Kernel parameter tuning

mkdir -p ~/k8s-init/
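For reference, a minimal sketch of such a parameter file, assuming only the three settings that the Kubernetes container-runtime prerequisites list (bridged traffic visible to iptables, and IPv4 forwarding). write_k8s_sysctl is a hypothetical helper, and this key set is an assumption rather than the author's full parameter list:

```shell
# Write a minimal sysctl file for Kubernetes networking.
# Assumption: only these three keys are needed; extend as required.
write_k8s_sysctl() {
  local file=${1:-$HOME/k8s-init/kubernetes-sysctl.conf}
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}
```

To apply it: write_k8s_sysctl && sudo cp ~/k8s-init/kubernetes-sysctl.conf /etc/sysctl.d/99-kubernetes.conf && sudo sysctl --system (the target file name is arbitrary). The two bridge keys only exist once the br_netfilter module is loaded, which step 4 takes care of.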

cat > ~/k8s-init/kubernetes-sysctl.conf <

6. Configure rsyslogd and systemd-journald logging

sudo mkdir -pv /var/log/journal/ /etc/systemd/journald.conf.d/

sudo tee /etc/systemd/journald.conf.d/99-prophet.conf <<'EOF'

[Journal]

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Use at most 10G of disk space

SystemMaxUse=10G

# Maximum size of a single journal file: 100M

SystemMaxFileSize=100M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

cp /etc/systemd/journald.conf.d/99-prophet.conf ~/k8s-init/journald-99-prophet.conf

sudo systemctl restart systemd-journald

III. Install Docker

# 1. Remove old versions

sudo apt-get remove docker docker-engine docker.io containerd runc

# 2. Update the apt package index and install packages that allow apt to use a repository over HTTPS

sudo apt-get update && sudo apt-get install -y \

apt-transport-https \

ca-certificates \

curl \

gnupg-agent \

software-properties-common

# 3. Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

# 4. Verify the key by searching for the last 8 characters of its fingerprint

sudo apt-key fingerprint 0EBFCD88

# 5. Set up the stable repository

sudo add-apt-repository \

"deb [arch=amd64] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) \

stable"

# 6. Install Docker Engine (choose one of the two install commands)

sudo apt-get update

# Either the latest version:

sudo apt-get install -y docker-ce docker-ce-cli containerd.io

# or a specific version (19.03.15 here):

sudo apt-get install -y docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal containerd.io

# 7. To install a specific version of Docker Engine, list the versions available in the repo

# $apt-cache madison docker-ce

# docker-ce | 5:20.10.2~3-0~ubuntu-focal | https://download.docker.com/linux/ubuntu focal/stable amd64 Packages

# docker-ce | 5:18.09.1~3-0~ubuntu-xenial | https://download.docker.com/linux/ubuntu xenial/stable amd64 Packages

# Install a specific version using the version string from the second column, for example 5:18.09.1~3-0~ubuntu-xenial.

# $ sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io
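Copying the version string by hand is error-prone. A small sketch that picks it out of the apt-cache madison output; pick_version is a hypothetical helper, and it assumes the "package | version | source" column layout shown above:

```shell
# Read `apt-cache madison` output on stdin and print the version string
# (second "|"-separated column) of the first entry starting with PREFIX.
pick_version() {
  local prefix=$1
  awk -F'|' -v p="$prefix" '{
    gsub(/ /, "", $2)                          # trim padding around the version
    if (index($2, p) == 1) { print $2; exit }  # first prefix match wins
  }'
}
```

For example:

v=$(apt-cache madison docker-ce | pick_version 5:19.03.15)
sudo apt-get install -y docker-ce="$v" docker-ce-cli="$v" containerd.io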

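# 8. Add the current user to the docker group, then log in again so this non-root user can run docker

sudo gpasswd -a ${USER} docker

# 9. Configure a registry mirror (accelerator)

sudo mkdir -vp /etc/docker/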

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xlx9erfu.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

}

}

EOF
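A syntax error in daemon.json prevents dockerd from starting, and the kubelet and the container runtime must use the same cgroup driver. A sketch of a pre-restart check, assuming python3 is available (Ubuntu 20.04 ships it); check_daemon_json is a hypothetical helper:

```shell
# Check that a Docker daemon.json is valid JSON and selects the systemd
# cgroup driver. Returns non-zero with a message on stderr otherwise.
check_daemon_json() {
  local file=${1:-/etc/docker/daemon.json}
  python3 -m json.tool "$file" > /dev/null \
    || { echo "invalid JSON: $file" >&2; return 1; }
  grep -q 'native.cgroupdriver=systemd' "$file" \
    || { echo "systemd cgroup driver not set in $file" >&2; return 1; }
}
```

After Docker has been restarted, docker info --format '{{.CgroupDriver}}' should print systemd.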

# 10. Enable Docker at boot and start it

sudo systemctl enable --now docker

sudo systemctl restart docker

# 11. Log out and back in for the group change to take effect

exit

IV. Install K8s

1. Install kubeadm

Install kubeadm from the Aliyun (Alibaba Cloud) mirror; see https://developer.aliyun.com/mirror/kubernetes for reference.

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

# Either install the latest kubelet, kubeadm and kubectl (choose one of the two)

sudo apt-get install -y kubelet kubeadm kubectl

# or install a specific version; 1.22.2 is used here (choose one of the two)

sudo apt-get install -y kubelet=1.22.2-00 kubeadm=1.22.2-00 kubectl=1.22.2-00

# Enable kubelet at boot

systemctl enable kubelet.service

# Check the version

kubeadm version

2. Initialize the cluster

Initialize the cluster with kubeadm (run on the master node only):

sudo kubeadm init \

--kubernetes-version=1.22.1 \

--apiserver-advertise-address=192.168.186.111 \

--pod-network-cidr=10.244.0.0/16 \

--image-repository registry.aliyuncs.com/google_containers

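# --kubernetes-version: the k8s version to deploy; defaults to the latest if not set

# --apiserver-advertise-address: the IP address of the master node

# --pod-network-cidr: the Pod network; it must match the network configured in flannel (10.244.0.0/16, flannel's default, in this article)

# --image-repository: the registry to pull the control-plane images from

Initialization is complete once the message "Your Kubernetes control-plane has initialized successfully!" appears.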

Run the following commands to save the k8s config file for kubectl:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

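3. Install flannel (run on the master node only)

# Clone from Gitee, because GitHub may be unreachable due to network issues

git clone --depth 1 https://gitee.com/CaiJinHao/flannel.git

# Install

kubectl apply -f flannel/Documentation/kube-flannel.yml

# Check the Pod status; flannel is installed once its Pods are Running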

kubectl get pod -n kube-system

4. Configure kube-proxy to use IPVS mode

kubectl edit configmap kube-proxy -n kube-system

# Set mode to ipvs (it is empty by default):

kind: KubeProxyConfiguration

metricsBindAddress: ""

mode: "ipvs"

nodePortAddresses: null

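5. Restart the kube-proxy Pod and check the status

# Delete the Pod so it is recreated with the new mode (replace kube-proxy-mtkns with the kube-proxy Pod name in your cluster)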

kubectl delete pod -n kube-system kube-proxy-mtkns
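The same change can be made without an interactive editor. A sketch, assuming the ConfigMap still has mode set to the empty default; set_ipvs_mode is a hypothetical helper that only rewrites a line consisting of mode: "" and passes everything else through:

```shell
# Rewrite an empty `mode: ""` line (any indentation) to `mode: "ipvs"` in
# YAML read from stdin; keys such as detectLocalMode are left untouched.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: ""[[:space:]]*$/\1mode: "ipvs"/'
}
```

For example:

kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
kubectl -n kube-system rollout restart daemonset kube-proxy

The rollout restart recreates every kube-proxy Pod, so individual Pod names do not need to be looked up.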

# Check the status

ipvsadm -Ln

6. Add nodes

# Run this command on each worker node to join it to the cluster; the exact command is printed at the end of your own cluster's kubeadm init output

kubeadm join 192.168.186.111:6443 --token hhwuqb.cs92xded67e0cj8v \

--discovery-token-ca-cert-hash sha256:6f318d778732870d8b9fdaacf8522c47fe28a1d441da0db90f14535484f5434c
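The token in this command expires (after 24 hours by default), but the hash can always be recomputed on the master from the cluster CA certificate. The function below wraps the openssl pipeline given in the kubeadm join documentation; ca_cert_hash is a hypothetical name, and the CA is assumed to be an RSA key at the kubeadm default path /etc/kubernetes/pki/ca.crt:

```shell
# Print the SHA-256 hash of the CA certificate's public key (DER-encoded),
# which is the value expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Pass the result as --discovery-token-ca-cert-hash sha256:<hash>. Alternatively, kubeadm token create --print-join-command on the master prints a complete join command with a fresh token.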

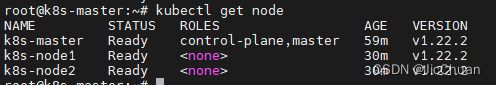

Once this is done, wait a few minutes and run kubectl get node to check the cluster status; the setup has succeeded when all nodes are Ready.
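To script this check, a sketch that reads the output of kubectl get nodes --no-headers, assuming STATUS is the second column; all_nodes_ready is a hypothetical helper:

```shell
# Succeed only if at least one node is listed and every node's STATUS starts
# with "Ready" ("Ready,SchedulingDisabled" counts, "NotReady" does not).
all_nodes_ready() {
  awk '{ split($2, s, ","); if (s[1] != "Ready") bad = 1 }
       END { if (NR == 0) exit 1; exit bad + 0 }'
}
```

For example, kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready". The built-in kubectl wait --for=condition=Ready node --all --timeout=300s is an alternative that blocks until the nodes are Ready.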

V. Deploy a service

Deploy nginx (Deployment)

kubectl create deployment nginx --image=nginx:1.17.1

Expose the port (create a Service)

kubectl expose deployment nginx --port=80 --type=NodePort

Check the Deployment and Service

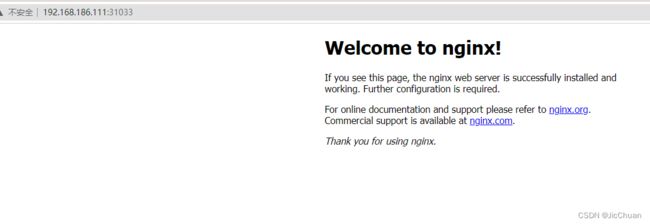

kubectl get deployment,pod,svc

Access the service at the IP of any node in the cluster plus the NodePort to verify that it works.
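The NodePort appears in the PORT(S) column of kubectl get svc in the form 80:31234/TCP (the port number here is only an illustration). A sketch of extracting it; nodeport_from_ports is a hypothetical helper:

```shell
# Print the NodePort from a PORT(S) value such as "80:31234/TCP";
# prints nothing when the value has no NodePort (e.g. "80/TCP").
nodeport_from_ports() {
  printf '%s\n' "$1" | sed -nE 's/^[0-9]+:([0-9]+)\/[A-Z]+$/\1/p'
}
```

Alternatively, read it directly with kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}', then test with curl http://192.168.186.112:PORT, replacing PORT with that value.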

Parts of the configuration in this article are based on: 我在B站学云原生之Kubernetes入门实践Ubuntu系统上安装部署K8S单控制平面环境 (Bilibili)