Kubernetes Notes: Building a 1.22 Cluster (kubeadm Deployment)

K8s Cluster Setup and Deployment 1.22

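- I. Initialize the k8s nodes
  - 1. CentOS 7.4 or later
  - 2. Disable the firewall and SELinux
  - 3. Disable swap
  - 4. Set the hostname and add it to /etc/hosts
  - 5. Pass bridged IPv4 traffic to the iptables chains
  - 6. Time synchronization
  - 7. Install Docker
- II. Deploy the k8s cluster
  - 1. Add the Aliyun kubeadm yum repository (all nodes)
  - 2. Install kubeadm, kubelet, and kubectl (all nodes)
  - 3. Run kubeadm init on the master
  - 4. Start kubelet on all nodes
  - 5. Join the nodes to the k8s cluster
  - 6. Label the worker nodes so that ROLES is recognized
  - 7. Deploy the network add-on (Calico)
  - 8. Test the k8s cluster
  - 9. Deploy the Dashboard
    - Method 1: token login
    - Method 2: kubeconfig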

I. Initialize the k8s nodes

1. CentOS 7.4 or later

yum -y update

2. Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent (takes effect after a reboot)

setenforce 0 # temporary (current boot only)

3. Disable swap

swapoff -a # temporary (current boot only)

vim /etc/fstab # permanent (comment out the swap mount entry)
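Instead of editing /etc/fstab by hand, the swap entry can be commented out non-interactively. A minimal sketch, assuming a standard fstab layout where the third field is the filesystem type; the helper name `disable_swap_in_fstab` is hypothetical, and it takes the file path as a parameter so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Comment out every active fstab entry whose filesystem type (3rd field) is "swap".
# A backup is kept as <file>.bak.
# Usage: disable_swap_in_fstab /etc/fstab   (hypothetical helper)
disable_swap_in_fstab() {
  local fstab="$1"
  cp -p "$fstab" "$fstab.bak"
  # Leave comment lines untouched; prefix active swap entries with '#'
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Matching on the type field rather than on any occurrence of the word "swap" avoids touching unrelated lines that merely mention it.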

4. Set the hostname and add it to /etc/hosts

hostnamectl set-hostname <hostname>
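The heading also mentions /etc/hosts, but no command is given for that part. A minimal sketch that appends an `IP hostname` pair only when the hostname is not listed yet; the helper name is hypothetical, and the mapping in the usage comments (10.7.7.220 for k8s-master, 10.7.7.221 and 10.7.7.222 for k8s-node1 and k8s-node2) is an assumption pieced together from the IPs and node names that appear later in this article:

```shell
#!/usr/bin/env bash
# Append "IP hostname" to a hosts file unless that hostname is already present.
# Usage: add_host_entry /etc/hosts 10.7.7.220 k8s-master   (hypothetical helper)
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # -w matches whole words, so "k8s-node1" does not match "k8s-node10"
  if ! grep -qw -- "$name" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Assumed mapping; run on every node and adjust to your own machines:
#   add_host_entry /etc/hosts 10.7.7.220 k8s-master
#   add_host_entry /etc/hosts 10.7.7.221 k8s-node1
#   add_host_entry /etc/hosts 10.7.7.222 k8s-node2
```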

5. Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system
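The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, and kubeadm's preflight checks also expect net.ipv4.ip_forward to be 1 (the `--ignore-preflight-errors=all` flag used later hides such failures). A sketch that writes both a module-load file and the sysctl file; the `/etc/modules-load.d/k8s.conf` file and the extra `ip_forward` key are additions not in the original steps, and the helper name and its root-directory parameter are hypothetical so the output can be inspected in a scratch directory:

```shell
#!/usr/bin/env bash
# Write the module-load and sysctl files for the step above under a root dir.
# Usage: write_k8s_net_conf /   then: modprobe br_netfilter && sysctl --system
write_k8s_net_conf() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load br_netfilter at every boot so the net.bridge.* keys exist
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```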

6. Time synchronization

yum install ntpdate -y

ntpdate time.windows.com
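ntpdate corrects the clock only once, so nodes can drift apart again over time. A sketch that adds a cron entry to re-sync periodically and skips it if it is already there; the 30-minute interval, the `/usr/sbin/ntpdate` path, and the root crontab file `/var/spool/cron/root` are assumptions for a CentOS 7 host (`crontab -e` does the same interactively), and the helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Add a cron line that re-runs ntpdate every 30 minutes (interval is an assumption).
# Usage: add_ntp_cron /var/spool/cron/root [server]   (hypothetical helper)
add_ntp_cron() {
  local cron_file="$1" server="${2:-time.windows.com}"
  local line="*/30 * * * * /usr/sbin/ntpdate $server >/dev/null 2>&1"
  touch "$cron_file"
  # -F fixed string, -x whole line: never add the same entry twice
  grep -qxF -- "$line" "$cron_file" || printf '%s\n' "$line" >> "$cron_file"
}
```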

7. Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

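yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo # configure the yum repository

yum install -y docker-ce

systemctl enable docker && systemctl start docker # start Docker now and on every boot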

II. Deploy the k8s cluster

1. Add the Aliyun kubeadm yum repository (all nodes)

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2. Install kubeadm, kubelet, and kubectl (all nodes)

Versions 1.18 and 1.19:

yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0

systemctl start kubelet.service && systemctl enable kubelet.service

Version 1.22:

yum install -y kubelet-1.22.2 kubeadm-1.22.2 kubectl-1.22.2

docker info |grep 'Cgroup Driver' |awk '{print $3}' # get Docker's cgroup driver

vim /etc/sysconfig/kubelet # set the kubelet startup arguments (the cgroup driver must match Docker's)

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.5"

systemctl daemon-reload
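The two steps above (read Docker's cgroup driver, then write it into /etc/sysconfig/kubelet) can be combined so the kubelet setting always matches Docker. A minimal sketch: it reads the `docker info` text on stdin, so it can be tried without Docker; the helper name is hypothetical, and the pause image `pause:3.5` is copied from the line above:

```shell
#!/usr/bin/env bash
# Read `docker info` output on stdin and print a matching KUBELET_EXTRA_ARGS line.
# Usage: docker info 2>/dev/null | kubelet_extra_args > /etc/sysconfig/kubelet
kubelet_extra_args() {
  local driver
  # " Cgroup Driver: cgroupfs" -> "cgroupfs"
  driver=$(awk -F': ' '/Cgroup Driver/ { print $2; exit }')
  if [ -z "$driver" ]; then
    echo "could not find 'Cgroup Driver' in input" >&2
    return 1
  fi
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.5"\n' "$driver"
}
```

Redirecting with `>` overwrites the file, which in 1.22 only holds this one variable; run `systemctl daemon-reload` afterwards as above.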

[root@k8s-master ~]# cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

[root@k8s-master ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.5"

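With kubeadm, every component except kubelet runs in a container: its image is pulled and the container is managed by Docker (Docker support through dockershim was removed in 1.24, after which containerd is used instead). Only kubelet is not containerized; it runs as a local service managed by systemctl. In a binary installation, by contrast, all components run as local services managed by systemctl.

In addition, in 1.18 and 1.19 kubelet is an ordinary systemctl-managed service that can be started right after the yum install. In 1.22, the kubelet unit relies on variables that we have to set ourselves; otherwise the variables cannot be resolved and kubelet fails to start.

Comparison of the kubelet systemd unit files in 1.19 and 1.22: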

cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=kubelet: The Kubernetes Node Agent

Documentation=https://kubernetes.io/docs/

Wants=network-online.target

After=network-online.target

[Service]

ExecStart=/usr/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS
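The drop-in pulls its variables from two EnvironmentFile= entries; the leading `-` tells systemd to ignore a missing file, so the variables are simply empty, and /var/lib/kubelet/config.yaml (passed through KUBELET_CONFIG_ARGS) is only created by kubeadm init or kubeadm join. Until then kubelet exits and is restarted every 10 seconds (Restart=always, RestartSec=10). A sketch (hypothetical helper) that lists which files the drop-in refers to and whether each exists, handy when kubelet keeps restarting:

```shell
#!/usr/bin/env bash
# List the files a kubelet systemd drop-in depends on and whether each exists.
# Usage: kubelet_dropin_files /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
kubelet_dropin_files() {
  local dropin="$1" path
  {
    # EnvironmentFile=-/path  (the "-" means "ignore if missing")
    sed -n 's/^EnvironmentFile=-\{0,1\}//p' "$dropin"
    # --config=/path, --kubeconfig=/path, --bootstrap-kubeconfig=/path
    grep -o -- '--[a-z-]*config=[^ "]*' "$dropin" | cut -d= -f2
  } | while read -r path; do
    if [ -e "$path" ]; then echo "present $path"; else echo "missing $path"; fi
  done
}
```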

3. Run kubeadm init on the master

Official reference: https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

kubeadm init \
  --apiserver-advertise-address=10.7.7.220 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.22.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16 \
  --ignore-preflight-errors=all

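- --apiserver-advertise-address: the address the API server advertises, i.e. the address used to reach the cluster

- --image-repository: the default registry k8s.gcr.io is not reachable from mainland China, so the Aliyun mirror registry is specified here

- --kubernetes-version: the K8s version, matching the packages installed above

- --service-cidr: the cluster-internal virtual network for Services, which act as the unified access entry point to Pods

- --pod-network-cidr: the Pod IP range; it must match the one in the CNI network add-on YAML deployed later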
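The same settings can also be kept in a kubeadm configuration file, which makes the init reproducible and lets the kubelet cgroup driver be declared alongside them. A sketch assuming the `kubeadm.k8s.io/v1beta3` config API that ships with kubeadm 1.22; the values are the ones from the command above, `cgroupDriver: cgroupfs` mirrors the /etc/sysconfig/kubelet setting, and the helper name and file name are hypothetical:

```shell
#!/usr/bin/env bash
# Write a kubeadm config equivalent to the `kubeadm init` flags above.
# Usage: write_kubeadm_config kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml
write_kubeadm_config() {
  local out="$1"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.7.7.220        # --apiserver-advertise-address
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.22.0            # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.96.0.0/12         # --service-cidr
  podSubnet: 10.244.0.0/16            # --pod-network-cidr (must match the CNI config)
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: cgroupfs                # must match the Cgroup Driver in `docker info`
EOF
}
```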

4. Start kubelet on all nodes

systemctl start kubelet.service

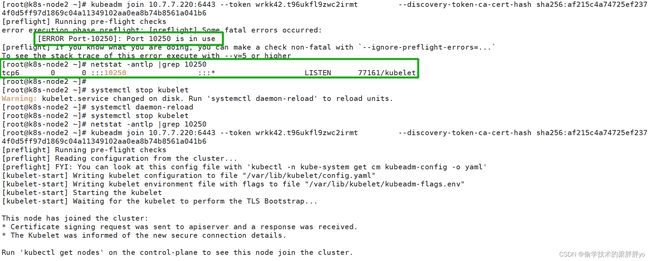

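If kubeadm join later fails with a port-already-in-use error because kubelet is already running, stop kubelet first (systemctl stop kubelet) and then run kubeadm join; kubelet is started again automatically.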

5. Join the nodes to the k8s cluster

Run kubeadm join on each node:

kubeadm join 10.7.7.220:6443 --token wrkk42.t96ukfl9zwc2irmt --discovery-token-ca-cert-hash sha256:af215c4a74725ef2374f0d5ff97d1869c04a11349102aa0ea8b74b8561a041b6

This command is printed on the master when kubeadm init succeeds; copy it to each node and run it as-is.
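The bootstrap token in the join command expires after 24 hours by default; if it has expired or the output was lost, `kubeadm token create --print-join-command` on the master prints a fresh one. Before pasting it on the nodes, a quick format check catches truncated copies. A sketch (hypothetical helper), assuming kubeadm's token format of 6 and 16 lowercase alphanumerics separated by a dot, and a `sha256:` hash of 64 hex characters:

```shell
#!/usr/bin/env bash
# Sanity-check a `kubeadm join` command line copied from the master.
# Usage: check_join_cmd "kubeadm join 10.7.7.220:6443 --token ... --discovery-token-ca-cert-hash sha256:..."
check_join_cmd() {
  local cmd="$1" token hash
  # Extract the value that follows each flag
  token=$(sed -n 's/.*--token \([^ ]*\).*/\1/p' <<<"$cmd")
  hash=$(sed -n 's/.*--discovery-token-ca-cert-hash \([^ ]*\).*/\1/p' <<<"$cmd")
  if ! [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "bad token: '$token'" >&2; return 1
  fi
  if ! [[ "$hash" =~ ^sha256:[a-f0-9]{64}$ ]]; then
    echo "bad CA cert hash: '$hash'" >&2; return 1
  fi
  echo "ok"
}
```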

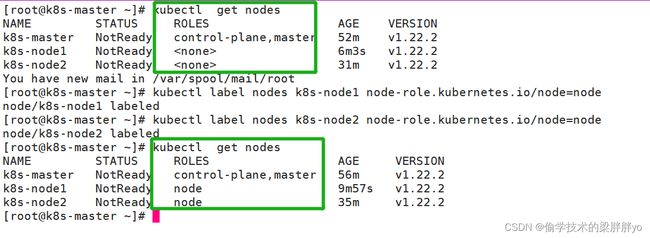

6. Label the worker nodes so that ROLES is recognized

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 52m v1.22.2

k8s-node1 NotReady <none> 6m3s v1.22.2

k8s-node2 NotReady <none> 31m v1.22.2

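At this point the nodes are in STATUS NotReady, and the ROLES column of the worker nodes shows <none>.

STATUS is NotReady because no network plugin, such as flannel or Calico, has been installed in the cluster yet.

ROLES is <none> because the worker nodes carry no role label; add one so that ROLES is recognized:

kubectl label nodes <node-name> node-role.kubernetes.io/node=node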
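With more than a couple of workers, the nodes whose ROLES column is `<none>` can be picked out of `kubectl get nodes` and labeled in one go. A sketch (hypothetical helper) that reads the table on stdin, so it can be checked against the output shown above:

```shell
#!/usr/bin/env bash
# Print the names of nodes whose ROLES column is <none>.
# Input: output of `kubectl get nodes` (the header line is skipped if present).
unlabeled_nodes() {
  awk '$1 != "NAME" && $3 == "<none>" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | unlabeled_nodes |
#     xargs -r -I{} kubectl label nodes {} node-role.kubernetes.io/node=node
```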

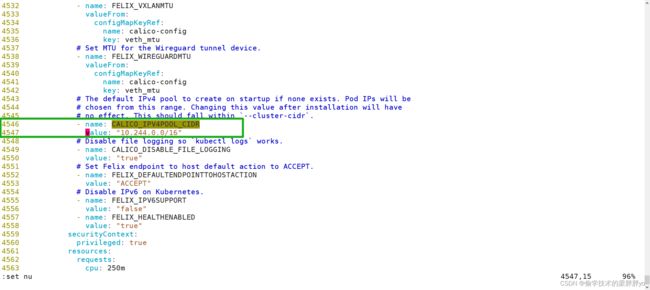

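7. Deploy the network add-on (Calico)

Calico is a pure Layer 3 data center networking solution that supports a wide range of platforms, including Kubernetes and OpenStack.

On every compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that forwards traffic, and each vRouter uses the BGP protocol to propagate the routes of the workloads running on it throughout the Calico network. Calico also implements Kubernetes network policy, which provides ACL functionality.

Official reference: https://docs.projectcalico.org/getting-started/kubernetes/quickstart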

wget https://docs.projectcalico.org/manifests/calico.yaml

vim calico.yaml

Set the Pod network (CALICO_IPV4POOL_CIDR) to the same subnet passed to kubeadm init with --pod-network-cidr (10.244.0.0/16).
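The edit can also be scripted. A sketch assuming the manifest contains the commented-out pair `# - name: CALICO_IPV4POOL_CIDR` / `#   value: "192.168.0.0/16"`, as recent calico.yaml releases do; if your copy already has the entry uncommented, edit it by hand instead. The helper name is hypothetical, and GNU sed is assumed for `-i.bak`:

```shell
#!/usr/bin/env bash
# Uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it to the given pod CIDR.
# Keeps the original indentation; a backup is written to <file>.bak.
# Usage: set_calico_pool_cidr calico.yaml 10.244.0.0/16   (hypothetical helper)
set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  sed -i.bak \
    -e 's|^\([[:space:]]*\)#[[:space:]]*- name: CALICO_IPV4POOL_CIDR|\1- name: CALICO_IPV4POOL_CIDR|' \
    -e "/- name: CALICO_IPV4POOL_CIDR/{n;s|^\([[:space:]]*\)#[[:space:]]*value: \".*\"|\1  value: \"$cidr\"|;}" \
    "$manifest"
  grep -A1 'name: CALICO_IPV4POOL_CIDR' "$manifest"   # show the result for review
}
```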

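kubectl apply -f calico.yaml # create the Calico resources

kubectl get pods -n kube-system # check the pods

If pulling the images from external registries fails, you will need to work around it yourself.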

[root@k8s-master ~]# cat calico.yaml |grep image

image: docker.io/calico/cni:v3.24.0

imagePullPolicy: IfNotPresent

image: docker.io/calico/cni:v3.24.0

imagePullPolicy: IfNotPresent

image: docker.io/calico/node:v3.24.0

imagePullPolicy: IfNotPresent

image: docker.io/calico/node:v3.24.0

imagePullPolicy: IfNotPresent

image: docker.io/calico/kube-controllers:v3.24.0

imagePullPolicy: IfNotPresent
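Each image appears twice in the listing above; a de-duplicated list is handier when pre-pulling the images on every node. A sketch (hypothetical helper), assuming image references are written unquoted on `image:` lines as in this calico.yaml:

```shell
#!/usr/bin/env bash
# Print each container image referenced in a manifest once, in first-seen order.
# Usage: manifest_images calico.yaml
manifest_images() {
  # "image:" lines only (not imagePullPolicy); drop repeats with the seen[] idiom
  awk '$1 == "image:" { print $2 }' "$1" | awk '!seen[$0]++'
}

# Pre-pull on each node, for example:
#   for img in $(manifest_images calico.yaml); do docker pull "$img"; done
```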

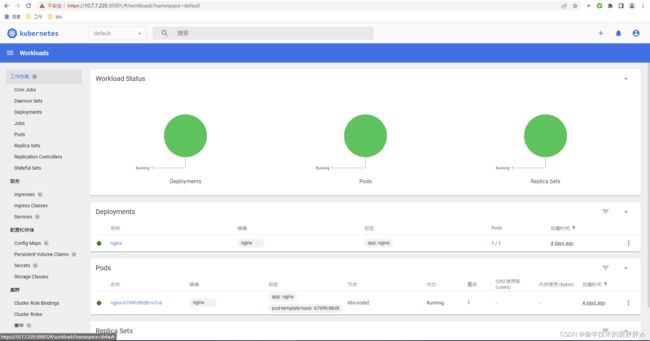

8. Test the k8s cluster

Create a Deployment and a Service from the command line, exposing nginx through a NodePort to test access:

[root@k8s-master release-v3.24.0]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@k8s-master release-v3.24.0]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@k8s-master release-v3.24.0]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-rn7c6 0/1 ContainerCreating 0 18s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6h16m

service/nginx NodePort 10.111.2.36 <none> 80:32207/TCP 7s

[root@k8s-master release-v3.24.0]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-rn7c6 1/1 Running 0 3m57s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6h19m

service/nginx NodePort 10.111.2.36 <none> 80:32207/TCP 3m46s

[root@k8s-master release-v3.24.0]# curl http://10.7.7.222:32207 && curl http://10.7.7.221:32207 && curl http://10.7.7.220:32207

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

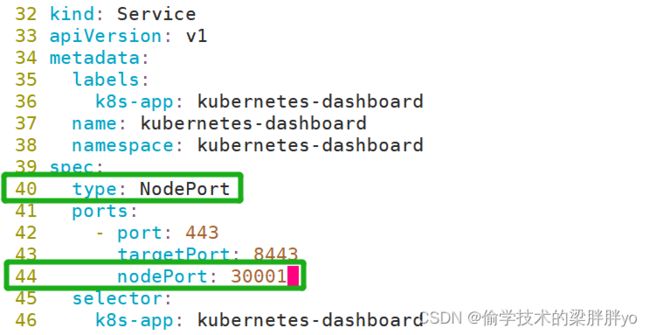

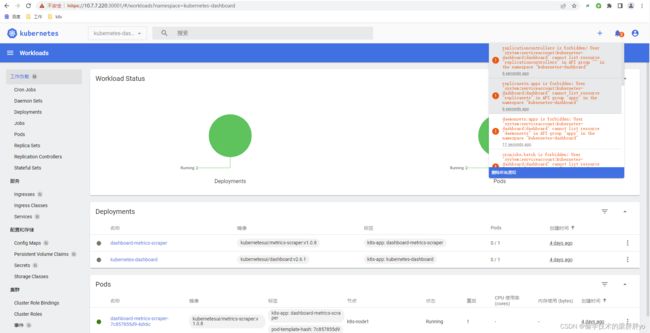
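The NodePort (32207 here) is allocated automatically from the default 30000 to 32767 range, so a test script should look it up rather than hard-code it. A sketch with two hypothetical helpers: `nodeport_from_ports` parses a PORT(S) value such as `80:32207/TCP`, and `check_nodeport` curls the port on every node; the node IPs are the ones used in this article. On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the number directly.

```shell
#!/usr/bin/env bash
# "80:32207/TCP" -> "32207"
nodeport_from_ports() {
  local ports="$1"
  ports="${ports#*:}"    # drop "80:"
  echo "${ports%%/*}"    # drop "/TCP"
}

# Curl the same NodePort on every node and report which ones serve the nginx page.
# Usage: check_nodeport 32207 10.7.7.220 10.7.7.221 10.7.7.222
check_nodeport() {
  local port="$1" ip
  shift
  for ip in "$@"; do
    if curl -s --max-time 5 "http://$ip:$port" | grep -q 'Welcome to nginx!'; then
      echo "OK   $ip:$port"
    else
      echo "FAIL $ip:$port"
    fi
  done
}
```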

9. Deploy the Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.1/aio/deploy/recommended.yaml

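By default the Dashboard is only reachable from inside the cluster, so change its Service in the YAML file to type NodePort to expose it externally.

Here it listens on port 30001, so the Dashboard can be reached on port 30001 of any cluster node (over HTTPS, for example https://10.7.7.220:30001).

vim recommended.yaml # add lines 40 and 44, which set type: NodePort and nodePort: 30001 in the kubernetes-dashboard Service

kubectl apply -f recommended.yaml # create the Dashboard resources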
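Instead of editing the YAML, the Service can also be switched to NodePort with `kubectl patch` after the unmodified manifest has been applied. A sketch (hypothetical helper) that builds the patch and rejects ports outside the default NodePort range of 30000 to 32767; the `443`/`8443` port pair is assumed from the Service in the v2.6.1 manifest, so check it against your copy:

```shell
#!/usr/bin/env bash
# Build a strategic-merge patch exposing the kubernetes-dashboard Service on a fixed NodePort.
# Usage:
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#     -p "$(dashboard_nodeport_patch 30001)"
dashboard_nodeport_patch() {
  local node_port="$1"
  # Default --service-node-port-range is 30000-32767
  if ! [[ "$node_port" =~ ^[0-9]+$ ]] || (( node_port < 30000 || node_port > 32767 )); then
    echo "nodePort $node_port is outside 30000-32767" >&2
    return 1
  fi
  printf '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":%d}]}}\n' "$node_port"
}
```

Service ports are merged by their `port` key, so the patch adds `nodePort` to the existing 443 entry instead of replacing the list.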

Method 1: token login

Create a service account, bind it to the built-in cluster-admin cluster role, and log in to the Dashboard with the token that is printed.

Create the service account

kubectl create serviceaccount dashboard-admin -n kube-system

Grant it permissions

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

Get the service account's token

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-h5nmv

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 24106d77-7428-426c-a2a2-17147d320020

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InR4ZEg2UFpjemREaGsxRFhKTTVDYWhpTTBTY2taNnZ1b0VlNUxzWlJFR1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4taDVubXYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMjQxMDZkNzctNzQyOC00MjZjLWEyYTItMTcxNDdkMzIwMDIwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.MsH0-CXkau6rXVBkKG8piy9WGSW7U8Rw2lTD_3K7UGNZhBFbFArEgr59Khoj3NfJG9aGuDQPnCNCsr-6xtNmNXRRx0BSy0FX7iFGEEErRz6xAcfKPYMSf0f_fTdXFuBENSCIHrRipdm-5x76gnY3y6ifcA-2AORYow8gBhde3_7wlw-4V1i6EBppRHb9DHVEba2uMhKJM4QbgY1yaOCfrE1rEPCFb3mYhlblZq2PI_-z18hw12N0YhRN4aExFTkSqoX8sUQ7vku-NwvtpVpcsaNlNjmGIC4YpIRu7_B5nwqi72Gryqhm10EU-1uJzQRD11pdJ_dGKRM_VQgVRSPz8A
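The token is a JWT: its middle segment is base64url-encoded JSON whose `sub` claim names the service account it belongs to, which is a quick way to confirm you copied the token of dashboard-admin and not of another account. A sketch (hypothetical helper) using only `cut`, `tr`, and `base64`; it only decodes the payload and does not verify the signature:

```shell
#!/usr/bin/env bash
# Decode the payload (2nd dot-separated segment) of a JWT and print the JSON.
# Usage: jwt_payload "$TOKEN" | grep -o '"sub":"[^"]*"'
jwt_payload() {
  local payload
  payload=$(cut -d. -f2 <<<"$1" | tr '_-' '/+')   # base64url -> standard base64
  # Restore the '=' padding that base64url omits
  case $(( ${#payload} % 4 )) in
    2) payload+="==" ;;
    3) payload+="=" ;;
  esac
  base64 -d <<<"$payload"
}
```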

Method 2: kubeconfig

Get the dashboard token (the secret name suffix, h8lcq here, differs per cluster; take it from the output of the first command)

kubectl -n kubernetes-dashboard get secrets

DASH_TOKEN=$(kubectl -n kubernetes-dashboard get secrets kubernetes-dashboard-token-h8lcq -o jsonpath='{.data.token}' |base64 -d)

Set a cluster entry in the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true --server=https://10.7.7.220:6443 --kubeconfig=/root/dashboard-admin.conf

Set a user entry in the kubeconfig file

kubectl config set-credentials kubernetes-dashboard --token="$DASH_TOKEN" --kubeconfig=/root/dashboard-admin.conf

Set a context entry in the kubeconfig file

kubectl config set-context kubernetes-dashboard@kubernetes --cluster=kubernetes --user=kubernetes-dashboard --kubeconfig=/root/dashboard-admin.conf

Set the current context in the kubeconfig file

kubectl config use-context kubernetes-dashboard@kubernetes --kubeconfig=/root/dashboard-admin.conf

Download it to the local machine

sz /root/dashboard-admin.conf
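The four `kubectl config` commands above only write a small YAML file. A sketch (hypothetical helper) that produces an equivalent token-based kubeconfig in one step, which can help when kubectl is not installed where the file is assembled; it embeds the CA certificate base64-encoded, as `--embed-certs=true` does, and assumes GNU `base64` for `-w0`:

```shell
#!/usr/bin/env bash
# Write a kubeconfig that authenticates with a bearer token.
# Usage: write_token_kubeconfig /root/dashboard-admin.conf https://10.7.7.220:6443 \
#          /etc/kubernetes/pki/ca.crt "$TOKEN"     # $TOKEN: the token obtained above
write_token_kubeconfig() {
  local out="$1" server="$2" ca_file="$3" token="$4" ca_data
  ca_data=$(base64 -w0 < "$ca_file")
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: kubernetes
  cluster:
    server: $server
    certificate-authority-data: $ca_data
users:
- name: kubernetes-dashboard
  user:
    token: $token
contexts:
- name: kubernetes-dashboard@kubernetes
  context:
    cluster: kubernetes
    user: kubernetes-dashboard
current-context: kubernetes-dashboard@kubernetes
EOF
  chmod 600 "$out"   # the file contains a credential
}
```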

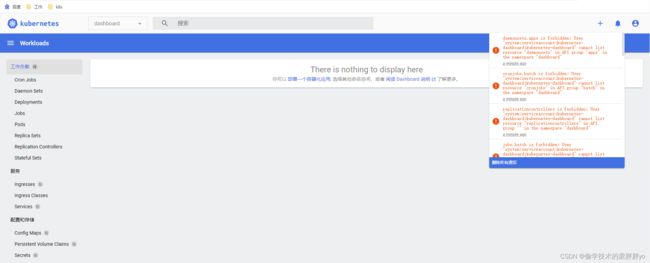

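After logging in, errors report that there is no permission to access the resources. Authorization has to be granted with RBAC, which a later article covers in detail.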

[root@k8s-master ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true --server=https://10.7.7.220:6443 --kubeconfig=dashboard.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master ~]# kubectl config set-credentials dashboard --client-key=/root/ca/dashboard-key.pem --client-certificate=/root/ca/dashboard.pem --embed-certs=true --kubeconfig=dashboard.kubeconfig

[root@k8s-master ~]# kubectl config set-context kubernetes-dashboard@kubernetes --cluster=kubernetes --user=dashboard --kubeconfig=dashboard.kubeconfig

Context "kubernetes-dashboard@kubernetes" modified.

[root@k8s-master ~]# kubectl config use-context kubernetes-dashboard@kubernetes --kubeconfig=dashboard.kubeconfig

Switched to context "kubernetes-dashboard@kubernetes"

[root@k8s-master ~]# cat rbac-dashboard.yaml

---
# ServiceAccount
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kubernetes-dashboard
---
# Role: get, watch, and list on pods in the kubernetes-dashboard namespace
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kubernetes-dashboard
  name: dashboardrole
rules:
- apiGroups: [""] # API group; "" is the core group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]
- apiGroups: ["extensions", "apps"]
  resources: ["deployments", "secrets", "namespaces"] # resources; secrets and namespaces belong to the core group, so in practice this rule only matches deployments
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"] # operations allowed on the resources
---
# RoleBinding: which subjects the role is granted to
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboardrolebind
  namespace: kubernetes-dashboard
roleRef:
  kind: Role
  name: dashboardrole # the role being bound
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: dashboard # the subject being authorized; matches the name used in the certificate
  namespace: kubernetes-dashboard

[root@k8s-master ~]# kubectl describe secrets dashboard-token-fk4vv -n kubernetes-dashboard

Name: dashboard-token-fk4vv

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard

kubernetes.io/service-account.uid: 81c93e7c-f16c-4563-b0a4-e410c985872b

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InR4ZEg2UFpjemREaGsxRFhKTTVDYWhpTTBTY2taNnZ1b0VlNUxzWlJFR1kifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtdG9rZW4tZms0dnYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODFjOTNlN2MtZjE2Yy00NTYzLWIwYTQtZTQxMGM5ODU4NzJiIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZCJ9.AygW0TtmRfq0dVD3dP047nnW2dZ9QnFCG7vFObuROANj0k2B2pGZepw41cEGyOYJ4zbW5FrTGzbqK32LIt0sD_VTzUQhUGO1gtRXlvGWVPiDAeXorDa2z3FzW_dKCGbRNXqYyHvVrnsZ2a82wxgdyHsymX4WjdpePngNO5Eqh-ZQnrVcFr3BzFTHqjLAXQIDOVFSIL3kisN3fm0WRZkMvNb-18W5KCjIzuOudhWN3dCnaRUhDH247RRIoSzRxHLqtj4gdL9QMqYp9RZIhXkPP3V_w-n3GaOyLaygCHXYwkrR9Y60xb60gLN8DhQP5kmOwVjuNrIi_dHEbjC906wA5Q

Put this token into the kubeconfig file.
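To avoid copying the long token by hand, it can be cut out of the `kubectl describe secrets` output and passed to `kubectl config set-credentials`. A sketch (hypothetical helper); the secret name dashboard-token-fk4vv is the one generated in this cluster and will differ in yours:

```shell
#!/usr/bin/env bash
# Extract the token value from `kubectl describe secrets ...` output on stdin.
token_from_describe() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage (names from this article; the secret suffix differs per cluster):
#   TOKEN=$(kubectl describe secrets dashboard-token-fk4vv -n kubernetes-dashboard | token_from_describe)
#   kubectl config set-credentials dashboard --token="$TOKEN" --kubeconfig=dashboard.kubeconfig
```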

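This time the resource information is visible. The remaining errors occur because the serviceaccount behind this token has no access to those resources; grant permissions as needed with RBAC.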