Upgrading Hive 0.12 to Hive 0.13.1

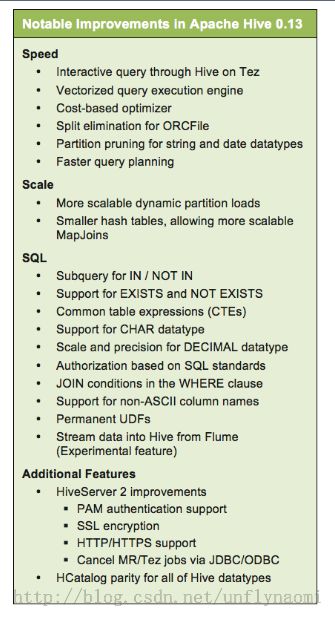

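After installing 0.12, I heard that 0.13.1 adds many new features, including permanent functions, so I decided to upgrade to 0.13 (my metadata is stored in MySQL).

For details on the new features, see: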

http://zh.hortonworks.com/blog/announcing-apache-hive-0-13-completion-stinger-initiative/

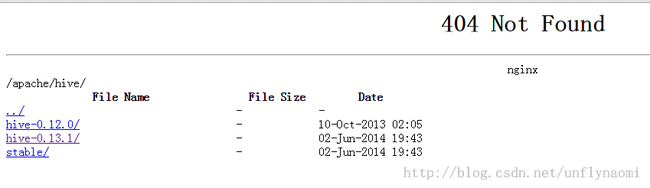

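1. Download the 0.13.1 tarball

Download it from http://mirrors.hust.edu.cn/apache/hive/

The page lists the available releases.

Open hive-0.13.1; it contains two files.

Download apache-hive-0.13.1-bin.tar.gz only. The src.tar.gz file is the source tarball and is not needed.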

2. Extract the tarball

sudo tar xzvf apache-hive-0.13.1-bin.tar.gz -C /opt

I extracted it into /opt.
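A truncated download only shows up as a confusing error later, so it can help to check the archive before unpacking it. Below is a minimal sketch, assuming a POSIX shell with tar and gzip available; the function name extract_hive is made up for illustration and is not part of Hive.

```shell
#!/bin/sh
# extract_hive TARBALL DEST   (hypothetical helper, not part of Hive)
# Checks that the archive exists and is a readable gzip tarball,
# then unpacks it under DEST. Returns non-zero on any failure.
extract_hive() {
    tarball=$1
    dest=$2
    if [ ! -f "$tarball" ]; then
        echo "missing tarball: $tarball" >&2
        return 1
    fi
    # Listing the archive without extracting catches truncated downloads.
    if ! tar -tzf "$tarball" >/dev/null 2>&1; then
        echo "corrupt tarball: $tarball" >&2
        return 1
    fi
    mkdir -p "$dest" && tar -xzf "$tarball" -C "$dest"
}

# Usage (writing to /opt typically needs root, as with the sudo command above):
#   extract_hive apache-hive-0.13.1-bin.tar.gz /opt
```

The listing pass reads the whole archive once more, which is cheap for a tarball of this size.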

3. Run the upgrade script to upgrade the MySQL metastore database

Run the following commands:

cd /opt/apache-hive-0.13.1-bin/scripts/metastore/upgrade/mysql

ls

The directory contains the following files:

001-HIVE-972.mysql.sql hive-schema-0.13.0.mysql.sql

002-HIVE-1068.mysql.sql hive-schema-0.3.0.mysql.sql

003-HIVE-675.mysql.sql hive-schema-0.4.0.mysql.sql

004-HIVE-1364.mysql.sql hive-schema-0.4.1.mysql.sql

005-HIVE-417.mysql.sql hive-schema-0.5.0.mysql.sql

006-HIVE-1823.mysql.sql hive-schema-0.6.0.mysql.sql

007-HIVE-78.mysql.sql hive-schema-0.7.0.mysql.sql

008-HIVE-2246.mysql.sql hive-schema-0.8.0.mysql.sql

009-HIVE-2215.mysql.sql hive-schema-0.9.0.mysql.sql

010-HIVE-3072.mysql.sql hive-txn-schema-0.13.0.mysql.sql

011-HIVE-3649.mysql.sql README

012-HIVE-1362.mysql.sql upgrade-0.10.0-to-0.11.0.mysql.sql

013-HIVE-3255.mysql.sql upgrade-0.11.0-to-0.12.0.mysql.sql

014-HIVE-3764.mysql.sql upgrade-0.12.0-to-0.13.0.mysql.sql

015-HIVE-5700.mysql.sql upgrade-0.5.0-to-0.6.0.mysql.sql

016-HIVE-6386.mysql.sql upgrade-0.6.0-to-0.7.0.mysql.sql

017-HIVE-6458.mysql.sql upgrade-0.7.0-to-0.8.0.mysql.sql

018-HIVE-6757.mysql.sql upgrade-0.8.0-to-0.9.0.mysql.sql

hive-schema-0.10.0.mysql.sql upgrade-0.9.0-to-0.10.0.mysql.sql

hive-schema-0.11.0.mysql.sql upgrade.order.mysql

hive-schema-0.12.0.mysql.sql

Log in to MySQL as the hive user (the user set up for Hive 0.12.0):

mysql -h localhost -u hive -p

Run the upgrade-0.12.0-to-0.13.0.mysql.sql script:

mysql> use hive

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> source upgrade-0.12.0-to-0.13.0.mysql.sql

The output looks like this:

+--------------------------------------------------+

| |

+--------------------------------------------------+

| Upgrading MetaStore schema from 0.12.0 to 0.13.0 |

+--------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

+-----------------------------------------------------------------------+

| |

+-----------------------------------------------------------------------+

| < HIVE-5700 enforce single date format for partition column storage > |

+-----------------------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.22 sec)

Rows matched: 0 Changed: 0 Warnings: 0

+--------------------------------------------+

| |

+--------------------------------------------+

| < HIVE-6386: Add owner filed to database > |

+--------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 1 row affected (0.33 sec)

Records: 1 Duplicates: 0 Warnings: 0

Query OK, 1 row affected (0.16 sec)

Records: 1 Duplicates: 0 Warnings: 0

+---------------------------------------------------------------------------------------------+

| |

+---------------------------------------------------------------------------------------------+

| <HIVE-6458 Add schema upgrade scripts for metastore changes related to permanent functions> |

+---------------------------------------------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.06 sec)

Query OK, 0 rows affected (0.06 sec)

+----------------------------------------------------------------------------------+

| |

+----------------------------------------------------------------------------------+

| <HIVE-6757 Remove deprecated parquet classes from outside of org.apache package> |

+----------------------------------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.04 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.07 sec)

Query OK, 0 rows affected (0.12 sec)

Query OK, 0 rows affected (0.07 sec)

Query OK, 0 rows affected (0.06 sec)

Query OK, 1 row affected (0.05 sec)

Query OK, 0 rows affected (0.06 sec)

Query OK, 0 rows affected (0.15 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.06 sec)

Query OK, 1 row affected (0.05 sec)

Query OK, 0 rows affected (0.07 sec)

Query OK, 0 rows affected (0.06 sec)

Query OK, 1 row affected (0.05 sec)

Query OK, 1 row affected (0.07 sec)

Rows matched: 1 Changed: 1 Warnings: 0

+-----------------------------------------------------------+

| |

+-----------------------------------------------------------+

| Finished upgrading MetaStore schema from 0.12.0 to 0.13.0 |

+-----------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

The upgrade completed successfully.
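The upgrade can also be run non-interactively. The sketch below only works out which upgrade script to use from the two version numbers, following the upgrade-X-to-Y.mysql.sql naming seen in the directory listing; the function name upgrade_script is hypothetical. As I understand it, the upgrade script pulls in the numbered NNN-HIVE-*.mysql.sql files by relative path, which would be why the mysql client is started from inside that directory. Taking a mysqldump backup first is my own suggestion, not something the steps above did.

```shell
#!/bin/sh
# upgrade_script FROM TO [DIR]   (hypothetical helper, not part of Hive)
# Prints the path of the MySQL upgrade script for FROM -> TO,
# or fails if that script is not present in DIR (default: current directory).
upgrade_script() {
    from=$1
    to=$2
    dir=${3:-.}
    script="$dir/upgrade-$from-to-$to.mysql.sql"
    if [ ! -f "$script" ]; then
        echo "no upgrade script: $script" >&2
        return 1
    fi
    echo "$script"
}

# Usage sketch (assumes the hive user and hive database from this post):
#   cd /opt/apache-hive-0.13.1-bin/scripts/metastore/upgrade/mysql
#   mysqldump -u hive -p hive > /tmp/hive-metastore-0.12.sql   # backup first
#   mysql -u hive -p hive < "$(upgrade_script 0.12.0 0.13.0)"
```

Failing early on a missing script avoids feeding an empty input to mysql when a version number is mistyped.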

4. Create a new MySQL user, hivenew, for Hive 0.13.1

Log in to MySQL as root, then create the user and grant it privileges:

use mysql;

insert into user(Host,User,Password) values("localhost","hivenew",password("hivenew"));

The password is also hivenew.

GRANT ALL PRIVILEGES ON *.* TO 'hivenew'@'localhost' IDENTIFIED BY 'hivenew';

FLUSH PRIVILEGES;

Log in as hivenew to check that the user was created.

Grant the hive user sufficient privileges as well:

GRANT ALL PRIVILEGES ON *.* TO 'hive'@'localhost' IDENTIFIED BY 'hive';

FLUSH PRIVILEGES;

Log in as hive to check that the grant took effect:

hadoop@ubuntu:/usr/local$ mysql -h localhost -uhive -p
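The grants above give each account every privilege on every database. A narrower variant, which is my own suggestion rather than what I ran, limits the account to its metastore database. The sketch below just prints the SQL; the function name metastore_user_sql is hypothetical, and it assumes MySQL 5.x, where GRANT ... IDENTIFIED BY creates the account if it does not exist yet (MySQL 8.0 removed that form).

```shell
#!/bin/sh
# metastore_user_sql USER PASSWORD DATABASE   (hypothetical helper)
# Prints SQL that grants USER all privileges on DATABASE only.
# Assumes MySQL 5.x syntax. The arguments are inserted as-is, so they
# must not contain quote characters.
metastore_user_sql() {
    user=$1
    pass=$2
    db=$3
    cat <<EOF
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
FLUSH PRIVILEGES;
EOF
}

# Usage sketch: pipe the statements into the mysql client as root.
#   metastore_user_sql hivenew hivenew hivenew | mysql -u root -p
```

Privileges on hivenew.* include CREATE for that database, so the createDatabaseIfNotExist=true option in the JDBC URL should still be able to create it.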

5. Copy the MySQL JDBC driver into apache-hive-0.13.1-bin/lib

cp mysql-connector-java-5.1.31/*.jar apache-hive-0.13.1-bin/lib

(I had already set up this jar for Hive 0.12.0.)

6. Configure Hive. Do not copy the 0.12.0 configuration files over directly, or Hive will fail to start.

First, create the configuration files from the templates:

hadoop@ubuntu:/usr/local/hadoop/hive/conf$ cp hive-default.xml.template hive-default.xml

hadoop@ubuntu:/usr/local/hadoop/hive/conf$ cp hive-default.xml.template hive-site.xml

hadoop@ubuntu:/usr/local/hadoop/hive/conf$ cp hive-env.sh.template hive-env.sh

hadoop@ubuntu:/usr/local/hadoop/hive/conf$ cp hive-log4j.properties.template hive-log4j.properties

Then create the storage directories:

hadoop@ubuntu:/usr/local/hadoop/hive$ mkdir -p /usr/local/hadoop/hive-0.13.1/warehouse

hadoop@ubuntu:/usr/local/hadoop/hive$ mkdir -p /usr/local/hadoop/hive-0.13.1/log

Next, configure hive-env.sh.

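Open it with vim.

Uncomment export HADOOP_HEAPSIZE=1024 by removing the leading '#' so that the setting takes effect. You can tune the default of 1024 for your environment.

Uncomment export HADOOP_HOME and point it at your Hadoop installation directory; mine is /usr/local/hadoop.

Uncomment export HIVE_CONF_DIR and set it to /opt/apache-hive-0.13.1-bin.

Uncomment export HIVE_AUX_JARS_PATH and set it to /opt/apache-hive-0.13.1-bin/lib.

Press Esc, then type :wq to save and exit.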
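The same hive-env.sh edits can be scripted instead of made by hand in vim. This is a minimal sketch using sed; the function name set_env_var is hypothetical, and it accepts commented lines both with and without the export keyword, since I am not relying on the exact wording of the template.

```shell
#!/bin/sh
# set_env_var FILE VAR VALUE   (hypothetical helper, not part of Hive)
# Rewrites a commented-out "# export VAR=..." or "# VAR=..." line in FILE
# to "export VAR=VALUE". A backup is kept as FILE.bak.
# VALUE must not contain '|' (the sed delimiter) or '&'.
set_env_var() {
    file=$1
    var=$2
    value=$3
    sed -i.bak -E \
        "s|^#[[:space:]]*(export[[:space:]]+)?${var}=.*|export ${var}=${value}|" \
        "$file"
}

# Usage sketch with the values from this post:
#   set_env_var hive-env.sh HADOOP_HEAPSIZE 1024
#   set_env_var hive-env.sh HADOOP_HOME /usr/local/hadoop
#   set_env_var hive-env.sh HIVE_AUX_JARS_PATH /opt/apache-hive-0.13.1-bin/lib
```

Lines that do not match are left untouched, so running it twice with the same arguments changes nothing the second time.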

Configure hive-site.xml by changing the following properties:

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/usr/local/hadoop/hive-0.13.1/warehouse</value> <!-- must match the directory created above -->

<description>location of default database for the warehouse</description>

</property>

<!-- directory where Hive stores its logs -->

<property>

<name>hive.querylog.location</name>

<value>/usr/local/hadoop/hive-0.13.1/log</value>

<description>

Location of Hive run time structured log file

</description>

</property>

Next, continue editing hive-site.xml. This step connects Hive to MySQL.

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hivenew?createDatabaseIfNotExist=true</value> <!-- the newly created metastore database is hivenew -->

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hivenew</value> <!-- use the newly created user -->

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hivenew</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.stats.dbclass</name>

<value>jdbc:mysql</value>

<description>The default database that stores temporary hive statistics.</description>

</property>

<property>

<name>hive.stats.jdbcdriver</name>

<value>com.mysql.jdbc.Driver</value>

<description>The JDBC driver for the database that stores temporary hive statistics.</description>

</property>

<property>

<name>hive.stats.dbconnectionstring</name>

<value>jdbc:mysql://localhost:3306/hivenew</value>

<description>The default connection string for the database that stores temporary hive statistics.</description>

</property>

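Also, to avoid warnings at startup, rename every property whose name starts with hive.metastore.ds.retry so that it starts with hive.hmshandler.retry instead (leave the part after retry unchanged).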
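The rename described above is a plain prefix substitution, so it can be done in one pass with sed rather than by searching in an editor. A minimal sketch, with a hypothetical function name:

```shell
#!/bin/sh
# rename_retry_keys FILE   (hypothetical helper, not part of Hive)
# Replaces the property-name prefix hive.metastore.ds.retry with
# hive.hmshandler.retry, leaving whatever follows "retry" untouched.
# A backup is kept as FILE.bak.
rename_retry_keys() {
    sed -i.bak 's/hive\.metastore\.ds\.retry/hive.hmshandler.retry/g' "$1"
}

# Usage sketch:
#   rename_retry_keys hive-site.xml
```

The dots are escaped so that only the literal prefix matches, and other hive.metastore.* properties are left alone.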

7. Now for the exciting moment: start Hive

bin/hive

Then check whether the metastore database now exists in MySQL:

mysql -h localhost -u hivenew -p

mysql> use hivenew;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------------+

| Tables_in_hivenew |

+---------------------------+

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| DATABASE_PARAMS |

| DBS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| PARTITIONS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_STATS |

| ROLES |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| VERSION |

+---------------------------+

29 rows in set (0.00 sec)

Surprisingly, Hive 0.13.1 has only 29 tables here, while Hive 0.12.0 had 40.
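The difference in table count is easier to reason about with the actual names. The sketch below compares two table lists and prints what the old database has that the new one lacks; the function name missing_tables is hypothetical. One possible explanation, which I have not confirmed, is that the metastore creates some tables only when they are first needed, so a freshly created database starts with fewer.

```shell
#!/bin/sh
# missing_tables OLD_LIST NEW_LIST   (hypothetical helper)
# Each argument is a file with one table name per line.
# Prints the names present in OLD_LIST but absent from NEW_LIST.
missing_tables() {
    old_sorted=$(mktemp)
    new_sorted=$(mktemp)
    # comm requires both inputs to be sorted.
    sort -u "$1" > "$old_sorted"
    sort -u "$2" > "$new_sorted"
    comm -23 "$old_sorted" "$new_sorted"
    rm -f "$old_sorted" "$new_sorted"
}

# Usage sketch (database and user names are the ones from this post):
#   mysql -u hive    -p -N -B -e 'SHOW TABLES' hive    > old.txt
#   mysql -u hivenew -p -N -B -e 'SHOW TABLES' hivenew > new.txt
#   missing_tables old.txt new.txt
```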

The upgrade succeeded!

8. To install the Hive 0.13.1 web interface, see my other blog post.